CS6515 OMSCS - Graduate Algorithms Notes

Exam Preparation notes can be found over at:

Dynamic Programming

Introduction

- Step1: State the subproblem, i.e define what $D[i][j][k]$ represents. In general:

- Try prefix (or suffix)

- Then try substrings

- If you figured out a problem using substrings, think whether it can be solved with prefix/suffix.

- At least for this course, we are not testing suffix.

- Step2: Express the subproblem in the form of a recurrence relation, including the base cases.

- Step3: Write out the pseudocode.

- Step4: Time complexity analysis.

Longest increasing subsequence (LIS) (DP1)

Given the following sequence, find the length of the longest increasing subsequence. For example, given the input 5, 7, 4, -3, 9, 1, 10, 4, 5, 8, 9, 3, the longest increasing subsequence is -3, 1, 4, 5, 8, 9, and the answer is 6.

Extra Information:

When considering the LIS, the key question is: given the sequence $x_1, …, x_{n-1}$, when does adding $x_n$ change the LIS? It changes only when $x_n$ can be appended to an increasing subsequence of $x_1,…,x_{n-1}$.

Concretely, suppose the LIS of $x_1,…,x_{n-1}$ ends at $x_j$. Then the LIS of $x_1,…,x_{n-1}, x_n$ changes only if $x_n$ is included; otherwise it stays the same. Appending $x_n$ to that subsequence is only possible if $x_n > x_j$.

Subproblem:

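Define the subproblem $L(i)$ to be the length of the LIS on $x_1,…,x_i$. The important point is that $L(i)$ must include $x_i$.

Recurrence relation:

\[L(i) = 1 + \max_{1 \leq j < i} \{ L(j) : x_j < x_i \}\]

where the max over an empty set is taken to be 0.

Pseudocode: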

Note, $L(i)$ must be at least 1, where the LIS is just itself.

    S = [x1,...,xn]
    L = [0, ..., 0]
    for i = 1 -> n:
        # Stores the current best of L[i], which must be at least 1
        L[i] = 1
        for j = 1 -> i-1:
            if S[i] > S[j] and L[j] + 1 >= L[i]:
                L[i] = L[j] + 1  # Update current best
    return max(L)

Complexity:

There is a double for loop with $i$ taking up to the value $n$. Hence, the time complexity is $O(n^2)$ and space complexity is $O(n)$.
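The pseudocode above can be sketched in Python roughly as follows. This is a minimal sketch, not the course's reference solution: the function name `lis_length` is an assumption, and the list is 0-indexed rather than 1-indexed.

```python
def lis_length(seq):
    """Length of the longest strictly increasing subsequence, O(n^2) time."""
    if not seq:
        return 0
    # best[i] = length of the LIS that ends exactly at seq[i]
    best = [1] * len(seq)
    for i in range(len(seq)):
        for j in range(i):
            if seq[j] < seq[i]:
                best[i] = max(best[i], best[j] + 1)
    return max(best)


print(lis_length([5, 7, 4, -3, 9, 1, 10, 4, 5, 8, 9, 3]))  # 6
```

The final `max(best)` matters: the LIS does not have to end at the last element, so the answer is the best value over all ending positions.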

Longest Common Subsequence (DP1)

Given two sequences, $x_1,…,x_n$ and $y_1,…,y_m$, find the longest common subsequence. For example, given X = BCDBCDA and Y = ABECBAB, the longest common subsequence is BCBA.

Extra information:

Note that X and Y can have different lengths. Let $L(i,j)$ denote the length of the LCS of $X[:i]$ and $Y[:j]$. There are two cases to consider:

If $x_i \neq y_j$, then one of 3 cases can occur:

- $x_i$ is not used in the final solution, $L(i-1,j)$

- $y_j$ is not used in the final solution, $L(i, j-1)$

- Neither is used in the final solution

- If the last character of both is not used, then we can just drop both! This case is already covered by either of the first two.

So, we can just consider the first two cases and take the max, i.e. $\max(L(i-1,j), L(i,j-1))$

If $x_i = y_j$, again there are 3 cases to consider:

- $x_i$ is not used in the final solution, $L(i-1,j)$

- $y_j$ is not used in the final solution, $L(i, j-1)$

- Both $x_i$ and $y_j$ are used in the final solution, $1+L(i-1,j-1)$.

- Note this means that the LCS of $X[:i],Y[:j]$ ends with $x_i = y_j$.

This is equal to $\max\{L(i-1,j), L(i,j-1), 1+L(i-1,j-1)\}$.

However, we only need to consider the last case $1+L(i-1,j-1)$. This is because some optimal solution must use $x_i$ or $y_j$: if neither is used, we can simply append the matching pair to the end and get a longer common subsequence. It may be that $x_i$ is matched with some earlier occurrence $y_{j-c}$ in Y. But then $y_{j-c} = y_j$, so we can match $x_i$ with $y_j$ instead without changing the length.

Subproblem:

Let $L(i,j)$ denote the length of the LCS of $X[:i],Y[:j]$, where $0 \leq i\leq n, 0\leq j \leq m$

Recurrence relation:

Firstly, $L(0,j) = 0, L(i,0) = 0$, then:

\[L(i,j) = \begin{cases} \max(L(i-1,j), L(i,j-1)), & \text{if } x_i \neq y_j\\ 1+L(i-1,j-1), & \text{otherwise} \end{cases}\]

Pseudocode:

    for i = 0 -> n:
        L[i][0] = 0
    for j = 0 -> m:
        L[0][j] = 0
    for i = 1 -> n:
        for j = 1 -> m:
            if X[i] == Y[j]:
                L[i][j] = 1 + L[i-1][j-1]
            else:
                L[i][j] = max(L[i-1][j], L[i][j-1])
    return L[n][m]

Complexity:

Two loops, $n$ and $m$, so $O(nm)$

Backtracking

After the matrix L is constructed, we can backtrack from $L[n][m]$ to recover a common subsequence, stopping once $i$ or $j$ reaches 0 (this might differ depending on the indexing you use).

We consider the following cases:

- If $x_i = y_j$, this means that a character was added, so we record it and move diagonally to $(i-1,j-1)$.

- Else, we compare the two neighbours of $(i,j)$.

- The neighbour above is $(i-1,j)$

- The neighbour to the left is $(i,j-1)$

- If $L(i-1,j) \geq L(i,j-1)$, we move to $(i-1,j)$.

- This means we ignored $x_i$

- Else, we move to $(i,j-1)$.

- This means that we ignored $y_j$

- Repeat until we reach row 0 or column 0.

    i = n, j = m
    string = ""
    while i > 0 and j > 0:
        if X[i] == Y[j]:
            string = string + X[i]
            i = i - 1
            j = j - 1
        else if L[i-1][j] >= L[i][j-1]:
            i = i - 1
        else:
            j = j - 1
    return reverse(string)

Extra note - the order in which the $i = i - 1$ and $j = j - 1$ branches are tried can be swapped, and this may lead to a different result if there are multiple valid LCS.
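Putting the table construction and the backtracking together, a minimal Python sketch could look like the following. The function name `lcs` and the 0-indexed strings are assumptions for illustration; the table keeps an extra row and column 0 for the base cases.

```python
def lcs(x, y):
    """Return one longest common subsequence of strings x and y."""
    n, m = len(x), len(y)
    # table[i][j] = LCS length of x[:i] and y[:j]; row/column 0 are the base cases
    table = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            if x[i - 1] == y[j - 1]:
                table[i][j] = 1 + table[i - 1][j - 1]
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])

    # Walk back from the bottom-right corner to recover the characters.
    chars = []
    i, j = n, m
    while i > 0 and j > 0:
        if x[i - 1] == y[j - 1]:
            chars.append(x[i - 1])
            i, j = i - 1, j - 1
        elif table[i - 1][j] >= table[i][j - 1]:
            i -= 1
        else:
            j -= 1
    return "".join(reversed(chars))


result = lcs("BCDBCDA", "ABECBAB")
print(len(result))  # 4
```

Which of the valid length-4 subsequences is returned depends on the tie-breaking order in the `elif`/`else` branches.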

Contiguous Subsequence Max Sum (DP1)

A contiguous subsequence of a list S is a subsequence made up of consecutive elements of S. For instance, if S is 5, 15, −30, 10, −5, 40, 10, then 15, −30, 10 is a contiguous subsequence but 5, 15, 40 is not. Give a linear-time algorithm for the following task:

Input: A list of numbers, a1, a2, . . . , an.

Output: The contiguous subsequence of maximum sum (a subsequence of length zero has sum zero).

For the preceding example, the answer would be 10, −5, 40, 10, with a sum of 55.

(Hint: For each j ∈ {1, 2, . . . , n}, consider contiguous subsequences ending exactly at position j.)

Extra notes:

Consider $S(i)$, the best sum of a contiguous subsequence that ends at and includes $x_i$. Since $x_i$ is part of the solution, the max sum is either $x_i$ itself, or $x_i$ plus the best sum ending just before it, $S(i-1)$, if that is positive.

Subproblem:

Let $S(i)$ denote the max sum of a contiguous subsequence of $x_1,…,x_i$ that ends at (and includes) $x_i$.

Recurrence relation:

\[S(i) = x_i + \max(0, S(i-1)), \quad S(0) = 0\]

Pseudocode:

    seq = [x1,...,xn]
    S = [0,...,0]  # S[0] = 0 is the base case
    for i = 1 to n:
        S[i] = seq[i] + max(0, S[i-1])
    return max(S)

Complexity:

Only one for loop, hence $O(n)$.
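As a rough illustration of the recurrence, the sketch below keeps only the running value of $S(i)$ instead of the whole array, and also tracks where the best run starts and ends so the subsequence itself can be returned. The function name and the returned tuple are assumptions, not part of the original notes.

```python
def max_contiguous_sum(seq):
    """Return (best_sum, best_slice); the empty slice has sum 0."""
    best_sum, best_start, best_end = 0, 0, 0   # best run is seq[best_start:best_end]
    current_sum, current_start = 0, 0          # best run ending at position i
    for i, value in enumerate(seq):
        if current_sum <= 0:
            # A non-positive prefix never helps, so restart the run at i.
            current_sum, current_start = value, i
        else:
            current_sum += value
        if current_sum > best_sum:
            best_sum, best_start, best_end = current_sum, current_start, i + 1
    return best_sum, seq[best_start:best_end]


print(max_contiguous_sum([5, 15, -30, 10, -5, 40, 10]))  # (55, [10, -5, 40, 10])
```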

Knapsack (DP2)

Given $n$ items with weights $w_1,w_2,…, w_n$, values $v_1,…,v_n$, and a bag of capacity $B$, we want to find the subset of items $S$ that maximizes the total value $\sum_{i\in S} v_i$ subject to $\sum_{i \in S} w_i \leq B$.

Extra notes:

Problem 1:

A greedy approach does not work in general, and brute force over all subsets takes $O(2^n)$ time, where each bit of an $n$-bit string represents whether the corresponding object is included or not.

Problem 2:

Consider the following problem, where we have 4 objects, with values 15,10,8,1 and weights 15,12,10,5 with total capacity 22. The optimal solution is items 2,3.

Then, K(1) = $x_1$ with $V=15$, K(2) is also $x_1$ with $V=15$. But, how do we express K(3) in terms of K(2) and K(1) which took the first object? So there is no real way to define K in terms of its previous sub problems!

Consider this new approach $K(i,b)$, where the capacity is also part of the subproblem: what is the optimal set among the first $i$ items given capacity $b$, for $1 \leq i \leq N, 1 \leq b \leq B$? Then, when we consider $K(3,22)$, there are two cases to consider:

- If $x_3$ is part of the solution, then we look up $K(2,22-w_3) = K(2,12)$

- Notice that $K(2,12)$ will give us item 2 with $w_2 = 12$.

- If $x_3$ is not part of the solution, then we look up $K(2,22)$

Subproblem:

Define $K(i,b)$ to be the maximum value achievable using a subset of the first $i$ items with total weight at most $b$, $\forall i \in [1,N], b \in [1,B]$

Recurrence relation:

The recurrence can be defined as follows:

\[K(i,b) = \begin{cases} \max(v_i + K(i-1, b - w_i), K(i-1,b)), & \text{if } w_i \leq b \\ K(i-1,b), & \text{otherwise} \end{cases}\]

The base cases are $K(0,b) = 0$ and $K(i,0) = 0$ for all $i, b$.

That is, if item $x_i$ is included, then we add the corresponding value $v_i$ and subtract the weight $w_i$ of item $i$ from the capacity. If it is not included (or does not fit), then we simply take the best solution on the first $i-1$ items.

Pseudocode:

    K = zeros(N+1, B+1)
    weights = [w1,...,wn]
    values = [v1,...,vn]
    for i = 0 to N:
        K[i,0] = 0
    for b = 0 to B:
        K[0,b] = 0
    for i = 1 to N:
        for b = 1 to B:
            if weights[i] <= b:
                K[i,b] = max(values[i] + K[i-1, b - weights[i]], K[i-1,b])
            else:
                K[i,b] = K[i-1,b]
    return K[N,B]

Complexity:

There are two nested loops, over $N$ and $B$, so the running time is $O(NB)$.

However, note that $B$ is an integer that is represented with $m = \log_2 B$ bits, so $B$ can be as large as $2^m$ and the complexity can be rewritten as $O(N2^m)$, which is exponential in the input size.

- Because of this, the $O(NB)$ knapsack algorithm is known as a pseudo-polynomial time algorithm

- Why is knapsack problem pseudo polynomial?

To do backtracking, walk the table from the last row upwards:

    # Items are indexed from 1
    included = [0] * (N+1)
    b = B
    for i = N down to 1:
        if K[i,b] != K[i-1,b]:
            # this means item i is included
            included[i] = 1
            b = b - weights[i]

Then, included will be a binary representation of which items are included.
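A minimal Python sketch of the table and the traceback, run on the four-item example from the notes. The function name `knapsack` and the returned `(value, items)` pair are assumptions made for illustration; items are reported with 1-based numbers to match the text.

```python
def knapsack(weights, values, capacity):
    """0/1 knapsack: return (best_value, chosen_items) with 1-based item numbers."""
    n = len(weights)
    # table[i][b] = best value using the first i items with capacity b
    table = [[0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        w, v = weights[i - 1], values[i - 1]
        for b in range(1, capacity + 1):
            table[i][b] = table[i - 1][b]            # skip item i
            if w <= b:                               # take item i if it fits
                table[i][b] = max(table[i][b], v + table[i - 1][b - w])

    # Traceback: the value can only differ from row i-1 if item i was taken.
    chosen, b = [], capacity
    for i in range(n, 0, -1):
        if table[i][b] != table[i - 1][b]:
            chosen.append(i)
            b -= weights[i - 1]
    return table[n][capacity], sorted(chosen)


print(knapsack([15, 12, 10, 5], [15, 10, 8, 1], 22))  # (18, [2, 3])
```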

Knapsack with repetitions (DP2)

This problem is a variant of the earlier problem, but you can add an infinite amount of $x_i$ in your knapsack.

Subproblem and Recurrence relation:

We can do the same as the above problem, updating $K(i-1, b-w_i)$ to $K(i,b-w_i)$:

\[K(i,b) = \max(v_i + K(i, b-w_i), K(i-1,b))\]

But notice that this can be simplified further: we do not need to track the item index $i$ in the subproblem. At each capacity $b$, we can simply iterate through all the items to find the best item to add to the knapsack.

\[K(b) = \underset{i}{\max} \{ v_i + K(b - w_i): 1 \leq i \leq n, w_i \leq b \}\]

Pseudocode:

    K(0) = 0
    for b = 1 to B:
        K(b) = 0
        for i = 1 to N:
            # if item x_i is below the weight limit and beats the current best
            if w_i <= b and K(b) < v_i + K(b - w_i):
                K(b) = v_i + K(b - w_i)
    return K(B)

Complexity:

The time complexity is still $O(NB)$, but the table is now one-dimensional, so the space complexity is $O(B)$.

Backtracking

To do backtracking, we keep a separate array of multisets $S(b) = [[],…,[]]$, so whenever an item $x_i$ gets added, simply set $S(b) = S(b-w_i) + [i]$.

Note, if we later find a better item $x_i$ to add for the same $b$, then $S(b)$ will be overwritten.
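The one-dimensional recurrence can be sketched as below. Note one deliberate variation from the backtracking described above: instead of copying a full multiset for every capacity, this sketch stores only the index of the last item added (the hypothetical `last` array) and follows those pointers at the end. The function name is also an assumption.

```python
def knapsack_with_repetition(weights, values, capacity):
    """Unbounded knapsack: return (best_value, items) with 1-based item numbers."""
    best = [0] * (capacity + 1)      # best[b] = best value with capacity b
    last = [None] * (capacity + 1)   # last[b] = index of the last item added for b
    for b in range(1, capacity + 1):
        for i, (w, v) in enumerate(zip(weights, values)):
            if w <= b and best[b] < v + best[b - w]:
                best[b] = v + best[b - w]
                last[b] = i
    # Follow the `last` pointers to rebuild the multiset of items.
    items, b = [], capacity
    while b > 0 and last[b] is not None:
        items.append(last[b] + 1)
        b -= weights[last[b]]
    return best[capacity], sorted(items)


print(knapsack_with_repetition([2, 3], [3, 4], 7))  # (10, [1, 1, 2])
```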

Chain Multiply (DP2)

Consider two matrices $X_{a,b}$ and $Y_{b,c}$, the computation cost of calculating $XY$ is $O(abc)$.

Suppose we have 3 matrices $A_{2,5}, B_{5,10}, C_{10,2}$,

- Calculating $((AB)C)$ will require $\underbrace{2 \times 5 \times 10}_{AB} + 2\times 10\times 2 = 140$ computations

- While calculating $(A(BC))$ will require $\underbrace{5\times 10\times 2}_{BC} + 2\times 5\times 2 = 120$ computations

So, the point here is that the order of multiplication matters.

The problem now is given an initial sequence of matrices $A_1…,A_n$, suppose the optimal point to split is at $k$, then the left sequence will be $A_1….A_k$. Then, for this left sequence, what will be the optimal split? So, the point at which the split occurs is dynamic.

(Diagram: the chain $A_i A_{i+1} … A_j$ is split at $l$ into a left subtree $A_i … A_l$ and a right subtree $A_{l+1} … A_j$.)

Remember, for a dynamic programming solution, each of the subtrees must also be optimal.

Subproblem:

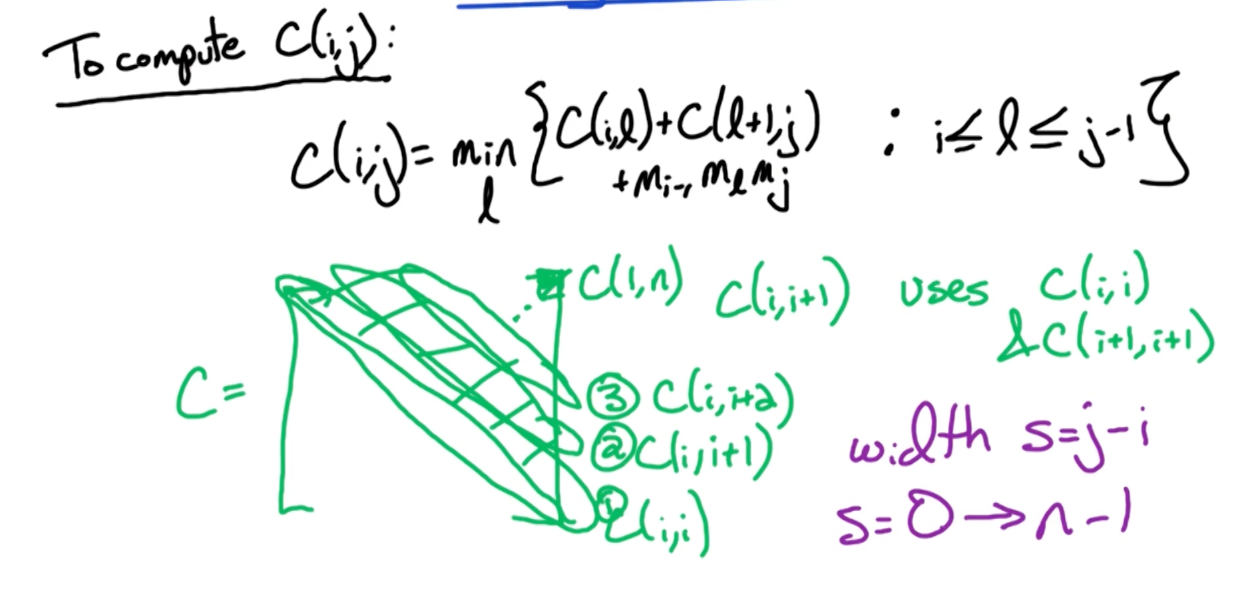

So, we let $C(i,j)$ denote the optimal cost of multiplying $A_i…A_j$.

For an arbitrary matrix $A_i$, the dimensions are $m_{i-1} \times m_{i}$.

Recurrence relation:

\[C(i,j) = \min_{i \leq l < j} \{ C(i,l) + C(l+1,j) + m_{i-1} m_l m_j \}\]

where $C(i,l)$ is the cost of the left subtree, $C(l+1,j)$ is the cost of the right subtree and $m_{i-1} m_l m_j$ is the cost of combining the two subtrees.

Pseudocode:

So, $C(i,i) = 0, \forall i$. Then, we calculate $C(i,i+1), C(i, i+2), …$ until we reach $C(1,n)$, which is the solution that we are looking for.

- Start at the diagonal and move up. To index this, compare $C(i,i+2)$ and $C(i,i)$: the column indices differ by 2. Let's call this difference the width, $s = j-i$.

- Then, $j$ can be calculated with $j = i+s$

- So we do this until we get width = $n-1$, so $s=1\rightarrow n-1$ (width $s=0$ is the diagonal itself).

To rephrase this, given that we fill the diagonal first, we move one step to the right of the diagonal, i.e. $C(1,2), C(2,3),…, C(n-1, n)$. Then, how many elements do we need to compute? $n-1$ elements.

Now, suppose we move $s$ steps to the right of the diagonal, then, there will be $n-s$ elements to compute.

    # This step will populate the diagonals
    for i = 1 -> n:
        C(i,i) = 0
    # How many steps we move to the right of the diagonal
    for s = 1 -> n-1:
        # How many elements we need to compute
        for i = 1 -> n-s:
            # Compute the j coordinate
            Let j = i+s
            C(i,j) = infinity
            for l = i -> j-1:
                curr = (m[i-1] * m[l] * m[j]) + C(i,l) + C(l+1,j)
                if C(i,j) > curr:
                    C(i,j) = curr
    return C(1,n)

Complexity:

There are three nested for loops, so the time complexity is $O(n^3)$.

Backtracking

To perform backtracking, we need another matrix, to keep track for each $i,j$, what is the corresponding $l$ in which the best split took place. Then, backtracking can be done via recursion. Here is an example:

    def traceback_optimal_parens(s, i, j):
        if i == j:
            return f"A{i+1}"  # Single matrix, no parenthesis needed
        else:
            k = s[i][j]
            # Recursively find parenthesization for the left and right subchains
            left_part = traceback_optimal_parens(s, i, k)
            right_part = traceback_optimal_parens(s, k + 1, j)
            return f"({left_part} x {right_part})"

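Each call either returns a single matrix or makes two recursive calls and combines them in constant time. The recursion tree has $n$ leaves (one per matrix) and $n-1$ internal nodes, so the traceback takes $O(n)$ time. If every split happened to fall in the middle, this would be $T(n) = 2 T ( n/ 2) + O(1)$, which the master theorem also solves to $O(n)$.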
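To tie the cost table and the traceback together, here is a small self-contained sketch run on the $A_{2,5}, B_{5,10}, C_{10,2}$ example from earlier. The names `matrix_chain_order`, `parenthesize`, and the `dims` list are assumptions for illustration; unlike the traceback above, this version uses 1-based matrix indices throughout.

```python
def matrix_chain_order(dims):
    """dims[i-1] x dims[i] is the shape of matrix A_i, for i = 1..n."""
    n = len(dims) - 1
    # cost[i][j] = cheapest cost of multiplying A_i..A_j (1-based; row/col 0 unused)
    cost = [[0] * (n + 1) for _ in range(n + 1)]
    split = [[0] * (n + 1) for _ in range(n + 1)]
    for width in range(1, n):                  # distance from the diagonal
        for i in range(1, n - width + 1):
            j = i + width
            cost[i][j] = float("inf")
            for l in range(i, j):              # split into A_i..A_l and A_{l+1}..A_j
                current = cost[i][l] + cost[l + 1][j] + dims[i - 1] * dims[l] * dims[j]
                if current < cost[i][j]:
                    cost[i][j] = current
                    split[i][j] = l            # remember the best split point
    return cost, split


def parenthesize(split, i, j):
    """Rebuild the optimal parenthesization of A_i..A_j from the split table."""
    if i == j:
        return f"A{i}"
    l = split[i][j]
    return f"({parenthesize(split, i, l)} x {parenthesize(split, l + 1, j)})"


cost, split = matrix_chain_order([2, 5, 10, 2])
print(cost[1][3], parenthesize(split, 1, 3))  # 120 (A1 x (A2 x A3))
```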

Extra notes on chain multiply:

    # this is how far away from the diagonals
    for width in range(1, n):
        """
        For example if you are 2 away from the diagonal,
        means you need n-2 x coordinates
        """
        for i in range(n - width):
            """
            This is the corresponding y coordinate,
            which is just adding the width to the x coordinate
            From the current x-coordinate, move width steps.
            For example, lets look at the diagonal 2 points away
            (0,0), (0,1), (0,2), (0,3), (0,4)
            (_,_), (1,1), (1,2), (1,3), (1,4)
            (_,_), (_,_), (2,2), (2,3), (2,4)
            (_,_), (_,_), (_,_), (3,3), (3,4)
            (_,_), (_,_), (_,_), (_,_), (4,4)
            which is (0,2), (1,3), (2,4)
            so, x runs from 0 to 2
            while y runs from 2 to 4 which is adding the width
            """
            j = i + width
            for l in range(i, j):
                """
                This l is the split, suppose i = 0, j = 4
                0|1234, 01|234, 012|34, 0123|4 which is considering
                all combinations
                """
                # D[i][j] = Operation(D[i][l], D[l+1][j])

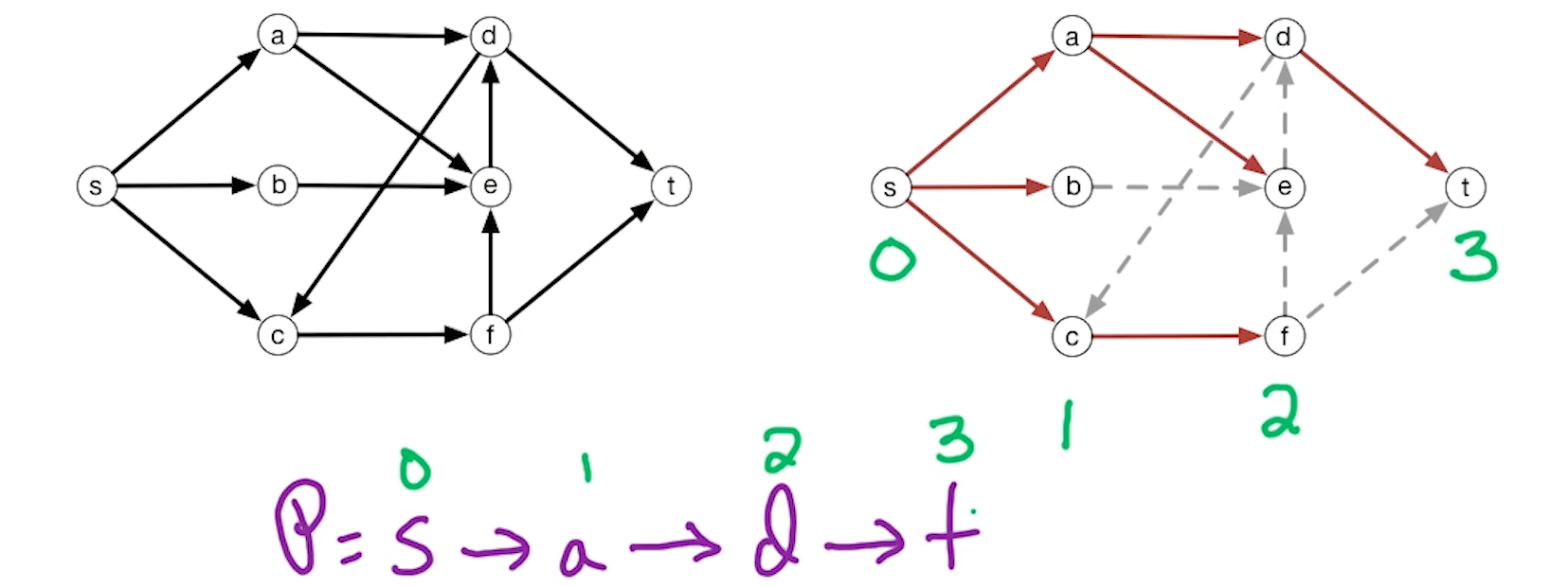

Bellman-Ford (DP3)

Given $\overrightarrow{G}$ with edge weights and vertices, find the shortest path from source node $s$ to target node $z$. We assume that it has no negative weight cycles (for now).

Subproblem:

Denote $P$ to be the shortest path from $s$ to $z$. If there are multiple $P$ possible, we can assume any one of them. Since $P$ visits each vertex at most once, then:

\[\lvert P \rvert \leq (n-1) \text{ edges}\]

DP idea: Condition on the prefix of the path. Use $i = 0 \rightarrow n-1$ edges on the paths.

For $0 \leq i \leq n-1, z \in V$, let $D(i,z)$ denote the length of the shortest path from $s$ to $z$ using $\leq i$ edges.

Recurrence relation:

Base case: $D(0,s) = 0$, and $D(0, z) = \infty$ for all $z \neq s$.

\[D(i,z) = \min \Big\{ D(i-1,z), \underset{y:\overrightarrow{yz}\in E}{\min} \{D(i-1,y) + w(y,z)\} \Big\}\]

If there is no path from $s$ to $z$ with at most $i$ edges, then $D(i,z)$ will still be $\infty$.

Otherwise, suppose the vertex $z$ is reachable from $y$ through the edge $\overrightarrow{yz}$; then there are two cases to consider. If this edge is the last edge of the solution, the length is $D(i-1,y) + w(y,z)$. The other case is that a solution with at most $i-1$ edges already exists, which is denoted by $D(i-1,z)$. We simply take the minimum of the two.

Pseudocode:

    for all z in V:
        D(0,z) = infinity
    D(0,s) = 0
    for i = 1 to n-1:
        for all z in V:
            D(i,z) = D(i-1,z)
            for all edges (y,z) in E:
                if D(i,z) > D(i-1,y) + w(y,z):
                    D(i,z) = D(i-1,y) + w(y,z)
    # Return the last row
    return D(n-1,:)

Note, the last row gives the length of the shortest path using at most $n-1$ edges from the source node $s$ to every other vertex $z \in V$.

Complexity:

Initially, you might think that the complexity is $O(N^2E)$ because of the 3 nested for loops. But the 2nd and 3rd loops together visit each edge exactly once (going through every vertex and the edges entering it is the same as going through all the edges). So the time complexity is actually $O(NE)$.

Now, how can you use Bellman-Ford to detect a negative weight cycle? Notice that the algorithm runs until $i = n-1$. Run it for one more round and compare the results. If any value decreases, then a negative weight cycle must exist.

In other words, check for:

\[D(n,z) < D(n-1,z), \exists z \in V\]

Suppose we wanted to find all pairs, and use Bellman-Ford, what will the solution look like?

Given $\overrightarrow{G} = (V,E)$, with edge weights $w(e)$. For $y,z \in V$, let $dist(y,z)$ be the length of the shortest path $y \rightarrow z$.

Goal: Find $dist(y,z), \forall y,z \in V$

The time complexity in this case will be $O(N^2M)$, since we run Bellman-Ford once from each of the $N$ vertices, where $M$ is the number of edges. Also, if $\overrightarrow{G}$ is a fully connected graph, there are $M=O(N^2)$ edges, making it an $O(N^4)$ algorithm. What can we do about this? Look at Floyd-Warshall!

A side note about Dijkstra's algorithm: it is a greedy algorithm with time complexity $O((N + E) \log N)$ using a binary heap, so it is better than Bellman-Ford if you know that there are no negative edge weights.
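The Bellman-Ford table and the extra-round negative cycle check can be sketched as follows. This is a minimal sketch under some assumptions that are not in the notes: vertices are numbered `0..n-1`, edges are given as `(y, z, weight)` tuples, and only the previous and current rows of $D$ are kept rather than the full table.

```python
import math


def bellman_ford(n, edges, source):
    """Vertices are 0..n-1 and edges is a list of (y, z, weight) tuples.

    Returns (dist, has_negative_cycle), where dist[z] is the shortest
    distance from source using at most n-1 edges.
    """
    prev = [math.inf] * n
    prev[source] = 0
    for _ in range(n - 1):
        curr = prev[:]                      # D(i, z) starts as D(i-1, z)
        for y, z, w in edges:
            if prev[y] + w < curr[z]:
                curr[z] = prev[y] + w
        prev = curr
    # One extra round: any further improvement means a negative cycle
    # is reachable from the source.
    has_negative_cycle = any(prev[y] + w < prev[z] for y, z, w in edges)
    return prev, has_negative_cycle


print(bellman_ford(4, [(0, 1, 4), (0, 2, 5), (1, 2, -2), (2, 3, 3)], 0))
# ([0, 4, 2, 5], False)
```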

Floyd-Warshall (DP3)

Subproblem:

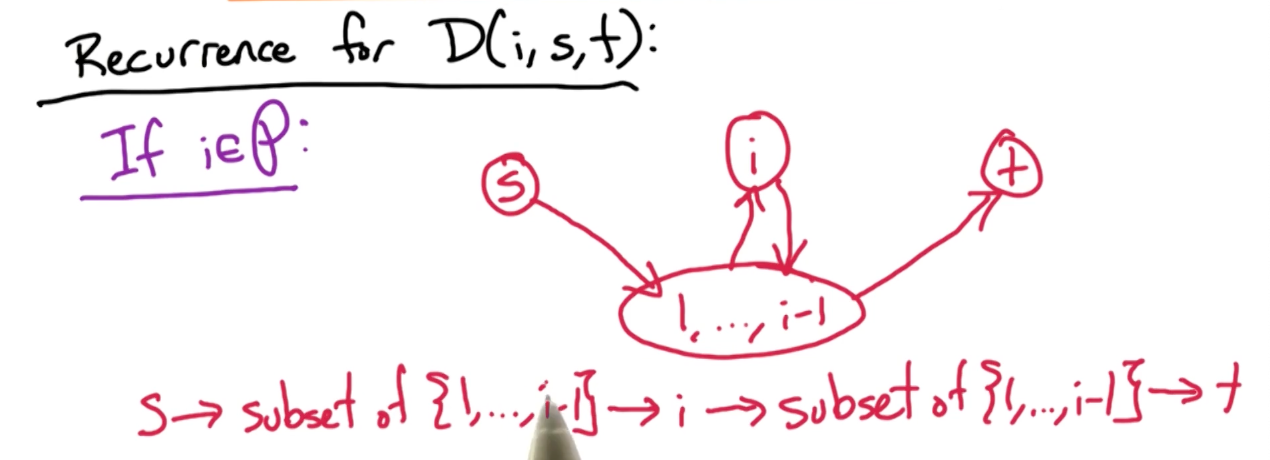

For $0 \leq i \leq n$ and $1 \leq s, t \leq n$, let $D(i,s,t)$ be the length of the shortest path from $s\rightarrow t$ using a subset of $\{1, …, i\}$ as intermediate vertices. Note, the key here is intermediate vertices.

So, $D(0,s,t)$ is the length of going from $s$ to $t$ directly, without any intermediate vertices.

Recurrence relation:

The base case is when $i = 0$. There are two cases: either there is an edge from $s$ to $t$, in which case it is $w_{s,t}$, or else it is $\infty$.

What about $D(i,s,t)$? There are again two cases to consider, either the intermediate vertex $i$ is in the path $P$ or otherwise.

If it is not in the optimal path $P$, then $D(i,s,t) = D(i-1,s,t)$.

If it is in the optimal path $P$, then this is the scenario where it can happen.

Notice that you go from $s$ through a subset (possibly empty) of the vertices $\{1,…,i-1\}$, reach vertex $i$, then go through the subset again before reaching vertex $t$. So there are 4 path segments to consider.

So, the first two segments can be represented by $D(i-1, s,i)$, that is, using a subset of the vertices $\{1,…,i-1\}$ to reach vertex $i$ from $s$, and the last two segments can be represented by $D(i-1,i,t)$, that is, reaching vertex $t$ from $i$ using a subset of the vertices $\{1,…,i-1\}$. Taking the better of the two cases gives:

\[D(i,s,t) = \min \{ D(i-1,s,t), D(i-1,s,i) + D(i-1,i,t) \}\]

Pseudocode:

    Inputs: G, w
    for s = 1 -> n:
        for t = 1 -> n:
            if (s,t) in E:
                D(0,s,t) = w(s,t)
            else:
                D(0,s,t) = infinity
    for i = 1 -> n:
        for s = 1 -> n:
            for t = 1 -> n:
                D(i,s,t) = min{ D(i-1,s,t), D(i-1,s,i) + D(i-1,i,t) }
    # return the last slice, has N^2 entries.
    return D(n,:,:)

Complexity:

There are three nested loops over $n$, so the complexity is $O(N^3)$.

To detect negative weight cycles, we can check the diagonal of the matrix $D$.

- If there is a negative cycle, then there should be a negative path length from a vertex to itself, i.e. $D(n,y,y) < 0, \exists y \in V $.

- This is equivalent to checking whether there are any negative entries on the diagonal of the matrix $D(n,:,:)$.

Bellman-Ford is a single source shortest path algorithm, so it only detects a negative weight cycle that is reachable from the source $s$. Floyd-Warshall solves all pairs shortest paths, and hence it is guaranteed to find a negative cycle if one exists.
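A compact sketch of Floyd-Warshall with the diagonal check. Two choices here are assumptions that differ from the notes: the table is updated in place (a standard space optimisation, instead of keeping all $n+1$ slices), and `dist[s][s]` is initialised to 0, which is a common convention. Vertices are numbered `0..n-1` and edges are `(s, t, weight)` tuples.

```python
import math


def floyd_warshall(n, edges):
    """Vertices are 0..n-1 and edges is a list of (s, t, weight) tuples.

    Returns (dist, has_negative_cycle).
    """
    dist = [[math.inf] * n for _ in range(n)]
    for s in range(n):
        dist[s][s] = 0                      # assumption: empty path has length 0
    for s, t, w in edges:
        dist[s][t] = min(dist[s][t], w)     # keep the lightest parallel edge
    # Allow vertex i as an intermediate vertex, one vertex at a time.
    for i in range(n):
        for s in range(n):
            for t in range(n):
                if dist[s][i] + dist[i][t] < dist[s][t]:
                    dist[s][t] = dist[s][i] + dist[i][t]
    # A negative entry on the diagonal means some vertex lies on a negative cycle.
    has_negative_cycle = any(dist[v][v] < 0 for v in range(n))
    return dist, has_negative_cycle


dist, negative = floyd_warshall(3, [(0, 1, 3), (1, 2, -1), (0, 2, 5)])
print(dist[0][2], negative)  # 2 False
```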

Divide and conquer

Master theorem

Note, this is the simplified form of the Master Theorem, which states that:

\[T(n) = \begin{cases} O(n^d), & \text{if } d > \log_b a \\ O(n^d \log n), & \text{if } d = \log_b a \\ O(n^{\log_b a}), & \text{if } d < \log_b a \end{cases}\]

for constants $a>0, b>1, d \geq 0$.

The recurrence relation under the Master Theorem is of the form:

\[T(n) = aT\left(\frac{n}{b}\right) + O(n^d)\]

where:

- $a$: Number of subproblems the problem is divided into.

- $b$: Factor by which the problem size is reduced.

- $O(n^d)$: Cost of work done outside the recursive calls, such as dividing the problem and combining results.

Understanding the Recursion Tree

To analyze the total work, think of the recursion as a tree where:

- Each level of the tree represents a step in the recursion.

- The root of the tree represents the original problem of size $n$.

- The first level of the tree corresponds to dividing the problem into $a$ subproblems, each of size $\frac{n}{b}$.

- The next level corresponds to further dividing these subproblems, and so on.

Cost at the $k$-th level:

- Number of subproblems:

\[\text{Number of subproblems at level } k = a^k\]

Each split produces $a$ subproblems, so after $k$ splits there are $a^k$.

- Size of each subproblem:

\[\text{Size of each subproblem at level } k = \frac{n}{b^k}\]

During each step, the problem size is reduced by a factor of $b$. E.g. in binary search, each step halves the problem to $\frac{n}{2}$; after $k$ steps, it is $\frac{n}{2^k}$.

- Cost of work at each subproblem:

\[\text{Cost of work at each subproblem at level } k = O\left(\left(\frac{n}{b^k}\right)^d\right)\]

This is the cost of the non-recursive work, such as merging. For example in merge sort, the cost of merging is $O(n)$, which is linear.

- Thus, the total cost at level $k$:

\[\text{Total cost at level } k = a^k \times O\left(\left(\frac{n}{b^k}\right)^d\right) = O(n^d) \times \left(\frac{a}{b^d}\right)^k\]

Then, $k$ goes from $0$ (the root) to $\log_b n$ (the leaves), so,

\[\begin{aligned} S &= \sum_{k=0}^{\log_b n} O(n^d) \times \left(\frac{a}{b^d}\right)^k \\ &= O(n^d) \times \sum_{k=0}^{\log_b n} \left(\frac{a}{b^d}\right)^k \end{aligned}\]

Recall that for a geometric series, $\sum_{i=0}^{m-1} c r^i = c\frac{1-r^m}{1-r}$ for $r \neq 1$. So, we need to consider the ratio $r = \frac{a}{b^d}$. When $n$ is large:

Case 1:

If $d> \log_b a$, then $ a < b^d $ thus $\frac{a}{b^d} < 1$. So when $n$ is large,

\[\begin{aligned} S &\leq O(n^d) \times \sum_{k=0}^{\infty} (\frac{a}{b^d})^k \\ &= O(n^d) \times \frac{1}{1-\frac{a}{b^d}} \\ &= O(n^d) \end{aligned}\]

For case 1, the overall complexity is dominated by the cost of the non-recursive work (i.e., the work done in splitting, merging, or processing the problem outside of the recursion).

Case 2:

If $d = \log_b a$, then $a = b^d$ thus $\frac{a}{b^d} = 1$.

\[\begin{aligned} S &= O(n^d) \times \sum_{k=0}^{\log_b n} \underbrace{(\frac{a}{b^d})^k}_{1} \\ &= O(n^d) \times (\log_b n + 1) \\ &= O(n^d \log_b n) \\ &= O(n^d \log n) \\ \end{aligned}\]

Notice that changing the log base only changes the result by a constant factor, so we can drop it.

For case 2, the work is evenly balanced between dividing/combining the problem and solving the subproblems. Another way to think about it is that each level does $O(n^d)$ work, and there are about $\log_b n$ levels, so the total work is $O(n^d \log n)$.

Case 3:

If $d < \log_b a$ then $a > b^d$ thus $\frac{a}{b^d} > 1$.

\[\begin{aligned} S &= O(n^d) \times \sum_{k=0}^{\log_b n} (\frac{a}{b^d})^k \\ &= O(n^d) \times \frac{(\frac{a}{b^d})^{\log_b n + 1}-1}{\frac{a}{b^d}-1}\\ &= O(n^d) \times O\Big((\frac{a}{b^d})^{\log_b n}\Big)\\ &= O(n^d) \times \frac{a^{\log_b n}}{(b^{\log_b n})^d}\\ &= O(n^d) \times \frac{a^{\log_b n}}{n^d}\\ &= O(a^{\log_b n}) \\ &= O(a^{(\log_a n)(\log_b a)}) \\ &= O(n^{\log_b a}) \end{aligned}\]

For case 3, the overall complexity is dominated by the cost of the recursive subproblems.
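The three cases can be turned into a small helper that classifies a recurrence of the form $T(n) = aT(n/b) + O(n^d)$. This is only an illustrative sketch: the function name `master_theorem` and the string output format are assumptions, and the equality test uses a floating point tolerance.

```python
import math


def master_theorem(a, b, d):
    """Classify T(n) = a*T(n/b) + O(n^d) using the simplified Master Theorem."""
    critical = math.log(a, b)              # log_b a
    if math.isclose(d, critical):
        return f"O(n^{d} log n)"           # case 2: every level costs the same
    if d > critical:
        return f"O(n^{d})"                 # case 1: the root dominates
    return f"O(n^{critical:.3f})"          # case 3: the leaves dominate


print(master_theorem(2, 2, 1))  # O(n^1 log n)   (merge sort)
print(master_theorem(3, 2, 1))  # O(n^1.585)     (three subproblems of half size)
print(master_theorem(1, 2, 1))  # O(n^1)         (one subproblem of half size)
```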

Fast Integer multiplication (DC1)

Given two (large) n-bit integers $x \& y$, compute $ z = xy$.

Recall that we want to look at the running time as a function of the number of bits $n$. The naive way will take $O(n^2)$ time.

Inspiration:

Gauss’s idea: multiplication is expensive, but adding/subtracting is cheap.

2 complex numbers, $a+bi\ \&\ c+di$, wish to compute $(a+bi)(c+di) = ac - bd + (bc + ad)i$. Notice that in this case we need 4 real number multiplications, $ac,bd,bc,ad$. Can we reduce this 4 multiplications to 3? Can we do better?

Yes, it is possible! The key is that we can compute the sum $bc+ad$ without computing the individual terms $bc$ and $ad$. Consider the following:

\[\begin{aligned} (a+b)(c+d) &= ac + bd + (bc + ad) \\ (bc+ad) &= \underbrace{(a+b)(c+d)}_{term3} - \underbrace{ac}_{term1} - \underbrace{bd}_{term2} \end{aligned}\]

So, we only need to compute the 3 products $ac, bd, (a+b)(c+d)$ to obtain $(a+bi)(c+di)$. We are going to use this idea to multiply $n$-bit integers faster than $O(n^2)$.

Input: n-bits integers x,y and assume $n$ is a power of 2 - for easy computation and analysis.

Idea: break input into 2 halves of size $\frac{n}{2}$, e.g $x = x_{left} \lvert x_{right}$, $y = y_{left} \lvert y_{right}$

Suppose $x=182=(10110110)_2$:

- $x_{left} = x_L = (1011)_2 = 11$

- $x_{right} = x_R= (0110)_2 = 6$

- Note, $182 = 11 \times 2^4 + 6$

- In general, $x = x_L \times 2^{\frac{n}{2}} + x_R$

Divide and conquer:

\[\begin{aligned} xy &= ( x_L \times 2^{\frac{n}{2}} + x_R)( y_L \times 2^{\frac{n}{2}} + y_R) \\ &= 2^n \underbrace{x_L y_L}_a + 2^{\frac{n}{2}}(\underbrace{x_Ly_R}_c + \underbrace{x_Ry_L}_d) + \underbrace{x_Ry_R}_b \end{aligned}\]

    EasyMultiply(x,y):
        input: n-bit integers, x & y, n = 2**k
        output: z = xy
        if n == 1: return x*y   # base case: single-bit product
        xl = first n/2 bits of x, xr = last n/2 bits of x
        same for yl, yr.
        A = EasyMultiply(xl,yl), B = EasyMultiply(xr,yr)
        C = EasyMultiply(xl,yr), D = EasyMultiply(xr,yl)
        Z = (2**n) * A + 2**(n/2) * (C+D) + B
        return(Z)

Running time:

- $O(n)$ to break up into two $\frac{n}{2}$-bit halves, $x_L,x_R, y_L,y_R$

- To calculate A,B,C,D, it's $4T(\frac{n}{2})$

- To calculate $z$ is $O(n)$

- Because e.g. we need to shift $A$ by $n$ bits (i.e. multiply by $2^n$).

Let $T(n)$ be the worst-case running time of EasyMultiply on an input of size $n$. Then the recurrence is $T(n) = 4T(\frac{n}{2}) + O(n)$, and by the master theorem this yields $O(n^{\log_2 4}) = O(n^2)$.

As usual, as with everything, can we do better? Can we change the number 4 to something better like 3? I.e we are trying to reduce the number of 4 sub problems to 3.

Better approach:

\[\begin{aligned} xy &= ( x_L \times 2^{\frac{n}{2}} + x_R)( y_L \times 2^{\frac{n}{2}} + y_R) \\ &= 2^n \underbrace{x_L y_L}_{A} + 2^{\frac{n}{2}}(\underbrace{x_Ly_R + x_Ry_L}_{\text{Change this!}}) + \underbrace{x_Ry_R}_{B} \end{aligned}\]

Using Gauss's idea:

\[\begin{aligned} (x_L+x_R)(y_L+y_R) &= x_L y_L + x_Ry_R + (x_Ly_R+x_Ry_L) \\ (x_Ly_R+x_Ry_L) &= \underbrace{(x_L+x_R)(y_L+y_R)}_{C}- \underbrace{x_L y_L}_{A} - \underbrace{x_Ry_R}_{B} \\ \therefore xy &= 2^n A + 2^{\frac{n}{2}} (C-A-B) + B \end{aligned}\]

    FastMultiply(x,y):
        input: n-bit integers, x & y, n = 2**k
        output: z = xy
        if n == 1: return x*y   # base case: single-bit product
        xl = first n/2 bits of x, xr = last n/2 bits of x
        same for yl, yr.
        A = FastMultiply(xl,yl), B = FastMultiply(xr,yr)
        C = FastMultiply(xl+xr,yl+yr)
        Z = (2**n) * A + 2**(n/2) * (C-A-B) + B
        return(Z)

With the Master theorem, $T(n) = 3T(\frac{n}{2}) + O(n) \rightarrow O(n^{\log_2 3}) \approx O(n^{1.59})$

Example:

Consider $z=xy$, where $x=182, y=154$.

- $x = 182 = (10110110)_2, y= 154 = (10011010)_2$

- $x_L = (1011)_2 = 11$

- $x_R = (0110)_2 = 6$

- $y_L = (1001)_2 = 9$

- $y_R = (1010)_2 = 10$

- $A = x_Ly_L= 11*9 = 99$

- $B = x_Ry_R = 6*10 = 60$

- $C = (x_L + x_R)(y_L + y_R) = (11+6)(9+10) = 323 $

- $z = 2^8 A + 2^4 (C - A - B) + B = 256 \times 99 + 16 \times 164 + 60 = 28028 = 182 \times 154$
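The three-multiplication idea can be sketched in Python as below. A few things here are assumptions rather than part of the notes: the function name `fast_multiply`, the cutoff of 16 for falling back to the built-in multiply, and splitting on the actual bit length instead of assuming $n$ is a power of 2. Python integers are arbitrary precision, so this only illustrates the recursion and is not a speed-up in practice.

```python
def fast_multiply(x, y):
    """Multiply non-negative integers with three recursive products."""
    if x < 16 or y < 16:
        return x * y                      # small inputs: fall back to built-in multiply
    half = max(x.bit_length(), y.bit_length()) // 2
    mask = (1 << half) - 1
    x_left, x_right = x >> half, x & mask     # x = x_left * 2^half + x_right
    y_left, y_right = y >> half, y & mask
    a = fast_multiply(x_left, y_left)
    b = fast_multiply(x_right, y_right)
    c = fast_multiply(x_left + x_right, y_left + y_right)
    # x*y = 2^(2*half) * A + 2^half * (C - A - B) + B
    return (a << (2 * half)) + ((c - a - b) << half) + b


print(fast_multiply(182, 154))  # 28028
```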

Linear-time median (DC2)

Given an unsorted list $A=[a_1,…,a_n]$ of n numbers, find the median (the $\lceil \frac{n}{2}\rceil$-th smallest element).

Quicksort Recap:

- Choose a pivot p

- Partition A into $A_{< p}, A_{=p}, A_{>p}$

- Recursively sort $A_{< p}, A_{>p}$

Recall that in quicksort the challenging component is choosing the pivot: if each partition only reduces the problem by 1 element, the worst case running time is $O(n^2)$. So what is a good pivot? The median. But how can we find the median without sorting, which takes $O(n \log n)$?

The key insight here is we do not need to consider all of $A_{< p},A_{=p}, A_{>p}$, we just need to consider 1 of them.

    Select(A,k):
        Choose a pivot p (How?)
        Partition A into A_{<p}, A_{=p}, A_{>p}
        if k <= |A_{<p}|
            then return Select(A_{<p},k)
        if |A_{<p}| < k <= |A_{<p}| + |A_{=p}|
            then return p
        if k > |A_{<p}| + |A_{=p}|
            then return Select(A_{>p}, k - |A_{<p}| - |A_{=p}|)

The question becomes, how do we find such a pivot?

The pivot matters a lot, because if the pivot is the median, then our partition will have size $\frac{n}{2}$, which yields $T(n) = T(\frac{n}{2}) + O(n)$ and achieves a running time of $O(n)$.

However, it turns out we do not need $\frac{1}{2}$, we just need something that can reduce the problem at each step, for example $T(n) = T(\frac{3n}{4}) + O(n)$ where each step only eliminates $\frac{1}{4}$ of the problem space, which will still give us $O(n)$. It turns out we just need the constant factor to be less than 1 (do you know why?), so, even 0.99 will work!

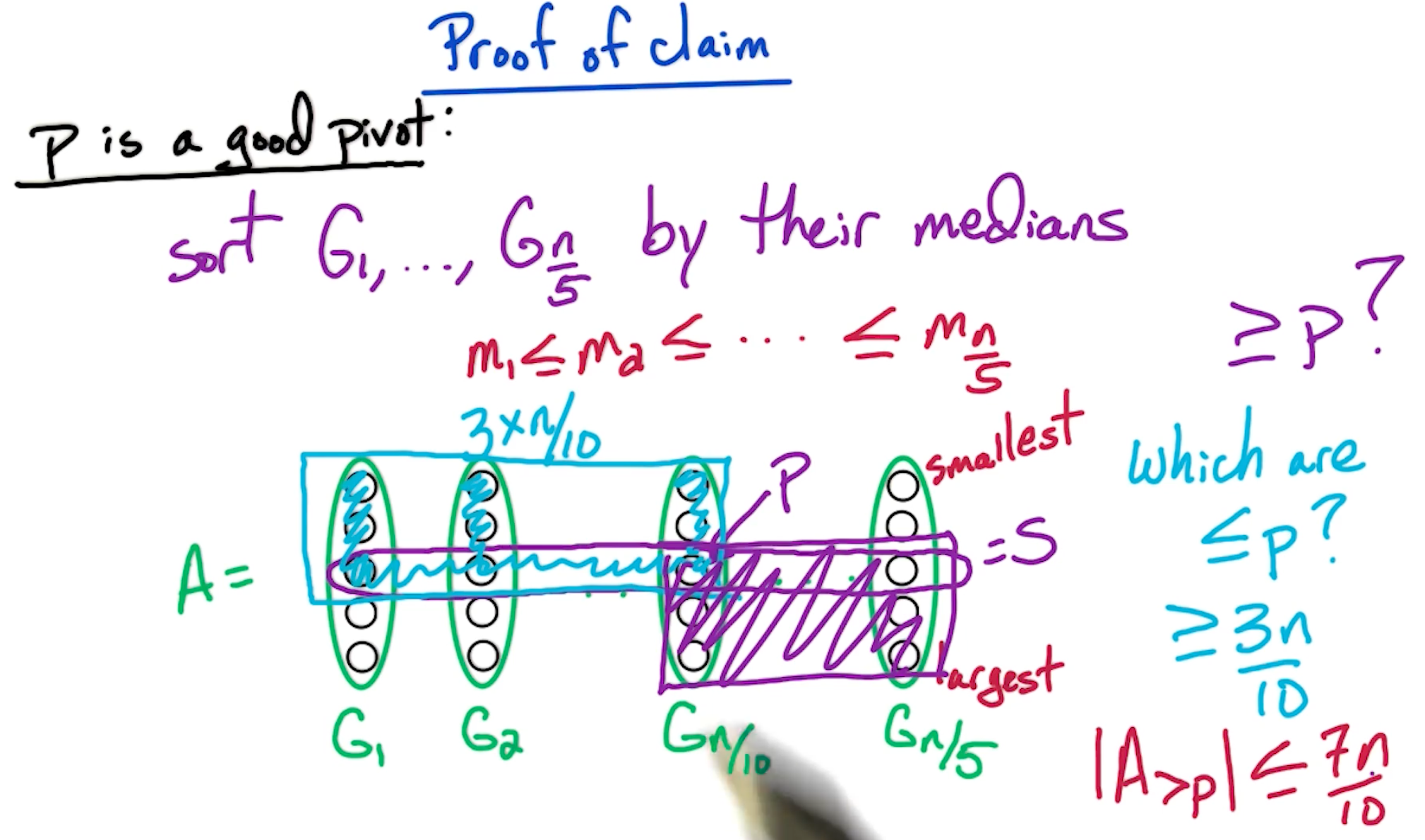

For the purpose of this lecture, we consider a pivot $p$ to be good if it reduces the problem size by at least $\frac{1}{4}$, i.e. $\lvert A_{<p} \rvert \leq \frac{3n}{4}$ and $\lvert A_{>p} \rvert \leq \frac{3n}{4}$.

Imagine the array in sorted order; then a pivot is good when its rank is in $[\frac{n}{4},\frac{3n}{4} ]$, so a randomly chosen pivot is good with probability one half. We can validate the pivot by tracking the size of the split. So this is as good as flipping a coin - what is the expected number of trials before you get a heads? The answer is 2. However, there is still a chance that you keep getting tails, so this does not guarantee that the worst case runtime is $O(n)$. Consider the following:

\[\begin{aligned} T(n) &= T\bigg(\frac{3}{4} n\bigg) + \underbrace{T\bigg(\frac{n}{5}\bigg)}_{\text{cost of finding good pivot}} + O(n)\\ &= O(n) \end{aligned}\]

To accomplish this, choose a subset $S \subset A$, where $\lvert S \rvert = \frac{n}{5}$. Then, we set the pivot = $median(S)$.

But now we face another problem: how do we select this sample $S$? One problem after another.

Introducing FastSelect(A,k):

    Input: A - an unsorted array of size n
           k - an integer with 1 <= k <= n
    Output: k'th smallest element of A
    FastSelect(A,k):
        Break A into ceil(n/5) groups G_1,G_2,...,G_n/5
        # doesn't matter how you break A
        for i = 1 -> n/5:
            sort(G_i)
            let m_i = median(G_i)
        S = {m_1,m_2,...,m_n/5}  # these are the medians of each group
        p = FastSelect(S,n/10)   # p is the median of the medians (= median of elements in S)
        Partition A into A_<p, A_=p, A_>p
        # now recurse on one of the sets depending on the size
        # this is the equivalent of the quicksort algorithm
        if k <= |A_<p|:
            then return FastSelect(A_<p,k)
        if k > |A_<p| + |A_=p|:
            then return FastSelect(A_>p,k-|A_<p|-|A_=p|)
        else return p

Analysis of run time:

- Splitting into $\frac{n}{5}$ groups - $O(n)$

- Since we are sorting a fixed number of elements (5), each sort is $O(1)$; over $\frac{n}{5}$ groups, it is still $O(n)$.

- The first FastSelect call takes $T(\frac{n}{5})$ time.

- The final recursion takes $T(\frac{3n}{4})$ time.

The key here is $\frac{3}{4} + \frac{1}{5} < 1$
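The FastSelect steps can be sketched in Python as follows. The function name `fast_select`, the base case of sorting lists of at most 5 elements directly, and the use of list comprehensions for partitioning are assumptions made to keep the example short.

```python
def fast_select(values, k):
    """Return the k-th smallest element of values (k is 1-based)."""
    if len(values) <= 5:
        return sorted(values)[k - 1]
    # Median of each group of (at most) 5 elements.
    groups = [values[i:i + 5] for i in range(0, len(values), 5)]
    medians = [sorted(g)[(len(g) - 1) // 2] for g in groups]
    # Pivot = median of the medians, found recursively.
    pivot = fast_select(medians, (len(medians) + 1) // 2)
    smaller = [v for v in values if v < pivot]
    equal = [v for v in values if v == pivot]
    larger = [v for v in values if v > pivot]
    if k <= len(smaller):
        return fast_select(smaller, k)
    if k > len(smaller) + len(equal):
        return fast_select(larger, k - len(smaller) - len(equal))
    return pivot


data = [3, 1, 4, 1, 5, 9, 2, 6, 5, 3, 5]
print(fast_select(data, (len(data) + 1) // 2))  # 4 (the median)
```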

Proof of the claim that p is a good pivot

Fun exercise: Why did we choose size 5? And not size 3 or 7?

Recall that earlier, for blocks of 5, the time complexity is given by:

\[T(n) = T(\frac{n}{5}) + T(\frac{7n}{10}) + O(n)\]

and $\frac{7}{10} + \frac{1}{5} < 1$.

Now, let us look at the case of 3, assuming we split into n/3 groups:

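Arrange the groups as columns, each sorted from top to bottom, with the columns ordered by their medians (the middle row). Here $x_{g,r}$ is the $r$-th smallest element of group $g$:

\[\begin{array}{cccccc} x_{1,1} & x_{2,1} & \dots & x_{n/6,1} & \dots & x_{n/3,1} \\ x_{1,2} & x_{2,2} & \dots & x_{n/6,2} & \dots & x_{n/3,2} \\ x_{1,3} & x_{2,3} & \dots & x_{n/6,3} & \dots & x_{n/3,3} \end{array}\]

The number of elements less than or equal to the median of medians $x_{n/6,2}$ is $2 \times \frac{n}{6} = \frac{n}{3}$. Likewise, our remaining partition is of size $\frac{2n}{3}$.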

Then our formula will look like:

\[\begin{aligned} T(n) &= T(\frac{2n}{3})+ T(\frac{n}{3}) + O(n) \\ &= O(n \log n) \end{aligned}\]

Similarly, suppose we use size 7:

- The number of elements less than or equal to the median of medians $x_{n/14,4}$ is $\frac{n}{14} \times 4 = \frac{2n}{7}$.

- The number of remaining elements, which may be greater than $x_{n/14,4}$, is $\frac{5n}{7}$.

In this case, we get the equation:

\[\begin{aligned} T(n) &= T(\frac{5n}{7})+ T(\frac{n}{7}) + O(n) \\ &= O(n) \end{aligned}\]

In this case we still achieve $O(n)$, and $\frac{5}{7} + \frac{1}{7} \approx 0.86 < \frac{1}{5} + \frac{7}{10} = 0.9$, but we increased the cost of sorting each group. (Nothing is free!)

For more information, feel free to look at this link by brilliant.org!

Solving Recurrences (DC3)

- Merge sort: $T(n) = 2T(\frac{n}{2}) + O(n) = O(nlogn)$

- Naive Integer multiplication: $T(n) = 4T(\frac{n}{2}) + O(n) = O(n^2)$

- Fast Integer multiplication: $T(n) = 3T(\frac{n}{2}) + O(n) = O(n^{log_2 3})$

- Median: $T(n) = T(\frac{3n}{4}) + O(n) = O(n)$

An example:

For some constant $c>0$, and given $T(n) = 4T(\frac{n}{2}) + O(n)$:

\[\begin{aligned} T(n) &= 4T(\frac{n}{2}) + O(n) \\ &\leq cn + 4T(\frac{n}{2}) \\ &\leq cn + 4[4T(\frac{n}{2^2}) + c \frac{n}{2}]\\ &= cn(1+\frac{4}{2}) + 4^2 T(\frac{n}{2^2}) \\ &\leq cn(1+\frac{4}{2}) + 4^2 [4T(\frac{n}{2^3}) + c\frac{n}{2^2}] \\ &= cn (1+\frac{4}{2} + (\frac{4}{2})^2) + 4^3 T(\frac{n}{2^3}) \\ &\leq cn(1+\frac{4}{2} + (\frac{4}{2})^2 + ... + (\frac{4}{2})^{i-1}) + 4^iT(\frac{n}{2^i}) \end{aligned}\]

If we let $i=log_2 n$, then $\frac{n}{2^i} = 1$.

\[\begin{aligned} T(n) &\leq \underbrace{cn}_{O(n)} \underbrace{(1+\frac{4}{2} + (\frac{4}{2})^2 + ... + (\frac{4}{2})^{log_2 n -1})}_{O((\frac{4}{2})^{log_2 n}) = O(n^2/n) = O(n)} + \underbrace{4^{log_2 n}}_{O(n^2)} \underbrace{T(1)}_{c}\\ &= O(n) \times O(n) + O(n^2) \\ &= O(n^2) \end{aligned}\]

Geometric series

\[\begin{aligned} \sum_{j=0}^k \alpha^j &= 1 + \alpha + ... + \alpha^k \\ &= \begin{cases} O(\alpha^k), & \text{if } \alpha > 1 \\ O(k), & \text{if } \alpha = 1 \\ O(1), & \text{if } \alpha < 1 \end{cases} \end{aligned}\]

- The first case is what happened in Naive Integer multiplication

- The second case is the merge step in merge sort, where all terms are the same.

- The last case is finding the median, where $\alpha = \frac{3}{4}$

Manipulating polynomials

It is clear that $4^{log_2 n} = n^2$, but what about $3^{log_2 n} = n^c$? What is $c$?

First, note that $3 = 2^{log_2 3}$

\[\begin{aligned} 3^{log_2n} &= (2^{log_2 3})^{log_2 n} \\ &= 2^{\{log_2 3\} \times \{log_2 n\}} \\ &= (2^{log_2 n})^{log_2 3} \\ &= n^{log_2 3} \end{aligned}\]

Another example: $T(n) = 3T(\frac{n}{2}) + O(n)$

\[\begin{aligned} T(n) &\leq cn(1+\frac{3}{2} + (\frac{3}{2})^2 + ... + (\frac{3}{2})^{i-1}) + 3^iT(\frac{n}{2^i}) \\ &\leq \underbrace{cn}_{O(n)} \underbrace{(1+\frac{3}{2} + (\frac{3}{2})^2 + ... + (\frac{3}{2})^{log_2 n -1})}_{O((\frac{3}{2})^{log_2 n}) = O(3^{log_2 n}/2^{log_2 n})} + \underbrace{3^{log_2 n}}_{3^{log_2 n}} \underbrace{T(1)}_{c}\\ &= \cancel{O(n)}\times O(3^{log_2 n}/\cancel{2^{log_2 n}}) + O(3^{log_2 n}) \\ &= O(3^{log_2 n}) = O(n^{log_2 3}) \end{aligned}\]

General Recurrence

For constants $a > 0$, $b > 1$, and given $T(n) = a T(\frac{n}{b}) + O(n)$:

\[T(n) = \begin{cases} O(n^{log_b a}), & \text{if } a > b \\ O(n log n), & \text{if } a = b \\ O(n), & \text{if } a < b \end{cases}\]

Feel free to read up more about the master theorem!
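
As a sanity check of the three cases, here is a small Python sketch. The helper names `master_linear` and `unroll` are hypothetical, and `unroll` assumes $T(1) = c$ and $n$ a power of $b$ so that the recurrence can be evaluated exactly.

```python
import math

def master_linear(a, b):
    """Asymptotic solution of T(n) = a*T(n/b) + O(n), as a string."""
    if a > b:
        return f"O(n^{math.log(a, b):.3f})"  # exponent is log_b(a)
    if a == b:
        return "O(n log n)"
    return "O(n)"

def unroll(a, b, n, c=1):
    """Evaluate T(n) = a*T(n/b) + c*n exactly, with T(1) = c (assumed)."""
    if n <= 1:
        return c
    return a * unroll(a, b, n // b, c) + c * n
```

With $c = 1$, unrolling $T(n) = 4T(\frac{n}{2}) + n$ gives exactly $2n^2 - n$, and $T(n) = 2T(\frac{n}{2}) + n$ gives exactly $n log_2 n + n$, matching the $O(n^2)$ and $O(n log n)$ cases.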

FFT (DC4+5)

FFT stands for Fast Fourier Transform.

Polynomial Multiplication:

\[\begin{aligned} A(x) &= a_0 + a_1x + a_2 x^2 + ... + a_{n-1} x^{n-1} \\ B(x) &= b_0 + b_1x + b_2 x^2 + ... + b_{n-1} x^{n-1} \\ \end{aligned}\]

We want:

\[\begin{aligned} C(x) &= A(x)B(x) \\ &= c_0 + c_1x + c_2 x^2 + ... + c_{2n-2} x^{2n-2} \\ \text{where } c_k &= a_0 b_k + a_1 b_{k-1} + ... + a_kb_0 \end{aligned}\]

We want to solve: given $a = (a_0, a_1, …, a_{n-1}), b= (b_0, b_1, …, b_{n-1})$, compute $c = a*b = (c_0, c_1, …, c_{2n-2})$

Note, this operation is called the convolution, denoted by *.

The naive algorithm takes $O(k)$ time to compute each $c_k$, so $O(n^2)$ time in total. We are going to introduce an $O(nlogn)$ time algorithm.
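
For reference, the naive $O(n^2)$ convolution can be sketched in a few lines of Python (the function name `convolve` is just an illustrative choice):

```python
def convolve(a, b):
    """Coefficients of A(x)*B(x), where c[k] = sum of a[i]*b[k-i]."""
    c = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            c[i + j] += ai * bj  # a_i x^i times b_j x^j contributes to x^(i+j)
    return c
```

For example, $(1 + 2x)(3 + 4x) = 3 + 10x + 8x^2$, so `convolve([1, 2], [3, 4])` gives `[3, 10, 8]`.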

Polynomial basics

- Two natural representations for $A(x) = a_0 + a_1 x + … + a_{n-1}x^{n-1}$

  - coefficients $a = (a_0, …, a_{n-1})$

    - More intuitive to represent

  - values: $A(x_1), …, A(x_n)$

    - Easier to use to multiply

Lemma: A polynomial of degree $n-1$ is uniquely determined by its values at any $n$ distinct points.

- For example, a line is determined by two points, and in general a degree $n-1$ polynomial is defined by any $n$ points on that polynomial.

What FFT does is convert between the two representations, $values \Longleftrightarrow coefficients$:

- Take coefficients as inputs

- Convert to values

- Multiply,

- Convert back

One important point is that FFT converts from the coefficients to the values, not for any choice of $x_1,…,x_n$ but for a particular well chosen set of points. Part of the beauty of the FFT algorithm is this choice.

MP: values

Key idea: Why is it better to multiply in values?

Given $A(x_1), …, A(x_{2n})$ and $B(x_1), …, B(x_{2n})$, we can compute

\[C(x_i) = A(x_i)B(x_i), \forall\, 1 \leq i \leq 2n\]

Since each multiplication is $O(1)$, the total running time is $O(n)$. We will show that the conversion to values using FFT is $O(nlogn)$, while the multiplication step on values is $O(n)$. Why do we take $A$ and $B$ at $2n$ points? $C$ is a polynomial of degree at most $2n-2$, so we need at least $2n-1$ points, and $2n$ suffices.

FFT: opposites

Given $a = (a_0, a_1,…, a_{n-1})$ for poly $A(x) = a_0 + a_1x+…+a_{n-1}x^{n-1}$, we want to compute $A(x_1), …., A(x_{2n})$

- The key thing to note is that we get to choose the $2n$ points $x_1,…,x_{2n}$.

- How to choose them?

- The crucial observation is to suppose $x_1,…,x_n$ are opposite of $x_{n+1},…,x_{2n}$

- $x_{n+1} = -x_1, …, x_{2n} = -x_n$

- Suppose our $2n$ points satisfy this $\pm$ property

- We will see how this property will be useful.

FFT: splitting $A(x)$

Look at $A(x_i)$ and $A(x_{n+i}) = A(-x_i)$; let's break these up into even and odd terms.

- Even terms are of the form $a_{2k}x^{2k}$

- Since the powers are even, these terms are the same in $A(x_i)$ and $A(x_{n+i})$.

- Odd terms are of the form $a_{2k+1}x^{2k+1}$

- Since the powers are odd, these terms have opposite signs in $A(x_i)$ and $A(x_{n+i}) = A(-x_i)$.

(Note, this was super confusing for me, but, just read on)

So, it makes sense to split up $A(x)$ into odd and even terms. So, lets partition the coefficients for the polynomial into:

- $a_{even} = (a_0, a_2, …, a_{n-2})$

- $a_{odd} = (a_1, a_3, …, a_{n-1})$

FFT: Even and Odd

Again, given $A(x)= a_0 + a_1 x + … + a_{n-1}x^{n-1}$,

\[\begin{aligned} A_{even}(y) &= a_0 + a_2y + a_4y^2 + ... + a_{n-2}y^{\frac{n-2}{2}} \\ A_{odd}(y) &= a_1 + a_3y + a_5y^2 + ... + a_{n-1}y^{\frac{n-2}{2}} \\ \end{aligned}\]

Notice that both have degree $\frac{n}{2}-1$. Then, (take some time to convince yourself)

\[A(x) = A_{even} (x^2) + x A_{odd}(x^2)\]

Can you see the divide and conquer solution? We start with a polynomial of size $n$, and split it into two polynomials of size $\frac{n}{2}$: $A_{even}$ and $A_{odd}$.

- We reduce the degree of the polynomial from $n-1$ to $\frac{n-2}{2}$.

- However, if we need $A(x)$ at $2n$ points, we still need $A_{even}$ and $A_{odd}$ at the squares of these $2n$ points.

- So while the degree went down by a factor of two, the number of points we need to evaluate has not gone down.

- This is when we will make use of the $\pm$ property.
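
If the identity $A(x) = A_{even}(x^2) + x A_{odd}(x^2)$ is hard to believe, it can be checked numerically. The sketch below uses hypothetical helper names (`evaluate`, `split_evaluate`) and assumes the coefficient list has even length.

```python
def evaluate(coeffs, x):
    """Evaluate a_0 + a_1 x + ... + a_{n-1} x^{n-1} using Horner's rule."""
    result = 0
    for c in reversed(coeffs):
        result = result * x + c
    return result

def split_evaluate(coeffs, x):
    """Evaluate A(x) as A_even(x^2) + x * A_odd(x^2)."""
    a_even = coeffs[0::2]  # a_0, a_2, a_4, ...
    a_odd = coeffs[1::2]   # a_1, a_3, a_5, ...
    y = x * x
    return evaluate(a_even, y) + x * evaluate(a_odd, y)
```

Note that `split_evaluate(a, x)` and `split_evaluate(a, -x)` evaluate $A_{even}$ and $A_{odd}$ at the same point $y = x^2$; only the sign in front of the odd part changes.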

FFT: Recursion

Let’s suppose that the $2n$ points that we want to evaluate satisfy the $\pm$ property: $x_1,…,x_n$ are opposite of $x_{n+1},…, x_{2n}$

\[\begin{aligned} A(x_i) &= A_{even}(x_i^2) + x_i A_{odd}(x_i^2) \\ A(x_{n+i}) &= \underbrace{A(-x_i)}_{opposite} = A_{even}(x_i^2) - x_i A_{odd}(x_i^2) \end{aligned}\]

Notice that the values $A_{even}(x_i^2)$ and $A_{odd}(x_i^2)$ are reused.

Given $A_{even}(y_1), …, A_{even}(y_n)$ and $A_{odd}(y_1), …, A_{odd}(y_n)$ for $y_1 = x_1^2, …, y_n=x_n^2$, in $O(n)$ time we get $A(x_1),…,A(x_{2n})$.

FFT: summary

To get $A(x)$ of degree $\leq n-1$ at $2n$ points $x_1,…,x_{2n}$

- Define $A_{even}(y), A_{odd}(y)$ of degree $\leq \frac{n}{2}-1$

- Recursively evaluate at n points, $y_1 = x_1^2 = x_{n+1}^2, …, y_n = x_n^2 = x_{2n}^2$

- In $O(n)$ time, get $A(x)$ at $x_1,…,x_{2n}$.

This gives us a recursion of

\[T(n) = 2T(\frac{n}{2}) + O(n) = O(nlogn)\]

FFT: Problem

Note that we need to choose numbers such that $x_{n+i} = -x_i$ for $i \in [1,n]$.

Then the points we consider at the next level are the squares of these $2n$ points, $y_1 = x_1^2, …, y_n = x_n^2$, and we want these points to also satisfy the $\pm$ property.

But, what happens at the next level?

- $y_1 = - y_{\frac{n}{2}+1}, …, y_{\frac{n}{2}} = -y_n$

- So, we need $x_1^2 = - x^2_{\frac{n}{2}+1}$

- Wait a minute, but how can a positive number $x_1^2$ be equal to a negative number?

- This is where complex numbers come in.

To be honest, I am super confused, I ended up watching this youtube video:

Complex numbers

A complex number $z = a+bi$ can be represented as the point $(a,b)$ in the complex plane, or in polar coordinates $(r,\theta)$. Note that for some operations such as multiplication, polar coordinates are more convenient.

How to convert between the complex plane and polar coordinates?

\[(a,b) = (r cos\theta, r sin\theta)\]

- $r = \sqrt{a^2 + b^2}$

- $\theta$ is a little more complicated, it depends on which quadrant of the complex plane you are in.

There is also Euler’s formula:

\[re^{i\theta} = r(cos\theta + isin\theta)\]

So, multiplying in polar form is really easy:

\[r_1e^{i\theta_1}r_2e^{i\theta_2} = (r_1r_2)e^{i(\theta_1 + \theta_2)}\]

complex roots

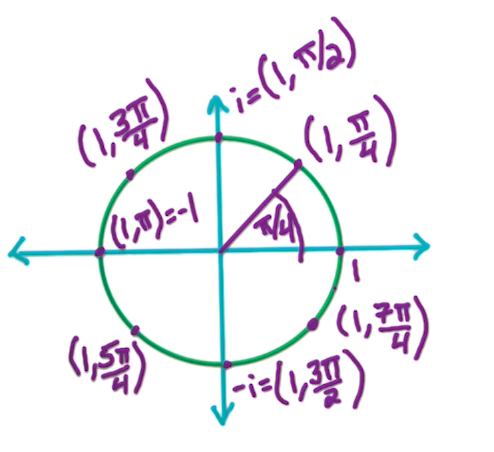

- When n=2, roots are 1, -1

- When n=4, roots are 1, -1, i, -i

In general, the n-th roots of unity $z$ where $z^n = 1$ is $(1, 2\pi j)$ for integer $j$. $2\pi j$ is on the positive real axis, as $2\pi$ is one “loop” around the circle.

Note, since $r =1, r^n=1$ so they basically form a circle with radius 1 from the origin. Then,

\[n\theta = 2\pi j \Rightarrow \theta \frac{2\pi j}{n}\]So the $n^{th}$ roots notation can be expressed as

\[(1, \frac{2\pi}{n}j), j \in [0,...,n-1]\]We re-write this as:

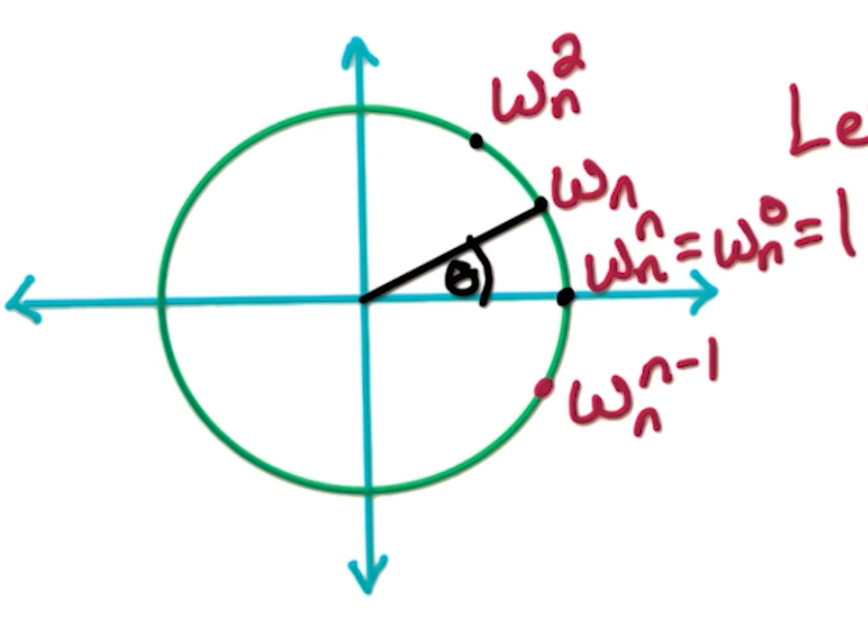

\[\omega_n = (1, \frac{2\pi}{n}) = e^{2\pi i /n }\]With that, we can formally define the the $n^{th}$ roots of unity, $\omega_n^0, \omega_n^1, …., \omega_n^{n-1}$.

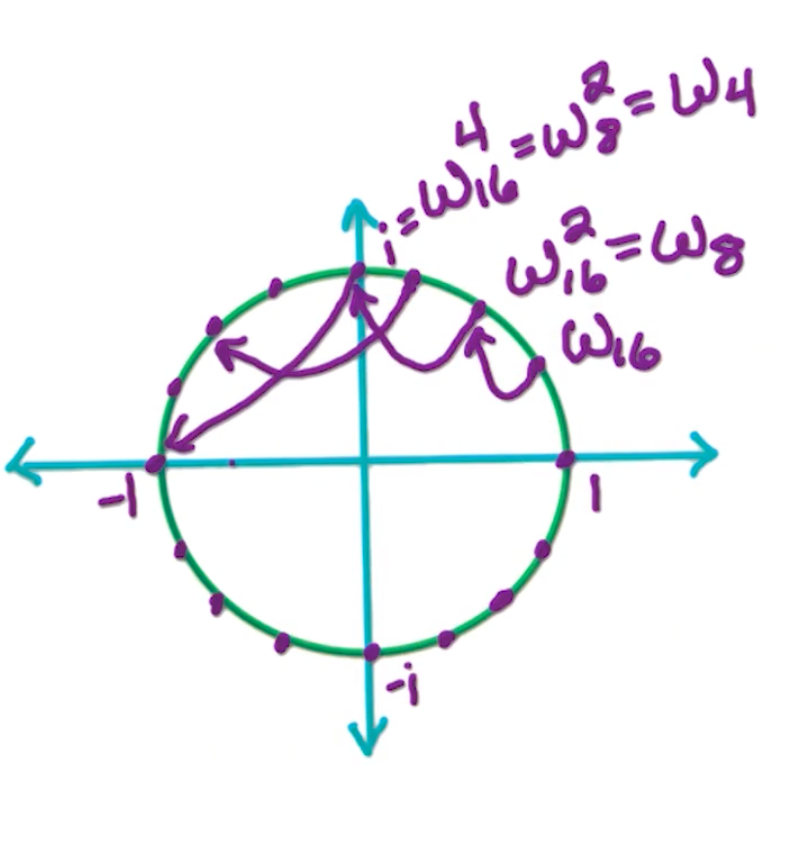

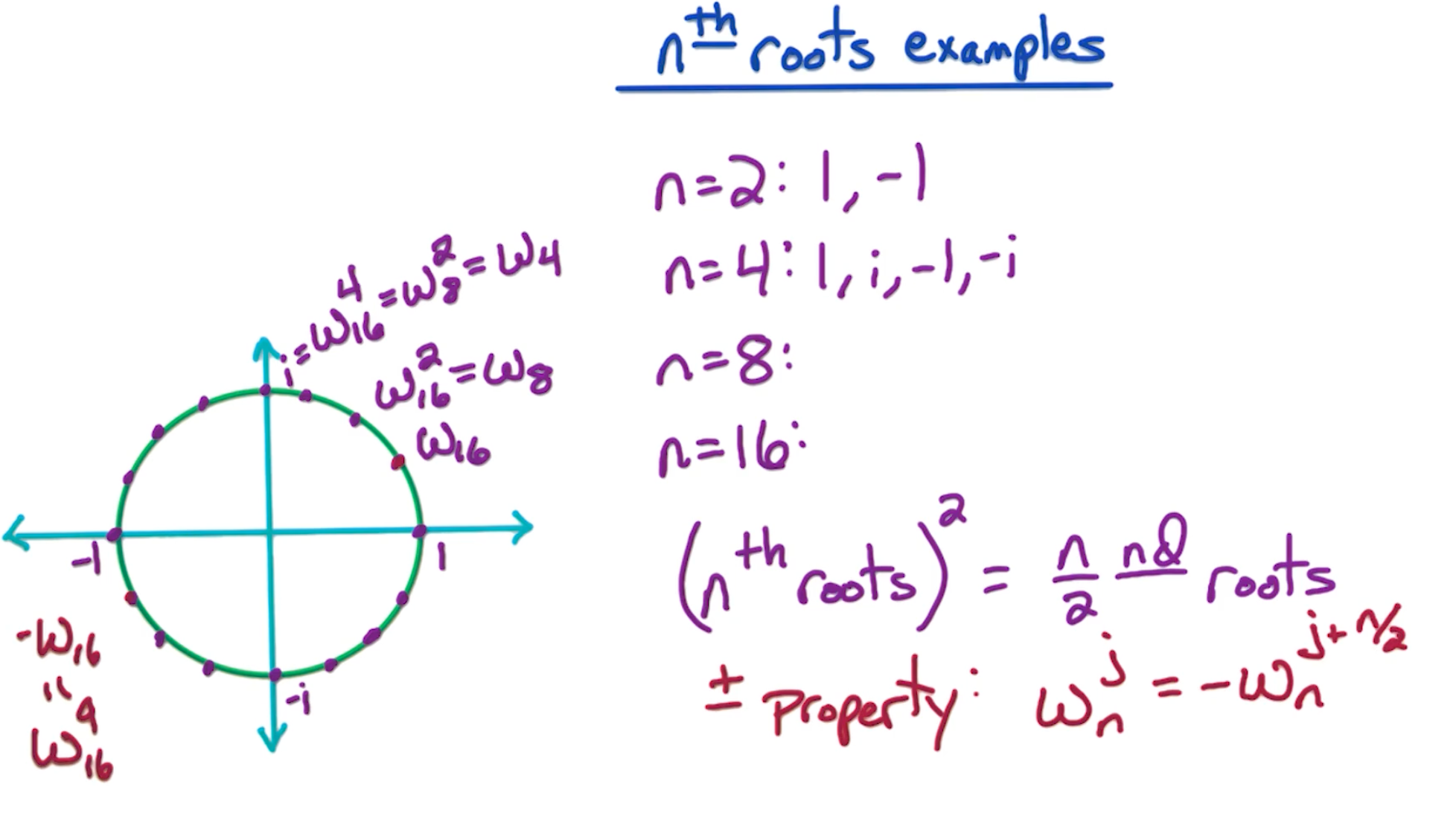

\[\omega_n^j = e^{2\pi ij /n } = (1, \frac{2\pi j}{n})\]

Suppose we want 16 roots, we can see the circle being divided into 16 different parts.

And, note the consequences:

- $(n^{th} \text{ roots})^2 = \frac{n}{2}^{th} \text{ roots}$

- e.g. $\omega_{16}^2 = \omega_8$

- Also, the $\pm$ property, where $\omega_{16}^1 = -\omega_{16}^9$

key properties

- The first property - For even $n$, satisfy the $\pm$ property

- first $\frac{n}{2}$ are opposite of last $\frac{n}{2}$

- $\omega_n^0 = -\omega_n^{n/2}$

- $\omega_n^1 = -\omega_n^{n/2+1}$

- $…$

- $\omega_n^{n/2 -1} = -\omega_n^{n/2 + n/2 - 1} = -\omega_n^{n-1}$

- The second property, for $n=2^k$,

- $(n^{th} \text{ roots})^2 = \frac{n}{2}^{th} \text{ roots}$

- $(\omega_n^j)^2 = (1, \frac{2\pi}{n} j)^2 = (1, \frac{2\pi}{n/2} j) = \omega_{n/2}^j$

- $(\omega_n^{n/2 +j})^2 = (\omega_n^j)^2 = \omega_{n/2}^j$

So why do we need this property that the $n^{th}$ roots squared are the $\frac{n}{2}^{th}$ roots? Well, we’re going to take this polynomial $A(x)$, and we want to evaluate it at the $n^{th}$ roots. Now these $n^{th}$ roots satisfy the $\pm$ property, so we can do a divide and conquer approach.

But then, what do we need? We need to evaluate $A_{even}$ and $A_{odd}$ at the squares of these $n^{th}$ roots, which will be the $\frac{n}{2}^{th}$ roots. So this subproblem is of the exact same form as the original problem. In order to evaluate $A(x)$ at the $n^{th}$ roots, we need to evaluate these two subproblems, $A_{even}$ and $A_{odd}$, at the $\frac{n}{2}^{th}$ roots. And then we can recursively continue this algorithm.
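
Both key properties can be checked numerically with Python's `cmath` module. This is only an illustrative sketch; the helper name `roots_of_unity` and the choice $n = 16$ are assumptions, and comparisons use a small tolerance because of floating point error.

```python
import cmath

def roots_of_unity(n):
    """Return the n-th roots of unity: omega_n^0, ..., omega_n^(n-1)."""
    return [cmath.exp(2j * cmath.pi * j / n) for j in range(n)]

n = 16
w = roots_of_unity(n)
half = roots_of_unity(n // 2)

# Property 1 (plus-minus): omega_n^j = -omega_n^(n/2 + j)
pm_ok = all(cmath.isclose(w[j], -w[j + n // 2], abs_tol=1e-12)
            for j in range(n // 2))

# Property 2: squaring the n-th roots gives the (n/2)-th roots
sq_ok = all(cmath.isclose(w[j] ** 2, half[j % (n // 2)], abs_tol=1e-12)
            for j in range(n))
```

Both `pm_ok` and `sq_ok` come out as `True`, up to the stated tolerance.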

FFT Core

Again, the input is $a= (a_0, a_1, …, a_{n-1})$ for polynomial $A(x)$, where $n$ is a power of 2 and $\omega$ is an $n^{th}$ root of unity. The desired output is $A(\omega^0), A(\omega^1), …, A(\omega^{n-1})$

We let $\omega = \omega_n = (1, \frac{2\pi}{n}) = e^{2\pi i/n}$

And the outline of FFT as follows:

- if $n=1$, return $A(1)$

- let $a_{even} = (a_0, a_2, …, a_{n-2}), a_{odd} = (a_1, …, a_{n-1})$

- Call $FFT(a_{even},\omega^2)$ get $A_{even}(\omega^0), A_{even}(\omega^2) , …, A_{even}(\omega^{n-2})$

- Call $FFT(a_{odd},\omega^2)$ get $A_{odd}(\omega^0), A_{odd}(\omega^2) , …, A_{odd}(\omega^{n-2})$

- Recall that if $\omega = \omega_n$, then $(\omega^j_n)^2 = \omega_{n/2}^j$

- In other words, the square of n roots of unity gives us $n/2$ roots of unity.

- For $j = 0 \rightarrow \frac{n}{2} -1$:

- $A(\omega^j) = A_{even}(\omega^{2j}) + \omega^j A_{odd}(\omega^{2j})$

- $A(\omega^{\frac{n}{2}+j}) = A(-\omega^j) = A_{even}(\omega^{2j}) - \omega^j A_{odd}(\omega^{2j})$

- Return $(A(\omega^0), A(\omega^1), …, A(\omega^{n-1}))$

We can write this in a more concise manner:

- if $n=1$, return $(a_0)$

- let $a_{even} = (a_0, a_2, …, a_{n-2}), a_{odd} = (a_1, …, a_{n-1})$

- Call $FFT(a_{even},\omega^2) = (s_0, s_1, …, s_{\frac{n}{2}-1})$

- Call $FFT(a_{odd},\omega^2) = (t_0, t_1, …, t_{\frac{n}{2}-1})$

- For $j = 0 \rightarrow \frac{n}{2} -1$:

- $r_j = s_j + \omega^j t_j$

- $r_{\frac{n}{2}+j} = s_j - \omega^j t_j$

- Return $(r_0, r_1, …, r_{n-1})$

The running time is given by the recurrence $T(n) = 2T(n/2) + O(n) = O(n log n)$
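
The concise outline translates almost line by line into Python. This is a minimal recursive sketch (not an optimized FFT), assuming `len(a)` is a power of 2 and that `omega` is passed in as $e^{2\pi i/n}$.

```python
import cmath

def fft(a, omega):
    """Evaluate the polynomial with coefficients a at omega^0, ..., omega^(n-1)."""
    n = len(a)
    if n == 1:
        return [a[0]]
    s = fft(a[0::2], omega * omega)  # A_even at the (n/2)-th roots
    t = fft(a[1::2], omega * omega)  # A_odd at the (n/2)-th roots
    r = [0] * n
    w = 1  # holds omega^j
    for j in range(n // 2):
        r[j] = s[j] + w * t[j]
        r[j + n // 2] = s[j] - w * t[j]
        w *= omega
    return r
```

For $A(x) = 1 + 2x + 3x^2 + 4x^3$ and $\omega_4 = i$, `fft([1, 2, 3, 4], cmath.exp(2j * cmath.pi / 4))` returns values close to $A(1) = 10$, $A(i) = -2-2i$, $A(-1) = -2$, $A(-i) = -2+2i$.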

Inverse FFT view

We first express the forward direction:

For point $x_j$, $A(x_j) = a_0 + a_1x_j + a_2x_j^2 + … + a_{n-1}x_j^{n-1}$

For points $x_0, x_1, …, x_{n-1}$:

\[\begin{bmatrix} A(x_0) \\ A(x_1) \\ \vdots \\ A(x_{n-1}) \end{bmatrix} = \begin{bmatrix} 1 & x_0 & x_0^2 & ... & x_0^{n-1} \\ 1 & x_1 & x_1^2 & ... & x_1^{n-1} \\ & & \ddots & & \\ 1 & x_{n-1} & x_{n-1}^2 & ... & x_{n-1}^{n-1} \\ \end{bmatrix} \begin{bmatrix} a_0 \\ a_1\\ \vdots \\ a_{n-1} \end{bmatrix}\]

We let $x_j = \omega_n^j$: (Note anything to the power 0 is 1)

\[\begin{bmatrix} A(1) \\ A(\omega_n) \\ A(\omega_n^2)\\ \vdots \\ A(\omega_n^{n-1}) \end{bmatrix} = \begin{bmatrix} 1 & 1 & 1 & ... & 1 \\ 1 & \omega_n & \omega_n^2 & ... & \omega_n^{n-1} \\ 1 & \omega_n^2 & \omega_n^4 & ... & \omega_n^{2(n-1)} \\ & & \ddots & & \\ 1 & \omega_n^{n-1} & \omega_n^{2(n-1)} & ... & \omega_n^{(n-1)(n-1)} \\ \end{bmatrix} \begin{bmatrix} a_0 \\ a_1 \\ a_2 \\ \vdots \\ a_{n-1} \end{bmatrix}\]

Two interesting properties:

- The matrix is symmetric

- The matrix is made up of functions of $\omega_n$

We denote the above as

\[A = M_n(\omega_n) a = FFT(a, \omega_n)\]

And the inverse can be calculated as:

\[M_n(\omega_n)^{-1} A = a\]

Without proof, it turns out that $M_n(\omega_n)^{-1} = \frac{1}{n}M_n(\omega_n^{-1})$ (feel free to watch the proof yourself).

What is $\omega_n^{-1}$? It is the number such that $\omega_n \times \omega_n^{-1} = 1$.

The inverse turns out to be $\omega_n^{n-1}$, since $\omega_n \times \omega_n^{n-1} = \omega_n^n = \omega_n^0 = 1$.

So,

\[M_n(\omega_n)^{-1} = \frac{1}{n}M_n(\omega_n^{-1}) = \frac{1}{n}M_n(\omega_n^{n-1})\]

We did all this so that the inverse FFT, given $A$, is simply:

\[na = M_n(\omega_n^{n-1}) A = FFT(A, \omega_n^{n-1}) \\ \therefore a = \frac{1}{n} FFT(A, \omega_n^{n-1})\]

Poly mult using FFT

Input: Coefficients $a = (a_0, …, a_{n-1})$ and $b = (b_0, …, b_{n-1})$

Output: $C(x) = A(x)B(x)$ where $c = (c_0, …, c_{2n-2})$

- FFT$(a,\omega_{2n}) = (r_0, …, r_{2n-1})$, with $a$ padded with zeros to length $2n$

- FFT$(b,\omega_{2n}) = (s_0, …, s_{2n-1})$, with $b$ padded with zeros to length $2n$

- for $j = 0 \rightarrow 2n-1$

- $t_j = r_j \times s_j$

- Have $C(x)$ at the $2n^{th}$ roots of unity and run inverse FFT

- $(c_0, …, c_{2n-1}) = \frac{1}{2n}FFT(t, \omega_{2n}^{2n-1})$, where $c_{2n-1} = 0$
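
Putting the pieces together, the whole pipeline can be sketched as below. The names `fft` and `poly_multiply` are illustrative, the input length $n$ is assumed to be a power of 2, and the final rounding assumes integer coefficients (it is only there to clean up floating point error).

```python
import cmath

def fft(a, omega):
    """Recursive FFT: evaluate a at omega^0, ..., omega^(len(a)-1)."""
    n = len(a)
    if n == 1:
        return [a[0]]
    s = fft(a[0::2], omega * omega)
    t = fft(a[1::2], omega * omega)
    r = [0] * n
    w = 1
    for j in range(n // 2):
        r[j] = s[j] + w * t[j]
        r[j + n // 2] = s[j] - w * t[j]
        w *= omega
    return r

def poly_multiply(a, b):
    """Multiply two integer polynomials given as length-n coefficient lists."""
    n = len(a)
    size = 2 * n
    omega = cmath.exp(2j * cmath.pi / size)  # omega_{2n}
    r = fft(list(a) + [0] * n, omega)        # pad a to length 2n
    s = fft(list(b) + [0] * n, omega)        # pad b to length 2n
    t = [rj * sj for rj, sj in zip(r, s)]    # C at the 2n-th roots of unity
    c = fft(t, omega ** (size - 1))          # inverse FFT, before dividing by 2n
    return [round((cj / size).real) for cj in c[:size - 1]]
```

For example, `poly_multiply([1, 2], [3, 4])` gives `[3, 10, 8]`, the same as the naive convolution.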

Graphs

Strongly Connected Components (GR1)

Here is a recap on DFS (for an undirected graph)

    DFS(G):
        input G=(V,E) in adjacency list representation
        output: vertices labeled by connected components
        cc = 0
        for all v in V, visited(v) = False, prev(v) = NULL
        for all v in V:
            if not visited(v):
                cc++
                Explore(v)

Now lets define Explore:

    Explore(z):
        ccnum(z) = cc
        visited(z) = True
        for all (z,w) in E:
            if not visited(w):
                Explore(w)
                prev(w) = z

The ccnum is the connected component number of $z$.

The overall running time is $O(n+m)$, where $n = \lvert V \rvert$ and $m = \lvert E \rvert$. This is because you visit each node once and, at each node, you try its edges; hence the total run time is linear in the number of nodes plus the number of edges.

What if our graph is now directed? We can still use DFS, but add pre or postorder numbers and remove the counters.

Notice the difference and the addition of the clock variable:

    DFS(G):
        clock = 1
        for all v in V, visited(v) = False, prev(v) = NULL
        for all v in V:
            if not visited(v):
                Explore(v)

Now lets define Explore:

    Explore(z):
        pre(z) = clock; clock++
        visited(z) = True
        for all (z,w) in E:
            if not visited(w):
                Explore(w)
                prev(w) = z
        post(z) = clock; clock++
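
A runnable version of DFS with pre/post numbers might look like the following. It is a sketch under a few assumptions: the graph is a Python dict mapping every vertex to its list of out-neighbours, and the `pre` dictionary doubles as the visited set.

```python
def dfs(graph):
    """Return (pre, post, prev) dictionaries for a directed graph."""
    pre, post, prev = {}, {}, {}
    clock = 1

    def explore(z):
        nonlocal clock
        pre[z] = clock
        clock += 1
        for w in graph[z]:
            if w not in pre:  # w has not been visited yet
                prev[w] = z
                explore(w)
        post[z] = clock
        clock += 1

    for v in graph:
        if v not in pre:
            prev[v] = None  # v is the root of a new DFS tree
            explore(v)
    return pre, post, prev
```

On the path `{"B": ["A"], "A": ["D"], "D": []}`, the nodes get (pre, post) numbers B(1,6), A(2,5), D(3,4). Being recursive, this sketch is only suitable for small graphs because of Python's recursion limit.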

Here is an example:

Assuming we start at B, this is what our DFS looks like, with each node labeled as Node(pre,post):

A blue edge is one that was checked during DFS but not followed, because the node it points to had already been visited.

There are various type of edges, for a given edge $z \rightarrow w$:

- Tree edges such as $B\rightarrow A, A\rightarrow D$

- where $post(z) > post(w)$, because of how you recurse back up during DFS

- Back edges $E \rightarrow A, F\rightarrow B$

- $post(z) < post(w)$

- Edges that go back up to an ancestor.

- Forward edges $D \rightarrow G, B \rightarrow E$

- Same as the tree edges

- $post(z) > post(w)$

- Cross edges $F \rightarrow H, H \rightarrow G$

- $post(z) > post(w)$

Notice that only the back edges differ in terms of post order; all the other edge types have $post(z) > post(w)$.

Cycles

A graph $G$ has a cycle if and only if its DFS tree has a back edge.

Proof:

Given a cycle $a \rightarrow b \rightarrow c \rightarrow … \rightarrow j \rightarrow a$, let $i$ be the first node of the cycle that DFS explores. Every other node of the cycle is reachable from $i$, so the subtree (descendants) of $i$ must contain the node $i-1$ that precedes $i$ on the cycle, and the edge $i-1 \rightarrow i$ is a back edge.

For the other direction, it is obvious: consider a back edge $B \rightarrow A$. $B$ is a descendant of $A$, so the tree path from $A$ to $B$ together with the back edge forms a cycle.

Topological sorting

Topologically sorting a DAG (directed acyclic graph that has no cycles): order vertices so that all edges go from lower $\rightarrow$ higher. Recall that since it has no cycles, it has no back edges. So the post order numbers must be $post(z) > post(w)$ for any edge $z \rightarrow w$.

So, to do this, we can order vertices by decreasing post order number. Note that since we have $n$ vertices, the post order numbers lie between 1 and $2n$, so we can create an array of size $2n$ and insert the nodes according to their post order number. So, sorting by post order number takes $O(n)$ time.
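
Here is a small Python sketch of this idea. Instead of an array of size $2n$, it appends each vertex to a list when its post number would be assigned, which yields increasing post order directly. The example DAG is a hypothetical one, chosen only for illustration.

```python
def topological_order(graph):
    """Order the vertices of a DAG by decreasing post order number."""
    visited, finished = set(), []

    def explore(z):
        visited.add(z)
        for w in graph[z]:
            if w not in visited:
                explore(w)
        finished.append(z)  # appended in increasing post order

    for v in graph:
        if v not in visited:
            explore(v)
    return finished[::-1]   # decreasing post order

# Hypothetical DAG used only for illustration.
dag = {"X": ["Y"], "Y": ["Z", "U", "W"], "Z": ["W"], "U": [], "W": []}
order = topological_order(dag)
```

Every edge $z \rightarrow w$ of `dag` then goes from an earlier to a later position in `order`.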

What are the valid topological orders? $XY(ZWU\lvert ZUW\lvert UZW)$

Note, for instance, XYUWZ is not a valid topological order! Because there’s a directed edge from Z to W, Z must come before W in any valid topological ordering of this graph.

Analogy: Imagine you’re assembling a toy. Piece Y is needed for both piece Z and piece W. But, piece Z is also needed for piece W. You must attach Y to Z first, then Z to W. Even though Y is needed for W, you don’t attach it directly to W.

DAG Structure

- Source vertex: no incoming edges

- Highest post order

- Sink vertex = no outgoing edges

- Lowest post order

By now, you are probably thinking: what does all this have to do with strongly connected components (SCC)? We will show that it is possible to find them with two DFS runs.

Connectivity in DAG

Vertices $v$ & $w$ are strongly connected if there is a path $v \rightarrow w$ and $w \rightarrow v$

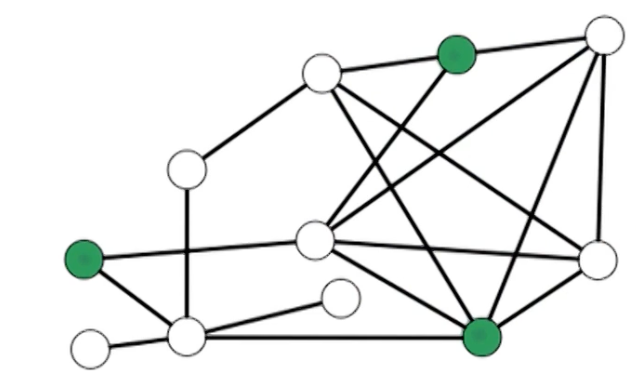

So, an SCC (strongly connected component) is a maximal set of strongly connected vertices.

Example:

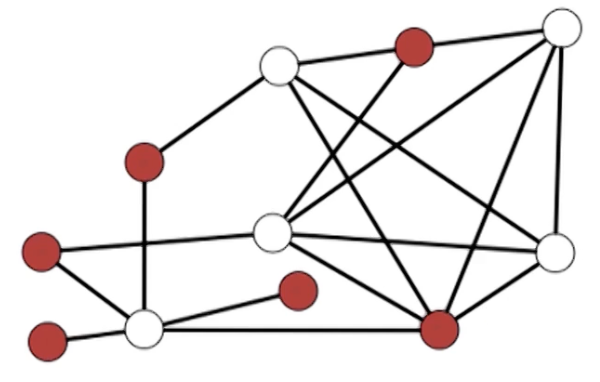

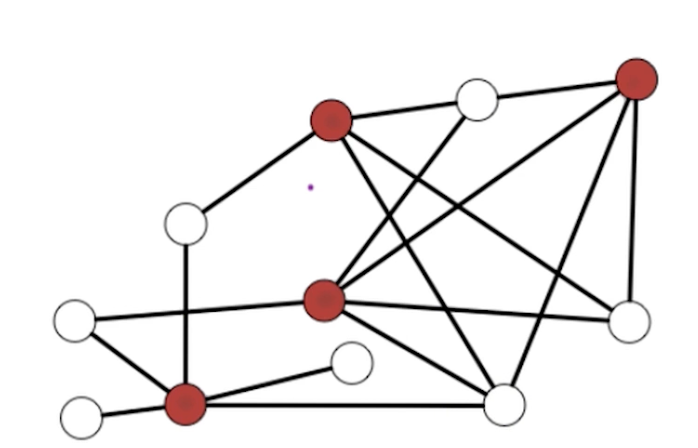

How many strongly connected components (SCC) does the graph have? 5

- `A` is an SCC by itself, since it can reach many other nodes but no other node can reach `A`

- `{H,I,J,K,L}` can reach each other

- `{C,F,G}`

- `{B,E}`

- `{D}`

We can simplify the above graph to the following meta graph:

Notice that this meta graph is a DAG and it is always the case. This should be obvious because if two strongly connected components are involved in a cycle, then they will be combined to form a bigger SCC.

So, every directed graph is a DAG of its strongly connected components. You can take any graph, break it up into SCCs and then topologically sort these SCCs so that all edges go left to right.

Motivation

There are many ways we could do this, such as starting with sink vertices or source vertices. Instead, we find a sink SCC $S$, output it, remove it, and repeat. It turns out that sink SCCs are easier to work with!

Take any $v \in S$, where $S$ is a sink SCC, and run Explore(v). For example, in the earlier graph, if we run Explore from any of the vertices in {H,I,J,K,L}, we will explore exactly these vertices and no others, because it is a sink SCC.

What if we pick a vertex in the source component? We end up exploring the whole graph, too bad! So, how can we be smart about this and find a vertex that lies in a sink component? If we can do so (somehow magically select a vertex in the sink SCC), then Explore is guaranteed to find exactly the nodes in the sink SCC.

So, how can we find such a vertex?

Recall that in a DAG, the vertex with the lowest postorder number is a sink.

In a general directed graph $G$, can we use the same property? Does the vertex $v$ with the lowest post order always lie in a sink SCC? HA, of course it's not true, do you think it will be that easy?

Notice that B has the lowest post order (3) but it belongs to the SCC {A,B}, which is not a sink SCC.

What about the other way around? Does $v$ with the highest post order always lie in a source SCC? Turns out, this is true! How can we make use of this? Simple, just reverse the graph! A source SCC of the reverse graph is a sink SCC of the original graph!

So, for directed $G=(V,E)$, look at $G^R = (V,E^R)$: a source SCC in $G^R$ = a sink SCC in $G$. So, we just flip the graph, run DFS, and take the vertex with the highest post order, which lies in a source SCC of $G^R$; that SCC is a sink SCC in $G$.

Example of SCC

Let's consider the same graph, but now we reverse it and start at node C.

We start from C in the reverse graph $G^R$ and reach {C, G, F, B, A, E}, then we proceed to {D} before going to {L}. You may think that the choice of starting vertex is important. Suppose you start at any vertex in {H,I,J,K,L}: it will be able to reach all vertices except {D}, so that starting vertex will still have the highest post number.

Then, we sort by decreasing post order and get the following order: L, K, J, I, H, D, C, B, E, A, G, F. So now, we run DFS on the original graph $G$, processing the vertices in this order.

- At the first step, we start at $L$, so we will reach `{L,K,J,I,H}`; label them as 1 and strike them out.

- We then visit $D$ and reach `{D}`; label it as 2 and strike it out.

- We do the same for $C$ and reach `{C,F,G}`; label them as 3 and strike them out.

- Then do the same for `{B,E}`, labeled as 4,

- and finally `{A}`, labeled as 5.

So, L, K, J, I, H, D, C, B, E, A, G, F is mapped to {1,1,1,1,1,2,3,4,4,5,3,3}. Another interesting observation: using the new labels {1,2,3,4,5}, we have the following graph:

Notice that in this metagraph, the edges go from $5 \rightarrow 1$. So, the labels {1,1,1,1,1,2,3,4,4,5,3,3} also give the topological order in reverse! So we can take any graph, run two iterations of DFS, find its SCCs, and structure these SCCs in topological order.

SCC algorithm

    SCC(G):
        input: directed G=(V,E) in adjacency list
        1. Construct G^R
        2. Run DFS on G^R
        3. Order V by decreasing post order number
        4. Run undirected connected components alg on G, visiting vertices in the order from step 3
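
The four steps can be sketched in Python as follows. The dict-of-lists graph representation and the function name `scc` are assumptions, and the recursion limits this sketch to small graphs.

```python
def scc(graph):
    """Label each vertex with its SCC number; label 1 is a sink SCC."""
    # Step 1: construct the reverse graph G^R.
    rev = {v: [] for v in graph}
    for v, neighbours in graph.items():
        for w in neighbours:
            rev[w].append(v)

    def explore(g, z, visited, out):
        visited.add(z)
        for w in g[z]:
            if w not in visited:
                explore(g, w, visited, out)
        out.append(z)  # appended at "post" time

    # Step 2: DFS on G^R, recording vertices in increasing post order.
    visited, order = set(), []
    for v in rev:
        if v not in visited:
            explore(rev, v, visited, order)

    # Steps 3 and 4: explore G in decreasing post order, one SCC per start.
    visited, label, cc = set(), {}, 0
    for v in reversed(order):
        if v not in visited:
            cc += 1
            component = []
            explore(graph, v, visited, component)
            for u in component:
                label[u] = cc
    return label
```

For the graph `{"A": ["B"], "B": ["A", "C"], "C": ["D"], "D": ["C"]}`, the sink SCC `{C, D}` is labeled 1 and `{A, B}` is labeled 2, so the labels follow the reverse topological order of the metagraph.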

Proof of claim:

Given two SCCs $S$ and $S’$ with an edge $v \rightarrow w$ where $v \in S$ and $w \in S’$, the claim is that the max post number in $S$ is always greater than the max post number in $S’$.

The first case is if DFS first reaches $z \in S’$: then we finish exploring all of $S’$ before moving to $S$ (there is no path from $S’$ back to $S$), so the post numbers in $S$ will be bigger.

The second case is if DFS first reaches $z \in S$: then $z$ is the root of a subtree containing all of $S \cup S’$, since we can travel to every vertex of $S$ and $S’$ from $z$. Since $z$ is the root of this subtree, it must have the highest post order number among them.

BFS & Dijkstras

DFS : connectivity

BFS:

input: $G=(V,E)$ & $s \in V$

output: for all $v \in V$, dist(v) = min number of edges from $s$ to $v$ and $prev(v)$.

Dijkstra’s:

input: $G=(V,E)$ & $s \in V$, $\ell(e) > 0 \forall e \in E$

output: $\forall v \in V$, dist(v) = length of shortest $s\rightarrow v$ path.

Note, Dijkstra uses a min-heap (a priority queue), where each insertion or removal takes $O(logn)$ time. So, the overall runtime for Dijkstra is $O((n+m)logn)$.
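
A compact way to sketch Dijkstra in Python is with the standard `heapq` module. Since `heapq` has no decrease-key operation, this version pushes a new entry whenever a distance improves and skips stale entries when they are popped; that is a common substitute, not necessarily how the lecture presents it. The graph representation (dict of `(neighbour, length)` lists) is an assumption.

```python
import heapq

def dijkstra(graph, s):
    """Return (dist, prev) for shortest paths from s; all lengths must be > 0."""
    dist = {v: float("inf") for v in graph}
    prev = {v: None for v in graph}
    dist[s] = 0
    heap = [(0, s)]
    while heap:
        d, v = heapq.heappop(heap)
        if d > dist[v]:
            continue  # stale entry: a shorter path to v was already found
        for w, length in graph[v]:
            if d + length < dist[w]:
                dist[w] = d + length
                prev[w] = v
                heapq.heappush(heap, (dist[w], w))
    return dist, prev
```

With `graph = {"s": [("a", 4), ("b", 1)], "b": [("a", 2)], "a": [("t", 1)], "t": []}`, the shortest path to `a` goes through `b` with length 3, and `t` is at distance 4.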

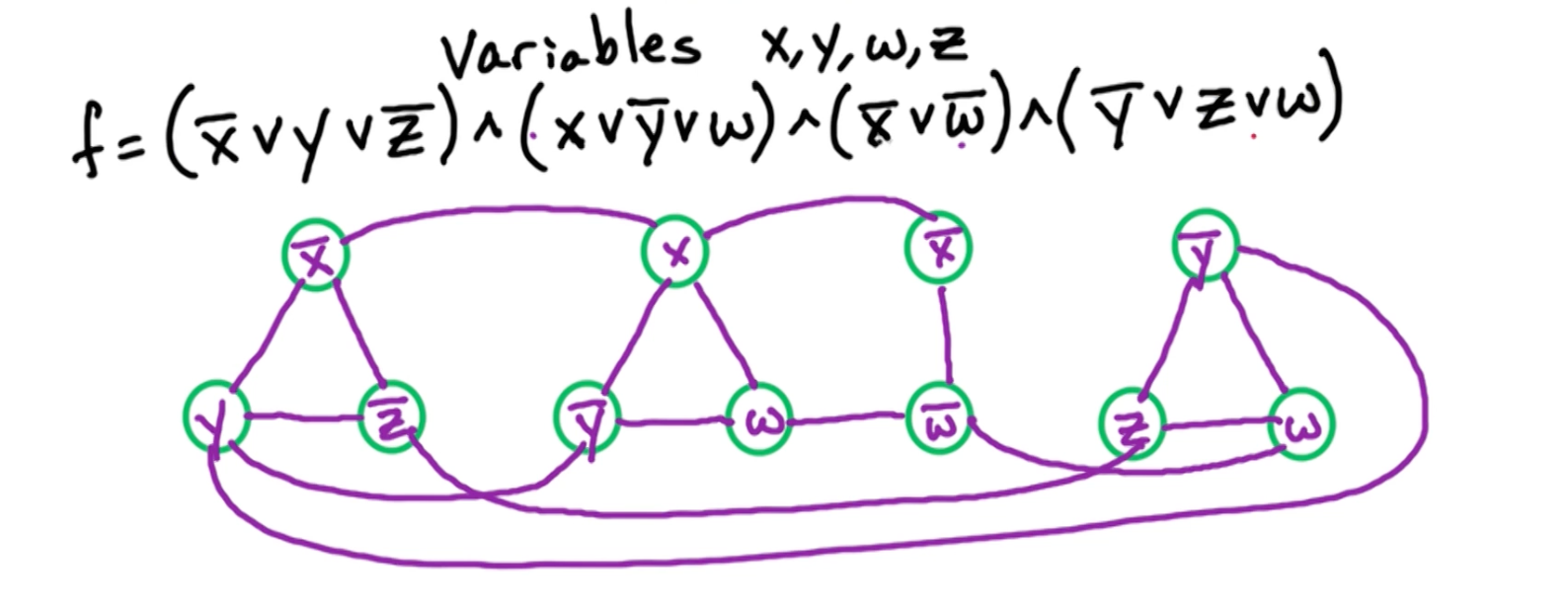

2-Satisfiability (GR2)

Boolean formula:

- $n$ variables with $x_1, …, x_n$

- $2n$ literals $x_1, \bar{x_1}, …, x_n, \bar{x_n}$ where $\bar{x_i} = \lnot x_i$

- We use $\land$ for the and condition and $\lor$ for the or condition.

CNF

Now, we define CNF (conjunctive normal form):

Clause: OR of several literals, e.g. $(x_3 \lor \bar{x_t} \lor \bar{x_1} \lor x_2)$

F in CNF: AND of $m$ clauses, e.g. $(x_2) \land (\bar{x_3} \lor x_4) \land (x_3 \lor \bar{x_t} \lor \bar{x_1} \lor x_2) \land (\bar{x_2} \lor \bar{x_1})$

Notice that for F to be true, each clause needs at least one literal to be true.

SAT

Input: formula f in CNF with $n$ variables and $m$ clauses

output: a satisfying assignment (assign T or F to each variable) if one exists, NO if none exists.

Example: $ f = (\bar{x_1} \lor \bar{x_2} \lor x_3) \land (x_2 \lor x_3) \land (\bar{x_3} \lor \bar{x_1}) \land (\bar{x_3})$

An assignment that works is $x_1 = F, x_2 = T, x_3 = F$.

K-SAT

For k-SAT, the input is a formula $f$ in CNF with $n$ variables and $m$ clauses, each of size $\leq k$. So the above formula $f$ is an example with $k = 3$. In general:

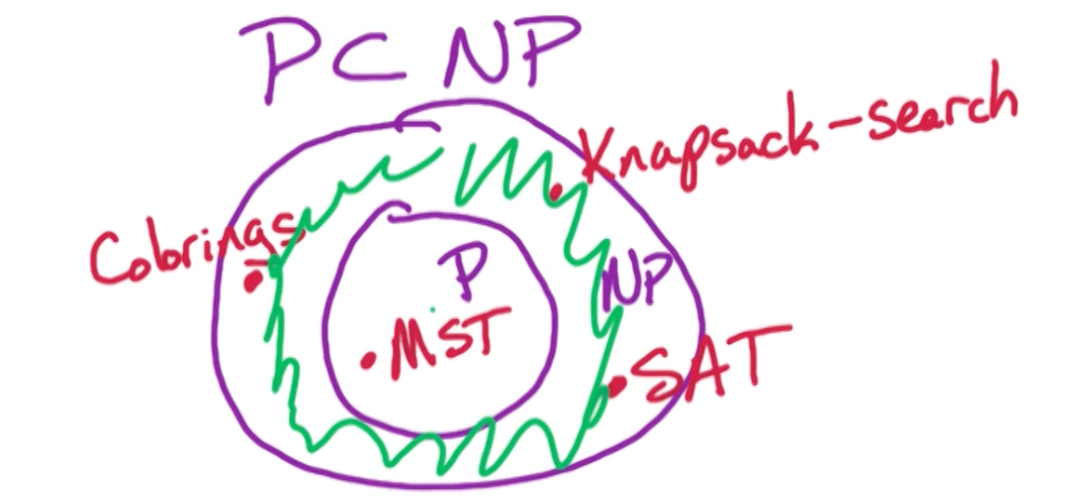

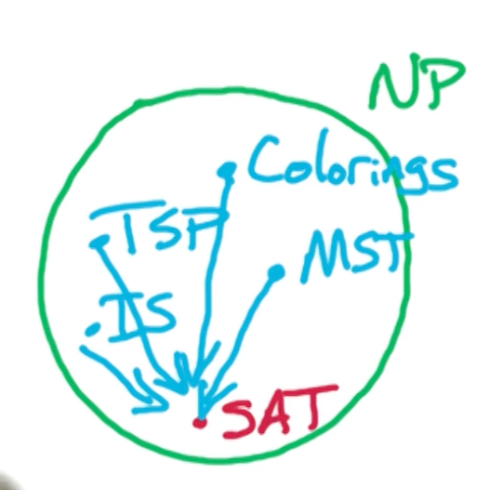

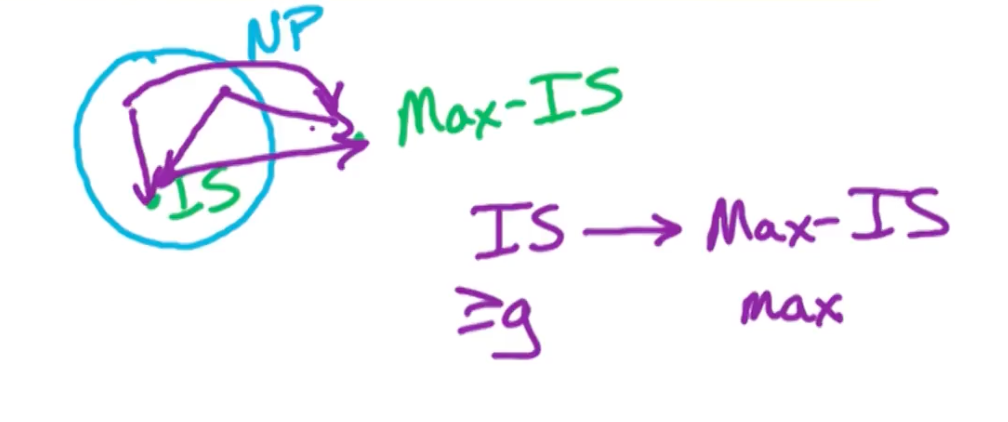

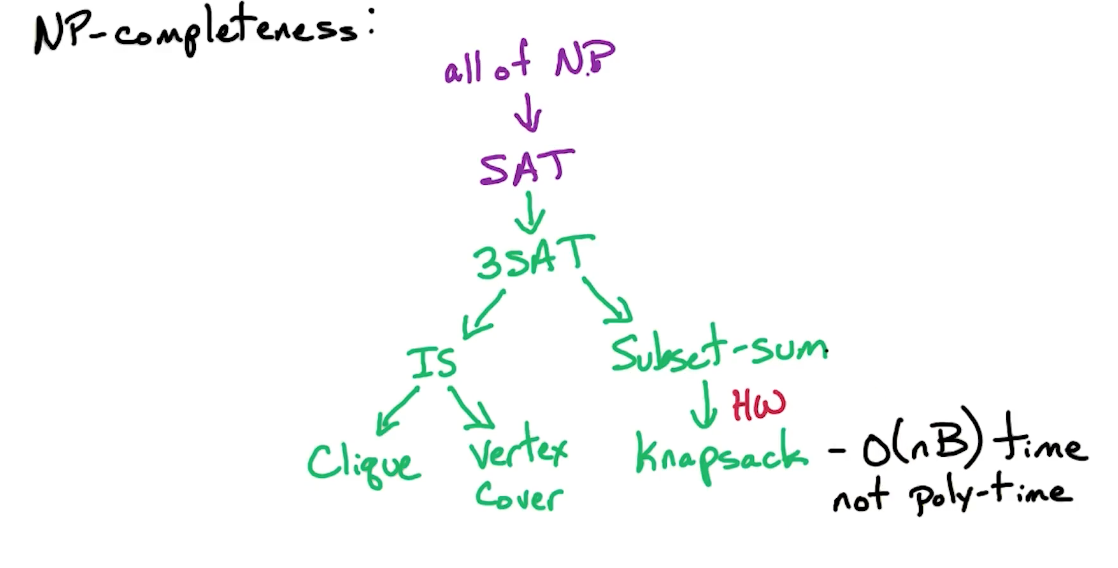

- SAT is NP-complete

- K-SAT is NP complete $\forall k \geq 3$

- Poly-time algorithm using SCC for 2-SAT

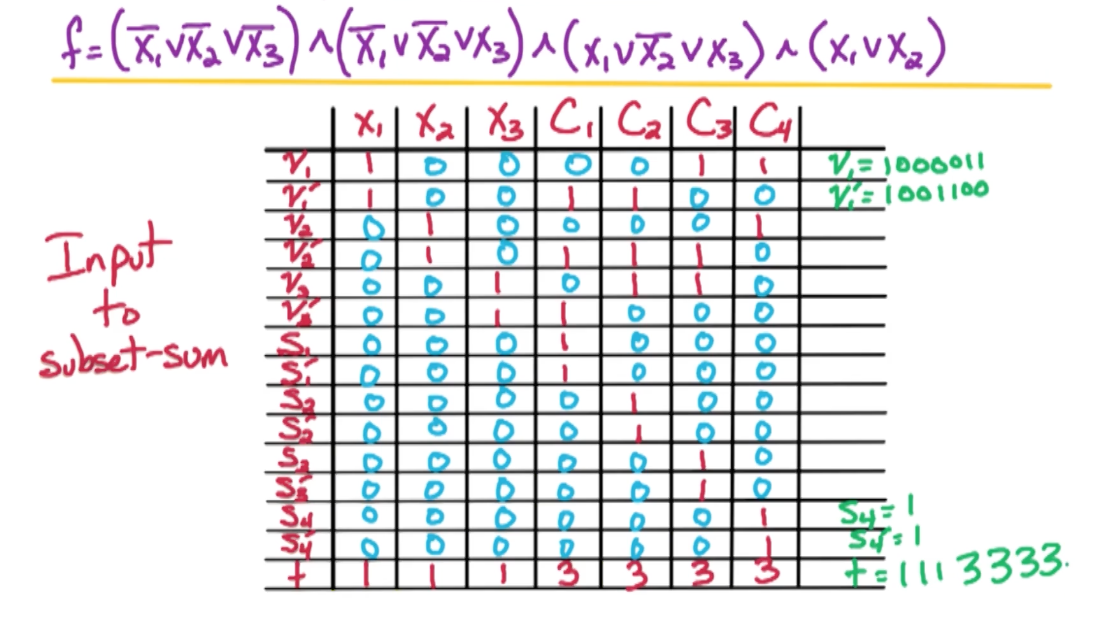

For example consider the following input f for 2-SAT:

\[f = (x_3 \lor \bar{x_2}) \land (\bar{x_1}) \land (x_1 \lor x_4) \land (\bar{x_4} \lor x_2) \land (\bar{x_3} \lor x_4)\]

We want to simplify unit clauses, i.e. clauses with 1 literal such as $(\bar{x_1})$. This is because there is only one way to satisfy $\bar{x_1}$: set $x_1 = F$.

- Take a unit clause, say literal $a_i$

- Satisfy it (set $a_i = T$)

- Remove clauses containing $a_i$ and drop $\bar{a_i}$ from the remaining clauses

- Let $f’$ be the resulting formula

For example:

\[\begin{aligned} f &= (x_3 \lor \bar{x_2}) \land (\cancel{\bar{x_1}}) \land (\cancel{x_1} \lor x_4) \land (\bar{x_4} \lor x_2) \land (\bar{x_3} \lor x_4) \\ f’ &= (x_3 \lor \bar{x_2}) \land (x_4) \land (\bar{x_4} \lor x_2) \land (\bar{x_3} \lor x_4) \end{aligned}\]

So, the original $f$ is satisfiable if and only if $f’$ is. Notice that there is now a unit clause $(x_4)$ and we can remove it in the same way. Eventually we are left with either an empty formula, or a formula where all clauses are of size 2.

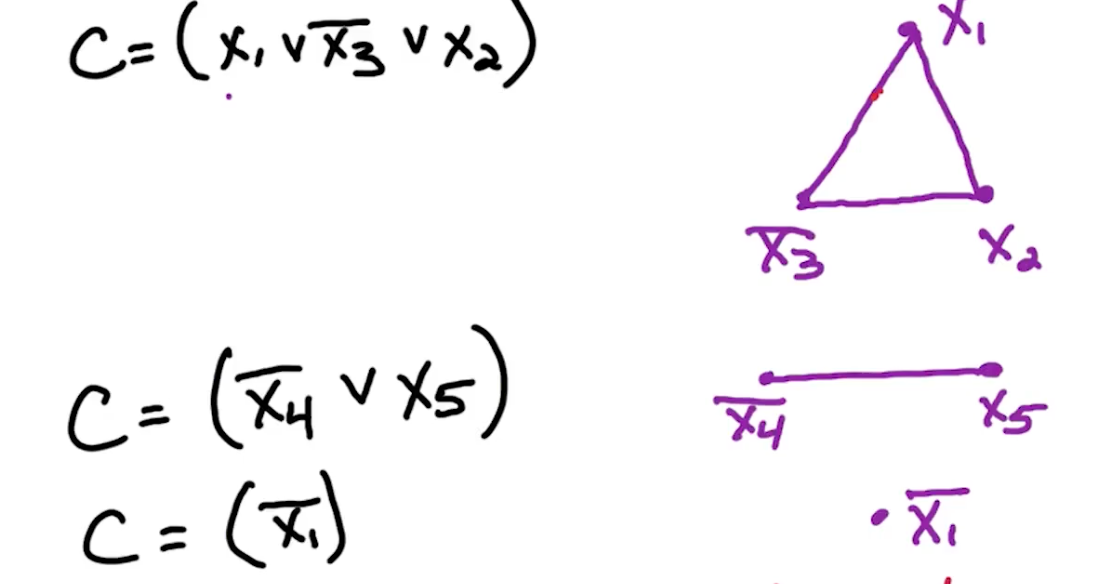

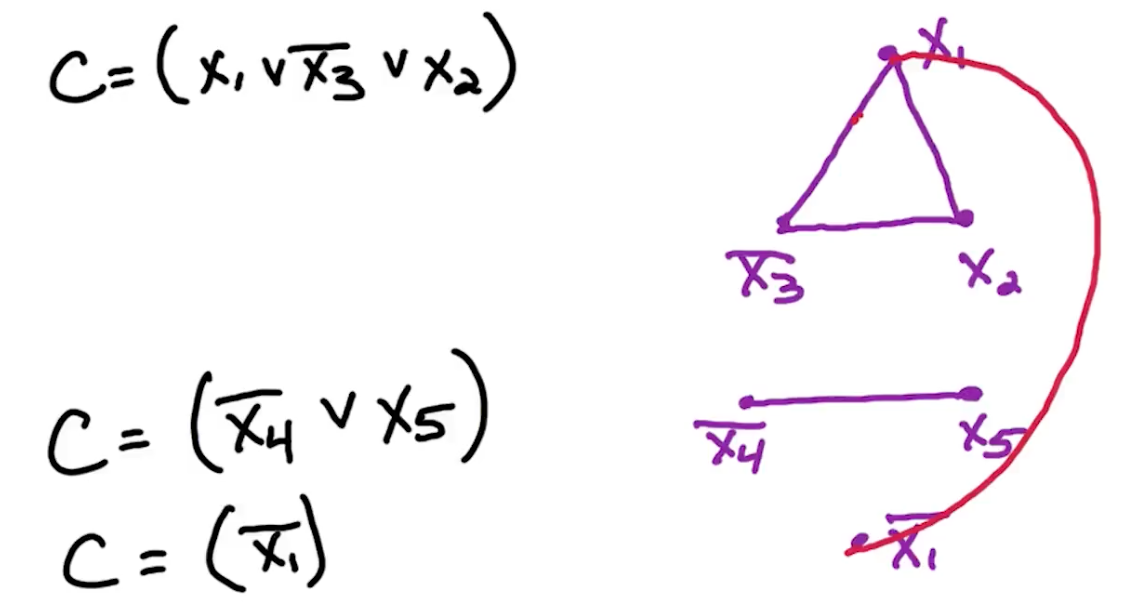

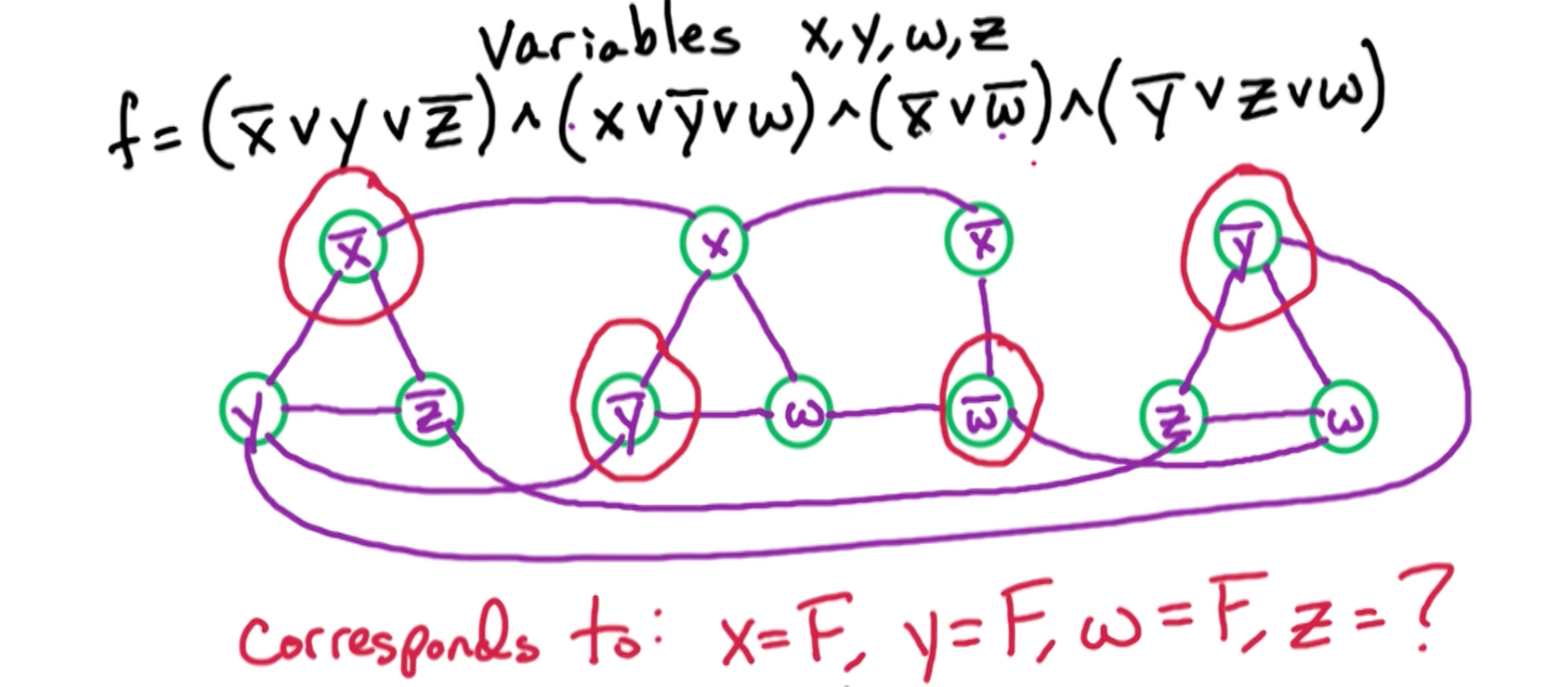

SAT-graph

Take $f$ with all clauses of size $=2$, $n$ variables and $m$ clauses, we create a directed graph:

- $2n$ vertices corresponding to $x_1, \bar{x_1}, …, x_n, \bar{x_n}$

- $2m$ edges corresponding to 2 “implications” per clause

Consider the following example: $f = (\bar{x_1} \lor \bar{x_2}) \land (x_2 \lor x_3) \land (\bar{x_3} \lor \bar{x_1})$

- Notice that if we set $x_1 = T \rightarrow x_2 = F$, and likewise $x_2 = T \rightarrow x_1 = F$

In general given $(\alpha \lor \beta)$, then you need $\bar{\alpha} \rightarrow \beta$ and $\bar{\beta} \rightarrow \alpha$

If we observe the graph, we notice that there is a path $x_1 \rightarrow \bar{x_1}$, so setting $x_1 = T$ leads to a contradiction. Setting $x_1 = F$ might still be ok. In general, if there are paths both $x_1 \rightarrow \bar{x_1}$ and $\bar{x_1} \rightarrow x_1$, then $f$ is not satisfiable, because $\bar{x_1}, x_1$ are in the same SCC.

In general:

- If for some $i$, $x_i, \bar{x_i}$ are in the same SCC, then $f$ is not satisfiable.

- If for all $i$, $x_i, \bar{x_i}$ are in different SCCs, then $f$ is satisfiable.

2-SAT Algo

- Take source scc $S’$ and set $S’ = F$

- Take sink scc $\bar{S’}$ and set $\bar{S’} = T$

- Once we have done this, we can remove all these literals from the graph!

This works because of a key fact: if $\forall i$, $x_i$ and $\bar{x_i}$ are in different SCCs, then $S$ is a sink SCC if and only if $\bar{S}$ is a source SCC.

    2SAT(f):
        1. Construct graph G for f
        2. Take a sink SCC S
            - Set S = T (and bar(S) = F)
            - remove S, bar(S)
            - repeat until empty
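
Below is a Python sketch of the whole 2-SAT procedure for clauses of size exactly 2. It assumes literals are encoded as nonzero integers (`i` for $x_i$, `-i` for $\bar{x_i}$), which is an illustrative convention. Rather than repeatedly removing sink SCCs, it labels the SCCs from sink to source with the two-DFS algorithm and sets a literal to T when its SCC is closer to the sink than that of its negation; this is a standard equivalent formulation, named here plainly as a substitution.

```python
def two_sat(n, clauses):
    """Return {variable: bool} satisfying all 2-literal clauses, or None."""
    literals = [l for i in range(1, n + 1) for l in (i, -i)]
    graph = {l: [] for l in literals}
    rev = {l: [] for l in literals}
    for a, b in clauses:
        # (a or b) gives the implications: not a -> b, and not b -> a
        for u, v in ((-a, b), (-b, a)):
            graph[u].append(v)
            rev[v].append(u)

    def explore(g, z, seen, out):
        seen.add(z)
        for w in g[z]:
            if w not in seen:
                explore(g, w, seen, out)
        out.append(z)

    # DFS on the reverse graph, recording increasing post order.
    seen, order = set(), []
    for l in literals:
        if l not in seen:
            explore(rev, l, seen, order)

    # Label SCCs of the original graph from sink (1) towards the sources.
    seen, label, cc = set(), {}, 0
    for l in reversed(order):
        if l not in seen:
            cc += 1
            component = []
            explore(graph, l, seen, component)
            for u in component:
                label[u] = cc

    assignment = {}
    for i in range(1, n + 1):
        if label[i] == label[-i]:
            return None  # x_i and its negation share an SCC
        assignment[i] = label[i] < label[-i]  # closer to the sink means T
    return assignment
```

For $f = (\bar{x_1} \lor \bar{x_2}) \land (x_2 \lor x_3) \land (\bar{x_3} \lor \bar{x_1})$, `two_sat(3, [(-1, -2), (2, 3), (-3, -1)])` returns a satisfying assignment.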

Proof:

The first claim is we show that path $\alpha \to \beta \iff \bar{\beta} \to \bar{\alpha}$

Take path $\alpha \to \beta$, say $\gamma_0 \to \gamma_1 \to … \to \gamma_l$ where $\gamma_0 = \alpha, \gamma_l = \beta$

Recall that $(\bar{\gamma_1} \lor \gamma_2)$ is represented in the graph as $(\gamma_1 \to \gamma_2)$, since if $\gamma_1 = T$ then $\gamma_2$ must also be $T$. $(\bar{\gamma_1} \lor \gamma_2)$ is also represented in the graph as $(\bar{\gamma_2} \to \bar{\gamma_1})$. This shows that $\bar{\gamma_0} \gets \bar{\gamma_1} \gets … \gets \bar{\gamma_l}$ which implies $\bar{\beta} \to \bar{\alpha}$ since $\gamma_0 = \alpha, \gamma_l = \beta$.

Using this claim, we can show 2 more things:

If $\alpha,\beta \in S$, then $\bar{\alpha}, \bar{\beta} \in \bar{S}$. This is true because if there are paths $\alpha \leftrightarrow \beta$ (since they are in the same SCC), then by the above claim there are paths $\bar{\beta} \leftrightarrow \bar{\alpha}$, so $\bar{\alpha}$ and $\bar{\beta}$ also belong to the same SCC.

It remains to show that if $S$ is a sink SCC, then $\bar{S}$ is a source SCC.

We take a sink SCC $S$. For $\alpha \in S$, there are no edges $\alpha \to \beta$ leaving $S$, which implies there are no edges $\bar{\beta} \to \bar{\alpha}$ entering $\bar{S}$. In other words, no outgoing edges from $\alpha$ means no incoming edges to $\bar{\alpha}$. This shows that $\bar{S}$ is a source SCC!

MST (GR3)

For the minimum spanning tree, we are going to go through Kruskal's algorithm but mainly focus on its correctness. Note that Kruskal's algorithm is a greedy algorithm which makes use of the cut property. This property is also useful in proving Prim’s algorithm.

MST algorithm

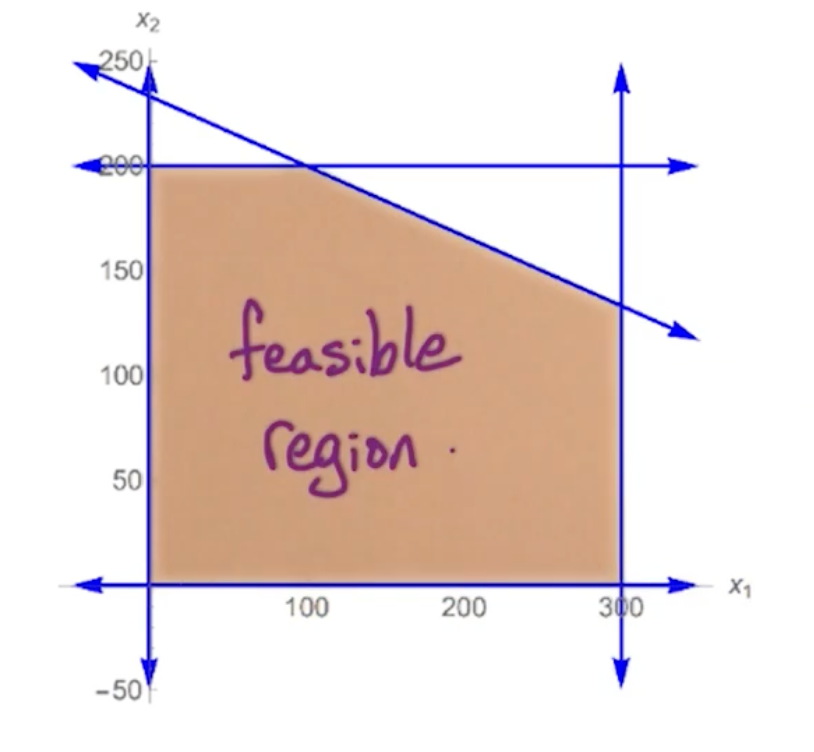

Given: undirected $G=(V,E)$ with weights $w(e)$ for $e\in E$

Goal: find a minimum size, connected subgraph of minimum weight. This connected subgraph is known as the minimum spanning tree (refer to it as $T$). So we want $T \subset E$ minimizing $w(T) = \sum_{e\in T} w(e)$

    Kruskals(G):
        input: undirected G = (V,E) with weights w(e)
        1. Sort E by increasing weight
        2. Set X = {} (the empty set)
        3. For e=(v,w) in E (in increasing order)
            if X U e does not have a cycle:
                X = X U e (U here denotes union)
        4. Return X

Runtime analysis:

- Step 1 takes $O(mlogn)$ time where $m = \lvert E \rvert , n = \lvert V \rvert$

- This was a little confusing to me: why did the lecture say $O(mlogm) = O(mlogn)$? It turns out that $m$ is at most $n^2$ (a fully connected graph), so $O(mlogm) = O(mlogn^2) = O(2mlogn) = O(mlogn)$.

- For step 3 (checking for a cycle), we can make use of the union-find data structure.

- Let $c(v)$ be the component containing $v$ in $(V,X)$

- Let $c(w)$ be the component containing $w$ in $(V,X)$

- If $c(v) \neq c(w)$ (they have different representatives), then adding $e$ does not create a cycle, so we add $e$ to $X$.

- We then apply union to both of them.

- Each union-find operation takes $O(logn)$ time

- Since we are doing it for all edges, step 3 takes $O(mlogn)$ time.
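
Here is how Kruskal's algorithm with union-find might look in Python. The sketch assumes vertices are numbered `0..n-1` and edges are given as `(weight, v, w)` tuples; it uses union by rank, which keeps each `find` at $O(logn)$.

```python
def kruskal(n, edges):
    """Return the list of MST edges for an undirected weighted graph."""
    parent = list(range(n))  # parent[v] == v means v is a representative
    rank = [0] * n

    def find(v):
        while parent[v] != v:
            v = parent[v]
        return v

    def union(rv, rw):
        # Attach the shallower tree under the deeper one (union by rank).
        if rank[rv] < rank[rw]:
            rv, rw = rw, rv
        parent[rw] = rv
        if rank[rv] == rank[rw]:
            rank[rv] += 1

    X = []
    for weight, v, w in sorted(edges):  # increasing weight
        rv, rw = find(v), find(w)
        if rv != rw:                    # c(v) != c(w): no cycle is created
            X.append((weight, v, w))
            union(rv, rw)
    return X
```

For example, with edges `[(1, 0, 1), (2, 1, 2), (3, 0, 2), (4, 2, 3)]` on 4 vertices, the edge of weight 3 is skipped because it would close a cycle, and the MST has total weight 7.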

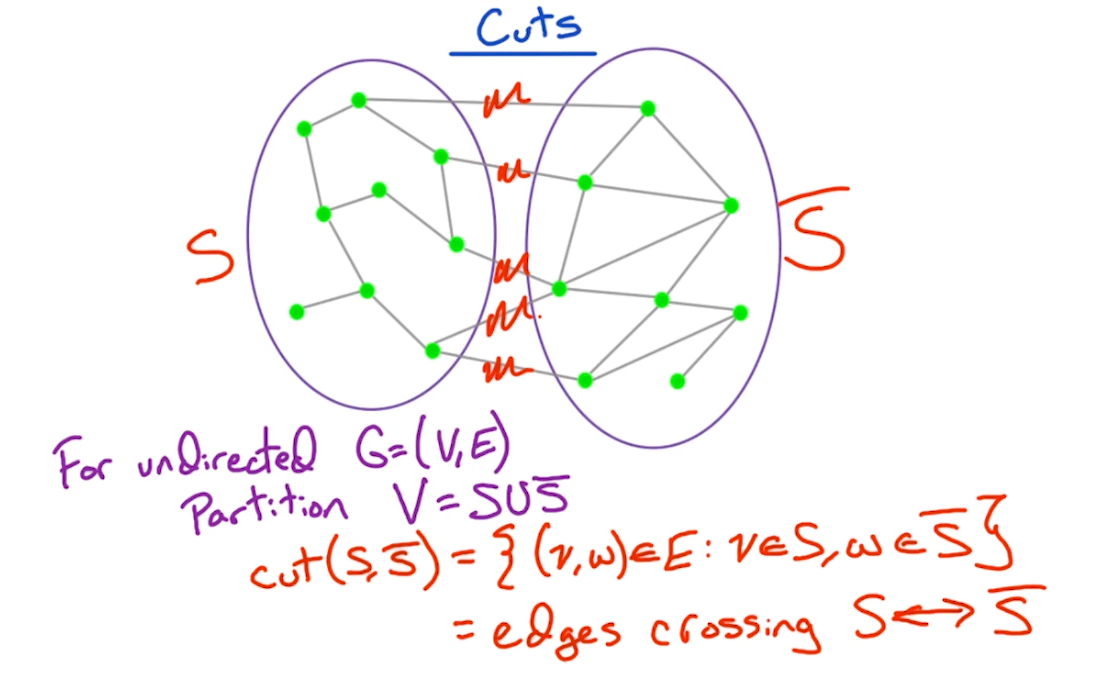

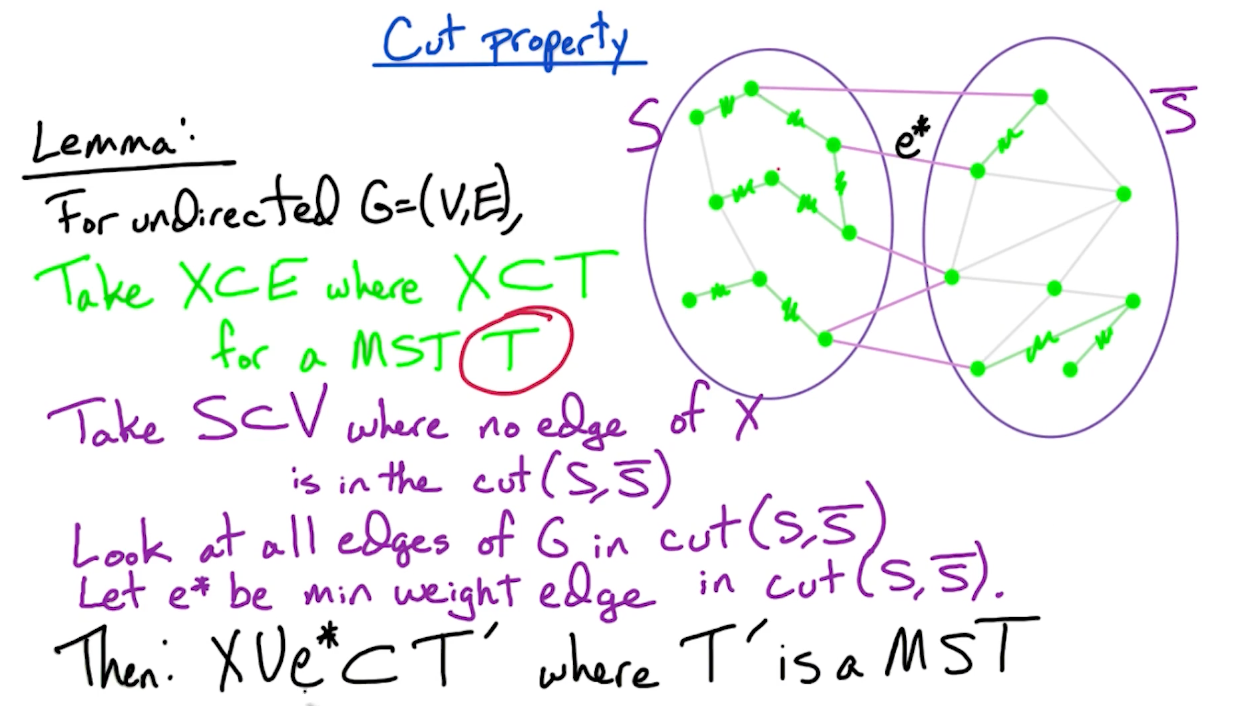

Cut property

To prove the correctness, we first need to define the cut property:

In other words, the cut of a graph is a set of edges which partition the vertices into two sets. In later part, we will look at problems such as minimum/maximum cut to partition the graphs into two components.

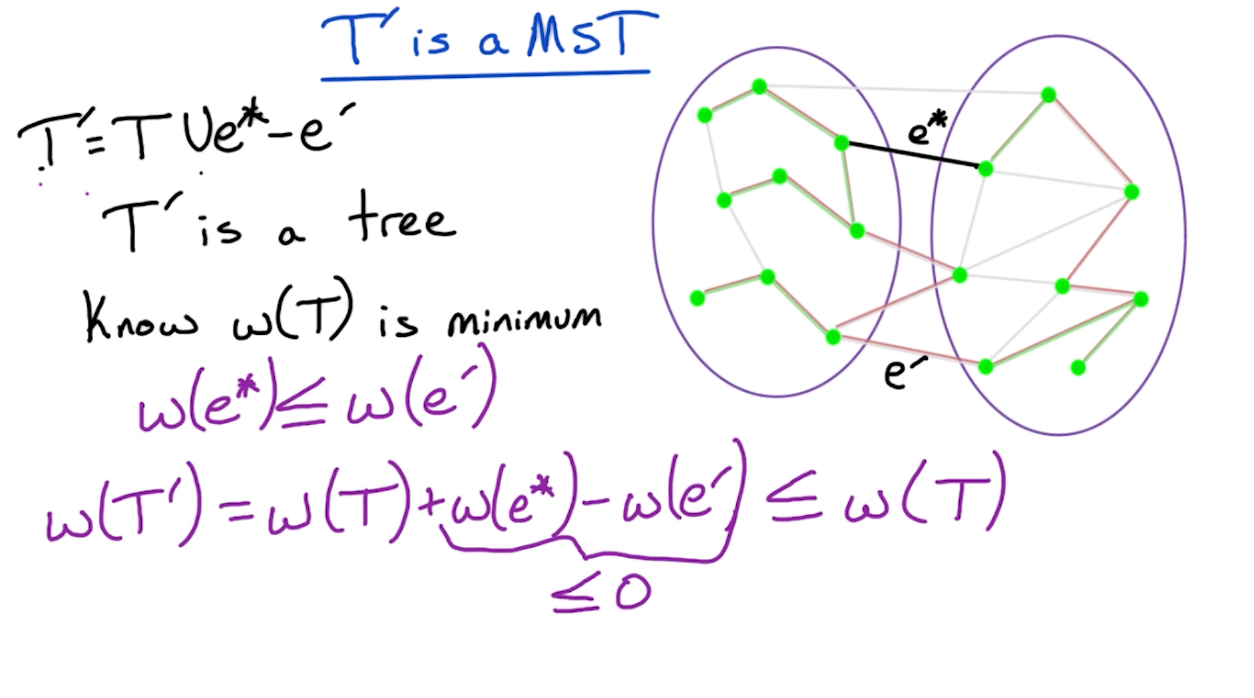

The core of the proof is:

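- Use induction, and assume that $X \subset E$ where $X \subset T$ for an MST $T$. Take a cut $(S, \bar{S})$ that no edge of $X$ crosses, and let $e^*$ be a minimum weight edge crossing it. The claim is that when we add $e^*$, then $X \cup e^* \subset T’$ for some MST $T’$.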

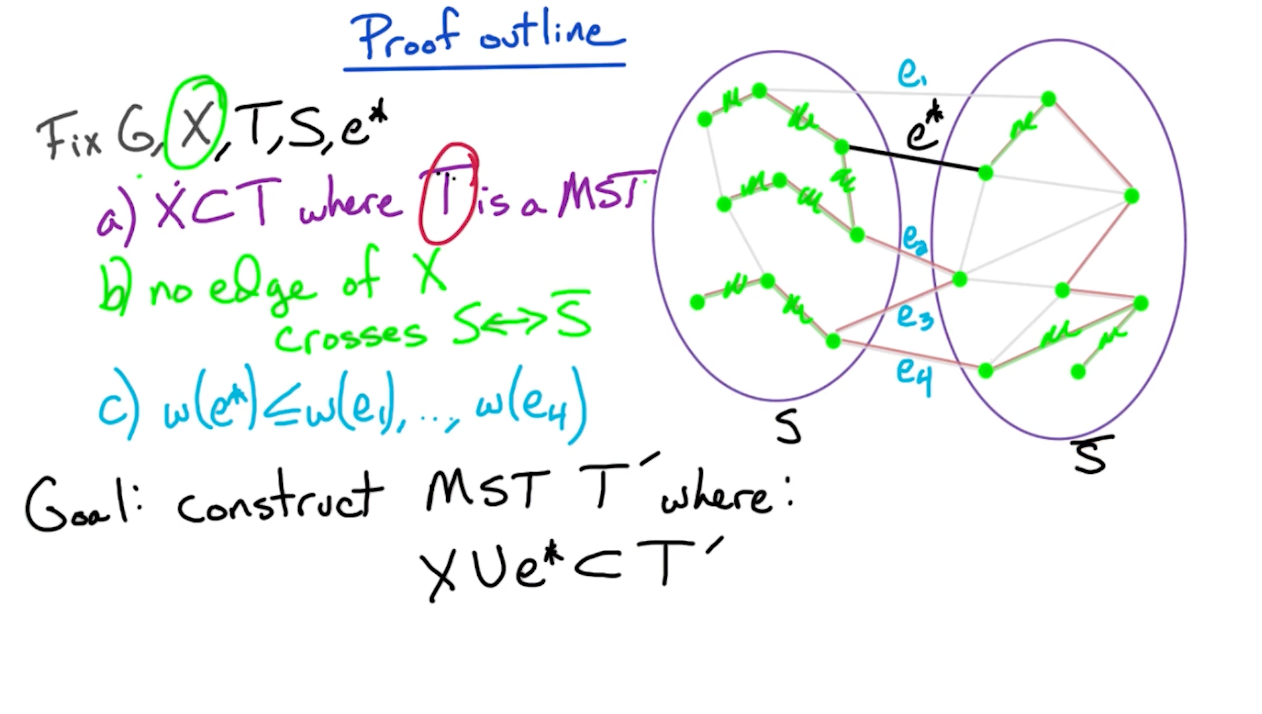

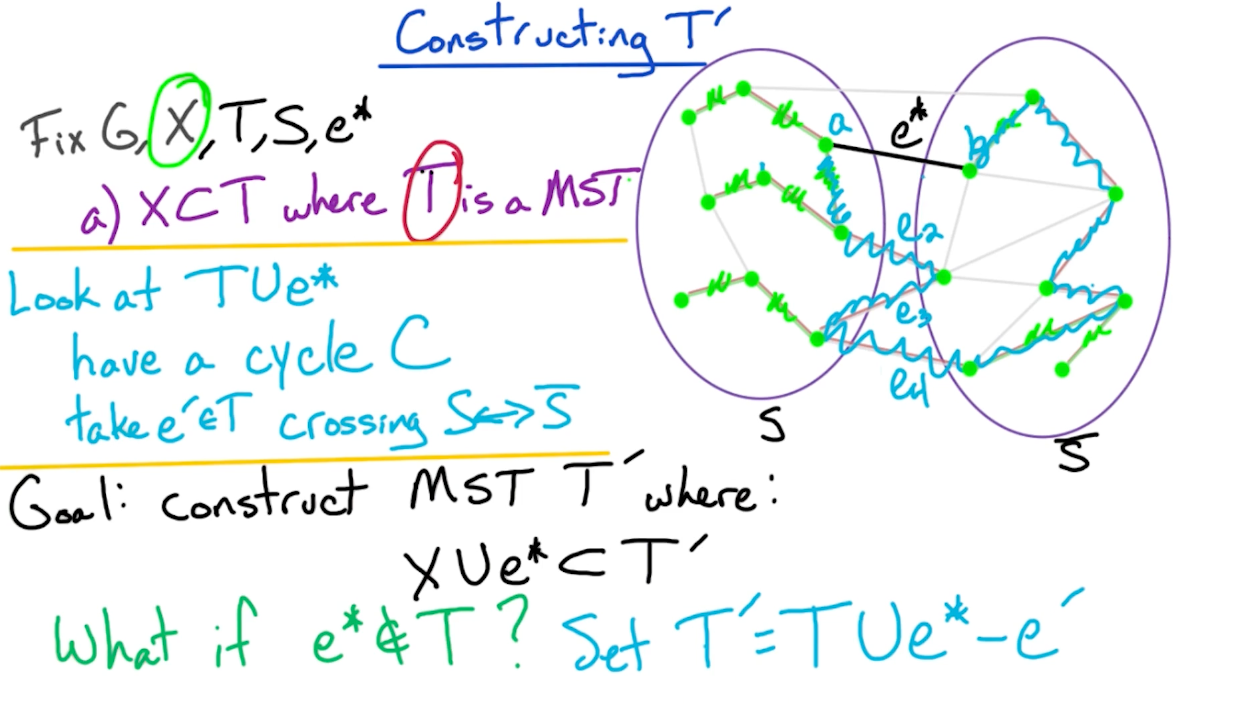

Proof outline

So, we need to consider two cases, if $e^* \in T$ or $e^* \notin T$.

- If $e^* \in T$, our job is done, as there is nothing to show.

- If $e^* \notin T$ (such as the diagram above), then we modify $T$ in order to add edge $e^*$ and construct a new MST $T'$.

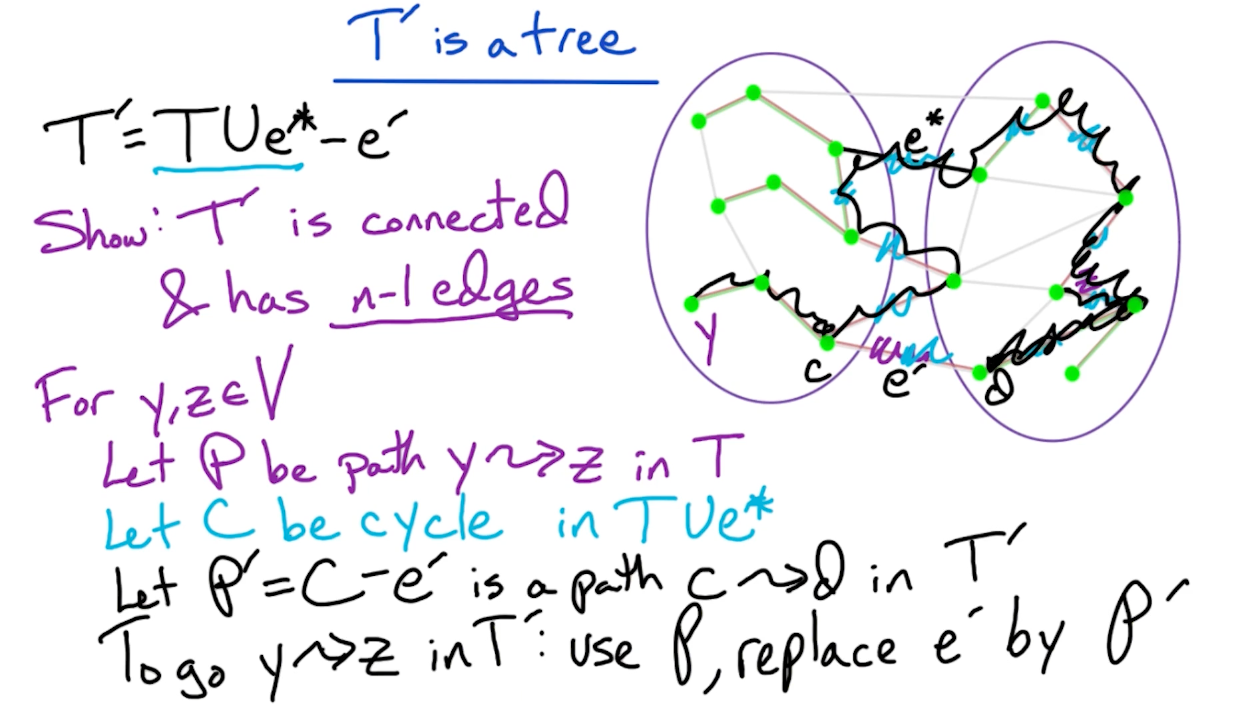

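Next, we show that $T'$ is still a tree:

- Remember that a connected graph on $n$ vertices with exactly $n-1$ edges must be a tree. $T'$ swaps one edge of $T$ for $e^*$, so it still has $n-1$ edges, and it remains connected.

- Actually, it turns out that $w(T') = w(T)$: we have $w(T') \leq w(T)$ because $e^*$ is a minimum-weight edge across the cut, and a strictly smaller weight would contradict the fact that $T$ is an MST.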

Prim’s algorithm

Prim's algorithm is an MST algorithm akin to Dijkstra's algorithm, and we use the cut property to prove its correctness.

Prim's algorithm selects a root vertex in the beginning and then grows the tree from vertex to adjacent vertex, always adding the cheapest edge that leaves the current tree. On the other hand, Kruskal's algorithm builds the minimum spanning tree edge by edge, starting from the smallest-weight edge.
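To make the contrast with Kruskal's concrete, here is a rough sketch of Prim's algorithm using a binary heap. The function name `prim` and the adjacency-list format (a dict mapping each vertex to `(neighbor, weight)` pairs, with every undirected edge listed in both directions) are assumptions of this example, not something fixed by the lecture.

```python
import heapq


def prim(adj, root):
    """Return (total_weight, tree_edges) of an MST grown from root.

    adj -- dict: vertex -> list of (neighbor, weight); each undirected
           edge is assumed to appear in both adjacency lists
    """
    visited = {root}
    # Heap of candidate edges (weight, from_vertex, to_vertex) leaving the tree.
    heap = [(w, root, u) for u, w in adj[root]]
    heapq.heapify(heap)
    tree = []
    total = 0
    while heap:
        w, v, u = heapq.heappop(heap)
        if u in visited:
            continue  # both endpoints already in the tree; edge no longer crosses the cut
        visited.add(u)
        tree.append((v, u, w))
        total += w
        for x, wx in adj[u]:
            if x not in visited:
                heapq.heappush(heap, (wx, u, x))
    return total, tree
```

At every step the set `visited` plays the role of $S$ in the cut property: the edge popped from the heap is a minimum-weight edge crossing $(S, \bar{S})$.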

Maxflow

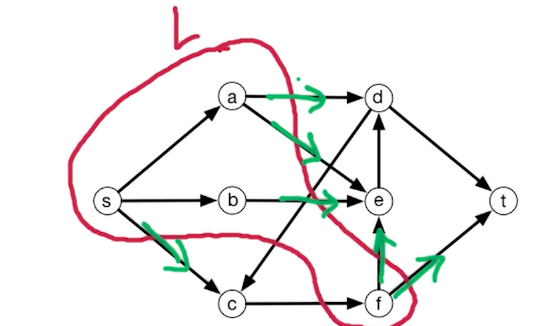

Ford-Fulkerson (MF1)

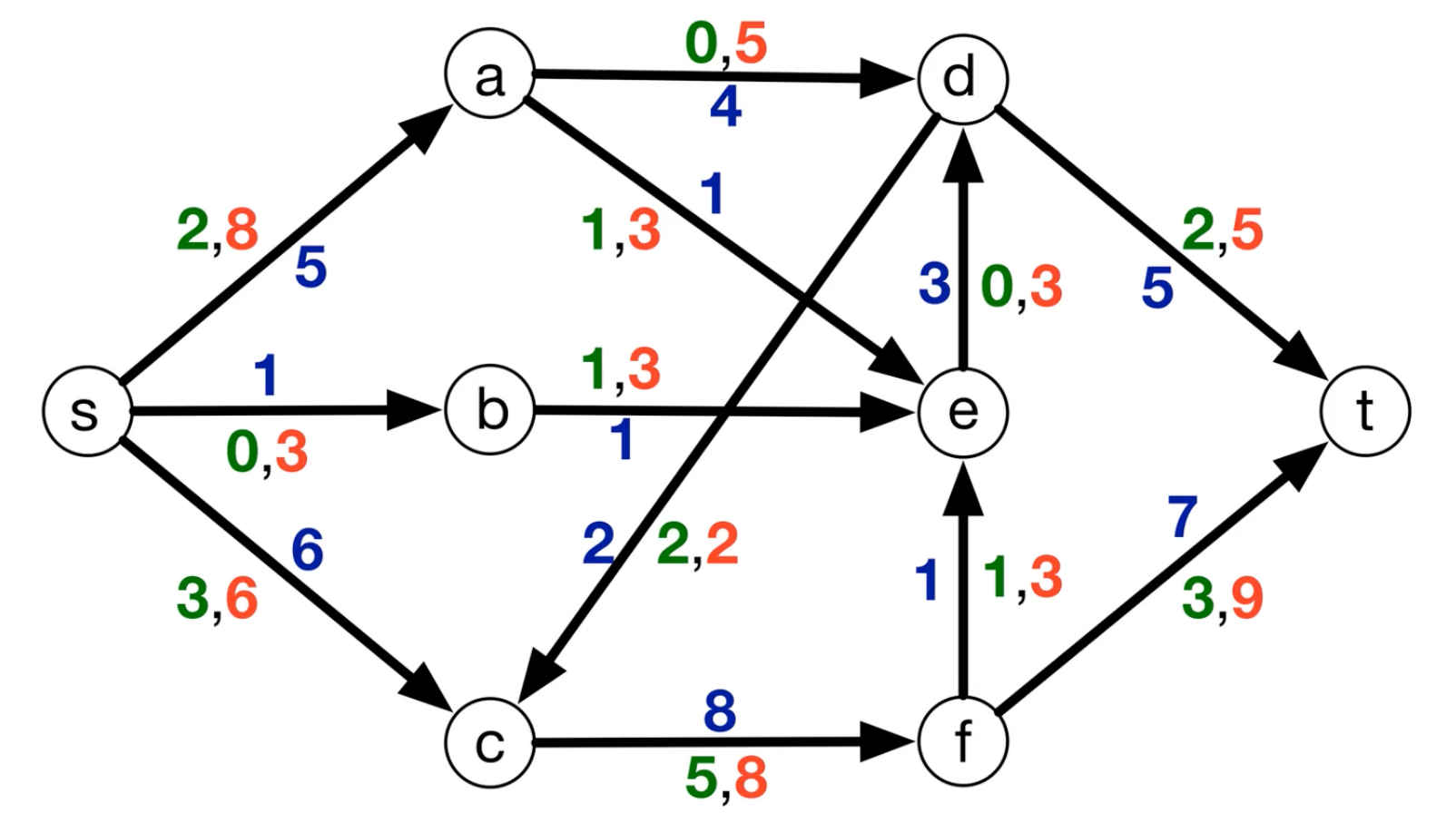

Problem formulation:

- Input: Directed graph $G=(V,E)$ with designated $s,t \in V$, and for each $e \in E$, a capacity $c_e >0$

- Goal: Maximize the flow $s \rightarrow t$, where $f_e$ = flow along $e$, subject to the following:

- Capacity constraints: $\forall e \in E, 0\leq f_e \leq c_e$

- Conservation of flow: $\forall v \in V - \{s, t\}$, flow-in to $v$ = flow-out of $v$

- $\sum_{\overrightarrow{wv} \in E} f_{wv} = \sum_{\overrightarrow{vz} \in E} f_{vz}$
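The two constraints can be checked mechanically. The sketch below is only illustrative: the helper names `is_valid_flow` and `flow_size`, and the representation of capacities and flows as dicts keyed by directed edge `(v, w)`, are assumptions made for this example.

```python
def is_valid_flow(capacity, flow, s, t):
    """Check the capacity and conservation constraints.

    capacity, flow -- dicts mapping a directed edge (v, w) to a number;
                      edges missing from flow are treated as carrying 0
    """
    # Flow is only allowed on edges that exist in the network.
    if any(e not in capacity for e in flow):
        return False
    # Capacity constraints: 0 <= f_e <= c_e for every edge.
    for e, c in capacity.items():
        if not 0 <= flow.get(e, 0) <= c:
            return False
    # Conservation of flow: flow-in equals flow-out except at s and t.
    net = {}
    for (v, w), f in flow.items():
        net[v] = net.get(v, 0) - f  # f leaves v
        net[w] = net.get(w, 0) + f  # f enters w
    return all(x == 0 for v, x in net.items() if v not in (s, t))


def flow_size(flow, s):
    """Size of the flow: total flow out of s minus total flow into s."""
    out_s = sum(f for (v, w), f in flow.items() if v == s)
    in_s = sum(f for (v, w), f in flow.items() if w == s)
    return out_s - in_s
```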

Other details:

- For the max flow problems, the cycles are ok!

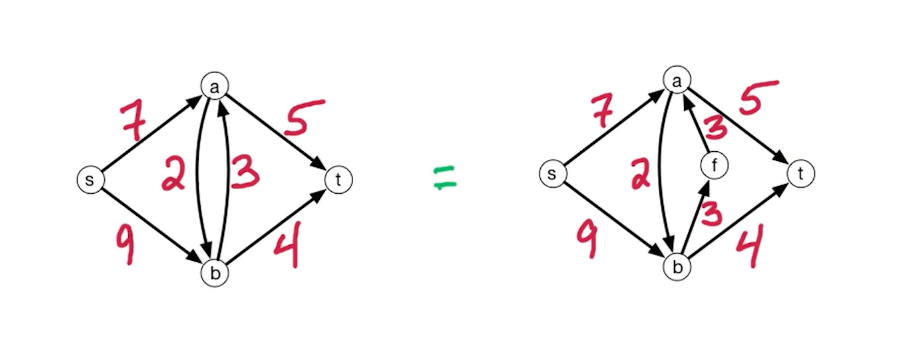

Anti parallel edges:

- Notice the edges between $a\leftrightarrow b$; you want to break such anti-parallel edges, for example by inserting an intermediate vertex on one of them (because of the residual network that we will see later).

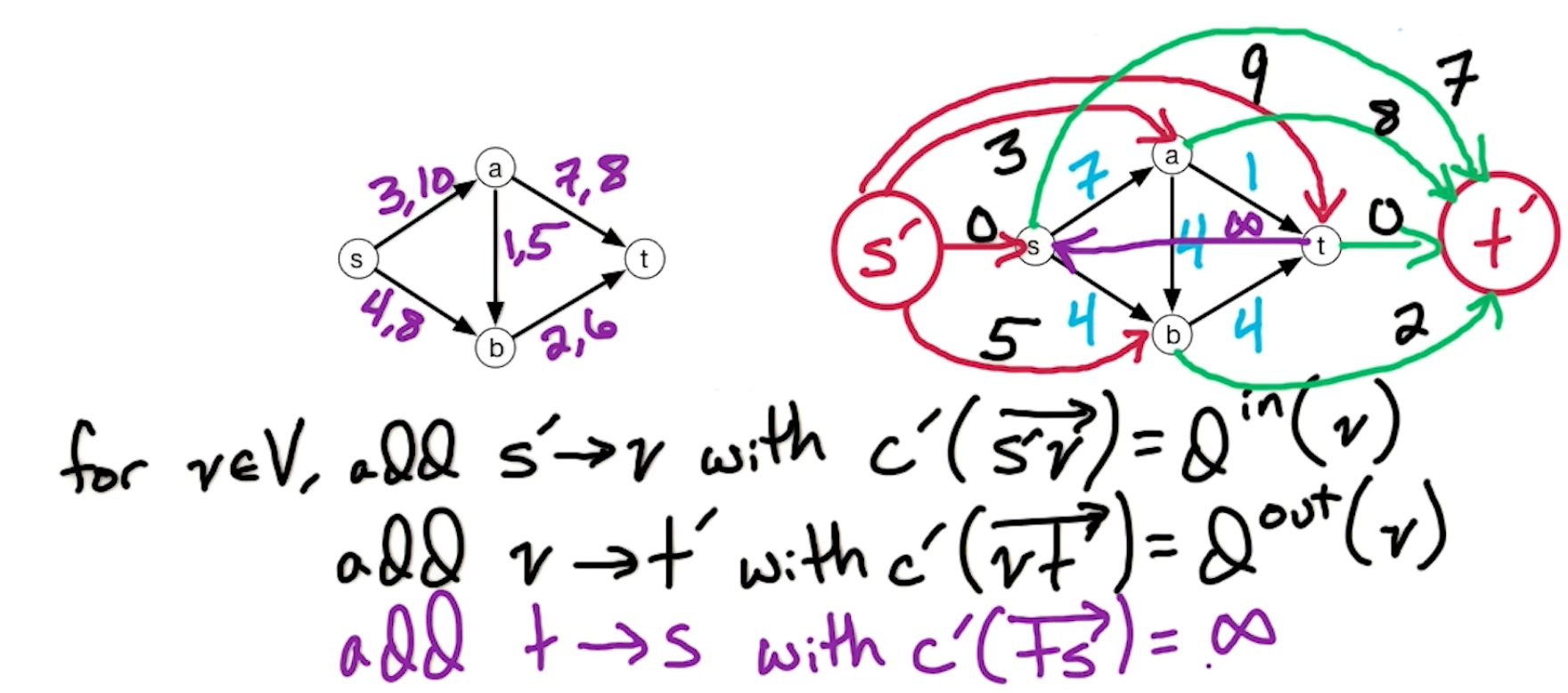

Residual Network

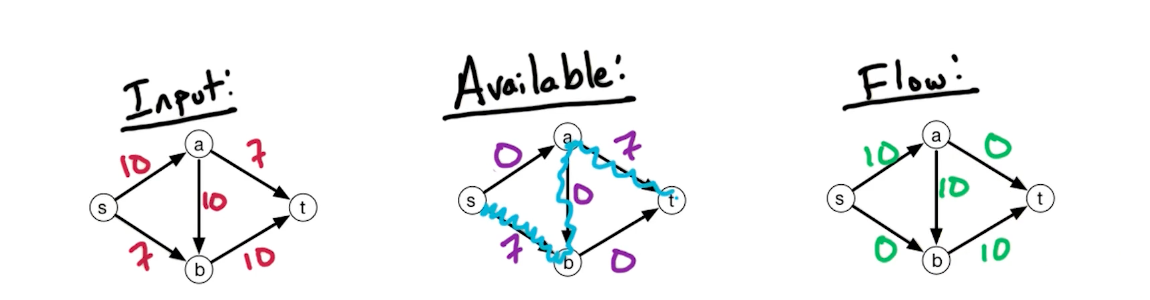

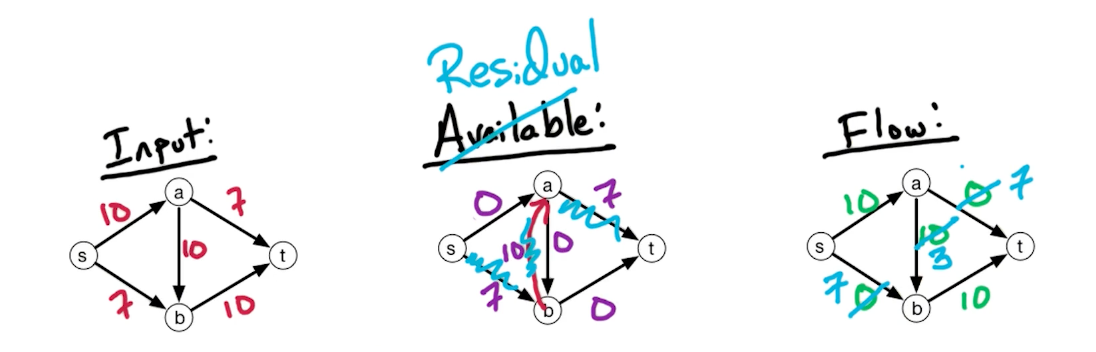

Consider the following network; we initially send flow along the path $s \rightarrow a \rightarrow b \rightarrow t$.

But it turns out that there is still a capacity of $7$ left. What can we do? We build a backward edge whose capacity is the flow sent along the previous path, as shown with the red arrow below.

Now we can push an additional flow of $7$, and the max flow is $17$.

In general, the residual network $G^f = (V,E^f)$, for flow network $G=(V,E)$ with $c_e : e \in E$, and flow $f_e : e \in E$,

- if $\overrightarrow{vw} \in E\ \&\ f_{vw} < c_{vw}$, then add $\overrightarrow{vw}$ to $G^f$ with capacity $c_{vw} - f_{vw}$ (remaining available)

- if $\overrightarrow{vw} \in E\ \&\ f_{vw} > 0$, then add $\overrightarrow{wv}$ to $G^f$ with capacity $f_{vw}$

In other words, if the flow is below the capacity, add a forward edge whose capacity is the remaining capacity $c_{vw} - f_{vw}$. If the flow is greater than 0, add a backward edge whose capacity is the flow $f_{vw}$ (this applies whether or not the edge is saturated). Also, because we removed the anti-parallel edges, we can add the forward and backward edges without ambiguity.
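The two rules translate almost line for line into code. This is a minimal sketch, assuming the network has no anti-parallel edges and that capacities and flows are stored as dicts keyed by directed edge `(v, w)`; the name `residual_network` is made up for the example.

```python
def residual_network(capacity, flow):
    """Build the residual capacities of G^f as a dict (v, w) -> capacity.

    capacity, flow -- dicts keyed by directed edge (v, w); the network is
                      assumed to contain no anti-parallel edges
    """
    residual = {}
    for (v, w), c in capacity.items():
        f = flow.get((v, w), 0)
        if f < c:
            residual[(v, w)] = c - f  # forward edge: remaining capacity
        if f > 0:
            residual[(w, v)] = f      # backward edge: flow that can be undone
    return residual
```

For instance, an edge with capacity 10 carrying flow 5 yields a forward edge of capacity 5 and a backward edge of capacity 5, while a saturated edge yields only the backward edge.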

Ford-Fulkerson Algorithm

- Set $f_e = 0$ for all $ e \in E$

- Build the residual network $G^f$ for the current flow $f$

- Initially the flow is all zero, so $G^f$ is the same as $G$

- Check for a path $s\rightarrow t$ in $G^f$ using DFS/BFS

- If there is no such path, then output $f$.

- If there is a path, denote it as $\mathcal{P}$

- Given $\mathcal{P}$, let $c(\mathcal{P})$ denote the minimum capacity along $\mathcal{P}$ in $G^f$

- Augment $f$ by $c(\mathcal{P})$ units along $\mathcal{P}$

- For every forward edge, we increase the flow along that edge by this amount

- For the backward edge, we decrease the flow in the other direction

- Repeat from the build residual network step (until you return output $f$)
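Putting the steps together, a compact sketch of the algorithm might look like the following. It assumes integer capacities, no anti-parallel edges, and $s \neq t$; the function name `ford_fulkerson` and the edge-dict input format are choices made for this example. The path search here is an iterative DFS, but any search would do.

```python
def ford_fulkerson(capacity, s, t):
    """Return (size, flow) for a max flow from s to t.

    capacity -- dict (v, w) -> positive integer, no anti-parallel edges
    """
    flow = {e: 0 for e in capacity}

    def residual_adj():
        # Residual edge record: (head, residual capacity, original edge, sign).
        adj = {}
        for (v, w), c in capacity.items():
            f = flow[(v, w)]
            if f < c:
                adj.setdefault(v, []).append((w, c - f, (v, w), +1))
            if f > 0:
                adj.setdefault(w, []).append((v, f, (v, w), -1))
        return adj

    def find_path(adj):
        # Iterative DFS from s; prev[x] is the residual edge used to reach x.
        prev = {s: None}
        stack = [s]
        while stack:
            v = stack.pop()
            if v == t:
                break
            for w, cap, edge, sign in adj.get(v, []):
                if w not in prev:
                    prev[w] = (v, cap, edge, sign)
                    stack.append(w)
        if t not in prev:
            return None
        path, v = [], t
        while prev[v] is not None:
            path.append(prev[v])
            v = prev[v][0]
        return path

    while True:
        path = find_path(residual_adj())
        if path is None:
            break
        bottleneck = min(cap for _, cap, _, _ in path)  # c(P)
        for _, _, edge, sign in path:
            # Forward edges gain flow; backward edges give flow back.
            flow[edge] += sign * bottleneck

    out_s = sum(f for (v, w), f in flow.items() if v == s)
    in_s = sum(f for (v, w), f in flow.items() if w == s)
    return out_s - in_s, flow
```

Rebuilding the residual network from scratch each round keeps the code close to the description above; a practical implementation would update it incrementally.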

The proof is based on max-flow = min-cut theorem, which will be covered in MF2.

For time complexity, we assume all capacities are integers (the Edmonds-Karp algorithm eliminates this assumption). This assumption implies that whenever we augment the flow, we augment by an integer amount.

- This implies that the flow increases by $\geq 1$ unit per round

- Let $C$ denote the size of max flow, then we have at most $C$ rounds.

- $\mathcal{P}$ is a simple path, so it has at most $n-1$ edges, and updating the residual network takes $O(n)$

- To check for the path $\mathcal{P}$, either with BFS or DFS, takes $O(n+m)$; since the graph is connected, $m \geq n-1$ and this reduces to $O(m)$.

- Augment $f$ by $c(\mathcal{P})$ also takes $O(n)$

- So overall the complexity of each round is $O(m)$, over $C$ rounds, hence the total runtime is $O(Cm)$.

Other Algorithms

For Ford-Fulkerson

- The running time depends on the integer $C$; if you recall from knapsack, such a running time is pseudo-polynomial.

- We use BFS/DFS to find any path from $s\rightarrow t$

For Edmonds-Karp

- Algorithm takes $O(m^2 n)$ time

- We take the shortest path from $s \rightarrow t$. In this case shortest means the fewest edges; we do not care about the weights on the edges. To find such a path, we run BFS. The number of rounds in this case is going to be $O(mn)$, and each round takes $O(m)$ time, so the total is $O(m^2n)$.

- So the runtime is independent of the size of the max flow, and the capacities are no longer required to be integers
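The only change from Ford-Fulkerson is that the augmenting path is found with BFS, so it has the fewest edges. Below is a small sketch under a few assumptions: the name `edmonds_karp` and the edge-dict input are invented for the example, $s \neq t$, and residual capacities are kept in a single dict where each reverse edge starts at 0. It returns only the size of the max flow.

```python
from collections import deque


def edmonds_karp(capacity, s, t):
    """Return the size of a max flow from s to t (s != t is assumed).

    capacity -- dict (v, w) -> positive number (integers not required)
    """
    residual = {}
    adj = {}
    for (v, w), c in capacity.items():
        residual[(v, w)] = residual.get((v, w), 0) + c
        residual.setdefault((w, v), 0)  # reverse edge starts with capacity 0
        adj.setdefault(v, set()).add(w)
        adj.setdefault(w, set()).add(v)

    total = 0
    while True:
        # BFS finds an s-t path with the fewest edges in the residual network.
        parent = {s: None}
        queue = deque([s])
        while queue and t not in parent:
            v = queue.popleft()
            for w in adj.get(v, ()):
                if w not in parent and residual[(v, w)] > 0:
                    parent[w] = v
                    queue.append(w)
        if t not in parent:
            return total  # no augmenting path left

        # Walk back from t to collect the path and its bottleneck capacity.
        path = []
        w = t
        while parent[w] is not None:
            path.append((parent[w], w))
            w = parent[w]
        bottleneck = min(residual[e] for e in path)
        for v, w in path:
            residual[(v, w)] -= bottleneck
            residual[(w, v)] += bottleneck
        total += bottleneck
```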

Orlin

- $O(mn)$, generally the best for general graphs for the exact solution of the max-flow problem.

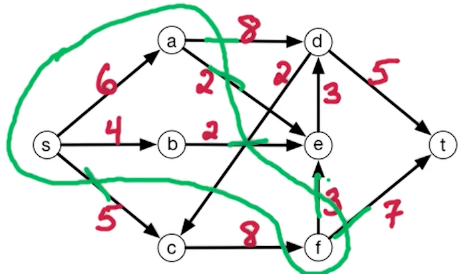

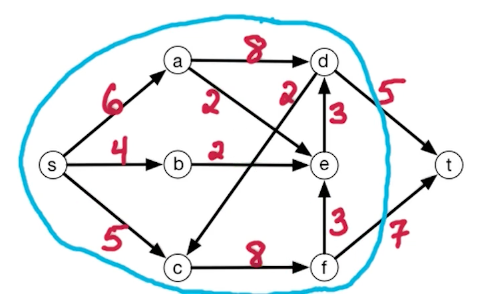

Max-Flow Min-Cut (MF2)

Recall that the Ford-Fulkerson algorithm stops when there is no more augmenting path in the residual network $G^{f^\ast}$ (the star here denotes the max flow, i.e. the optimal solution).

Lemma: For a flow $f^\ast$ if there is no augmenting path in $G^{f\ast}$ then $f^\ast$ is a max-flow.

Min-cut Problem

Recall that a cut is a partition of vertices $V$ into two sets, $V = L \cup R$. Define st-cut to be a cut where $s\in L, t\in R$

Notice that the sets of a cut do not need to be connected; in this example, F is not connected to A and B within its set. We are interested in the capacity of this st-cut.

Define capacity from $L\rightarrow R$

\[Capacity(L,R) = \sum_{\overrightarrow{vw} \in E: v \in L, w \in R} c_{vw}\]

Notice edges such as $C \rightarrow F$ do not count.

So the problem formulation of the min st-cut problem is as follows:

- Input: flow network

- Output: st-cut (L,R) with minimum capacity.
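As a small illustration of the definition, the sketch below computes the capacity of a given st-cut and, for tiny graphs only, finds a min st-cut by trying every subset. Both function names and the edge-dict format are assumptions of this example, and the brute-force search is exponential in the number of vertices, so it is meant purely as a sanity check.

```python
from itertools import combinations


def cut_capacity(capacity, L):
    """Capacity from L to R = V - L: only edges leaving L are counted."""
    return sum(c for (v, w), c in capacity.items() if v in L and w not in L)


def brute_force_min_st_cut(capacity, s, t):
    """Try every L containing s but not t; return (min capacity, L)."""
    vertices = {v for edge in capacity for v in edge}
    others = sorted(vertices - {s, t})
    best = None
    for k in range(len(others) + 1):
        for subset in combinations(others, k):
            L = {s, *subset}
            cap = cut_capacity(capacity, L)
            if best is None or cap < best[0]:
                best = (cap, L)
    return best
```

Note how an edge going from $R$ back into $L$ contributes nothing to `cut_capacity`, matching the remark above.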

For example, consider this cut; its capacity is $8+2+2+3+7+5 = 27$.

The cut with minimum capacity is as follows:

The min st-cut has capacity 12, which is equal to the max-flow of 12. $L$ contains every vertex except $t$. So the theorem we want to prove is that the size of the max flow equals the capacity of the min st-cut.

Theorem

Note, in the max-flow problem, the flow is always from $s$ to $t$, while on the cut side it is called the st-cut problem because we want the cut to separate $s$ and $t$. A minimum cut without this restriction could place $s$ and $t$ in the same set.

To prove this, we show that max-flow $\leq$ min st-cut, and vice versa. Together these show that max-flow = min st-cut.

LHS

To show max-flow $\leq$ min st-cut, show that for any flow $f$ and any st-cut $(L,R)$:

\[size(f) \leq capacity (L,R)\]

Note that this holds for any flow and any st-cut, so in particular it holds for the maximum over flows $f$ and the minimum over cuts $(L,R)$.

Claim: $size(f) = f^{out}(L) - f^{in}(L)$

\[\begin{aligned} & f^{out}(L) - f^{in}(L) \\ &= \sum_{\overrightarrow{vw} \in E: v \in L, w \in R} f_{vw} - \sum_{\overrightarrow{wv} \in E: v \in L, w \in R} f_{wv} \\ &= \sum_{\overrightarrow{vw} \in E: v \in L, w \in R} f_{vw} - \sum_{\overrightarrow{wv} \in E: v \in L, w \in R} f_{wv} + \sum_{\overrightarrow{vw} \in E: v \in L, w \in L} f_{vw} - \sum_{\overrightarrow{wv} \in E: v \in L, w \in L} f_{wv} \end{aligned}\]

The two added terms cancel, since both sum the flow over edges with both endpoints in $L$. Notice that the first term and the third term, when combined, give all edges out of each $v \in L$, and likewise the second and fourth terms give all edges into $v$. We then separate out the vertex $s$; notice that the source vertex has no incoming flow.

\[\begin{aligned} & f^{out}(L) - f^{in}(L) \\ & = \sum_{v\in L} f^{out}(v) - \sum_{v\in L} f^{in}(v) \\ & = \sum_{v\in L-\{s\}} (\underbrace{f^{out}(v)-f^{in}(v)}_{0}) + f^{out}(s) - \underbrace{f^{in}(s)}_{0}\\ &= size(f) \end{aligned}\]

So the net flow out of $L$ is the size of $f$! Coming back, we have:

\[size(f) = f^{out}(L) - f^{in}(L) \leq f^{out}(L) \leq capacity(L,R)\]

The last inequality holds because the flow on each edge from $L$ to $R$ is at most its capacity, so the total flow out of $L$ is at most the capacity from $L$ to $R$.

RHS

Now, we prove the reverse inequality:

\[\max_f size(f) \geq \min_{(L,R)} capacity(L,R)\]

Take the flow $f^\ast$ from the Ford-Fulkerson algorithm, so there is no st-path in the residual network $G^{f\ast}$. We will construct $(L,R)$ where:

\[size(f^*) = cap(L,R)\]

As before, the max over all flows is at least $size(f^*)$, and $cap(L,R)$ is at least the min over all st-cuts, so:

\[\max_f size(f) \geq size(f^*) = cap(L,R) \geq \min_{(L,R)} capacity(L,R)\]

Take the flow $f^\ast$ with no st-path in the residual $G^{f\ast}$. Let $L$ be the vertices reachable from $s$ in $G^{f\ast}$. We know that $t \notin L$ because no such path exists. So let $R = V-L$, which implies that $t \in R$.

For $\overrightarrow{vw} \in E, v\in L, w \in R$, this edge does not appear in the residual network (otherwise $w$ would be reachable from $s$ and would belong to $L$). Since the forward edge does not appear in the residual network, the edge must be fully capacitated: $f_{vw}^* = c_{vw}$. Now since every edge from $L$ to $R$ is fully capacitated, the total flow out of $L$ must be equal to capacity$(L,R)$. This shows that $f^{*out}(L) = capacity(L,R)$.

Consider the opposite direction: edges $\overrightarrow{zy}, z \in R, y \in L$, which go from $R$ into $L$. The flow $f^\ast_{zy} = 0$ because the reverse edge $\overrightarrow{yz}$ does not appear in the residual network. If $\overrightarrow{yz}$ did appear, then $z$ would be reachable from $y \in L$ and would be included in the set $L$. Since the back edge does not appear in the residual network, the forward edge has to have flow zero. This shows that $f^{\ast in}(L) = 0$.

Combining these two shows:

\[size(f^*) = f^{*out}(L) - f^{*in}(L) = capacity(L,R)\]

We have shown both sides of the inequality, which concludes that max-flow = min st-cut. We have also shown that for any flow that has no augmenting path in the residual network, we can construct an st-cut where the size of the flow equals the capacity of the st-cut, $size(f^*) = cap(L,R)$. The only way to have equality here is if both of these are optimal, that is, $f^*$ is a max flow and $(L,R)$ is a min st-cut. This also proves the lemma above.

Note, this theorem also gives us another way of finding the min cut. We basically find the max flow, and set L to be all vertices reachable from s in the residual $G^{f*}$.
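This recipe can be sketched directly: run a max-flow algorithm, then collect the vertices still reachable from $s$ in the final residual network. The example below is self-contained and uses BFS augmenting paths; the function name `min_st_cut`, the edge-dict input, and the assumption $s \neq t$ are choices made for this illustration.

```python
from collections import deque


def min_st_cut(capacity, s, t):
    """Return (max_flow, L, R, cut_capacity) for the network (s != t assumed).

    capacity -- dict (v, w) -> positive number
    """
    residual, adj = {}, {}
    for (v, w), c in capacity.items():
        residual[(v, w)] = residual.get((v, w), 0) + c
        residual.setdefault((w, v), 0)
        adj.setdefault(v, set()).add(w)
        adj.setdefault(w, set()).add(v)

    def reachable():
        # BFS over residual edges with positive capacity, starting at s.
        parent = {s: None}
        queue = deque([s])
        while queue:
            v = queue.popleft()
            for w in adj.get(v, ()):
                if w not in parent and residual[(v, w)] > 0:
                    parent[w] = v
                    queue.append(w)
        return parent

    max_flow = 0
    while True:
        parent = reachable()
        if t not in parent:
            break  # no augmenting path: the current flow is a max flow
        path, w = [], t
        while parent[w] is not None:
            path.append((parent[w], w))
            w = parent[w]
        bottleneck = min(residual[e] for e in path)
        for v, w in path:
            residual[(v, w)] -= bottleneck
            residual[(w, v)] += bottleneck
        max_flow += bottleneck

    L = set(parent)  # vertices reachable from s in the final residual network
    R = {v for edge in capacity for v in edge} - L
    cut_cap = sum(c for (v, w), c in capacity.items() if v in L and w in R)
    return max_flow, L, R, cut_cap
```

By the theorem, the returned `cut_cap` should always equal `max_flow`, which makes a handy self-check.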

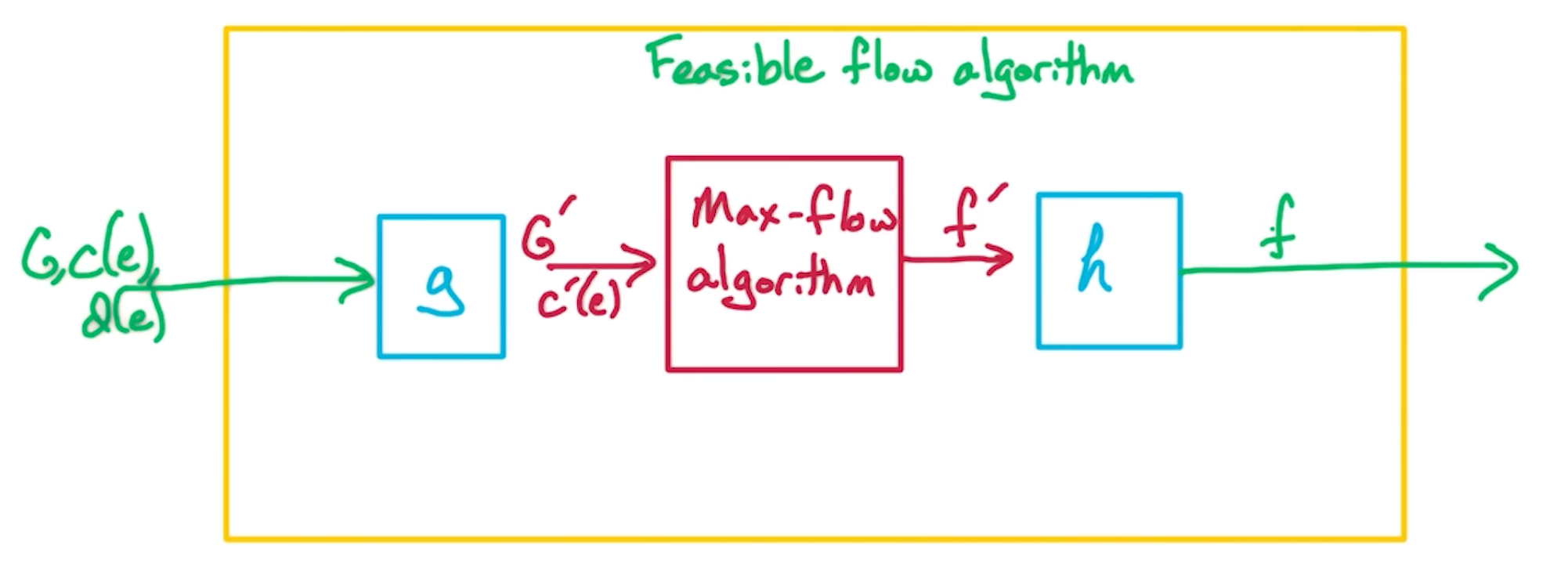

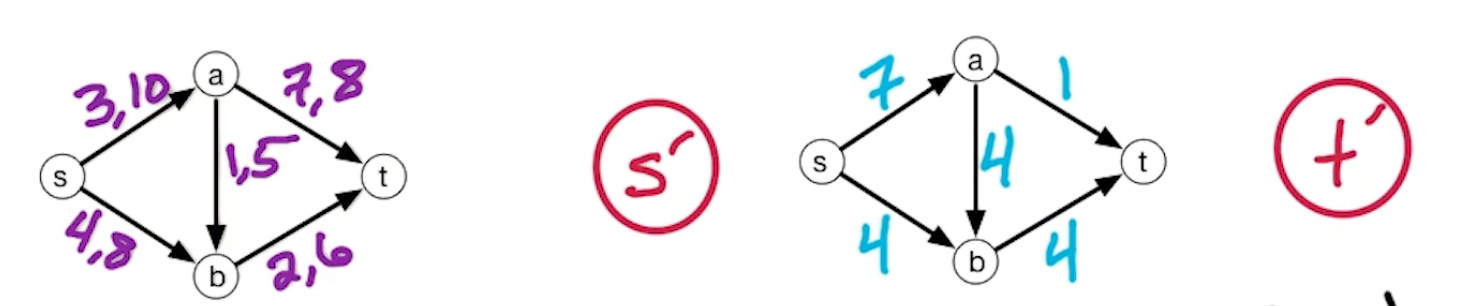

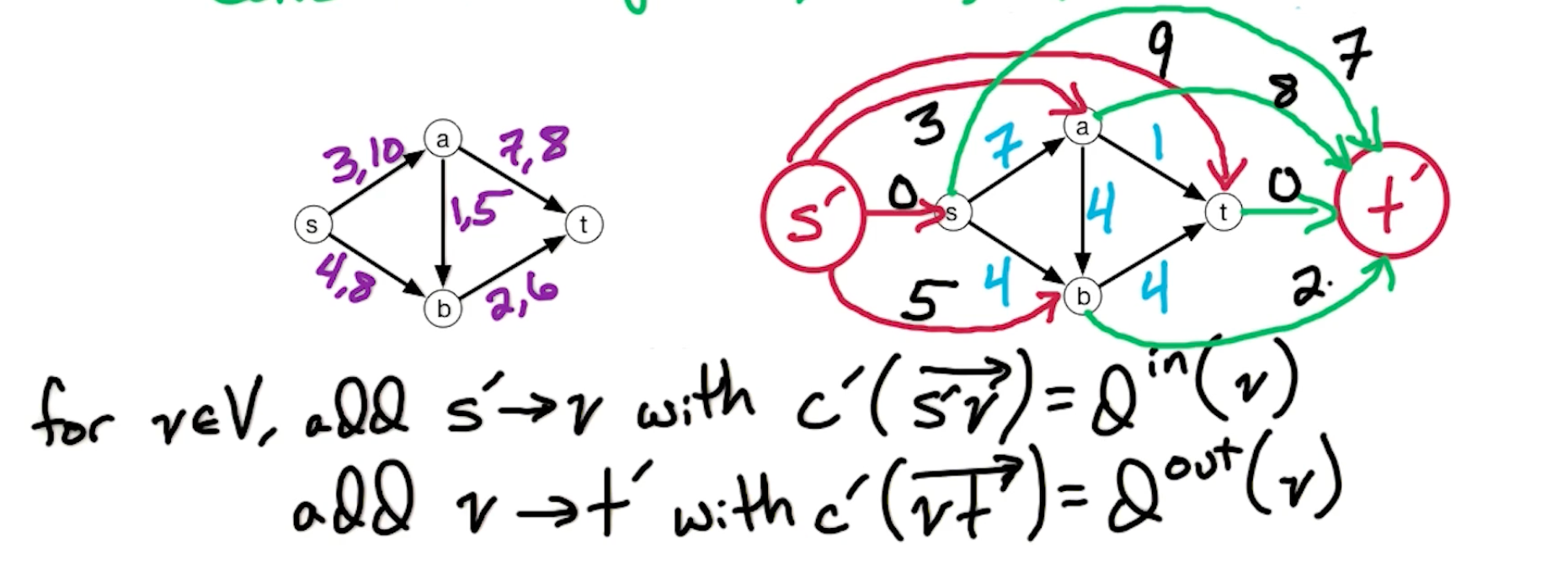

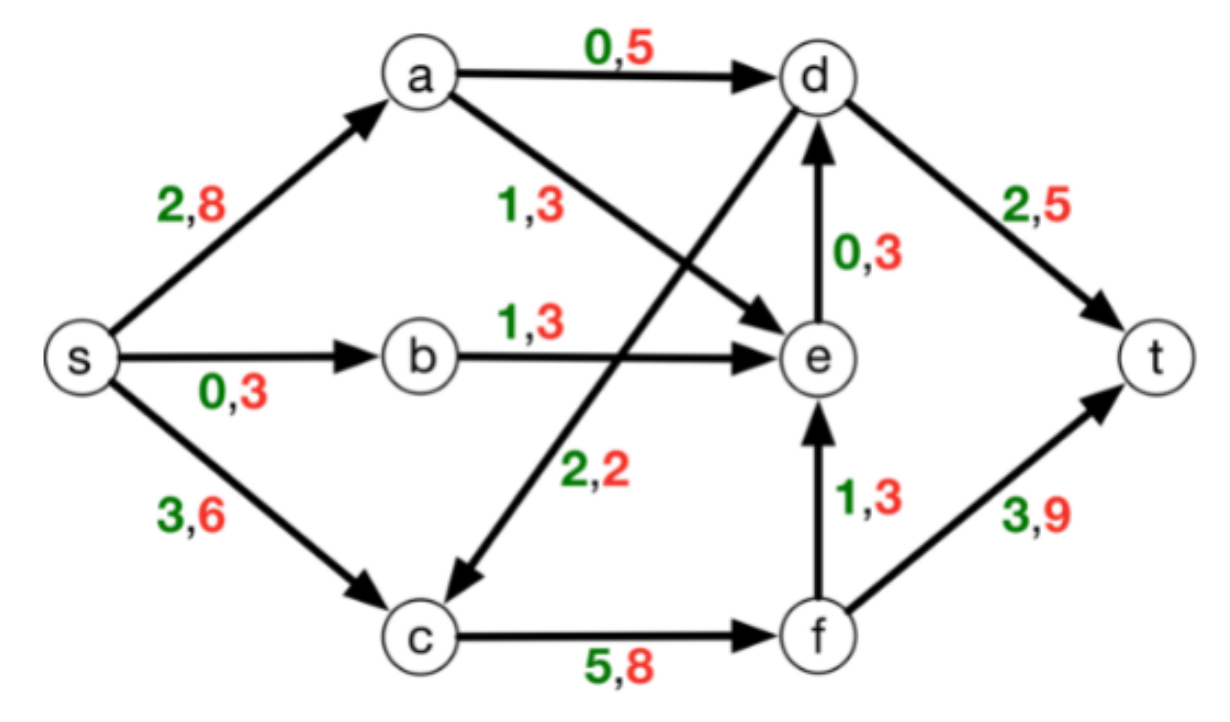

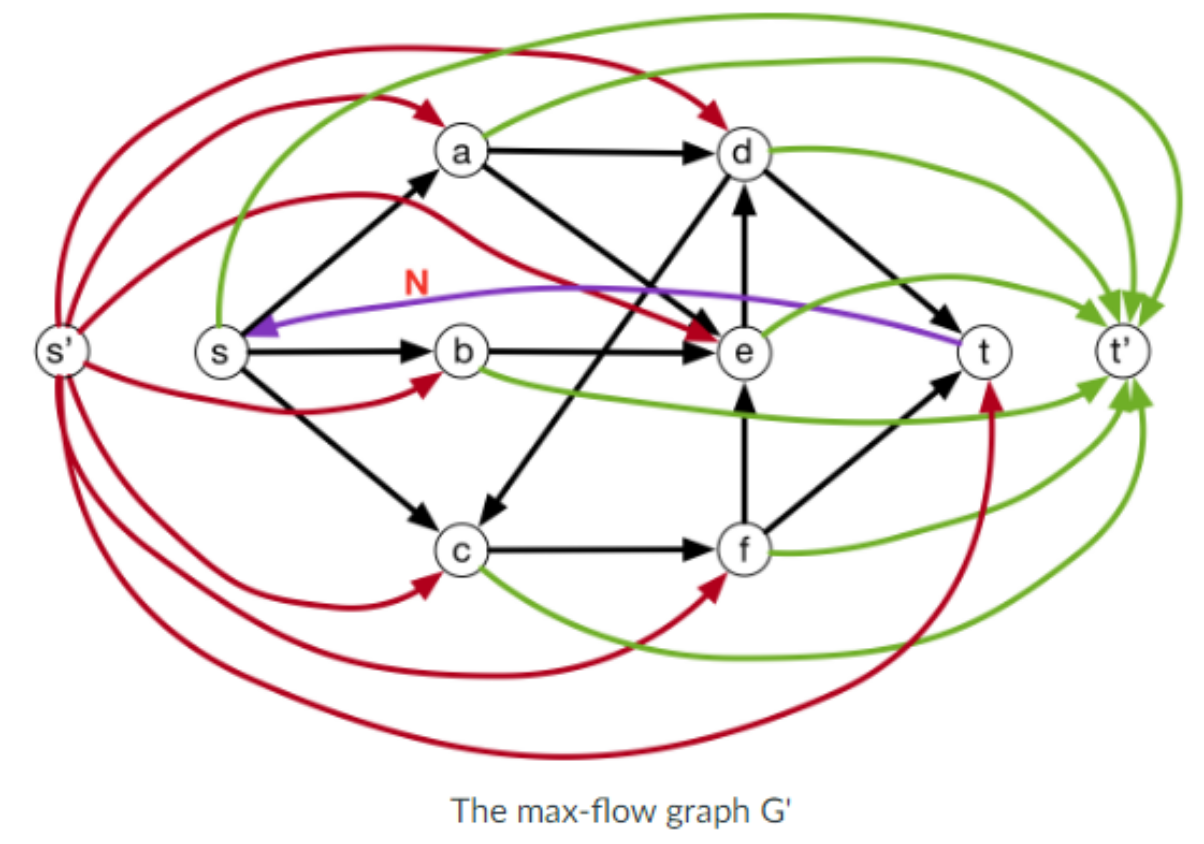

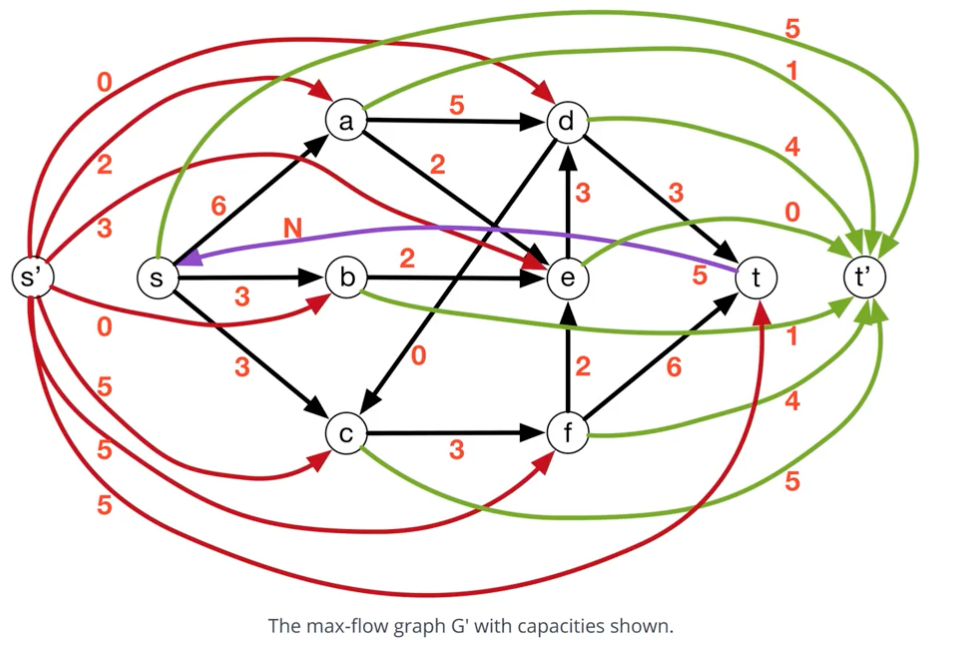

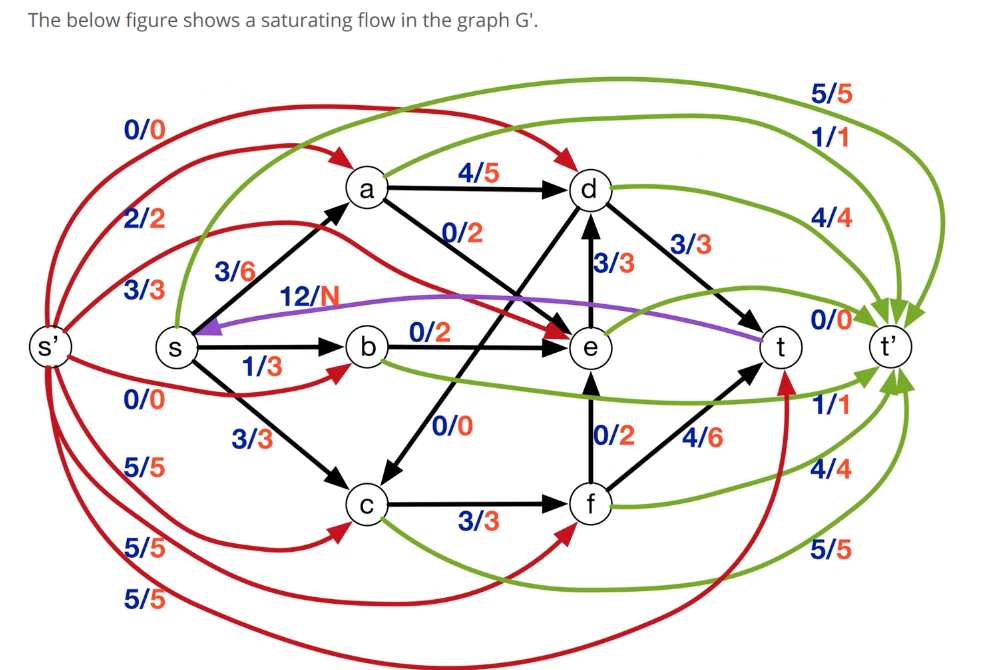

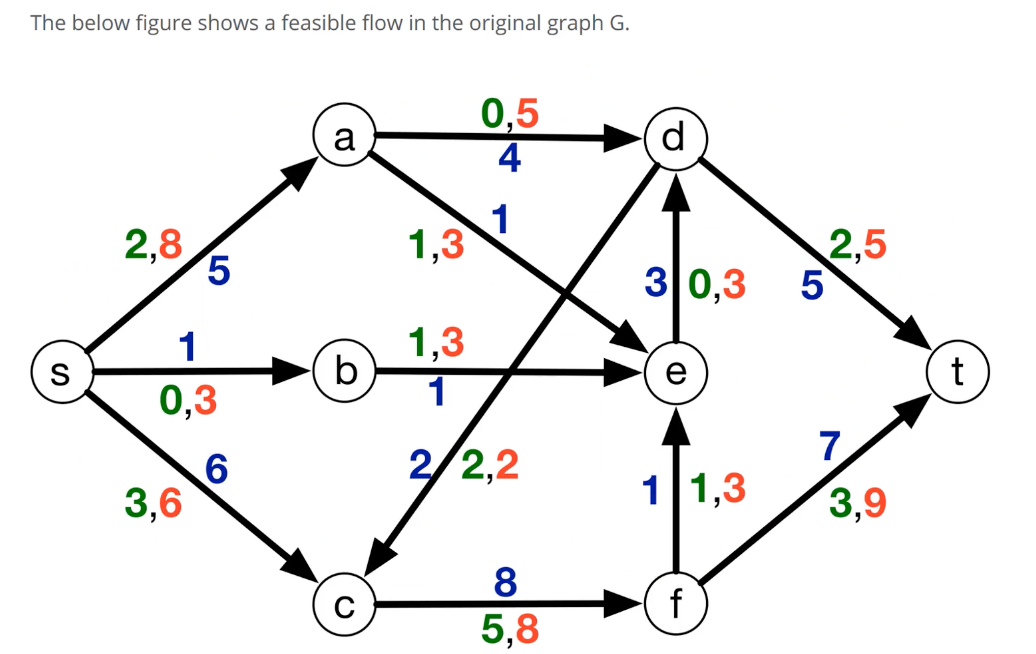

Edmonds-Karp (MF4)