CS8803 OMSCS - GPU hardware and software notes

The content of these notes is from Georgia Tech OMSCS 7295 (formerly 8803): GPU Hardware and Software by Prof. Hyesoon Kim. They kindly allowed this to be shared publicly, so please use the notes responsibly!

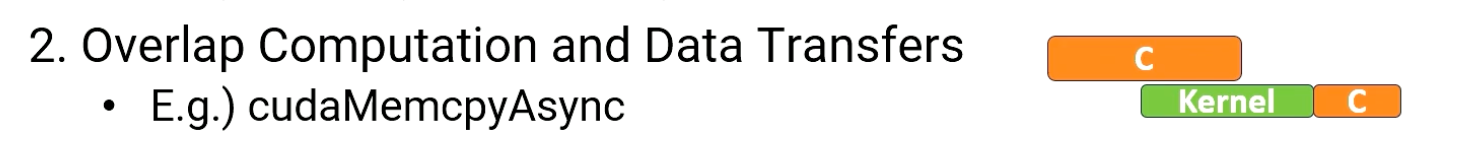

Module 1: Introduction of GPU

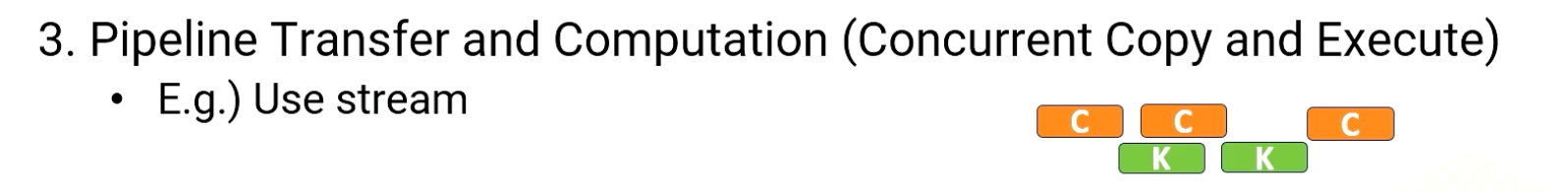

Objectives

- Describe the basic background of GPUs

- Explain the basic concept of data parallel architectures

Readings

Required Readings:

- CUDA Programming guide, section 1-2, section 4

- Performance analysis and tuning for general purpose graphics processing units (GPGPU) (Chapter 1)

Optional Reading:

Module 1 Lesson 1: Instructor and Course Introduction

Course Learning Objectives:

- Describe how GPU architecture works and be able to optimize GPU programs

- Conduct GPU architecture or compiler research

- Explain the latest advancements in hardware accelerators

- Build a foundation for understanding the CUDA features (the course does not teach the latest CUDA features)

Course Prerequisites:

- Basic 5-stage CPU pipeline

- C++/Python Programming skill set

- Prior GPU programming experience is not necessary.

What is GPU?

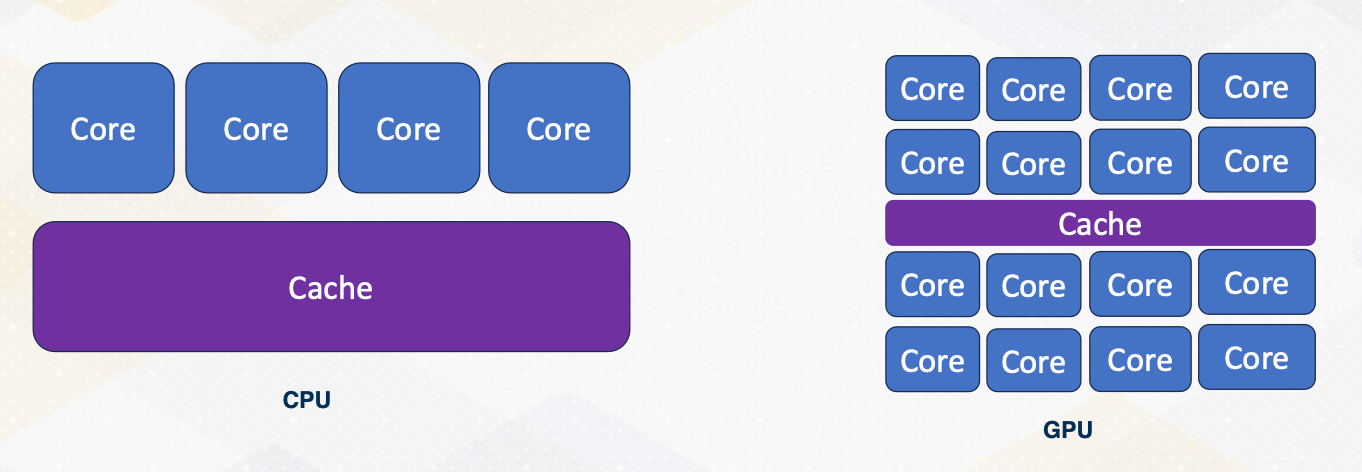

Have you ever wondered why GPUs are so relevant today? The answer can vary widely. While GPUs initially gained prominence in 3D graphics, they have since evolved into powerful parallel processors. Nowadays, GPUs are often associated with ML accelerators and high performance computing, showcasing their versatility. Let’s briefly compare CPUs and GPUs. One key distinction lies in their target applications. CPUs are tailored for single-threaded applications, aiming for minimum latency. In contrast, GPUs excel at parallelism, handling numerous threads simultaneously. CPUs prioritize precise exceptions, which are crucial for program correctness and debugging. GPUs, on the other hand, focus on parallel computation and don’t prioritize precise exceptions as much. CPUs also have dedicated hardware for handling O/S and I/O operations, which naturally leads to the CPU acting as the host and the GPU acting as an accelerator.

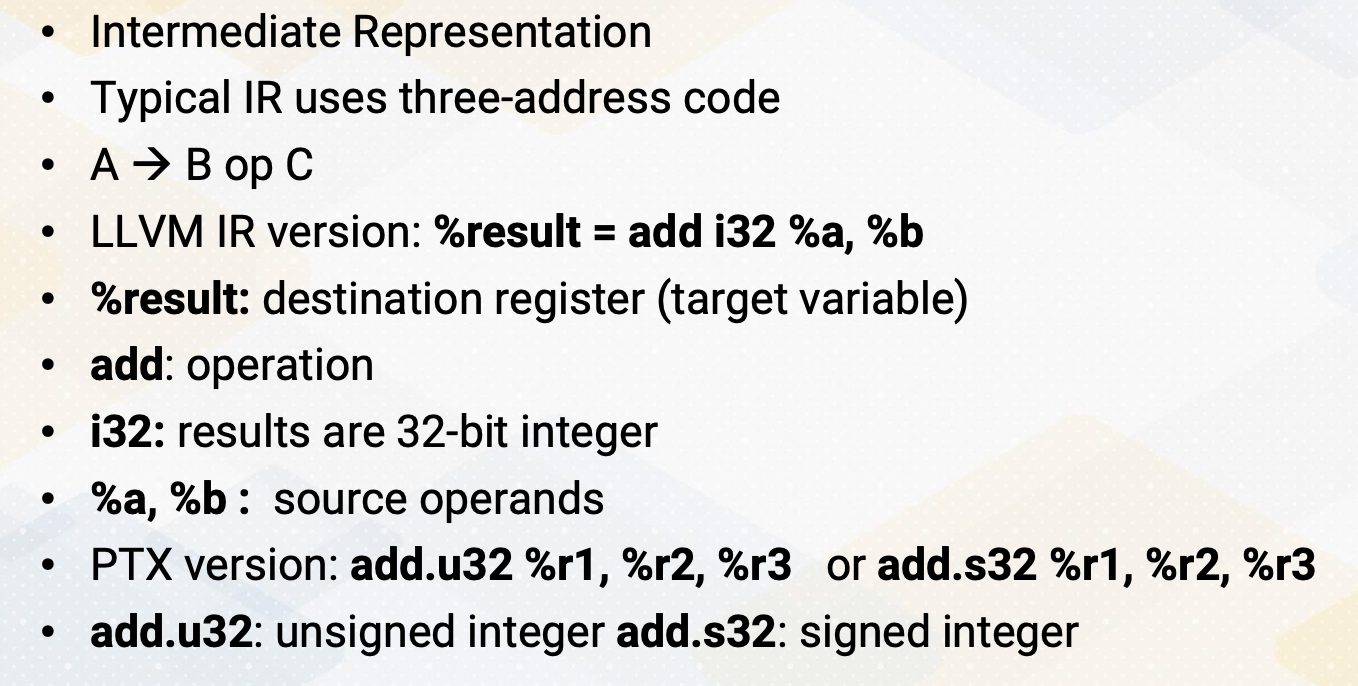

GPU ISA

ISA refers to instruction set architecture.

Let’s touch on ISA. CPUs typically feature public or open ISAs, ensuring software compatibility across different hardware platforms. In contrast, GPUs operate in accelerator mode, with a driver translating code from one ISA to another for the specific hardware. PTX was introduced to provide a public ISA; it is a virtual ISA rather than the native instruction set of any particular GPU.

| | CPU | GPU |
|---|---|---|
| Target applications | Latency sensitive applications | Throughput sensitive applications |
| Support precise exceptions? | Yes | No |
| Host-accelerator | Host | Accelerator |
| ISA | Public or open | Open/Closed |
| Programming model | SISD/SIMD | SPMD |

Additional information:

- SISD - Single Instruction, Single Data

- SIMD - Single Instruction, Multiple Data

- SPMD - Single Program, Multiple Data

SIMD (Single Instruction, Multiple Data):

- Imagine a group of chefs in a kitchen, all performing the same chopping motion (instruction) on different vegetables (data) at the same time. That’s SIMD!

- Strengths: Great for data-parallel tasks with repetitive operations on independent data elements. Think image processing, vector calculations, etc.

- Limitations: Requires data with consistent structure and can struggle with branching/conditional logic due to lockstep execution.

SPMD (Single Program, Multiple Data):

- Picture a team of artists, each working on a different section of the same painting (program) using their own techniques (data). That’s SPMD!

- Strengths: More flexible than SIMD, able to handle diverse tasks with branching and conditional logic. Often used for algorithms with complex workflows and distributed computing across multiple processors.

- Limitations: May have overhead due to thread management and communication needs.

Lastly, the programming model for CPUs primarily centers on single-threaded or single-data operations, while GPUs employ the single program, multiple data model, emphasizing parallelism.

Module 1 Lesson 2: Modern Processor Paradigms

Course Learning Objectives:

- Describe CPU design and techniques to improve the performance on CPUs

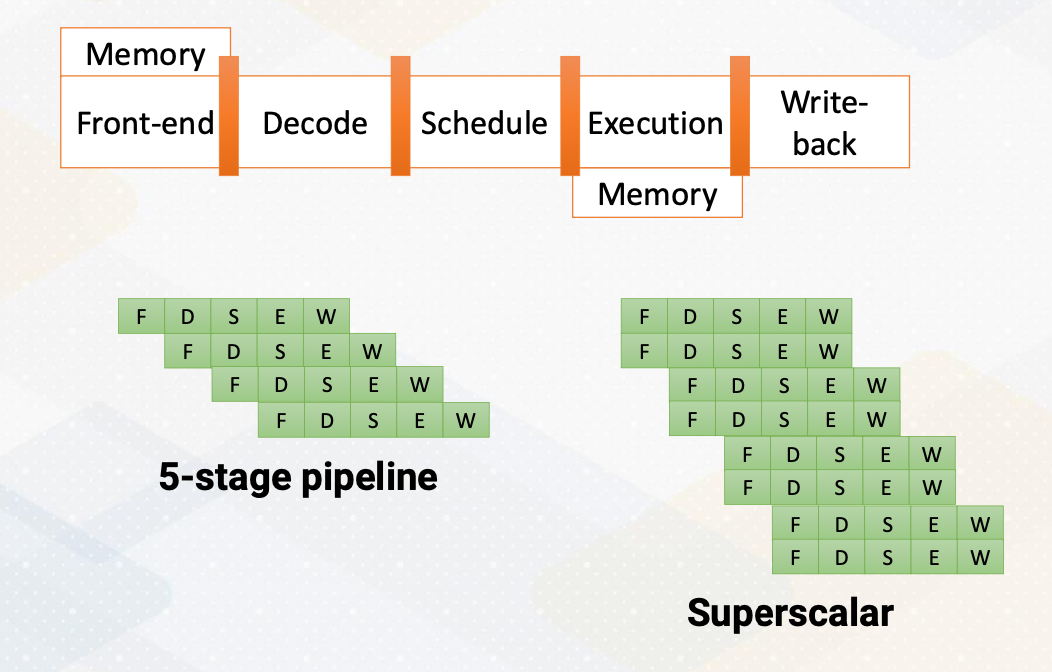

The foundation of CPU design consists of five stages: front-end (fetch), decode, rename, schedule, and execution.

- In the fetch stage, also called the front-end stage, instructions are fetched, typically from an instruction cache (I-cache). In the decode stage, an instruction is decoded. For an x86 instruction, a single instruction may generate multiple micro-operations (uops).

- After instructions are decoded, the register files are typically accessed.

- The scheduler selects which instructions will be executed. In an in-order processor, the scheduler selects instructions in program order.

- In an out-of-order processor, the scheduler selects whichever instructions are ready to be executed, regardless of program order, so it can find more ready instructions.

- The execution stage performs the actual computation or accesses memory.

- In the write-back stage, the result is written back.

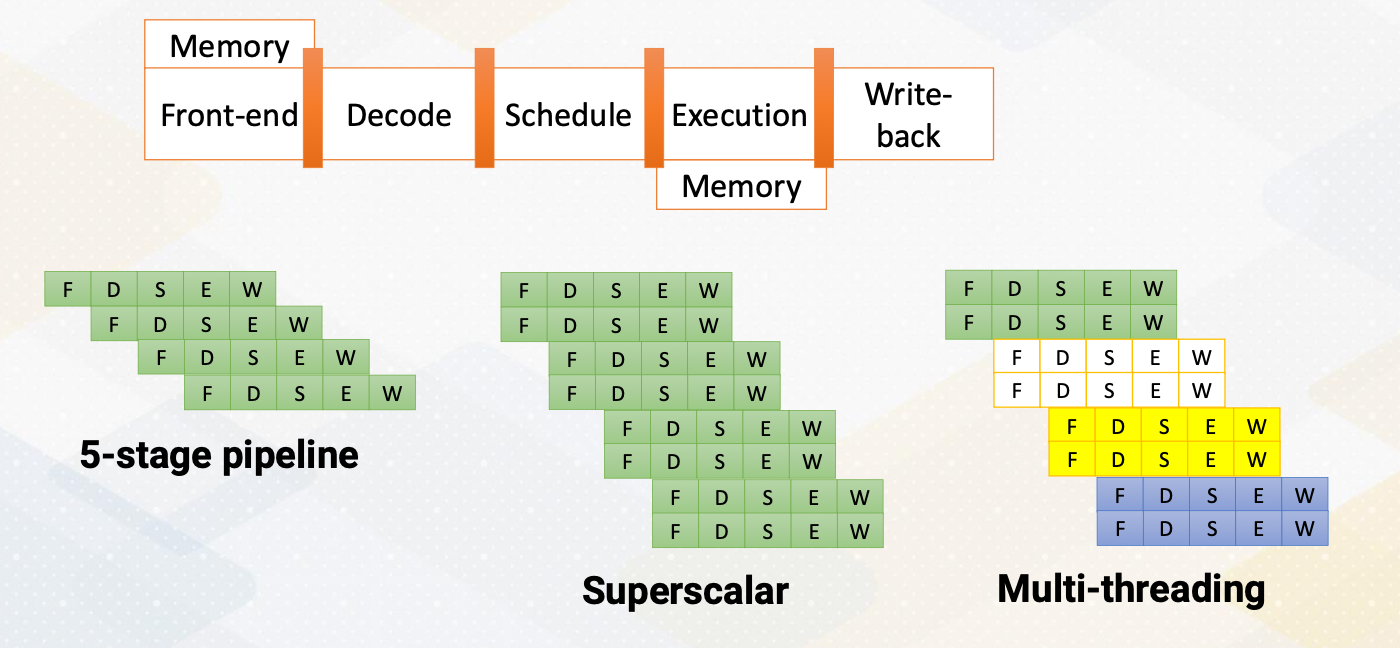

The above diagram illustrates the difference between a single-issue processor and a superscalar processor. A superscalar processor enhances CPU performance by executing more than one instruction per cycle: it fetches, decodes, and executes multiple instructions concurrently. A two-wide design, for example, can ideally double the throughput.

Increasing Parallelism (1)

There are several ways of increasing execution parallelism. A superscalar processor increases parallelism by handling more than one instruction at a time, which exploits instruction level parallelism (ILP). ILP is crucial for CPU performance because it determines how many instructions can run in parallel. Processors seek independent instructions to improve ILP and overall efficiency. In a superscalar processor, the instructions per cycle (IPC) can be greater than one.

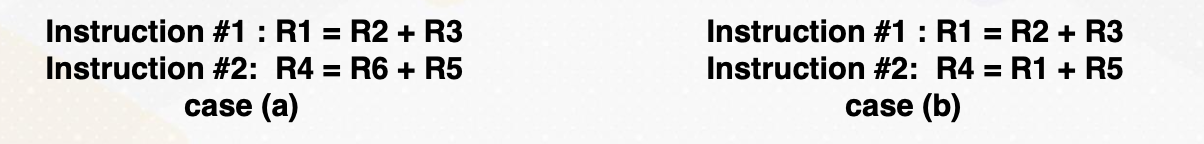

Instruction Level Parallelism

If a machine has two execution units, how many instructions can be executed simultaneously?

The slide shows two examples. In the first, the two instructions are independent, meaning they don’t rely on each other’s results. In this case, they can be executed concurrently, improving performance. In the second scenario, a dependency exists, so the instructions must execute one after the other.

- Instructions are independent in case (a). Both instructions can be executed together.

- Instructions are dependent in case (b). Instruction #2 can be executed only after instruction #1 is completed.

- Instruction level parallelism (ILP) quantifies how many instructions can be executed in parallel.

- An out-of-order processor helps to improve ILP by finding more independent instructions to execute.

- Improving ILP has been one of the main focuses in CPU designs.
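As a rough illustration of cases (a) and (b), the C++ sketch below sums an array in two ways. The function names `sum_dependent` and `sum_independent` are made up for these notes and are not from the lecture. Whether a real compiler and CPU actually overlap the independent additions depends on the optimization level and the hardware.

```cpp
#include <cstddef>
#include <vector>

// Case (b) style: every addition needs the previous value of `sum`,
// so the additions form one long dependency chain.
long long sum_dependent(const std::vector<int>& a) {
    long long sum = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        sum += a[i];
    }
    return sum;
}

// Case (a) style: `even` and `odd` never read each other's value, so the
// two additions in one iteration are independent and a machine with two
// execution units could issue them in the same cycle.
long long sum_independent(const std::vector<int>& a) {
    long long even = 0, odd = 0;
    std::size_t i = 0;
    for (; i + 1 < a.size(); i += 2) {
        even += a[i];
        odd += a[i + 1];
    }
    if (i < a.size()) even += a[i];  // leftover element when the size is odd
    return even + odd;
}
```

Both functions return the same total; only the shape of the dependency chain differs.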

Increasing CPU Performance

There are two main approaches. First, a deeper pipeline allows a higher clock frequency, but it requires better branch prediction. Second, larger caches reduce cache misses, thereby reducing memory access time significantly.

Increasing Parallelism (2)

Another way of increasing parallelism is multi-threading. Multi-threading increases CPU performance by keeping multiple threads available to the processor. By simply switching to another thread, the processor can examine more instructions that might be ready. Multi-threading is one of the key ingredients of high performance GPUs. Luckily, multi-threading does not require a significant amount of hardware resources.

Multiprocessors

Multiprocessors are another powerful way of increasing performance. A multiprocessor contains many processors, which scales up the total resources of the system. Multiprocessor techniques are commonly used in both CPUs and GPUs. In this video, we explored how CPUs can enhance their performance by optimizing instruction level parallelism, increasing operating frequency, and scaling the number of cores in the system.

Module 1 Lesson 3: How to Write Parallel Programs

Course Learning Objectives:

- Describe different parallel programming paradigms

- Recognize Flynn’s classical taxonomy

- Explore a CUDA program example

Amdahl’s law

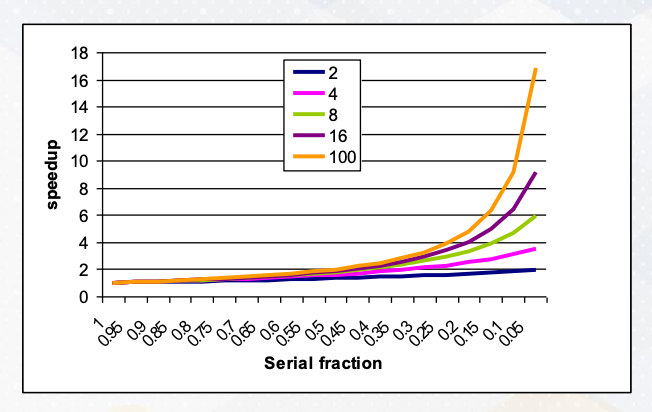

Let’s establish a fundamental concept in parallel programming known as Amdahl’s law. Imagine a program with a portion that can execute in parallel, running on a machine with many parallel processors. We can quantify the resulting performance boost using the formula:

\[\text{Speedup} = \frac{1}{\frac{P}{N}+S}\]

where P is the fraction of the work that can be parallelized, S is the serial fraction (S = 1 - P), and N is the number of processors involved.

Visualizing this, we see that when ample parallelism is available and the serial sections are minimal, performance gains scale almost linearly with the number of processors. However, when serial sections become significant, performance scalability takes a hit. This underscores the critical importance of parallelizing applications with minimal serial sections and abundant parallelism. Even if you possess ample hardware resources, a serial fraction of just 10% caps the speedup below 10x, no matter how many processors you add.

What to Consider for Parallel Programming

When we can parallelize the work, we can use parallel processors. But how do we write effective parallel programs?

- Increase parallelism by writing parallel programs

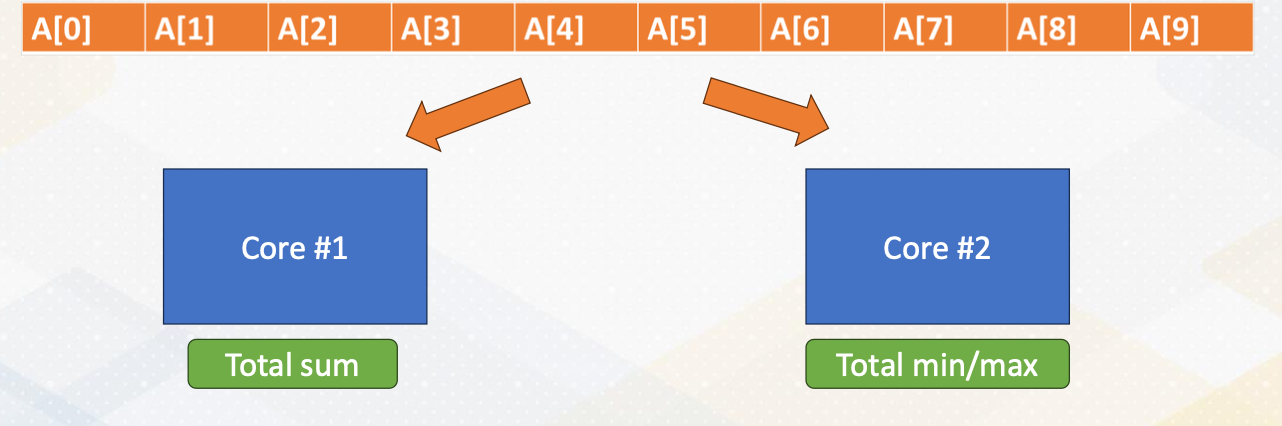

- Consider two operations on an array: adding up its elements and finding the min/max value

Task decomposition

- One core performs addition.

- The other core performs min/max operation

- Array [A] has to be sent to both cores.

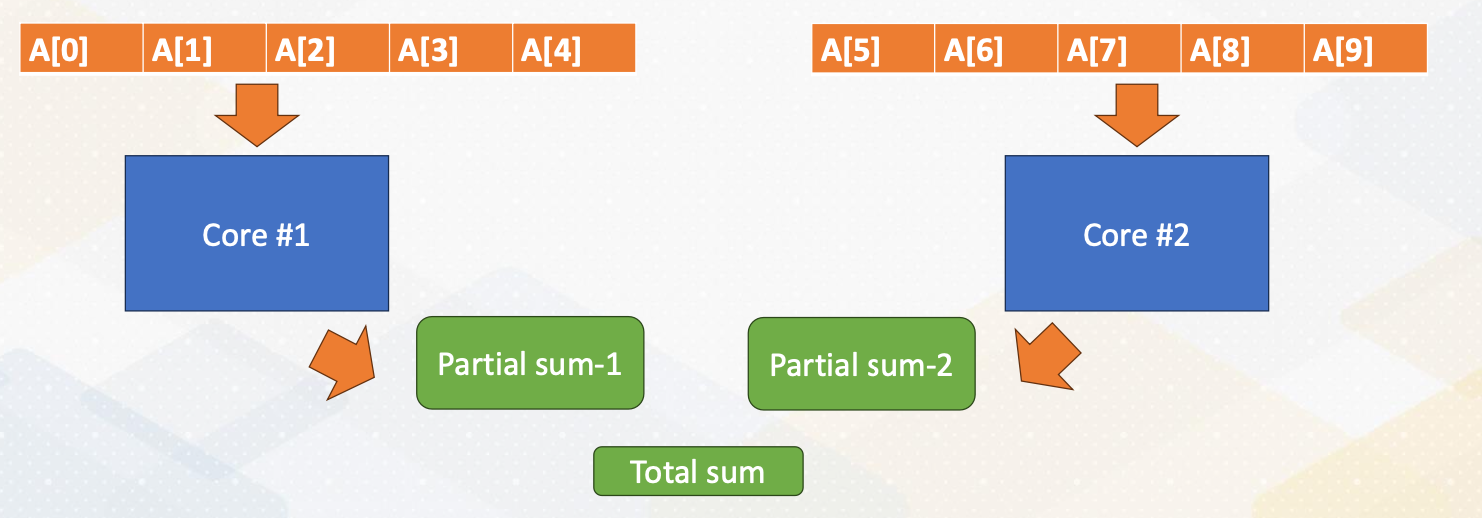
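Task decomposition can be sketched with two C++ threads, each running a different task over the same array. This is only an illustration under assumptions: the `ArrayStats` struct and the `task_decomposition` function are hypothetical names, and a shared-memory machine is assumed, so "sending" array A to both cores just means both threads read it.

```cpp
#include <algorithm>
#include <numeric>
#include <thread>
#include <vector>

struct ArrayStats {
    long long sum;
    int min;
    int max;
};

// Task decomposition: each thread runs a *different* task over the whole
// array. Assumes `a` is non-empty.
ArrayStats task_decomposition(const std::vector<int>& a) {
    ArrayStats stats{0, 0, 0};
    // Thread 1 performs the addition.
    std::thread adder([&] {
        stats.sum = std::accumulate(a.begin(), a.end(), 0LL);
    });
    // Thread 2 performs the min/max operation.
    std::thread min_max_finder([&] {
        auto mm = std::minmax_element(a.begin(), a.end());
        stats.min = *mm.first;
        stats.max = *mm.second;
    });
    adder.join();
    min_max_finder.join();
    return stats;
}
```

The two threads write to different fields of `stats`, so no locking is needed here.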

Data decomposition

- Split data into two cores

- Reduction operation is needed (both sum and min/max).

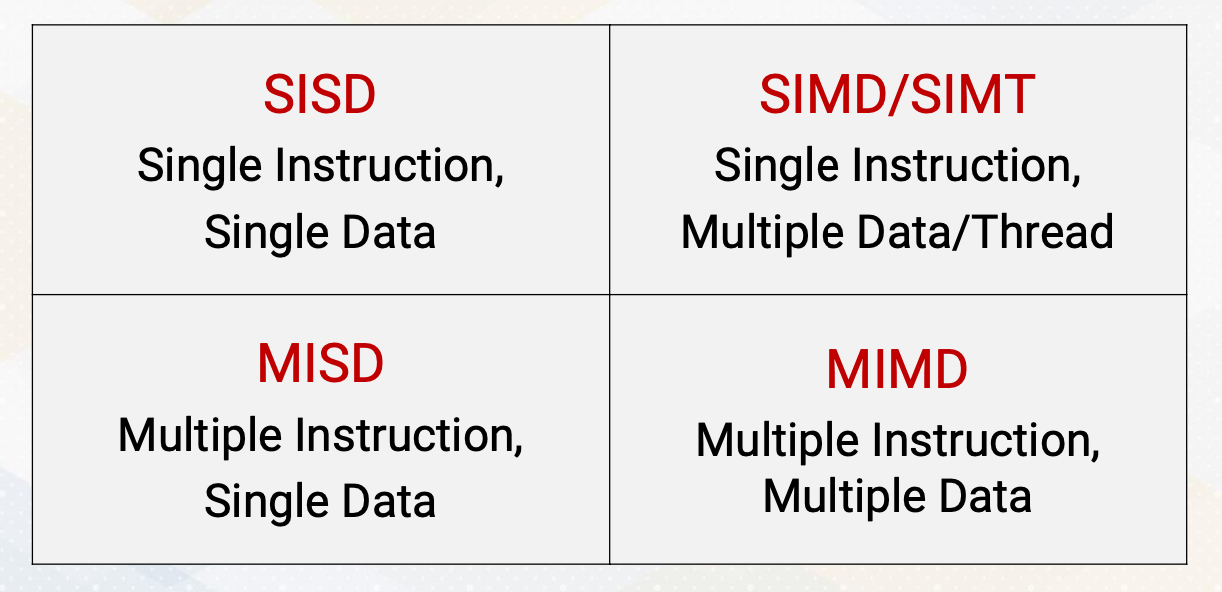

Flynn’s Classical Taxonomy

- SISD - Single Instruction, Single Data

- SIMD/SIMT - Single Instruction, Multiple Data / Thread

- MISD - Multiple Instruction, Single Data

- MIMD - Multiple instruction, Multiple Data

CPU Vs GPU:

- CPUs can be SISD, SIMD, and MIMD.

- And GPUs are typically SIMT.

The difference between SIMD and SIMT will be discussed later. Both fetch one instruction and operate on multiple data elements. CPUs use vector processing units for SIMD, while GPUs implement SIMT with many ALUs (arithmetic logic units).

Another term to know is SPMD (Single Program, Multiple Data) programming, which is the style GPUs favor.

- All cores (threads) perform the same work (based on the same program), but they work on different data.

- Data decomposition is the typical programming pattern of SPMD.

- SPMD is the typical GPU programming pattern, and the hardware’s execution model is SIMT.

- GPU programming uses the SPMD programming style, which utilizes SIMT hardware.

To exemplify SPMD, let’s take a look at a CUDA program example. A CUDA program has host code and kernel code. Here we’ll focus on the kernel code, which runs on the GPU device. The following code computes the sum of an array. Each thread runs on the GPU and performs a specific task: adding its own element of array A to the sum. Thread IDs differentiate the tasks, and all threads execute in parallel.

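```cuda
// Sketch: the parameter list, bounds check, and atomicAdd are assumptions added to make the slide pseudocode compile.
__global__ void vector_sum(const int *A, int *sum, int n) {
    int index = blockIdx.x * blockDim.x + threadIdx.x;  // differentiate by thread ids
    if (index < n)
        atomicAdd(sum, A[index]);  // a plain `*sum += A[index]` would race across threads
}
```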

All cores(threads) are executing the same vector_sum().

Module 1 Lesson 4: Introduction to GPU Architecture

Course Learning Objectives:

- Gain a high-level understanding of GPU architecture

- Describe key terms including “streaming multiprocessors”, “warp”, and “wave-front”

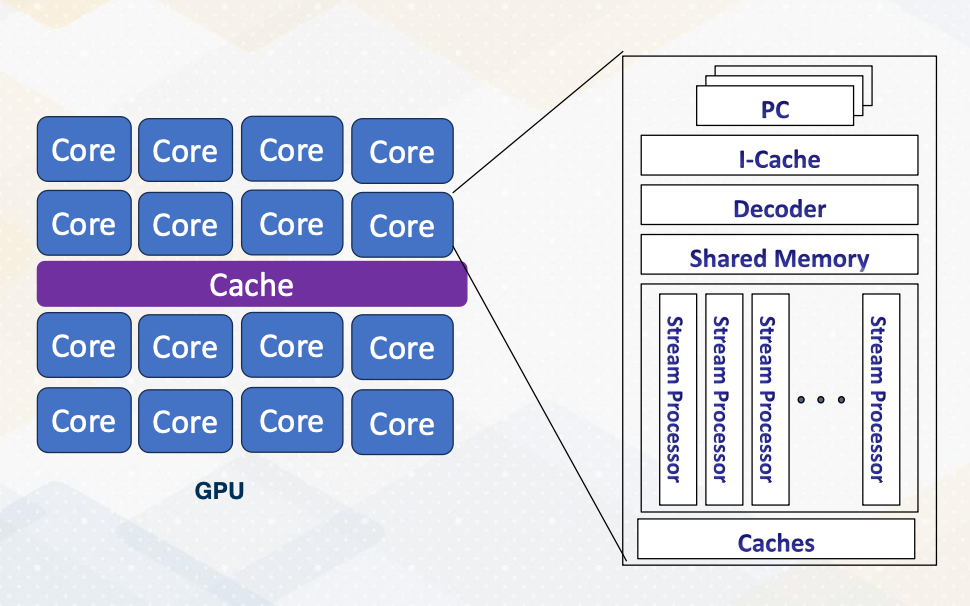

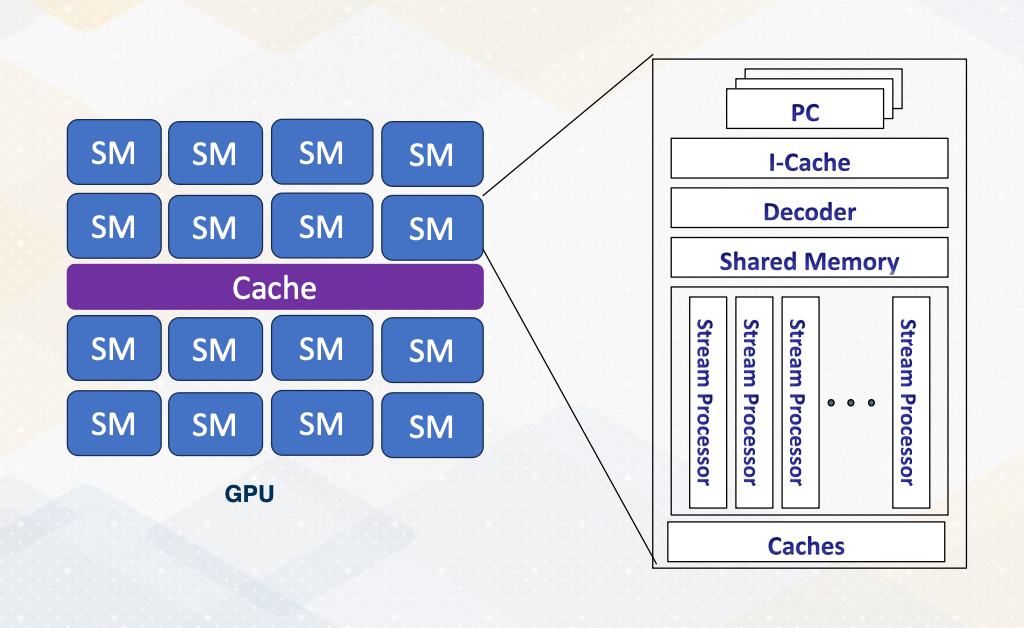

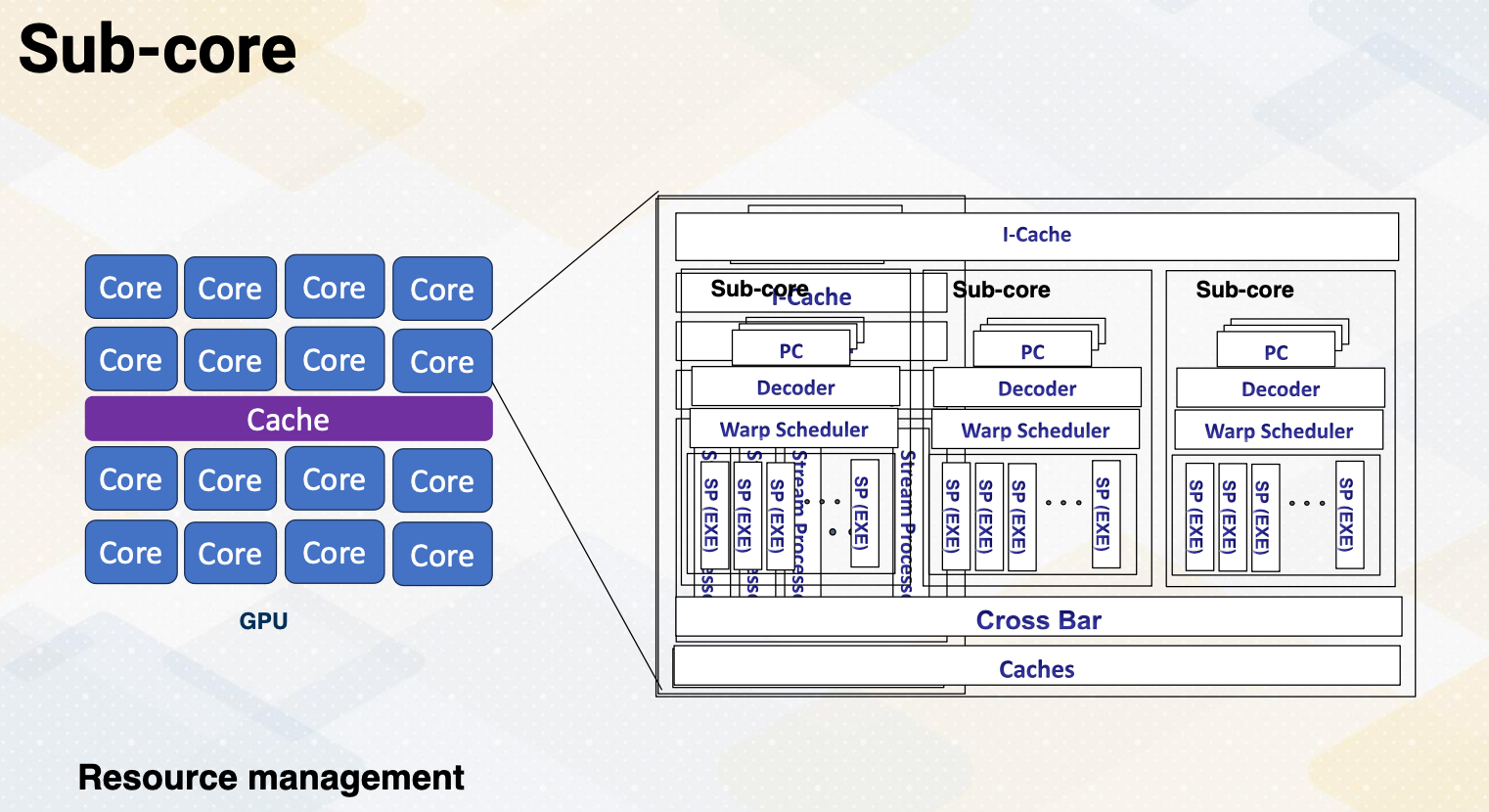

GPU Architecture Overview

- Each core can execute multiple threads.

- The GPU counterpart of a CPU core is the “streaming multiprocessor (SM)” in NVIDIA terms, also called a SIMT multiprocessor.

- Stream processors (SPs) are the ALUs, or SIMT lanes; they are also called “cores” on GPUs.

- In this course core ≠ stream processor

This slide shows an overview of GPU architecture. A GPU is composed of numerous cores, each of which can execute multiple threads. These cores work in harmony to handle complex tasks. The concept closest to a CPU core is the streaming multiprocessor. Inside one SM you will find various components, including an instruction cache, a decoder, shared memory, and multiple execution units, referred to as stream processors in GPU terminology. Each stream processor is essentially one SIMT lane or ALU, and it is what is called a core on GPUs. To reduce confusion between CPU cores and GPU cores, in this course we will not refer to a SIMT lane as a core.

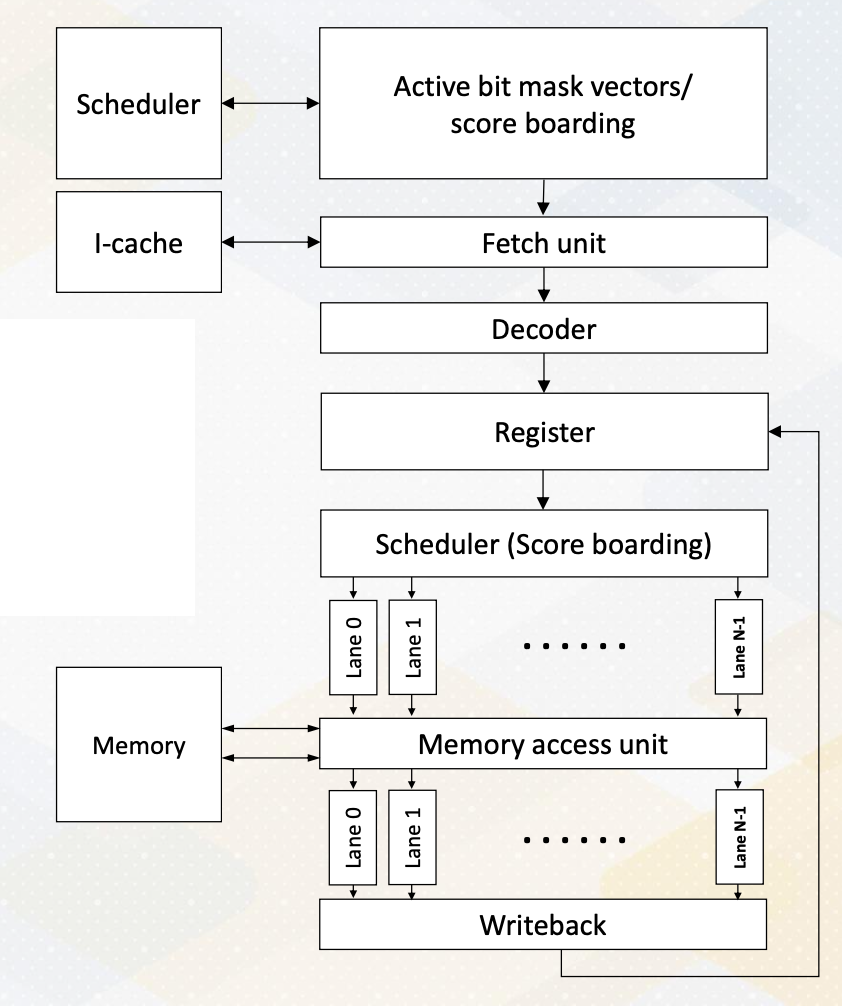

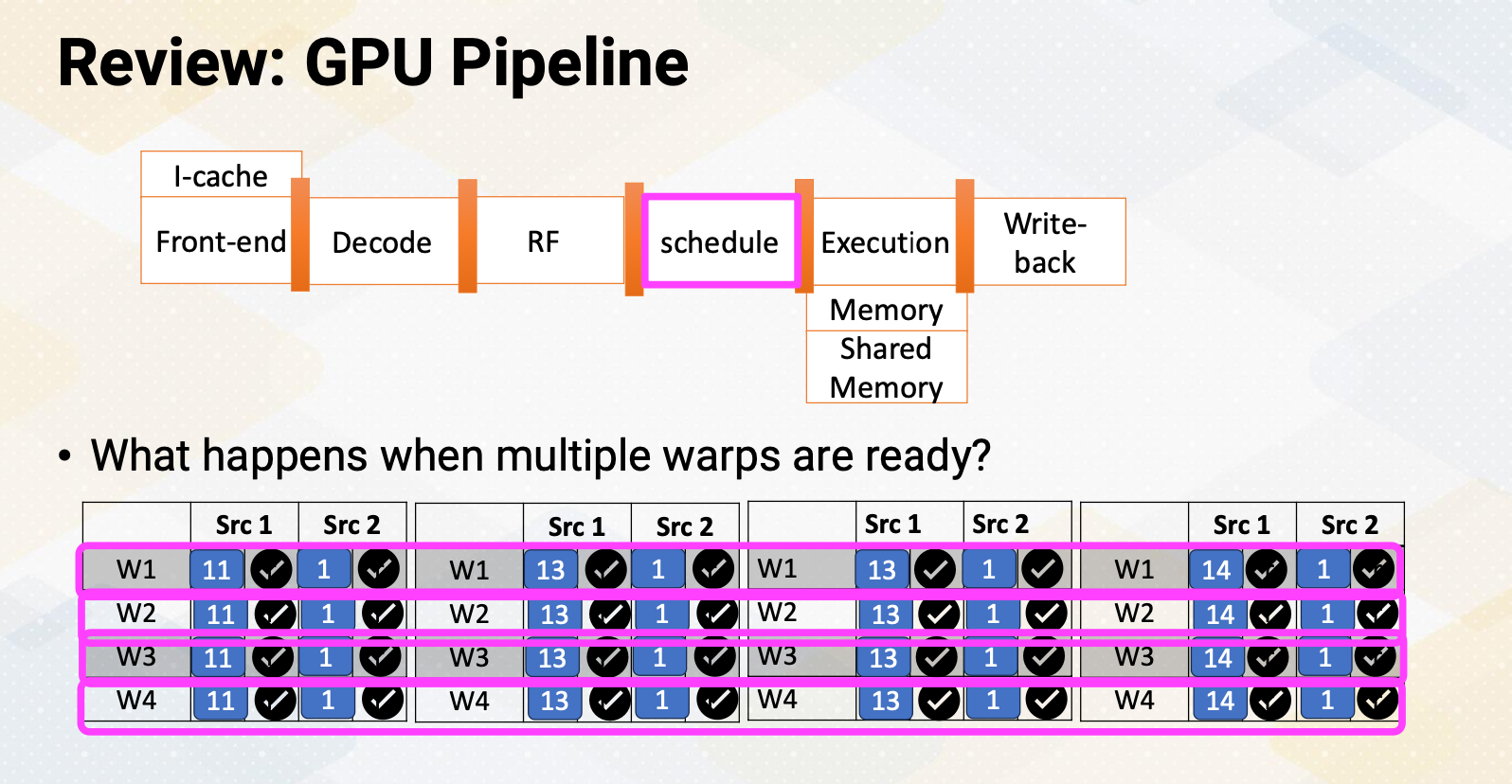

GPU Pipeline

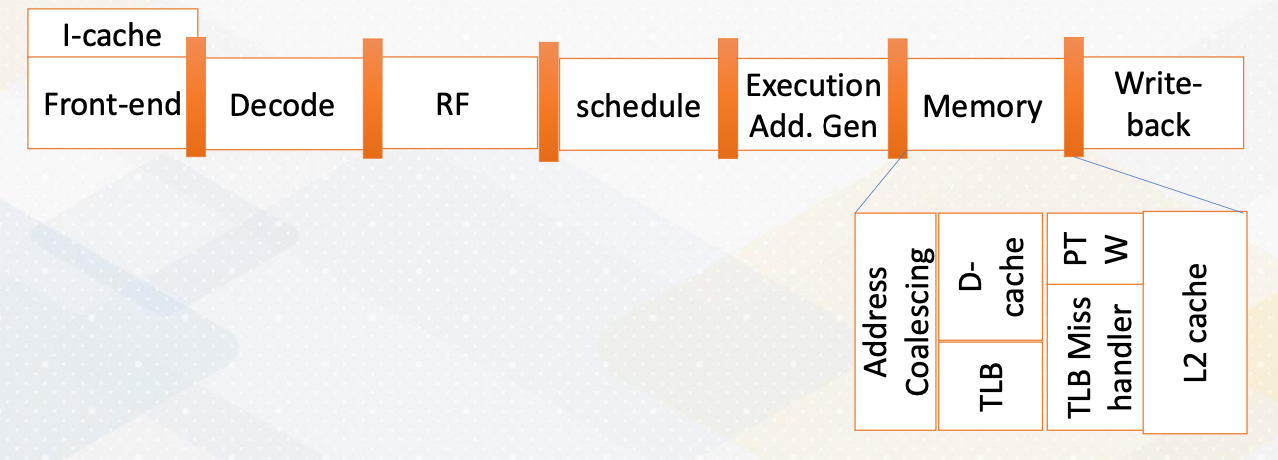

This slide shows a GPU pipeline.

- The pipeline includes the fetch stage, where each work item is fetched.

- Multiple PC (program counter) registers are used to support the multi-threaded architecture.

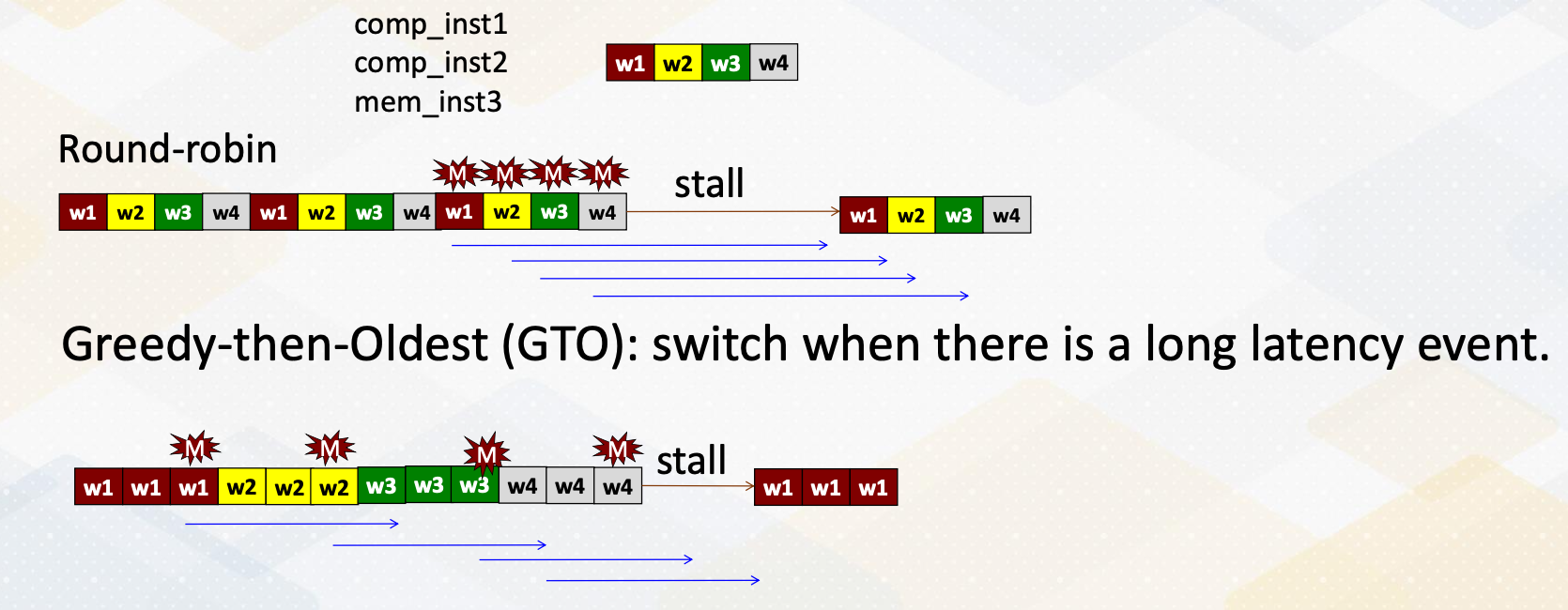

- There are various schedulers, such as the round-robin scheduler or the more sophisticated greedy scheduler, which selects tasks based on factors like cache misses or branch predictions.

- The decode stage processes the fetched instructions, and the register values are read.

- Schedulers or scoreboarding logic select ready warps.

- The selected warps are executed by the execution units, and then the results are written back.

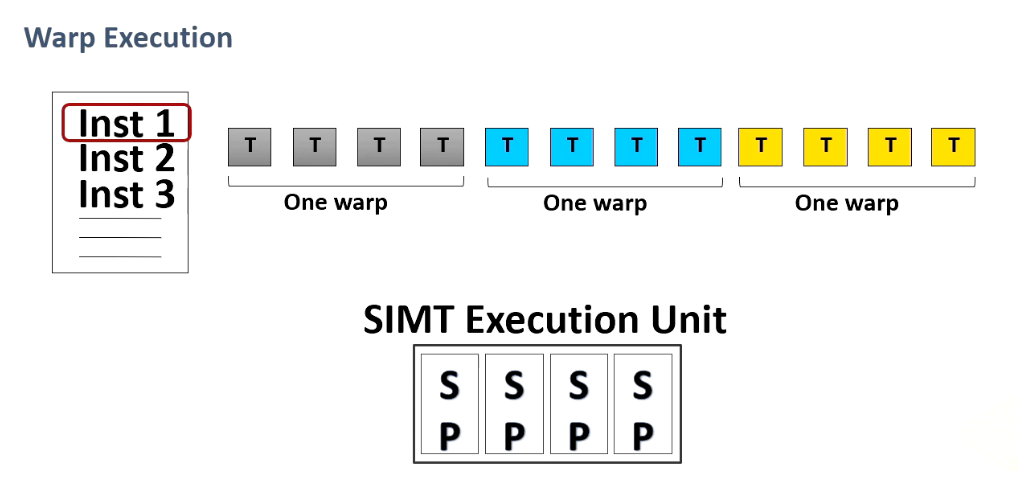

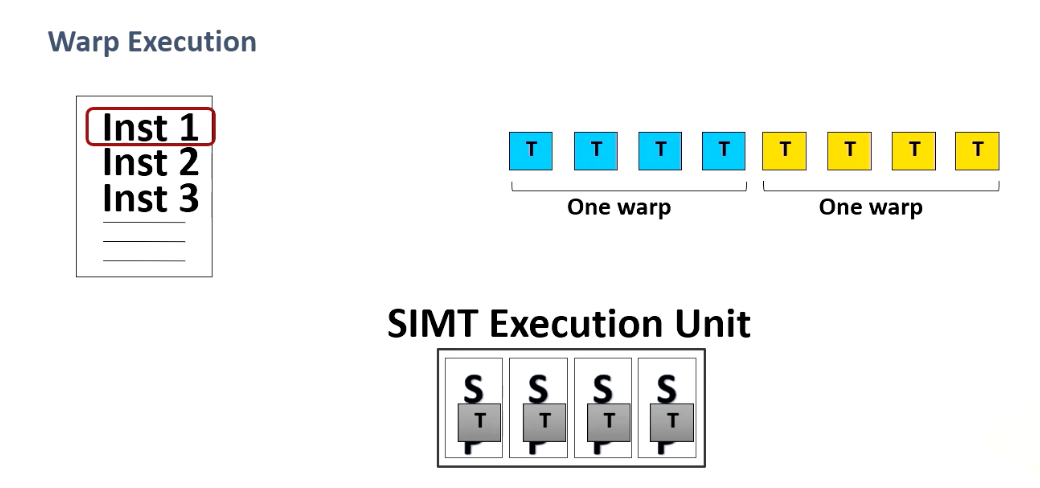

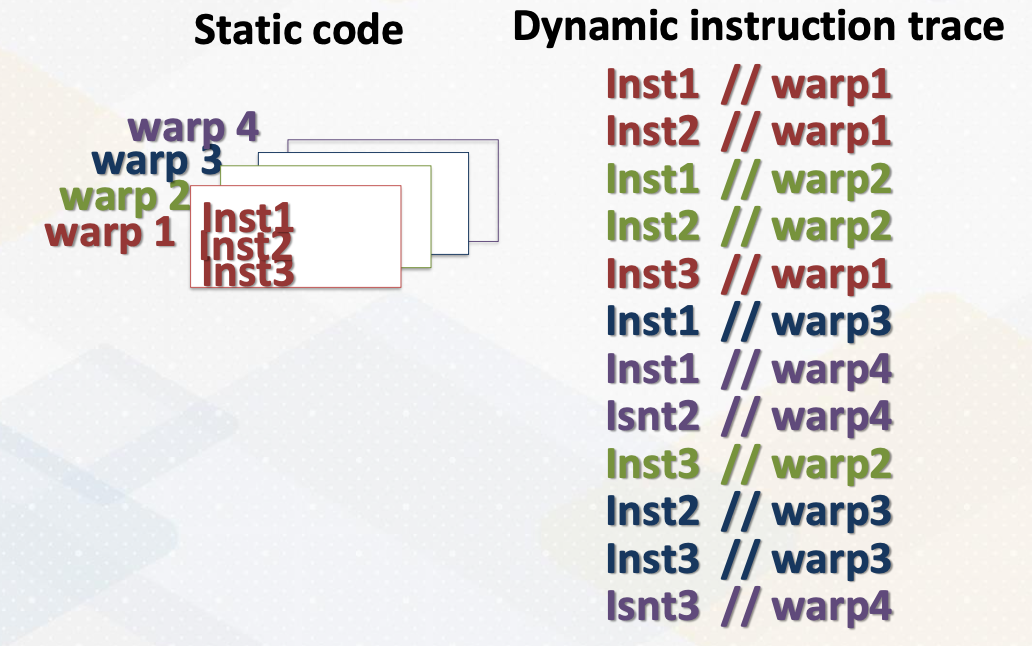

Execution Unit: Warp/Wave-front

In this course, warp and wave-front are used interchangeably.

Multiple threads form what’s known as a warp or wave-front, which is the fundamental unit of execution. A single instruction is fetched per warp and is executed by all of the warp’s threads. In micro-architecture, the warp size is a pivotal parameter. It has remained constant at 32 for an extended period, but this could change in the future.

This diagram illustrates warp execution. The program has multiple instructions, the programmer specifies the number of threads for a kernel, and the SIMT execution unit runs those threads grouped into warps.

For a given instruction, when the source operands are ready, the entire warp is executed. When another warp is ready, it is executed, and then another. In this example the programmer specifies 12 threads and the warp size is four threads, so the threads form three warps. All four threads of a warp execute together, one warp at a time.
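The grouping arithmetic in this example can be sketched in C++. The names `WarpSlot`, `group_into_warps`, and `num_warps` are hypothetical, and the sketch assumes that consecutive thread IDs are packed into the same warp.

```cpp
#include <vector>

struct WarpSlot {
    int warp_id;  // which warp the thread belongs to
    int lane_id;  // position of the thread inside its warp
};

// Consecutive thread ids are grouped into warps of `warp_size` threads.
std::vector<WarpSlot> group_into_warps(int num_threads, int warp_size) {
    std::vector<WarpSlot> slots;
    for (int tid = 0; tid < num_threads; ++tid) {
        slots.push_back({tid / warp_size, tid % warp_size});
    }
    return slots;
}

// Number of warps needed; the last warp may be only partially filled.
int num_warps(int num_threads, int warp_size) {
    return (num_threads + warp_size - 1) / warp_size;
}
```

With 12 threads and a warp size of four, `num_warps` returns 3, and thread 5 lands in warp 1, lane 1.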

Module 1 Lesson 5: History Trend of GPU Architectures

Course Learning Objectives:

- Describe the evolution history of GPUs

- Describe the evolution of traditional 3D graphics pipelines

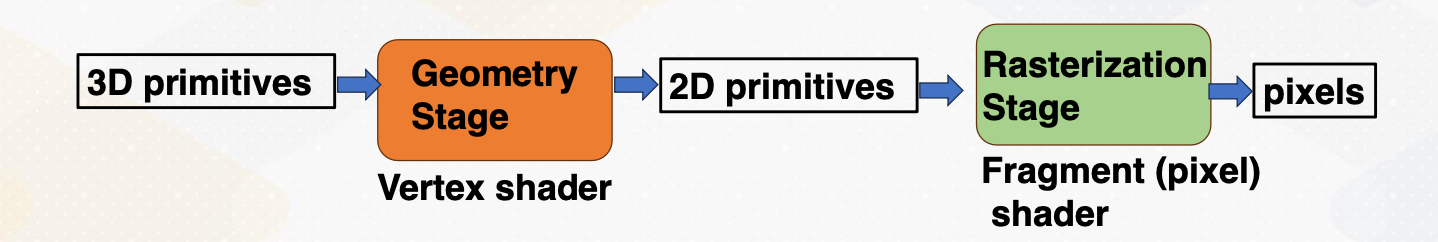

Traditional 3D Graphics Pipeline

- Traditional pipelined GPUs: separate vertex processing and pixel processing units

- Programmable GPUs: introduction of programmable units for vertex/pixel shader

- Vertex/pixel shader progression to CUDA programmable cores (GPGPU)

- Modern GPUs: addition of ML accelerator components (e.g., tensor cores), programmable features.

Let’s look at a traditional 3D graphics pipeline. In the early days, GPUs were born from the 3D graphics tradition, where their primary role was to process 3D primitives provided as input. These primitives went through various stages, including geometry processing, eventually resulting in 2D primitives, which were then rendered into pixels on your screen.

This pipeline formed the core of early GPU architectures. As GPUs evolved, so did their capabilities. The shaders, which were initially fixed function units, gradually became more programmable. This transformation paved the way for unified shader architectures, a hallmark of the Tesla architecture. With this innovation, GPUs became versatile tools capable of handling both graphics and general purpose computing tasks.

The era of GPGPU programming had begun. The term GPGPU was used to distinguish the use of GPUs for general purpose computing. Nowadays, GPUs are widely used for much more than 3D graphics, so this distinction is no longer necessary. With each generation, GPUs extended their capabilities further. Vertex and pixel shaders gave way to more powerful programmable cores, and components such as tensor cores were added to accelerate tasks beyond traditional graphics processing. The application space for GPUs expanded dramatically, encompassing tasks like machine learning acceleration and more.

Programmable GPU Architecture Evolution

- Cache hierarchies (L1, L2, etc.)

- Extend FP 32 bits to FP 64 bits to support HPC applications

- Integration of atomic and fast integer operations to support more diverse workloads

- Utilization of HBM memory (high bandwidth memory)

- Addition of smaller floating point formats (FP16) to support ML workloads

- Incorporation of tensor cores to support ML workloads

- Integration of transformer cores to support transformer ML workloads

Programmable GPU architectures have evolved in various directions. First, they introduced L1 and L2 caches. They also increased floating point precision from single precision (32 bits) to double precision (64 bits) to accommodate more high performance computing applications. They also introduced atomic and fast integer operations to support more diverse workloads.

The introduction of high bandwidth memory (HBM) marked another significant milestone. HBM, integrated into the GPU’s memory subsystem, offers very high data transfer rates, which GPUs leverage to meet the demands of modern workloads. To increase ML computation parallelism, GPUs also support smaller floating point formats such as FP16. They also adopted tensor cores and transformer cores to support ML applications.

NVIDIA H100

Let’s look at the H100, NVIDIA’s latest GPU architecture as of 2023.

- It supports various precisions: FP32, FP64, FP16, INT8, and FP8 formats.

- It has tensor cores, similar to previous generations of GPUs.

- It introduced a new transformer engine. ML cores, GPU cores, and tensor cores play pivotal roles in meeting the demands of modern ML applications.

- Increased capacity of large register files

- Concepts like the tensor memory accelerator promise even greater capabilities.

- Furthermore, the number of streaming multiprocessors (SMs) and the number of floating point (FP) units keep increasing to provide more compute capability.

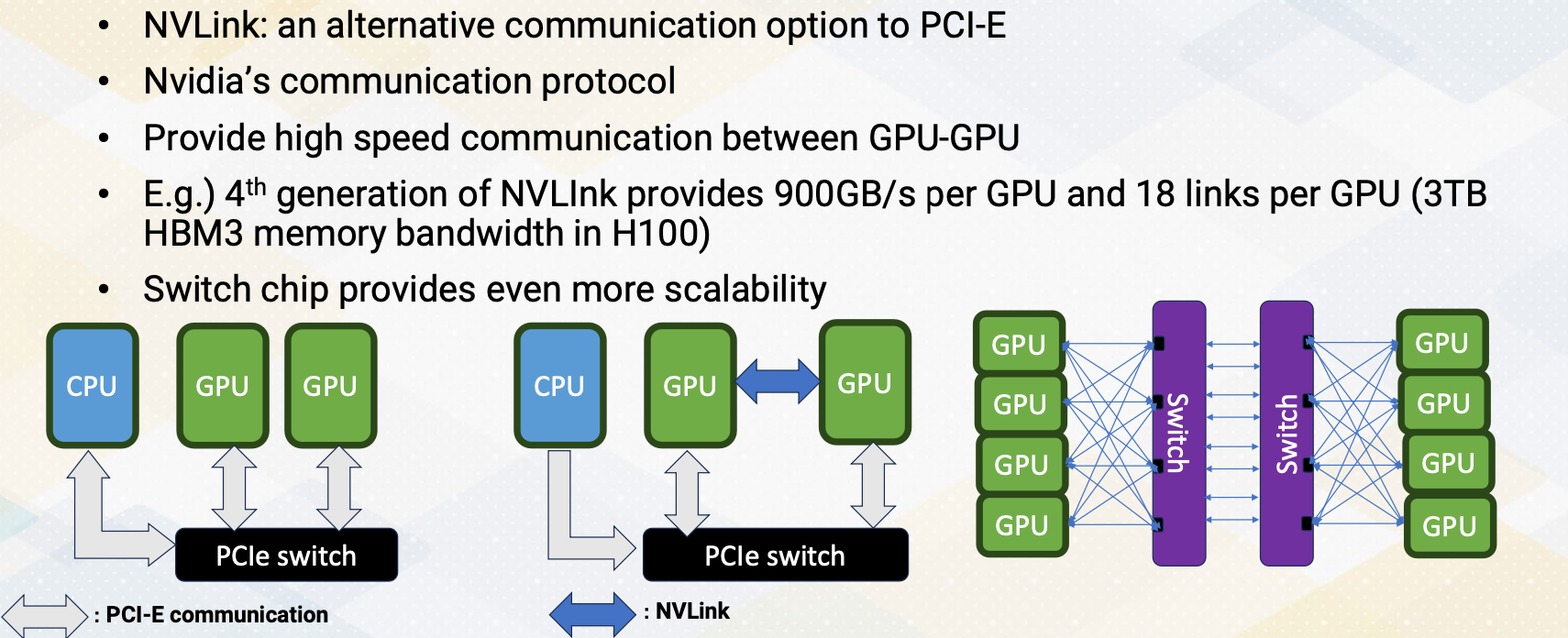

- On top of that, it uses NVIDIA NVLink switch system to connect multiple GPUs to increase parallelism even more.

Module 2: Parallel Programming

Objectives

- Describe the basic background of GPUs

- Explain the basic concept of data parallel architectures

Readings

Required Reading:

Optional Reading:

Module 2 Lesson 1: Parallel Programming Patterns

Course Learning Objectives:

- Provide an overview of different parallel programming paradigms

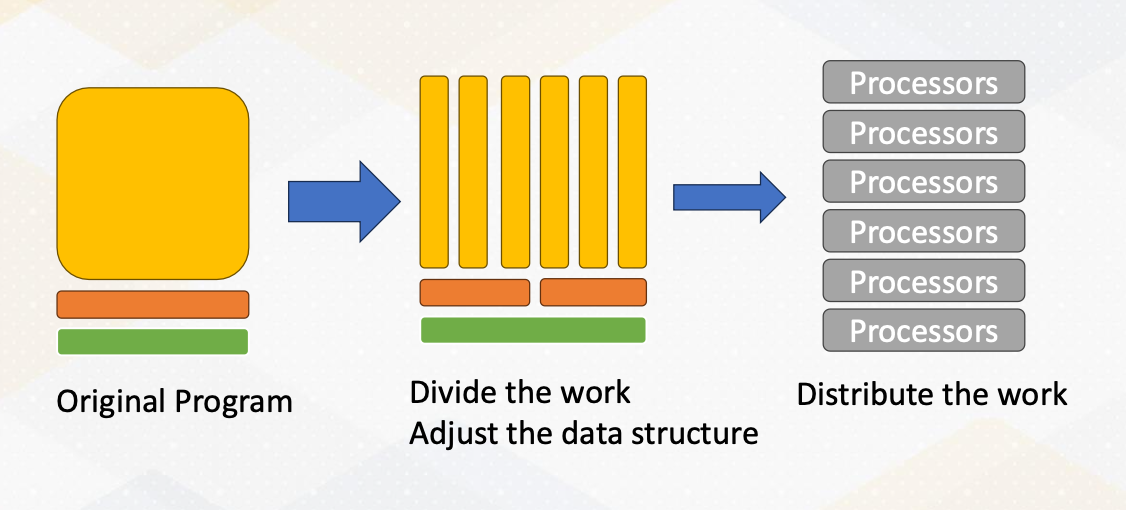

How to Create a Parallel Application

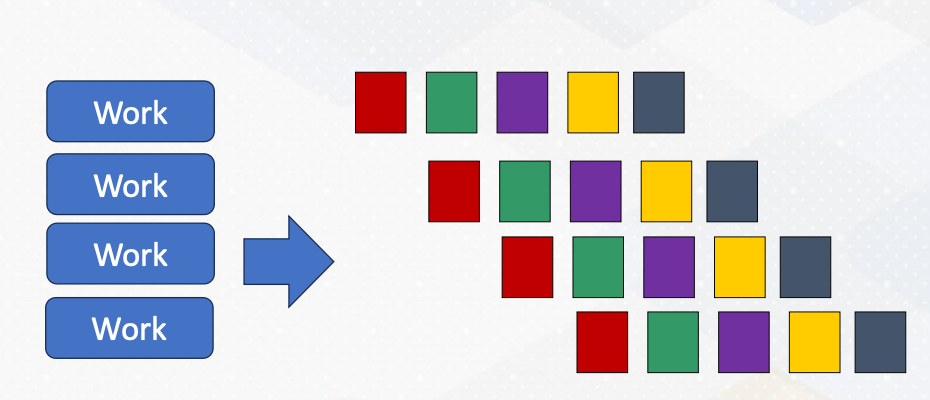

The original program has many tasks. We divide the work and adjust the data structures so the tasks can execute in parallel. Then we distribute the work to multiple processors.

Steps to Write Parallel Programming

- Step 1, discover concurrency.

- The first step is to find the concurrency within your problem. This means identifying opportunities for parallelism in your task or problem. This is often the starting point for any parallel programming.

- Step 2, structuring the algorithm.

- Once you’ve identified the concurrency, the next step is to structure your algorithm in a way that can effectively exploit this parallelism. Organizing your code is key to harnessing the power of parallelism.

- Step 3, implementation.

- After structuring your algorithm, it’s time to implement it in a suitable programming environment. In this step, you choose which programming language and tools to use.

- Step 4, execution and optimization.

- With your code written, it’s time to execute it on a parallel system. During this phase, you will also focus on fine-tuning the code to achieve the best possible performance.

Parallel Programming Patterns

There are five popular parallel programming patterns:

- Master/Worker Pattern

- SPMD Pattern (Single Program, Multiple Data)

- Loop Parallelism Pattern

- Fork/Join Pattern

- Pipeline Pattern

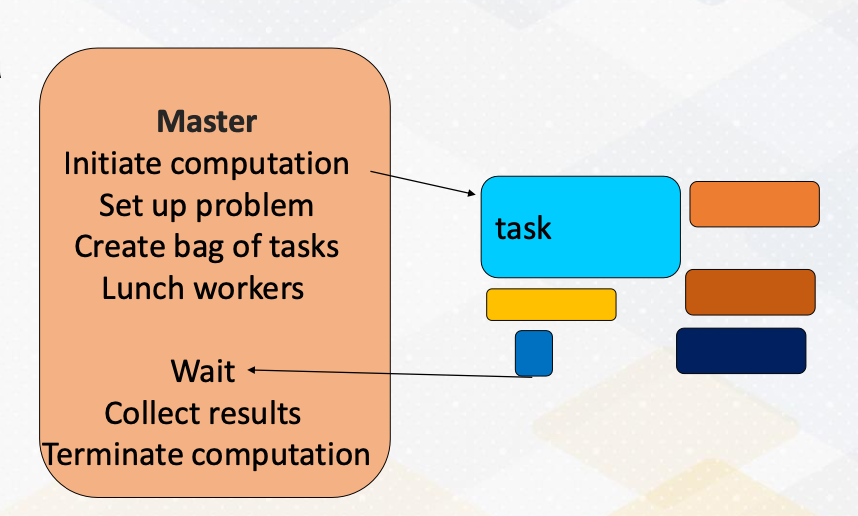

Master/Worker Pattern

First of all, we have the master/worker pattern. It is single program, multiple data. A master process or thread manages a pool of worker processes/threads and a task queue. In this figure, the orange box represents the master thread.

The workers execute tasks concurrently by dequeuing them from the shared task queue.

In this figure, the differently colored boxes show worker threads executing different tasks. The master/worker pattern is particularly useful for tasks that can be broken down into smaller independent pieces.

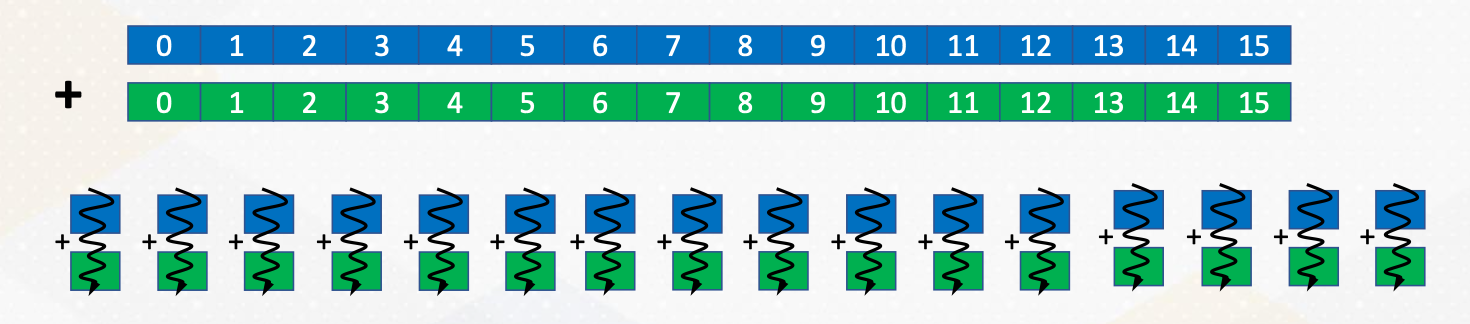

Loop Parallelism Pattern

```cpp
for (int i = 0; i < 16; i++)
    C[i] = A[i] + B[i];
```

Next, the loop parallelism pattern. Loops are a common and excellent candidate for parallelism. What makes them appealing for parallel programming is that many loops consist of repetitive, independent iterations. This means that each iteration can be executed in parallel. Another note is that, unlike the master/worker pattern, the tasks inside a loop are typically the same.

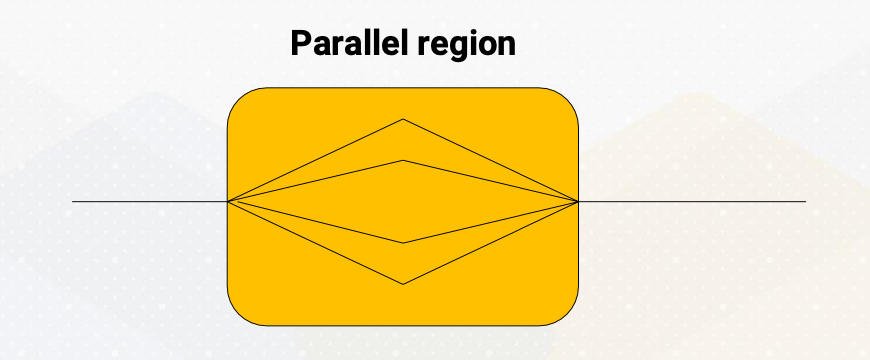

SPMD Pattern

Single program, multiple data

Now let’s talk about SPMD programming pattern. SPMD stands for single program multiple data. In this approach, all processing elements execute the same program in parallel, but each has its own set of data. This is a popular choice, especially in GPU programming.

Fork/Join Pattern

The fourth paradigm is the fork/join pattern. Fork/join combines both serial and parallel processing. A parent task creates new child tasks, which is called fork, then waits for their completion, which is called join, before continuing with the next task. This pattern is often used in programs with a single entry point.
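One possible way to sketch fork/join in standard C++ is with `std::async`. The function name `fork_join_sum` is made up for illustration, and summing two halves of an array is just a convenient stand-in for real work.

```cpp
#include <future>
#include <numeric>
#include <vector>

// Fork/join: the parent forks a child task for the first half, works on the
// second half itself, then joins (waits for the child) before continuing.
long long fork_join_sum(const std::vector<int>& a) {
    auto mid = a.begin() + a.size() / 2;
    // Fork: launch the child task on another thread.
    std::future<long long> child = std::async(std::launch::async, [&a, mid] {
        return std::accumulate(a.begin(), mid, 0LL);
    });
    // The parent keeps working while the child runs.
    long long parent_part = std::accumulate(mid, a.end(), 0LL);
    // Join: get() blocks until the child task has finished.
    return child.get() + parent_part;
}
```

The serial part of the program resumes only after `get()` returns, which mirrors the join step described above.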

Pipeline Pattern

Finally, let’s explore the pipeline pattern. The pipeline pattern resembles a CPU pipeline where each parallel processor works on different stages of a task. It’s an ideal choice for processing data streams. Examples include: signal processing, graphics pipelines, and compression workflows, which consist of decompression, work, and compression.

This animation illustrates a pipeline programming pattern. Each color represents a different pipeline stage. Data arrives continuously, and because each stage works on a different piece of data at the same time, the pipeline provides parallelism.

Module 2 Lesson 2: OpenMP vs. MPI (Part 1)

Explore shared memory programming, distributed memory programming, and take a closer look at the world of OpenMP.

Course Learning Objectives:

- Explain the fundamental concepts of shared memory programming

- Describe key concepts essential for shared memory programming

- Explore the primary components of OpenMP programming

Programming with Shared Memory

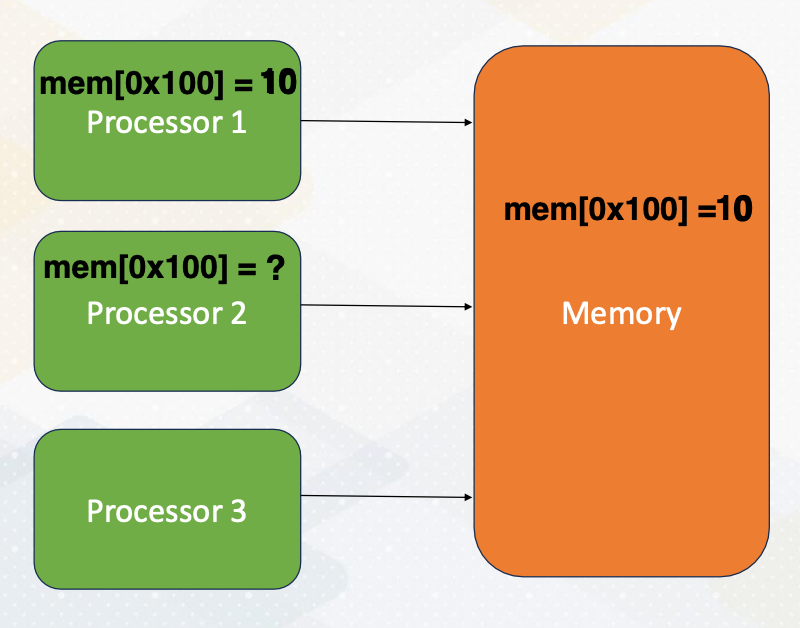

Let’s start by understanding shared memory programming, a core concept in parallel computing. In shared memory systems, all processors can access the same memory. The following animation illustrates the steps. First, processor 1 updates a value, then processor 2 can observe the updates made by processor 1, by simply reading values from the shared memory.

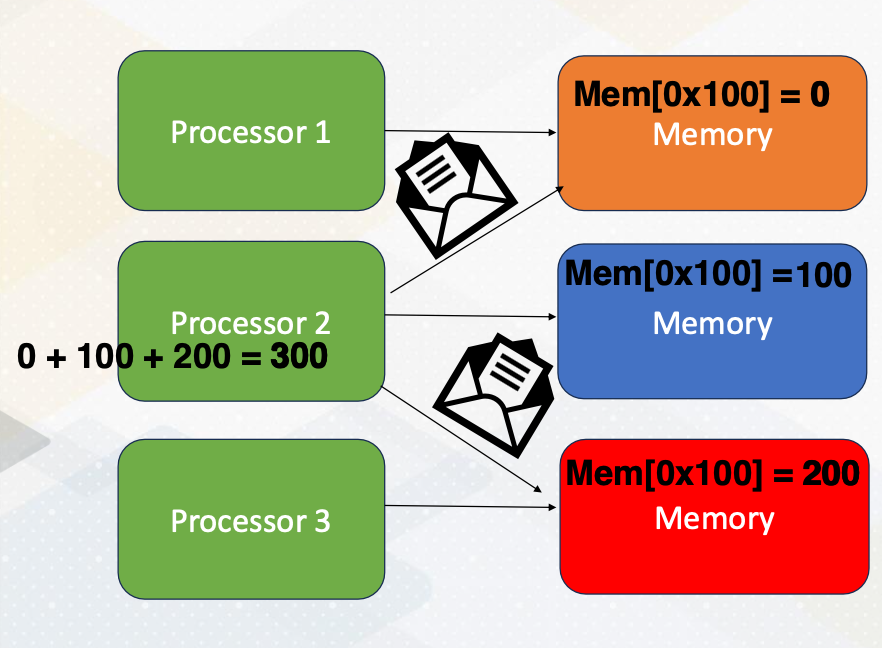

Programming with Distributed Memory

Now let’s switch gears and explore programming with distributed memory. Unlike a shared memory system, in a distributed memory system each processor has its own memory space. To access data in another memory space, processors send messages, as in the following example. The same memory address exists in three different memory systems. Processor 2 requests data from processor 1 and processor 3. Processor 1 sends the value 0 and processor 3 sends the value 200. Once the data arrive as messages, processor 2 performs the addition using the received values and writes the updated value back to its memory.

Overview of OpenMP

Before we dive deeper into shared and distributed memory, let’s get an overview of OpenMP. OpenMP is an open standard for parallel programming on shared memory processing systems.

OpenMP is a collection of compiler directives, library routines, and environment variables to write parallel programs.

Key Tasks for Parallel Programming

Here are the key tasks for parallel programming:

- parallelization,

- specifying threads count,

- scheduling,

- data sharing,

- and synchronization.

What is Thread?

We’ll go over these concepts. First, let me provide a little bit of background on threads, which play a pivotal role in parallel computing. What is a thread? A thread is an entity that runs a sequence of instructions.

Another important fact to know is that a thread has its own registers and stack memory. So is a thread equivalent to a core? No, a thread is a software concept. One CPU core could run multiple threads or just a single thread. Please also note that the meaning of thread is different on CPUs and GPUs.

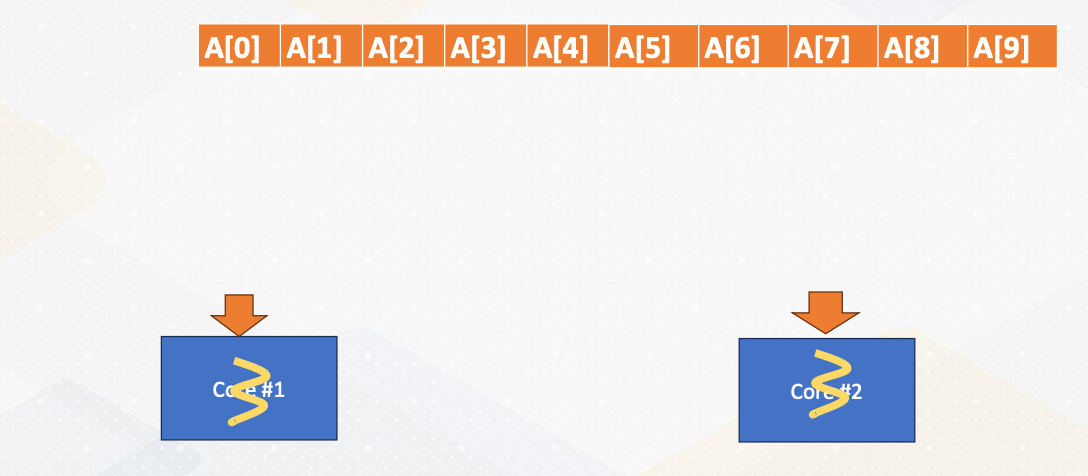

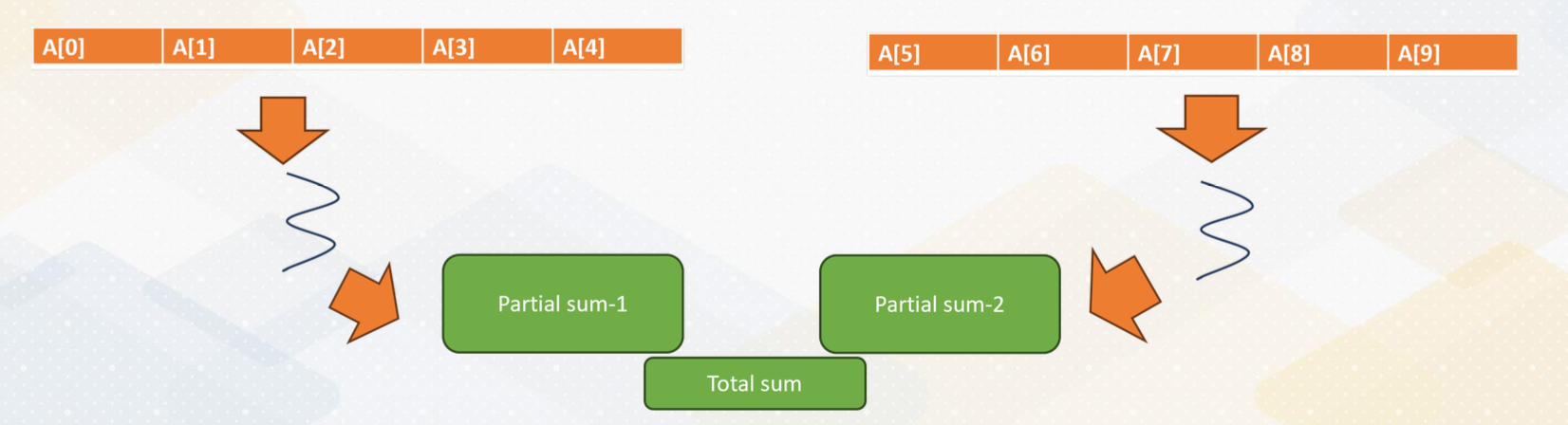

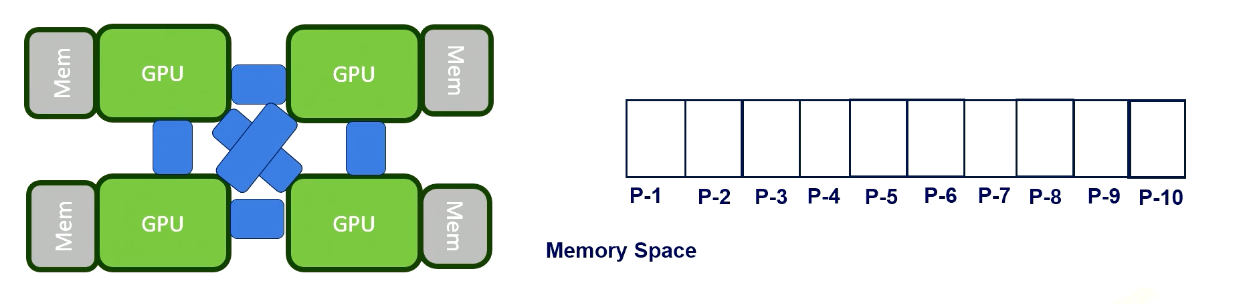

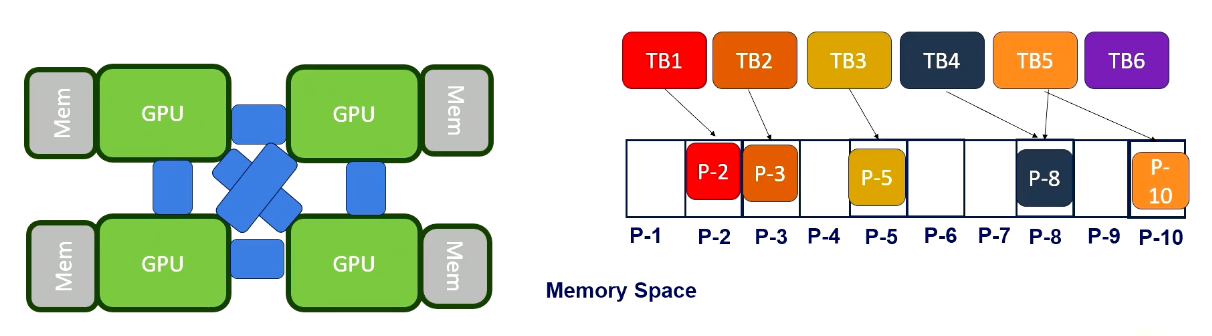

Review: Data decomposition

Let’s review data decomposition, which we saw in Module 1. Suppose we want to use the data decomposition method. Here we have a 10-element array, which is split across two cores.

Half of the data is sent to core 1, the remaining half is sent to core 2, and the two halves are processed in parallel. In practice, modern CPUs can execute multiple threads within one core. However, for simplicity, we assume that one core executes one thread in this illustration.

Example: Vector sum

```cpp
#include <iostream>

int main() {
    const int size = 10;  // Size of the array
    int data[size] = {1, 2, 3, 4, 5, 6, 7, 8, 9, 10};  // example values (assumed)
    int sum = 0;
    for (int i = 0; i < size; ++i) {
        sum += data[i];
    }
    std::cout << "Sum of array elements: " << sum << std::endl;
    return 0;
}
```

Here is a serial version of the vector sum code. We have a for loop which iterates over indices 0 through 9 (10 elements) and computes the sum of the elements.

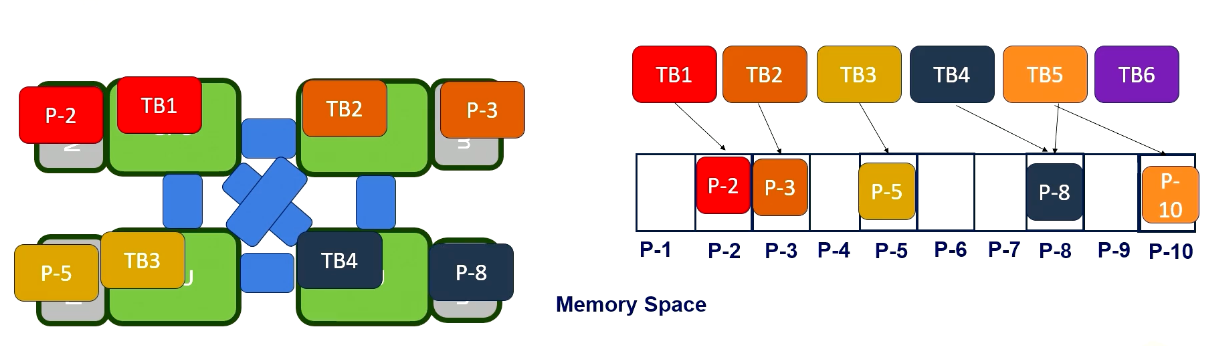

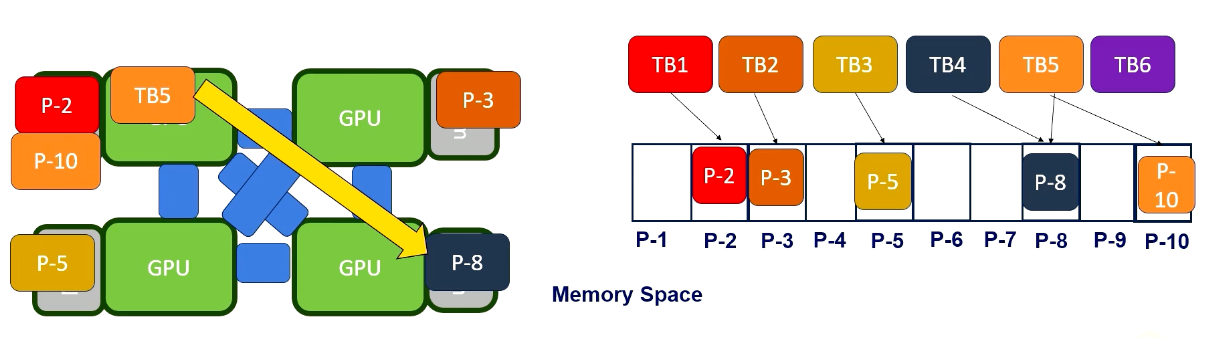

Manual Parallelization processes

This slide shows the actual parallelization steps. In the first step, we create two threads. In the second step, we split the array into two and give half of the array to each thread. In the third step, we merge the two partial sums. One of the challenges is how to merge the values computed by the two threads. This merge is one of the reduction operations.
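The three steps can be sketched with `std::thread`. This is a minimal illustration, not the code from the slide: the function name `two_thread_sum` is hypothetical, and the merge is done by the calling thread after both workers have finished, which sidesteps the shared-update problem entirely.

```cpp
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

long long two_thread_sum(const std::vector<int>& data) {
    const std::size_t half = data.size() / 2;
    long long partial[2] = {0, 0};  // one partial sum per thread

    // Steps 1 and 2: create two threads and hand each one half of the array.
    std::thread t0([&] {
        partial[0] = std::accumulate(data.begin(), data.begin() + half, 0LL);
    });
    std::thread t1([&] {
        partial[1] = std::accumulate(data.begin() + half, data.end(), 0LL);
    });
    t0.join();
    t1.join();

    // Step 3: merge (reduce) the two partial sums after both threads finish.
    return partial[0] + partial[1];
}
```

For the 10-element array 1 through 10, each thread sums five elements and the merged result is 55.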

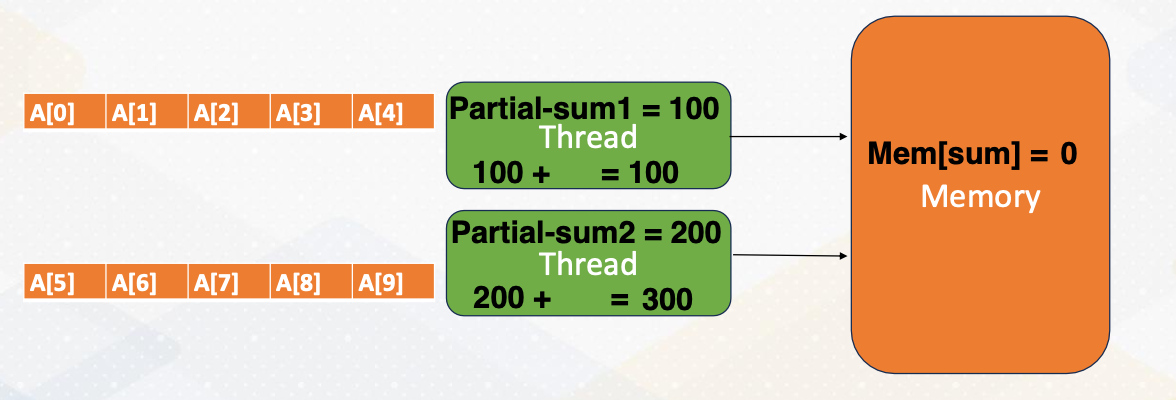

Reduction Operation in Shared Memory

Let’s look at the reduction operation in shared memory in detail. First, each thread computes its partial sum. Then one thread adds its partial sum to the total sum stored in shared memory, and the memory gets updated. The other thread reads the updated value from memory, adds its own partial sum to the total, and writes the new total back to memory. In this process, we need to make sure that only one thread updates the total sum in memory at a time, which can be handled by a mutex.

Mutex Operation

What is a mutex? A mutex (mutual exclusion) ensures that only one thread can access a critical section of code at a time.

- What if both threads try to update the total sum at the same time?

- In the previous example, the sum could end up as either 100 or 200 instead of 300.

- We need to prevent both threads from updating the total sum variable in memory at the same time, because the sum variable is shared data.

- Updating a shared variable is a critical section of code.

- A mutex ensures only one thread can access the critical section of code. First, a thread locks, that is, acquires the mutex, to enter the critical section.

- After completing the critical section, the thread unlocks, that is, releases the mutex, so other threads are allowed to enter the critical section.
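The lock and unlock steps can be sketched with `std::mutex`. The function name `merge_with_mutex` is made up for this illustration, and the two partial sums are passed in as arguments to keep the example short.

```cpp
#include <mutex>
#include <thread>

// Two threads add their partial sums into one shared total.
long long merge_with_mutex(long long partial_a, long long partial_b) {
    long long total = 0;     // shared data
    std::mutex total_mutex;  // protects `total`

    auto add_partial = [&](long long partial) {
        // Lock: acquire the mutex to enter the critical section.
        std::lock_guard<std::mutex> lock(total_mutex);
        total += partial;  // critical section: update the shared variable
    };  // Unlock: lock_guard releases the mutex when it goes out of scope.

    std::thread t0(add_partial, partial_a);
    std::thread t1(add_partial, partial_b);
    t0.join();
    t1.join();
    return total;
}
```

With partial sums of 100 and 200 the result is always 300, whichever thread enters the critical section first.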

Low Level Programming for Vector Sum

If we do this work using low-level programming such as pthreads, it requires manual handling of thread creation, joining, and mutex operations.

Vector Sum in OpenMP

```cpp
#include <iostream>
#include <omp.h>

int main() {
    const int size = 1000;  // Size of the array
    int data[size];
    // Initialize the array (all ones here, as a placeholder)
    for (int i = 0; i < size; ++i) data[i] = 1;
    int sum = 0;
    #pragma omp parallel for reduction(+:sum)
    for (int i = 0; i < size; ++i) {
        sum += data[i];
    }
    std::cout << "Sum of array elements: " << sum << std::endl;
    return 0;
}
```

Luckily, this step can be simplified by using OpenMP APIs. All we need to do is add `#pragma omp parallel for reduction(+:sum)`. `parallel for` invokes the loop parallelism pattern and generates multiple threads automatically.

`#pragma omp parallel for reduction(+:sum)`

What is reduction + sum?

- It is a compiler directive, which is the primary OpenMP construct.

- It works for C/C++ and for Fortran, which is used widely in HPC applications.

- The compiler replaces directives with calls to the runtime library.

- Library functions handle thread creation and joining.

- The syntax is `#pragma omp directive [ clause [ clause ] ... ]`.

- Directives are the main OpenMP construct. For example, `#pragma omp parallel for`.

- A clause provides additional information, such as `reduction(+:sum)`.

- Reduction is commonly used, so it has a special reduction operation.

Module 2 Lesson 3: OpenMP vs. MPI (Part 2)

We will describe key components of both OpenMP and MPI programming.

Course Learning Objectives:

- Extend your understanding of the concept of scheduling in OpenMP

- Describe key components of OpenMP and MPI programming

How many Threads?

The following for loop is executed in parallel by some number of threads. The question is, can you control this number?

```cpp
#pragma omp parallel for reduction(+:sum)
for (int i = 0; i < size; ++i) {
    sum += data[i];
}
```

The answer is yes. You can specify the number of threads using the environment variable `OMP_NUM_THREADS`. By default, this number typically matches the hardware parallelism, such as the number of cores. Alternatively, you can use the `omp_set_num_threads()` function to define the number of threads directly within your code.
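A small sketch of setting the thread count from code follows. The wrapper name `sum_with_threads` is hypothetical, and the `_OPENMP` guard is an addition for these notes so that the same file still compiles, and simply runs serially, when OpenMP is not enabled.

```cpp
#include <vector>
#ifdef _OPENMP
#include <omp.h>
#endif

long long sum_with_threads(const std::vector<int>& data, int num_threads) {
#ifdef _OPENMP
    // Takes precedence over OMP_NUM_THREADS for later parallel regions.
    omp_set_num_threads(num_threads);
#else
    (void)num_threads;  // built without OpenMP: the loop simply runs serially
#endif
    long long sum = 0;
    const long long n = static_cast<long long>(data.size());
    #pragma omp parallel for reduction(+:sum)
    for (long long i = 0; i < n; ++i) {
        sum += data[i];
    }
    return sum;
}
```

The environment-variable route needs no code change at all: with a typical shell, running `OMP_NUM_THREADS=4 ./a.out` requests four threads for a program that never calls `omp_set_num_threads()`.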

Scheduling

Now, let’s tackle another important concept in parallel programming: scheduling. Imagine a scenario where you have a vector of one million elements and you want to distribute this work among five threads, but you only have two cores. How do you ensure each thread makes progress evenly?

We probably want to give 200k elements to each thread. This works well if each thread makes the same progress. But what if the threads progress at different rates? Why would this happen? Because we have allocated a total of five threads on two cores. Here is the interesting part: one core has three threads while the other core has only two threads.

As a result, each of the three threads sharing one core gets significantly fewer resources than it would if each core had an equal number of threads.

Static Scheduling/Dynamic Scheduling

To address the challenges of uneven progress among threads, we have various scheduling options.

- Option 1 (static scheduling): still give 200K elements to each thread

- In the first option, known as static scheduling, we continue to allocate a consistent chunk of work to each thread. We give 200k elements to each thread. This approach assumes that each thread will make roughly the same progress.

- Option 2 (dynamic scheduling): give 1 element to each thread and ask it to come back for more work when the work is done

- This option takes a different approach. We give just one element to each thread initially and let each thread come back for more work when it finishes processing its assigned element. This dynamic nature allows threads to request additional work as needed, ensuring efficient resource utilization. This is called dynamic scheduling.

- Option 3 (dynamic scheduling): give 1000 elements to each of them and ask it to come back for more work when the work is done

- Asking for more work after every element becomes too expensive. So in the third option, we initially allocate larger chunks of work, say 1,000 elements, to each thread. As with Option 2, threads can return for more work once they complete their assigned portion. This approach aims to strike a balance between granularity and efficiency.

- Option 4 (guided scheduling - chunk size varies over time): initially give 1000, but later start to give only 100 etc.

- Lastly, we have guided scheduling, where we start with larger chunk sizes, such as 1,000 elements, and progressively decrease the chunk size over time. Large early chunks keep the scheduling overhead low, while small late chunks let threads with varying progress rates finish at about the same time. Threads that finish faster simply come back sooner and pick up more chunks.

Giving one element or 1,000 elements refers to different chunk sizes. And in guided scheduling, which is option 4, chunk size varies over time. Each of these scheduling options offer distinct advantages and trade-offs. The choice depends on the specific workload and the dynamic nature of the threads progress. By selecting the most suitable scheduling option, we can optimize resource utilization and overall program efficiency. Dynamic scheduling can adopt run time effect (maybe some threads got scheduled to an old machine etc.)

Data Sharing

Now let’s shift our focus to data sharing, an essential consideration in parallel programming. Data sharing involves distinguishing between private and shared data. While partial sums are private data. The overall sum is shared among thread. And it’s crucial for programmers to specify data sharing policies.

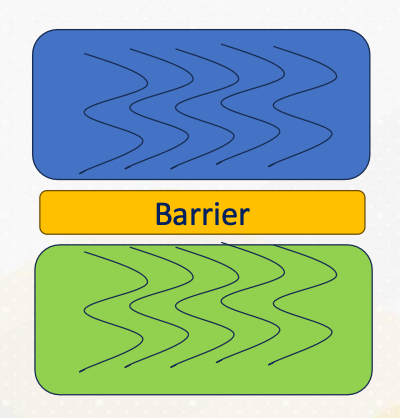

Thread Synchronization

Thread synchronization is another critical aspect of parallel programming. It ensures correct execution. Barrier, critical section and atomics are examples of thread synchronization.

Barrier

A common synchronization construct is the barrier which is denoted by #pragma omp barrier. It ensures that all participating threads reach a specific synchronization point before proceeding. This is crucial for tasks like that have dependencies between tasks such as sorting and update.

- Synchronization point that all participating threads reach a point

- Green work won’t be started until all blue work is over.

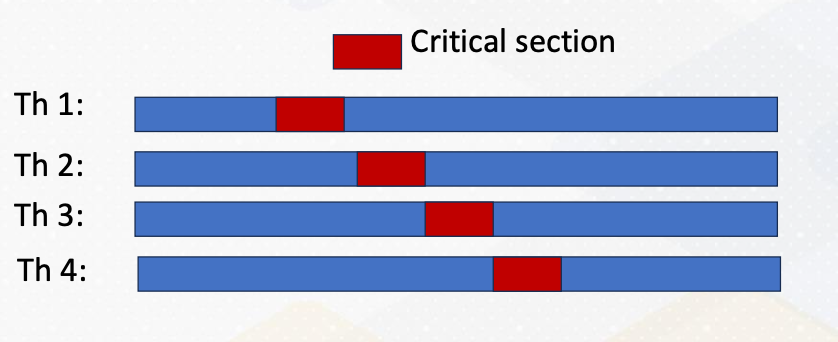

Critical Section

1

2

#pragma omp critical [(name)]

// Critical section code

Critical section should only be updated by one thread at a time. They play a vital role in preventing data race conditions. For example, incrementing a counter. This can be done by denoting #pragma omp critical [(name)].

1

2

3

4

5

#pragma omp parallel num_threads(4) {

#pragma omp critical {

//critical section code

}

}

As the following code example shows, critical section code is guarded by pragma omp critical directives. Even though there are four threads, only one thread would enter the critical section as illustrated in the above diagram. The red color represent critical section and only one thread enters the critical section. We have already studied mutex to perform this type of critical sections.

Atomic

Let’s explore atomic operations in OpenMP, denoted by #pragma omp atomic.

These operations guarantee that specific tasks are performed atomically. Meaning that they either complete entirely or not at all. For instance, consider incrementing a counter. Here is a code example.

1

2

3

4

5

#pragma omp parallel for

for (int i = 0; i < num_iterations; ++i) {

#pragma omp atomic counter++;

// Increment counter atomically

}

Counter value is incremented with atomic. Incrementing a counter requires loading the counter value, adding and storing, which are three different operations. Atomic operations ensure that all these three operations will happen altogether or none of them. Thereby, counter value is incremented by only one thread at once. These operations are vital for safeguarding data integrity and avoiding data race conditions. They can be implemented using Mutex or hardware support, depending on the specific scenario.

Parallel Sections

Sometimes work needs to be done in parallel, but not within a loop. How can we express them?

1

2

3

4

5

6

7

8

9

10

11

#pragma omp parallel sections

{

#pragma omp section

{

// work-1

}

#pragma omp section

{

// work-2

}

}

To address this, openMP provides the section directives. The code shows an example. Work 1 and work 2 will be executed in parallel. This directive can be combined with other constructions ordered or single. Work specified within sections can be executed in parallel. They are very useful with various programming patterns.

Example of Parallel Sections: Ordered

1

2

3

4

5

6

7

8

9

10

11

#pragma omp parallel

{

#pragma omp for ordered

for (int i = 0; i < 5; i++) {

#pragma omp ordered

{

// This block of code will be executed in order

printf("Hello thread %d is doing iteration %d\n", omp_get_thread_num(), i);

}

}

}

Here is an example demonstrating the orderered construct within parallel sections. It ensures that threads are executed as an ascending order in the left side without ordered construct, threads are executed out of order. In the right side with ordered construct, threads are printing messages in order.

Example of Parallel Sections: Single

1

2

3

4

5

6

7

8

9

#pragma omp parallel

{

#pragma omp single

{

// This block of code will be executed by only one thread

printf("This is a single thread task.\n");

}

// Other parallel work...

}

Similarly, the single construct within parallel sections ensures that only one thread executes the specified task (no exception about which thread will do). It’s useful for scenarios where no specific thread is expected to perform the work by only one thread. This can be used for different tasks such as initialization. So in this example, we see only one single printf message.

Module 2 Lesson 4: Programming with MPI

Course Learning Objectives:

- Describe fundamental concepts of distributed memory parallel programming

- Gain understanding of MPI (Message Passing Interface) programming

Why Study OpenMP and MPI?

You might be wondering, why do we need to study OpenMP and MPI. Well, the answer lies in the complexity of GPU programming. CUDA programming combines elements of shared memory and distributed memory programming. Some memory regions are shared among all cores, while others remain invisible and need explicit communications.

MPI Programming

Now let’s dive into MPI programming. MPI, or Message Passing Interface, is a powerful communication model for parallel computing. It allows processes to exchange data seamlessly.

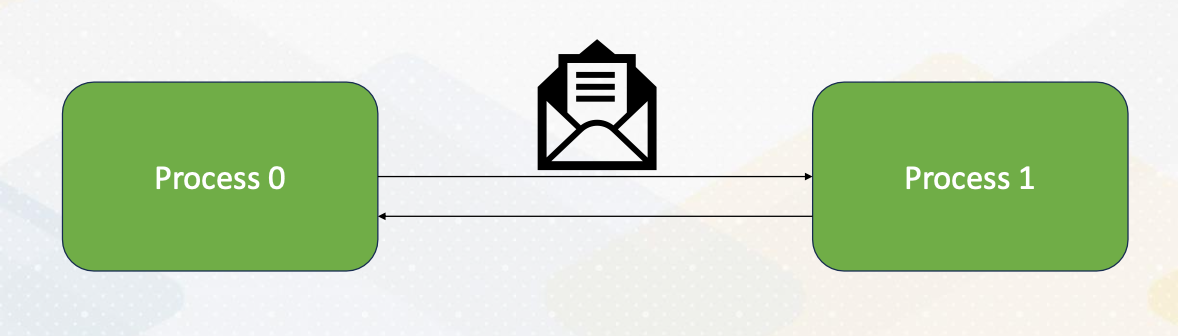

Here the diagram illustrates that Process 0 sends an integer value to Process 1 using MPI_send() And Process 1 receives the value sent by Process 0 using MPI_recv().

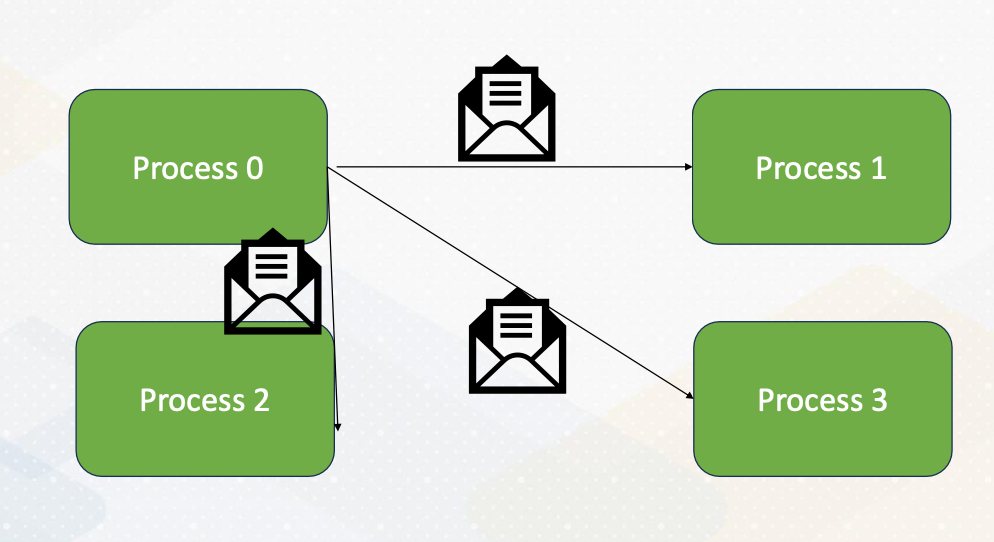

Broadcasting

MPI provides various communication functions, and one of them is MPI_Bcast() or broadcast. This function allows you to broadcast data from one process to all other processes in a collective communication manner. It’s a valuable tool for sharing information globally.

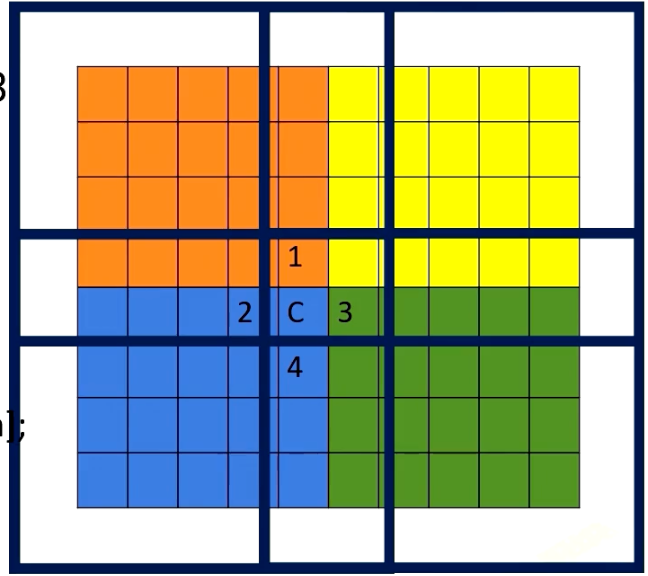

Stencil Operations

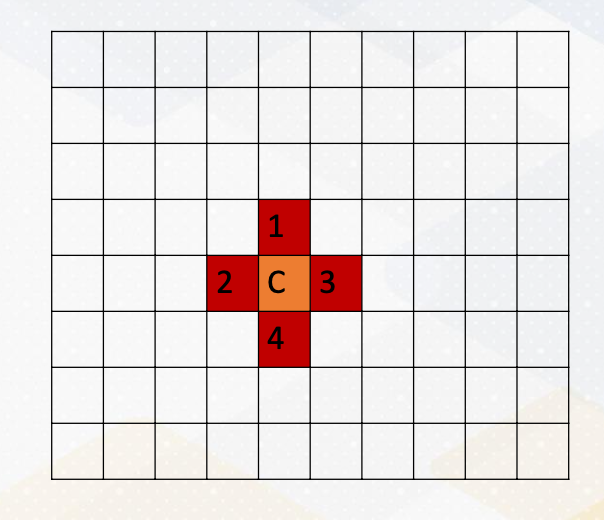

To give a more explicit example of message passing, here we take a look at stencil operations, which is common in HPC, High-Performance Computing.

This involves computations with the neighborhood data. For example, in this diagram, computing c is the average of 4 neighborhood, and we want to apply this operation to all elements in a dataset.

Stencil Operations (cont’d)

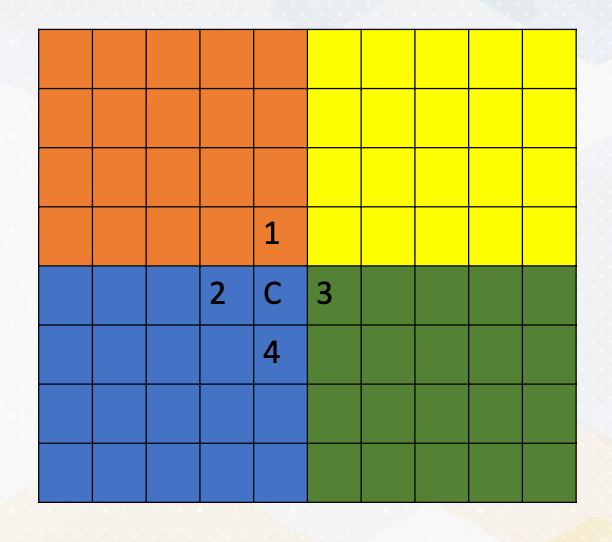

Let’s assume that:

- we want to compute the stencil operation with the four processes in the distributed memory systems.

- It’s important to note that in MPI, each process can access only its own memory regions.

- But what happens when you need to compute C, which requires access to data in other memory regions? This is where the challenge arises. (For example C where it requires numbers from orange and green area)

Communicating Boundary Information

To overcome the challenge of computing C, we need to communicate boundary information, which is the gray color in this diagram. This means sending data from one process to another using messages. It’s a crucial aspect of MPI programming, especially in scenarios where data dependencies span across processes.

Module 3: GPU Programming Introduction

Objectives

- Describe GPU programming basic

- Be able to program using CUDA

Readings

Module 3 Lesson 1: Introduction of CUDA Programming

Course Learning Objectives:

- Write kernel code for Vector Addition

- Explain basic CUDA programming terminologies

- Explain Vector addition CUDA code example

Cuda Code Example: Vector Addition

Kernel code

1

2

3

4

5

6

7

__global void vectorAdd(const float *A, const float *B, float *C, int numElements){

int i = blockDim.x * blockIdx.x + threadIdx.x;

if (i < numElements){

C[i] = A[i] + B[i] + 0.0f

}

}

Host Code

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

int main(void) {

// Allocate the device input vector A

float *d_A = NULL;

err = cudaMalloc((void **)&d_A, size);

// copy the host input vectors A and B in host memory to the device input vectors

// in device memory

printf("Copy input data from host memory to the CUDA device \n");

err = cudaMemcpy(d_A, h_A, size, cudaMemcpyHostToDevice);

vectorAdd<<<blocksPerGrid, threadsPerBlock>>>(d_A,d_B,d_C, numElements);

// copy the device result vector in device memory to the host result vector in host memory

printf("Copy output data from the CUDA device to the host memroy\n");

err = cudaMemcpy(h_C, d_C, size, cudaMemcpyDeviceToHost);

}

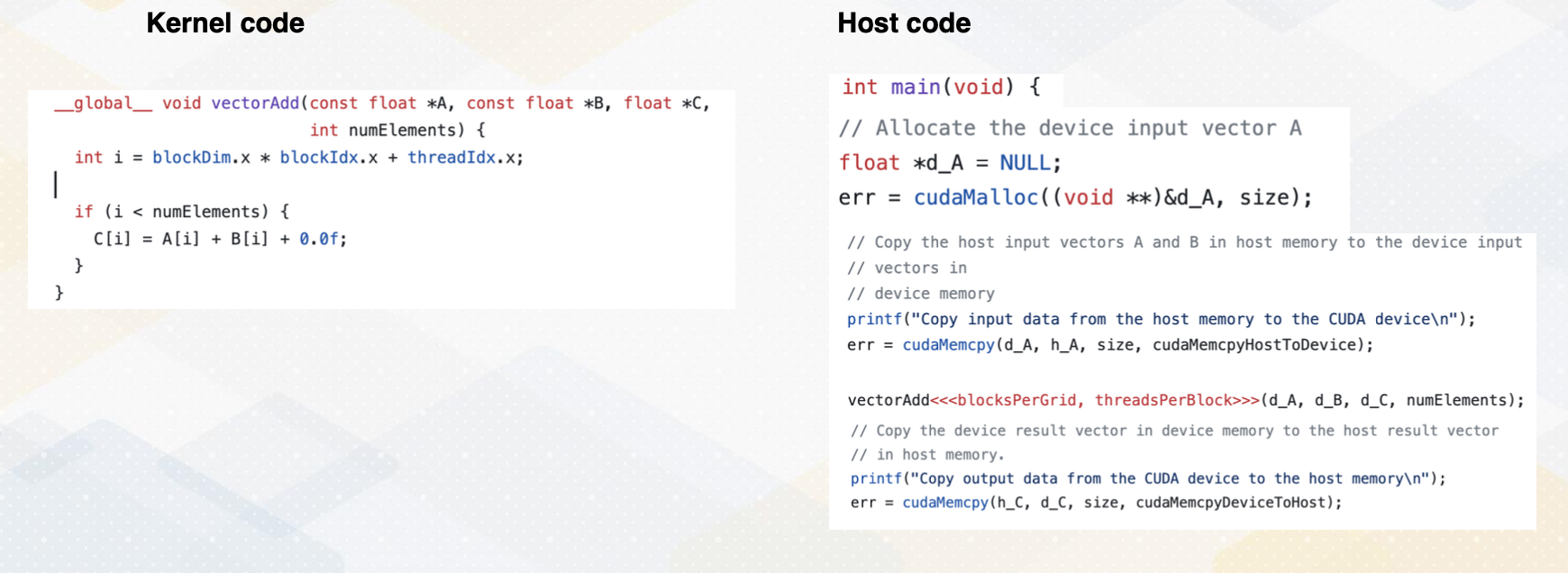

Now let’s take a look at the vector addition code example. This code shows both host code and kernel code. The host code, executed on the CPU sets up an environment such as reading data from file and setting memory. It also invokes the kernel to perform vector addition by using three angle brackets. When it calls the kernel, it also passes information such as the number of blocks per grid and threads per block as an argument using angle brackets. Kernel arguments are also passed d_A, d_B, d_C, and numElements. These are all vectorAdd kernel arguments. This kernel code will be executed on the GPU, allowing for parallel processing.

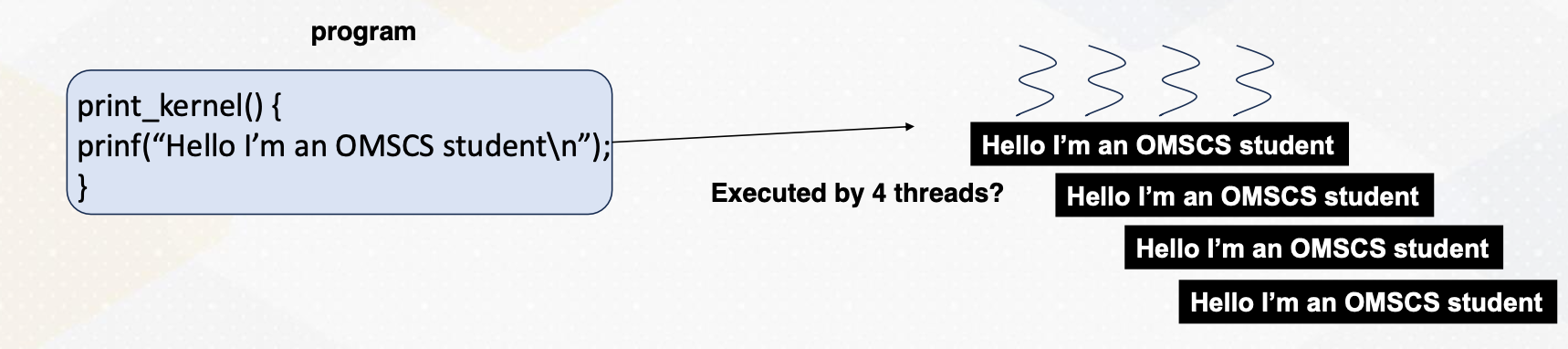

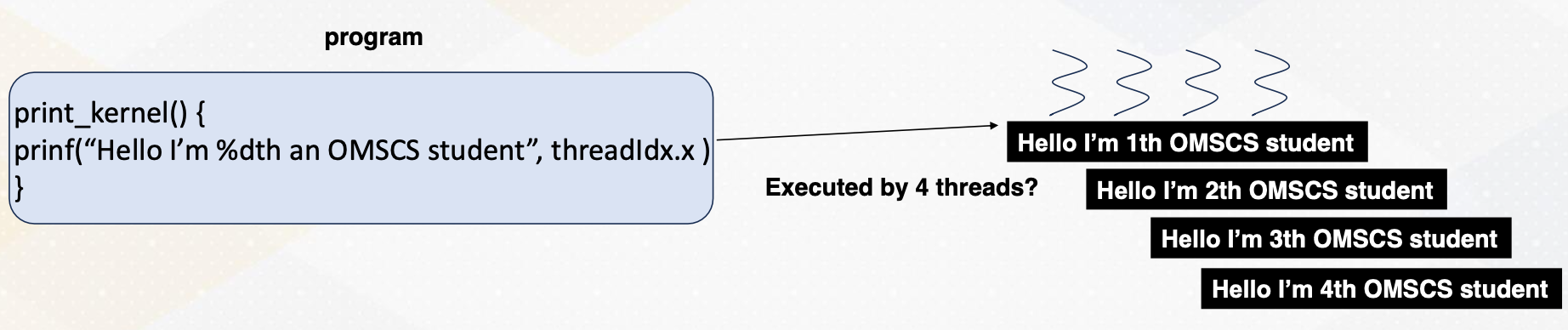

SPMD

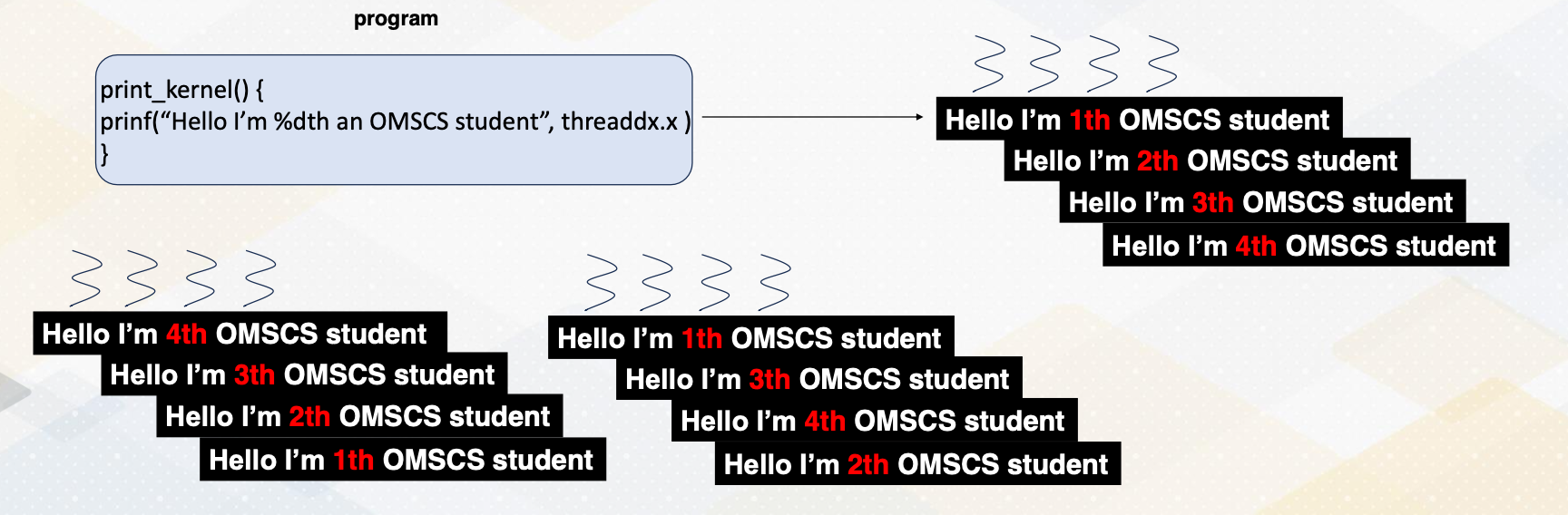

Remember that GPUs execute code concurrently following single program multiple data SPMD paradigm.

In this program, four threads were executed in parallel, each printing the message “hello, I’m an OMSCS student” without any specific ordering. However, we need to make each thread execute different data.

threadIdx.x

To offload different tasks per thread, we must assign unique identifiers to each thread. These identifiers are represented by built-in variables such as threadIdx.x, which represents the x-axis coordinate. This way each thread operates on different elements, enabling parallel processing even with a single program.

Vector Add with Thread Ids

1

2

3

4

vectorAdd (/* arguments should come here */) {

int idx= threadIdx.x; //* simplified version */

c[idx] = a[idx] + b[idx]

}

Now, returning to vector addition, we notice that it utilizes threadIdx.x. Each thread accesses a[idx] and b[idx] and idx is determined by thread ID. Therefore, this approach ensures that each thread operates on different element with the vector enhancing parallelism.

Execution Hierarchy

CUDA introduces complexity beyond the warps, a group of threads involving hierarchical execution.

Threads are grouped into blocks, and these blocks can be executed concurrently. This might be very confusing initially, since we already discussed that a group of threads are executed together as a warp. Warp is a micro architecture concept. In earlier CUDA programming, programmers do not have to know about the concept of warp because it was a pure hardware’s decision which we also call microarchitecture. Just note that in later CUDA, warp concept is also exposed to the programmer.

However, block is a critical component of CUDA,

- a group of threads form of block.

- Blocks and threads have different memory access scopes, which we will discuss shortly.

- CUDA block: a group of threads that are executed concurrently.

- Because of the different memory scope, data is divided among blocks.

- To make it simple, let’s assume that each block is executed on one streaming multiprocessor (SM).

- There is no guaranteed order of execution among CUDA blocks.

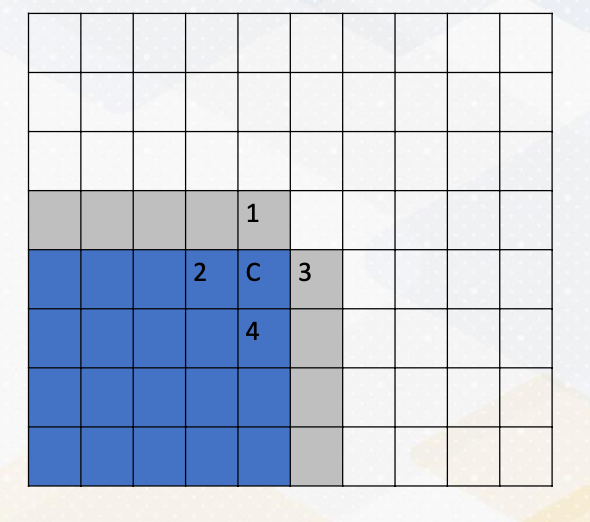

Example of Data indexing

-

threadIdx.x,threadIdx.y,threadIdx.z:threadindex -

blockIdx.x,blockIdx.y: block index

1

2

3

4

vectorAdd (/* arguments should come here */) {

int idx= blockIdx.x * blockDim.x + threadIdx.x;

c[idx] = a[idx] + b[idx]

}

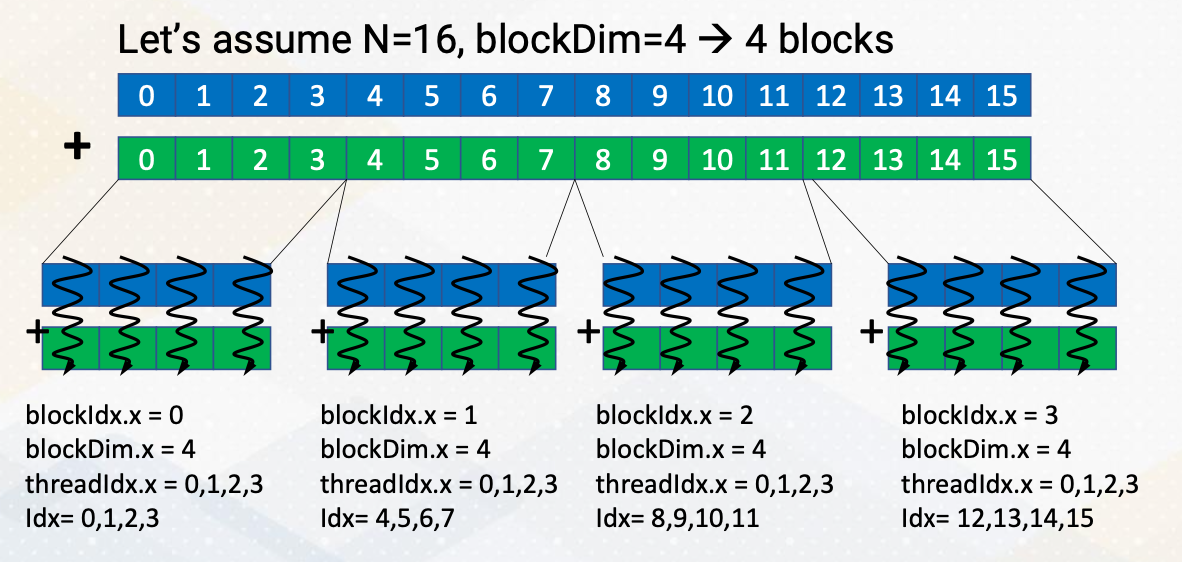

Let’s discuss an example where we divide 16 vector elements into four blocks. Each block contains four elements. ThreadId.x y z indicate thread index, similar to x, y, z coordinates. And blockId.x, y indicate block index, again similar to x and y coordinate. These indexes can be two dimensional or three dimensional and it can easily match with the physical size of images or 3D objects. To access all 16 elements, we combine block and thread IDs using block ID.x and threadId.x.

In this example, we divide 16 elements with four blocks. and each block has four threads. Each thread has unique ids, from 0 to 3 and each block has a unique id from 0 to 3 as well. Using the combination of these CUDA block ids and thread ids, we should be able to generate element index, idx. Idx equals blockId.x times blockDim.x plus threadId.x. This is a typical pattern of indexing elements using block idx and thread idx.

Shared memory

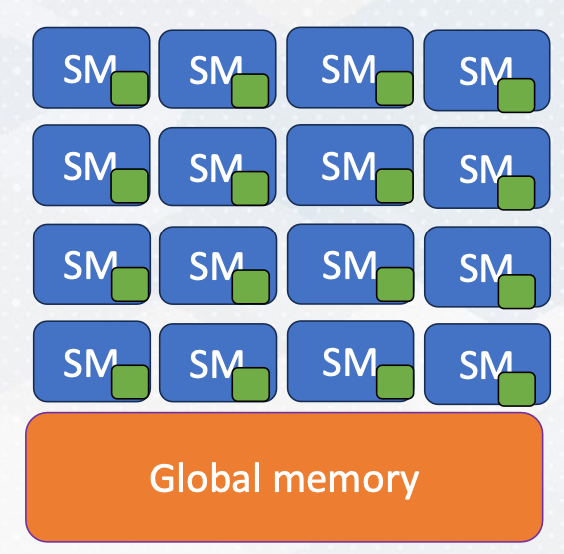

The green dot represents on chip storage

The green dot represents on chip storage

Before we explore why this hierarchical execution is crucial, let’s discuss shared memory. Shared memory serves as a scratch pad memory managed by software. It is indicated as _shared_, it provides faster access compared to global memory which resides outside the chip. Shared memory is accessible only within a CUDA block. Here is an example of CUDA that shows shared memory.

1

2

3

4

5

6

7

// CUDA kernel to perform vector addition using shared memory

__global__ void vectorAdd(int* a, int* b, int* c) {

__shared__ int sharedMemory[N]; // Each thread loads an element from global memory into shared memory

Int idx = threadIdx.x;

sharedMemory[idx] = a[idx] + b[idx]; // Wait for all threads in the block to finish loading data into shared memory

__syncthreads(); // Perform the addition using data from shared memory c[idx] = sharedMemory[idx];

}

Execution Ordering: Threads

This slide also illustrates the threads are executed in any order. The print message can be 4, 3, 2, 1 or 1, 3, 4, 2, or 1, 2, 3, 4 threads.

Execution Ordering: Blocks

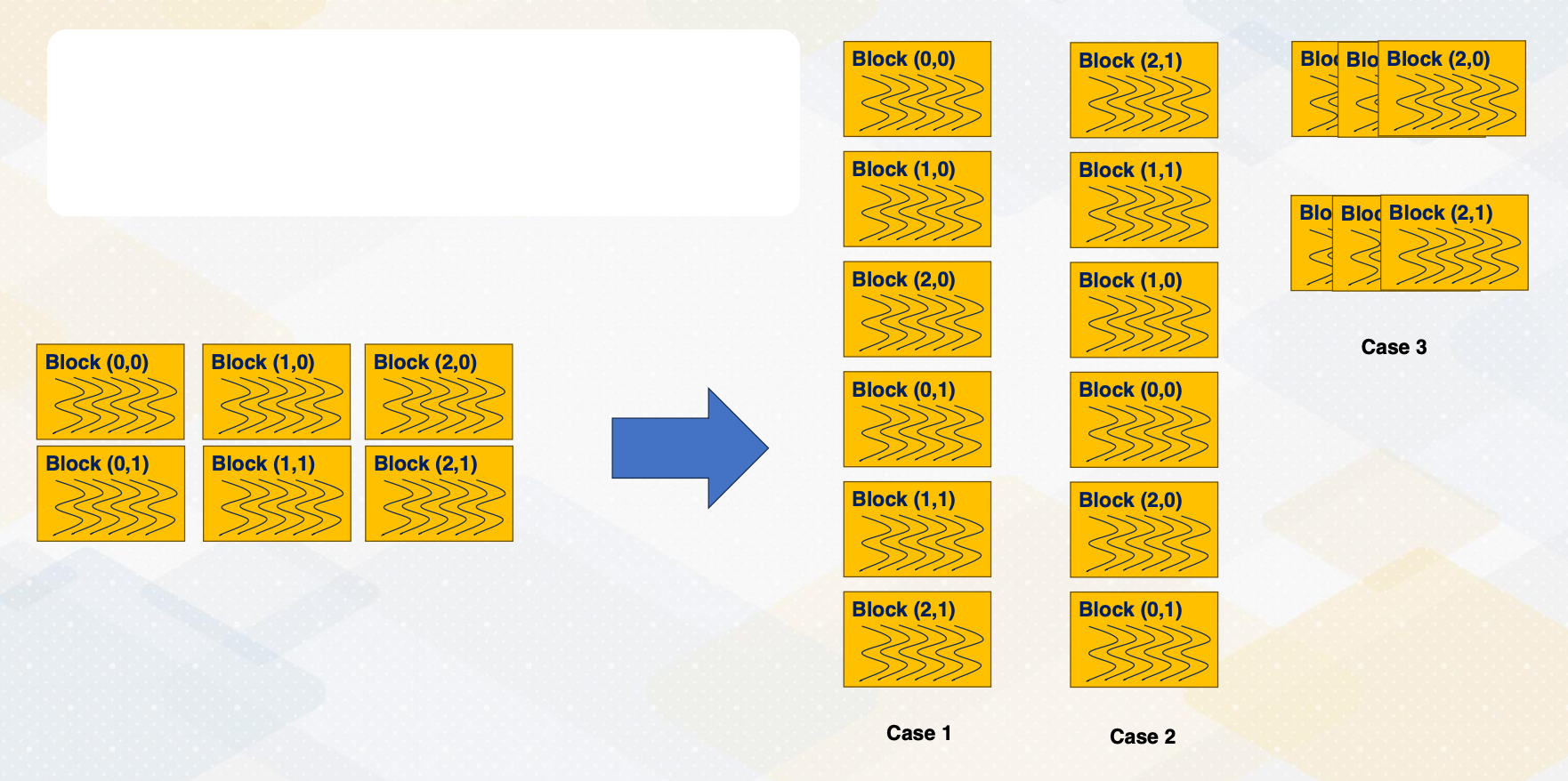

CUDA block execution also does not follow a specific order.

- Blocks can execute in any sequence, such as ascending order in case 1,

- descending order in case 2,

- and case 3, three blocks are executed together.

Thread Synchronizations

1

2

3

4

5

__global__ void Kernel(){

// work-1

__synchthreads(); // synchronization

// work-2

}

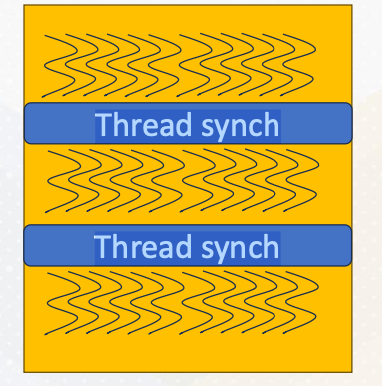

Since threads are executed in any order and can make progress with different speeds, to ensure synchronization among threads, we often use __sync_threads(), which synchronizes all threads until a specified point in the code. Interblock synchronization is achieved through different kernel launches.

Typical Usage with Thread Synchronizations

The typical computation pattern involves loading data into shared memory, performing computations, and storing results. So load from the global memory to the shared memory, compute with the data in the shared memory, and then store the results into the global memory back. This programming model is called BSP, Bulk Synchronous Parallel.

1

2

3

4

5

6

7

__global__ void Kernel(){

// Load to shared memory

__synchthreads(); // synchronization

// compute

__synchthreads(); // synchronization

// store

}

All threads work independently and then it meets at the thread synchronization point. This slide also shows these three step computation example and also illustrates threads and thread synchronization. Due to potential data dependencies and threads can be executed in any order, thread synchronization is vital.

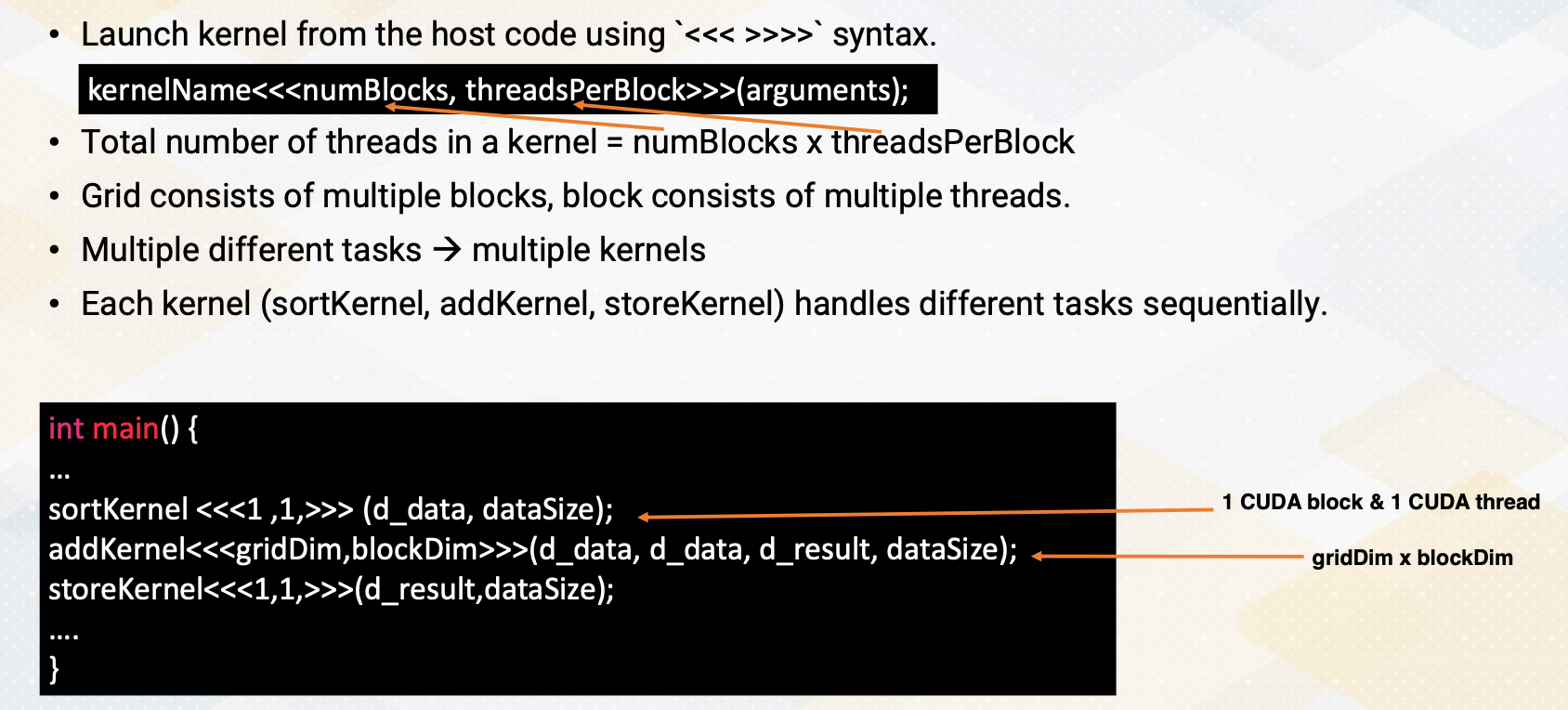

Kernel Lanuch

Now let’s discuss kernel launch again. Kernel launch is initiated from the host code with three angle brackets and specifies the number of blocks and threads per block. The total number of threads in the grid is the product of these two values. Grid consists of multiple blocks and block is consisted of multiple threads.

1

2

3

4

5

6

7

int main() {

...

sortKernel <<<1 ,1,>>> (d_data, dataSize); // 1 Cuda Block & 1 Cuda Thread

addKernel<<<gridDim,blockDim>>>(d_data, d_data, d_result, dataSize); // gridDim x blockDim

storeKernel<<<1,1,>>>(d_result,dataSize);

...

}

Here in this example, it shows three different kernels, sort, add, and store to handle three different tasks. One more thing to note is that sort is done using only one thread. And then the main add computation is done with gridDim times blockDim, number of threads, and then store is also done with only one thread. Here is an example to show that multiple kernels can be used for different tasks with its grid and block dimensions.

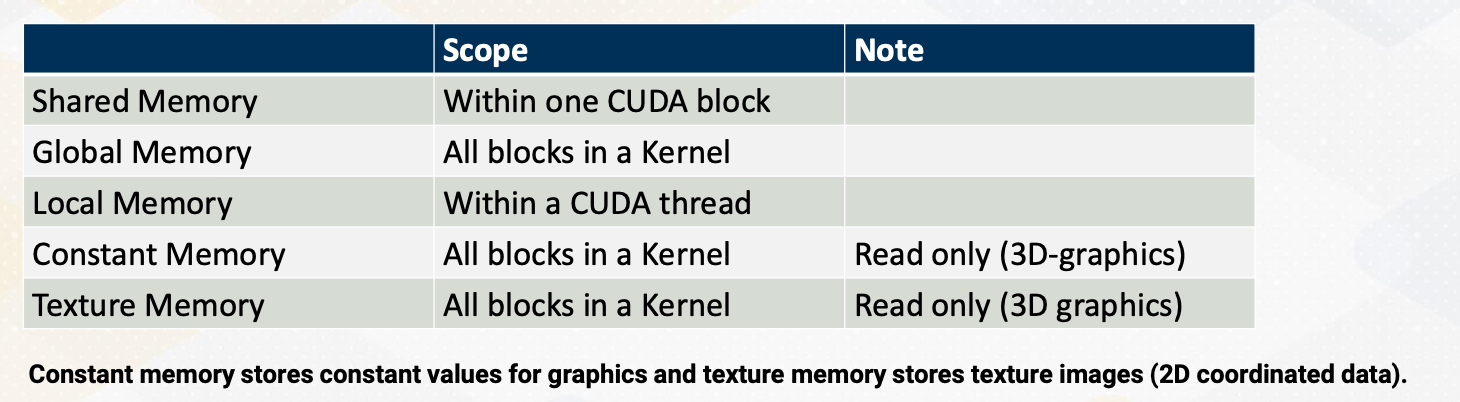

Memory Space and Block/Thread

Here is a summary of memory space and block/thread. Shared memory is available only within one CUDA block, and global memory is accessible by all blocks in a kernel. Local memory is only within a CUDA thread. Constant and texture are from 3D graphics. And constant memory is very small and stores very small amount of read only data such as the value of fhi. Texture memory is also read only but it also stores texture values. So it’s structured for two dimensional accesses. For CUDA programmers, constant and texture memories are not widely used, so we will not discuss too much.

Information sharing is limited across CUDA execution hierarchy. This is probably the crucial reason to have different execution hierarchy. Data in the shared memory is visible only within one CUDA, which means the data in the shared memory can stay only in one SM (cpu core). Which also means that data in the shared memory of one CUDA block needs explicit communication. And later we will discuss that CUDA also support thread block cluster to allow data sharing in the shared memory.

Module 3 Lesson 2: Occupancy

Course Learning Objectives:

- Explain the concept of occupancy

- Determine how many CUDA blocks can run on one Streaming Multiprocessor

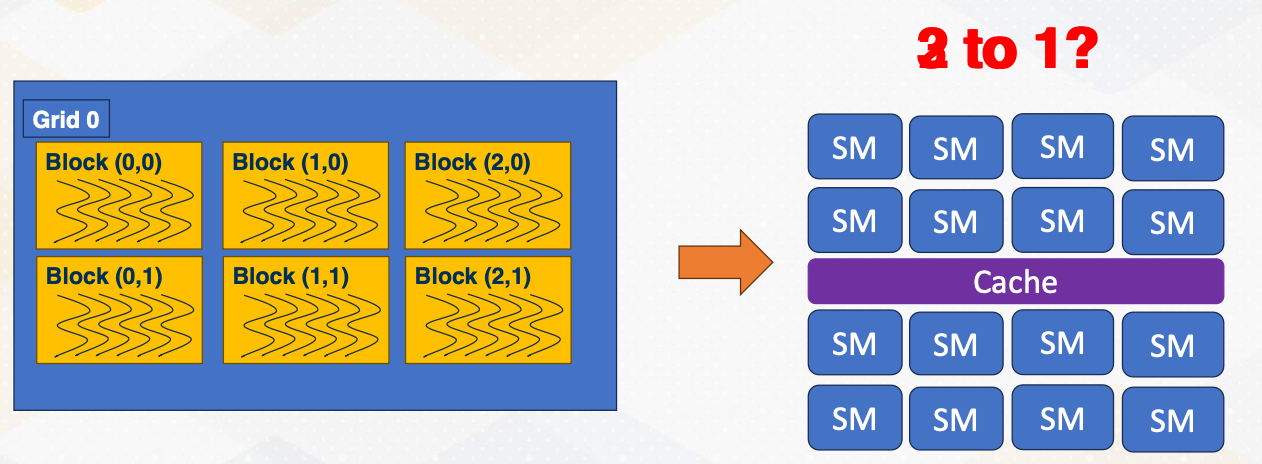

How Many CUDA Blocks on One Stream Multiprocessor?

So far, we have assumed that each SM handles a single CUDA block, but the reality is much more complex. Multiple blocks can coexist on a single SM, but how many? Two blocks per SM or three blocks per SM? What decides this choice?

Occupancy

How many CUDA blocks can be executed on one SM can be decided by the following factors.

- Number of registers,

- shared memory, and

- number of threads.

Exact hardware configurations are varied by GPU microarchitecture and different by each generation.

Let’s take a closer look at one example. We will explore the example with 256 threads and 64 kilobyte of register files and 32 kilobytes of shared memory.

- In the software side, each CUDA block has 32 threads and two kilobytes of shared memory, and each thread has 64 registers.

- If the occupancy is constrained by running number of threads, the total number of CUDA blocks per SM would be 256/32, which means eight CUDA blocks.

- If the occupancy is constrained by the registers, it will be 641,024/6432, which means 32 CUDA blocks.

- If the occupancy is constrained by the shared memory size, it will be 32 kilobyte divided by 2 kilobyte equals 16 CUDA blocks.

- So the final answer is the minimum of all constraints, so it will be 8 CUDA blocks per SM.

Number of Threads Per CUDA Block

- Host sets the number of threads per CUDA block, such as threadsPerBlock:

1

kernelName<<<numBlocks, threadsPerBlock>>>(arguments);

- Set up at the kernel launch time

- When it launches the kernel, the number of registers per CUDA block can be varied. Why?

- Because the compiler sets how many architecture registers are needed for each CUDA block. This will be also covered later part of this course.

- In case of CPU, the number of ISA decide the number of registers per thread such as 32. But in CUDA, this number varies.

- Shared memory size is also determined from the code.

- For example, the code says

__shared__ int sharedMemory[1000];. So in this case four byte times 1,000 equals 4,000 bytes. (Remember int is 4 bytes) So the occupancy is determined by these factors.

Why Do We Care about Occupancy?

Then why does the occupancy matter?

- This is because higher occupancy means more parallelism.

- Utilization - Utilizing more hardware resource generally leads to better performance.

- Exceptional cases will be studied in later lectures.

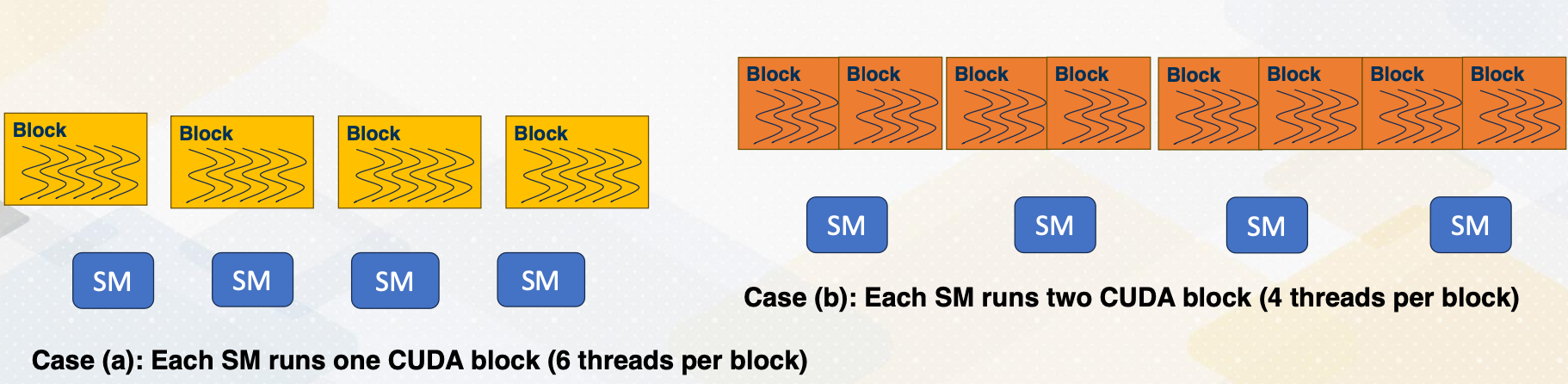

In case A, only one CUDA block runs on each SM, and each CUDA block has six threads. In case B, each SM can execute two blocks, but each block has only four thread. So total eight threads per block is running per each SM, which is actually more than case A.

- Case A - 4 * 1 * 6 = 24

- Case B - 4 * 2 * 4 = 32

Module 3 Lesson 3: Host Code

Course Learning Objectives:

- Write the host code for vector addition operation

- Explain the management of global memory

Managing Global Memory

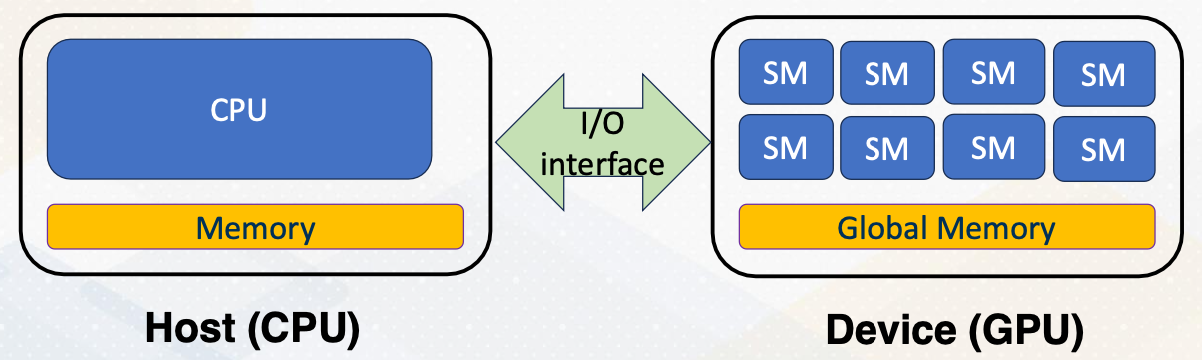

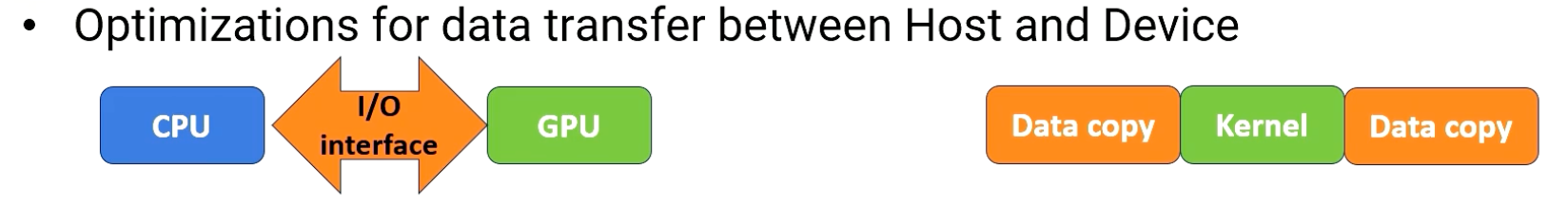

Recap, we have discussed that shared memory is visible within only CUDA block. And global memory is visible among all CUDA blocks. But this global memory is located at the device memory, which is not visible on CPU side because they are connected with I/O interface. Hence, there should be explicit APIs to manage device memory from the host side.

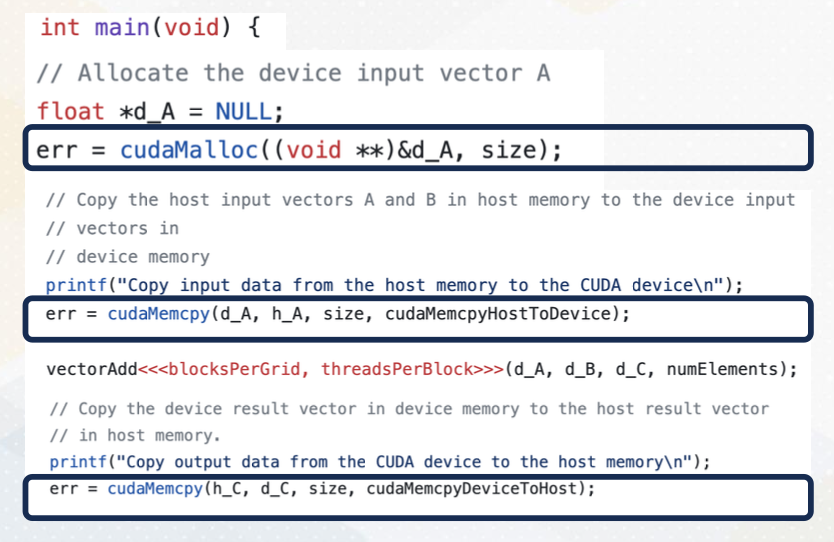

Managing Global Memory (cont’d)

In this vector code example, we see two APIs that manage global memory. cudaMalloc and cudaMemcpy. cudaMalloc allocates memory in the GPU. In this example, cudaMalloc allocates d_A with the size. cudaMemcpy transfers data between CPU and GPU, either using cudaMemcpytoHostto Device or cudamemcpyDevicetoHost. Later lecture we will discuss using unified memory which eliminates the need for explicit data copies.

Review Vector Addition

Now this is a full vector addition code. In the left side we have a kernel code which is executed by individual threads on GPUs. And on the right side it shows a host code which is executed on CPUs.

Module 3 Lesson 4: Stencil Operation with CUDA

Course Learning Objectives:

- Be able to write a stencil code with CUDA shared memory and synchronization

Recap: Stencil Operations

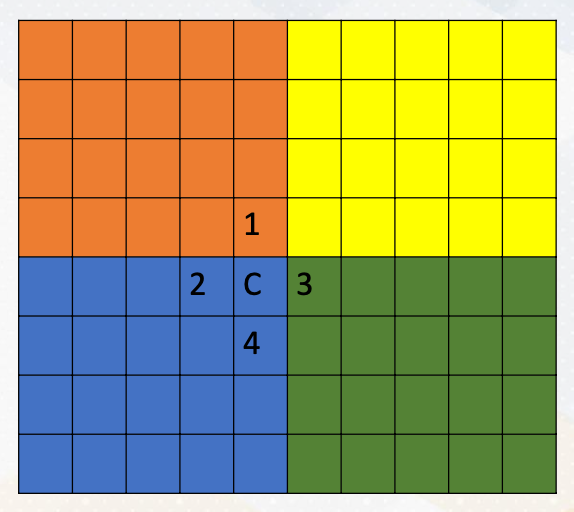

As we discussed in earlier videos, stencil operations are common operations in high performance computing. For example, here, C value is computed by simply averaging 4 neighborhood elements East, West, North, and South.

Each CUDA block performs different elements. Different color zones represent the area that is computed by each CUDA block. So this follows data decomposition programming pattern. Each thread handles one element of the stencil operation. And the operation will be fully parallel.

You also notice that one element will be used at least four times. So these are good candidates for caching, and since programmers know the exact pattern of reuse it is good to utilize the shared memory to store in an on-chip storage.

Stencil Operations: Operations Per Thread

Let’s use the shared memory for stencil operation. As we discussed, typical way of using shared memory is three stages.

- In the first stage, the processor loads data from global memory to the shared memory, on-chip storage.

- In the second stage, the processor actually performs stencil operation.

- In the third stage, the processor writes the result back eventually to the global memory.

- Typically thread synchronizations are between Stage 1 and Stage 2 to make sure the computation is performed after all the shared memory is loaded from the global memory.

How about Boundaries?

Using shared memory exposes another problem. If you remember shared memory is only accessible within one CUDA block. If we divide the memory area by colors, each CUDA block can access its own color zone. However, to compute the value in C location, we need the element in Location 1 in red area, 3 in green area.

Hence, the way it works is each shared memory brings boundary elements as well, such as the following. This is a somewhat similar to MPI programming where explicit communications are needed for boundaries. Here in CUDA, we just simply bring boundary data to the shared memory. We can do that because we make sure those data is only read only data. Here we show an example of using shared memory for stencil operation. Please note that to make the example more simpler, we use a filter to compute stencil operation, which is similar programming style with convolutional operations.

1

2

3

4

// Load data into shared memory

__shared__ float sharedInput[sharedDim][sharedDim];

int sharedX = threadIdx.x + filterSize / 2;

int sharedY = threadIdx.y + filterSize / 2;

Use if-else to Check Boundary or Not

Maybe it looks obvious, but it was not clearly mentioned earlier. Is that if-else statement can be also used in the CUDA programming. Depending on the position of x, y values, the program can also decide whether it brings the data from the global memory or it fills with zero. This is implemented with if-else statement to check whether thread ID is belonged to a certain range.

The program has to create more number of threads than actual color zone and threads only within the inner element. The original color zone area performs computation. In other words, more threads are created to bring the data from the global memory to the shared memory. The bottom code example shows the actual computation snippet again, This code uses convolutional operation style to make the example have less if-else statements.

- Load different values on boundaries

1 2 3 4 5

if (x >= 0 && x < width && y >= 0 && y < height) { sharedInput[sharedY][sharedX] = input[y * width + x]; } else { sharedInput[sharedY][sharedX] = 0.0f; // Handle boundary conditions }

- Perform computations only on inner elements

1 2 3 4 5 6

// Apply the filter to the pixel and its neighbors using shared memory for (int i = 0; i < filterSize; i++) { for (int j = 0; j < filterSize; j++) { result += sharedInput[threadIdx.y + i][threadIdx.x + j] * filter[i][j]; } }

Module 4: GPU Architecture

Objectives

- Describe GPU microarchitecture

- Be able to explain the basic GPU architecture terminologies

Readings

Module 4 Lesson 1: Multi-threaded Architecture

Course Learning Objectives:

- Explain the difference between multithreading and context switch

- Describe resource requirements for GPU multithreading

In this module, we’ll dive deeper into GPU architecture. Throughout this video, we’ll explore the distinctions between multithreading and context switching in GPUs. We’ll also discuss resource requirements for GPU multithreading.

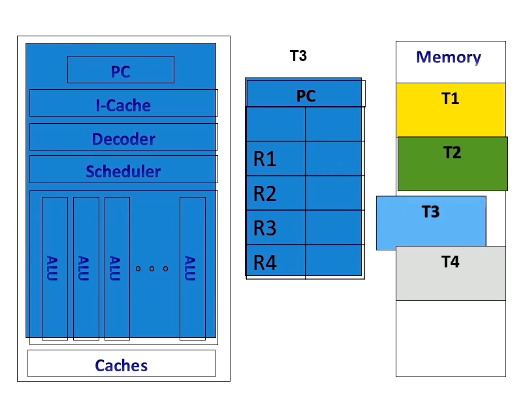

Recap: GPU

Let’s first recap the fundamentals of GPU architecture.

- GPUs are equipped with numerous cores.

- Each core features multithreading,

- which facilitates the execution of warp or wave front within the core.

- Each core also has shared memory and hardware caches.

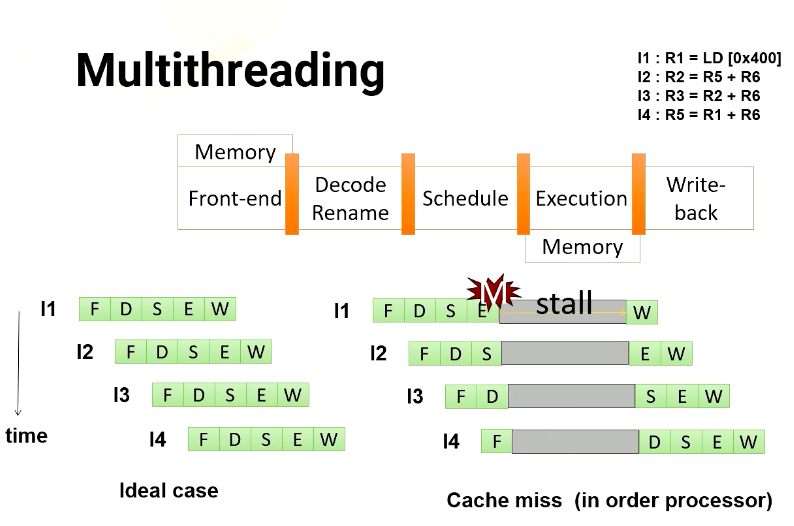

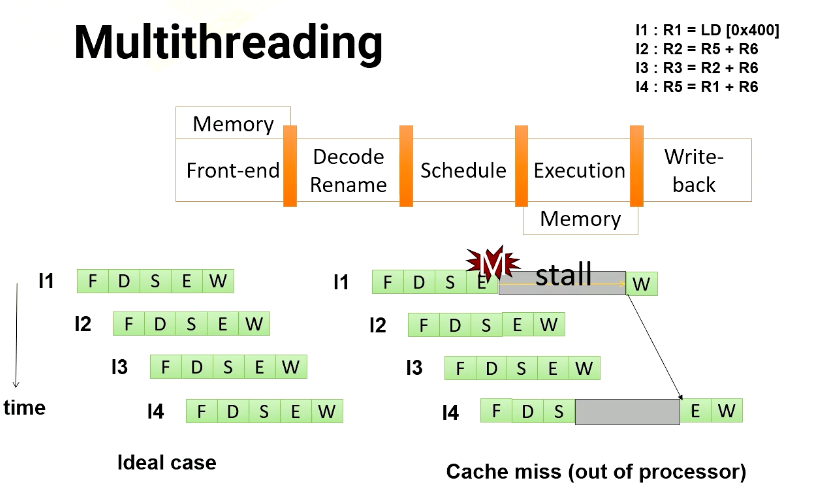

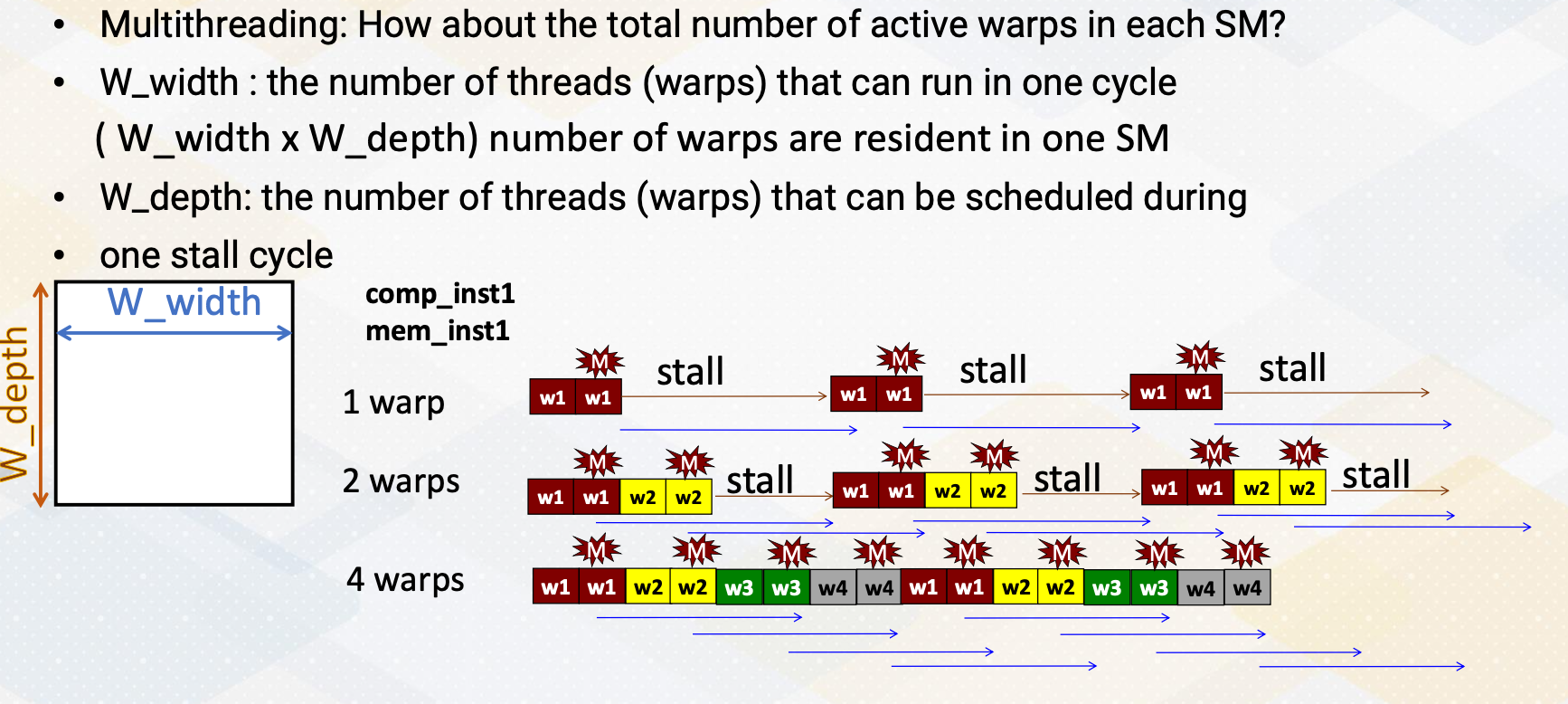

Multithreading

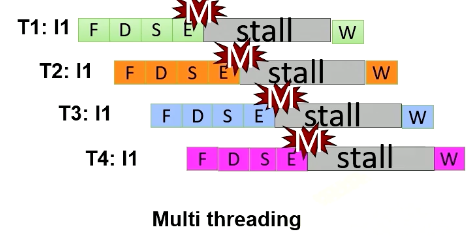

Let’s dive into multithreading. Imagine a GPU with a five stage pipeline and four instructions. In an ideal scenario, each instruction would proceed through the pipeline one stage at a time. However, in an in order processor, if an instruction has a cache miss, the following instructions have to wait until the first instruction completes. If you look carefully, the instruction 2 is not actually dependent on instruction 1. Hence, in an out of order processor, instruction 2 doesn’t have to wait.

So in an out of order processor, it starts to execute instruction 2 and 3 before instruction 1 completes. Once instruction 1 receives the memory request, instruction 4 can be executed.

In multithreading, the processor just simply switches to another thread regardless of whether a previous instruction generates cache misses or not. Since they are from different threads, they’re all independent. In this example, the processor executes four instructions from four different threads. Furthermore, the processor also generate four memory requests concurrently.

Benefits of multithreading

To get the benefit of generating multiple memory requests, GPU utilize multithreading. Instead of waiting for stalled instructions, GPU start to execute instructions from another thread, allowing for parallel execution as well as more memory requests.

- Multithreading’s advantage is its ability to hide processor stall time, which is often contributed by

- cache misses,

- branch instructions or

- long latency instructions such as ALU instructions.

- GPUs leverage multithreading to mitigate such long latency issues.

- While CPUs employ out of order execution, cache systems, and instruction level parallelism (ILP) to tackle latency.

- Longer memory latency requires a greater number of threads to hide latency

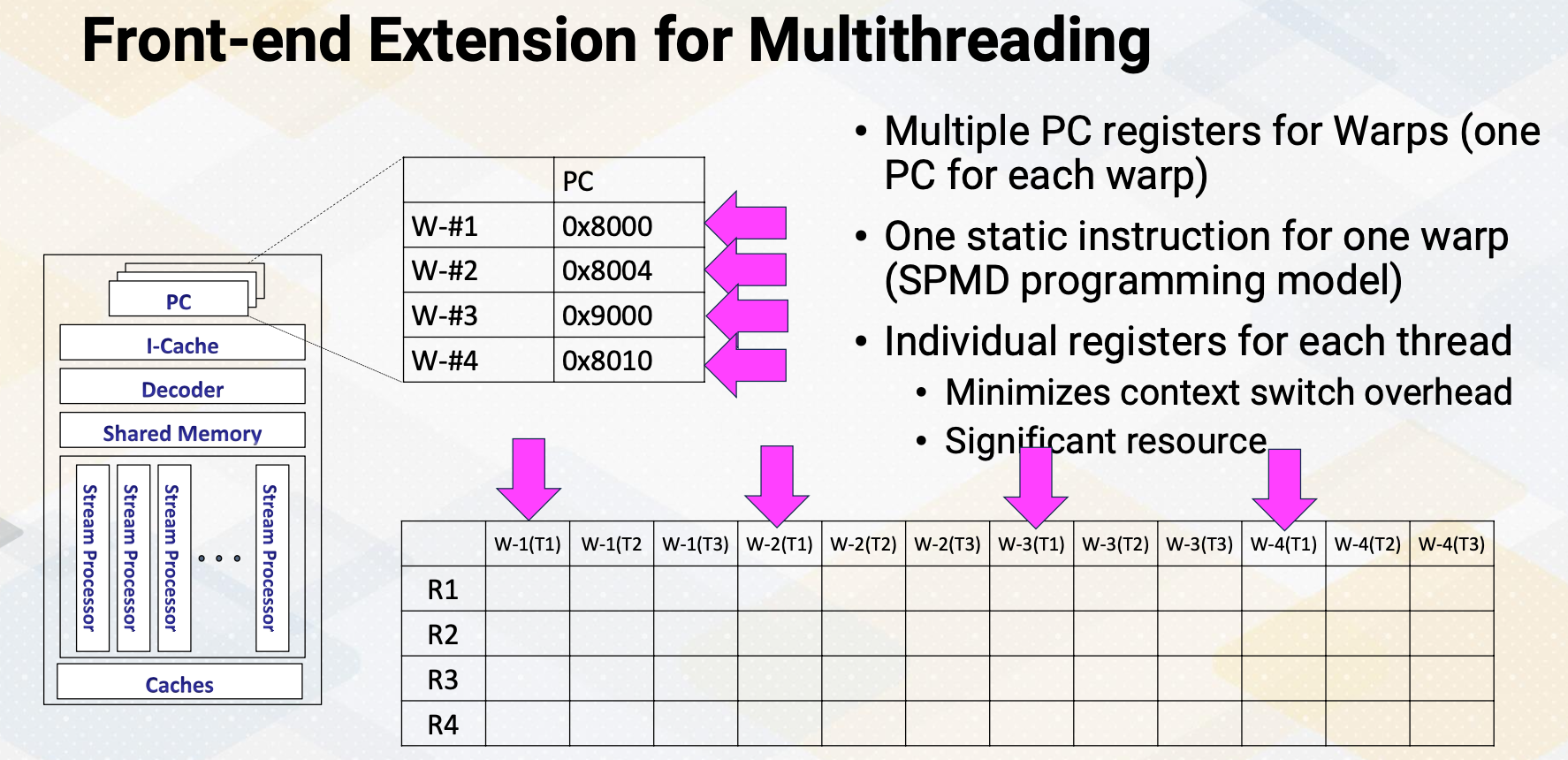

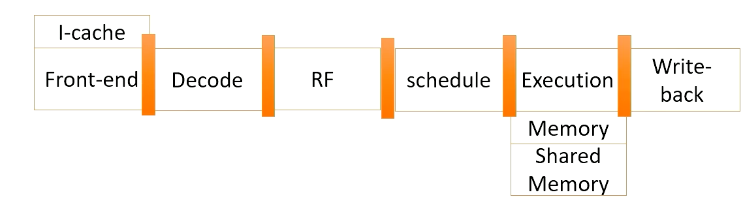

Front-end Extension for Multithreading

The front end features multiple program counter (PC) values, each warp needs one PC register so supporting four warps requires four registers. It also has four sets of registers. So context switching in GPUs, means simply switching the pointer among multiple PC registers and register files.

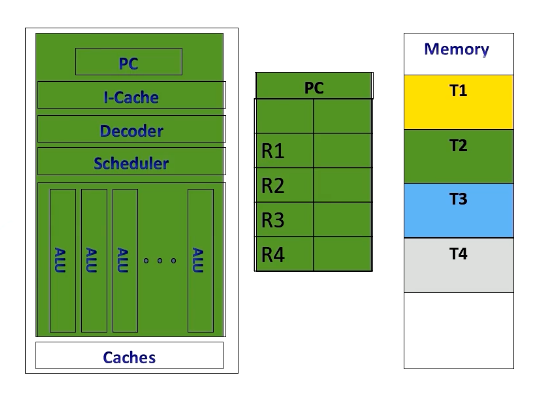

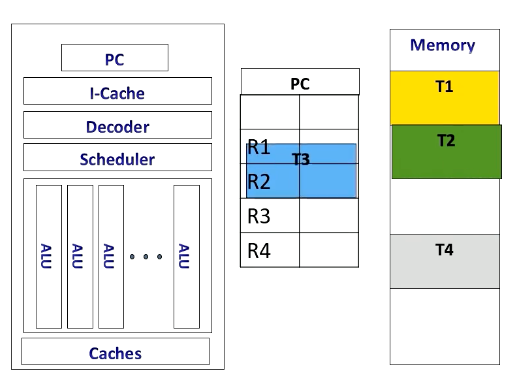

CPU Context Switching

In contrast, CPUs implement context switches in a different way. When executing a thread, the instruction pipeline, PCs, and registers are dedicated to the specific thread.

If the CPU switches to another thread, for example, from T2 to T3, it stores T2’s contents in memory and loads T3 content from the memory.

Once it’s done, it executes the original thread context. Context switches in CPUs incur substantial performance overhead due to the need to store and retrieve register contents from the memory.

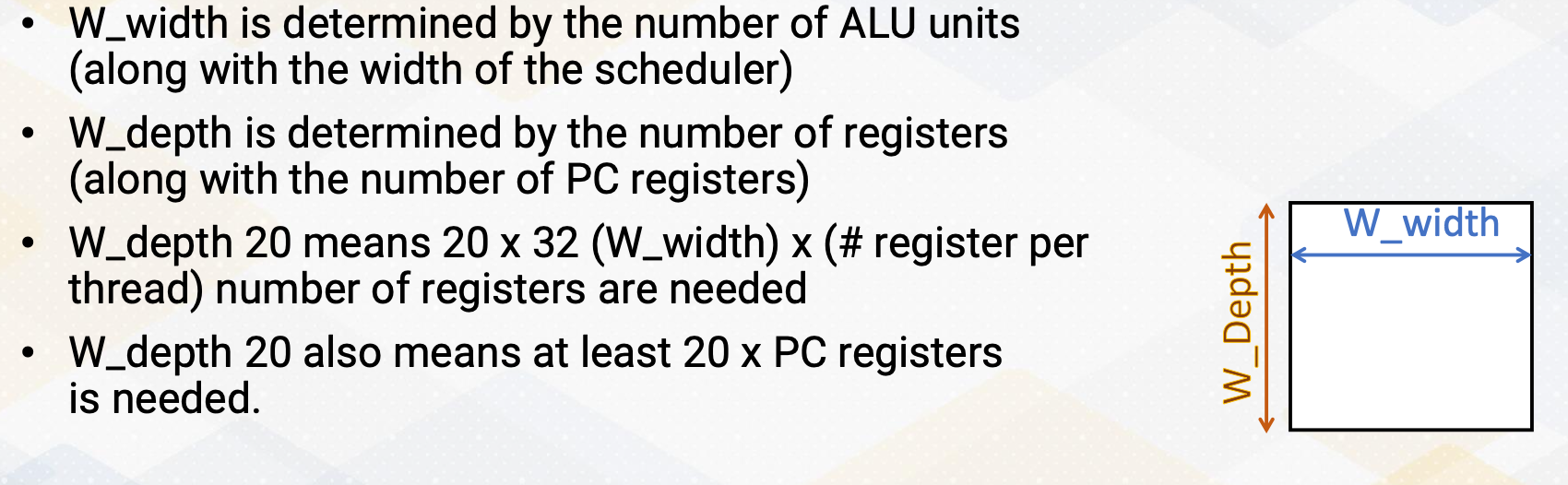

Hardware Support for Multithreading

Now let’s discuss the resourc e requirement for multithreading in more detail.

- Front-end needs to have multiple PCs

- One PC for each warp since all threads in a warp share the same PC

- Later GPUs have other advanced features, we’ll keep it simple and assume one PC per warp.

- Additionally, a large register file is needed.

- Each thread needs “K” number of architecture registers

- total register file size requirement = K times $\times$ number of threads

- “K” varies by applications

- Remember occupancy calculation?

- Each SM (shared memory) can execute Y number of threads, Z number of registers, etc.

- Here, Y is related to the number of PC registers. So if the hardware has five PC registers, it can support up to 5 times 32, which is 160 threads.

- Z is related to K

Revisit Previous Occupancy Calculation Example

Let’s revisit a previous example to calculate occupancy. If we can execute 256 threads, have 64 times 1024 registers and 32 kilobytes of shared memory, here 32 threads per warp is assumed. And then the question is how many PCs are needed?

- The answer is 256 divided by 32, which is eight PCs.

If a program has 10 instructions, how many times does one SM need to fetch an instruction?

- The answer is simply put, 10 multiplied by 8 which is 80.

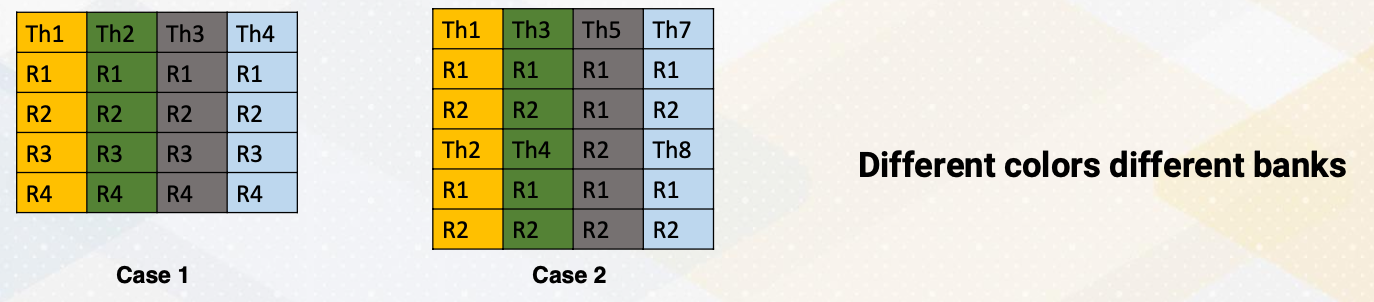

Module 4 Lesson 2: Bank Conflicts

Course Learning Objectives:

- Explain SIMT behavior of register file accesses and shared memory accesses

- Describe techniques to enhance bandwidth in register and shared memory accesses

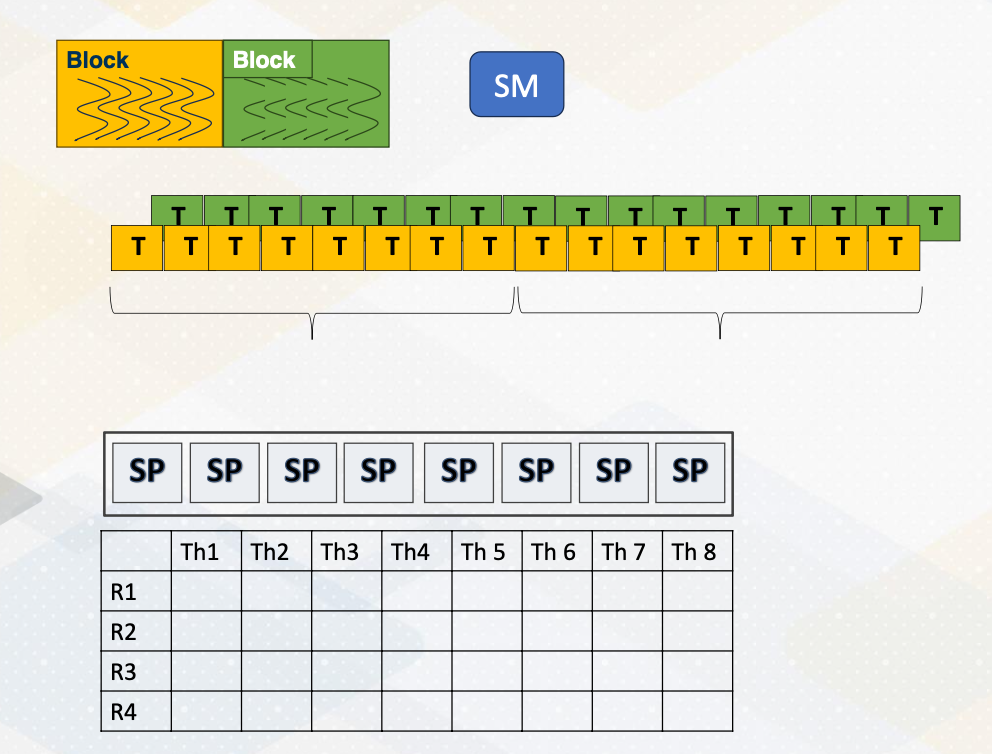

CUDA Block/Threads/Warps

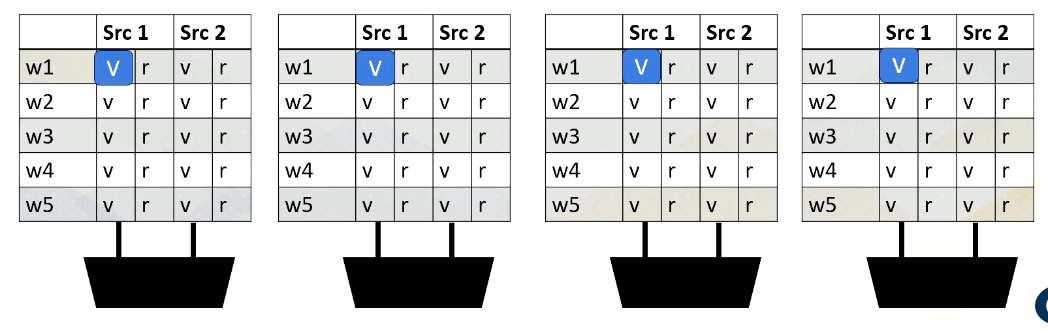

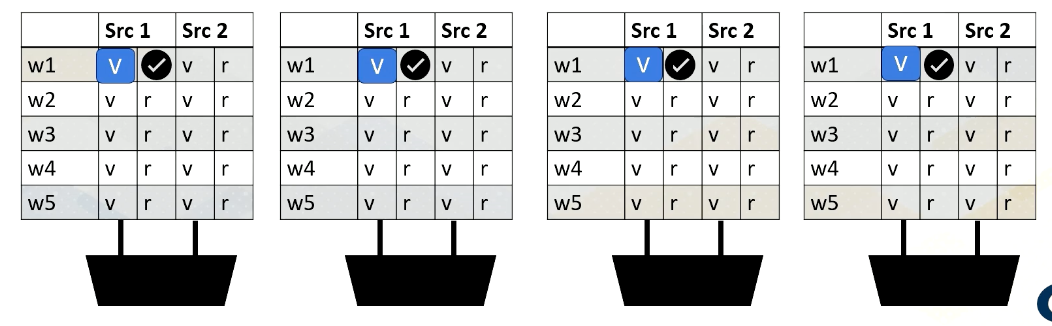

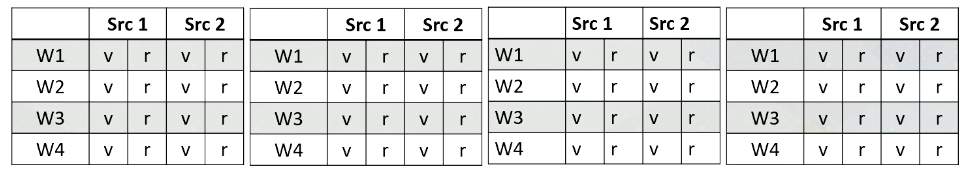

Let’s revisit GPU architecture basics. In a GPU, multiple CUDA blocks run on one multiprocessor, and each block has multiple threads. A group of threads is executed as a warp. As shown in this animation, one warp is executed and then the other warp is executed. Each thread in a warp needs to access its own registers because registers are per thread. Assume that each instruction reads two source operands and writes one destination operand, and that the execution width is eight. In that case, the register file must supply 8 $\times$ 3 (two reads and one write per thread), which is 24 values at one time.

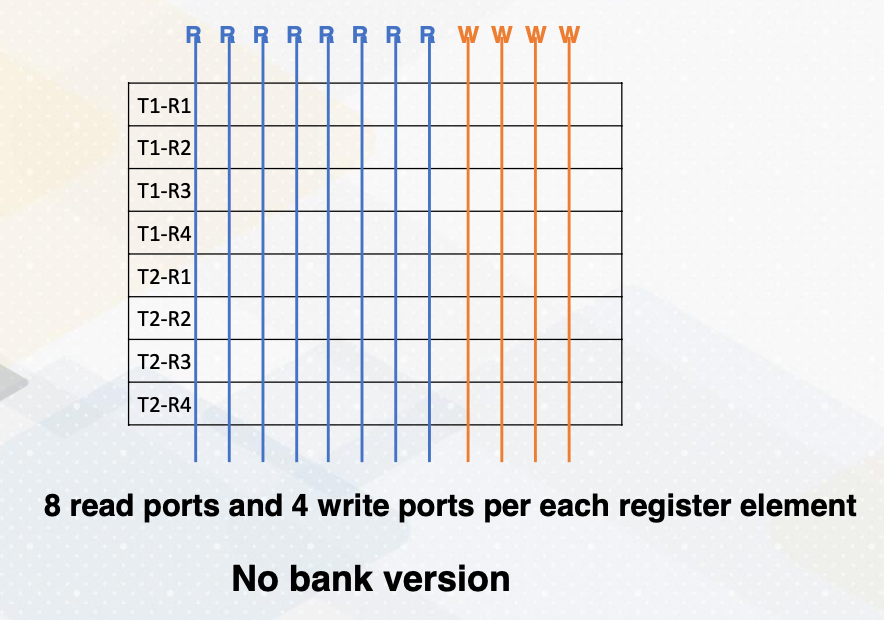

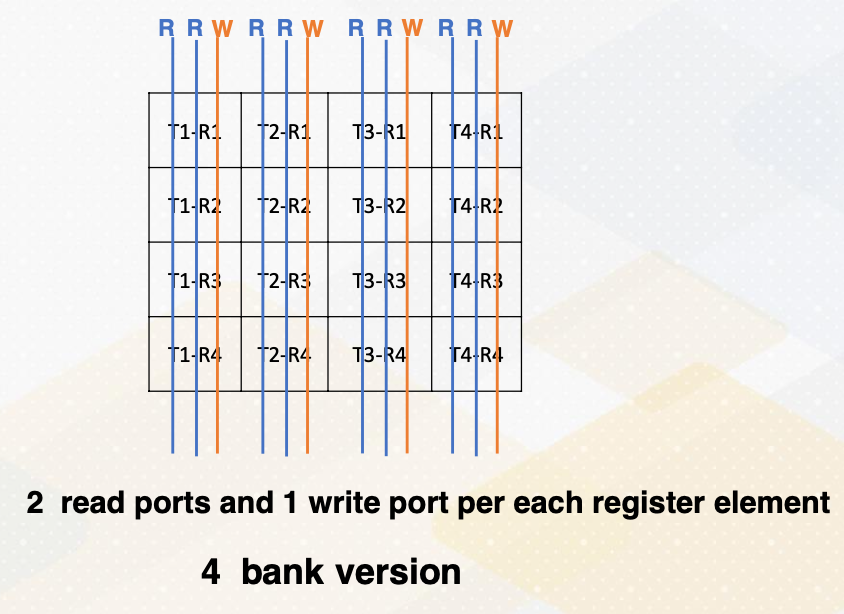

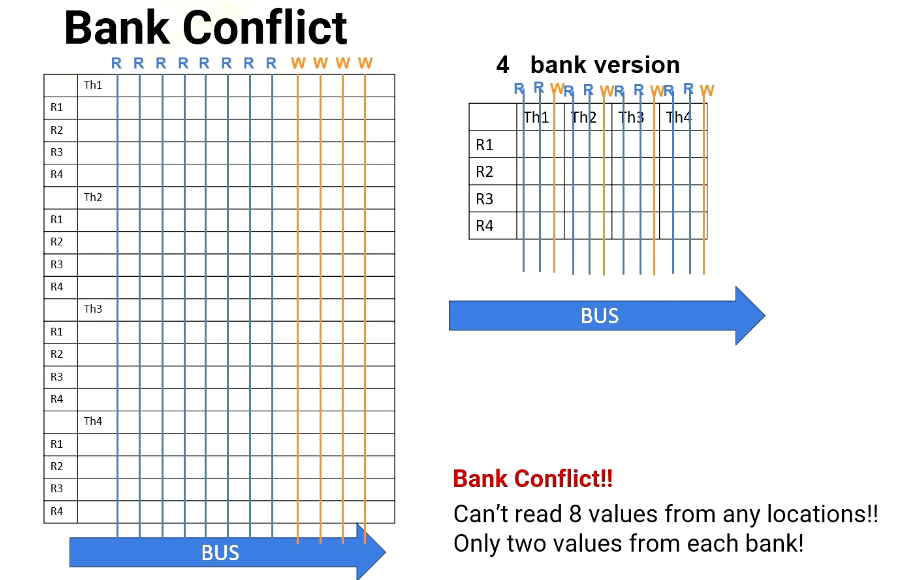

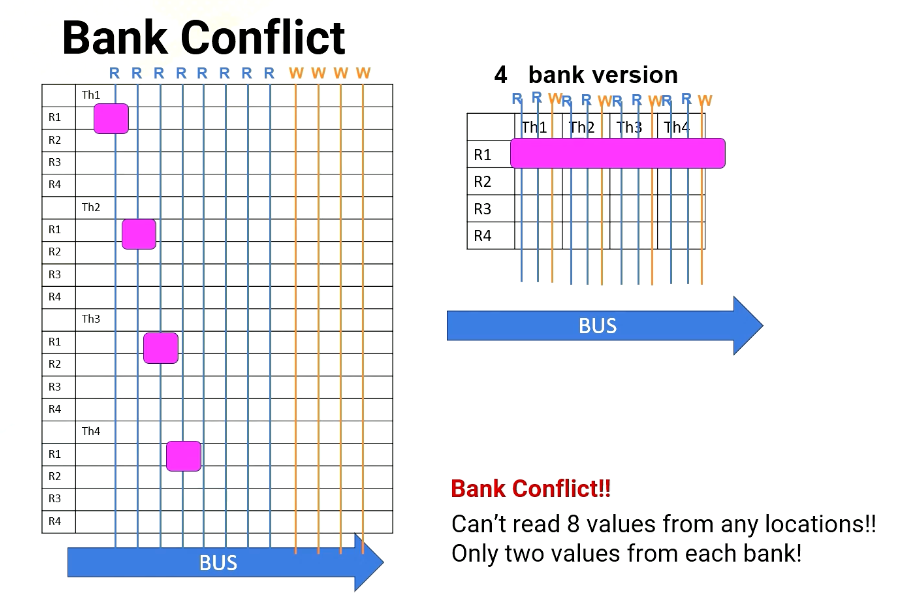

Port vs Bank

Let’s provide some background on ports and banks. A port is a hardware interface for data access. For example, each thread requires two read ports and one write port. If the execution width is four, there is a total of eight read ports and four write ports.

This figure illustrates eight read ports and four write ports for each register element. Read and write ports literally require wires to be connected, so they use up quite a bit of space.

On the other hand, we can organize the register file differently and put only two read ports and one write port on each register element. This is called the four-bank version, which requires far fewer ports.

What is a bank? A bank is a partition, or group, of the register file. The benefit of banks is that multiple banks can be accessed simultaneously, which means we do not need the full set of read and write ports on every element. We can simply have multiple banks with fewer read and write ports each, as shown in these diagrams. This is important because more ports mean more hardware wiring and more resource usage.
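As a rough check on the port counts discussed above, here is a minimal sketch. The function names are hypothetical, and the model assumes that lanes are split evenly across banks, which generalizes the two configurations in the notes (no banks, and four banks for a width of four).

```python
READS_PER_THREAD = 2   # two source operands per instruction
WRITES_PER_THREAD = 1  # one destination operand per instruction

def values_per_cycle(execution_width):
    """Operands the register file must move per cycle for one warp instruction."""
    return execution_width * (READS_PER_THREAD + WRITES_PER_THREAD)

def ports_per_element(execution_width, num_banks=1):
    """(read ports, write ports) needed on each register element.
    Assumes lanes are split evenly across the banks."""
    lanes_per_bank = execution_width // num_banks
    return (READS_PER_THREAD * lanes_per_bank,
            WRITES_PER_THREAD * lanes_per_bank)

print(values_per_cycle(8))      # 24
print(ports_per_element(4))     # (8, 4)  no banks
print(ports_per_element(4, 4))  # (2, 1)  four-bank version
```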

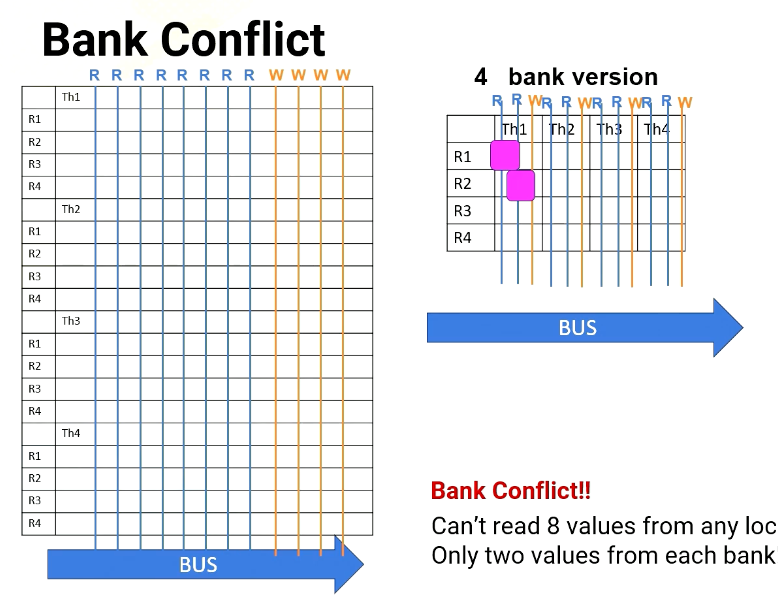

Bank conflict

Scenario #1: read R1 from T1,T2,T3,T4 (each thread on different banks)

However, a challenge arises when multiple threads in a warp require simultaneous access to the same bank, which causes a bank conflict. For example, in Scenario 1, the processor needs to read R1 from threads 1, 2, 3, and 4, and each thread’s registers are in a different bank.

The version without banks has eight read ports, so it can easily provide four read values. So can the four-bank version, since all the register file accesses go to different banks.

Scenario #2: read R1, R2, R3, R4 from T1

However, in Scenario 2, the processor has to read R1, R2, R3, and R4, all from the same thread and therefore from the same bank. For the eight-port version, this is no problem: it can read all four values simultaneously. The four-bank version, however, can read only two values at a time from one bank, so the read takes multiple cycles.
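The two scenarios can be reproduced with a small cycle-count model. This is a simplified sketch, not a description of real hardware: `read_cycles` is a hypothetical helper, and it assumes each bank serves a fixed number of reads per cycle and that banks work in parallel.

```python
from collections import Counter
from math import ceil

def read_cycles(accesses, bank_of_thread, read_ports_per_bank):
    """accesses: (thread, register) reads issued together.
    Returns the cycles needed when every bank can serve
    read_ports_per_bank reads per cycle."""
    per_bank = Counter(bank_of_thread[thread] for thread, _ in accesses)
    return max(ceil(n / read_ports_per_bank) for n in per_bank.values())

four_banks = {"T1": 0, "T2": 1, "T3": 2, "T4": 3}  # one bank per thread
no_banks = {"T1": 0, "T2": 0, "T3": 0, "T4": 0}    # one array with 8 read ports

scenario1 = [("T1", "R1"), ("T2", "R1"), ("T3", "R1"), ("T4", "R1")]
scenario2 = [("T1", "R1"), ("T1", "R2"), ("T1", "R3"), ("T1", "R4")]

print(read_cycles(scenario1, no_banks, 8))    # 1
print(read_cycles(scenario1, four_banks, 2))  # 1
print(read_cycles(scenario2, no_banks, 8))    # 1
print(read_cycles(scenario2, four_banks, 2))  # 2
```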

Variable Number of Registers per Thread

- Will Register File Have Bank Conflicts?

- Why do we worry about bank conflicts for registers? Don’t we always need to access two registers from different threads anyway?

The challenge arises because CUDA programs benefit from having different register counts per thread. Let’s say that we want to execute the instruction R3 = R1 + R2. Here are two cases.

- In the first case, there are four registers per thread.

- In the second case, there are two registers per thread. Different colors mean different banks.

- In Case 1, reading registers does not cause a bank conflict because each thread’s registers are located in a different bank.

- However, in Case 2, reading R1 and R2 from multiple threads causes a bank conflict because Thread 1 and Thread 2 are in the same bank. The same is true for Thread 3 and Thread 4.

- Remember, a GPU executes a group of threads (a warp) together, so multiple threads read the same registers at the same time.
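The two cases can be modeled with a simple layout rule. The layout here is an assumption made for illustration: registers of consecutive threads are packed back to back, each bank holds four register entries, and each bank has two read ports. Under that assumption the sketch reproduces the behavior described in the list.

```python
from collections import Counter

BANK_ENTRIES = 4         # registers held by one bank (assumed)
READ_PORTS_PER_BANK = 2  # as in the four-bank version

def bank_of(thread, reg, regs_per_thread):
    """thread and reg are 0-based; registers are packed thread after thread."""
    return (thread * regs_per_thread + reg) // BANK_ENTRIES

def worst_bank_reads(src_regs, regs_per_thread, num_threads=4):
    """Largest number of reads landing on a single bank for one warp instruction."""
    banks = Counter(bank_of(t, r, regs_per_thread)
                    for t in range(num_threads) for r in src_regs)
    return max(banks.values())

sources = (0, 1)  # R1 and R2 for R3 = R1 + R2

case1 = worst_bank_reads(sources, regs_per_thread=4)
case2 = worst_bank_reads(sources, regs_per_thread=2)
print(case1, case1 > READ_PORTS_PER_BANK)  # 2 False -> no conflict
print(case2, case2 > READ_PORTS_PER_BANK)  # 4 True  -> bank conflict
```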

Solutions to Overcome Register Bank Conflict

Then how do we overcome this problem? The first solution is compile-time optimization. The compiler can optimize the code layout because register IDs are known at static (compile) time.

Side Bar: Static vs. Dynamic

Let me provide a little background on static versus dynamic. In this course, static often means before running the code: the property does not depend on the program’s input. Dynamic means that the property depends on the program’s input.

Here is an example with two instructions, ADD and BREQ.

LOOP: ADD R1 R1 #1

BREQ R1, 10, LOOP

Let’s say that this loop iterates 10 times. What are the static and dynamic instruction counts? The static instruction count is 2, since this is what we see in the code, and the dynamic instruction count is 2 times 10, which is 20. Also note that static-time analysis means compile-time analysis.
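To make the two counts concrete, here is a tiny interpreter sketch. It is an illustration only: the branch is modeled as "jump back to LOOP until R1 reaches the iteration count", which is an assumption chosen to match the 10 iterations stated in the text.

```python
def count_instructions(iterations=10):
    program = ["ADD", "BRANCH"]  # the two static instructions of the loop
    static_count = len(program)
    dynamic_count = 0
    r1 = 0
    pc = 0
    while pc < len(program):
        dynamic_count += 1       # one more instruction actually executed
        if program[pc] == "ADD":
            r1 += 1
            pc += 1
        else:
            # Assumed branch behavior: loop until R1 reaches the iteration count.
            pc = 0 if r1 < iterations else pc + 1
    return static_count, dynamic_count

print(count_instructions(10))  # (2, 20)
```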

Solutions to Overcome Register Bank Conflict (Cont)

Going back to the solutions for register bank conflicts, we can use compile-time analysis to change the instruction order or otherwise remove bank conflicts. However, not all bank conflicts can be avoided.

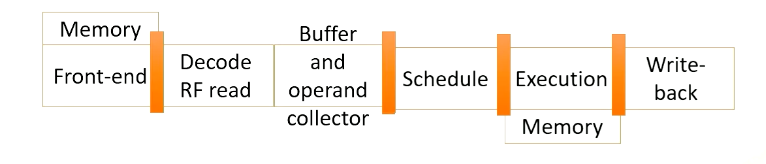

In a real GPU, the pipeline is more complex than a 5-stage pipeline. First, a register file access might take more than one cycle, either because of a bank conflict or because the register file has only one read port, so the pipeline is actually extended.

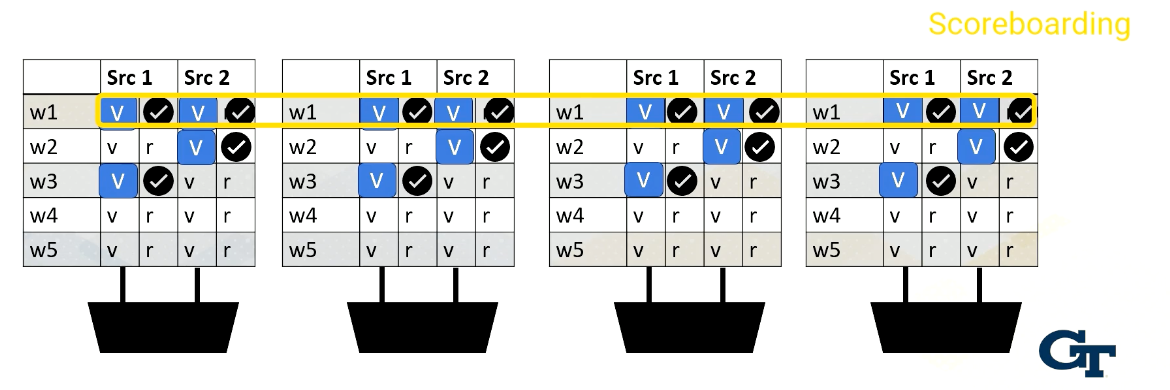

After the values are read, they are stored in a buffer. After that, a scoreboard is used to select instructions.

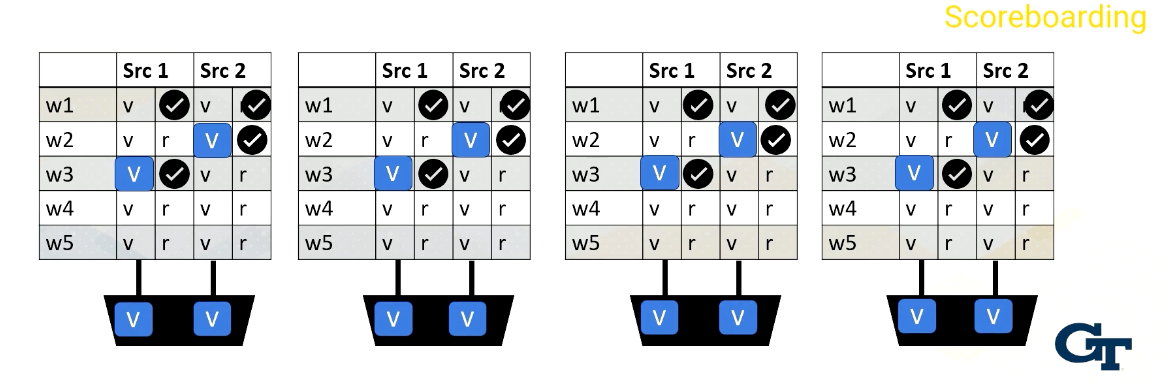

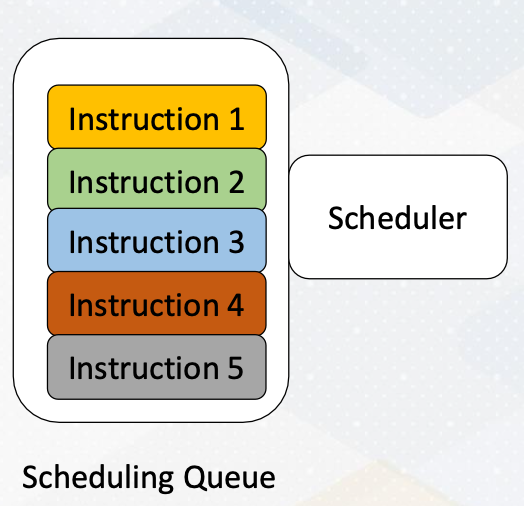

Scoreboarding

A scoreboard is widely used in CPUs to enable out-of-order execution. It is used for dynamic instruction scheduling.

However, in GPUs, it is used to check whether all source operands within a warp are ready, and then to choose which of the ready warps to send to the execution unit. One possible policy is oldest first, and there could be many other policies for selecting warps.

Reading Register Values

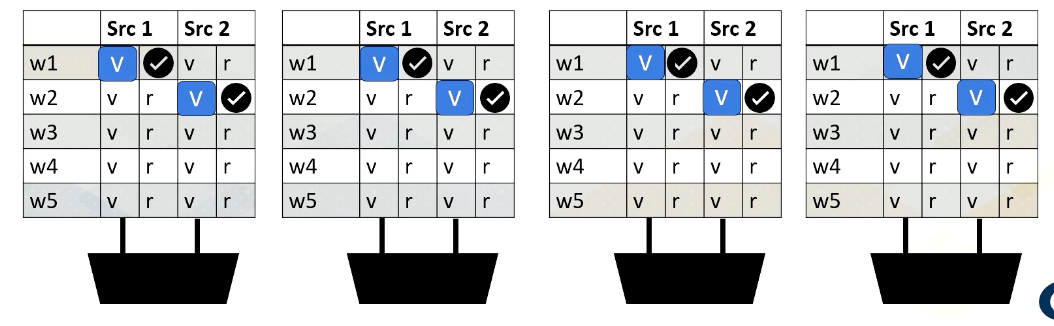

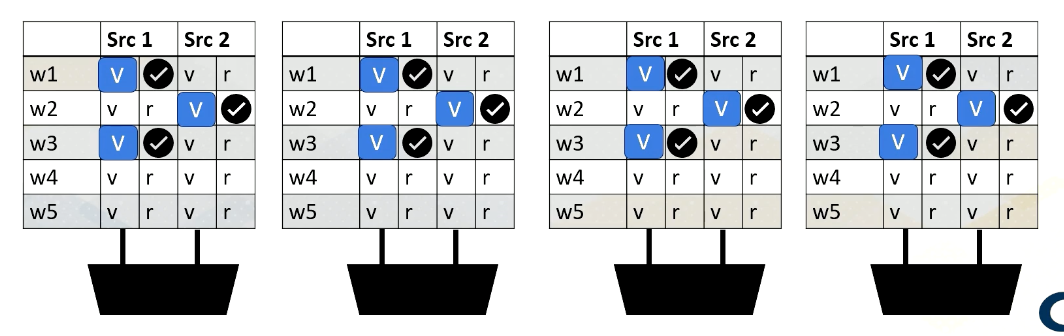

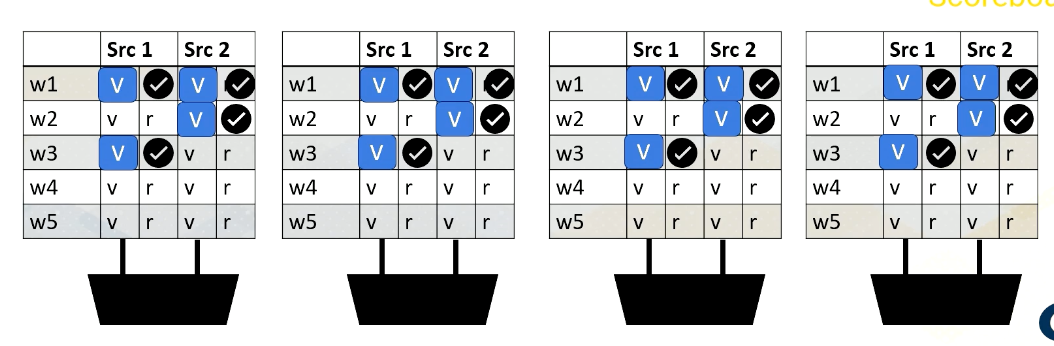

Here is an example. Reading the register file might take a few cycles. Register values that have been read are stored in a buffer, and this diagram shows the buffer and the scoreboard.

Whenever a value is stored, it sets the ready bit. Here, Warp 1 src 1 becomes ready, then Warp 2 src 2, then Warp 3 src 1, and finally Warp 1 src 2.

When all of a warp’s values are ready, the scoreboard selects that warp, and the values are sent to the execution unit.
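The ready-bit sequence in this example can be traced with a short sketch. It is a simplified model with a hypothetical helper name: each warp is assumed to have two source operands, and the first warp with all ready bits set is selected.

```python
def first_ready_warp(arrivals, num_sources=2):
    """arrivals: (warp, source) pairs in the order values reach the buffer.
    Returns (warp, step) for the first warp whose ready bits are all set."""
    ready = {}
    for step, (warp, src) in enumerate(arrivals, start=1):
        ready.setdefault(warp, set()).add(src)  # set the ready bit
        if len(ready[warp]) == num_sources:
            return warp, step
    return None

# Arrival order from the example: W1 src1, W2 src2, W3 src1, W1 src2.
arrivals = [(1, 1), (2, 2), (3, 1), (1, 2)]
print(first_ready_warp(arrivals))  # (1, 4)
```

Warp 1 is selected only after the fourth arrival, because warps 2 and 3 each still have one operand outstanding.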

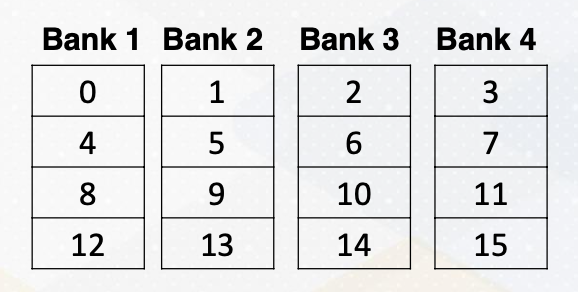

Shared Memory Bank Conflicts

Bank conflicts can also happen in the shared memory on GPUs. Shared memory is on-chip storage that serves as scratchpad memory. Shared memory is also composed of banks to provide high memory bandwidth. Let’s assume the following shared memory.

There are four banks, and the number in each box represents a memory address.

Here is code that accesses a shared memory array, sharedInput.

__shared__ float sharedInput[index1];

index1 = threadIdx.x * 4

The index into shared memory is computed by simply multiplying threadIdx.x by 4. This means that thread 1 accesses memory address 4, thread 2 accesses memory address 8, thread 3 accesses memory address 12, and so on. Unfortunately, these addresses are all mapped to the same bank, so all the threads generate bank conflicts. The solution is to change the software structure, which we will cover in more detail in later lectures.
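The mapping can be checked with a small model. The sketch assumes that the bank is the address modulo the number of banks, which is consistent with addresses 4, 8, and 12 landing in the same bank. The stride-1 case is not from the lecture and is included only for contrast.

```python
from collections import Counter

NUM_BANKS = 4

def conflict_degree(stride, num_threads=4, num_banks=NUM_BANKS):
    """Largest number of threads mapping to one bank when index = tid * stride.
    Thread IDs start at 1 to follow the numbering used in the notes."""
    banks = Counter((tid * stride) % num_banks
                    for tid in range(1, num_threads + 1))
    return max(banks.values())

print(conflict_degree(stride=4))  # 4: addresses 4, 8, 12, 16 all hit bank 0
print(conflict_degree(stride=1))  # 1: consecutive addresses use different banks
```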

In summary, in this video we have learned the benefits of banks in register files and shared memory. We have also studied the causes of bank conflicts in register files and shared memory.

Module 4 Lesson 3: GPU Architecture Pipeline

Course Learning Objectives:

- Describe GPU pipeline behavior with multithreading and register file access

- Explain how mask bits are used.

GPU Pipeline (1)

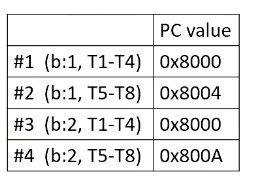

Here is a GPU pipeline.

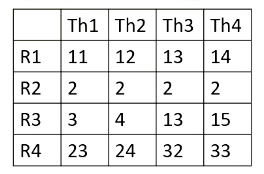

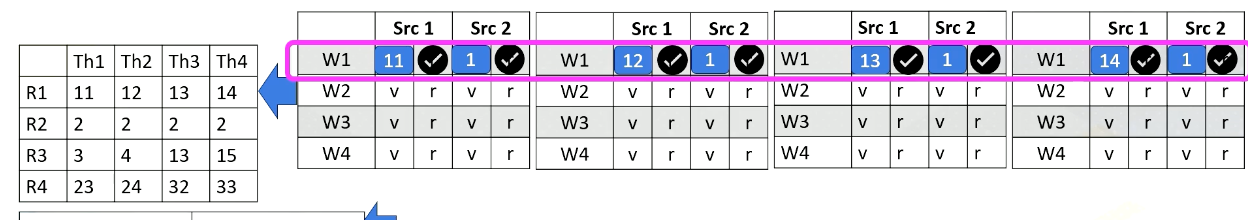

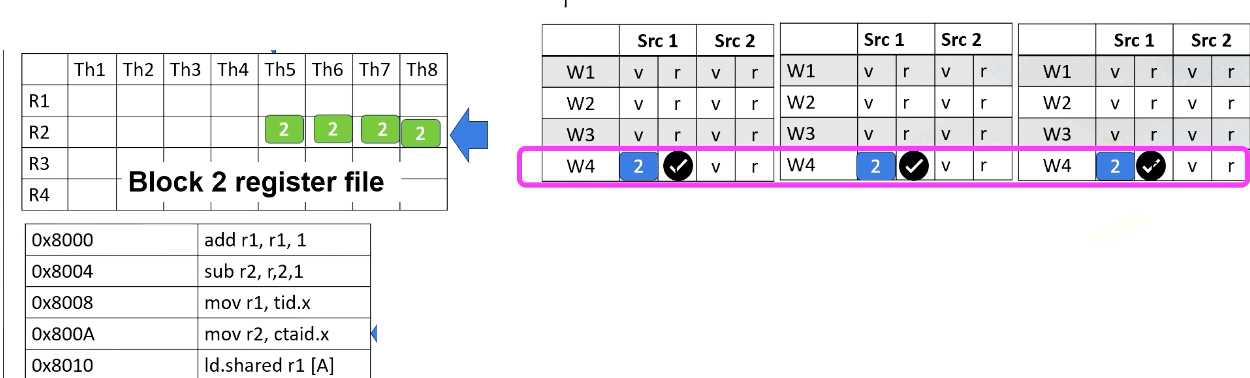

Here it shows the PC values for each warp. In this example, there are four warps. The first warp should fetch from PC value 0x8000; it comes from Block 1, threads 1-4. The second warp is also from Block 1, threads 5-8. The third warp is from Block 2, threads 1-4, and the fourth warp is from Block 2, threads 5-8.

Here it shows register values for Block 1.

Here it shows I-cache memory addresses and the instructions. You see tid.x at 8008. tid.x is a special register that stores the thread ID within a block. The instruction at 8000A uses ctaid.x, another special register, which stores the block ID within a grid.

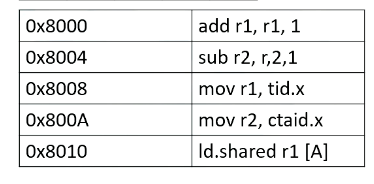

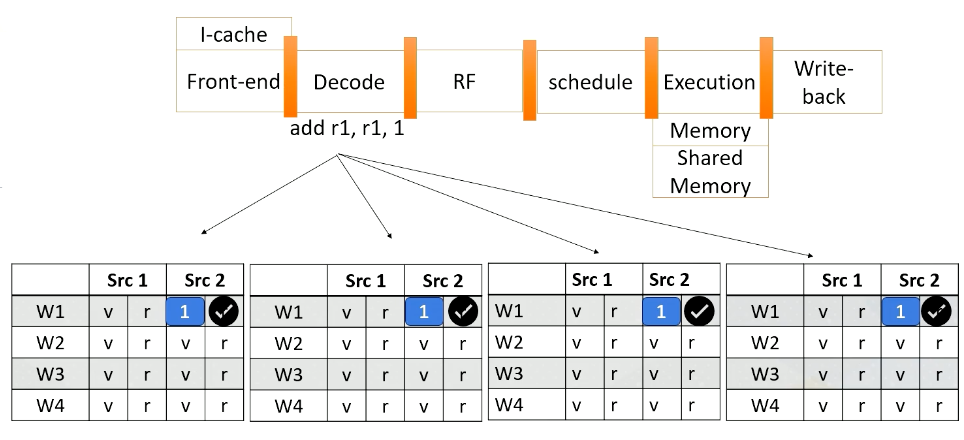

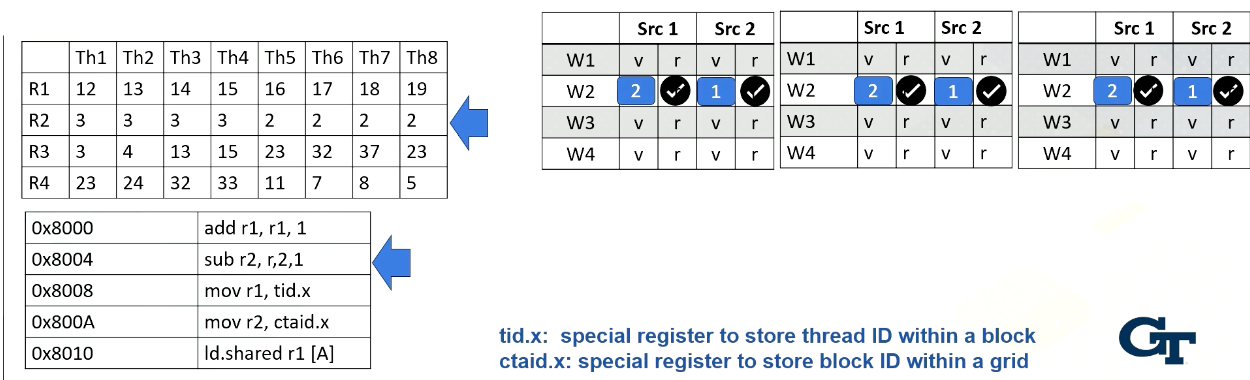

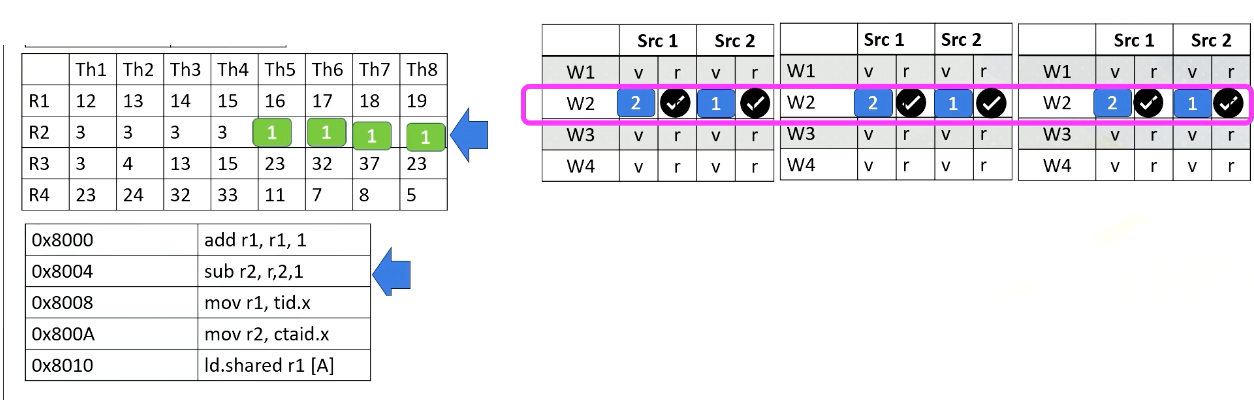

And here is a scoreboard. The front end fetches an instruction from 8000. Only one instruction is fetched for the entire warp: add r1, r1, 1.

This instruction is brought to the front end and then sent to the decode stage, where it is decoded. Since the instruction itself contains a constant (immediate) value of 1, the value 1 is broadcast to the scoreboard.

So all source 2 operands are ready for warp 1. In the register file access stage, we access source register 1, which is r1 for threads 1, 2, 3, and 4. The values are read and sent to the scoreboard. Now the processor checks the instruction and sees that all the source operands are ready, so this warp is selected.

The warp is sent to the execution stage, which performs the additions, and the final results are written back to the register file at the write-back stage.
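The flow of this one instruction through the stages can be sketched as follows. This is a toy model with a hypothetical function name, and the register contents are made up because the actual values appear only in the slides.

```python
def execute_add(regs, dst, src, imm, threads):
    """Model one warp running `add dst, src, imm` through the pipeline stages."""
    fetched = 1                                # fetch: once for the whole warp
    src2 = {t: imm for t in threads}           # decode: immediate broadcast to every lane
    src1 = {t: regs[t][src] for t in threads}  # register read: one value per thread
    ready = all(t in src1 and t in src2 for t in threads)  # scoreboard check
    if ready:
        for t in threads:                      # execute, then write back
            regs[t][dst] = src1[t] + src2[t]
    return fetched

# Made-up register contents for threads 1-4 of Block 1.
regs = {t: {"r1": 10 * t} for t in (1, 2, 3, 4)}
execute_add(regs, "r1", "r1", 1, threads=(1, 2, 3, 4))
print([regs[t]["r1"] for t in (1, 2, 3, 4)])  # [11, 21, 31, 41]
```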

GPU Pipeline (2)

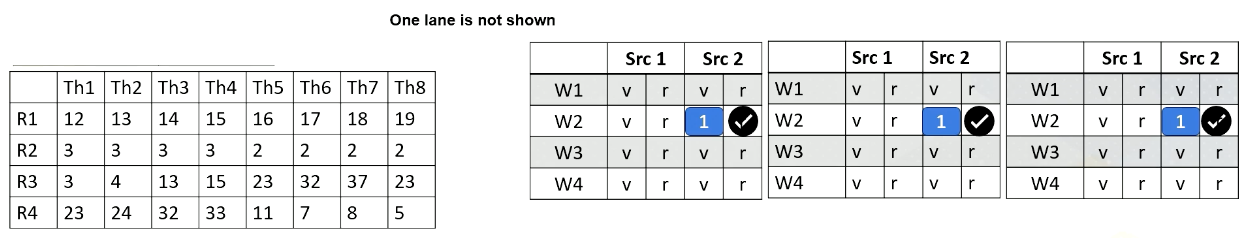

Now let’s look at the second warp. The front end fetches an instruction for warp 2, which is Block 1, threads 5-8. Again, the instruction is decoded, and the constant value 1 is broadcast to all source operand buffers. Here we omit the values for one execution unit due to space limitations.

In the next stage, the processor accesses the register file and reads r2 for threads 5-8. In this example, all the source values are 2. Now all source operands are ready, so the scheduler selects warp 2, and all of its threads are executed even though they all operate on the same values.

The hardware performs the same work for every thread in the warp: in this case a subtraction, computing 2 minus 1. The results are then written back at the write-back stage, just as in the previous example, updating the register values for threads 5-8.

GPU Pipeline (3)

Now let’s assume the processor fetches for warp 4. The PC address is 8000A, and the instruction there moves ctaid.x to r2. Warp 4’s block ID is 2, so the ctaid.x value is also 2. The ctaid.x value is read and stored in the scoreboard, and it will be written to r2 at the write-back stage, as shown in this animation.

GPU Pipeline (4)

Now, let’s assume that instead of 8000A, the processor fetches from 8008 for warp 4. The instruction uses tid.x. Since this warp holds threads 5-8, the tid.x values are 5, 6, 7, and 8. The tid.x values are read and stored in the scoreboard, and in the write-back stage all these values are written to r1, as shown in this animation.
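The special-register values for warp 4 can be written out with a short sketch. The helper name is hypothetical, and the thread numbering starts at 1 to follow the slides (in actual CUDA code, threadIdx.x starts at 0).

```python
def special_registers(block_id, first_thread, warp_width=4):
    """Values a warp reads from tid.x (differs per thread)
    and ctaid.x (same for every thread in the block)."""
    tid_x = [first_thread + lane for lane in range(warp_width)]
    ctaid_x = [block_id] * warp_width
    return tid_x, ctaid_x

# Warp 4 in the example: Block 2, threads 5-8.
tid_x, ctaid_x = special_registers(block_id=2, first_thread=5)
print(tid_x)    # [5, 6, 7, 8]
print(ctaid_x)  # [2, 2, 2, 2]
```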

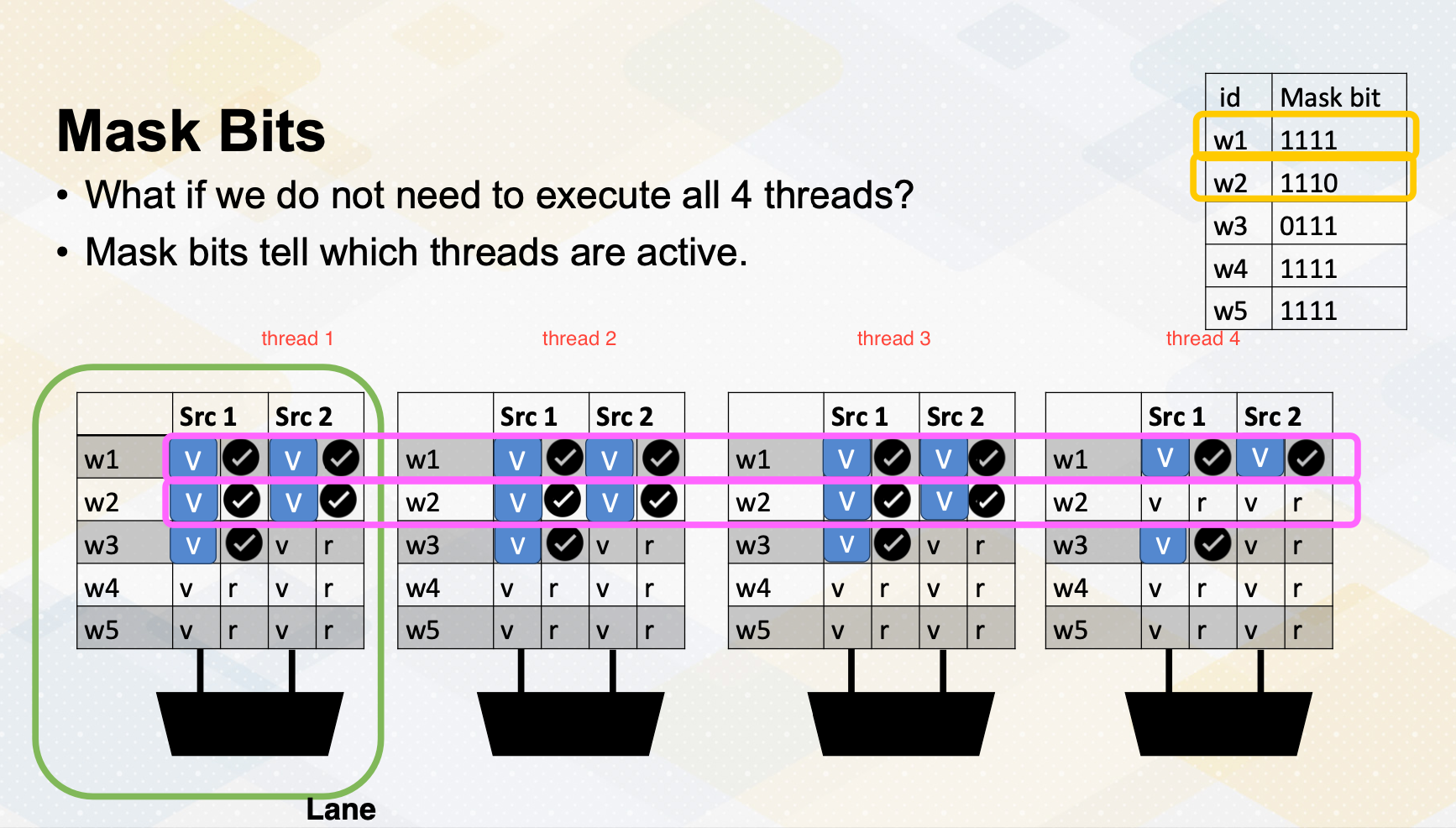

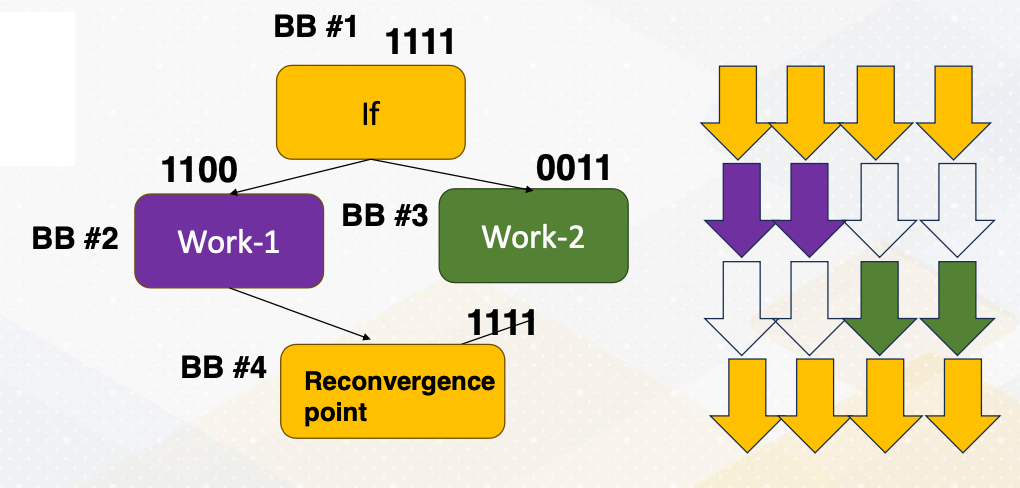

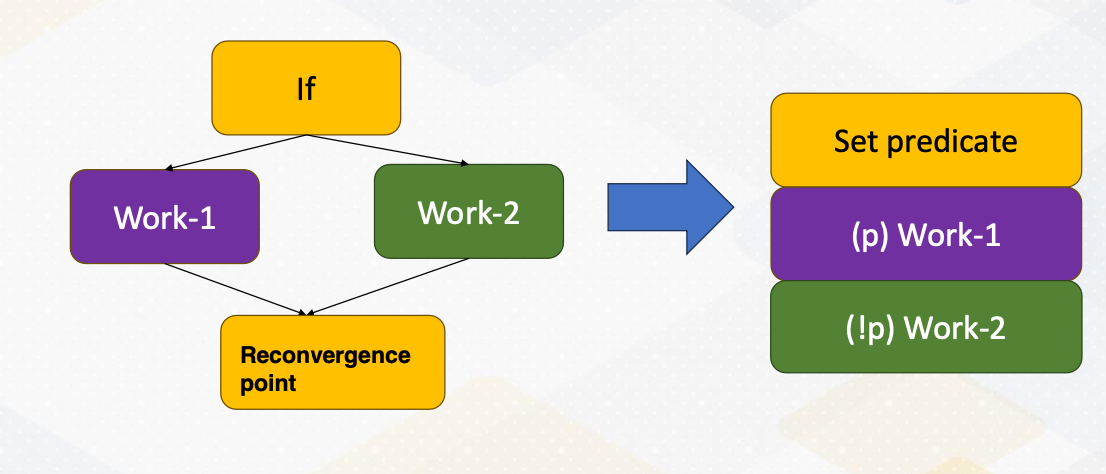

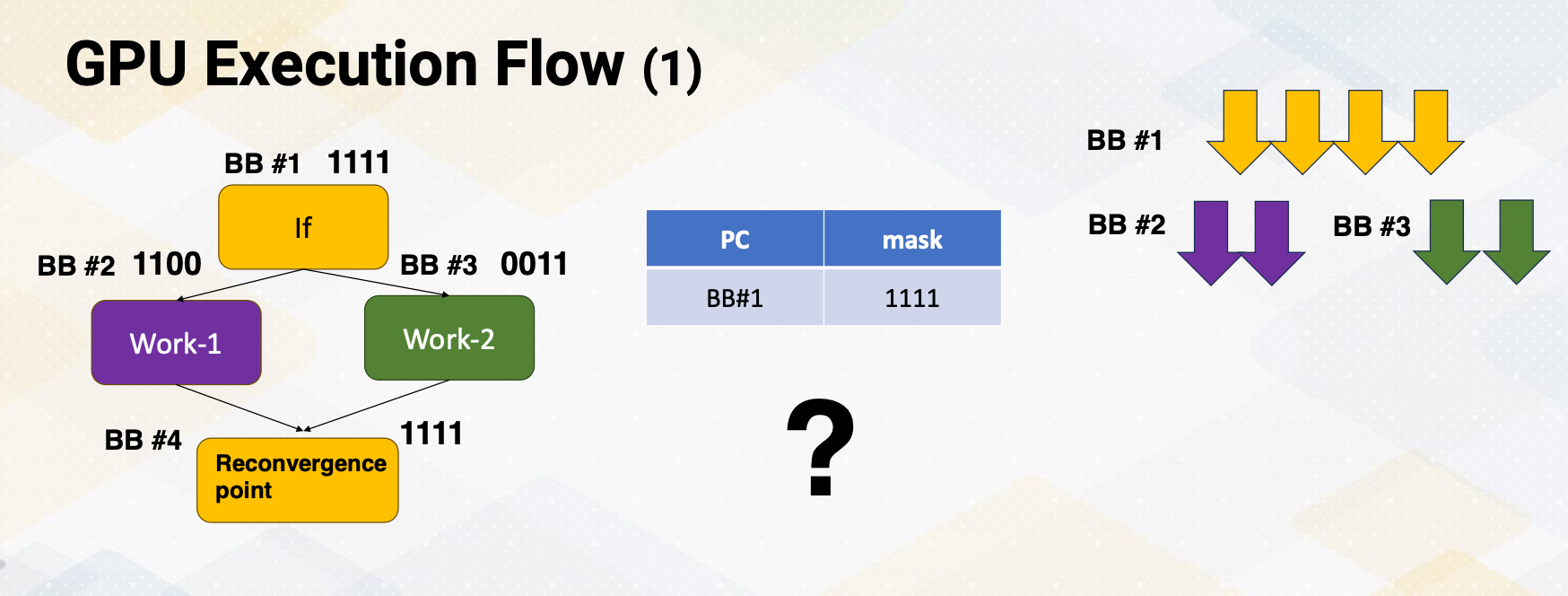

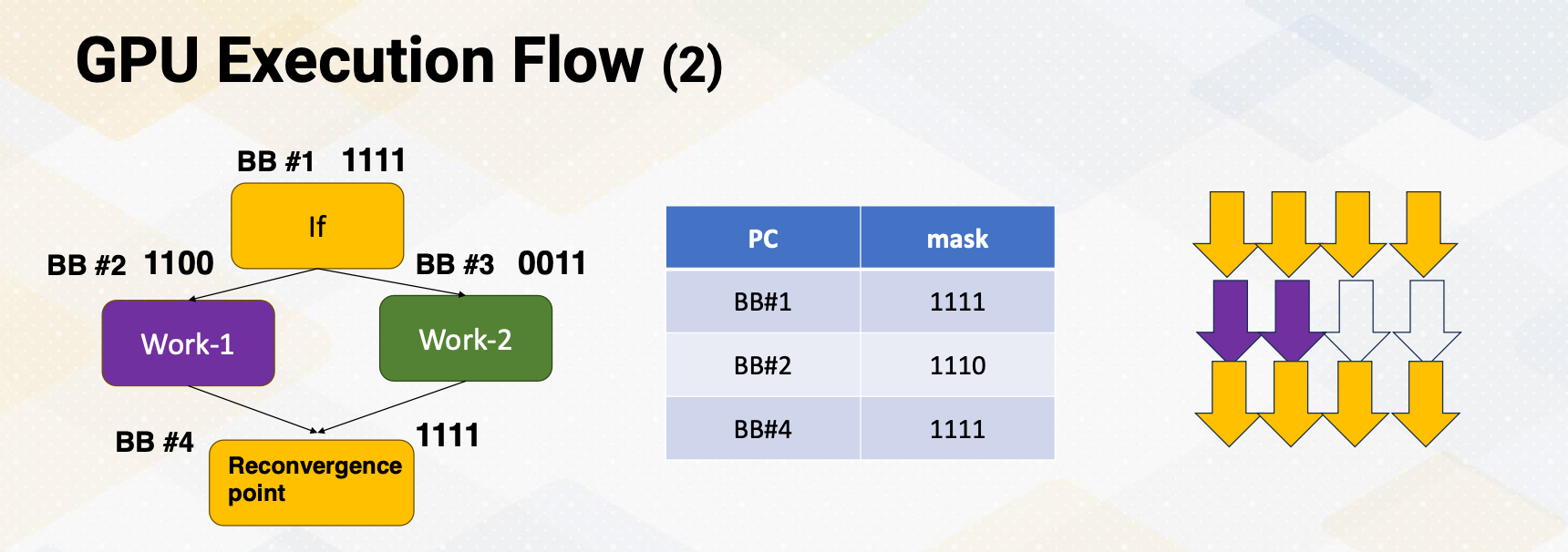

Mask bits

What if we do not need to execute all four threads? The GPU stores information that tells which threads, or lanes, are active. One ALU execution path is called a lane.

An active thread performs the actual computation, and an inactive thread does not do any work. Mask bits tell which threads are active. Here the scoreboard shows register values and ready bits. Warp 1 has mask bits 1111, which means all threads in warp 1 actually perform the work. Warp 1 is selected and executed. For warp 2, the mask bits are 1110, so only the first three lanes (the first three threads) do the work.
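The effect of the mask bits can be sketched with a lane-wise add. This is an illustration only: the input values are made up, and the leftmost mask bit is assumed to correspond to the first lane, matching the statement that 1110 enables the first three lanes.

```python
def masked_add(values, imm, mask):
    """Apply `value + imm` only on lanes whose mask bit is '1'.
    mask is a string such as "1110"; its first character is the first lane."""
    return [v + imm if bit == "1" else v for v, bit in zip(values, mask)]

print(masked_add([2, 2, 2, 2], 1, "1111"))  # [3, 3, 3, 3]
print(masked_add([2, 2, 2, 2], 1, "1110"))  # [3, 3, 3, 2]
```

The inactive lane keeps its old value because it performs no computation and writes nothing back.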

In summary, in this video we have reviewed the GPU pipeline’s instruction flow. We also studied the use of special registers for thread ID and block ID. The concept of active mask for identifying active SIMT lanes is also introduced in this video.

Module 4 Lesson 4: Global Memory Coalescing

Course Learning Objectives:

- Explore global memory accesses

- Explain Memory address coalescing

- Describe how one warp can generate multiple memory requests

Let’s look at more on global memory coalescing. In this video, we’ll explore global memory accesses. You should be able to explain memory address coalescing and you should be able to describe how one warp can generate multiple memory requests.

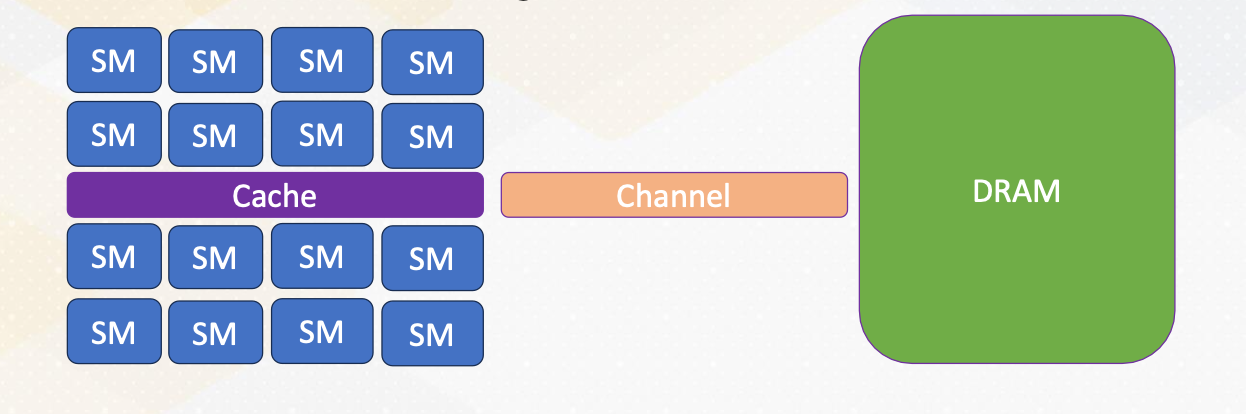

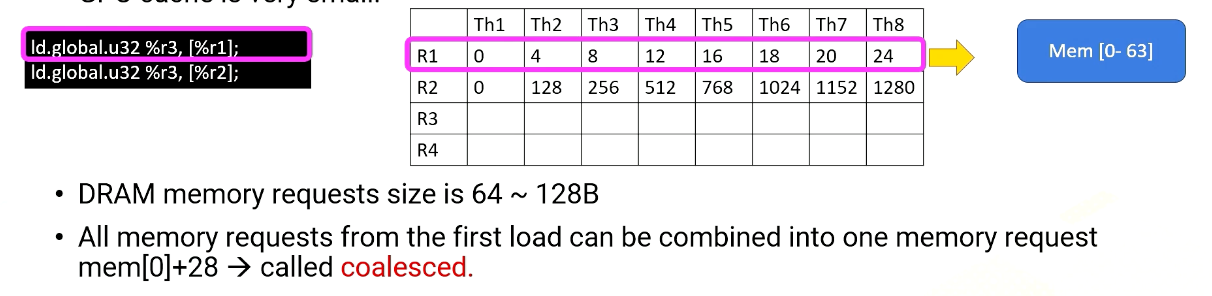

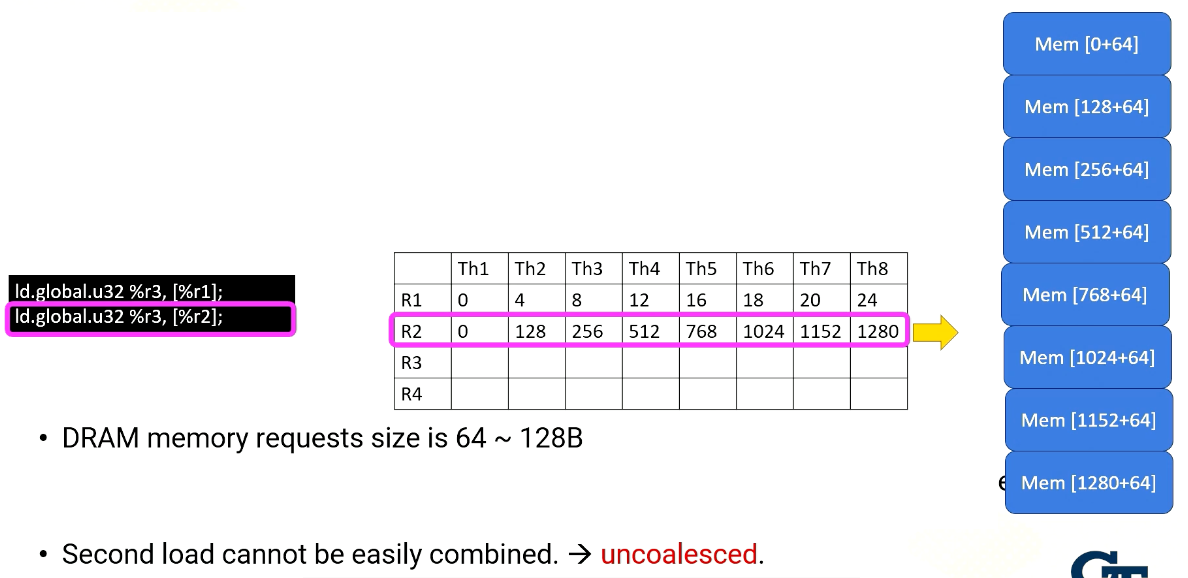

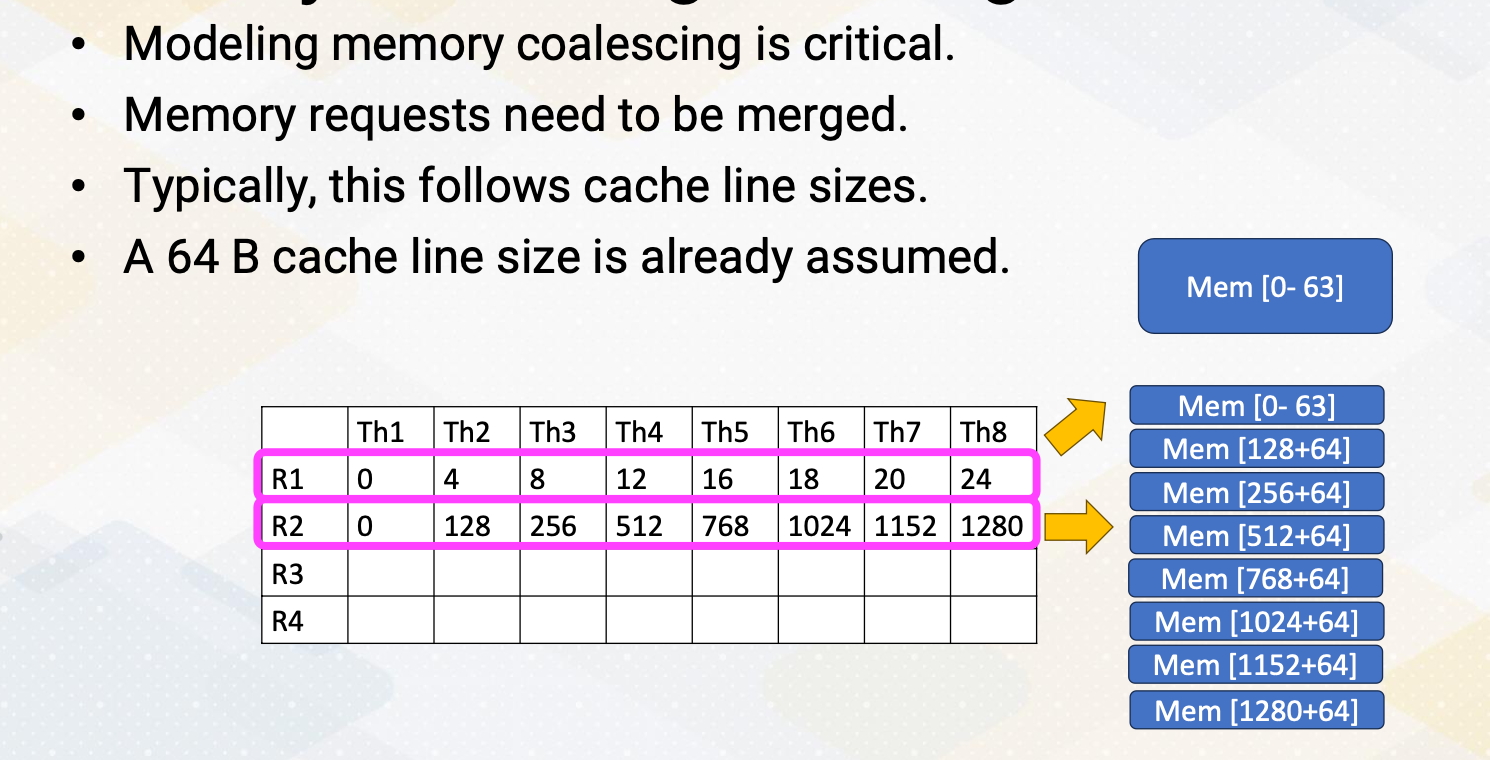

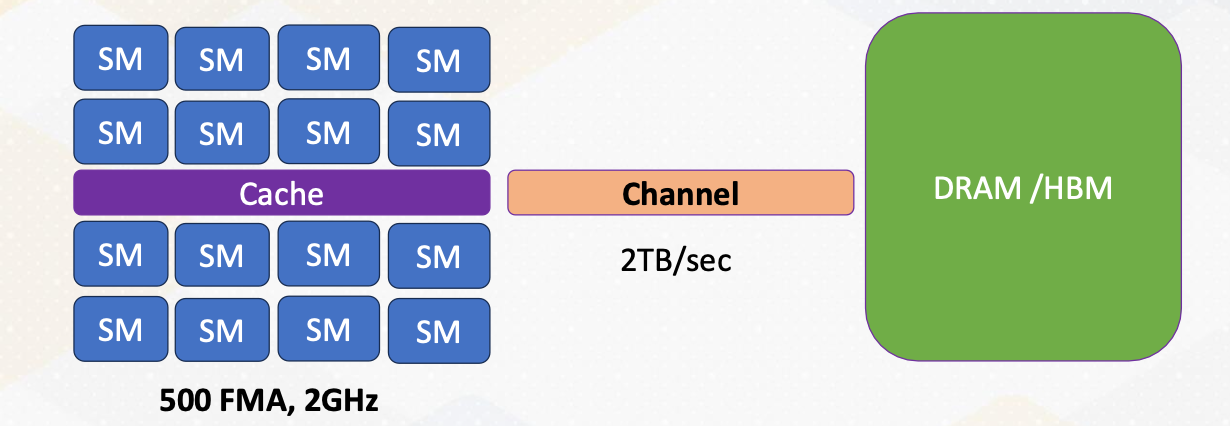

Global Memory accesses

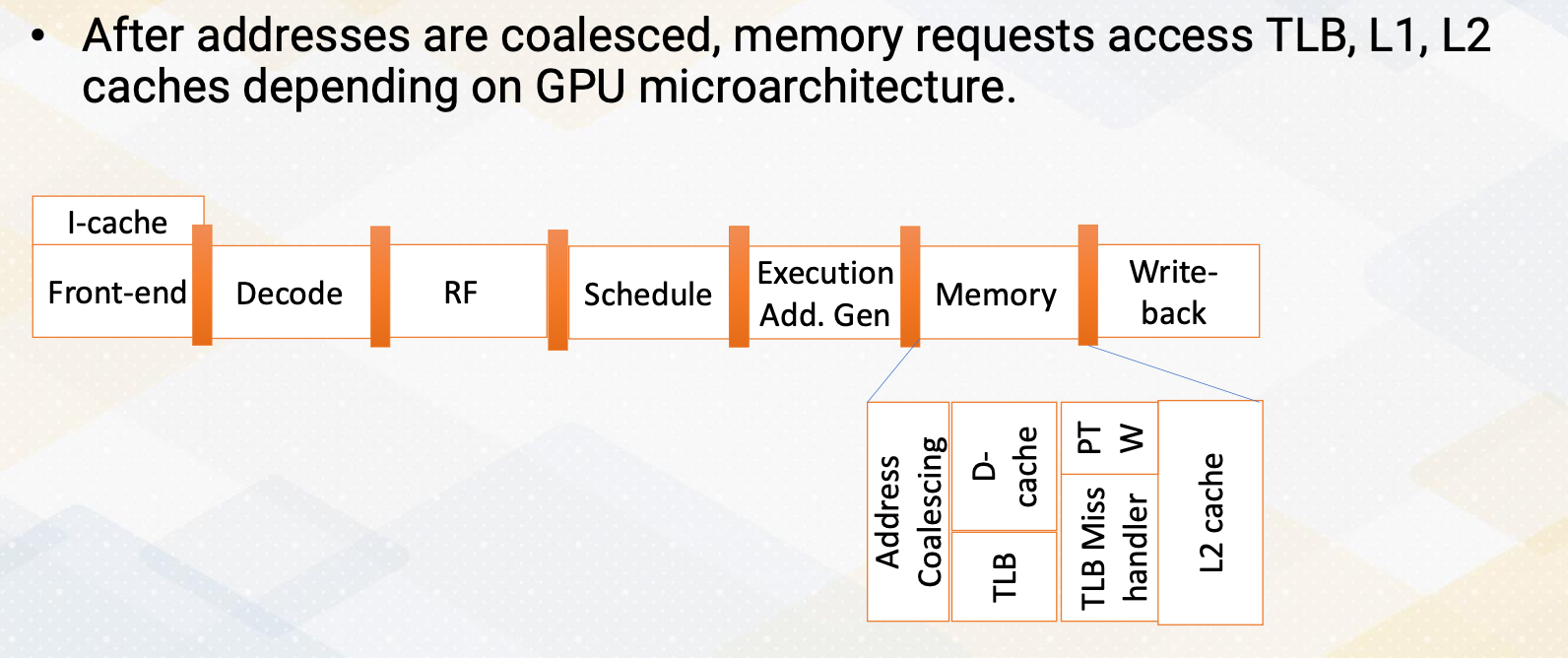

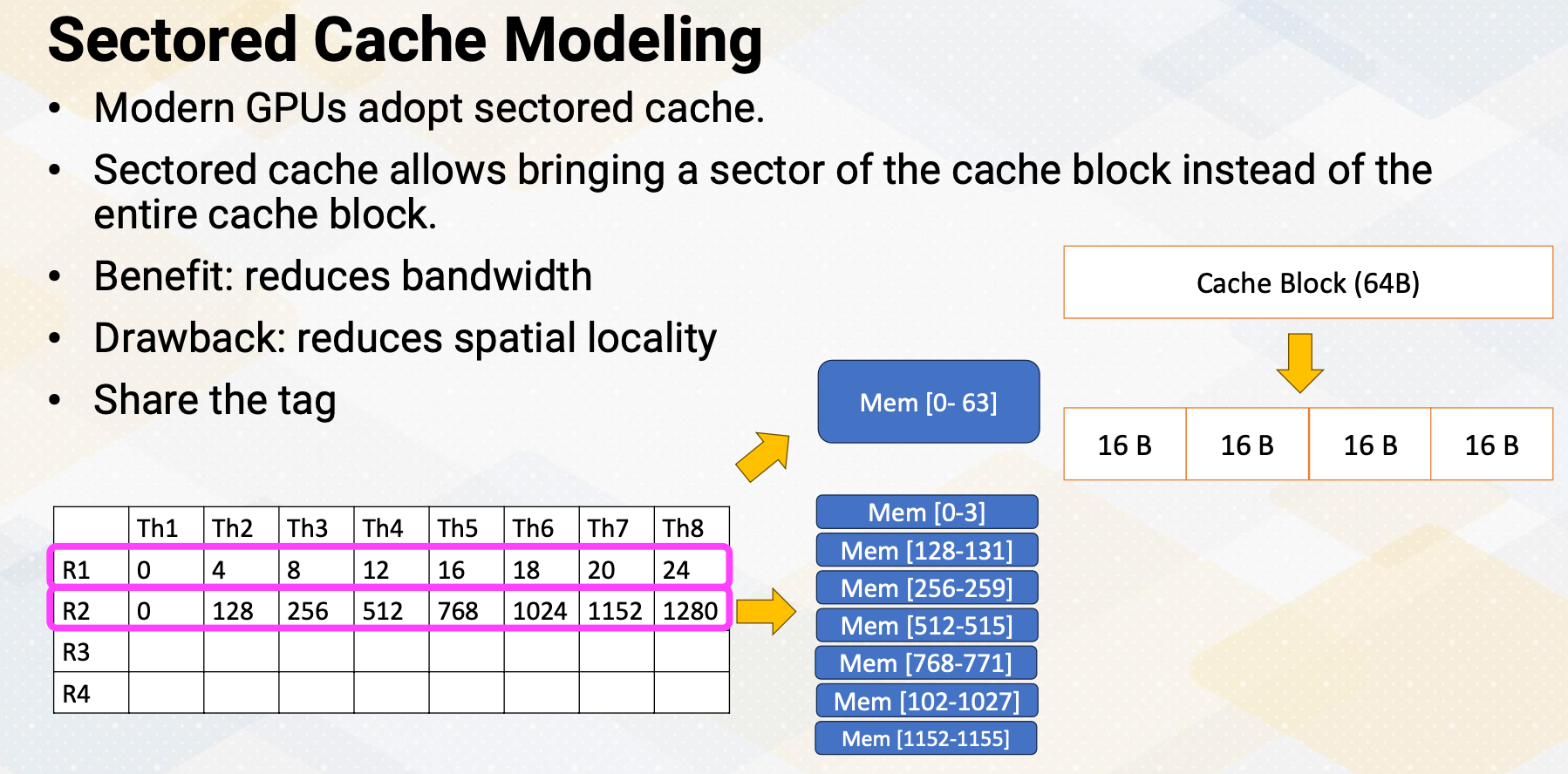

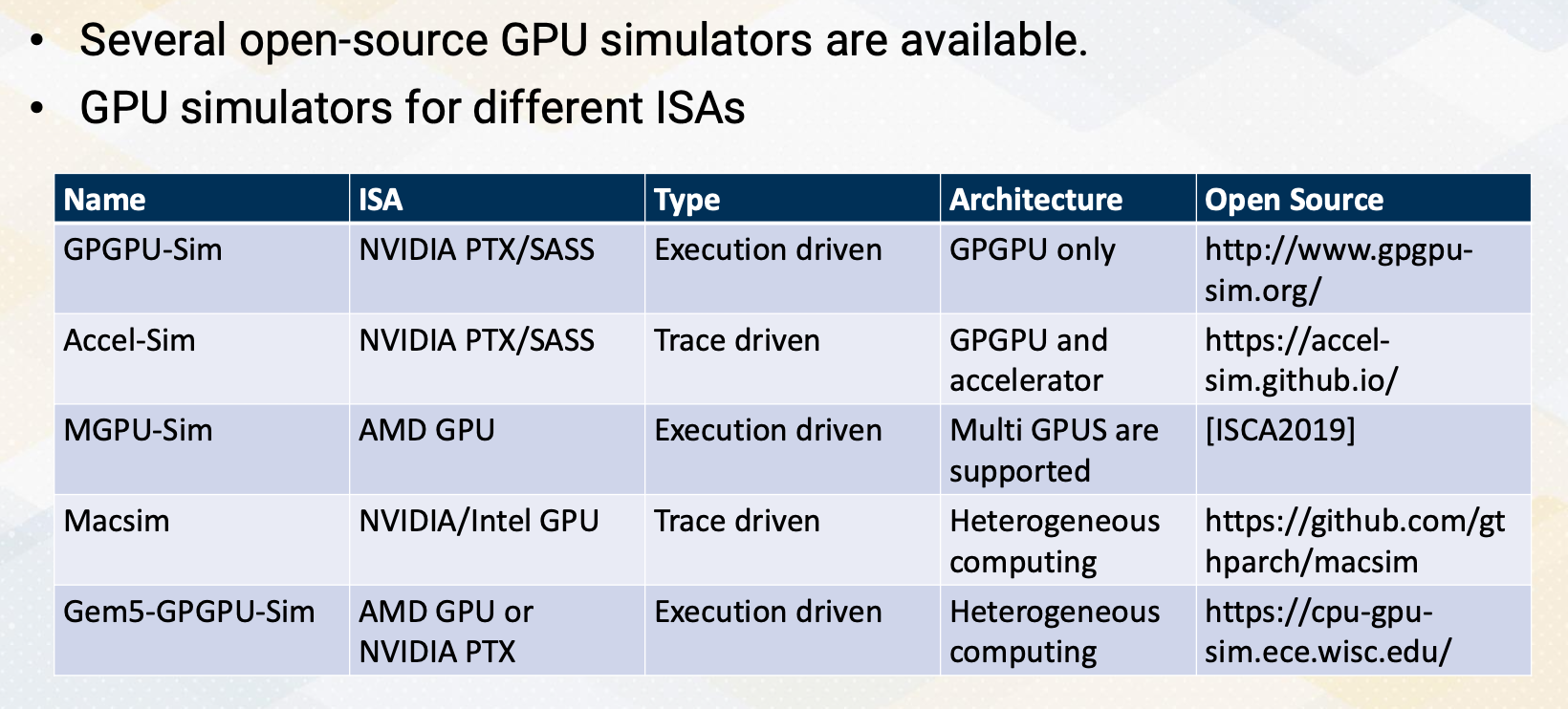

This slide illustrates a GPU and DRAM. In a GPU architecture, one memory instruction can generate many memory requests to DRAM, because one warp can generate up to 32 memory requests if we assume a warp size of 32. So the total number of memory requests can easily become large. For example, if we have 32 SMs and each SM executes one warp, then 32 $\times$ 32, in other words 1024, requests can be generated in one cycle. Each memory request is 64 bytes, so that is 64 kilobytes per cycle, and if we assume a 1 GHz GPU, about 64 terabytes per second of memory bandwidth is needed.
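The bandwidth estimate above works out as follows. This is a back-of-the-envelope sketch with a hypothetical function name. It assumes the worst case in which every thread generates its own request, and it mixes binary kilobytes with decimal terabytes, which is why the result is roughly rather than exactly 64 TB/s.

```python
def required_bandwidth(num_sms, warp_size, bytes_per_request, clock_hz):
    requests_per_cycle = num_sms * warp_size  # one warp per SM, one request per thread
    bytes_per_cycle = requests_per_cycle * bytes_per_request
    return requests_per_cycle, bytes_per_cycle, bytes_per_cycle * clock_hz

reqs, per_cycle, per_second = required_bandwidth(32, 32, 64, 1_000_000_000)
print(reqs)               # 1024 requests per cycle
print(per_cycle // 1024)  # 64 (kilobytes per cycle)
print(per_second / 1e12)  # 65.536, i.e. roughly 64 TB/s
```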

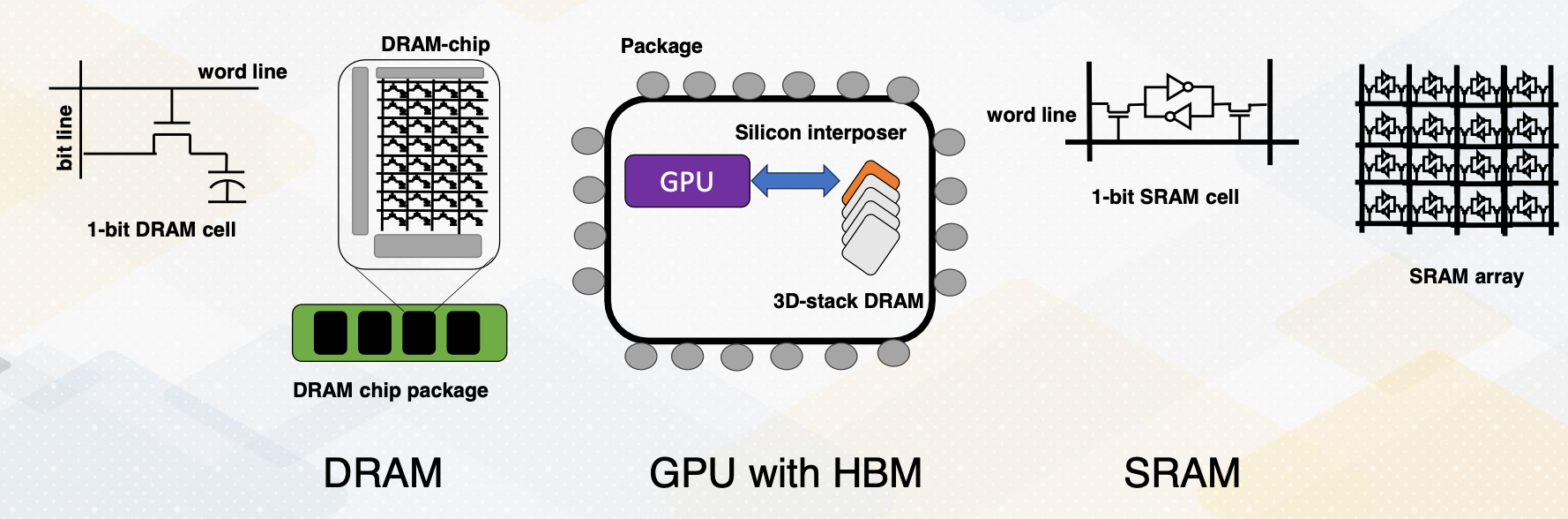

Side Bar: DRAM vs. SRAM

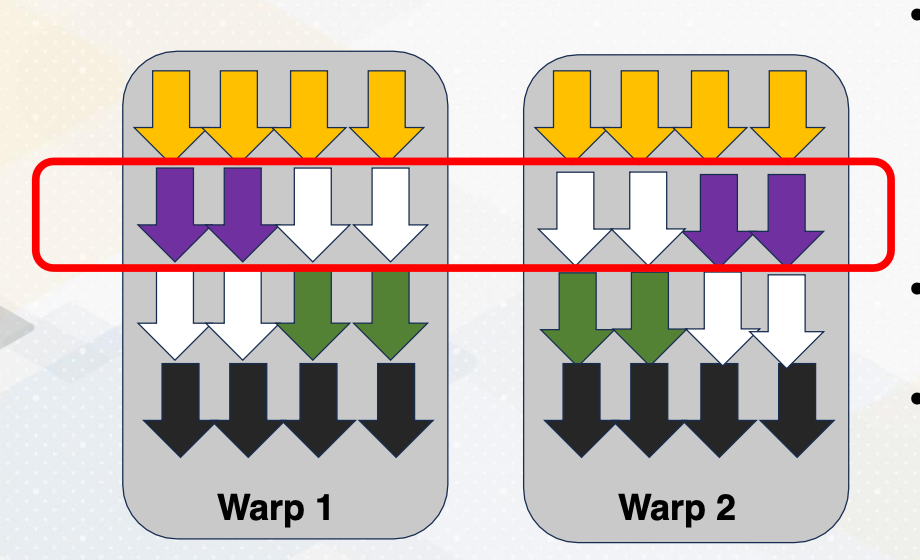

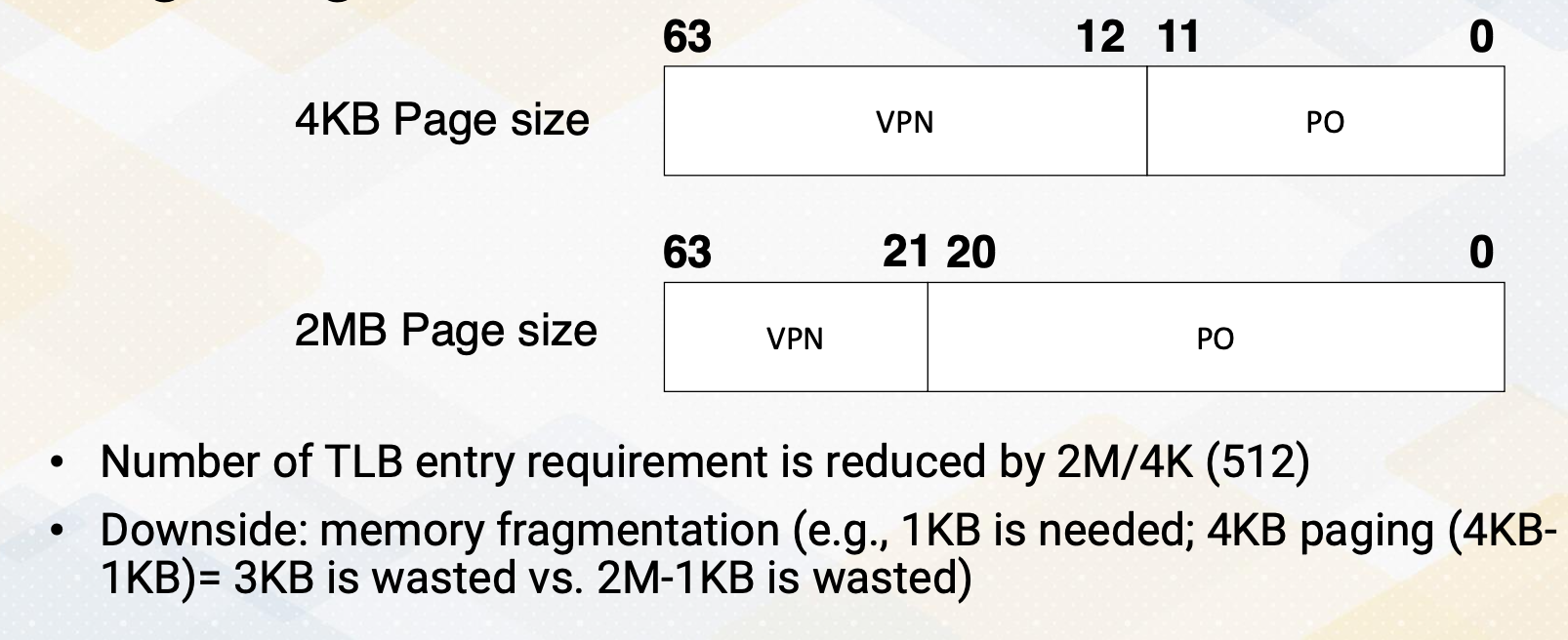

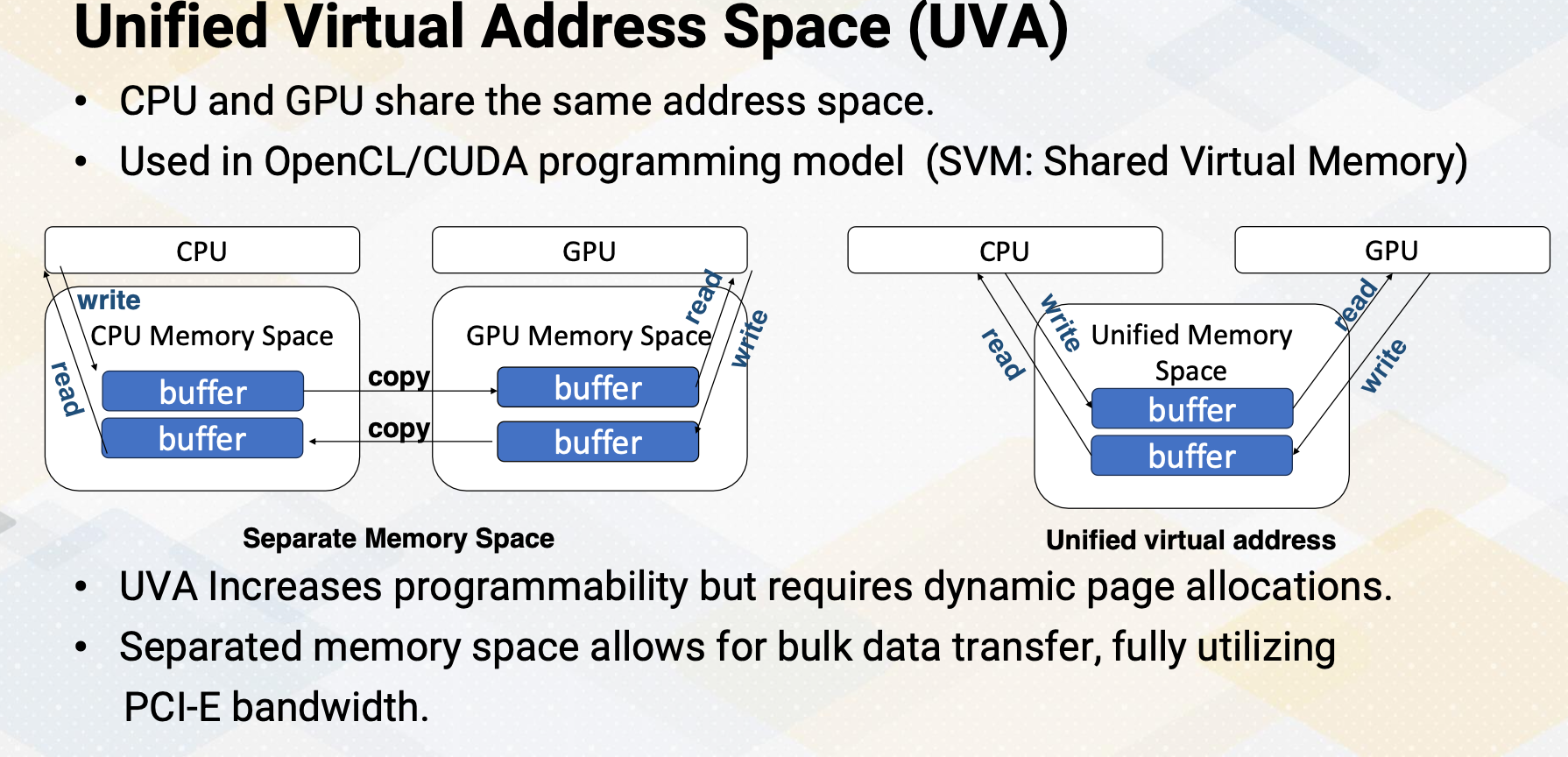

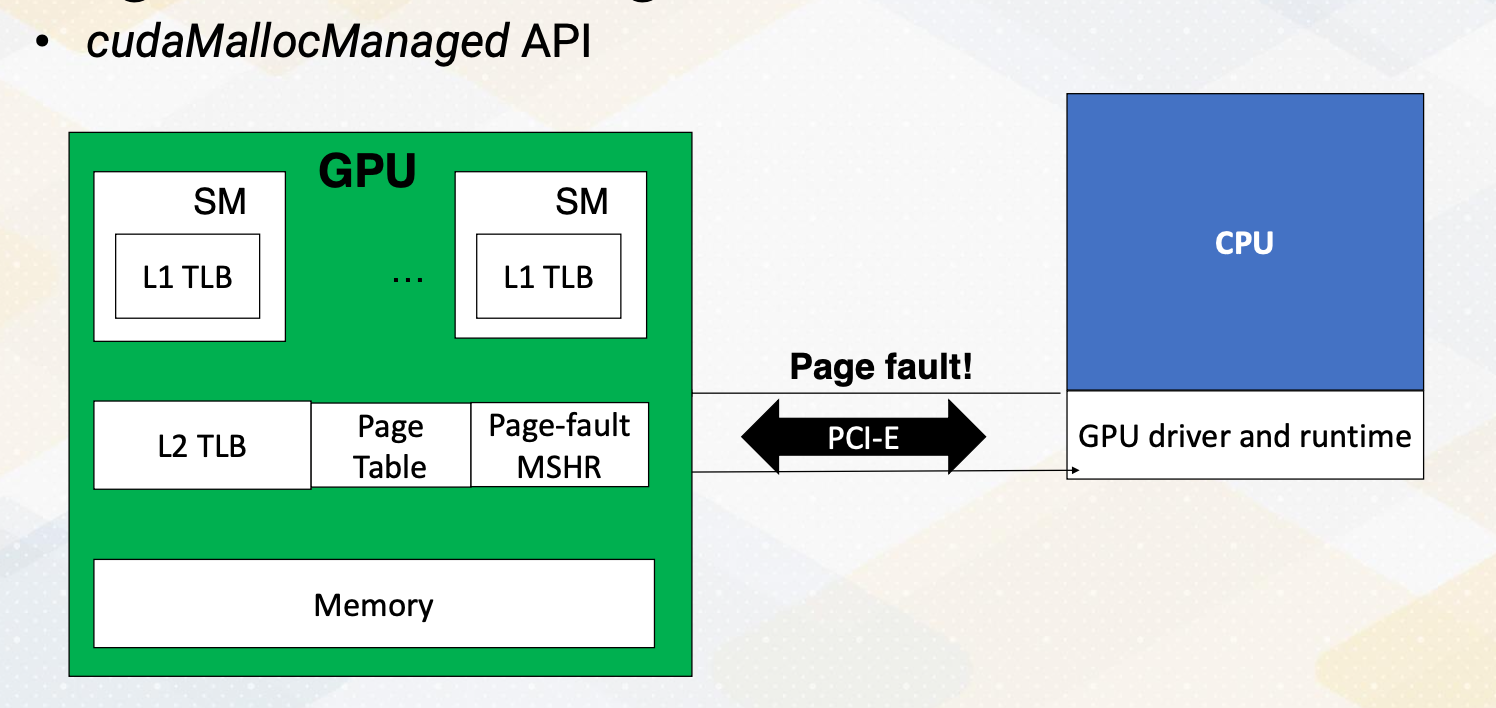

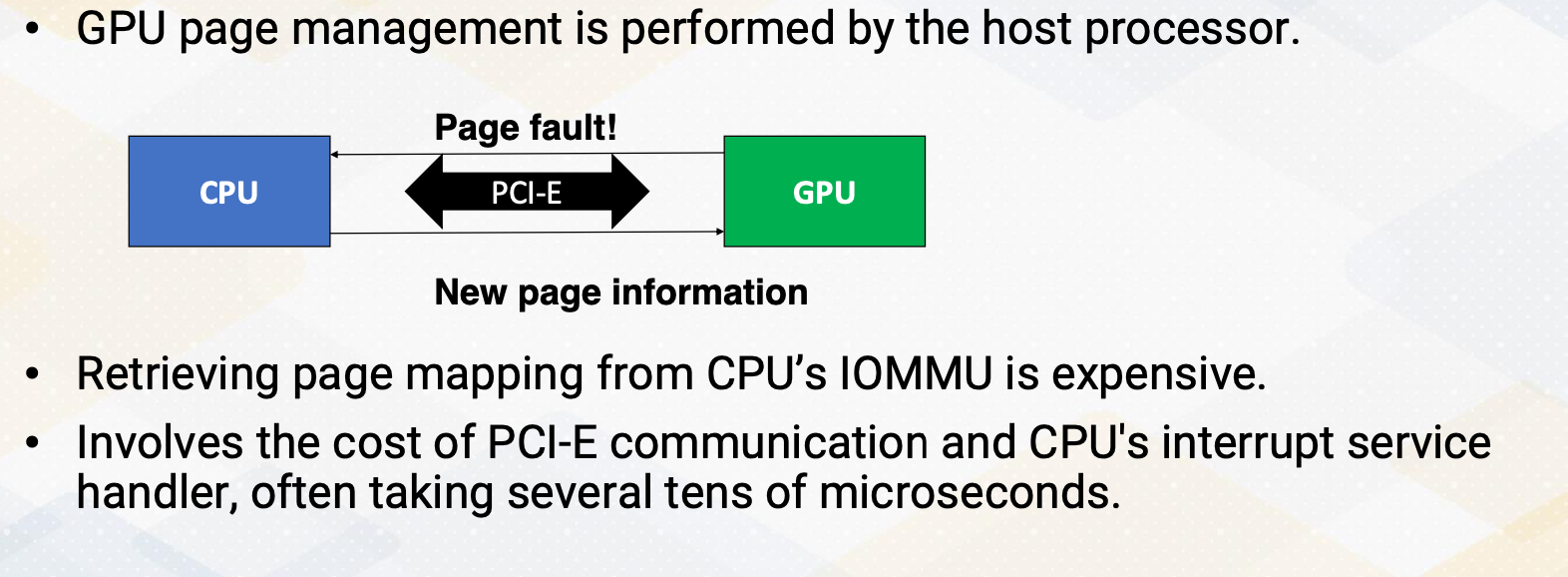

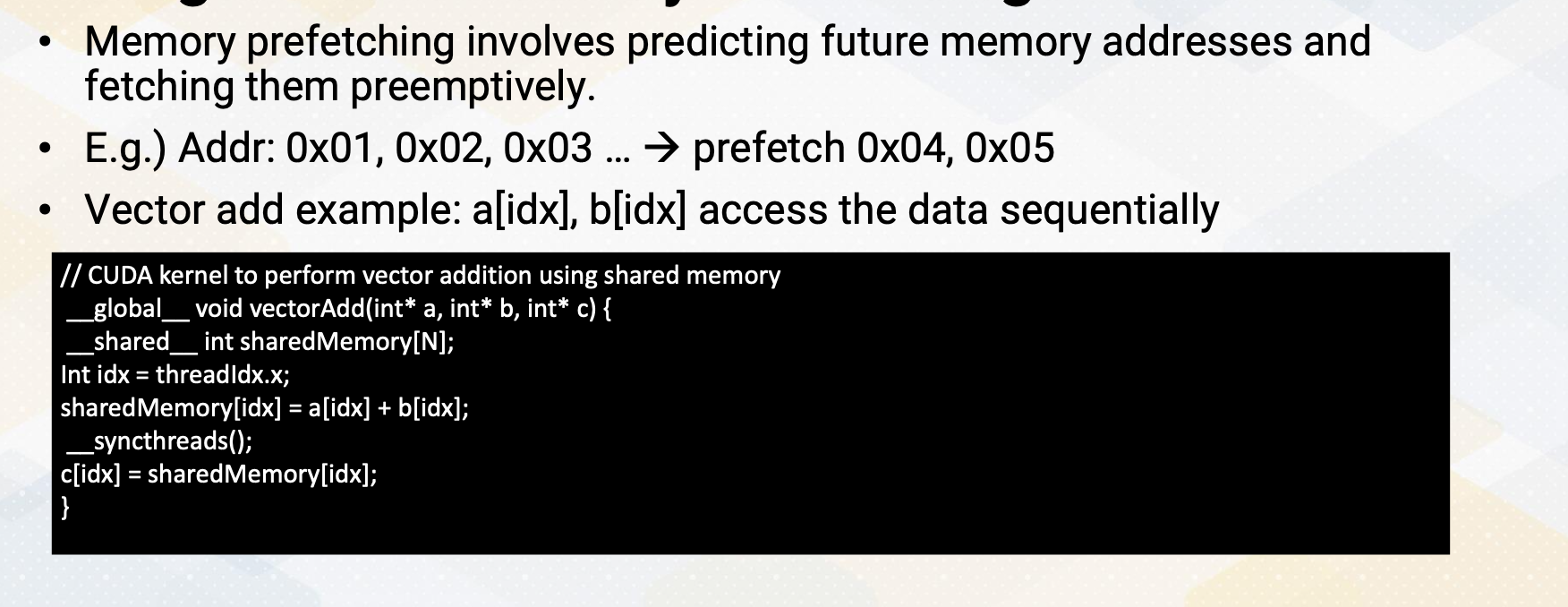

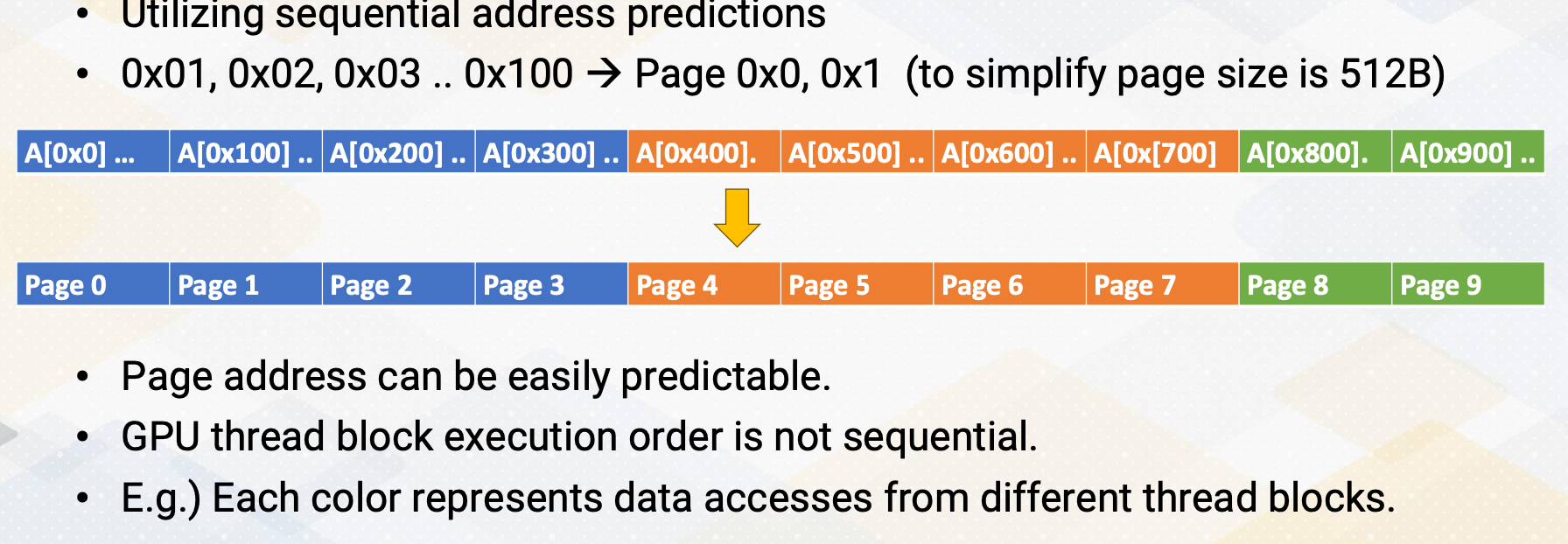

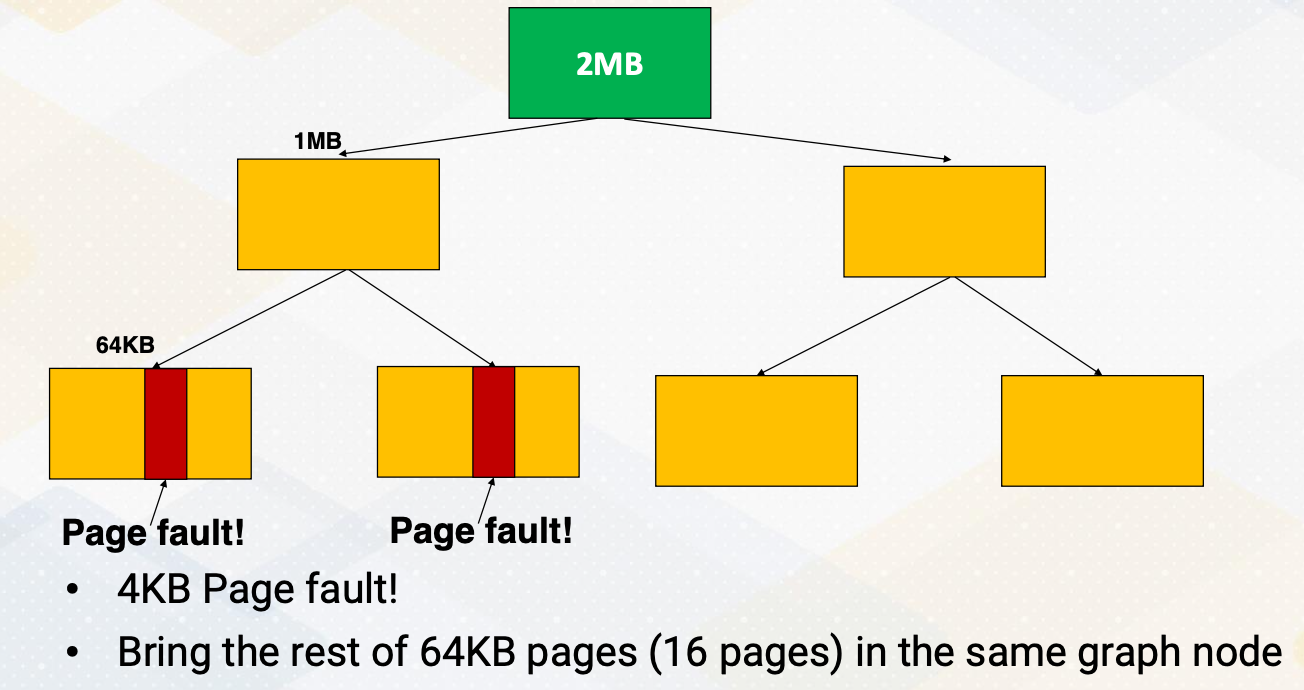

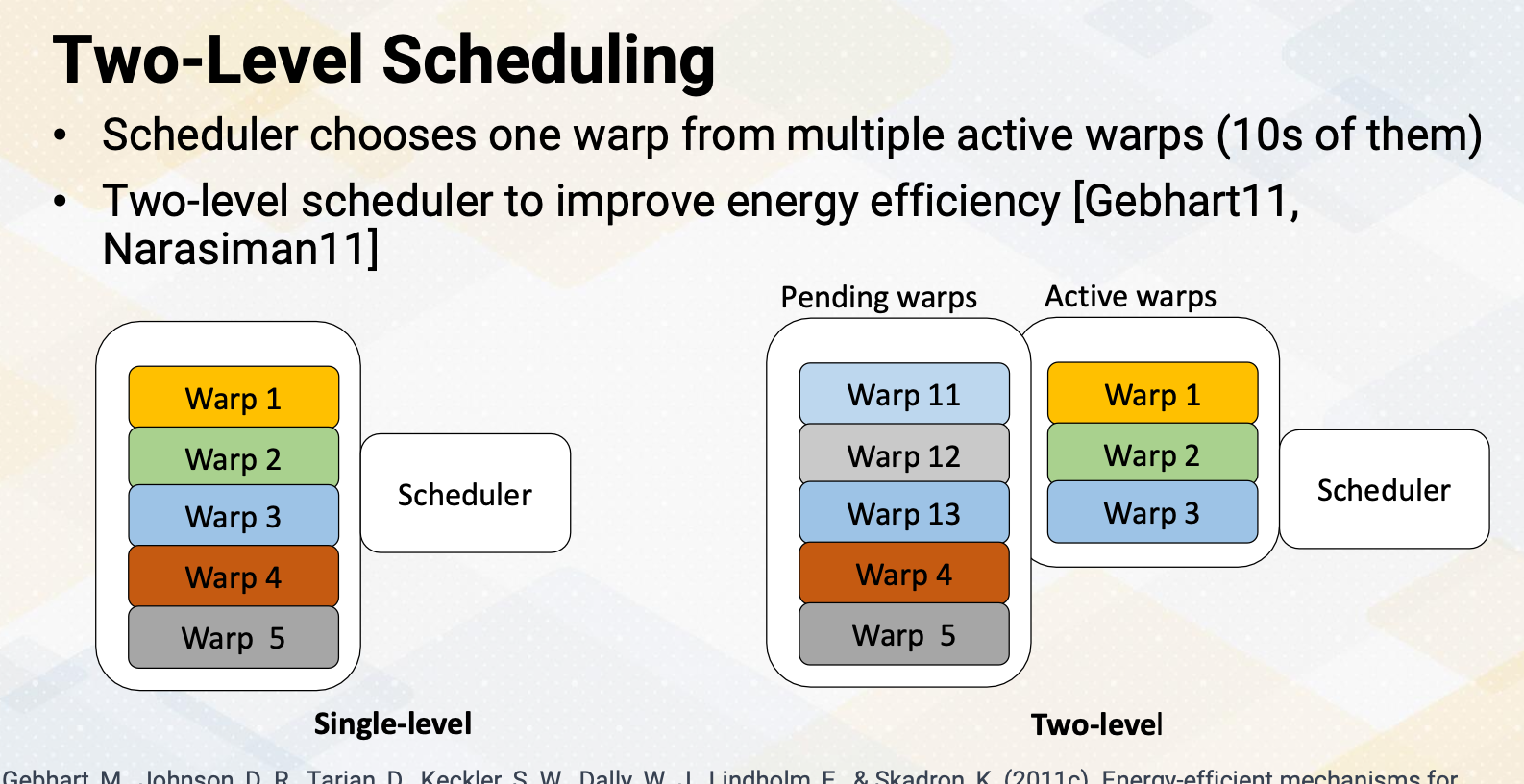

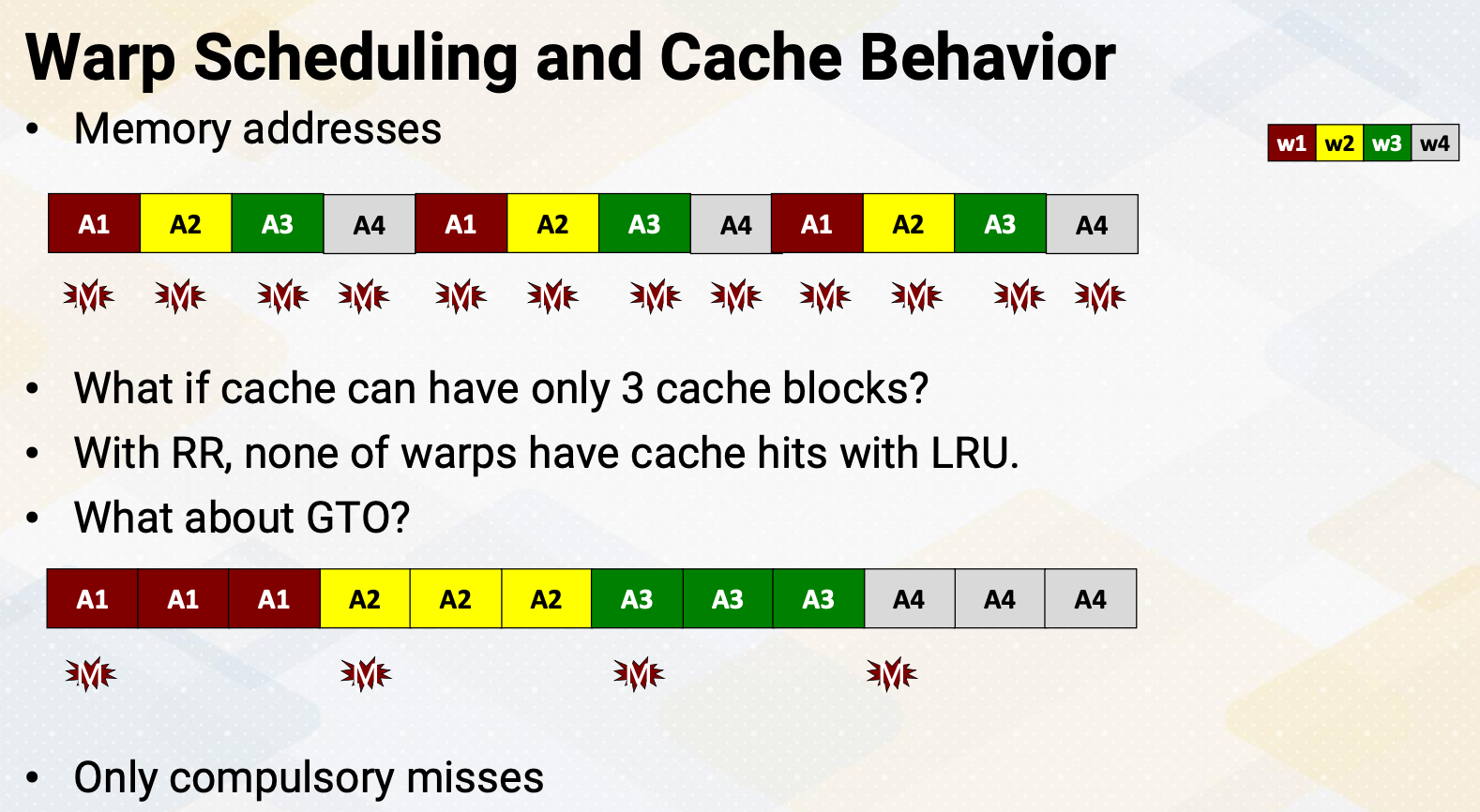

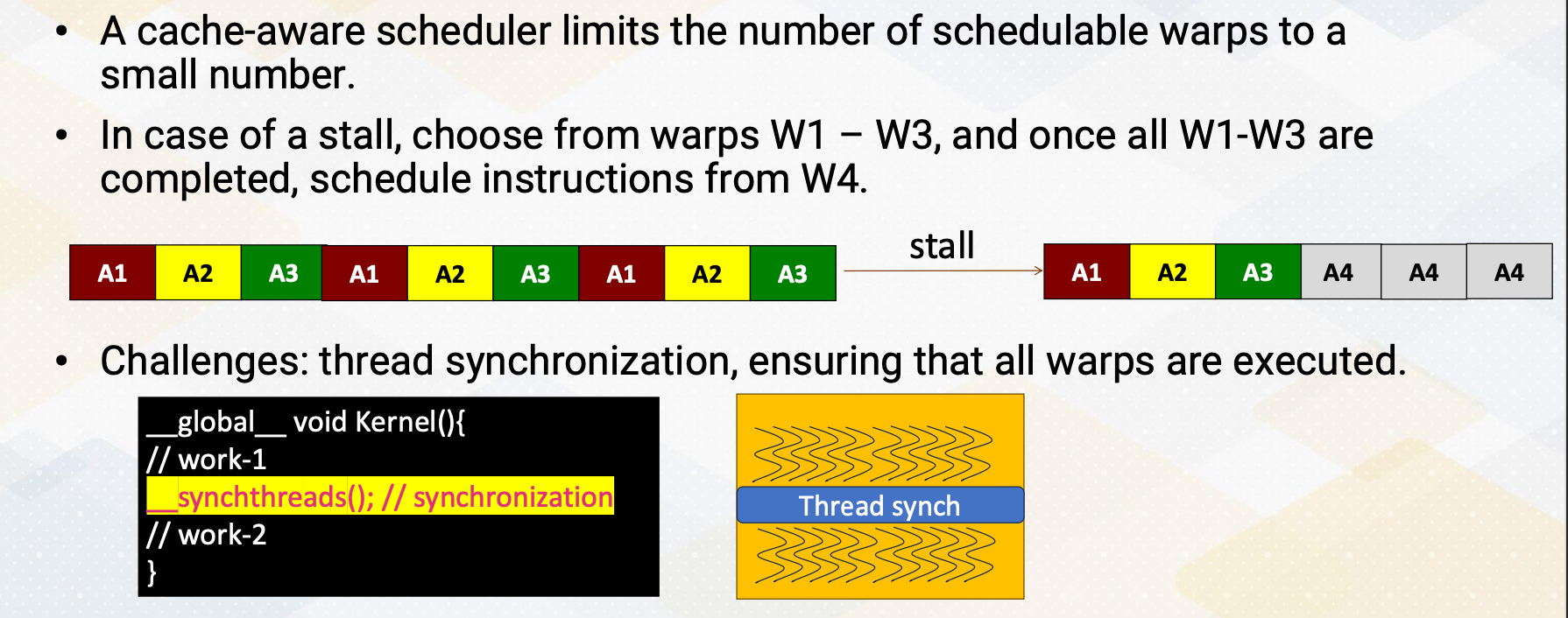

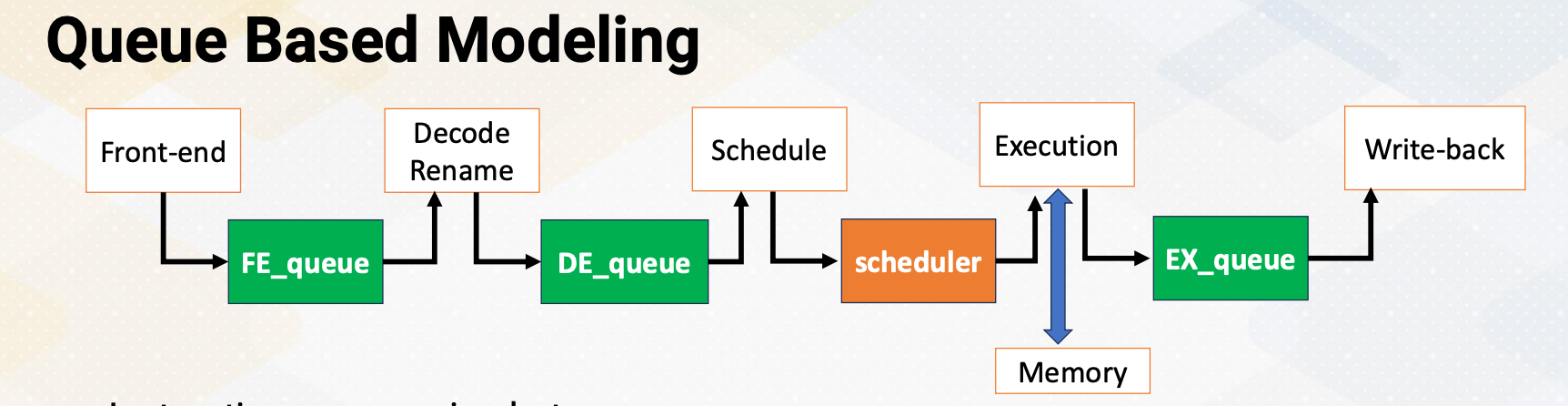

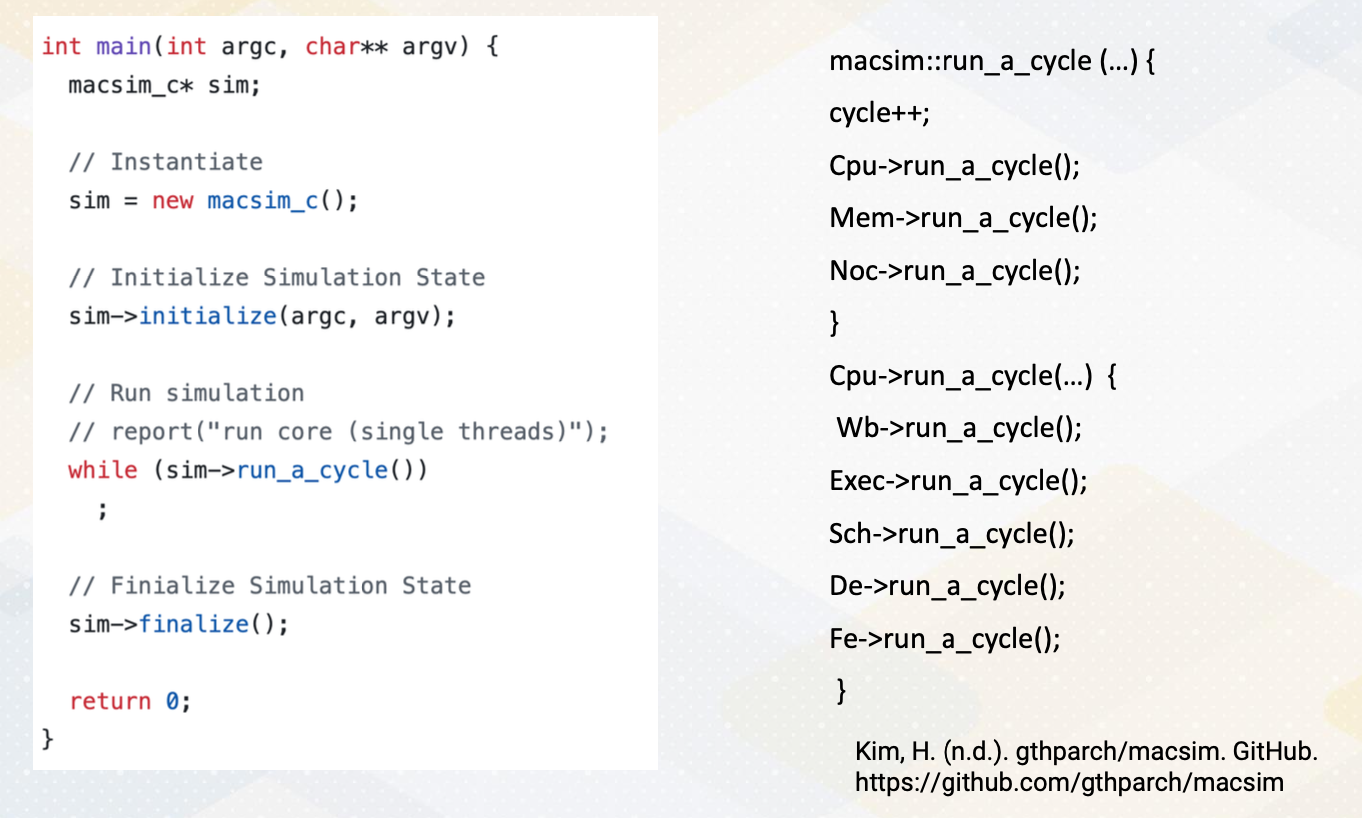

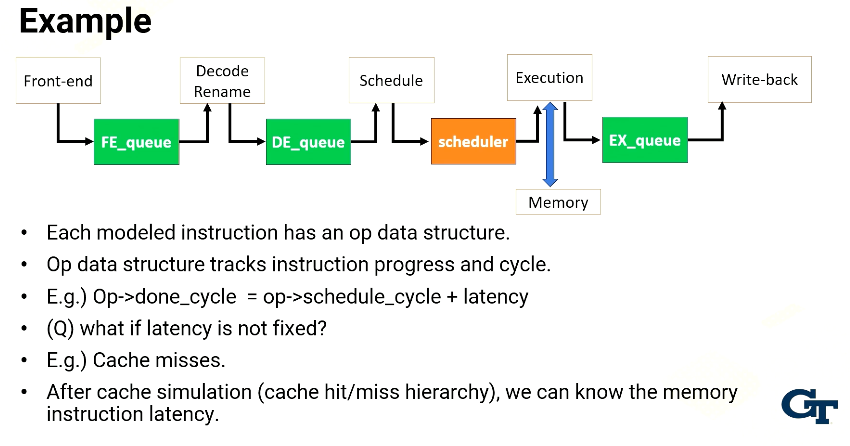

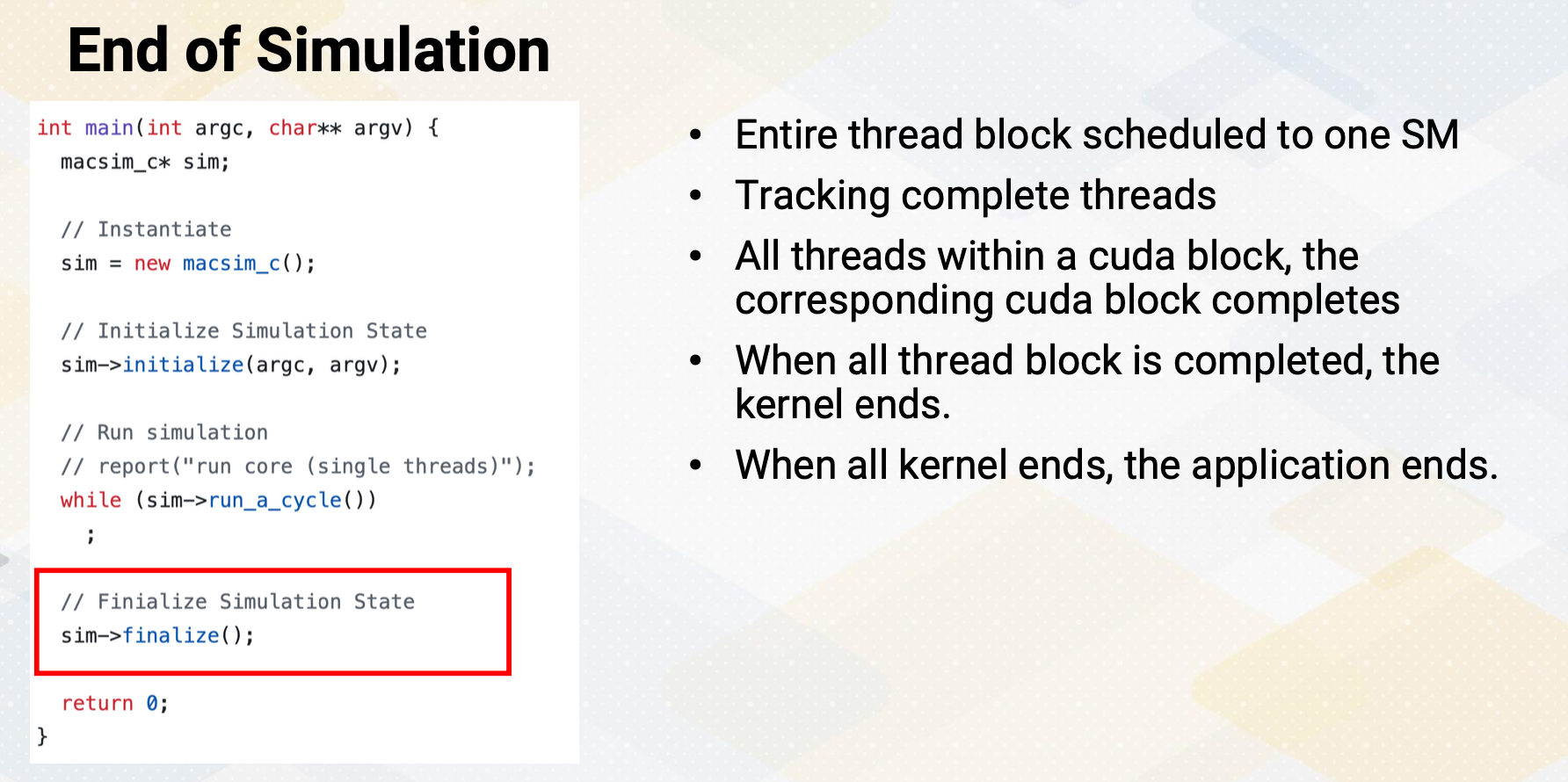

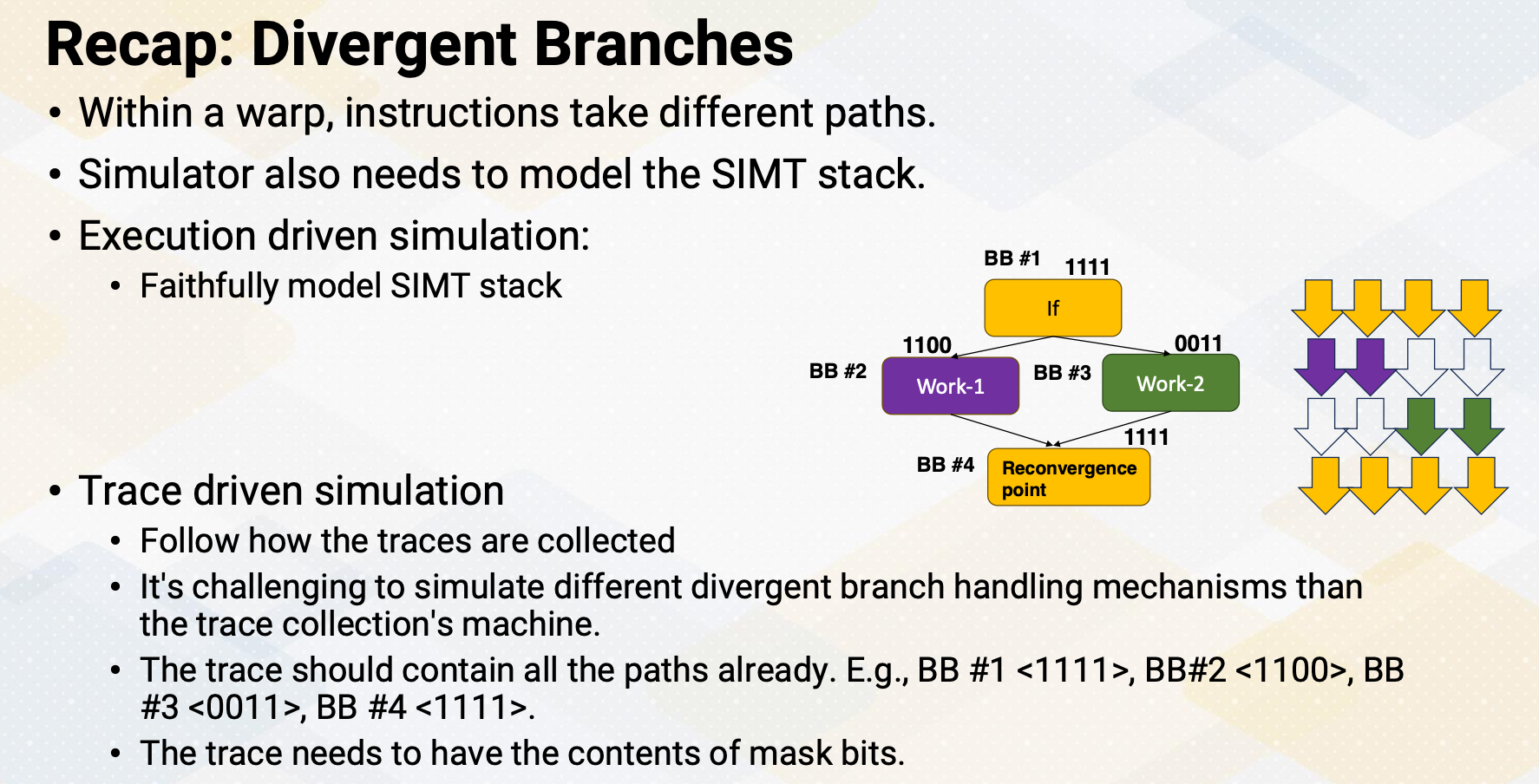

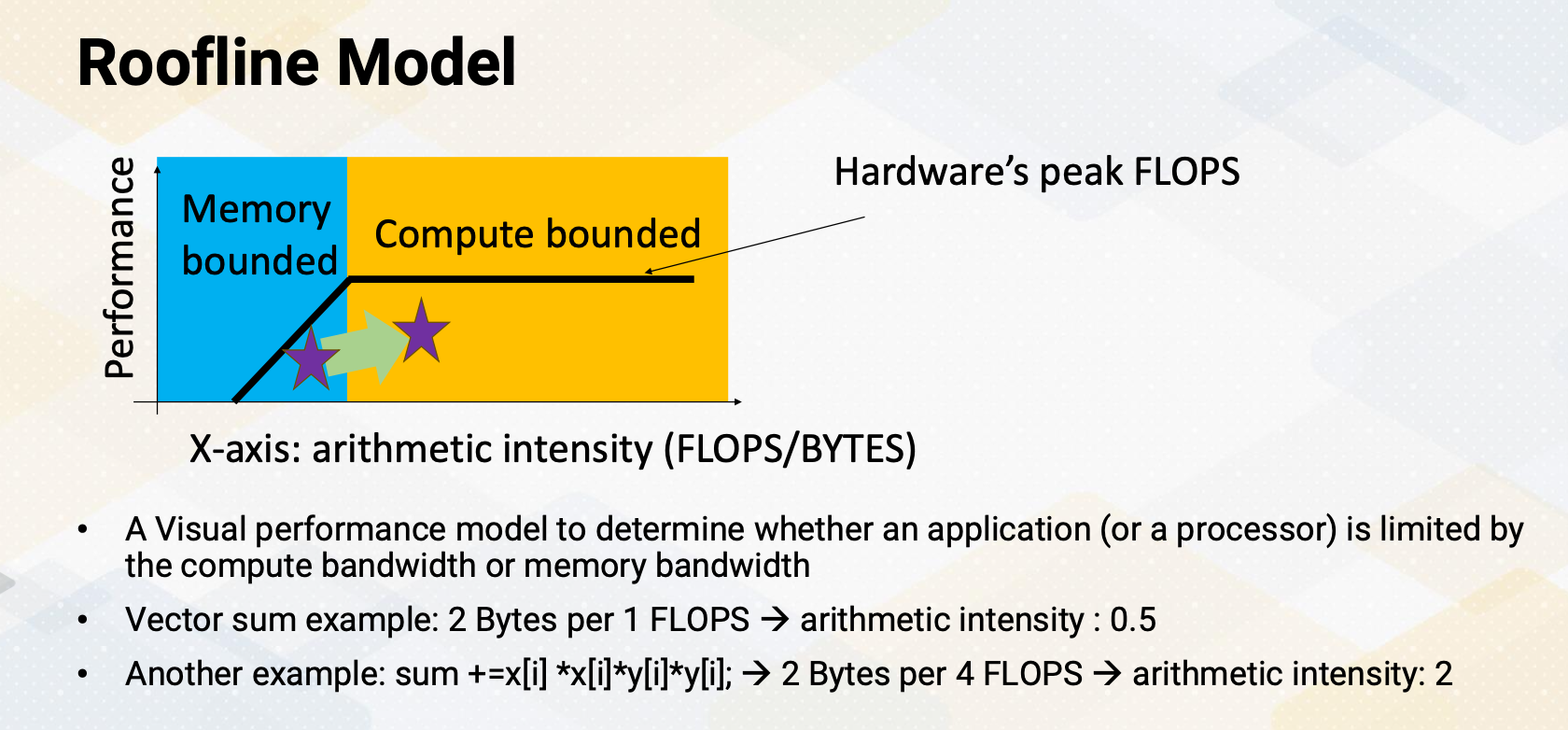

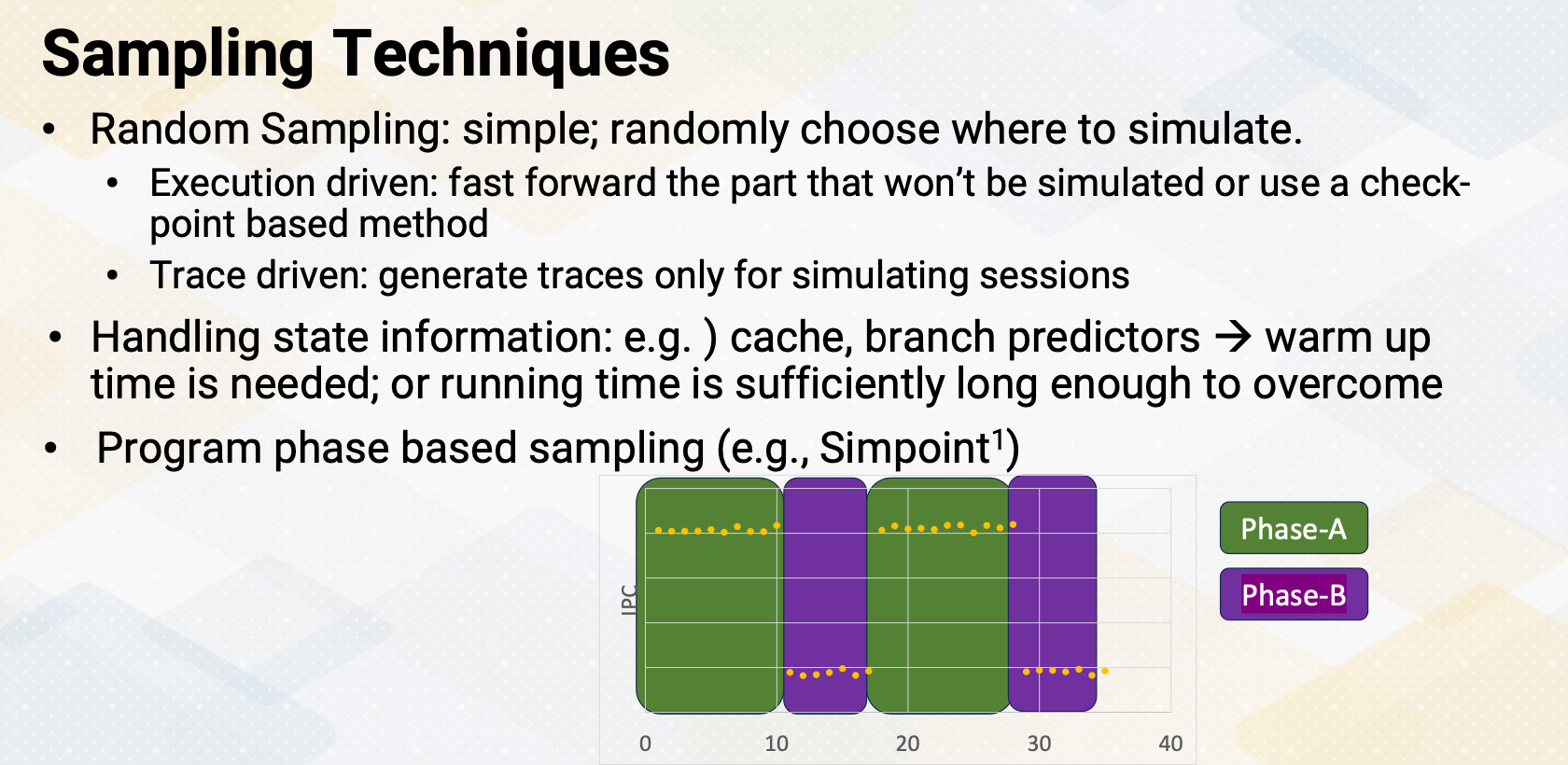

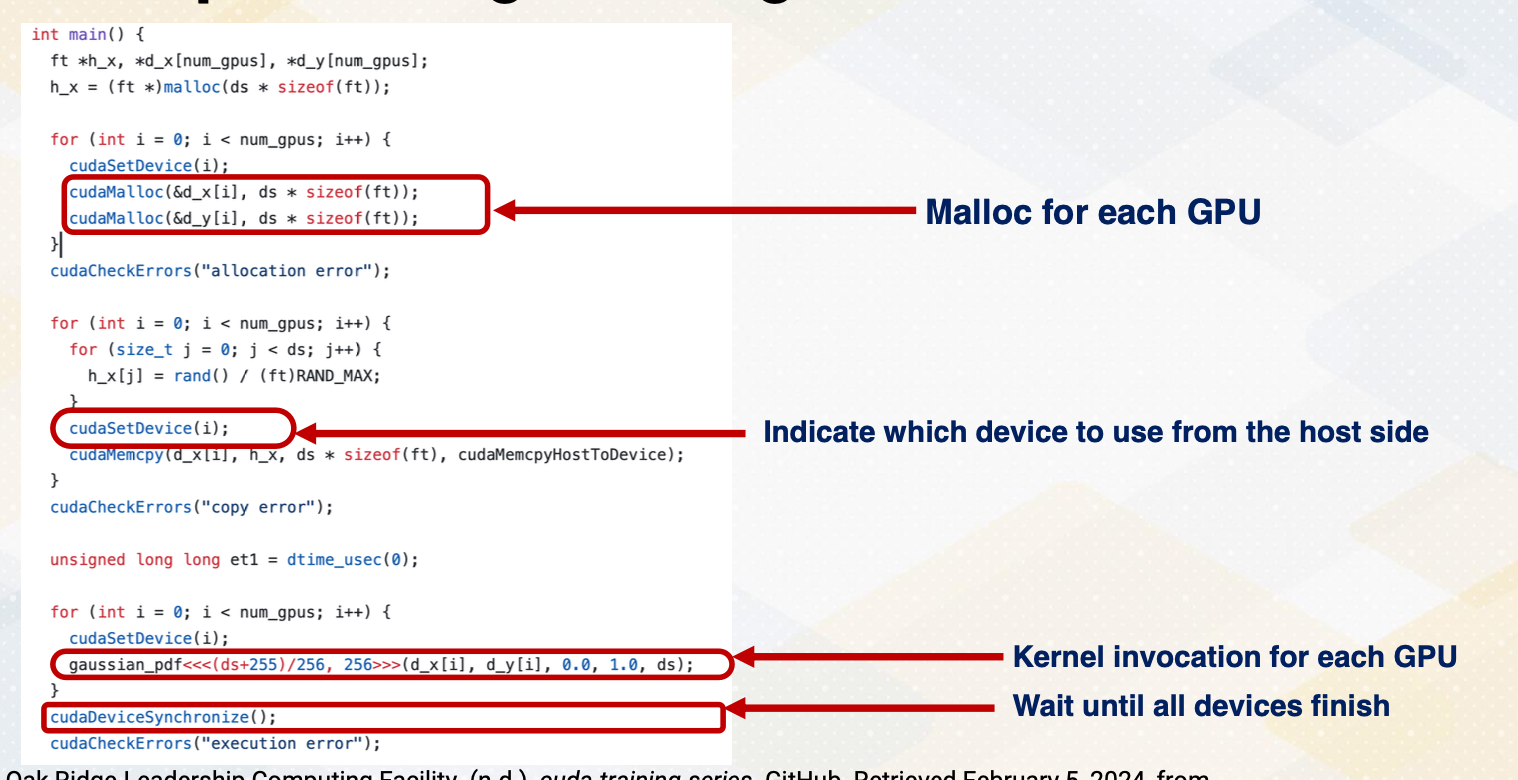

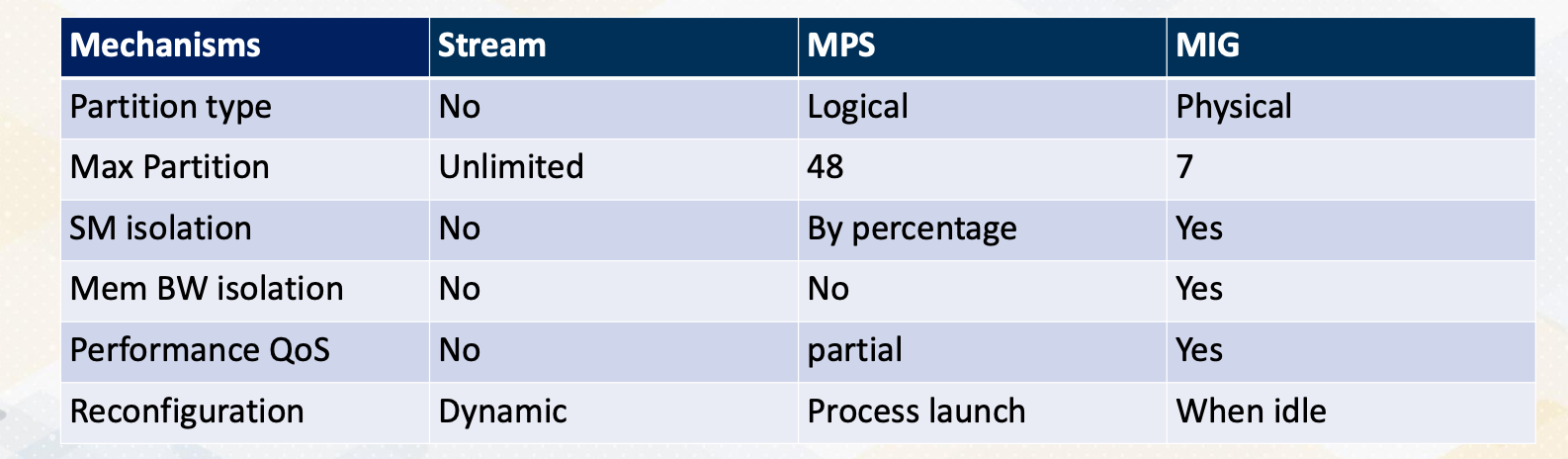

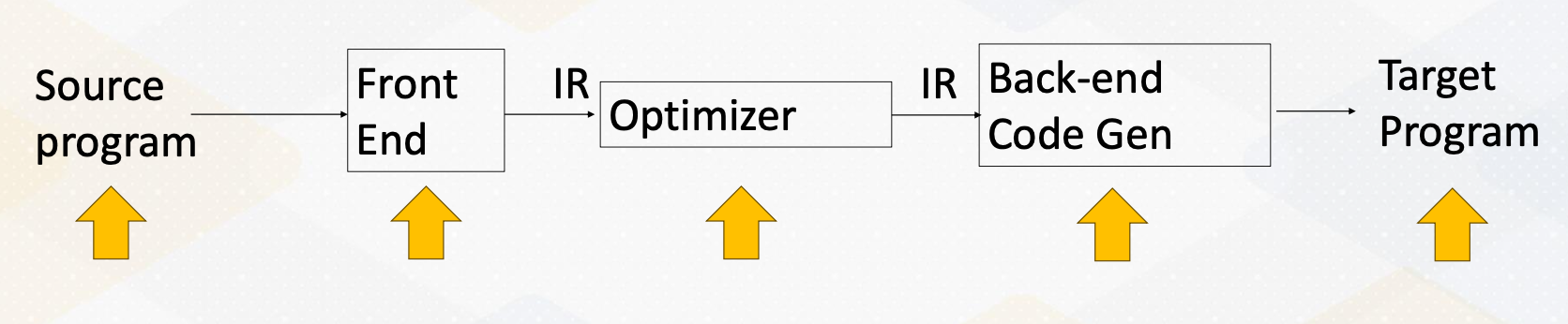

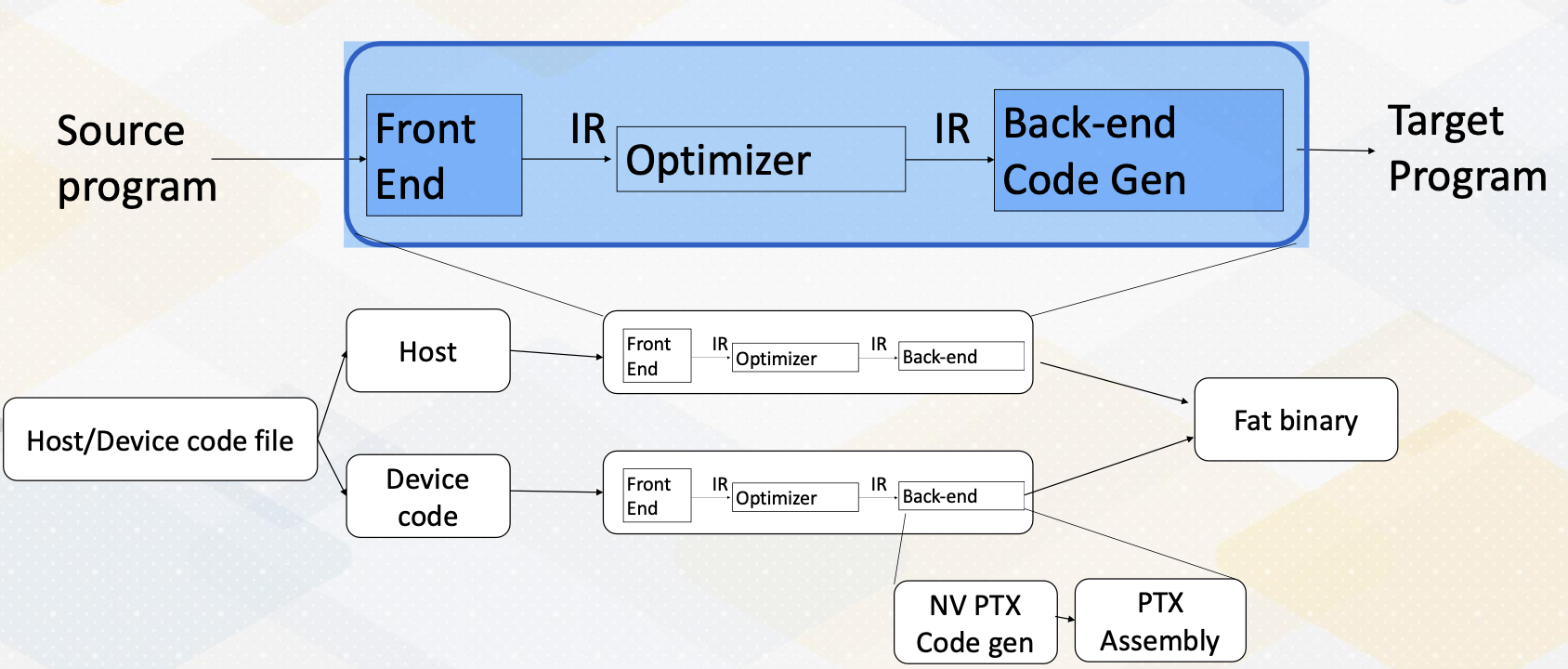

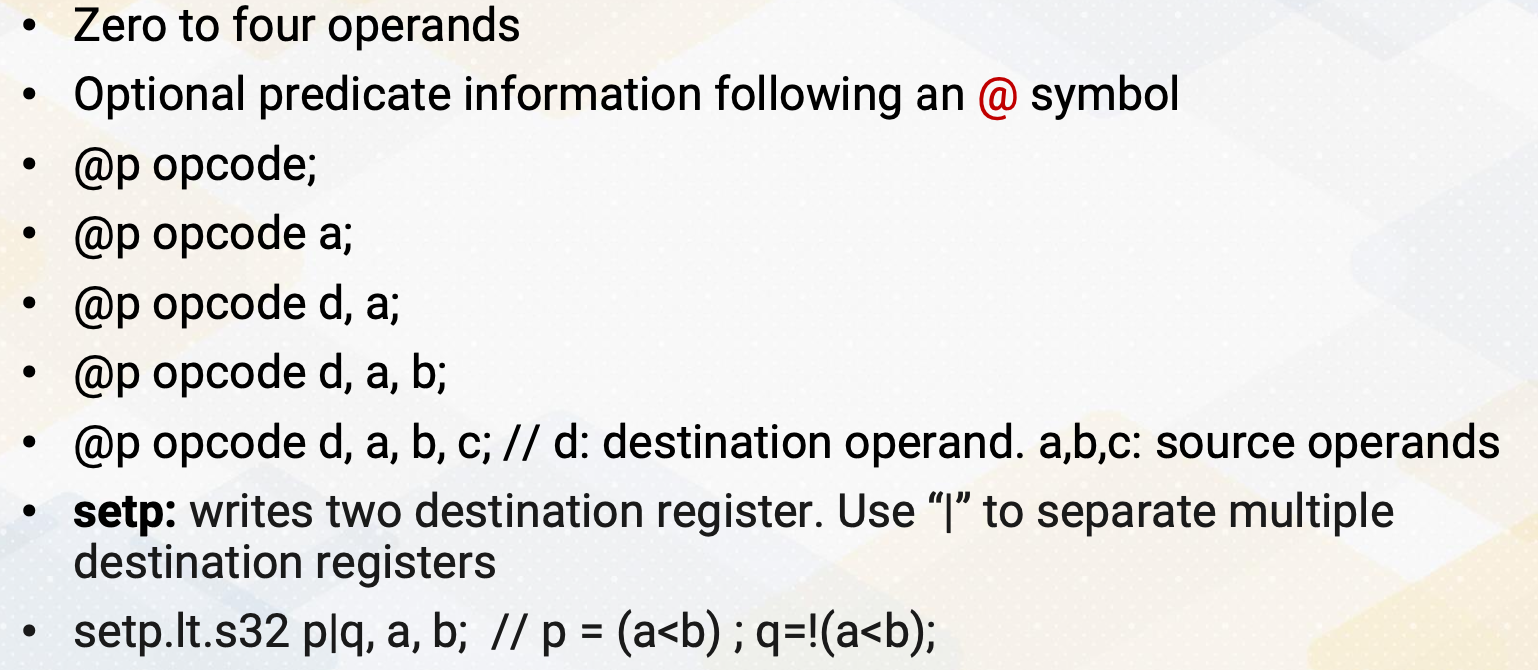

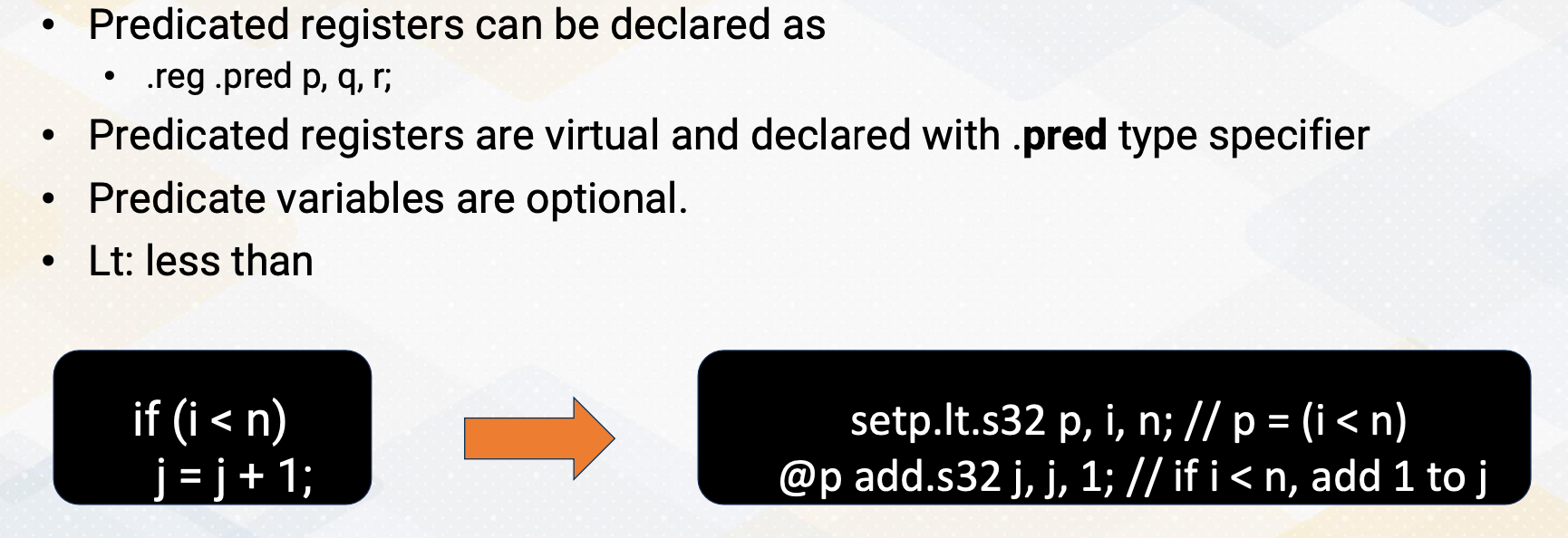

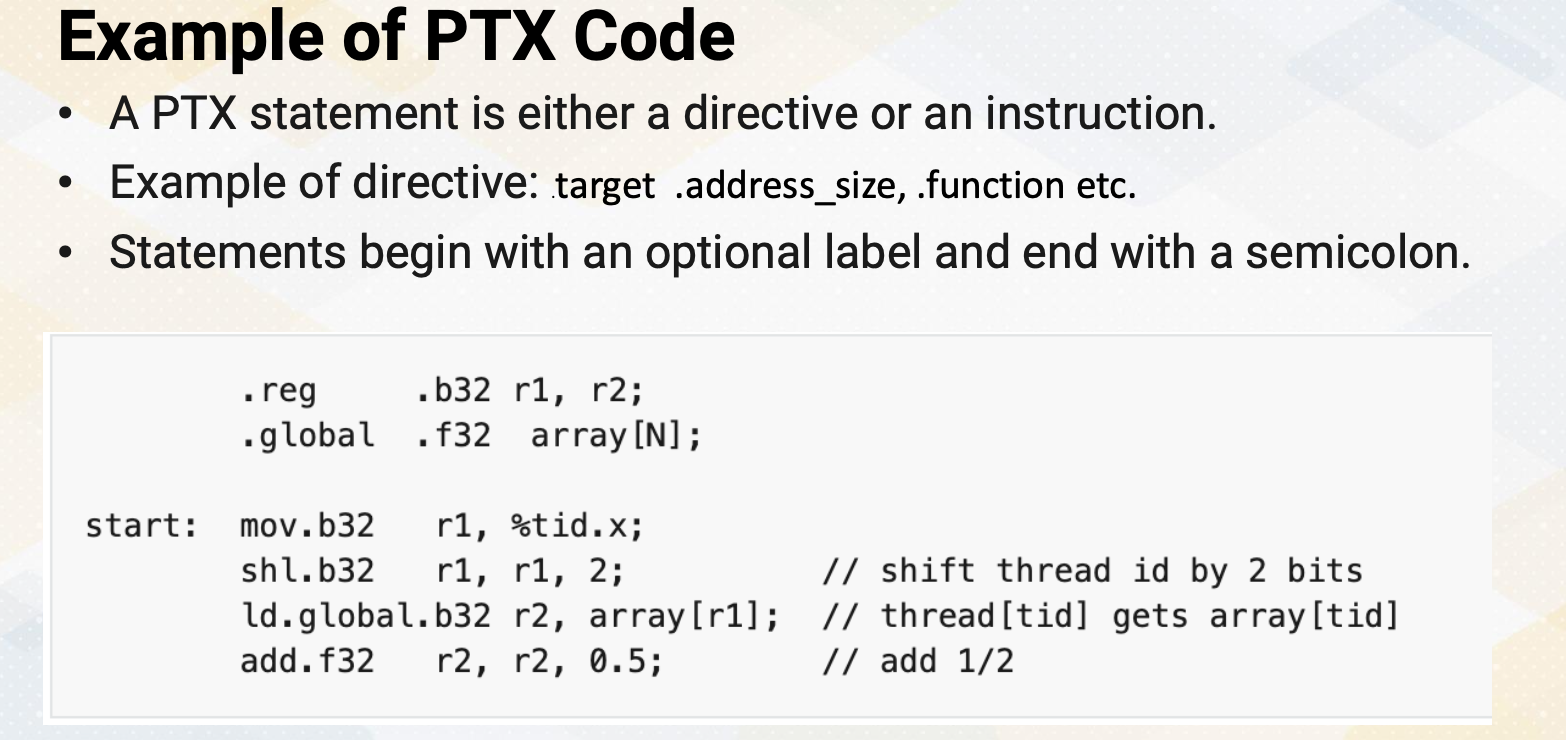

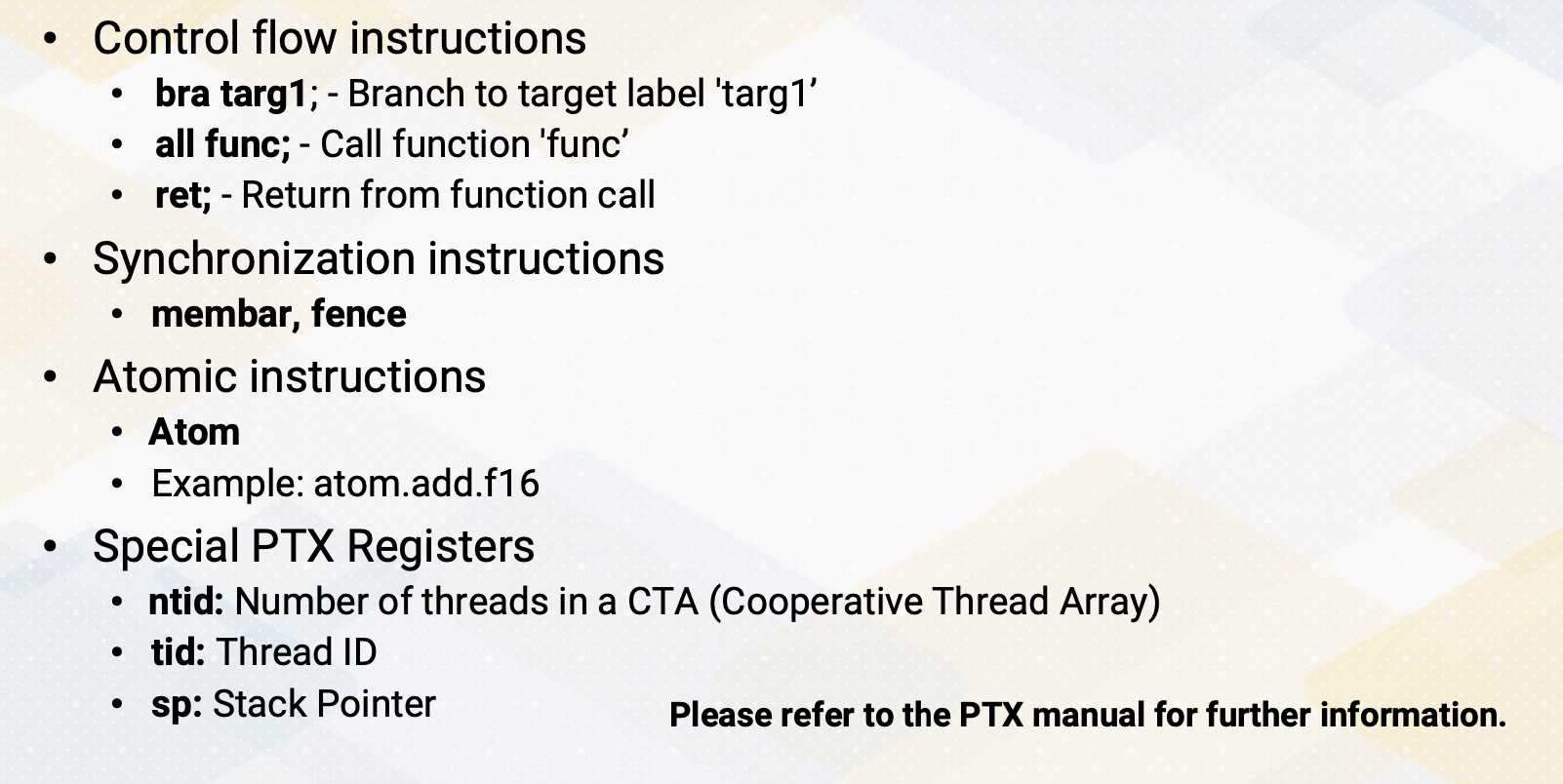

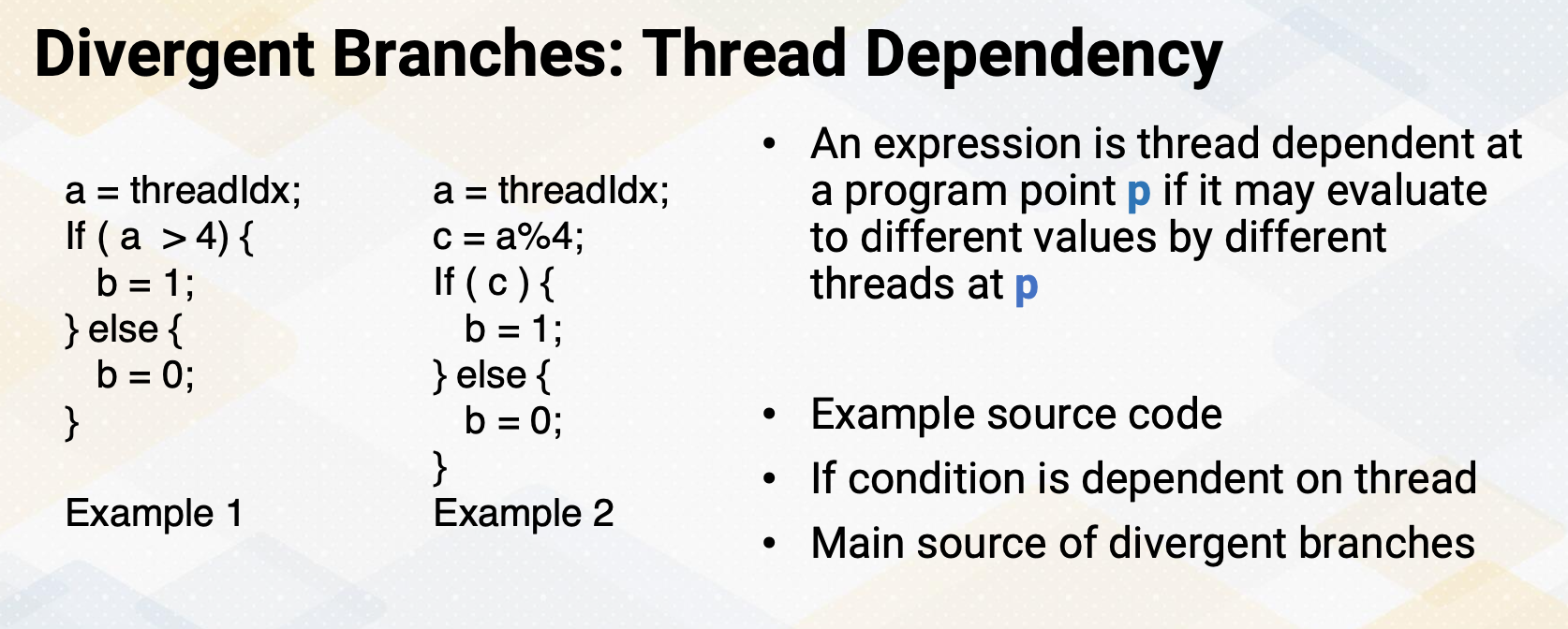

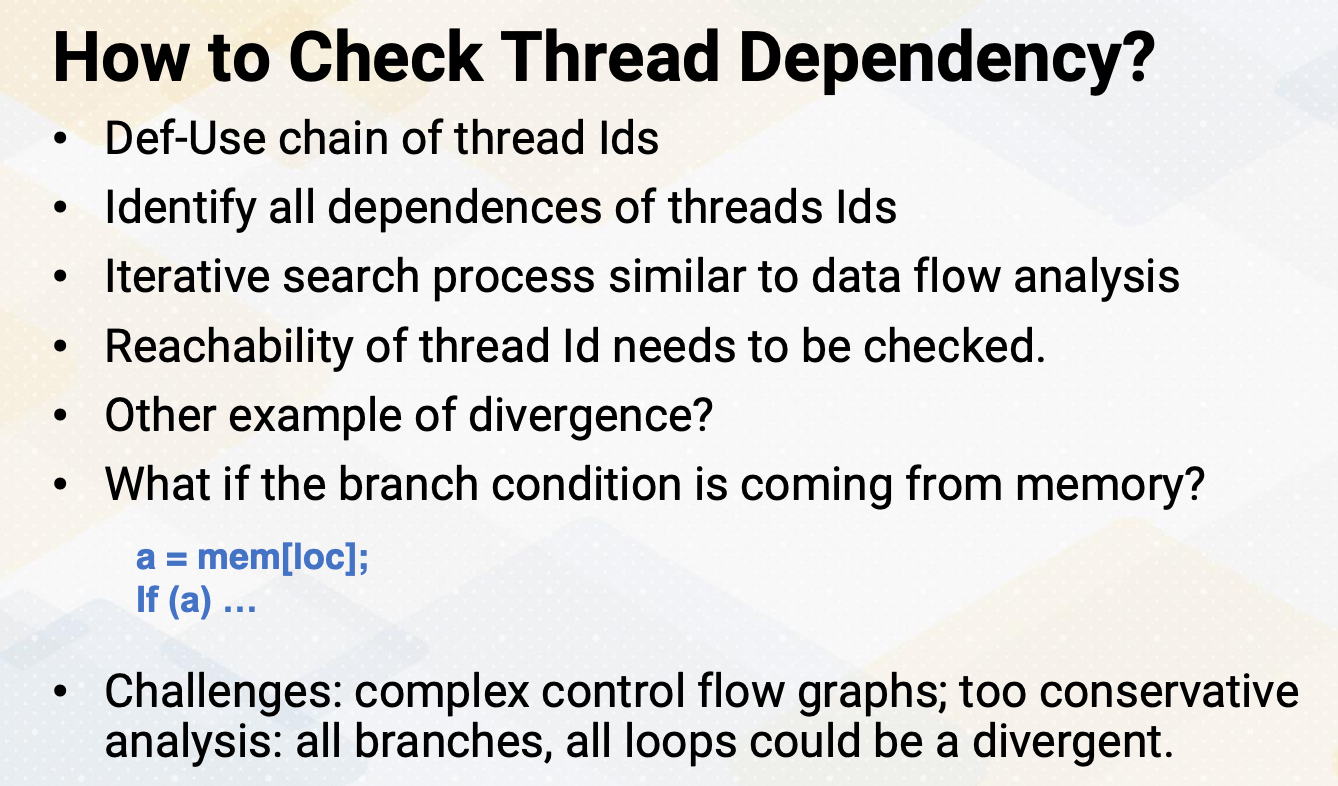

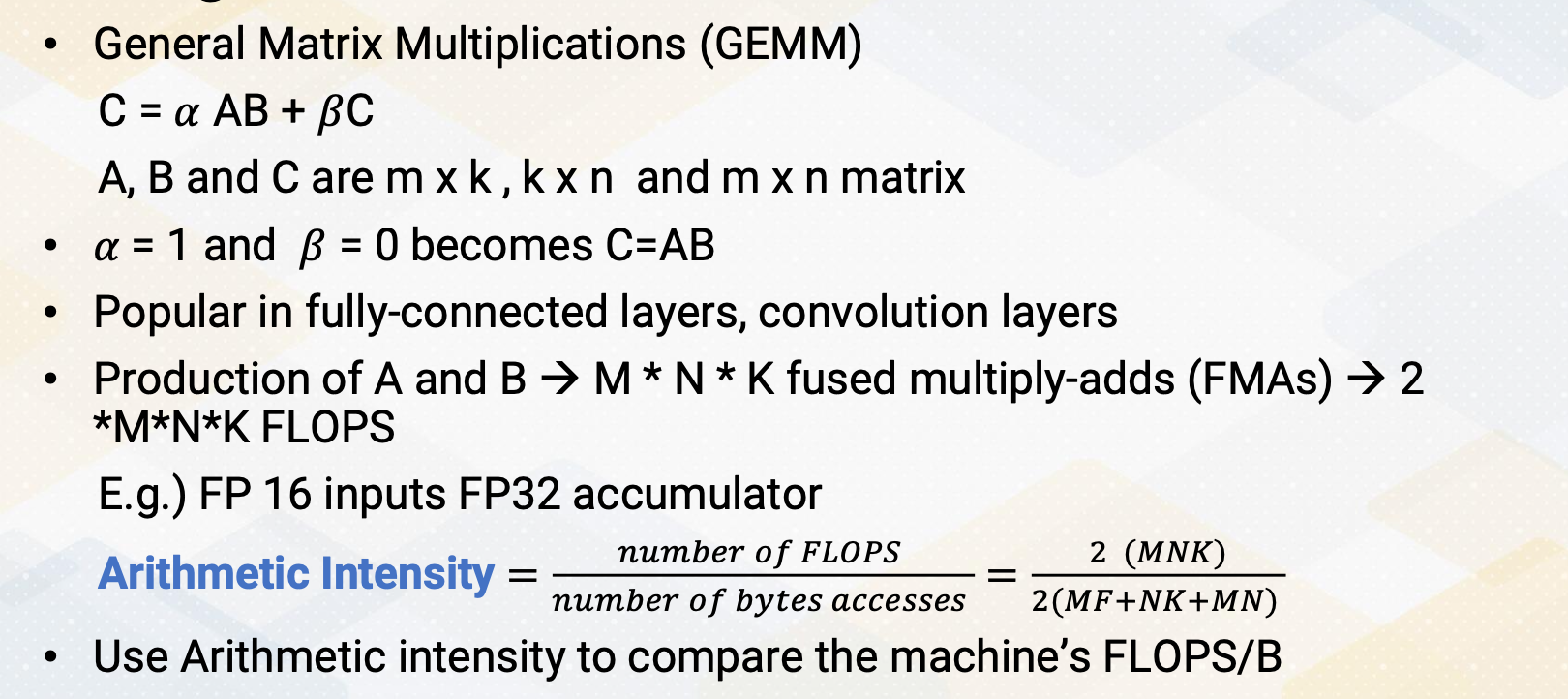

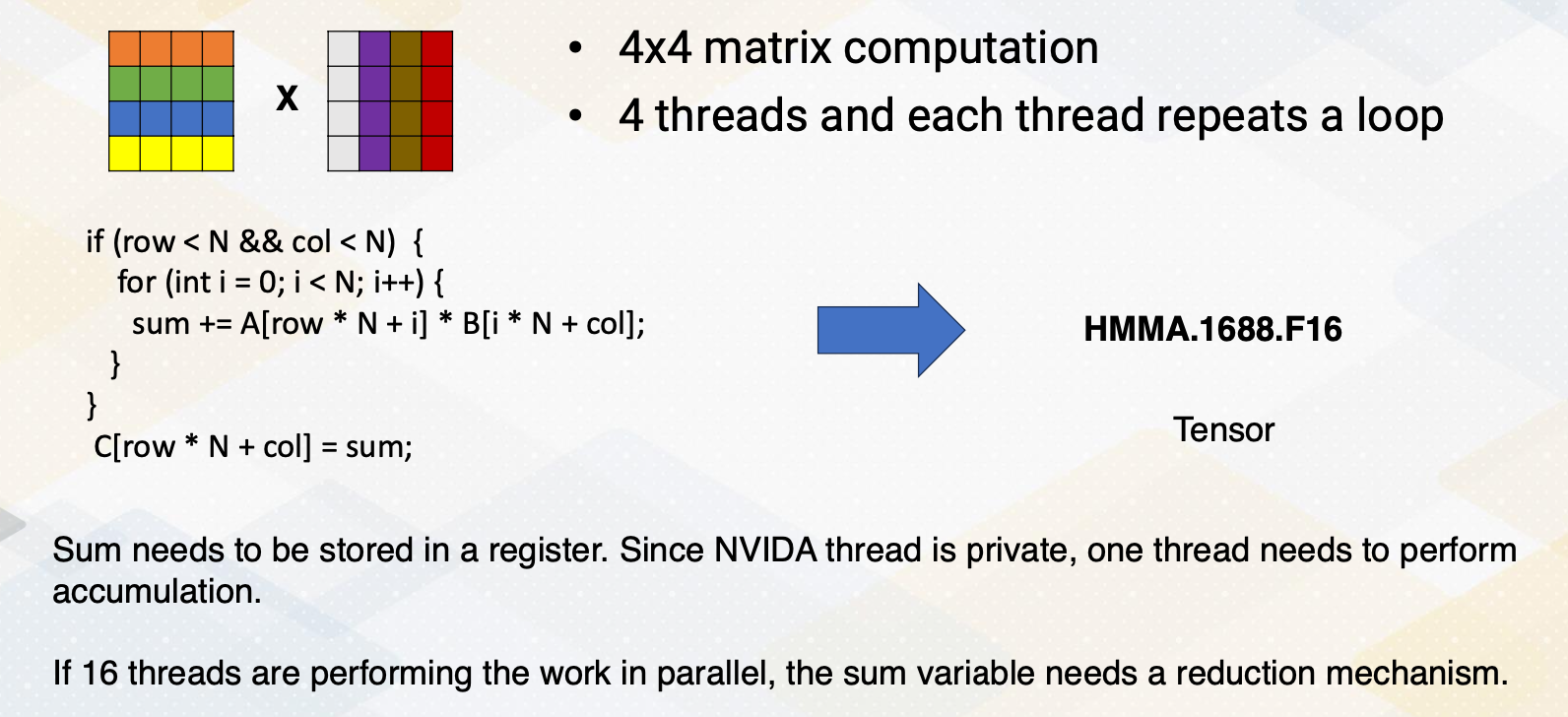

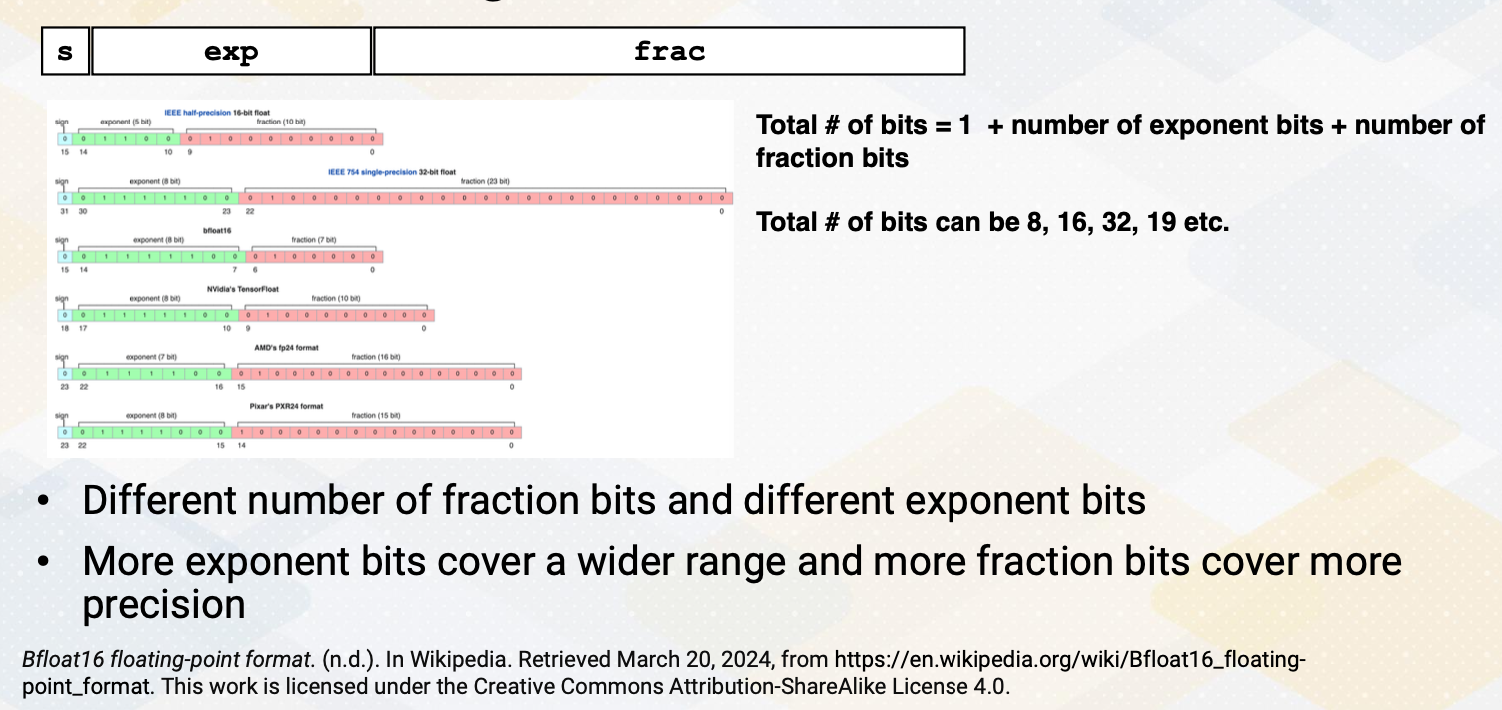

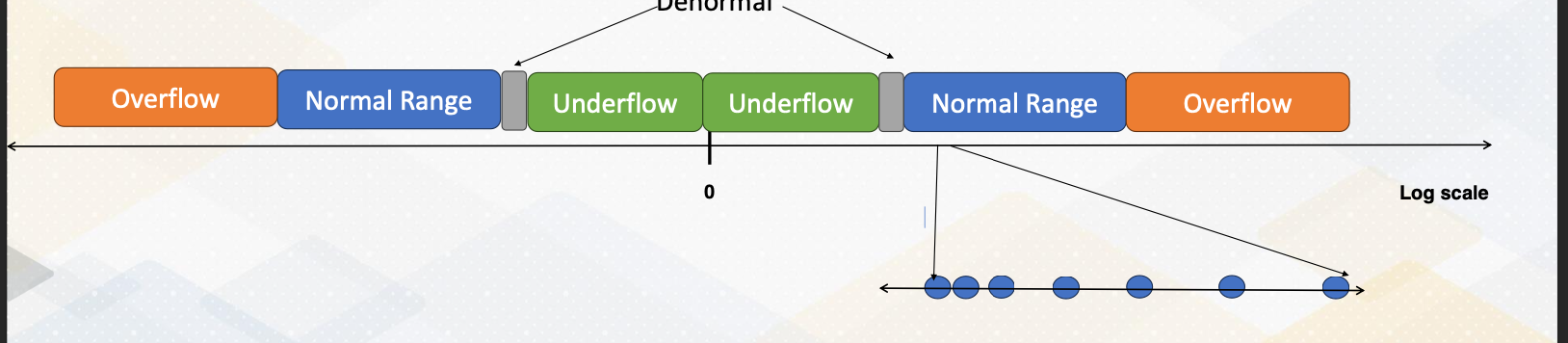

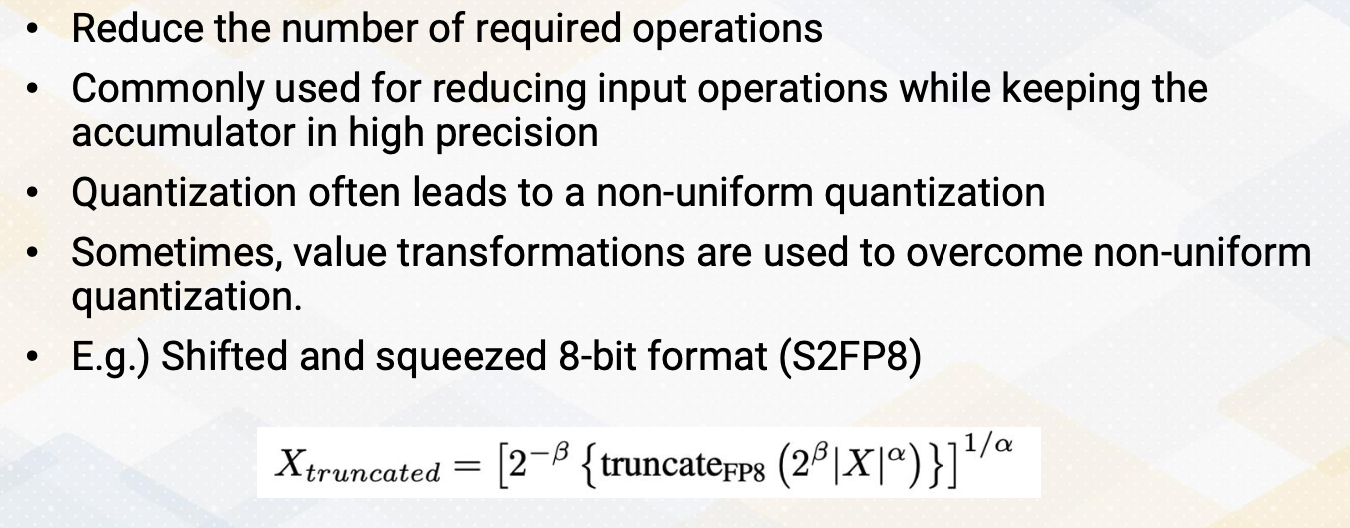

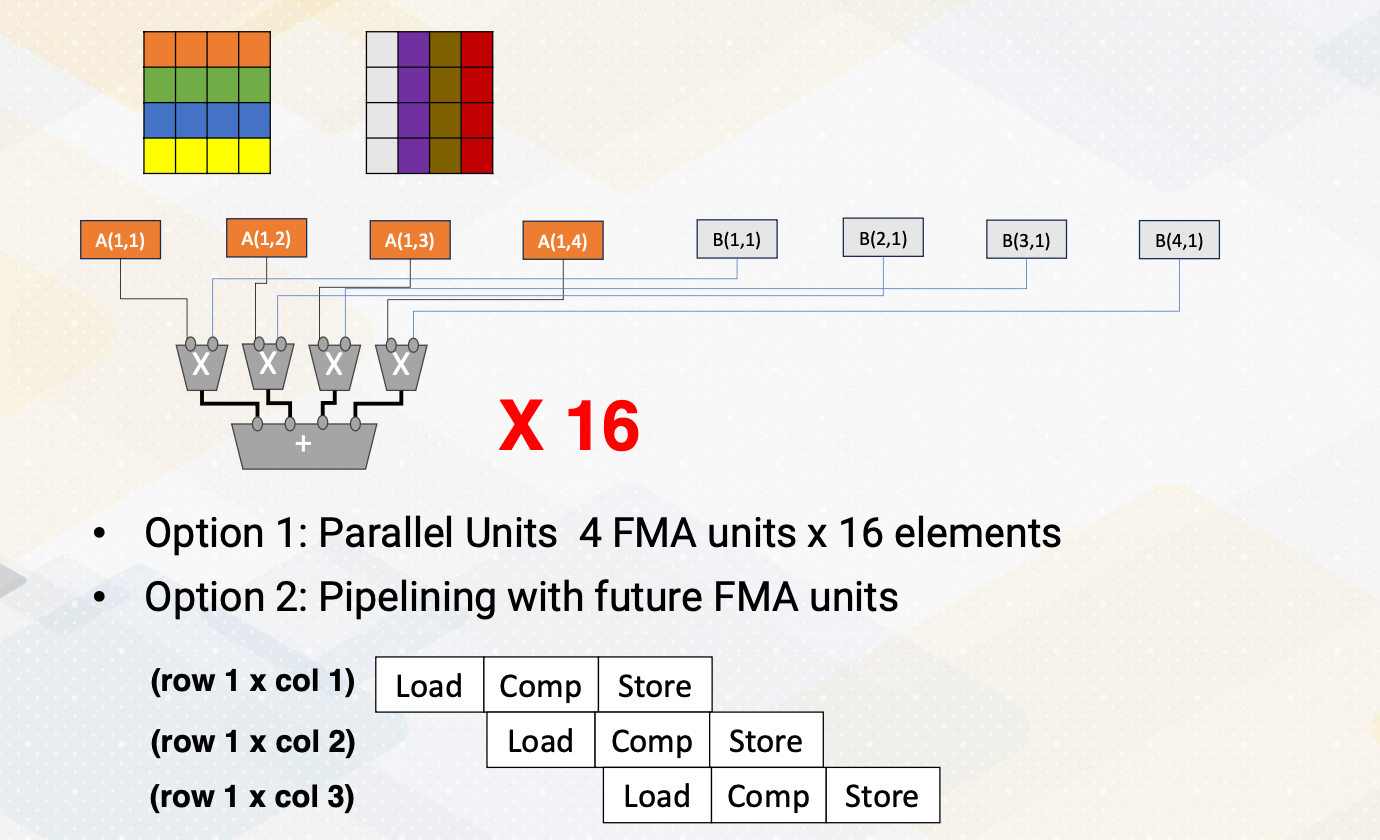

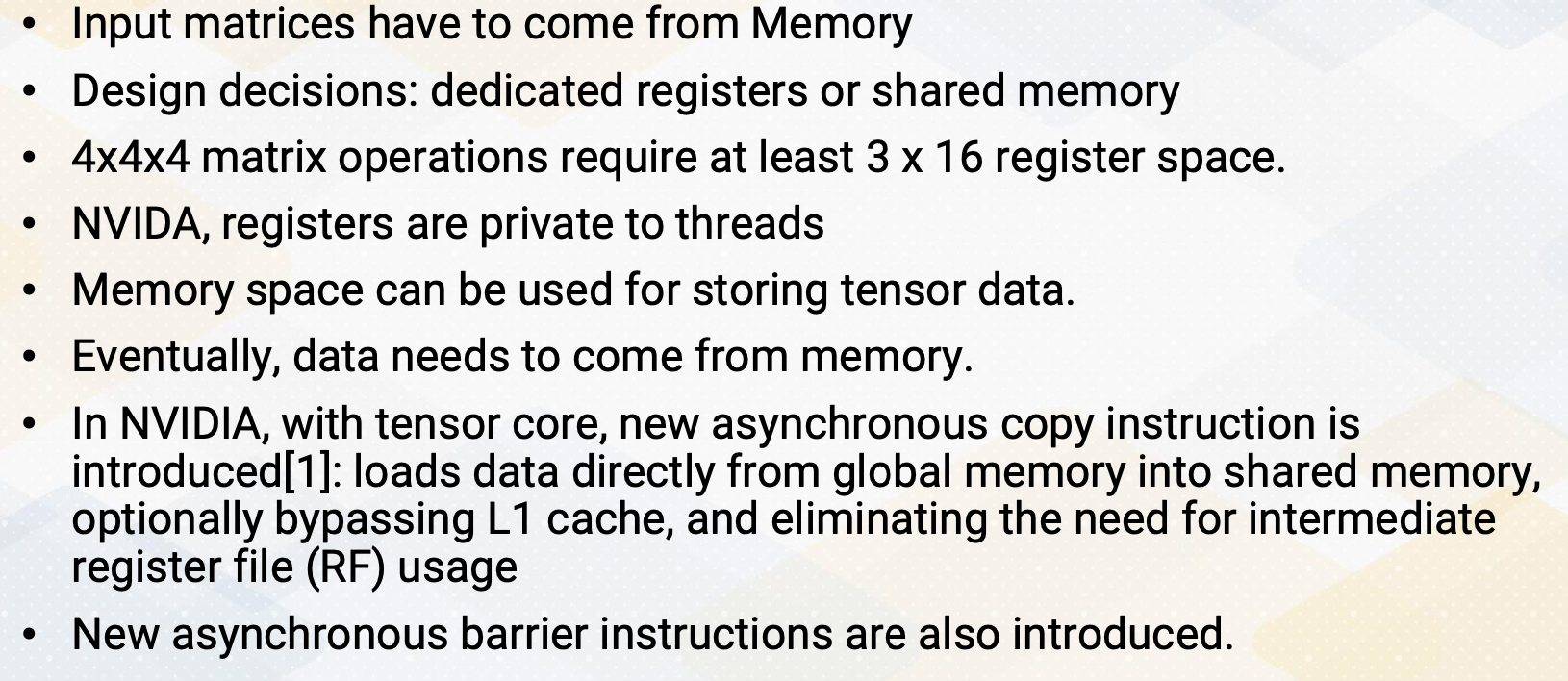

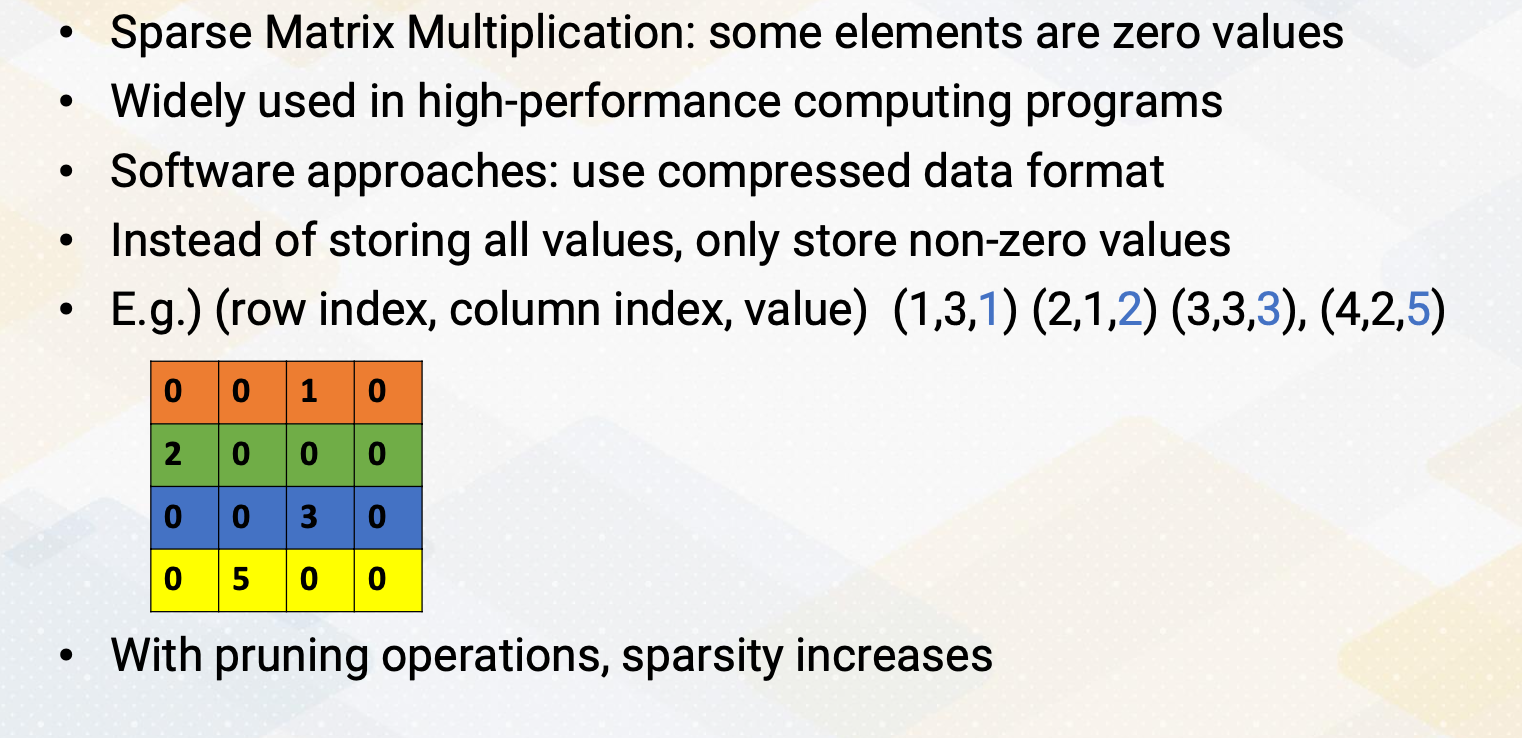

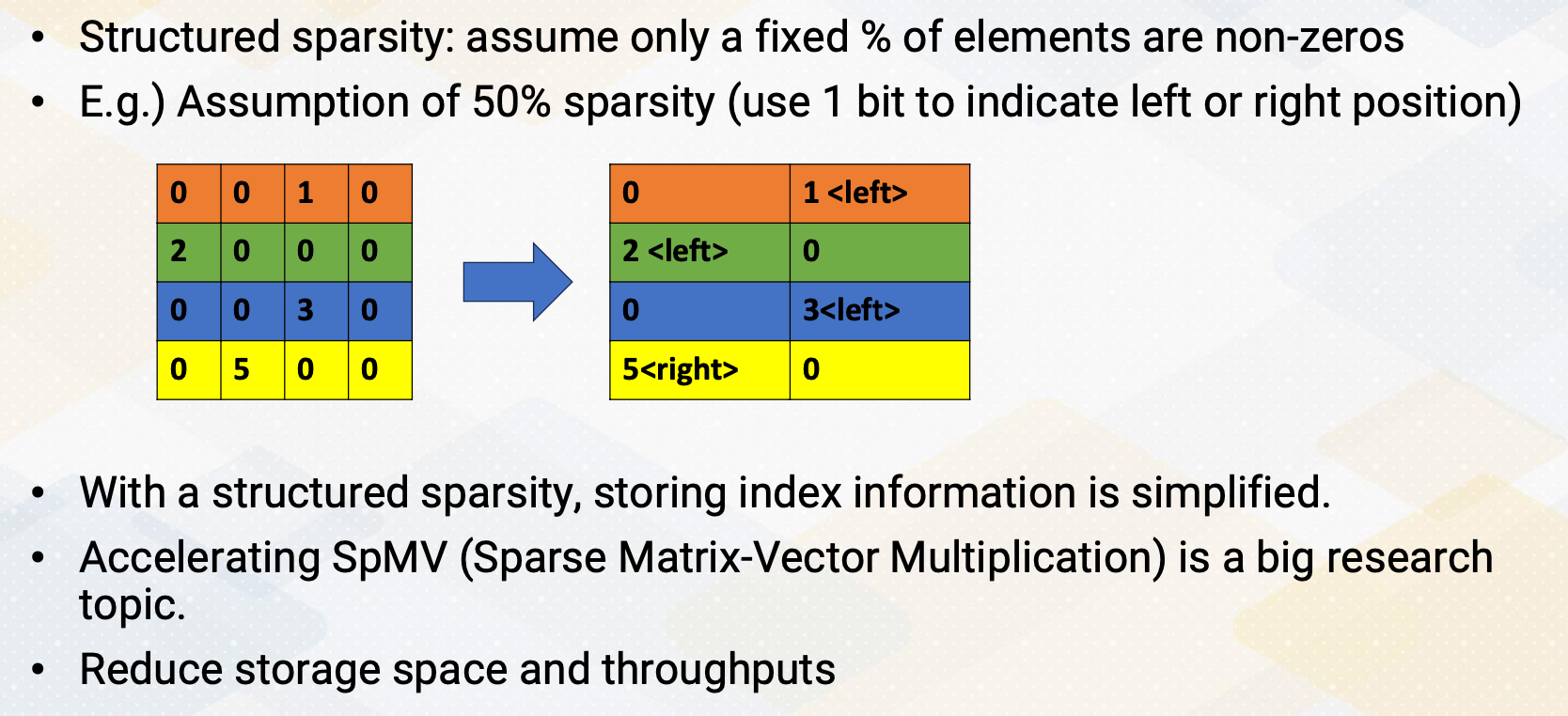

Let me briefly provide some background on DRAM and SRAM. A one-bit SRAM cell is composed of six transistors, and combining multiple one-bit SRAM cells makes an SRAM array; SRAM is commonly used in caches. On the other hand, a one-bit DRAM cell is composed of one transistor, and a DRAM chip has many one-bit DRAM cells. Since each bit requires only one transistor, DRAM can provide a large capacity, but all communication with the DRAM chip goes through pins, which can be a limiting factor.