CS7280 OMSCS - Network Science Notes

The content of these notes is from Georgia Tech OMSCS 7280: Network Science: Methods and Applications by Prof. Constantine Dovrolis. They kindly allowed this to be shared publicly; please use the notes responsibly!

Module one

L1 - What is Network Science?

Overview

Required Reading

- Chapter-1 from A-L. Barabási, Network Science, 2015.

Recommended Reading

Read at least one of the following papers, depending on your interests:

Networks in Epidemiology: An Integrated Modeling Environment to Study the Co-evolution of Networks, Individual Behavior and Epidemics by Chris Barrett et al.

Networks in Biology: Network Inference, Analysis, and Modeling in Systems Biology by Reka Albert

Networks in Neuroscience: Complex brain networks: graph theoretical analysis of structural and functional systems by Ed Bullmore and Olaf Sporns

Networks in Social Science: Network Analysis in the Social Sciences by Stephen Borgatti et al.

Networks in Economics: Economic Networks: The New Challenges by Frank Schweitzer et al.

Networks in Ecology: Networks in Ecology by Jordi Bascompte

Networks and the Internet: Network Topologies: Inference, Modelling and Generation by Hamed Haddadi et al.

What is Network Science?

The study of complex systems focusing on their architecture, i.e., on the network, or graph, that shows how the system components are interconnected.

In other words, Network Science or NetSci focuses on a network representation of a system that shows how the system components are interconnected. To understand this definition further, let’s first explore the concept of Complex Systems.

Complex Systems

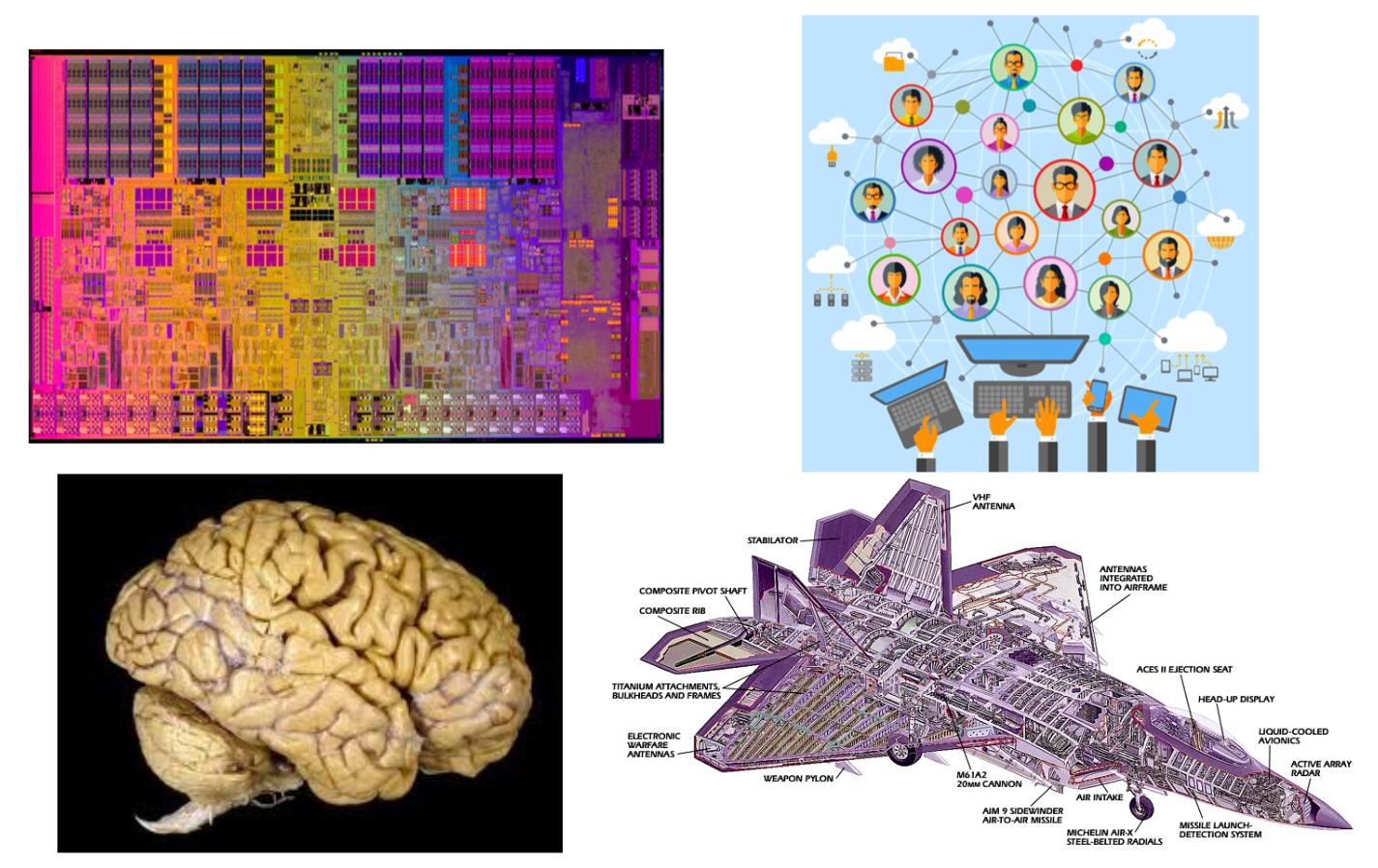

The image above shows a microprocessor, a human brain, an online social network, and a fighter jet. On the surface, you may think that these systems have nothing in common!

However, they all have some common fundamental properties:

- Each of them consists of many autonomous parts, or modules – in the same way, that a large puzzle consists of many little pieces. The microprocessor, for example, consists of mostly transistors and interconnects. The brain consists of various cell types, including excitatory neurons, inhibitory neurons, glial cells, etc.

- The parts of each system are not connected randomly or in any other trivial manner – on the contrary, the system only works if the connections between the parts are highly specific (for example, we would not expect an electronic device to work if its transistors were randomly connected). These interconnections between the system components define the architecture of the system – or in other words, the network representation of the system.

- The interactions between connected parts are also non-trivial. A trivial interaction would be, mathematically, a linear relation between the activity of two parts. On the contrary, as we will discuss later in the course, in all interesting systems at least, these interactions are non-linear.

To summarize, Complex Systems have:

- Many and heterogeneous components

- Components that interact with each other through a (non-trivial) network

- Non-linear interactions between components

Next, we’ll discuss Trivial Networks versus Complex Networks.

Trivial Networks Versus Complex Networks

Image Source: Local Patterns to Global Architectures: Influences of Network Topology on Human Learning. Karuza, Thompson-Schill, Bassett; 2016

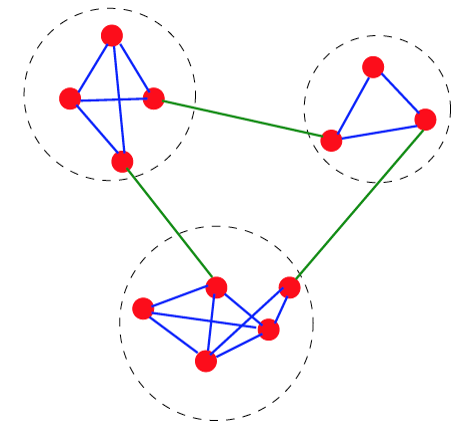

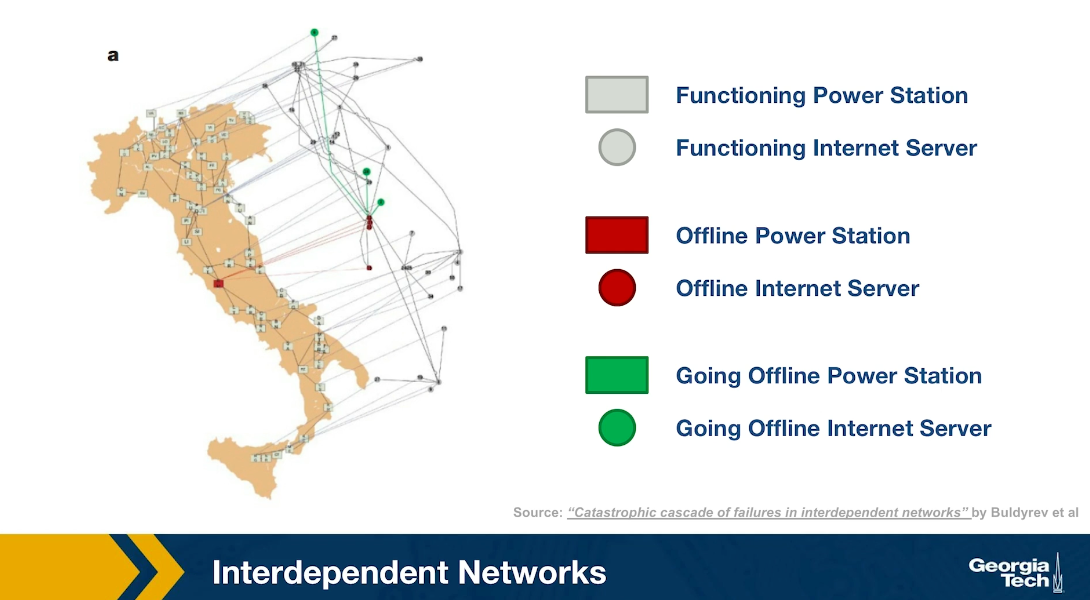

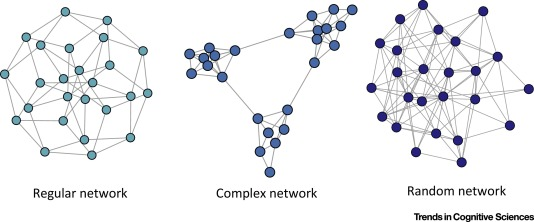

Trivial networks, also known as regular or random networks, differ significantly from complex networks, as the image above shows.

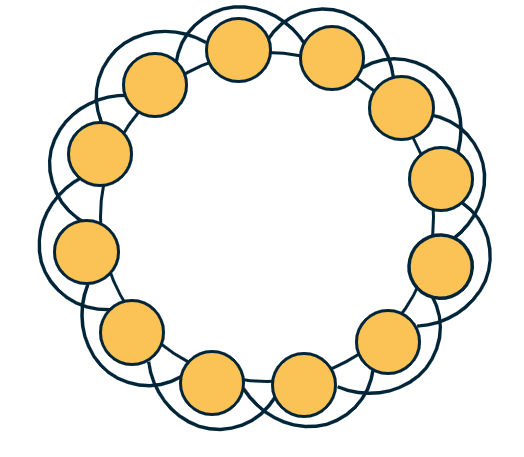

“Regular networks“ are a large family of networks that have been studied extensively by mathematicians over the last couple of centuries. Regular networks such as rings, cliques, lattices, etc, have the same interconnection pattern, the same structure, at all nodes.

The example shown at the left in the image above is a regular network in which every node connects to four other nodes.

Another well-studied class of networks in graph theory is that of “Random networks”. Here, the connections between nodes are determined randomly. In the simplest model, each pair of nodes is connected with the same probability.
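As a rough illustration of these two trivial classes, the sketch below builds a ring lattice in which every node connects to four other nodes, and a random network in which each pair of nodes is connected with the same probability. The function names, the size n = 20, the probability, and the random seed are illustrative assumptions, not part of the lecture.

```python
import random
from itertools import combinations

def ring_lattice(n, k):
    """Regular network: node i links to its k/2 nearest neighbors on each side."""
    edges = set()
    for i in range(n):
        for step in range(1, k // 2 + 1):
            j = (i + step) % n
            edges.add((min(i, j), max(i, j)))
    return edges

def random_network(n, p, seed=0):
    """Simplest random model: each pair of nodes is linked with probability p."""
    rng = random.Random(seed)
    return {(u, v) for u, v in combinations(range(n), 2) if rng.random() < p}

def degrees(n, edges):
    """Number of connections of every node."""
    deg = [0] * n
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return deg

n = 20
regular_deg = degrees(n, ring_lattice(n, 4))
# p is chosen so that the expected degree is also 4 (an assumption for comparison)
random_deg = degrees(n, random_network(n, 4 / (n - 1)))
print("regular:", sorted(set(regular_deg)))  # a single degree value, 4
print("random: ", sorted(set(random_deg)))   # usually several different values
```

In the regular network every node has the same degree, while in the random network the degrees fluctuate around their expected value.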

In practice, most technological, biological and information systems do NOT have a regular or random network architecture. Instead, their architecture is highly specific, resulting in interconnection patterns that are highly variable across nodes.

For example, the network in the middle has several interesting properties that would not be expected if the network were randomly “wired”: there are three major clusters of nodes, a few nodes have a much larger number of connections than the others, and there are many connected three-node groups.

A major difference between network science and graph theory is that the former is an applied data-science discipline that focuses on complex networks encountered in real-world systems.

Graph theory, on the other hand, is a mathematical field that focuses mostly on regular and random graphs. We will return to the connection between these two disciplines later in this lesson.

Example: The Brain of the C.elegans Worm

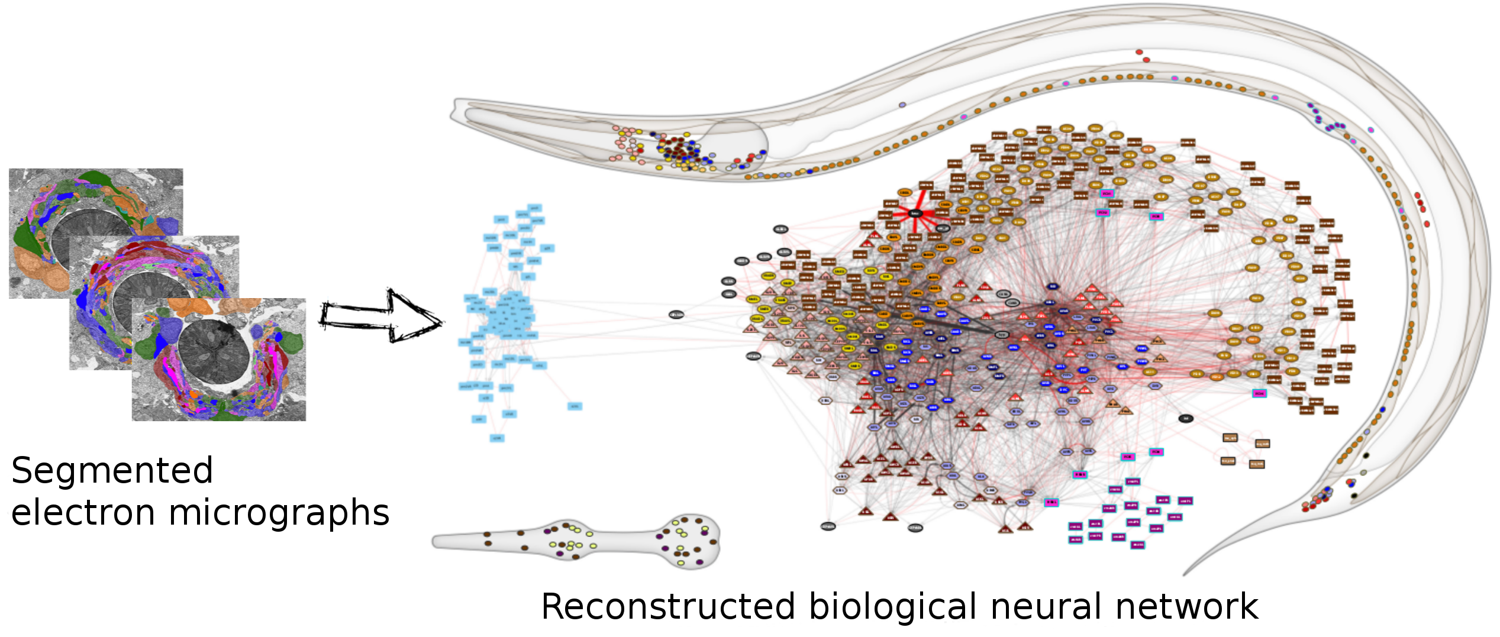

(Image Source: wormwiring.org)

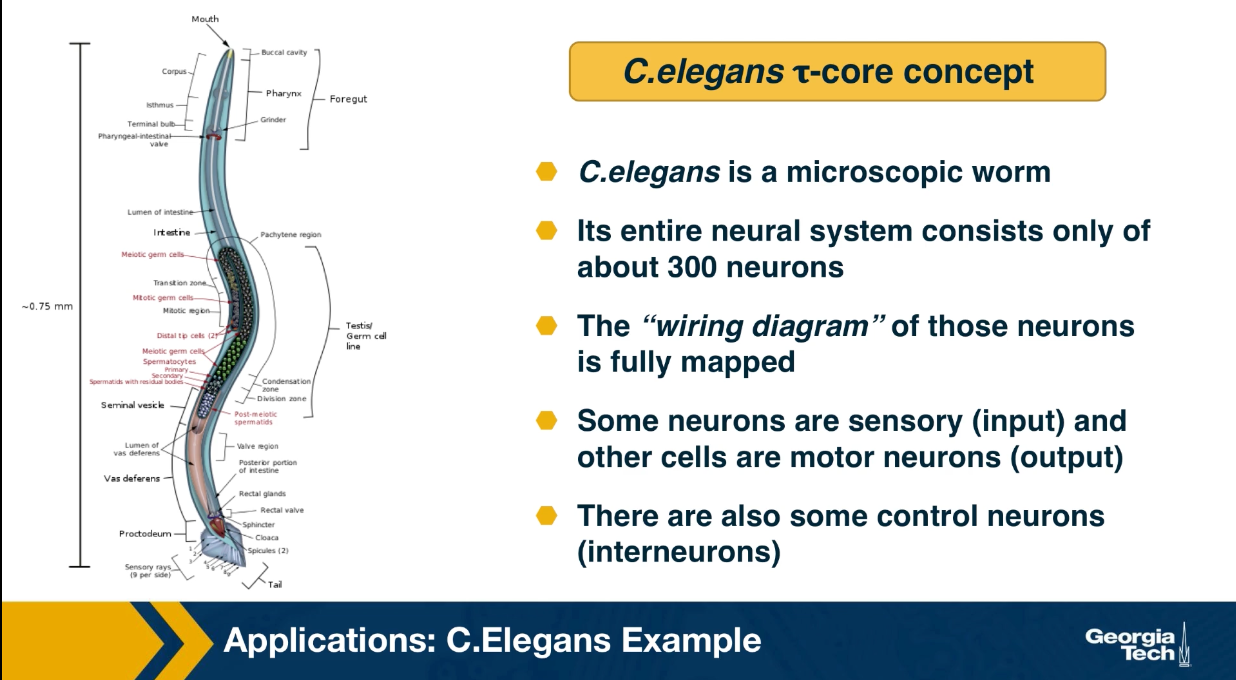

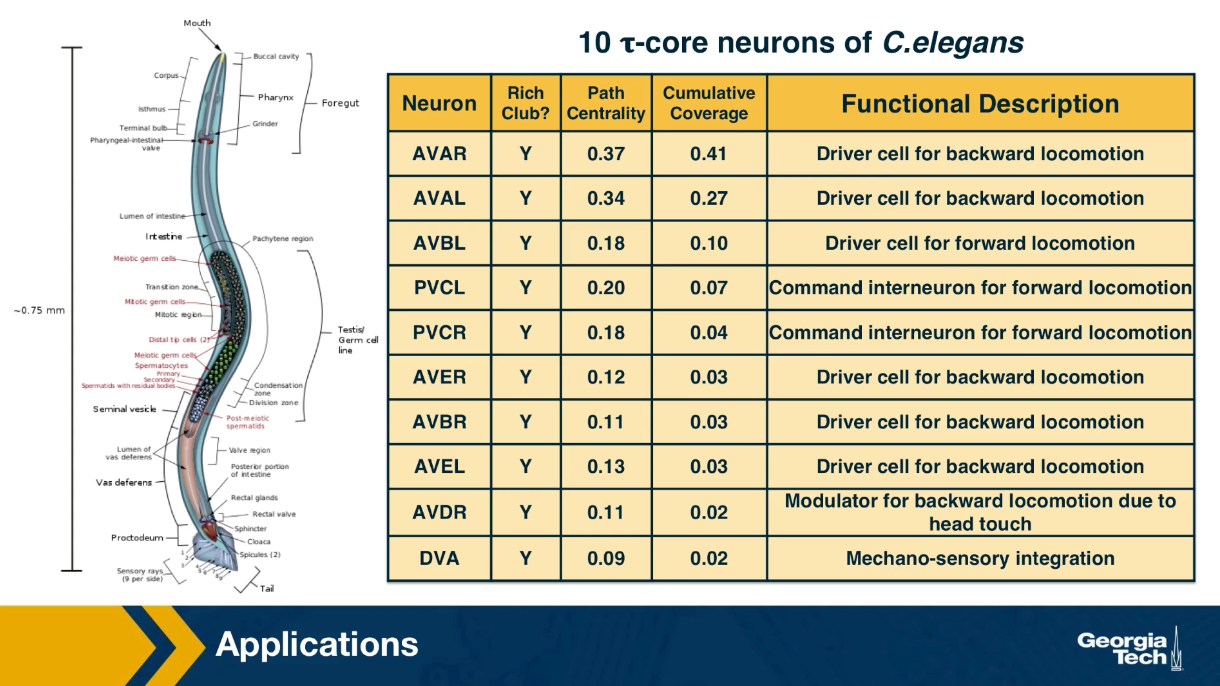

To understand the relationship between a complex system and its network representation, let’s focus on a microscopic worm called C.elegans.

This amazing organism, which is about 1mm in length, has roughly only 300 neurons in its neural system. Still, it can move in different ways, react to touch, mate, sense chemical odors, and respond to food versus toxins, etc.

Each dot represents a neuron, and the location of every neuron in the worm’s body is shown at the top right. The connections between neurons, at the level of individual synapses, have been mapped using electron micrographs of tiny slices of the worm’s body.

The network on the right in the image shows each neuron as a node and each connection between two neurons as an edge between the corresponding two nodes. Do not worry about the different colors for now – we will discuss this network again later in the course. The important point, for now, is that network science maps this highly complex living system into a graph – an abstraction that we can analyze mathematically and computationally to ask a number of important questions about the organization of this neural system.

Note that this mapping from an actual system to a graph representation is only a model, and so it discards some information that the modeler views as non-essential. For instance, the network representation does not show in this example if a neuron is excitatory or inhibitory – or whether these connections remain the same during the worm’s lifetime.

So it is always important to ask: does the network representation of a given system provide sufficient detail to answer the questions we are really interested in about that system?

The Main Premise

We can now state the main idea, the main premise, of network science:

The network architecture of a system provides valuable information about the system’s function, capabilities, resilience, evolution, etc.

In other words, even if we don’t know every little detail about a system and its components, simply knowing the map or “wiring diagram” that shows how the different system components are interconnected provides sufficient information to answer a lot of important questions about that system.

Or, if our goal is to design a new system (rather than analyze an existing system), network science suggests that we should first start from its network representation, and only when that is completely done, move to lower-level design and implementation.

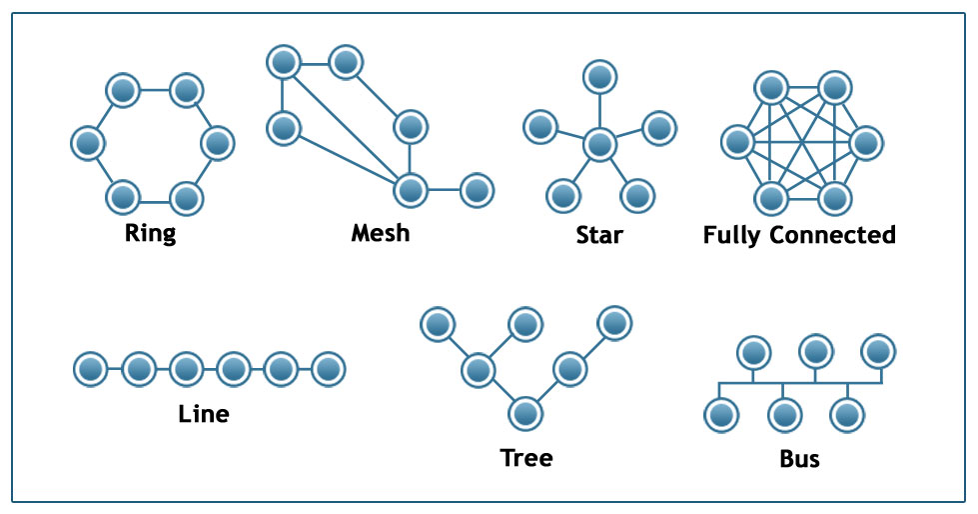

Image Source: techiereader.com

Above is an example that illustrates the previous point.

Even if you know nothing about the underlying system, what would you say about its efficiency and resilience under each of the following architectures?

Suppose that we are to design a communication system of some sort that will interconnect 6 sites. The first question is: what should be the network architecture? This figure shows several options. For example, the Ring architecture provides two disjoint paths between every pair of nodes. The Line, Tree, and Star architectures require the fewest number of links but they are highly vulnerable when certain nodes or edges fail. The Fully Connected architecture requires the highest number of links but it also provides the most direct (and typically faster) and resilient communication. The Mesh architecture provides a trade-off between all previous properties.
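The trade-off between link count and resilience can be checked with a small sketch. It covers only the Line, Star, Ring, and Fully Connected architectures for 6 sites, because the exact Tree and Mesh layouts in the figure are not reproduced here; the node numbering and helper names are assumptions made for illustration.

```python
from collections import deque

def connected(n, edges):
    """Return True if every node is reachable from node 0 (BFS)."""
    adj = {i: [] for i in range(n)}
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    seen, queue = {0}, deque([0])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in seen:
                seen.add(v)
                queue.append(v)
    return len(seen) == n

n = 6
architectures = {
    "line": [(i, i + 1) for i in range(n - 1)],
    "star": [(0, i) for i in range(1, n)],
    "ring": [(i, (i + 1) % n) for i in range(n)],
    "full": [(i, j) for i in range(n) for j in range(i + 1, n)],
}

summary = {}
for name, edges in architectures.items():
    # The network tolerates a single link failure if it stays connected
    # after removing each link in turn.
    survives = all(connected(n, [e for e in edges if e != failed]) for failed in edges)
    summary[name] = (len(edges), survives)
    print(f"{name:5s} links={len(edges):2d} survives_single_link_failure={survives}")
```

The Line and Star use only 5 links but are disconnected by some single link failure, the Ring tolerates any single link failure with 6 links, and the Fully Connected architecture needs 15 links.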

Examples of Systems Studied by Network Scientists

Skipped. Just some examples provided.

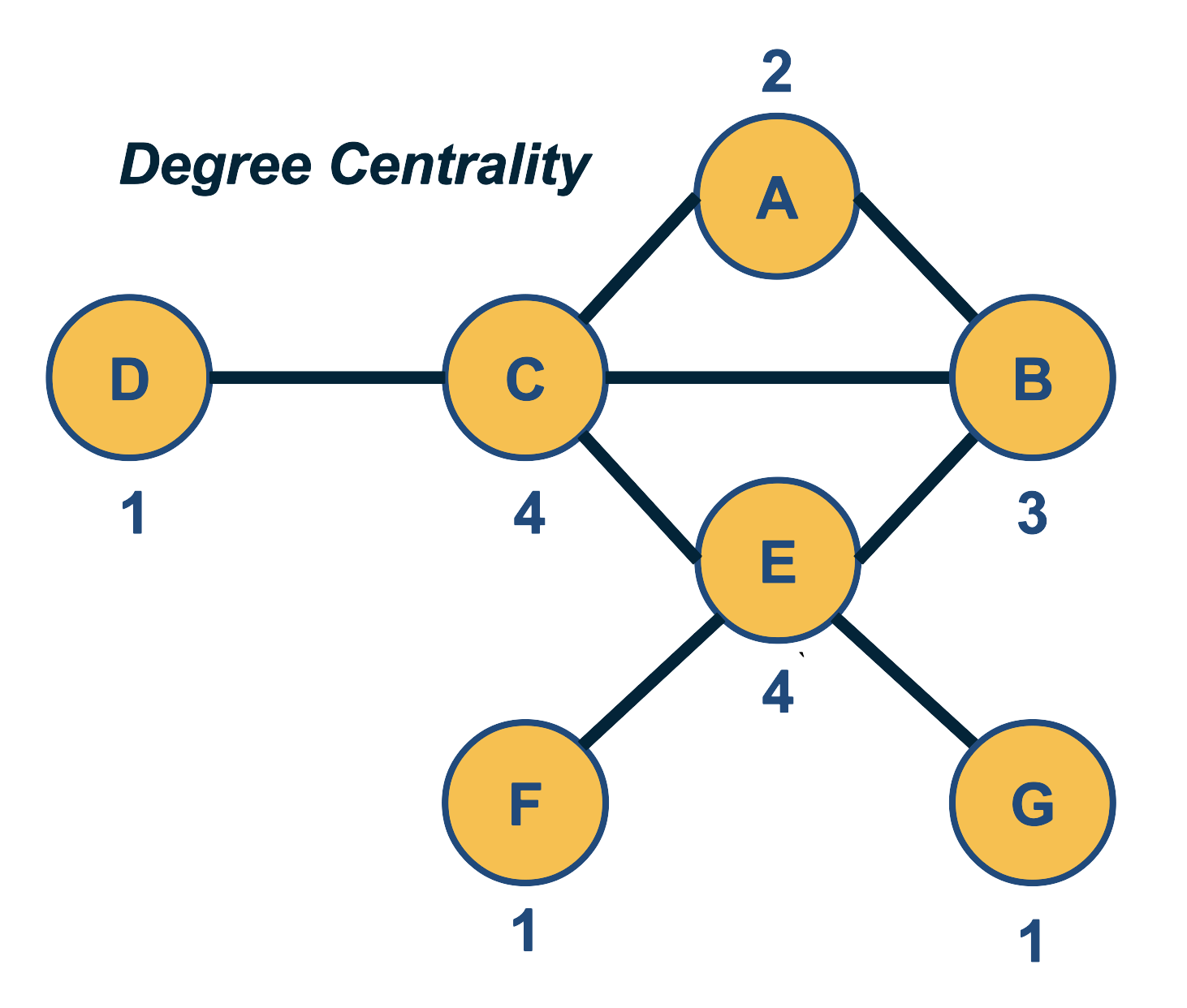

Network Centrality

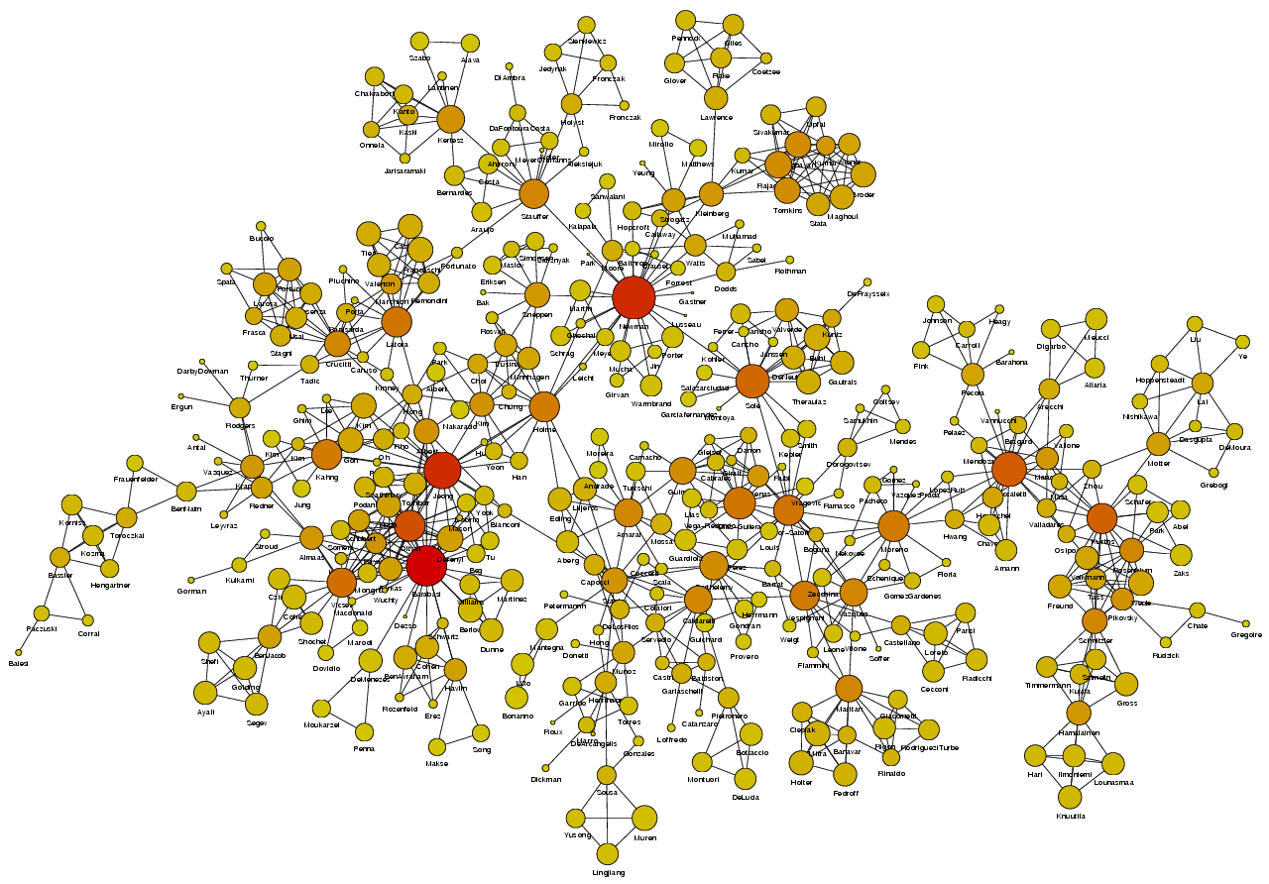

Image Source: University of Michigan

Above is an image that shows the co-authorship network for a set of Network Science researchers: each node represents a researcher and two nodes are connected if they have published at least one paper together.

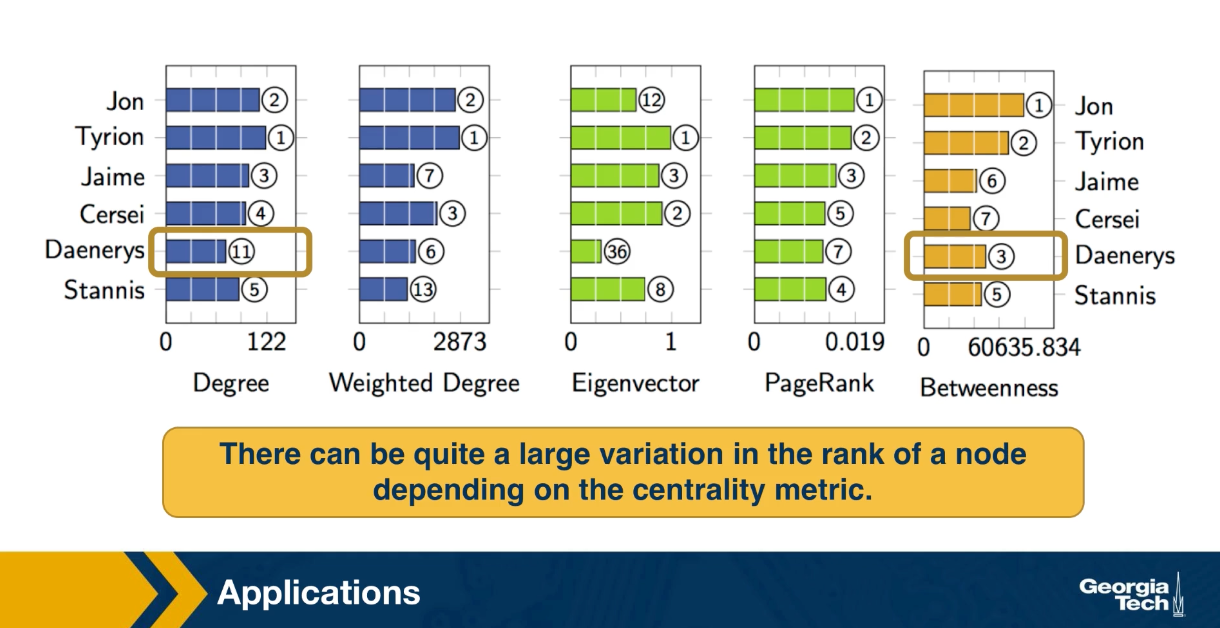

A very common question in network science is: given a network representation of a system, which are the most important modules or nodes? Or, which are the most important connections or edges?

Of course, this depends on what we mean by “important” – and there are several different metrics that quantify the “centrality” of nodes and edges.

Sometimes we want to identify nodes and edges that are very central in the sense that most pairs of other nodes communicate through them.

Or, nodes and edges that, if removed, will cause the largest disruption in the underlying network.

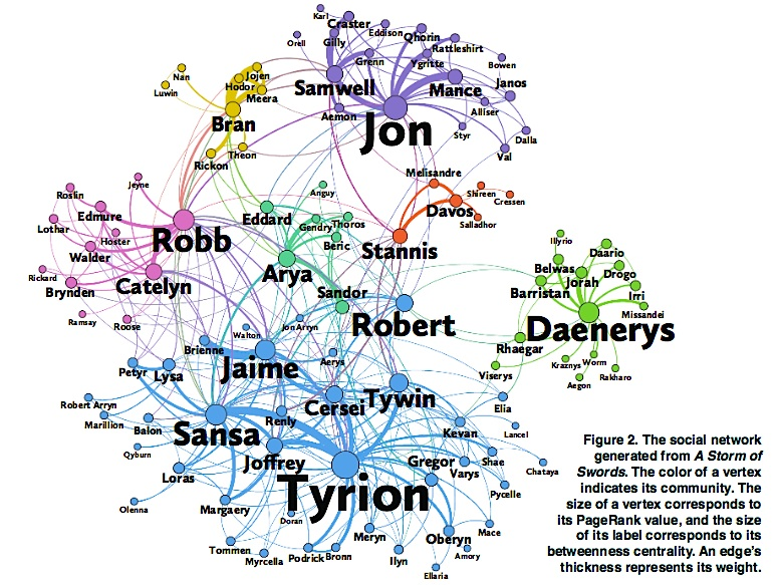

Image Source: The Measurement Standard, Carma

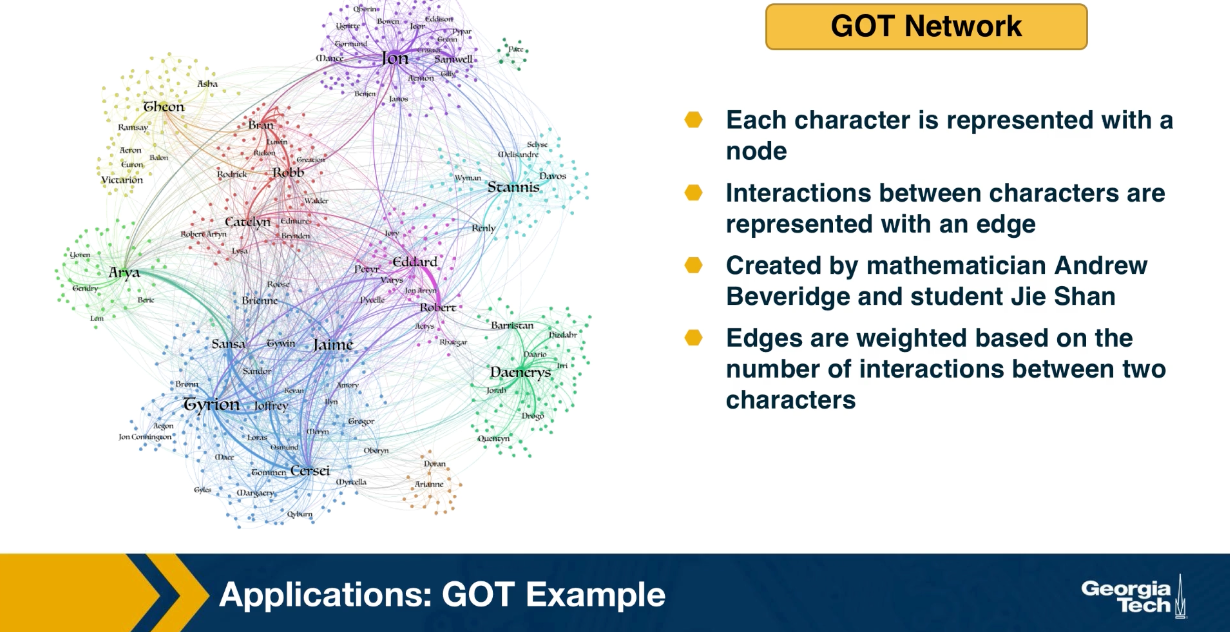

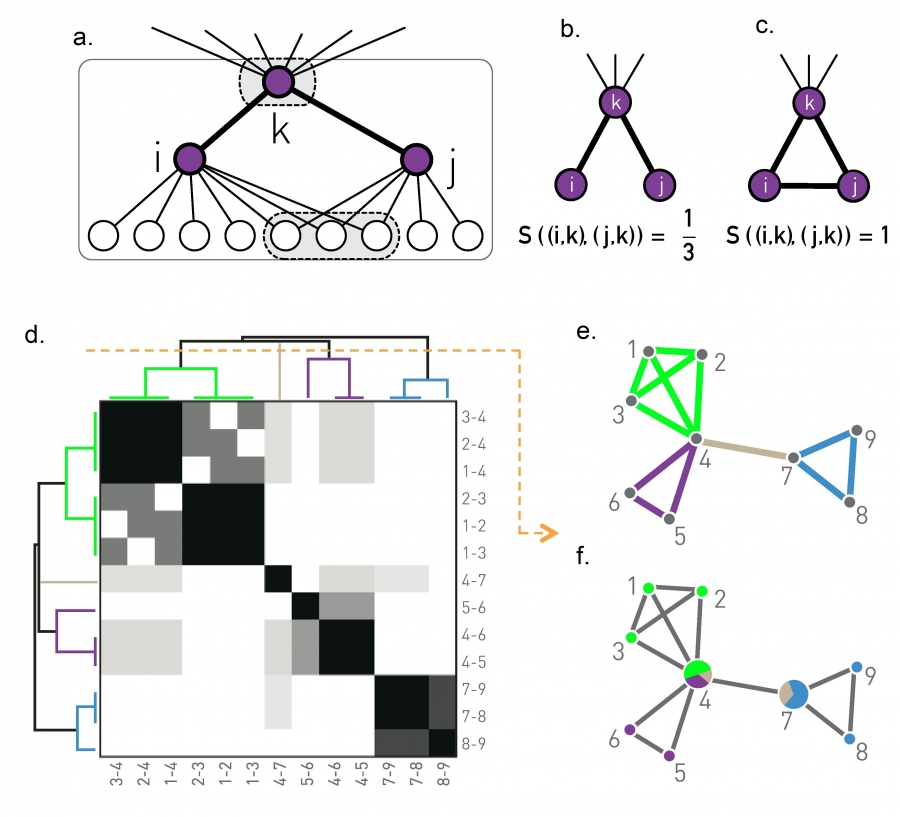

Two nodes are connected if the corresponding two characters interacted in that novel, and the weight of the edge represents the length of that interaction.

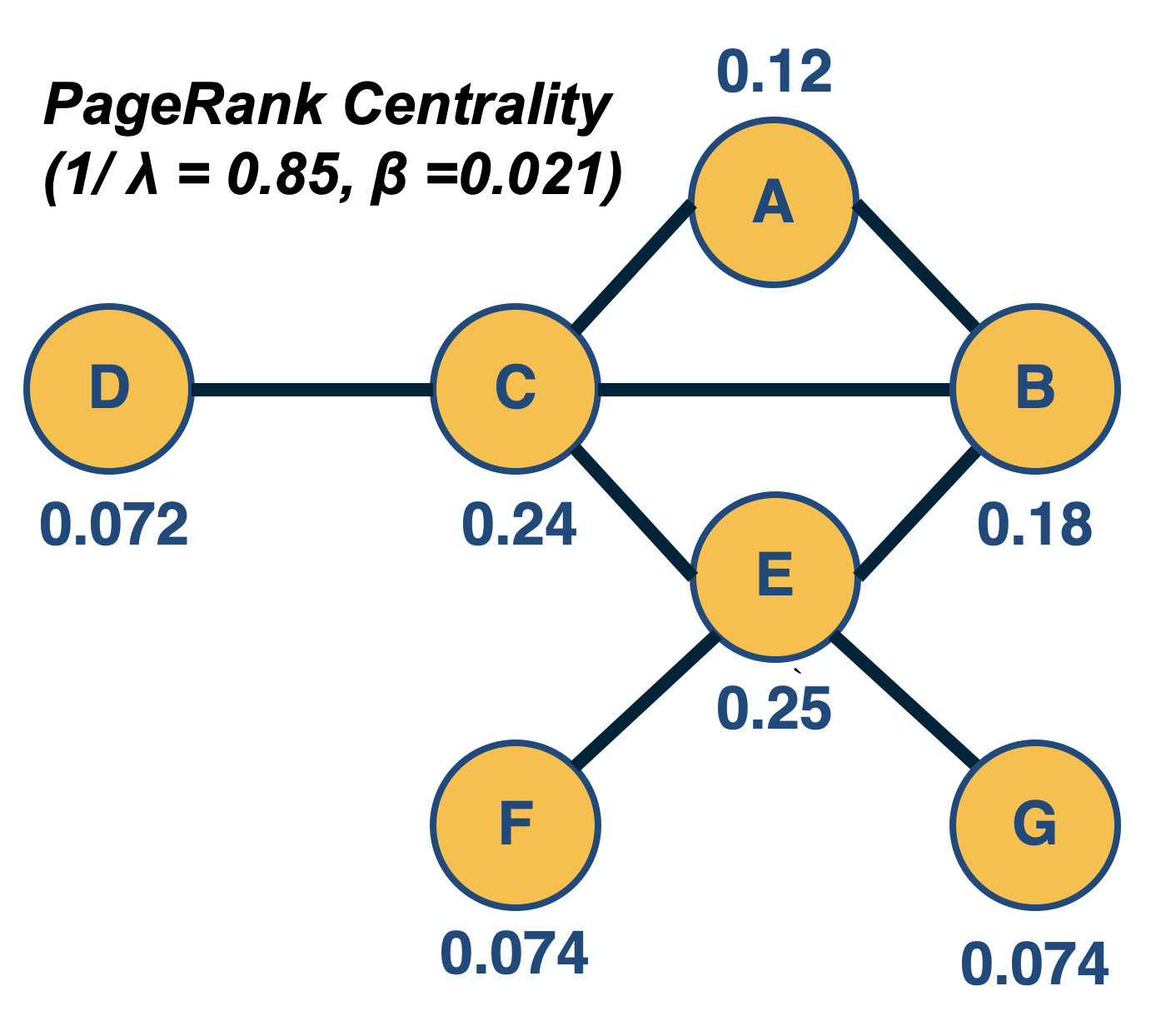

Two different node centrality metrics are visualized in this figure. The size of the node refers to a centrality metric called PageRank – it is the same metric that was used by Google in their first web search engine. The PageRank value of a node v does not depend simply on how many other nodes point to v, but also how large their PageRank value is and how many other nodes they point to.

The second centrality metric refers to a centrality metric called “Betweenness” and it is shown by the size of the node’s label. The Betweenness centrality of a node v relates to the number of shortest paths that traverse node v, considering the shortest paths across all node pairs.

Both metrics suggest that Tyrion and Jon are the most central characters in that novel, even though they were not interacting yet.
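To make the PageRank description more concrete, here is a minimal power-iteration sketch. It is a simplified textbook variant, not necessarily the version used in the figure or by Google; the damping factor 0.85, the handling of nodes without outgoing links, and the toy graph are assumptions made for illustration.

```python
def pagerank(out_links, damping=0.85, iterations=100):
    """Power-iteration PageRank for a dict {node: [nodes it points to]}.

    Every node that appears as a target must also be a key of out_links.
    """
    nodes = list(out_links)
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(iterations):
        new_rank = {v: (1.0 - damping) / n for v in nodes}
        for u in nodes:
            targets = out_links[u]
            if targets:
                # u splits its current rank equally among the nodes it points to
                share = damping * rank[u] / len(targets)
                for v in targets:
                    new_rank[v] += share
            else:
                # a node with no outgoing links spreads its rank over all nodes
                share = damping * rank[u] / n
                for v in nodes:
                    new_rank[v] += share
        rank = new_rank
    return rank

# Hypothetical toy graph: a, b, and c all point to d; d points back to a.
toy = {"a": ["d"], "b": ["d"], "c": ["d"], "d": ["a"]}
ranks = pagerank(toy)
print(sorted(ranks.items(), key=lambda kv: -kv[1]))
```

Node a receives a single incoming link, yet it ranks well above b and c because that link comes from the highly ranked node d, which points to nothing else.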

Source Links

- Finding community structure in networks using the eigenvectors of matrices

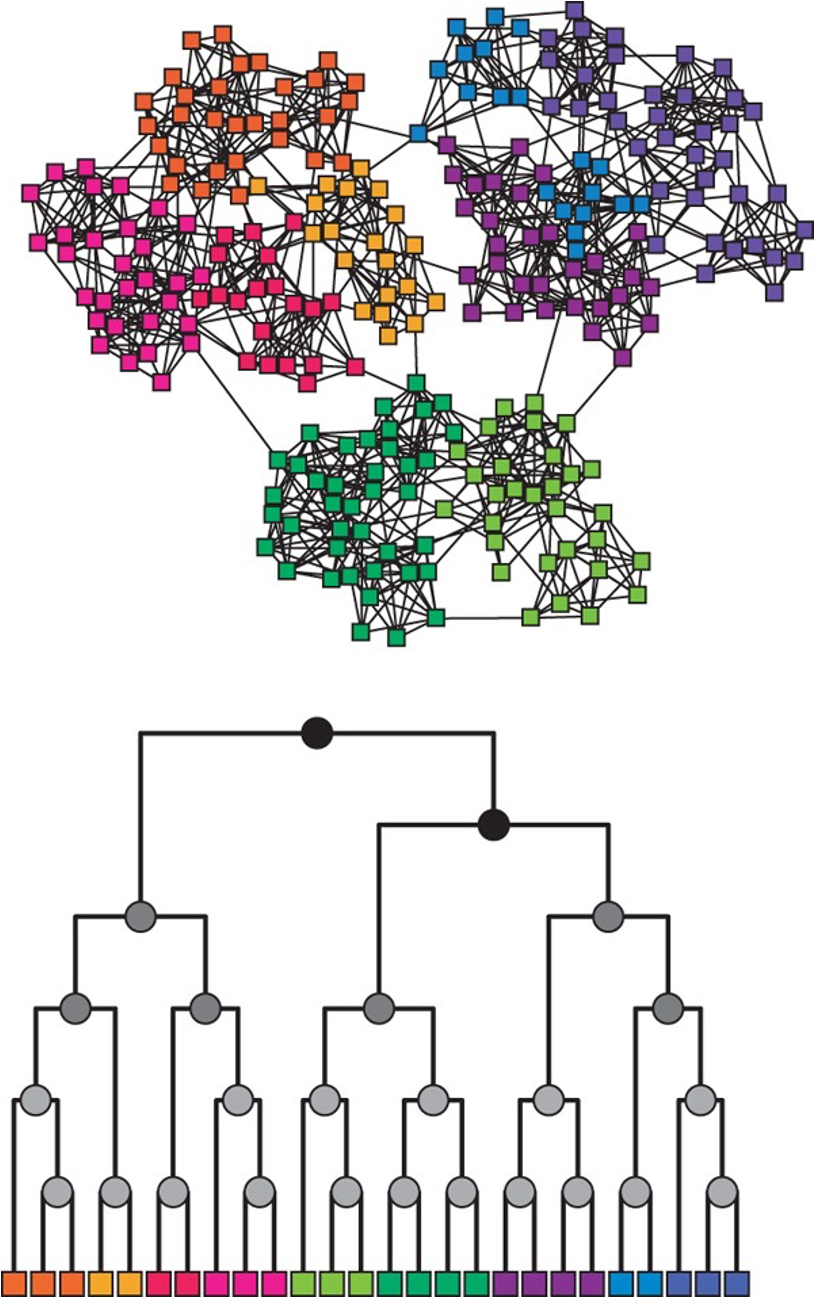

Communities (Modules) in Networks

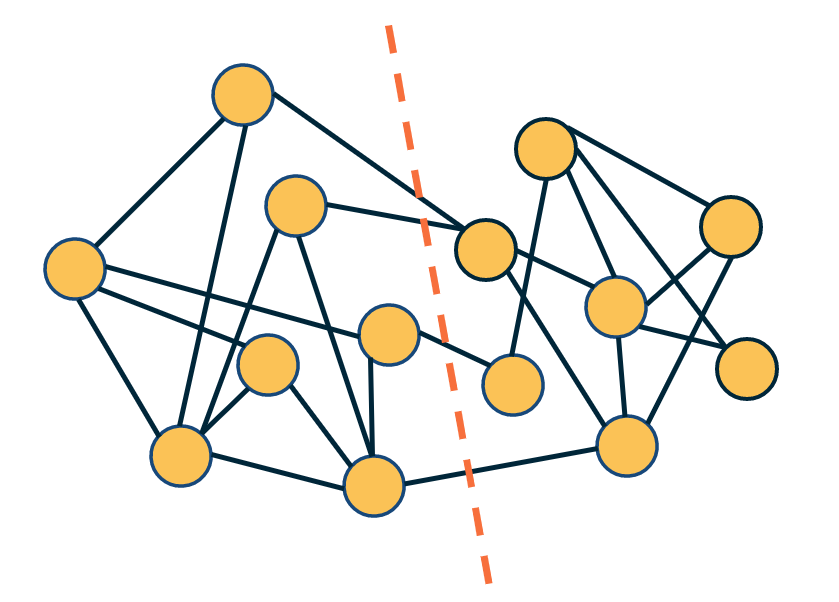

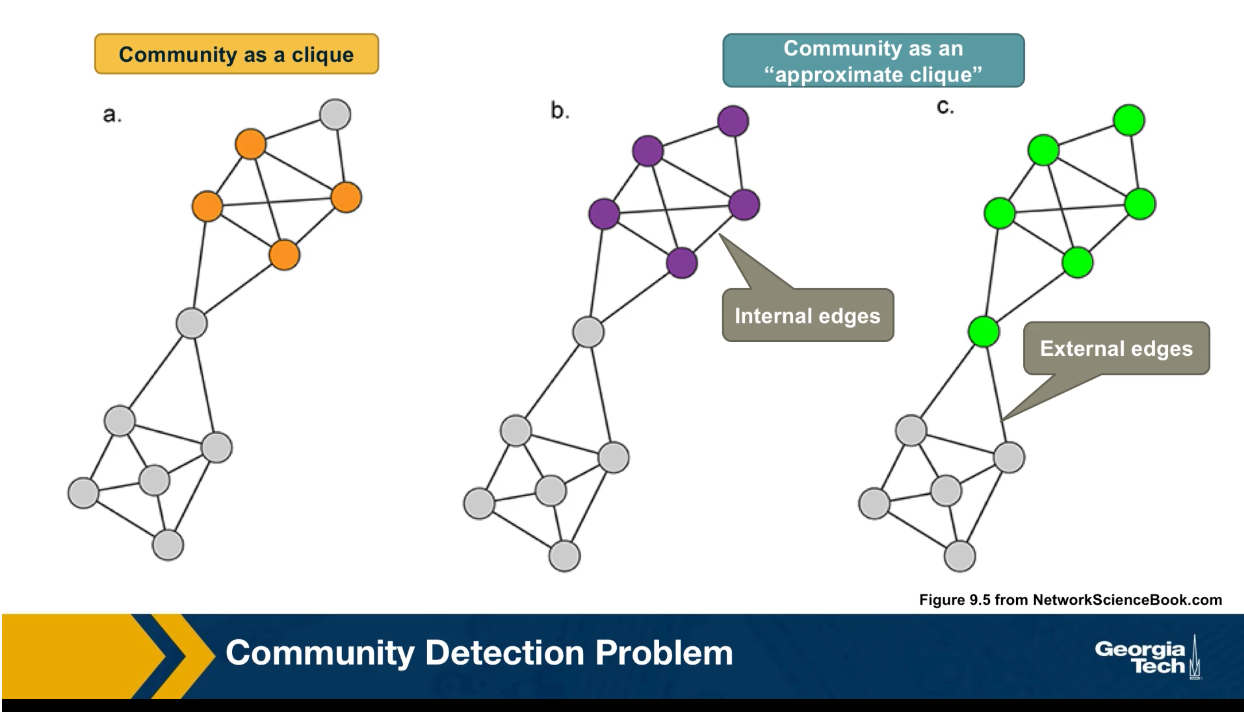

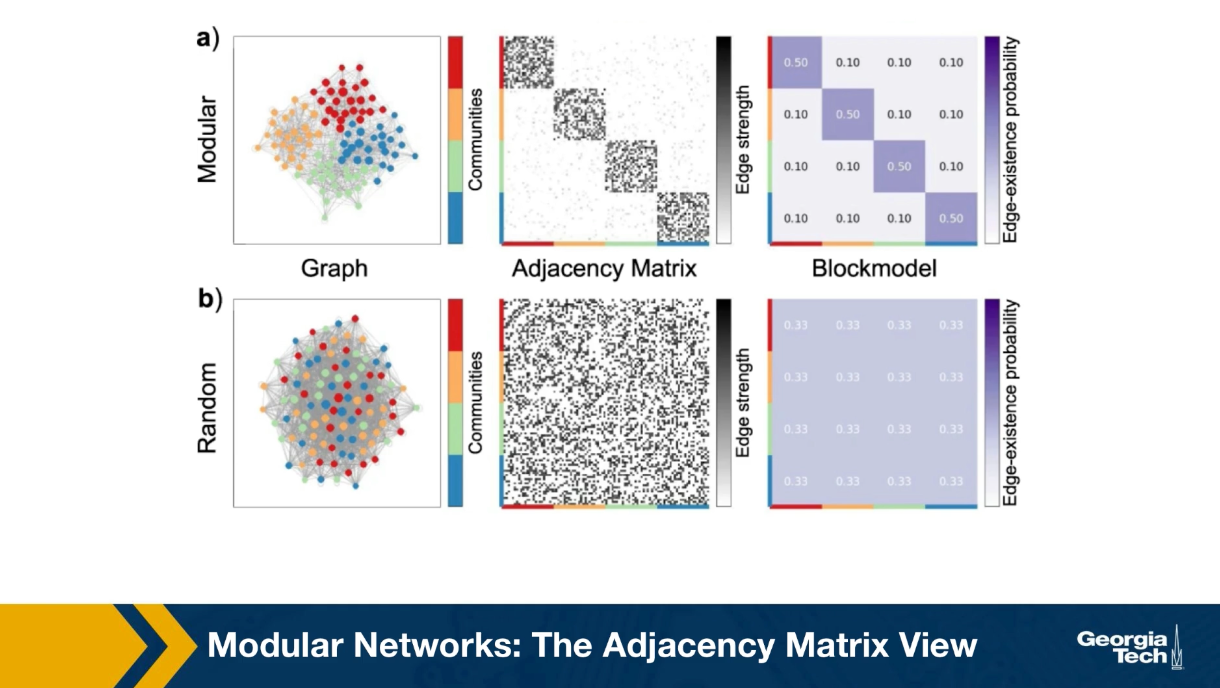

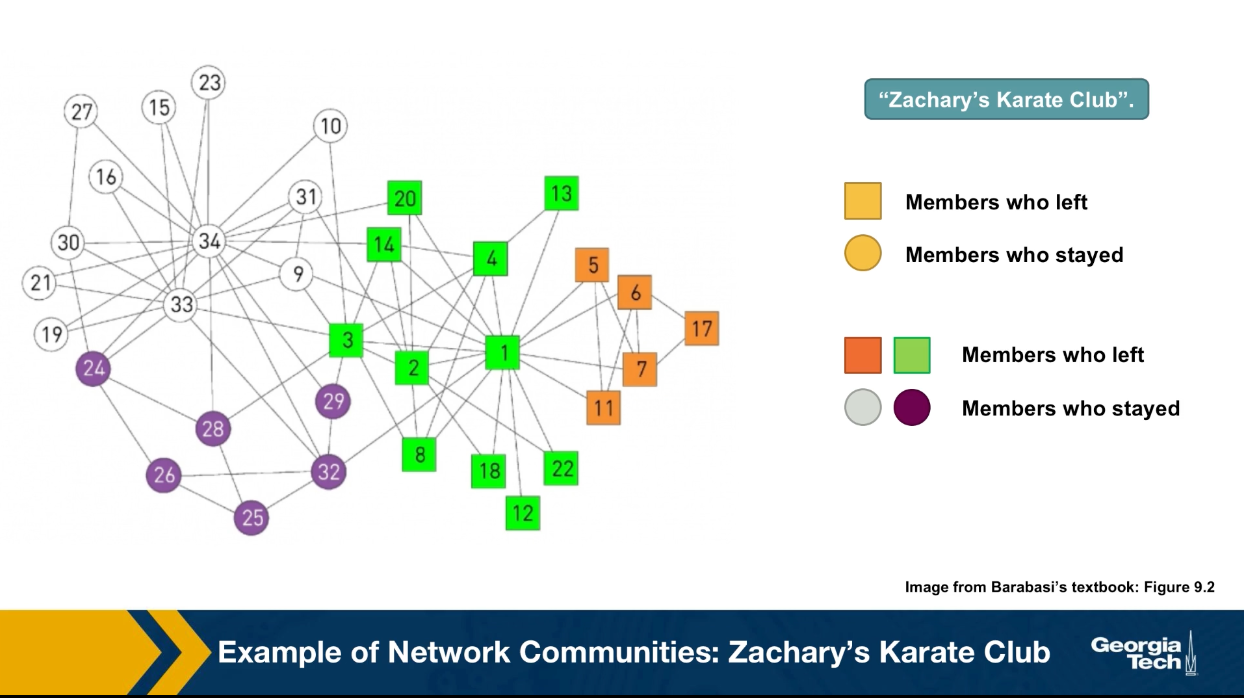

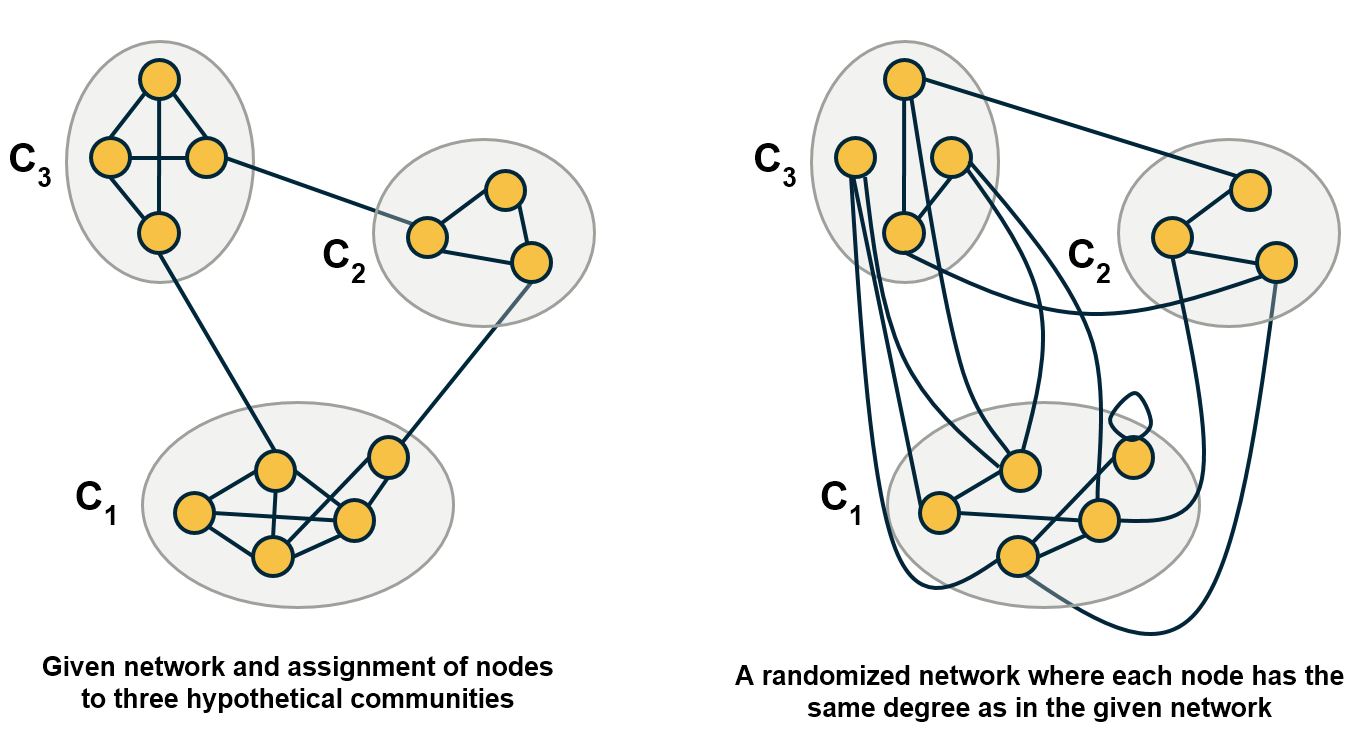

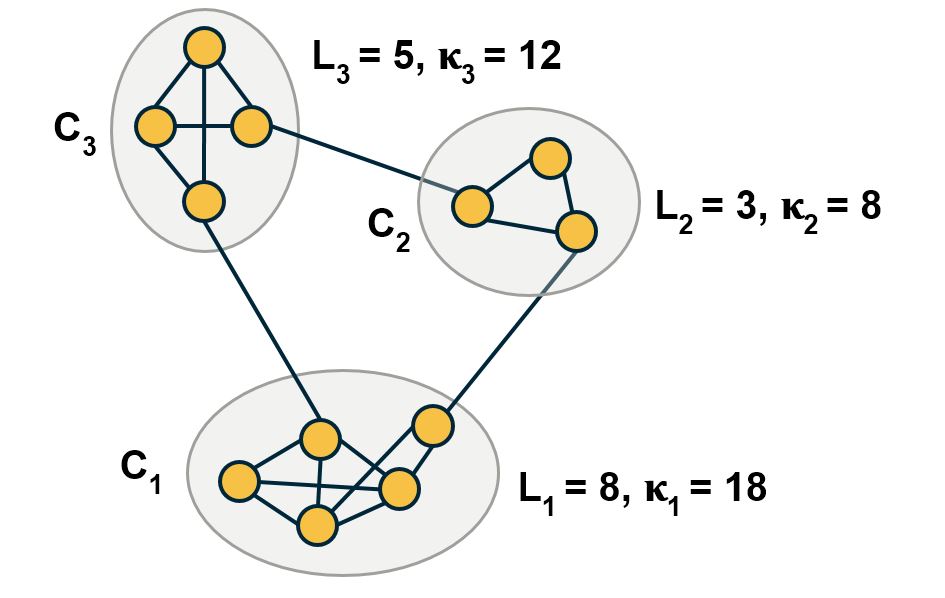

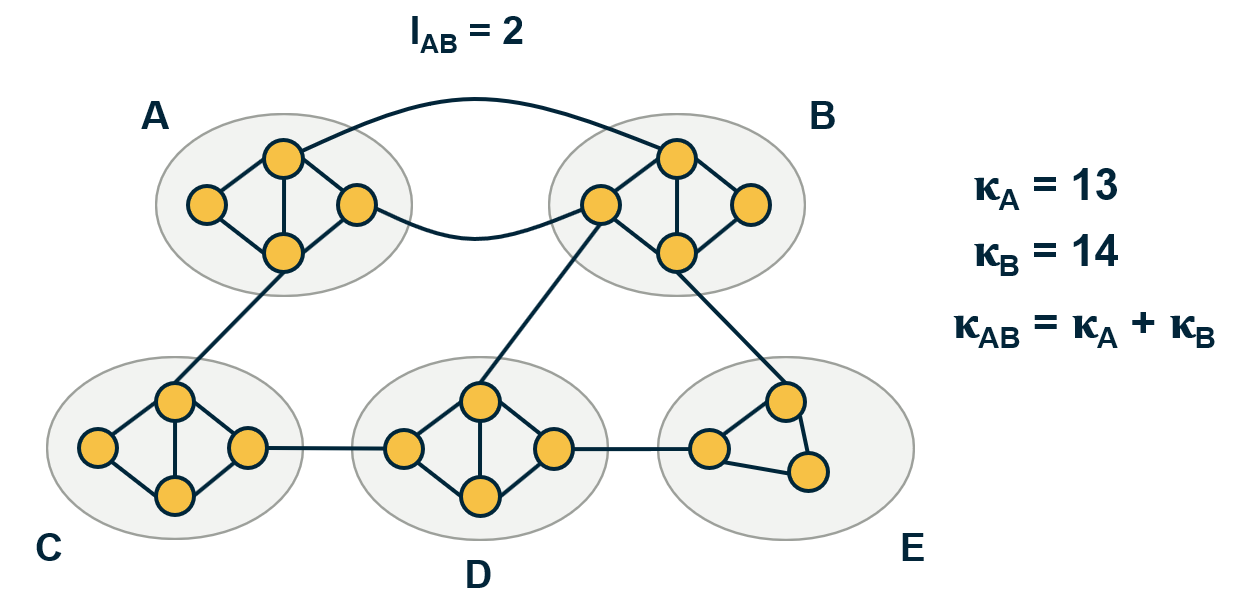

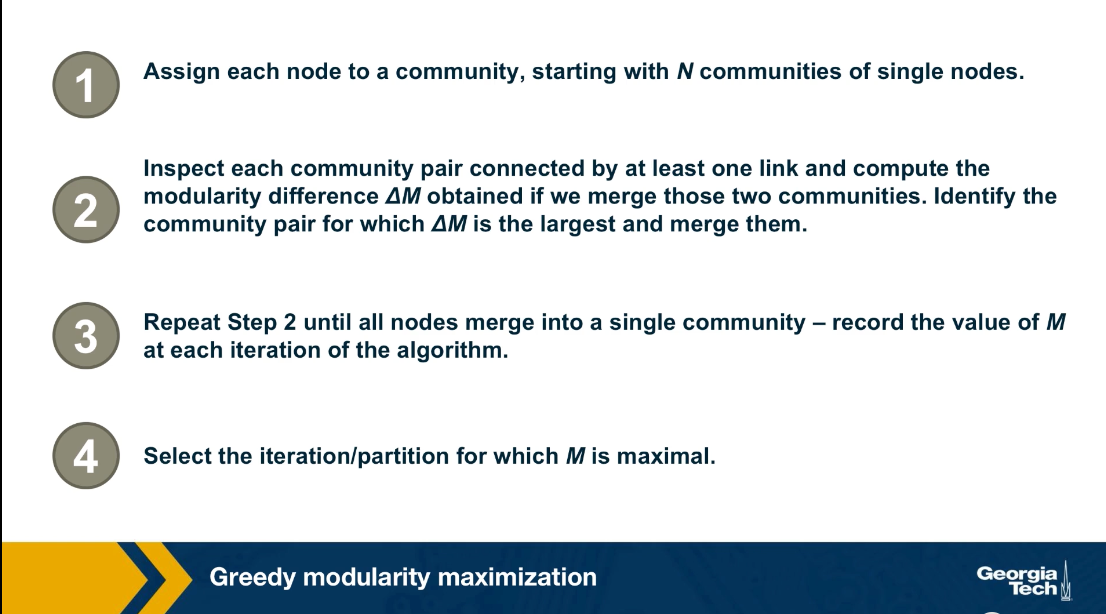

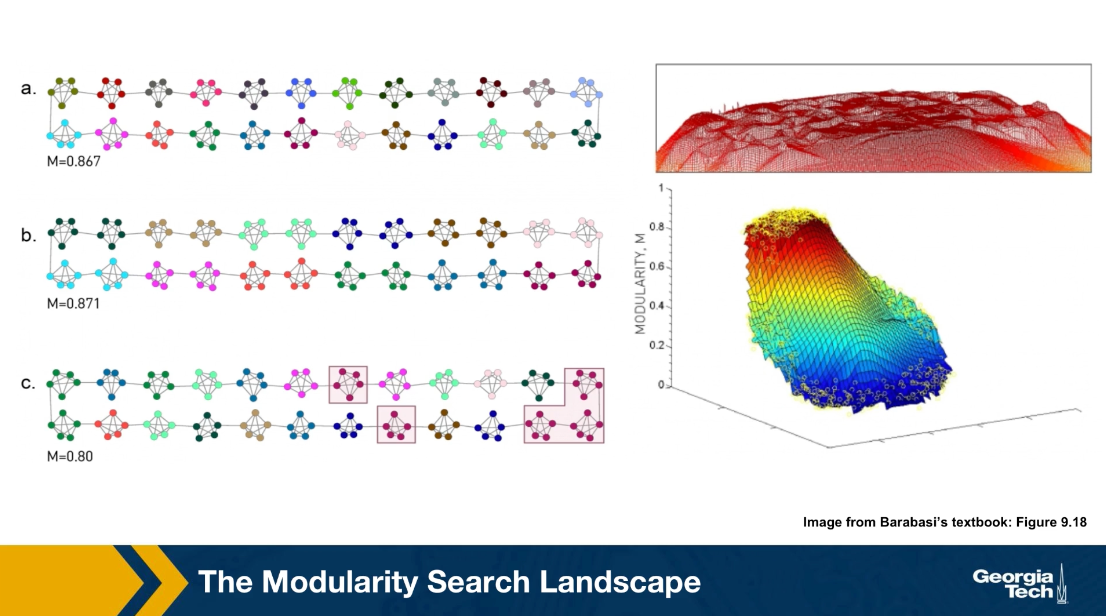

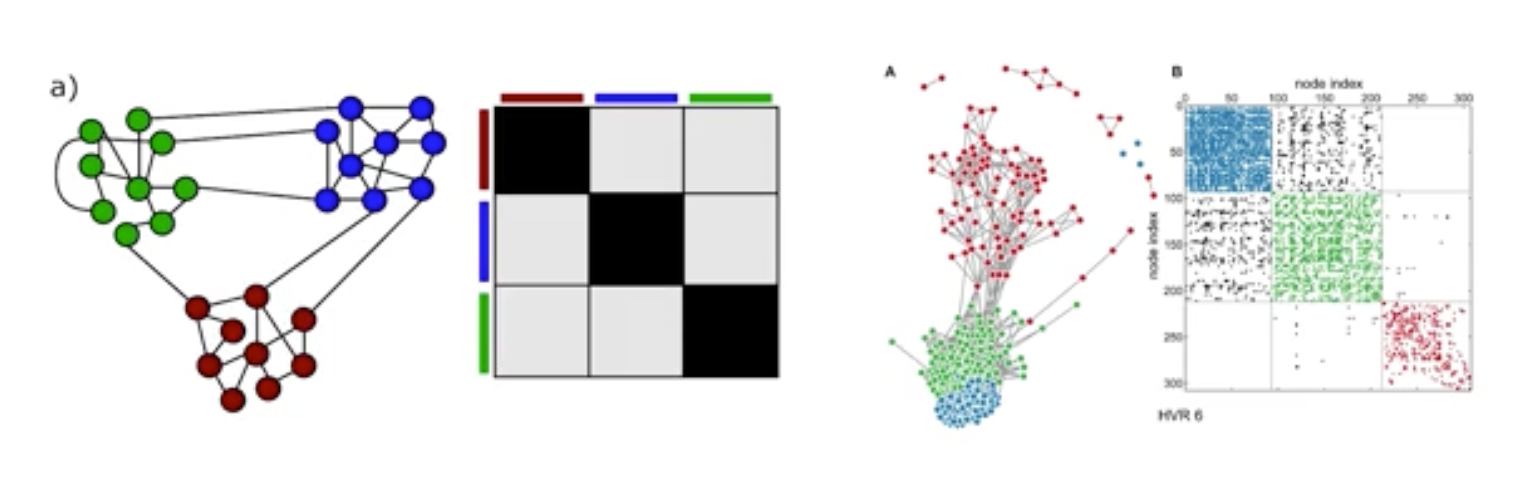

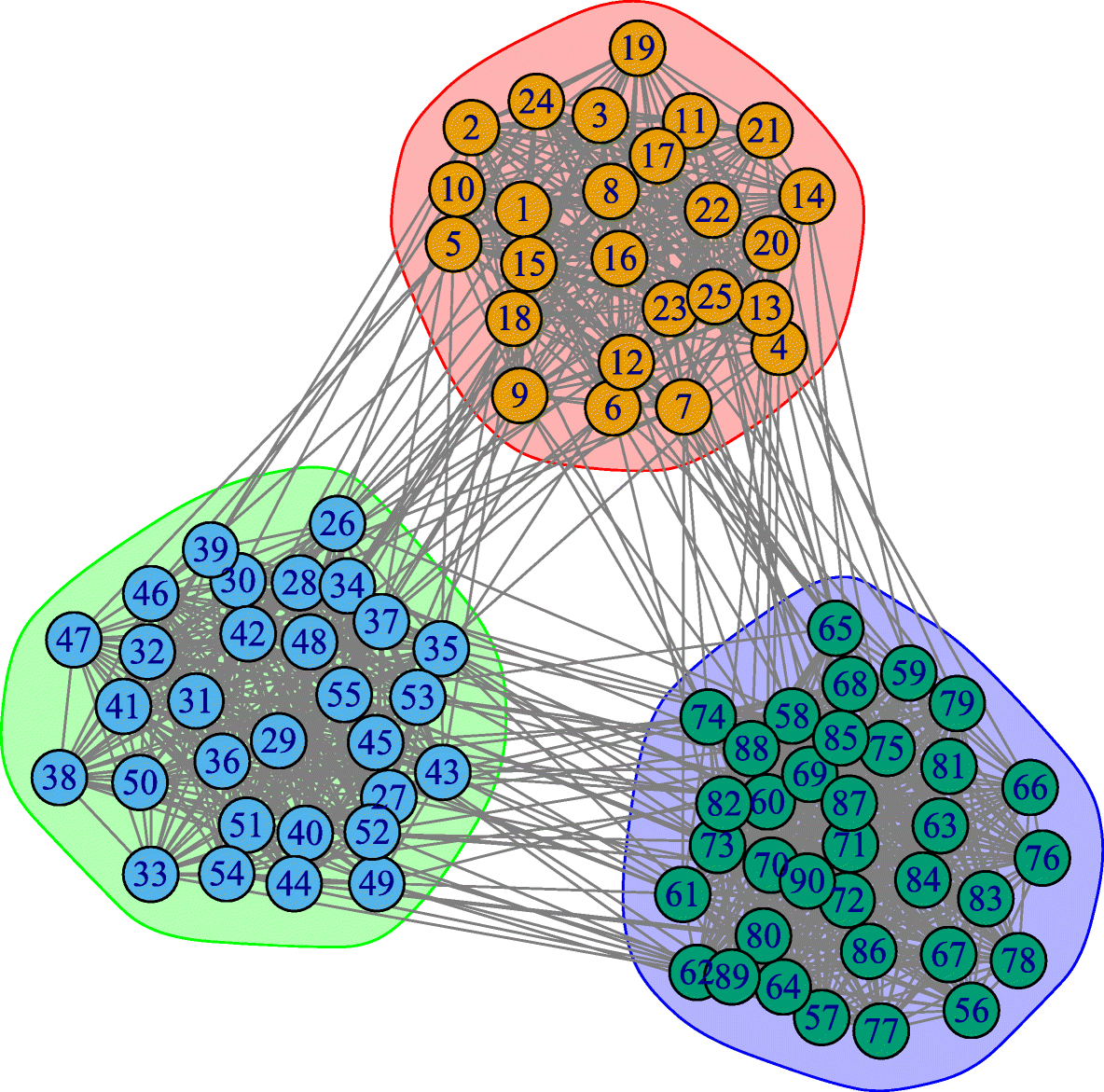

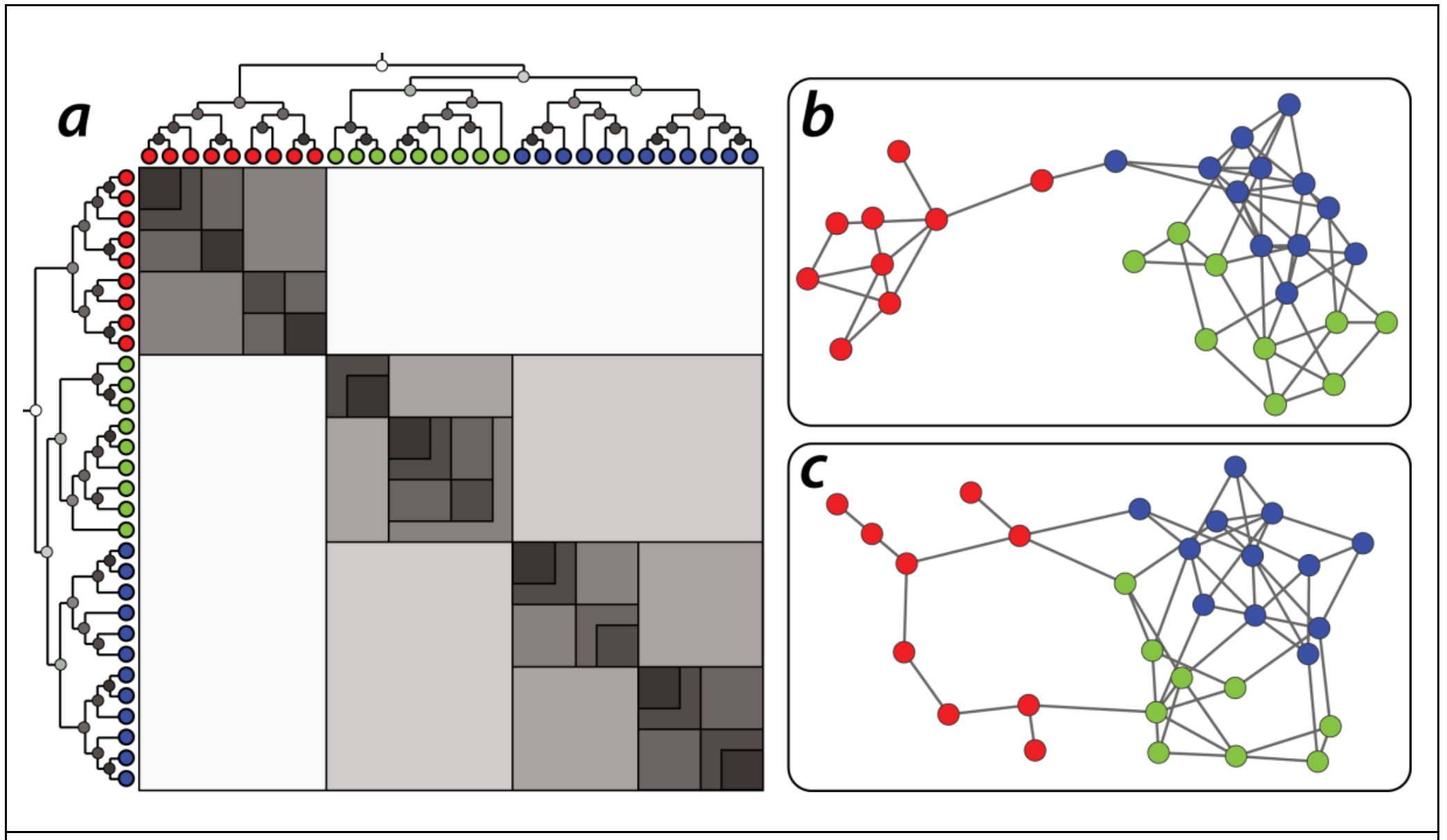

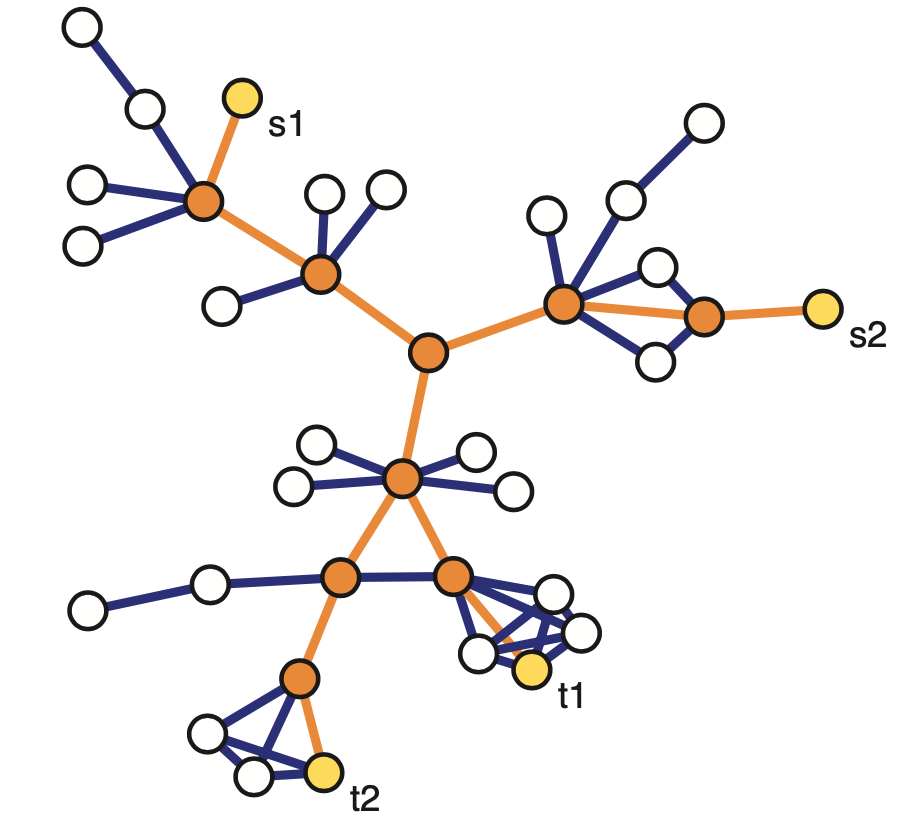

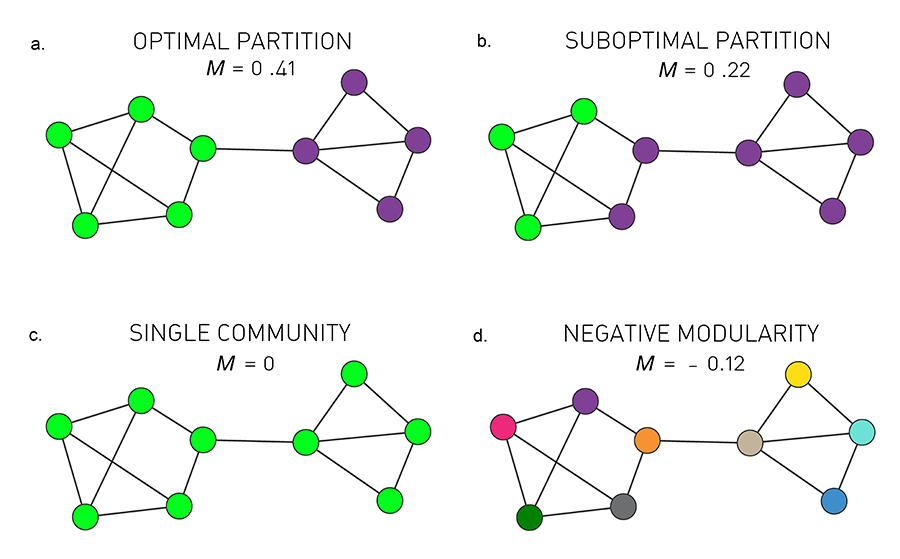

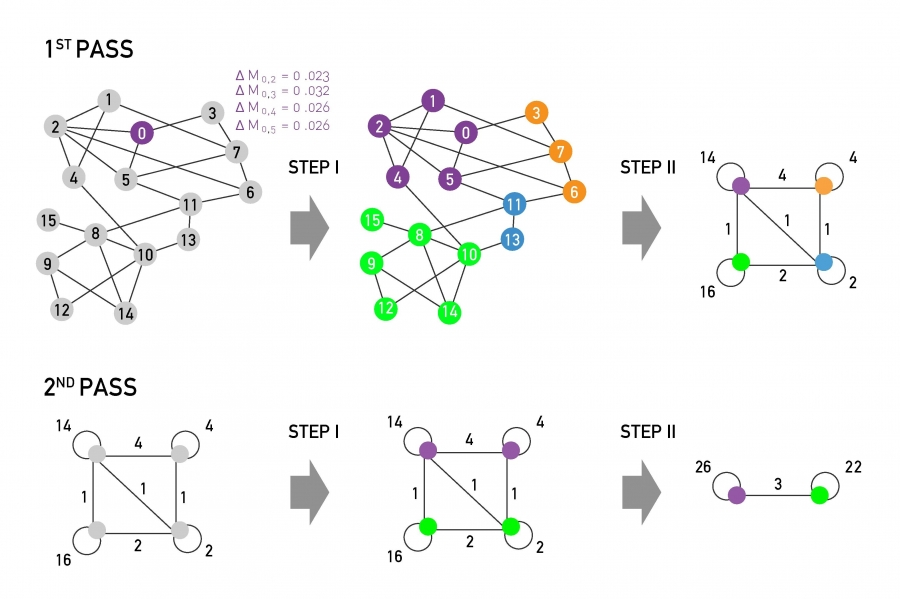

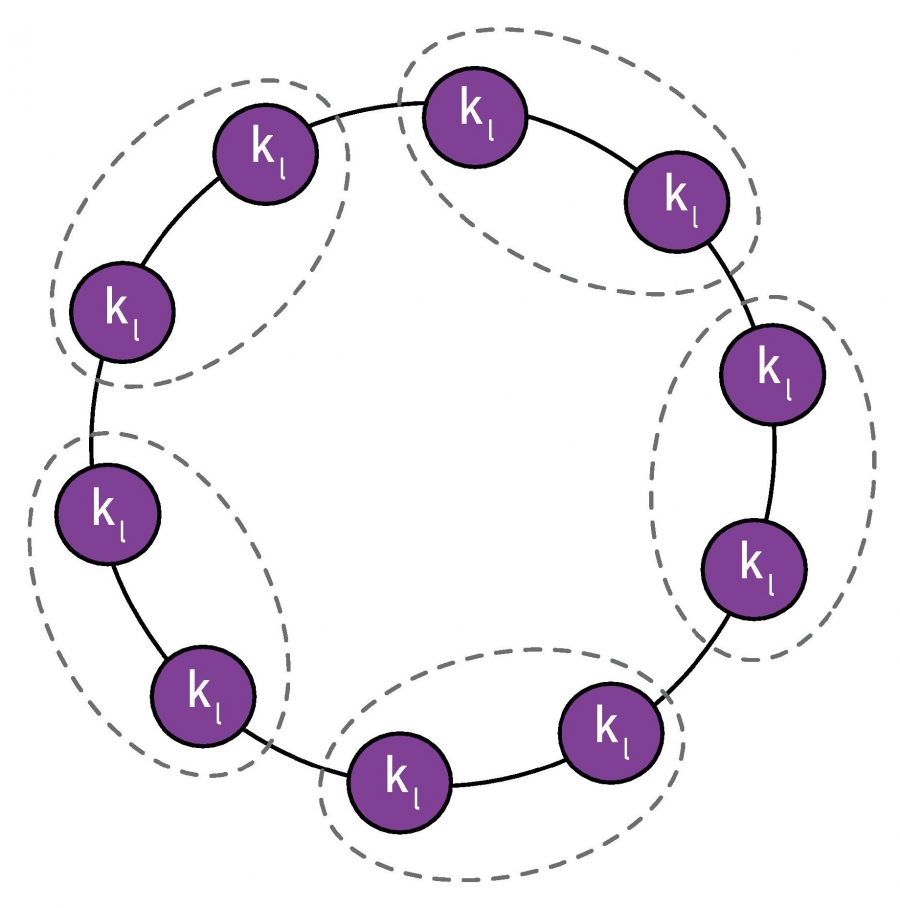

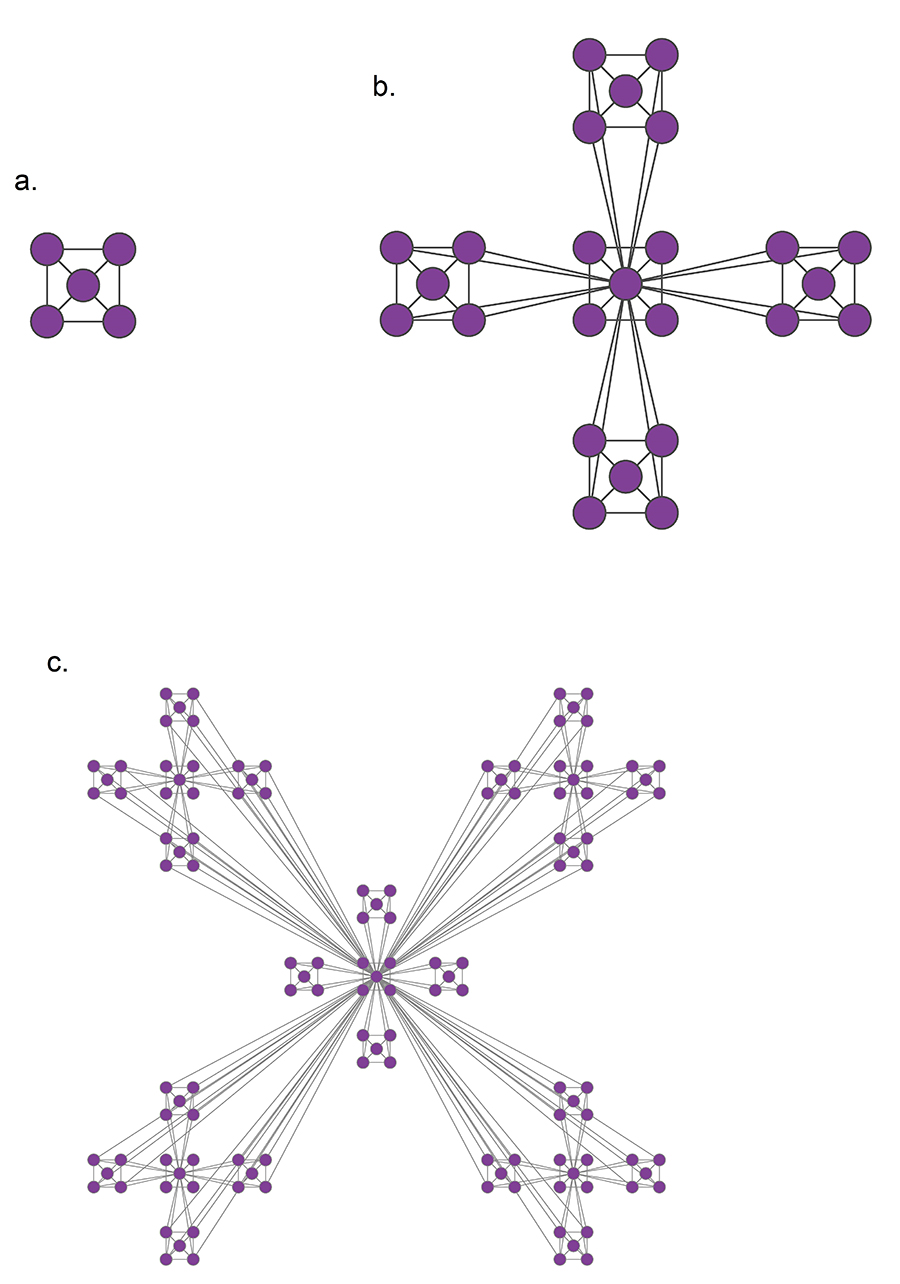

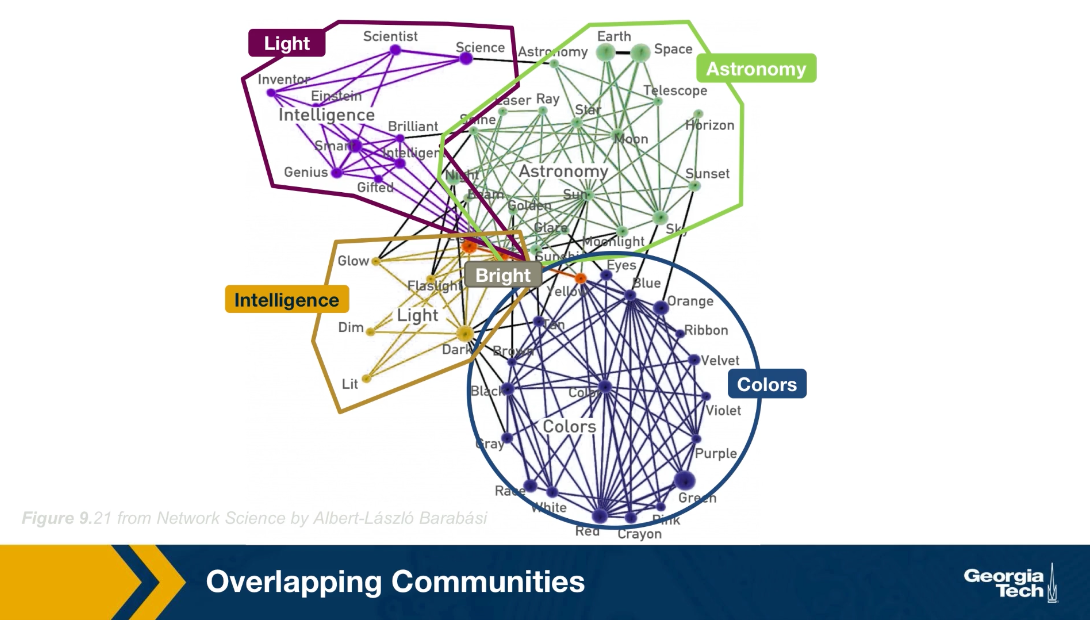

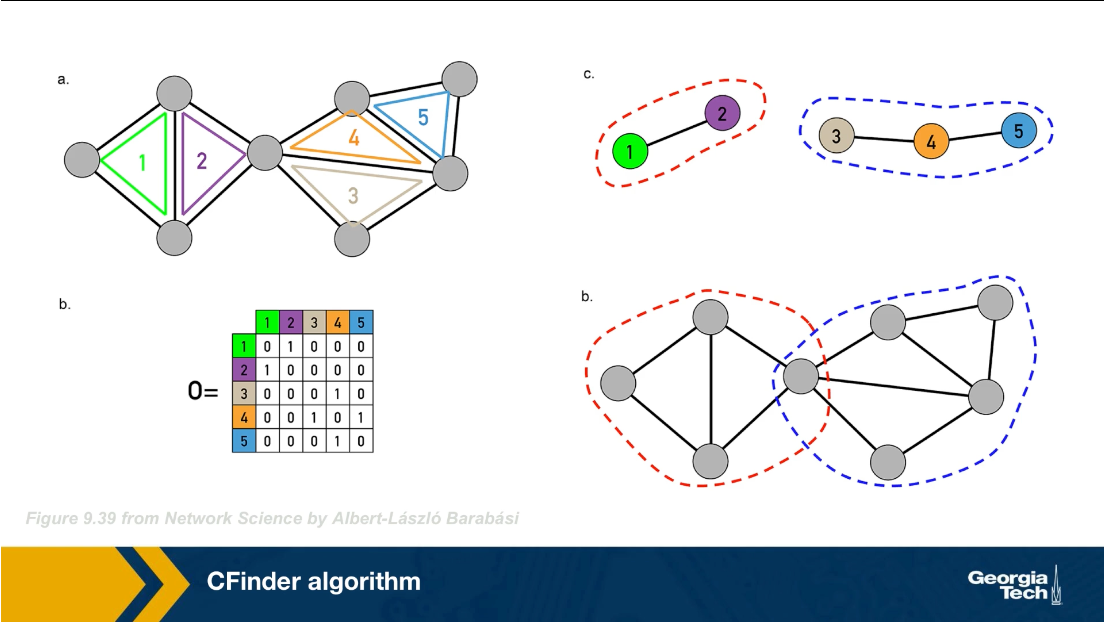

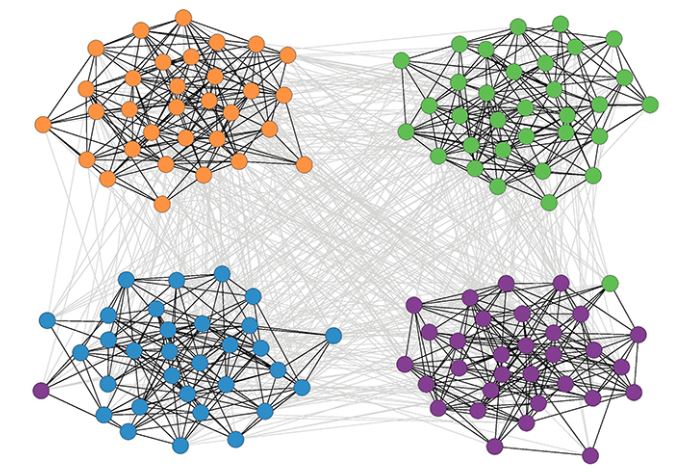

Another important problem in Network Science is to discover Communities – in other words, clusters of highly interconnected nodes. The density of the connections between nodes of the same community is much larger than the density of the connections between nodes of different communities.
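The density comparison in this definition can be sketched as follows. The function name, the example graph, and the community labels are hypothetical, and the sketch assumes at least two communities, each with at least two nodes, so that neither denominator is zero.

```python
from itertools import combinations

def intra_inter_density(edges, community):
    """Return (intra, inter) edge densities for a {node: community} mapping."""
    edge_set = {frozenset(e) for e in edges}
    intra_pairs = inter_pairs = intra_edges = inter_edges = 0
    for u, v in combinations(community, 2):
        linked = frozenset((u, v)) in edge_set
        if community[u] == community[v]:
            intra_pairs += 1
            intra_edges += linked
        else:
            inter_pairs += 1
            inter_edges += linked
    return intra_edges / intra_pairs, inter_edges / inter_pairs

# Hypothetical example: two triangles joined by the single edge (2, 3).
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
community = {0: "A", 1: "A", 2: "A", 3: "B", 4: "B", 5: "B"}
intra, inter = intra_inter_density(edges, community)
print(f"intra-community density = {intra:.2f}, inter-community density = {inter:.2f}")
```

Here all 6 node pairs inside a community are linked, while only 1 of the 9 pairs across communities is linked.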

Returning to the previous Game of Thrones visualization, each color represents a different community – with a total of 7 communities of different sizes.

For those of you that are familiar with the book or TV show (mostly seasons 3 and 4), these communities make a lot of sense. Up to that point in the story, Daenerys, for instance, was mostly interacting with the Dothrakis and with Barristan, while Jon was mostly interacting with characters at Castle Black.

There are many algorithms for Community Detection – and some of them are able to identify nodes that participate in more than one community. We will discuss such algorithms later in the course.

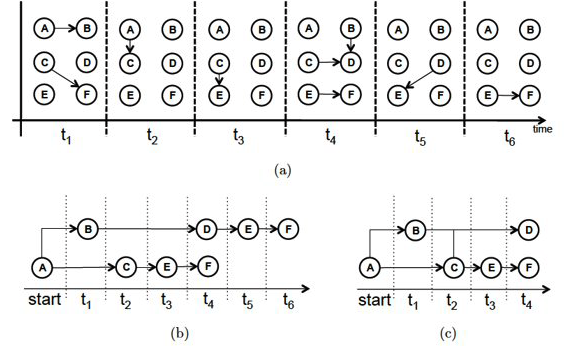

Dynamics of Networks

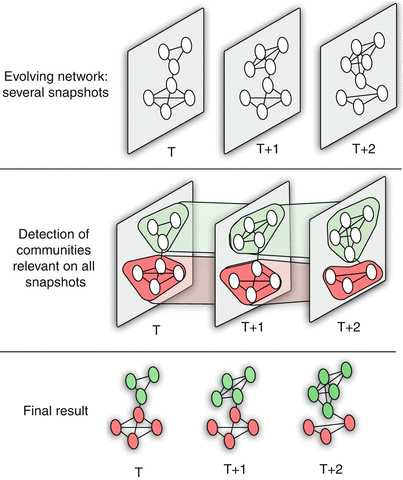

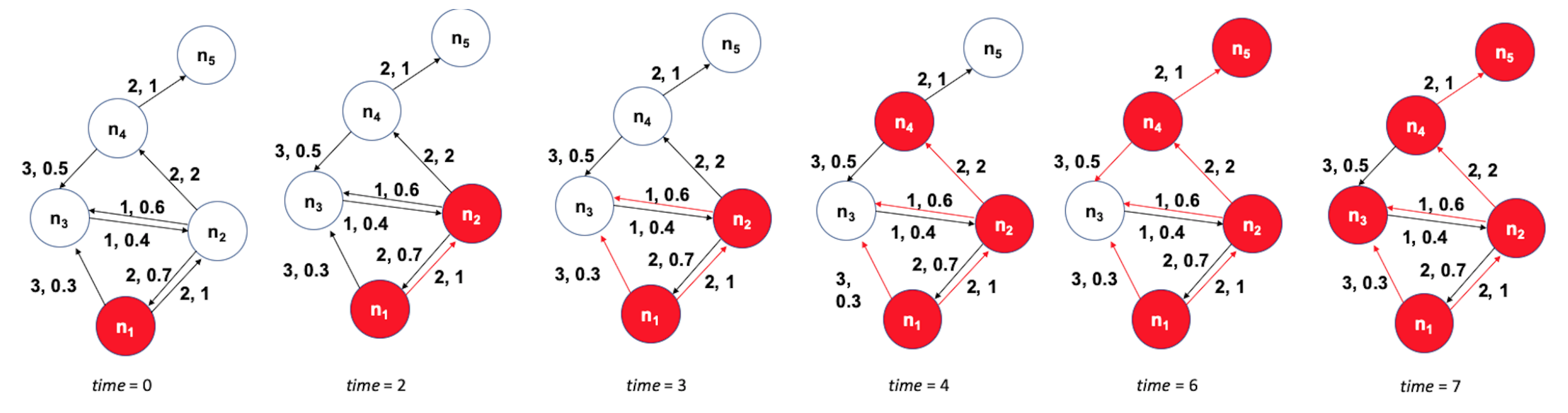

An important component of Network Science is the focus on Dynamic Networks – systems that change over time through natural evolution, growth or other dynamic rewiring processes.

For example, the brain’s neural network is changing dramatically during adolescence – but more recent research in neuroscience shows that brain connections also change when people learn something new or even when they meditate.

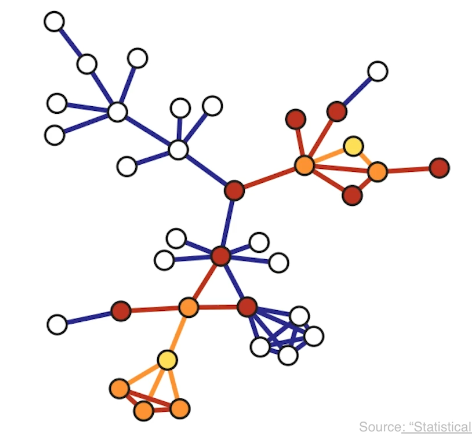

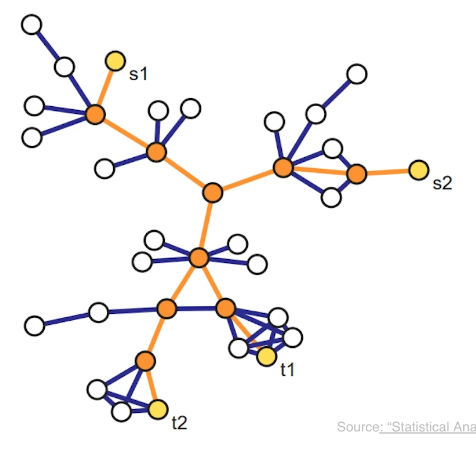

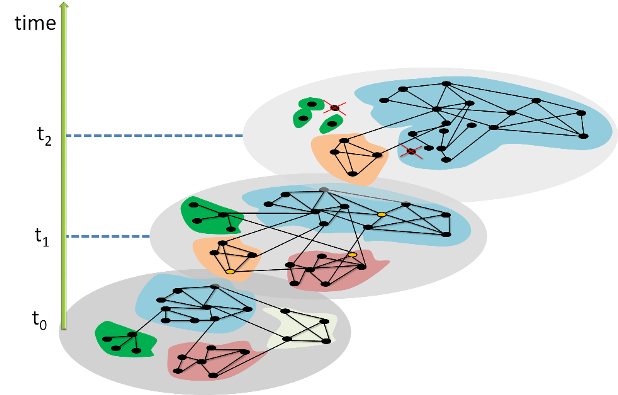

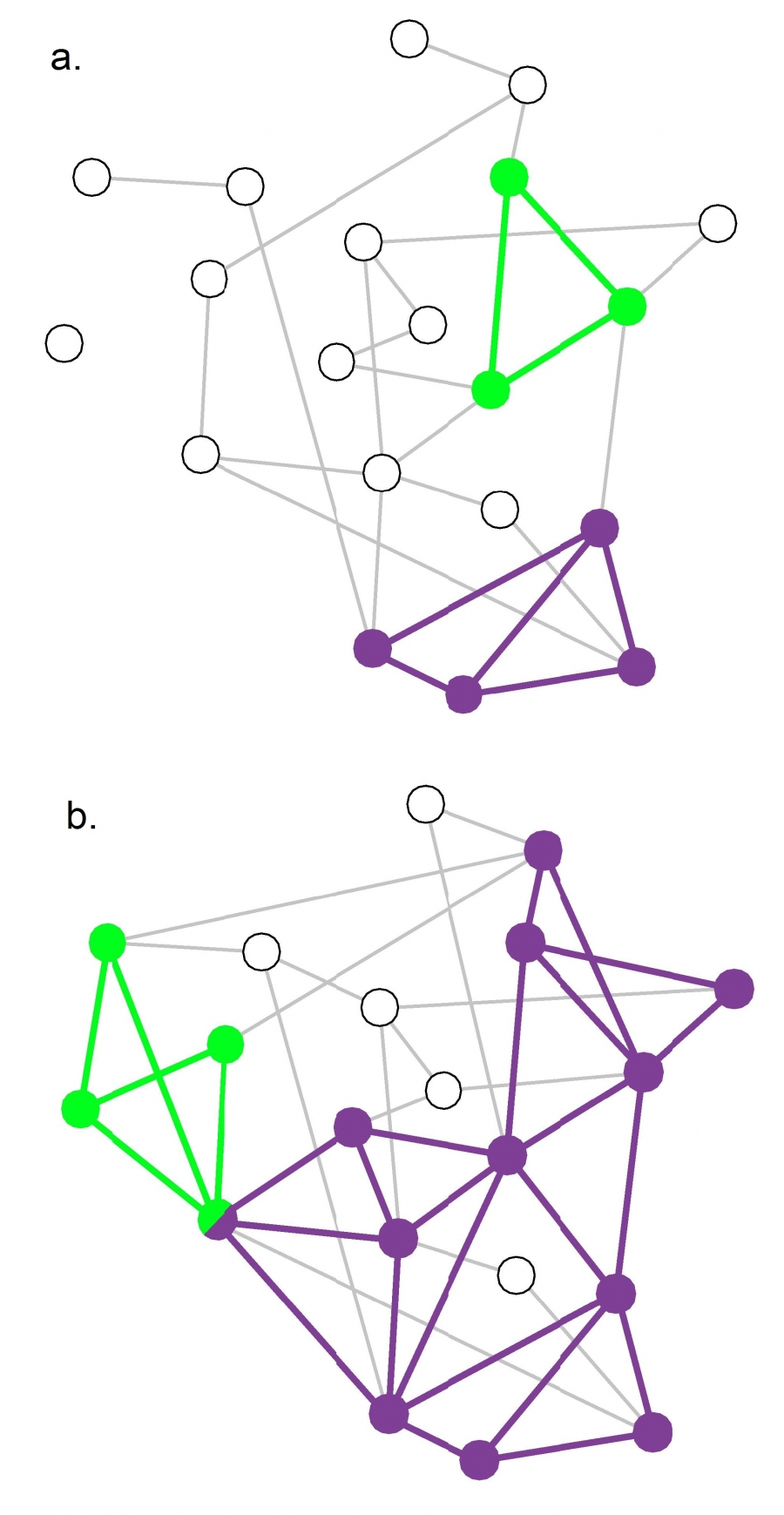

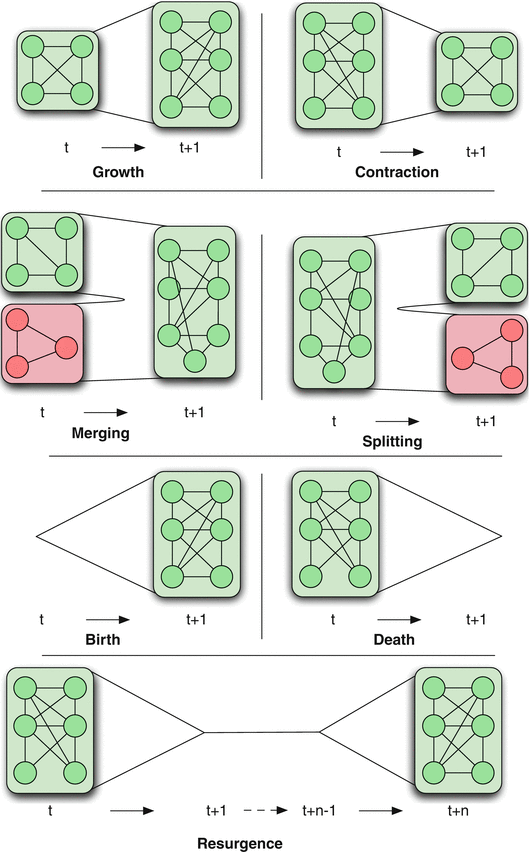

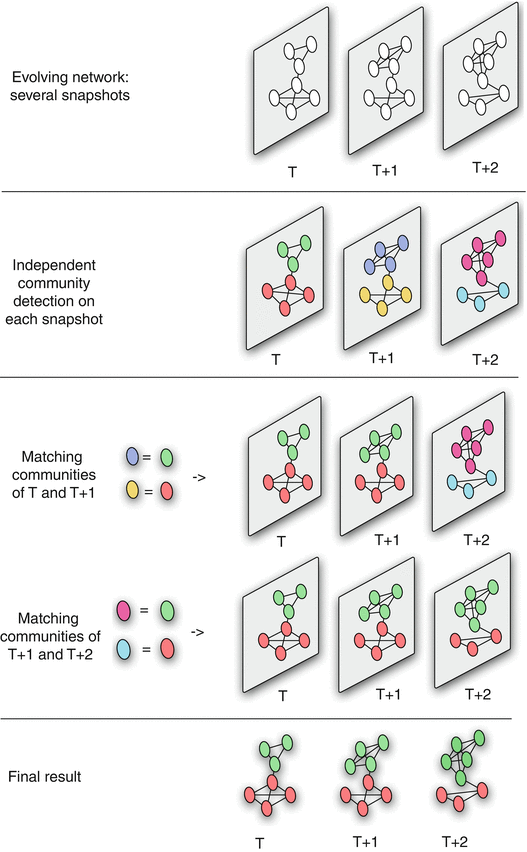

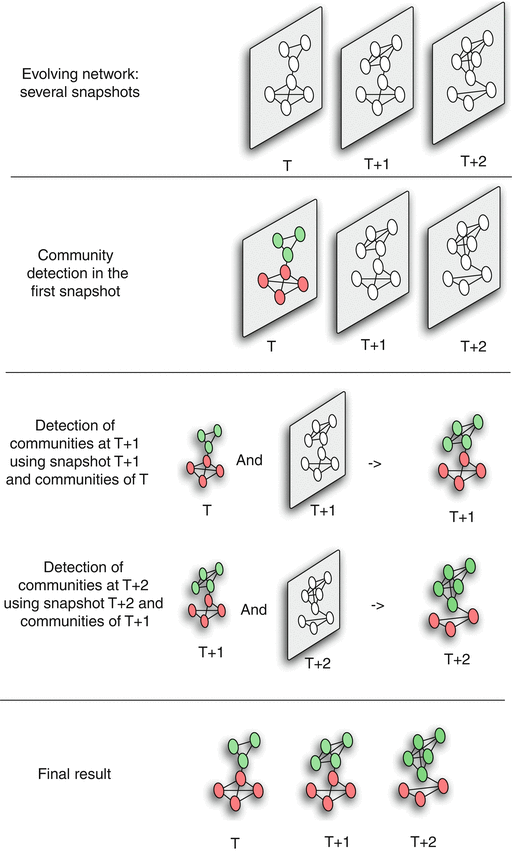

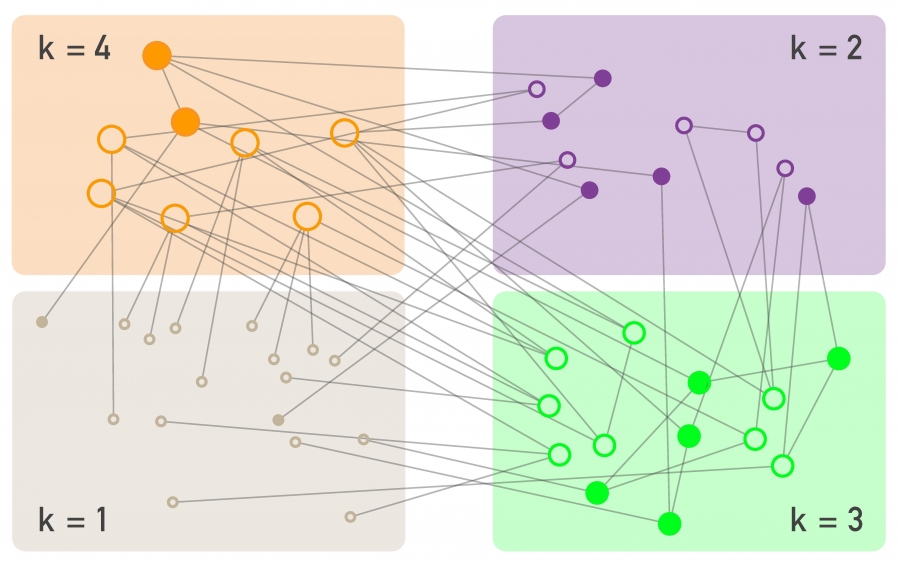

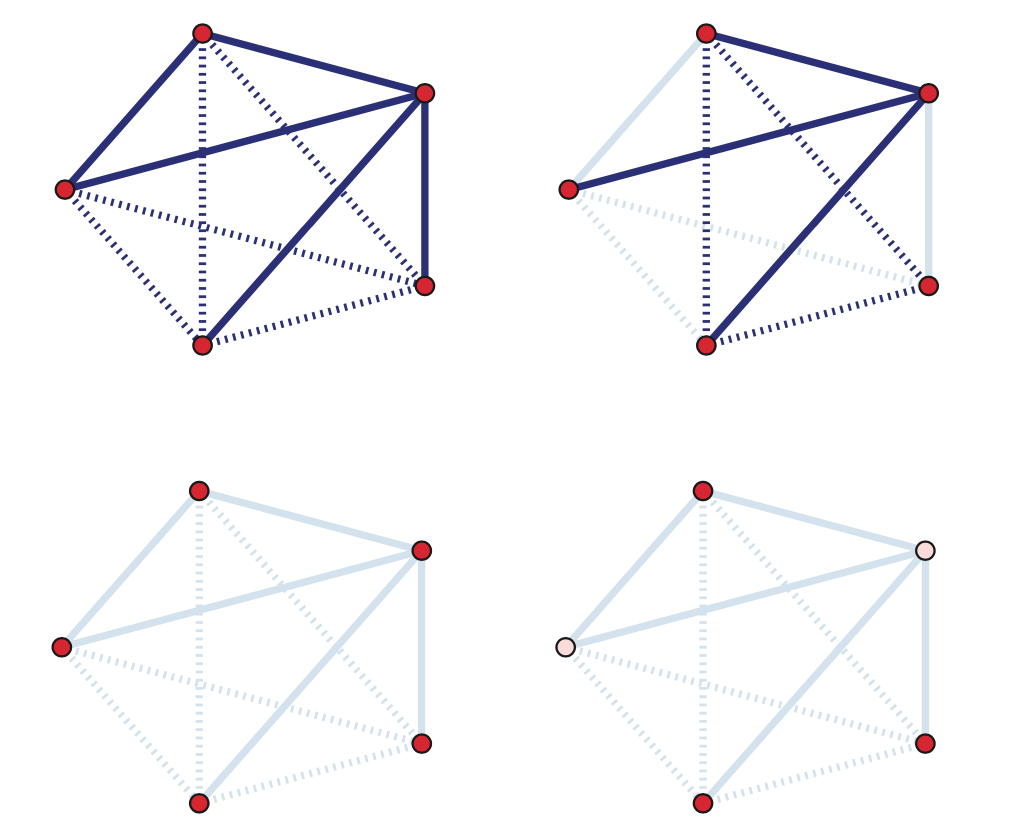

The image above shows how the community structure of a network may be changing over time. (Image Source: The University of Florida)

Note that the white and red communities are gradually absorbed by the blue and the green community gradually collapses.

We will study algorithms that can detect and quantify such dynamic processes in networks.

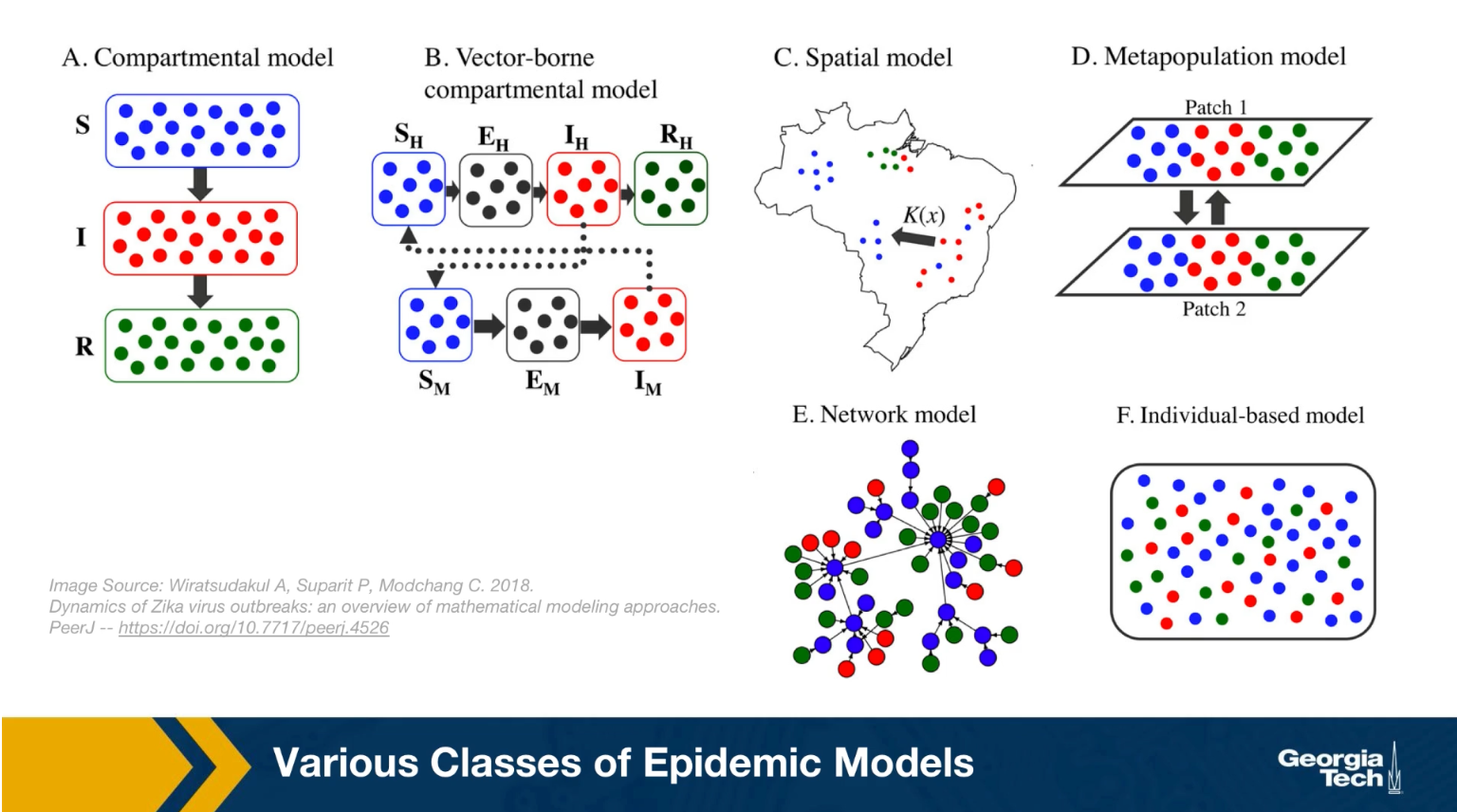

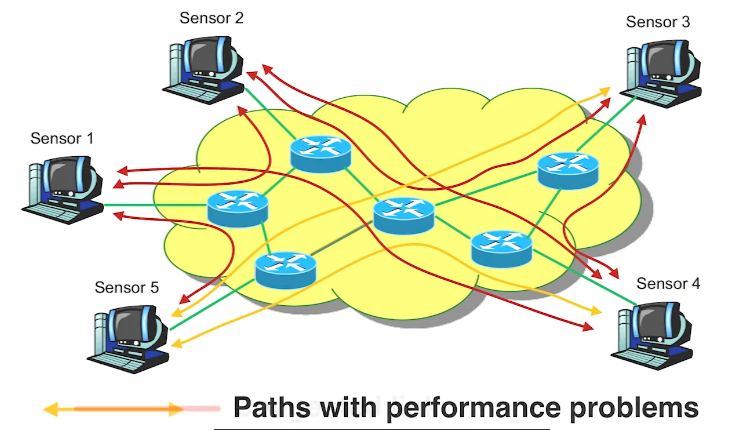

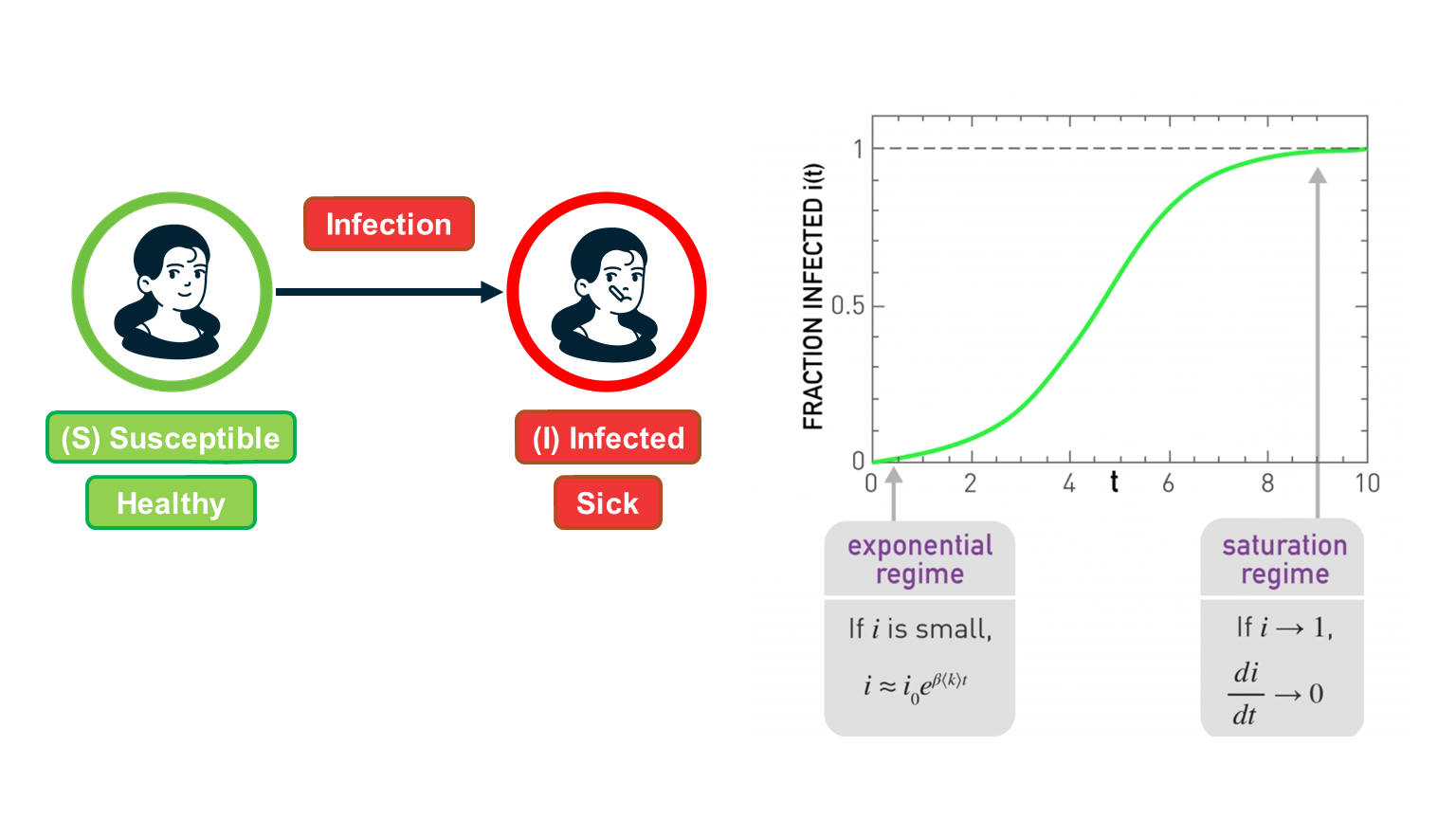

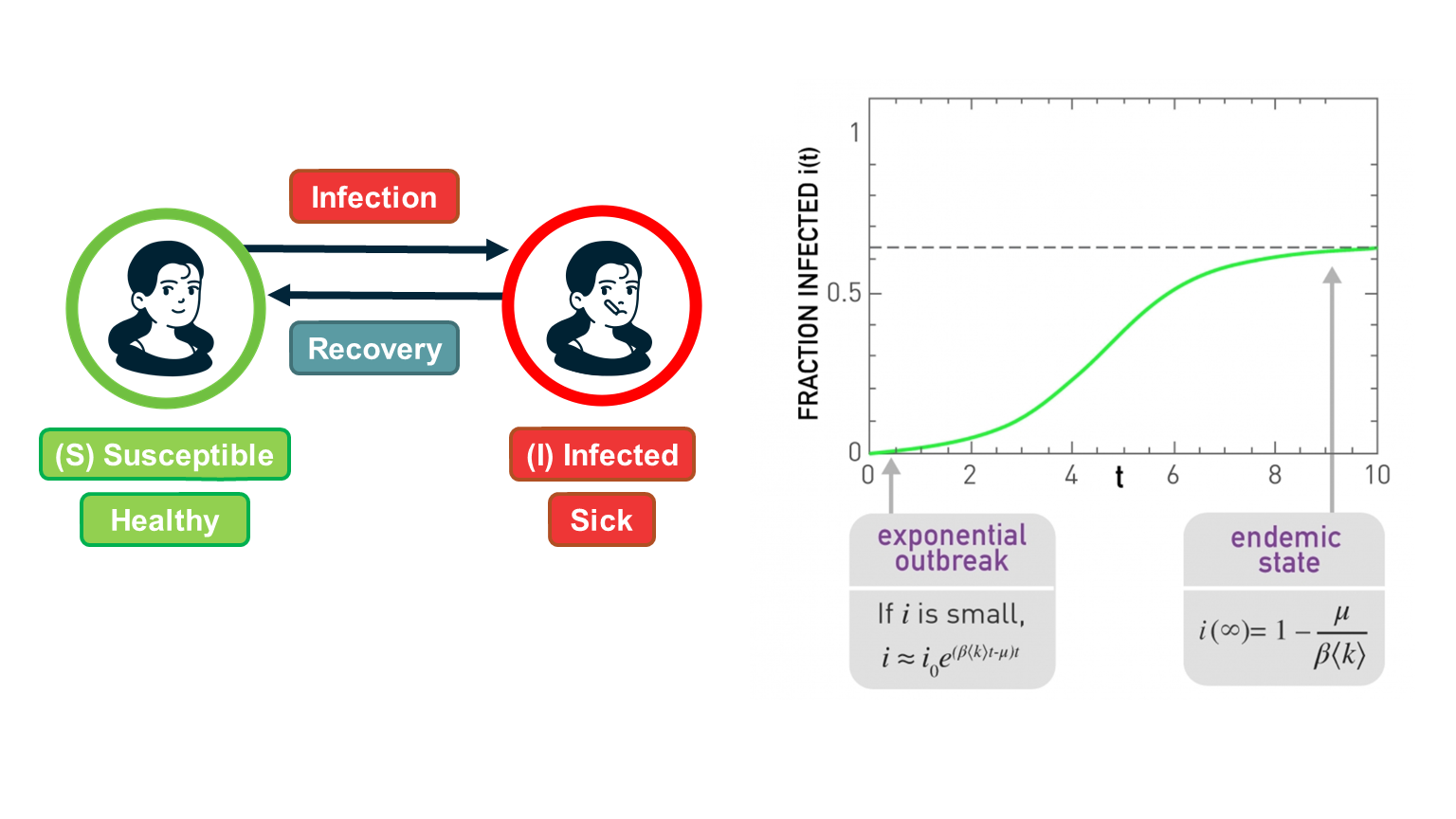

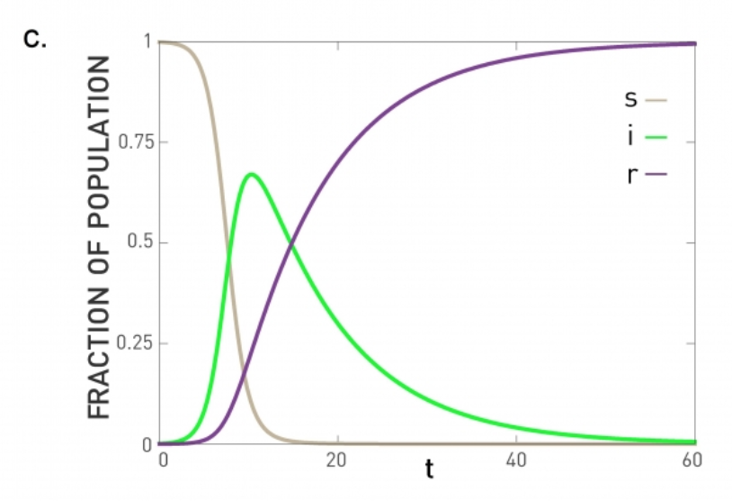

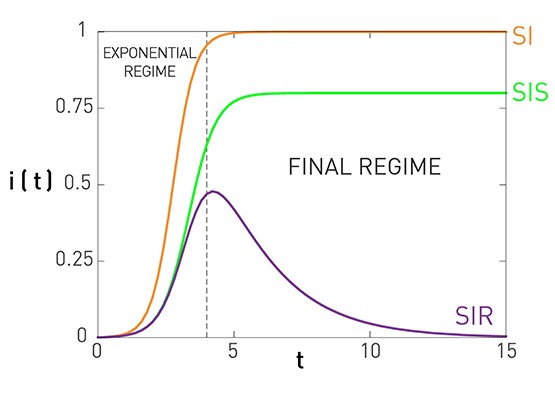

Another important problem in Network Science is the study of Dynamic Processes on Networks. Here, the network structure remains the same – but there is a dynamic process that is gradually unfolding on that network.

For example, the process may be an epidemic that spreads through an underlying social network.
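A minimal sketch of such a process is a Susceptible-Infected (SI) simulation on a fixed network. This is a deliberately simplified model with only two states; the per-contact infection probability `beta`, the ring-shaped contact network, and the random seed are assumptions made for illustration.

```python
import random

def simulate_si(adj, seed_node, beta, steps, rng_seed=0):
    """Susceptible-Infected spread on a fixed network.

    adj: dict {node: list of neighbors}; beta: probability that an infected
    node infects a given susceptible neighbor in one time step.
    Returns the number of infected nodes after each step.
    """
    rng = random.Random(rng_seed)
    infected = {seed_node}
    history = [len(infected)]
    for _ in range(steps):
        newly_infected = set()
        for u in infected:
            for v in adj[u]:
                if v not in infected and rng.random() < beta:
                    newly_infected.add(v)
        infected |= newly_infected   # the network itself never changes
        history.append(len(infected))
    return history

# Hypothetical contact network: a ring of 10 people.
ring = {i: [(i - 1) % 10, (i + 1) % 10] for i in range(10)}
print(simulate_si(ring, seed_node=0, beta=0.5, steps=10))
```

Running the same function on networks with different structure (for example, with a few highly connected nodes) is one way to explore how the topology affects the speed of the spread.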

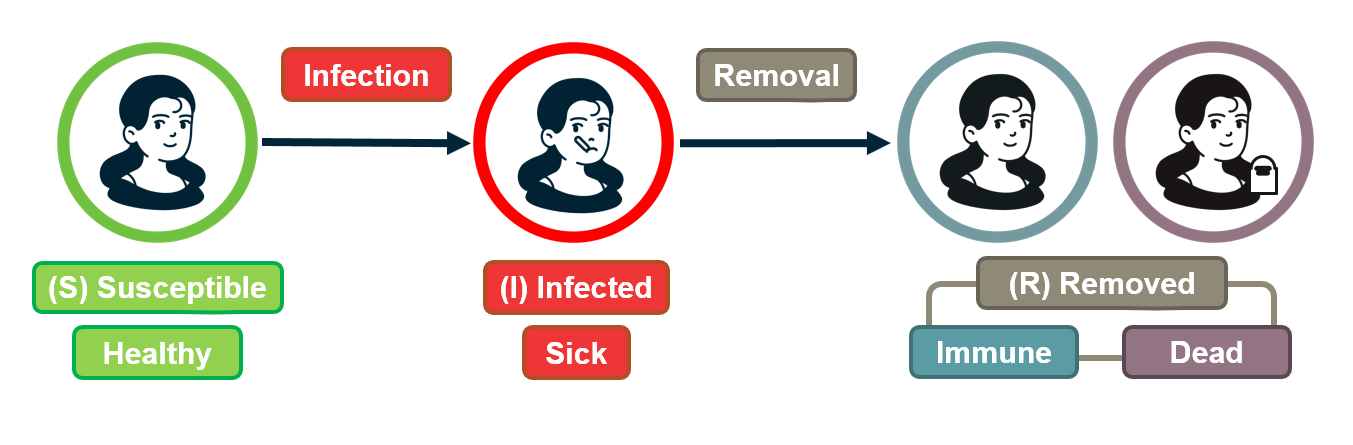

For certain viruses, such as HIV, the state of each human can be one of the following: healthy but susceptible to the virus, infected by the virus but not yet sick, or sick (symptomatic).

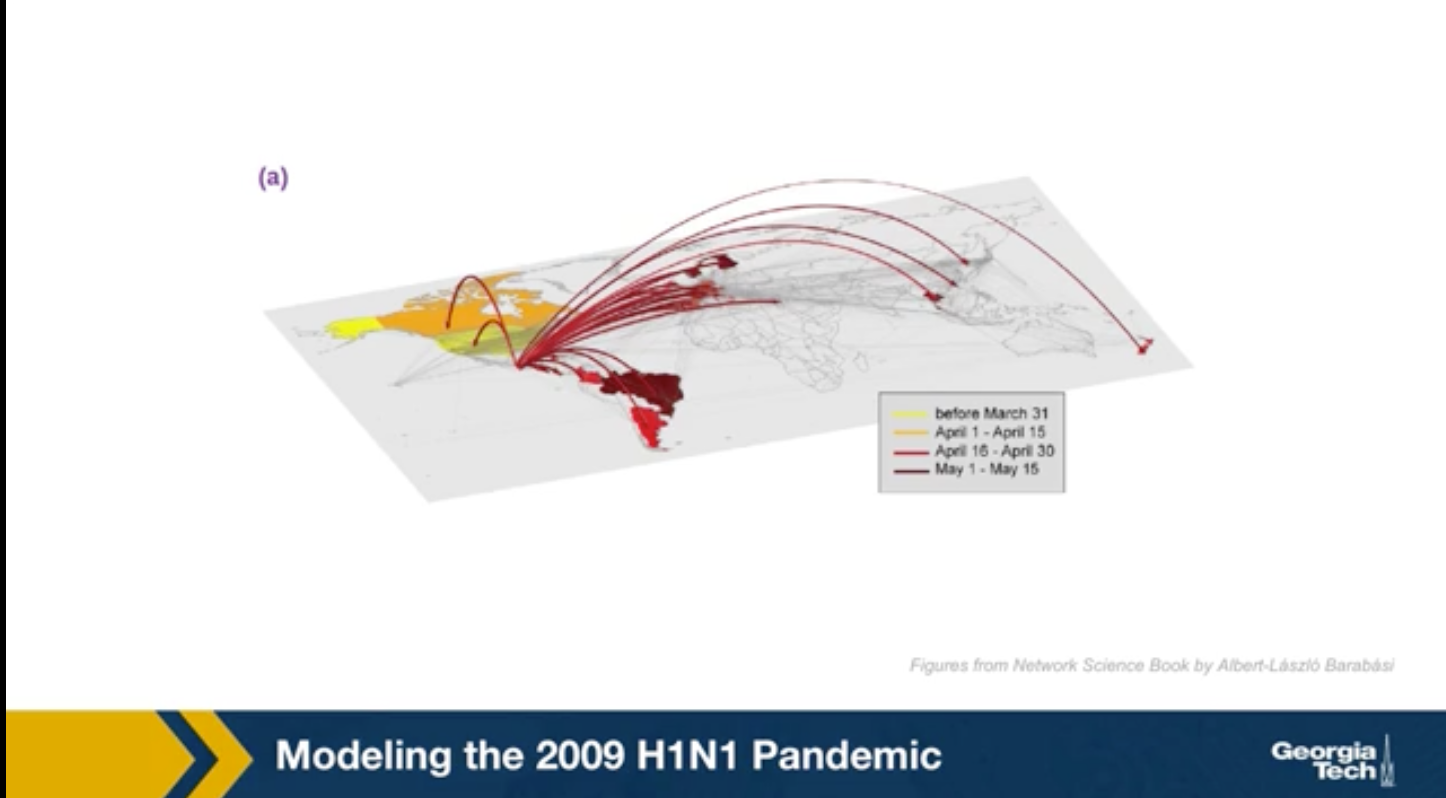

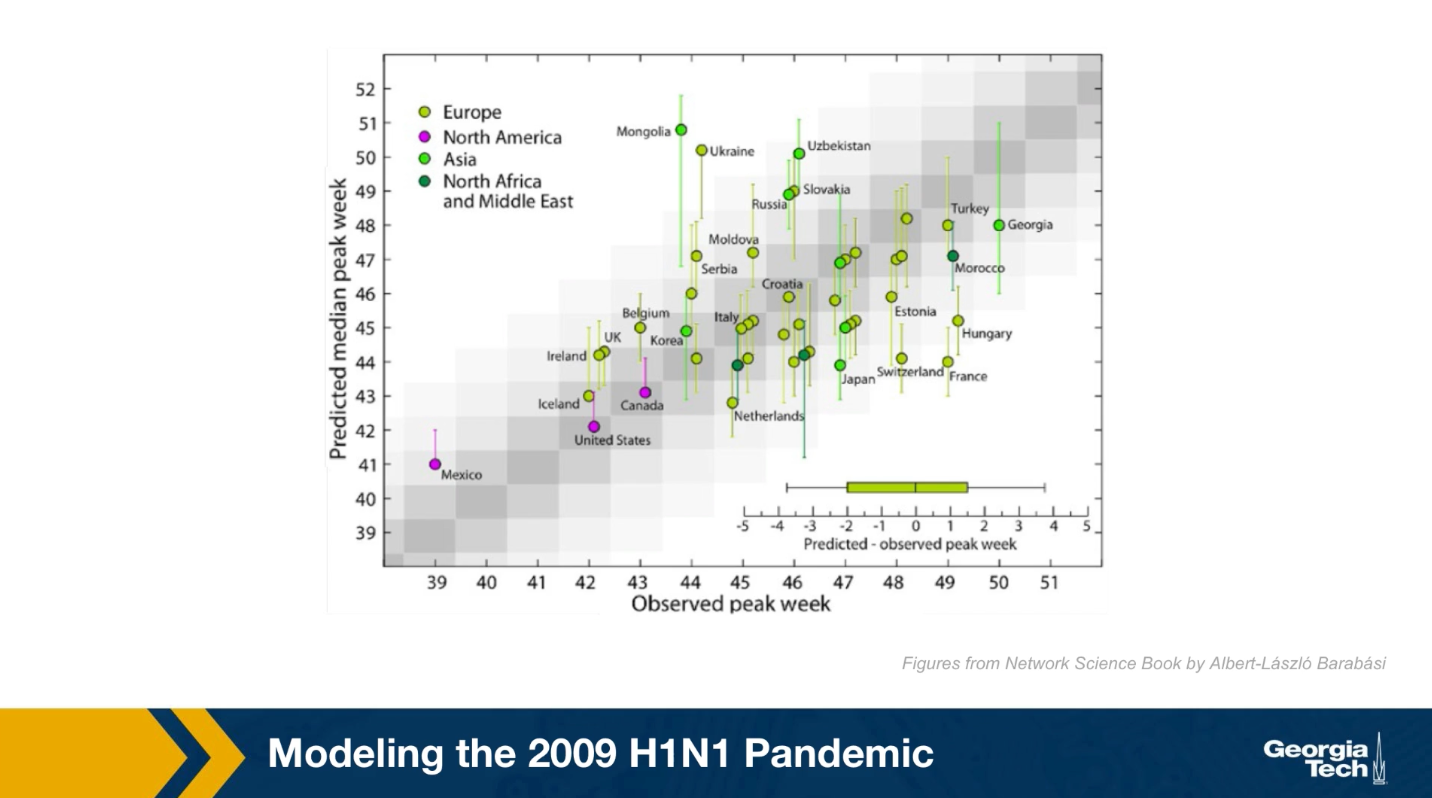

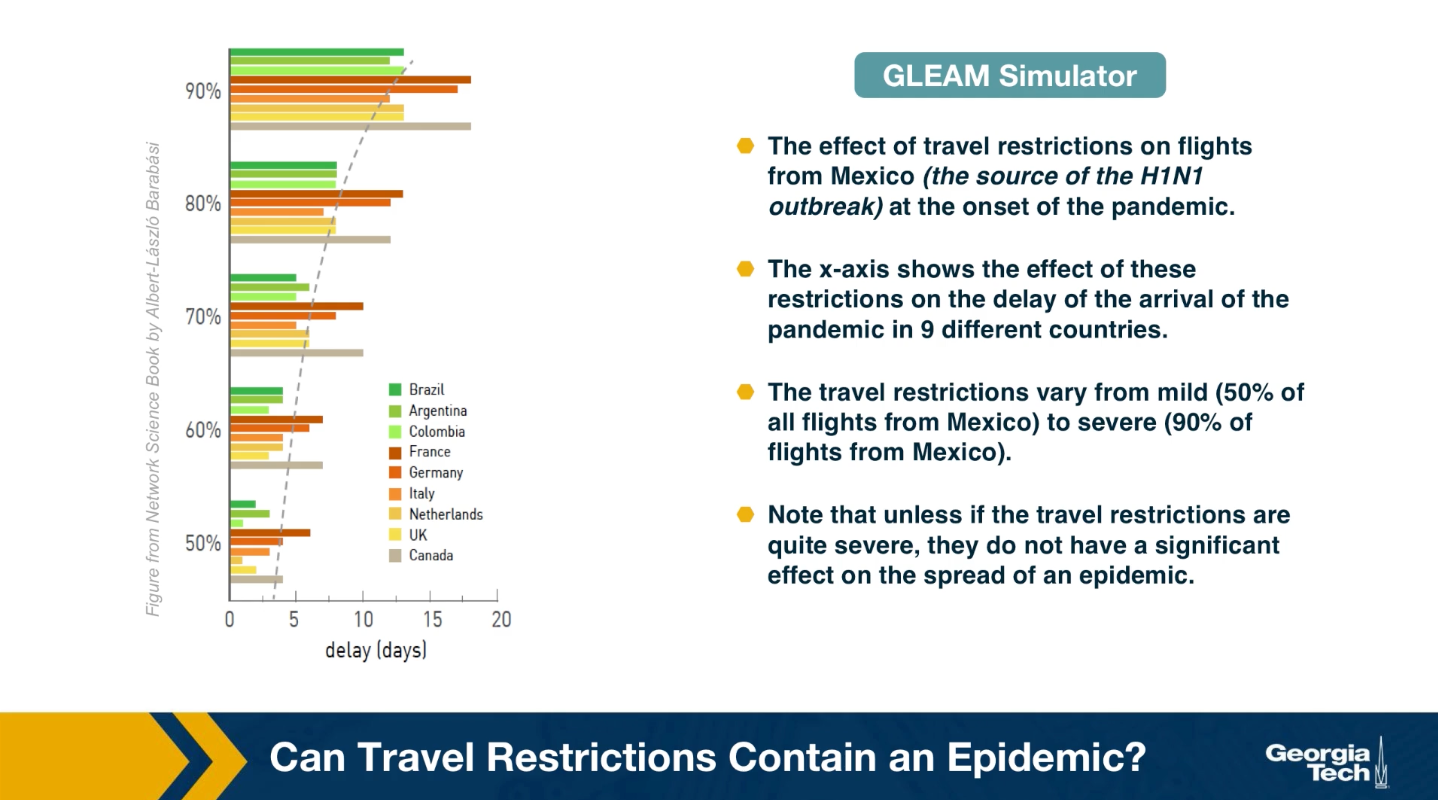

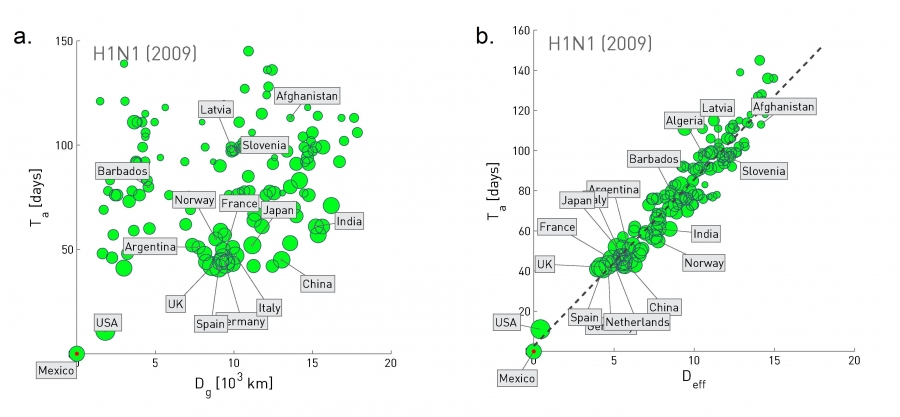

The video above shows a simulation of the spread of the H1N1 virus over the global air transportation network. The H1N1 outbreak started in Mexico in 2009 and it quickly spread throughout the world mostly through air transportation.

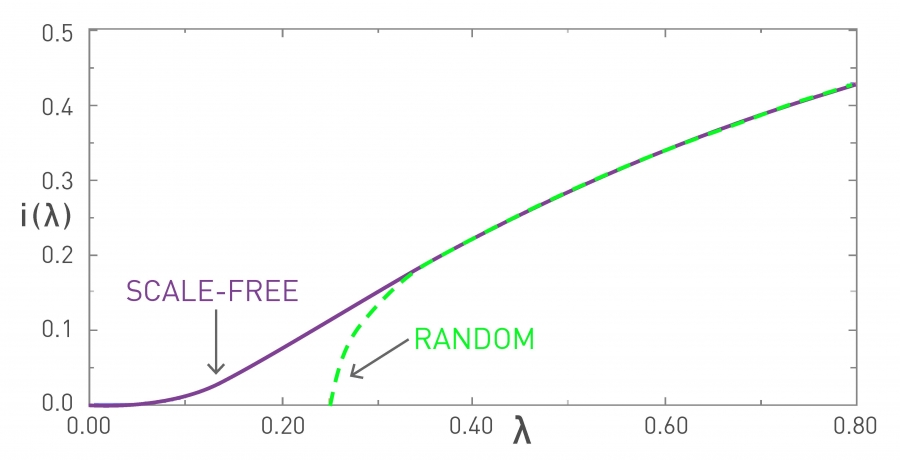

An important question in Network Science: how does the structure of the underlying network affect the spread of such epidemics?

As we will see later in the course, certain network properties enhance the spread of epidemics to the point that they can become pandemics before any intervention is possible. The only way to prevent such pandemics is through immunizations when they are available.

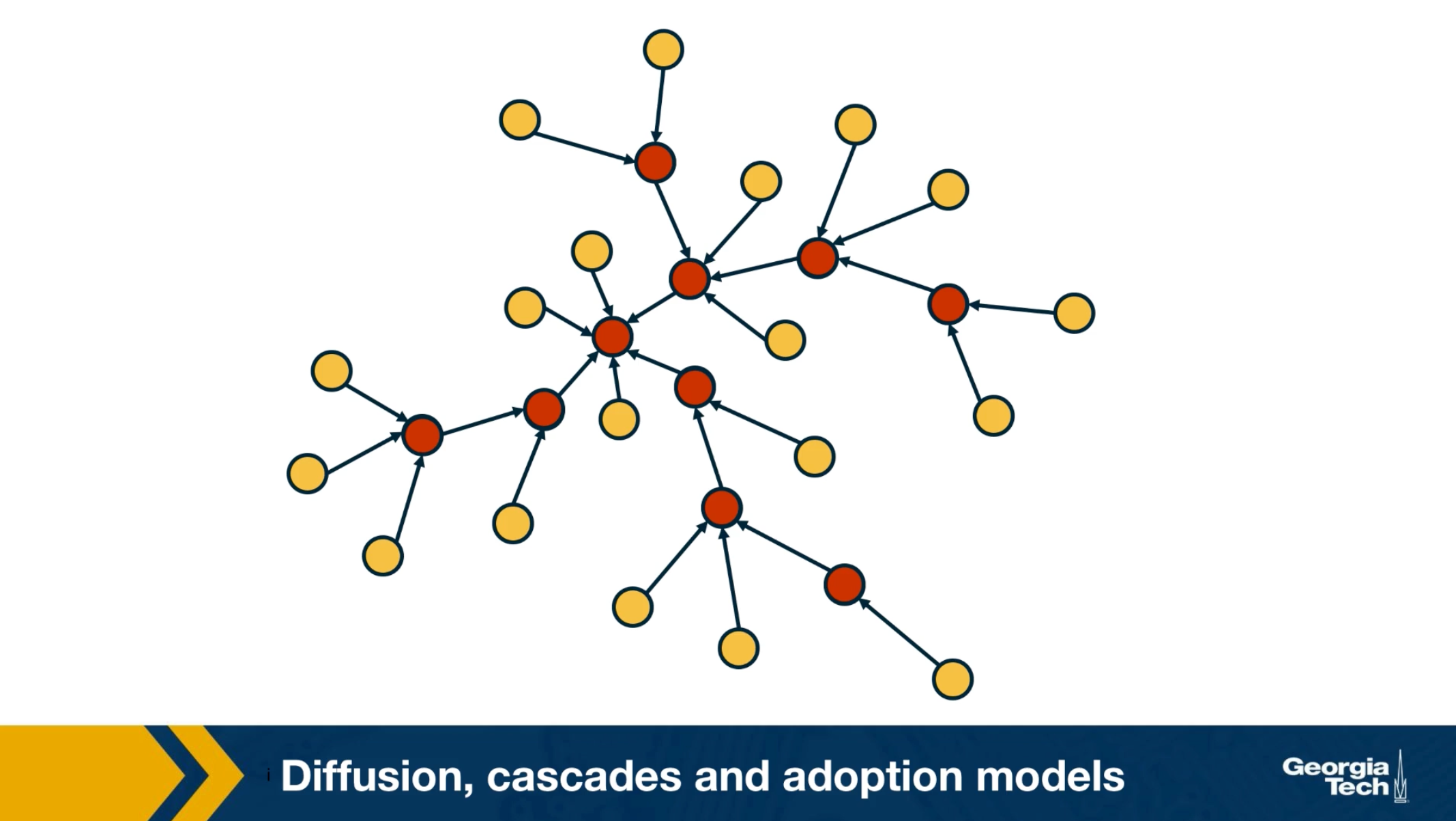

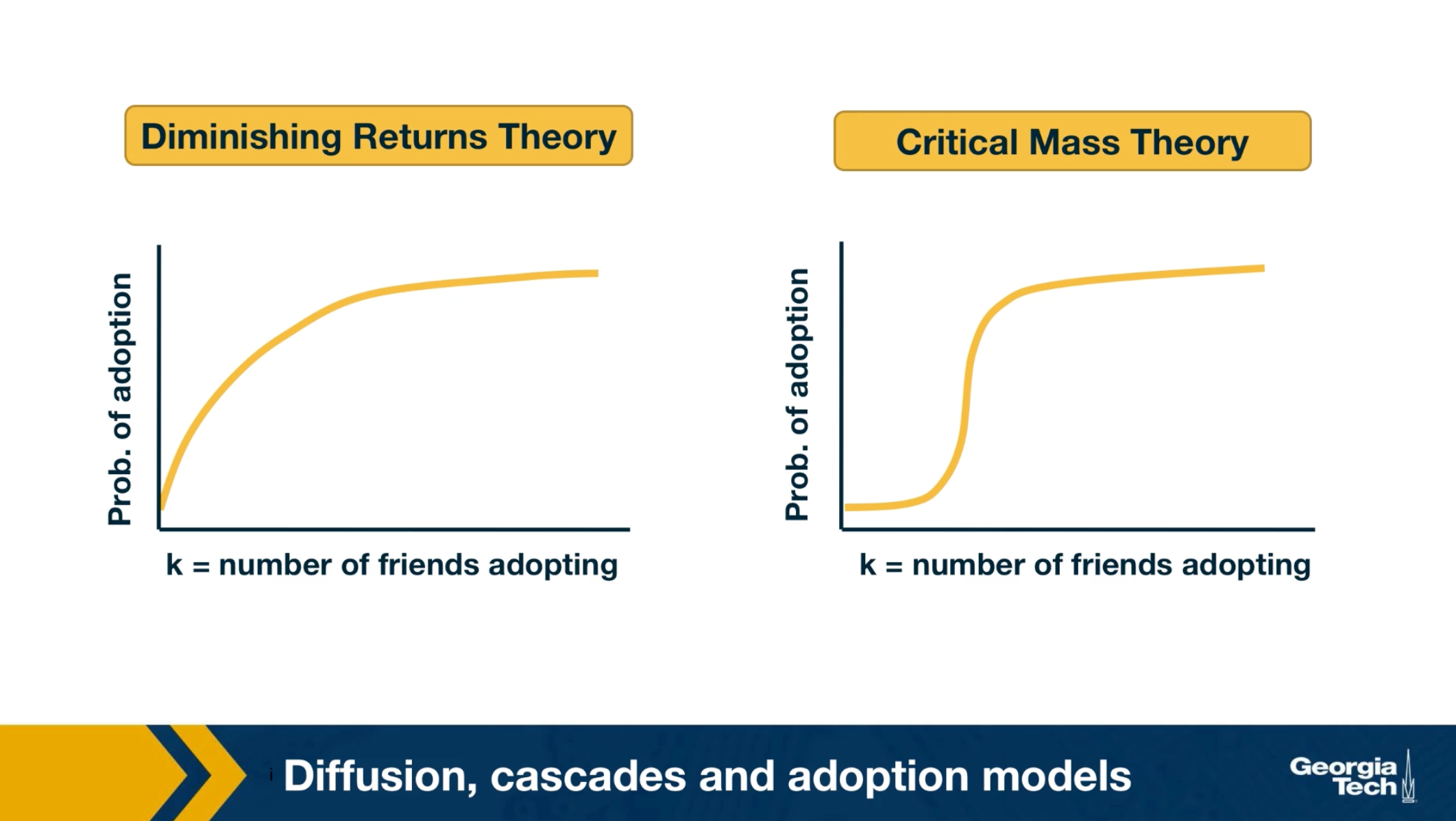

Influence and Cascade Phenomena

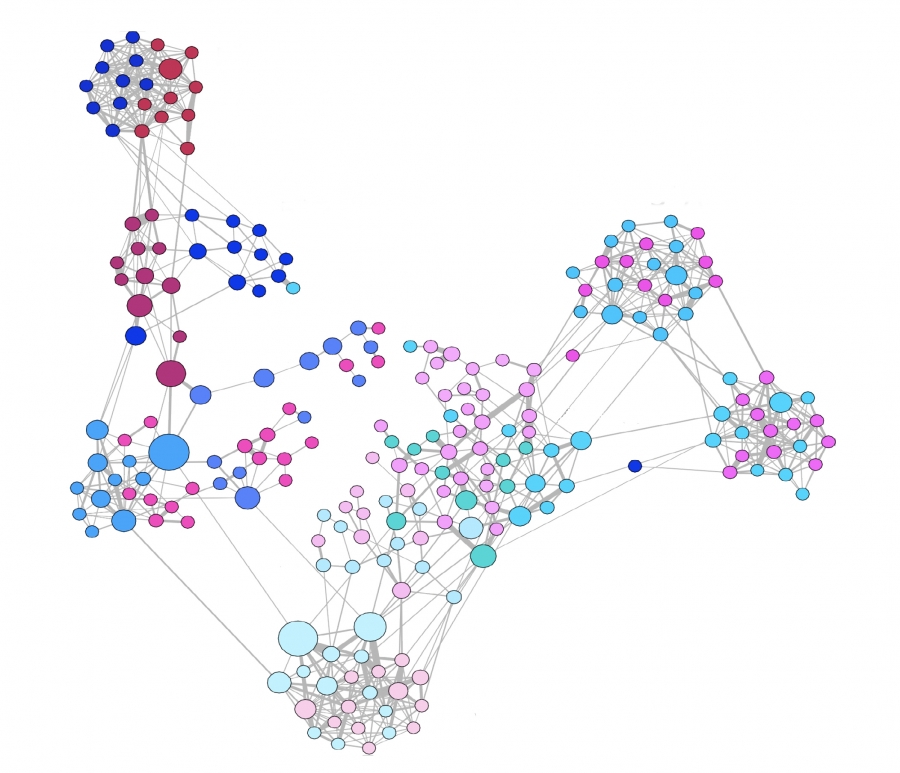

The dynamic processes that take place on a network are often not physical. For example, ideas, opinions, and other social trends and hypes can also spread through networks – especially over online social networks.

We will study such influence or “information contagion” phenomena in the context of mostly Facebook and Twitter.

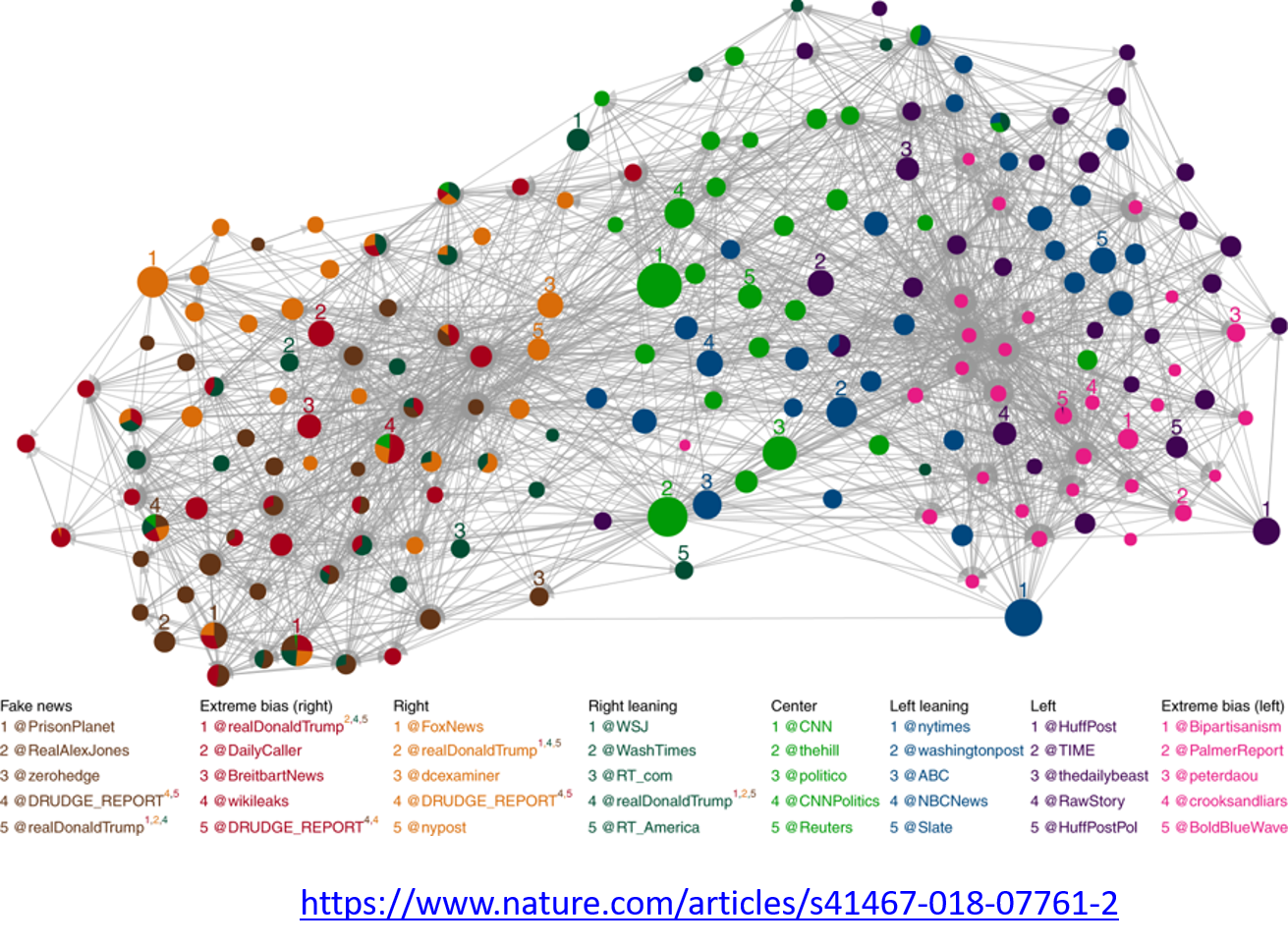

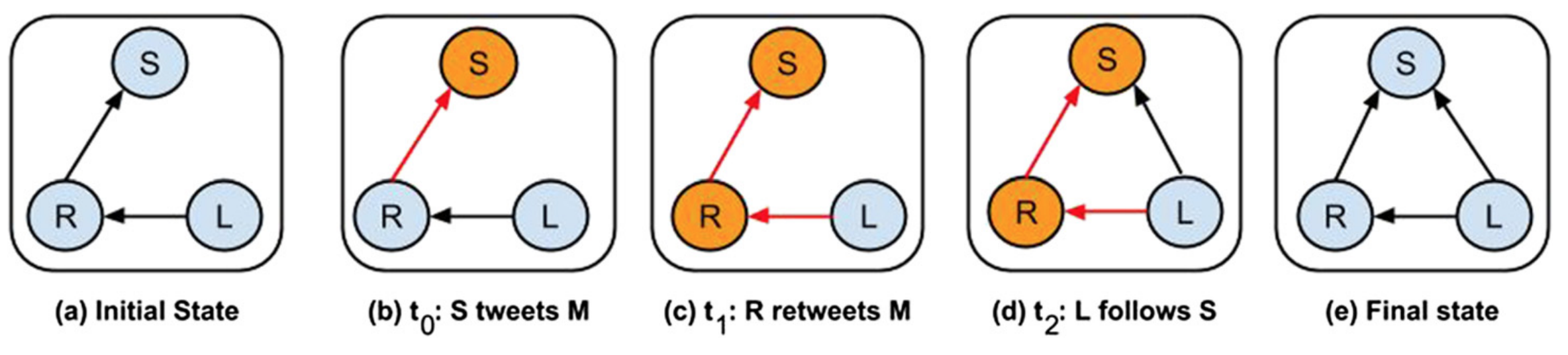

Image Source: Bovet, A., Makse, H.A. Influence of fake news in Twitter during the 2016 US presidential election. Nat Commun 10, 7 (2019)

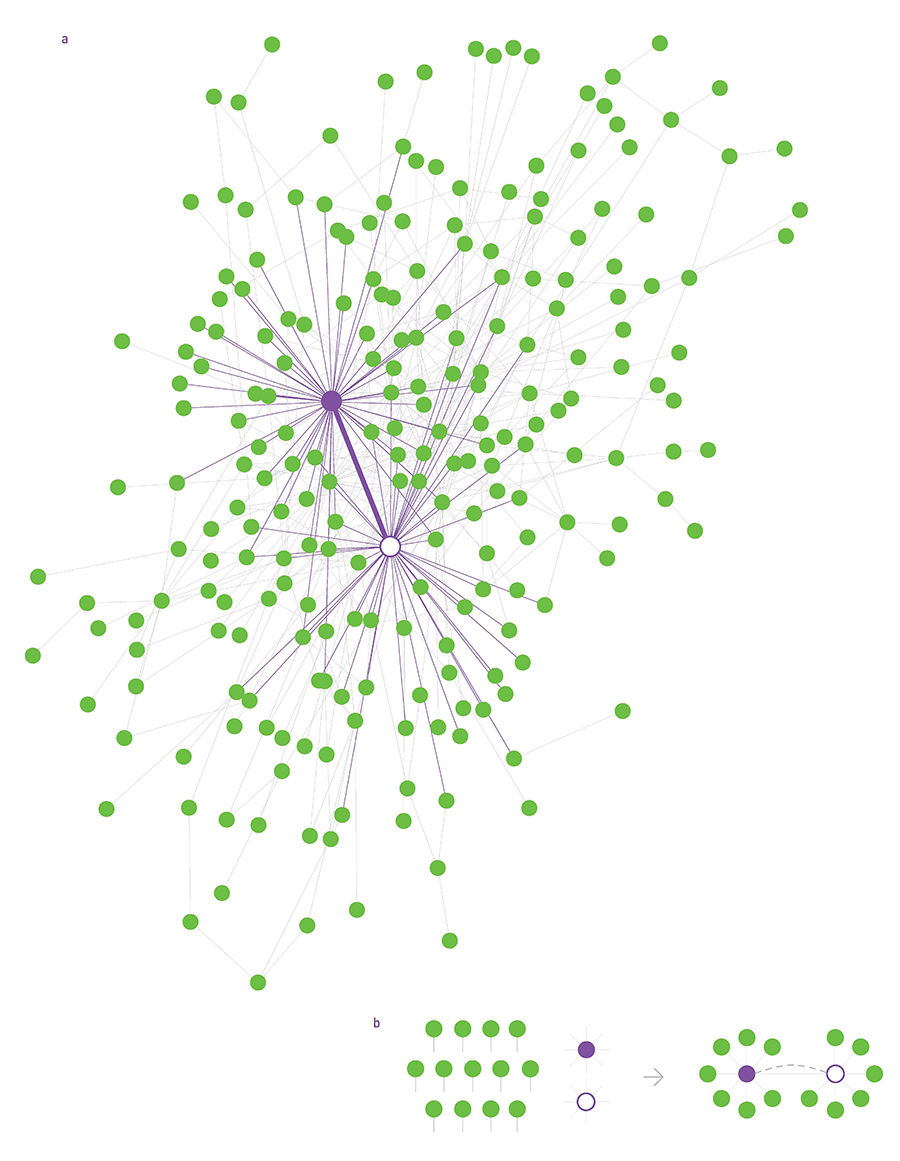

For example, the image above comes from a recent study focusing on the effect of misinformation (known as “fake news”) on Twitter in the 2016 US Presidential Elections.

The study used network science to identify the most influential spreaders of fake news as well as traditional news.

An important but still open research question is whether it is possible to develop algorithms that can identify influential spreaders of false information in real-time and block them.

Source Links

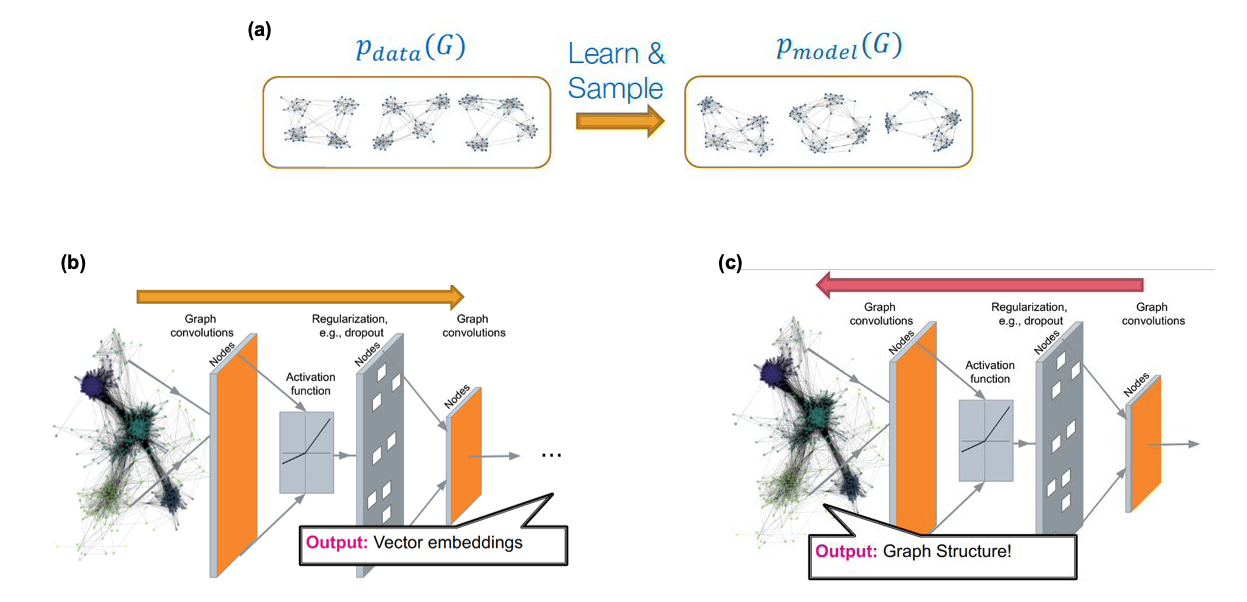

Machine Learning and Network Science

We will also study problems at the intersection of Network Science and Machine Learning.

As you probably know, Machine Learning generates statistical models from data and then uses these models in classification, regression, clustering, and other similar tasks.

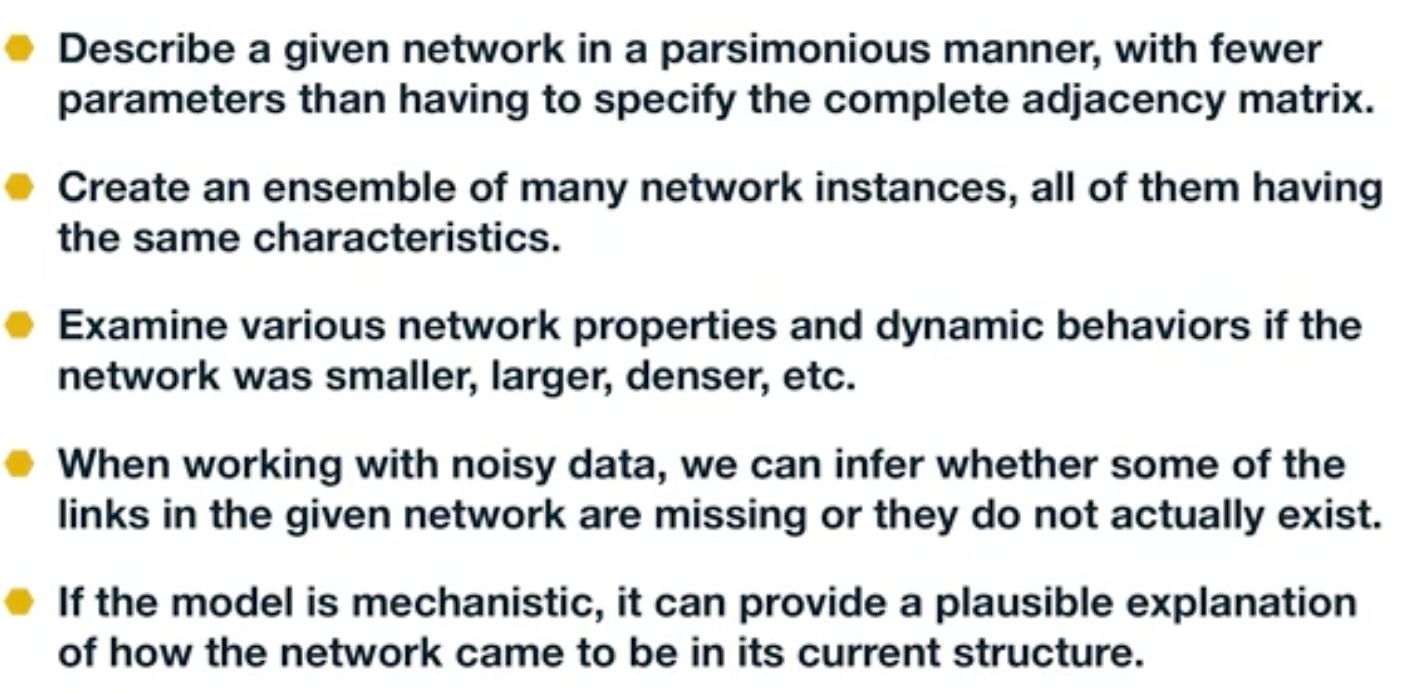

Network Science has contributed to this field by focusing on graph models – statistical models of static or dynamic networks that can capture the important properties of real-world networks in a parsimonious manner.

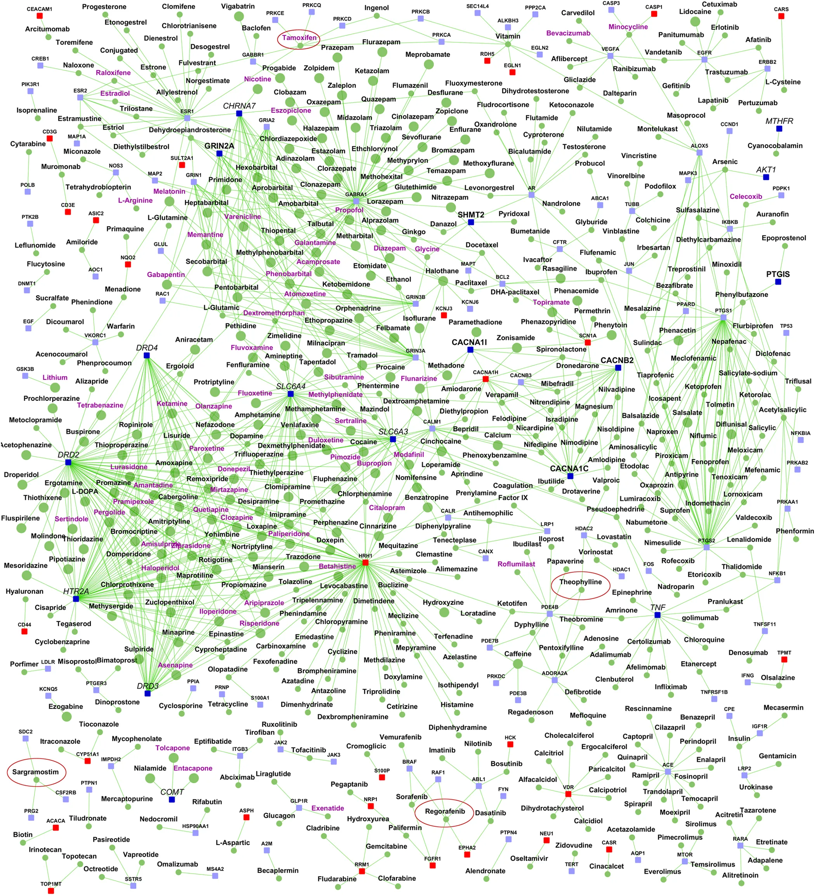

Image Source: Ganapathiraju, M., Thahir, M., Handen, A. et al. Schizophrenia interactome with 504 novel protein–protein interactions. npj Schizophr 2, 16012 (2016)

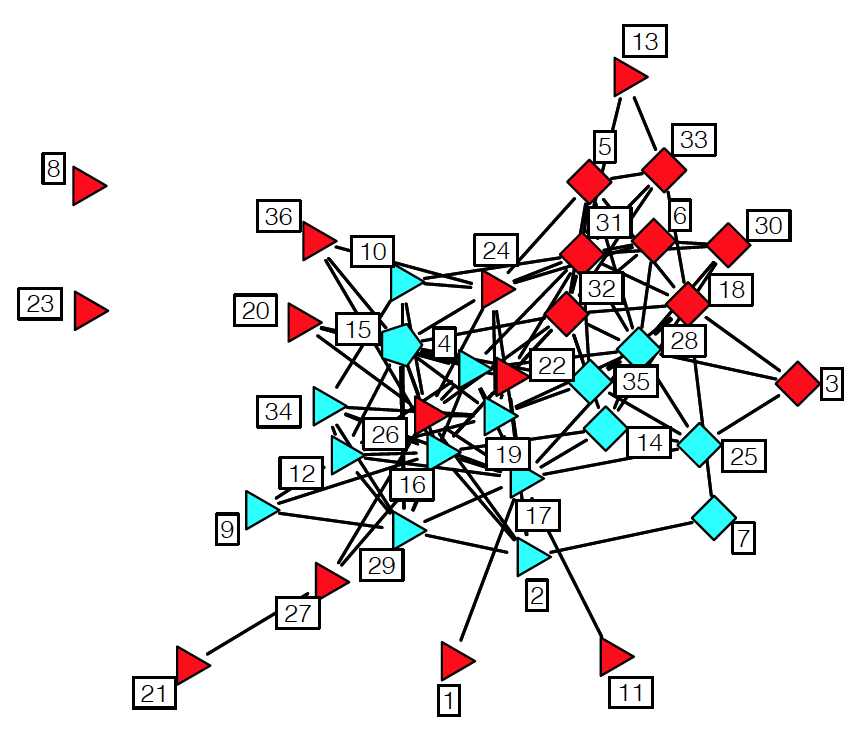

The image above comes from a recent research paper about schizophrenia.

It shows the interactions between genes associated with schizophrenia, and drugs that target either specific genes/proteins or protein-protein interactions. Machine Learning models have been used to predict previously unknown interactions between drugs and genes.

The drugs are shown as round nodes in green, and genes as square nodes in dark blue, light blue or red. Nervous system drugs are shown as larger size green colored nodes compared with other drugs. Drugs that are in clinical trials for schizophrenia are labeled purple. You can explore the visualization interactively with the following link: Schizophrenia interactome with 504 novel protein–protein interactions.

The History of Network Science

Let’s talk now, rather briefly, about the history of network science.

First, it is important to emphasize that the term “network” has been used for decades in different disciplines.

For example, computer scientists would use the term to refer exclusively to computer networks, sociologists have been studying social networks for more than 50 years, and of course, mathematicians have been studying graphs for more than two centuries.

So what is new in network science?

Network Science certainly leveraged concepts and methods that were developed earlier in Graph Theory, Statistical Mechanics and Nonlinear Dynamics in Physics, Computer Science algorithms, Statistics and Machine Learning. The list below shows the key topics that each of these disciplines contributed to Network Science.

-

Graph theory:

- Study of abstract (mostly static) graphs

-

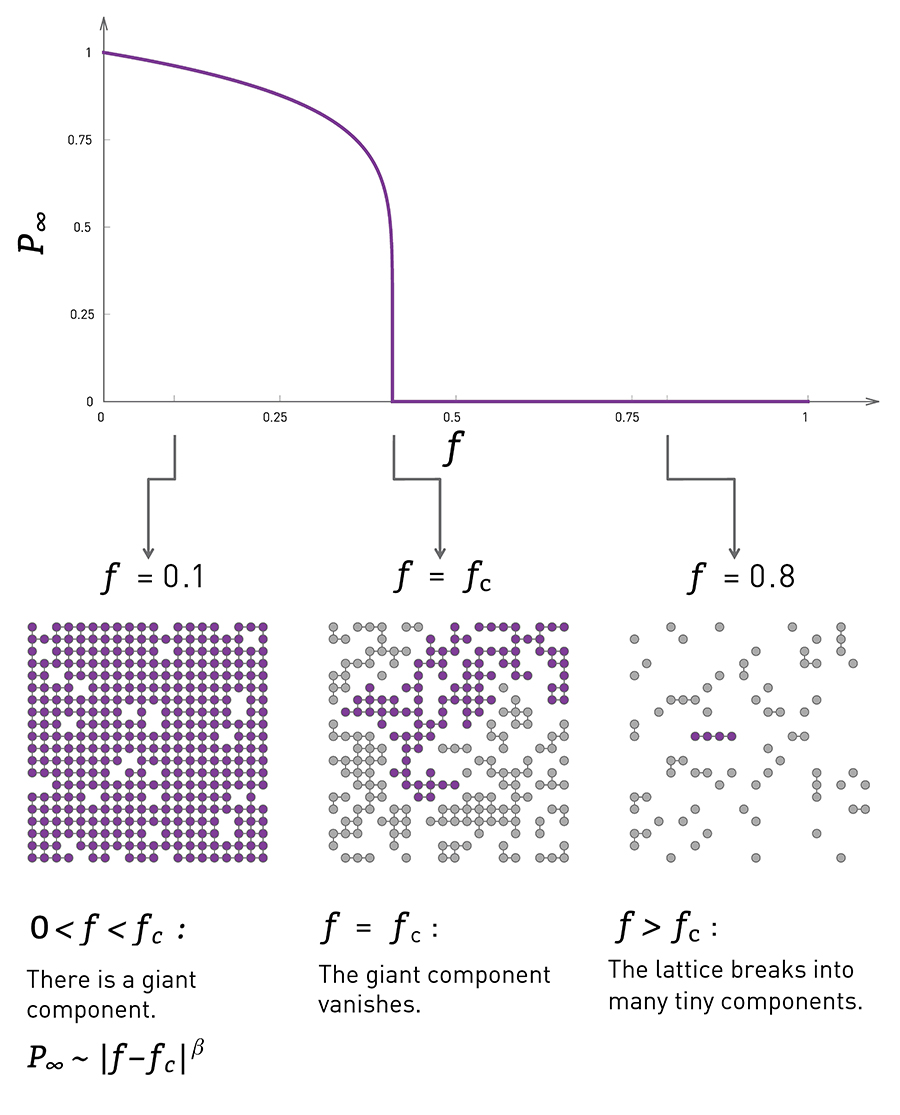

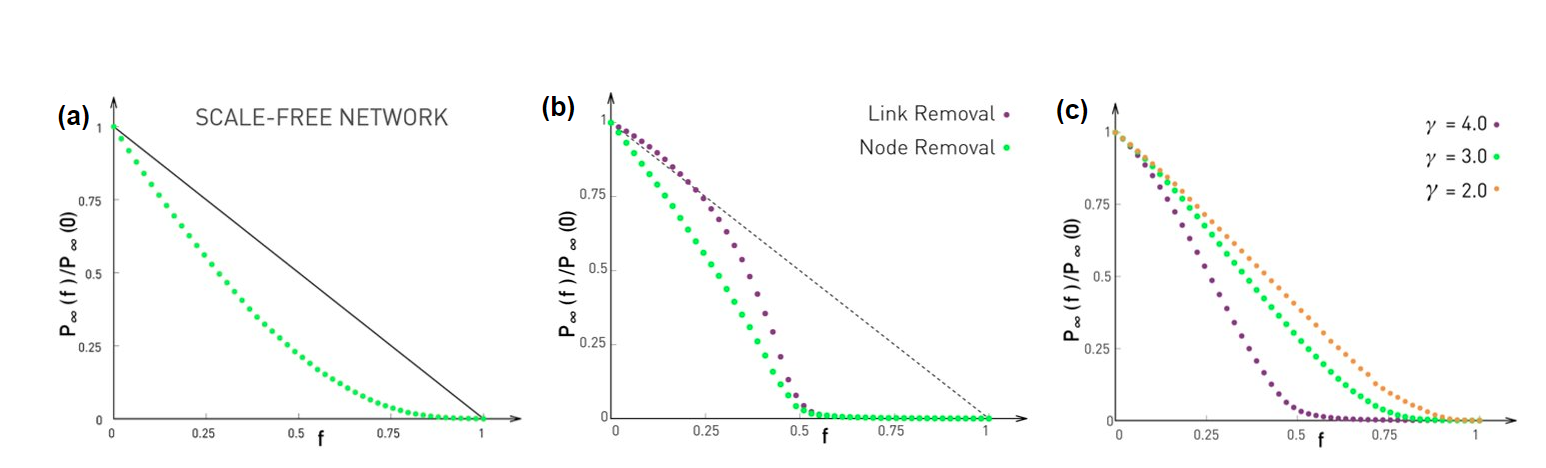

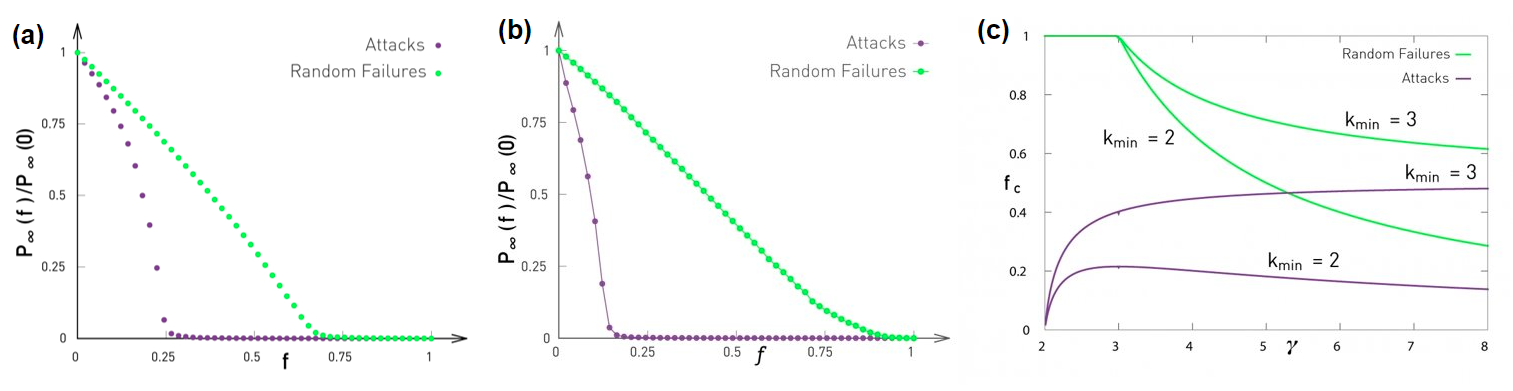

Statistical mechanics:

- Percolation, phase transitions

-

Nonlinear dynamics:

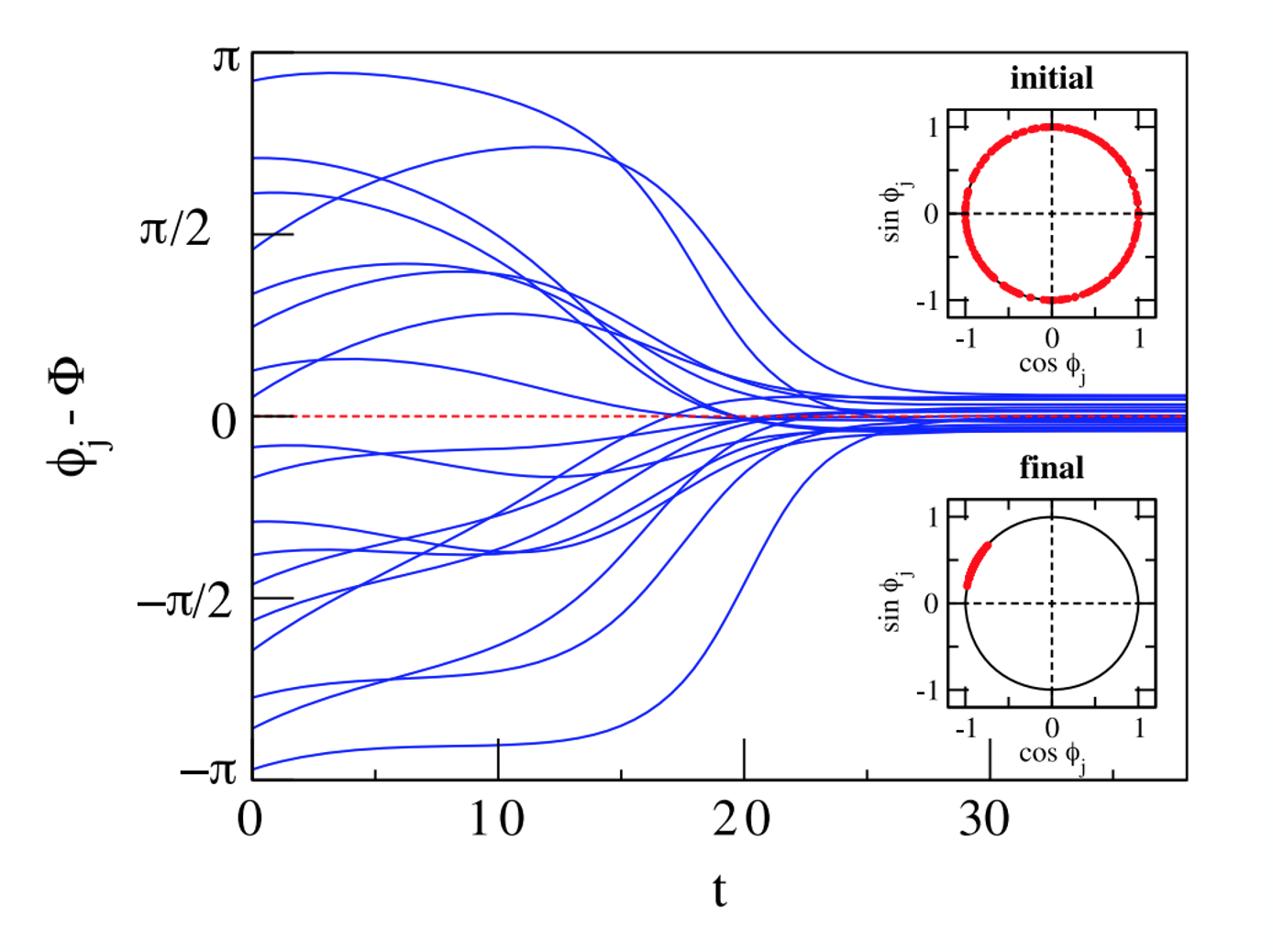

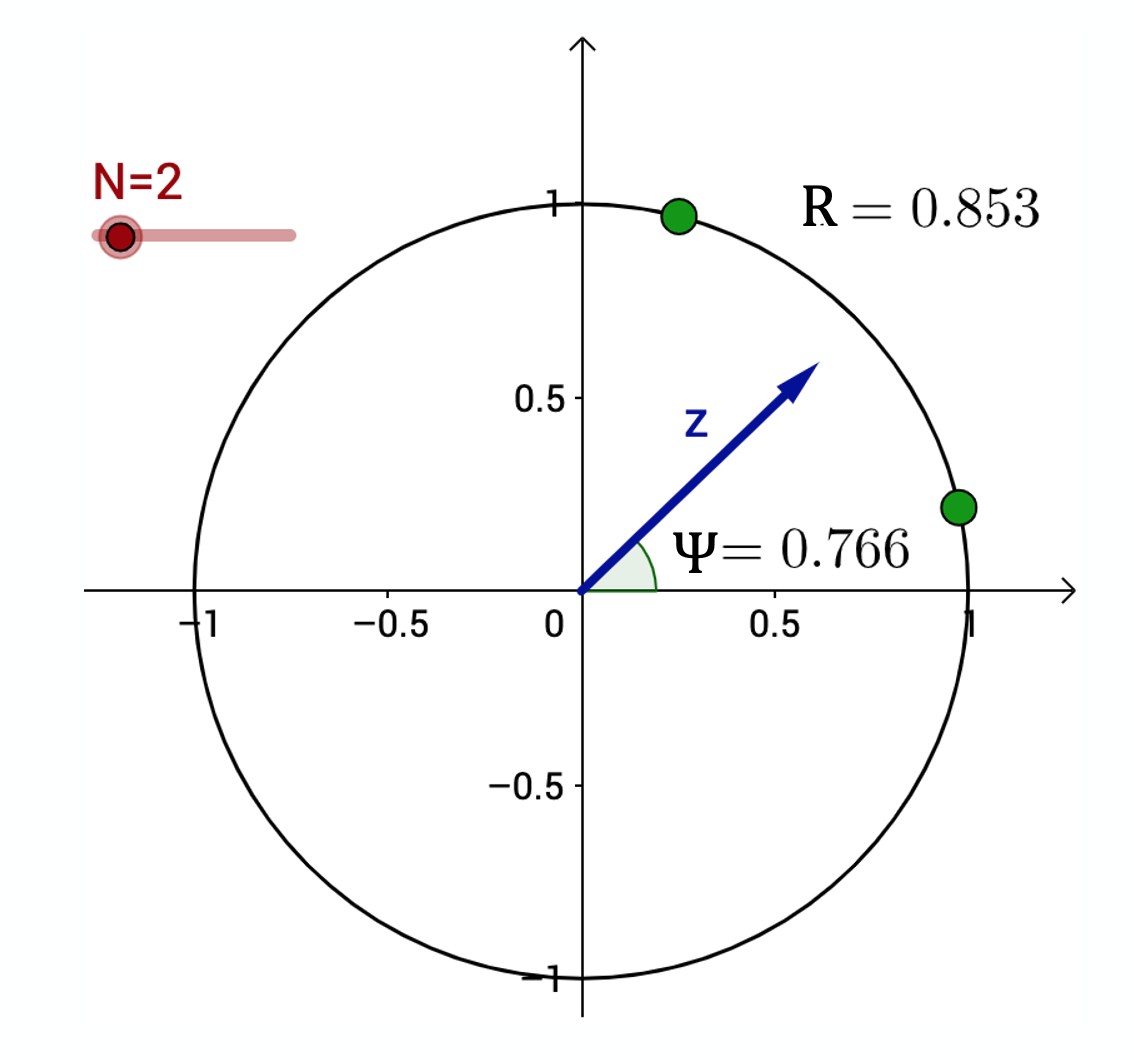

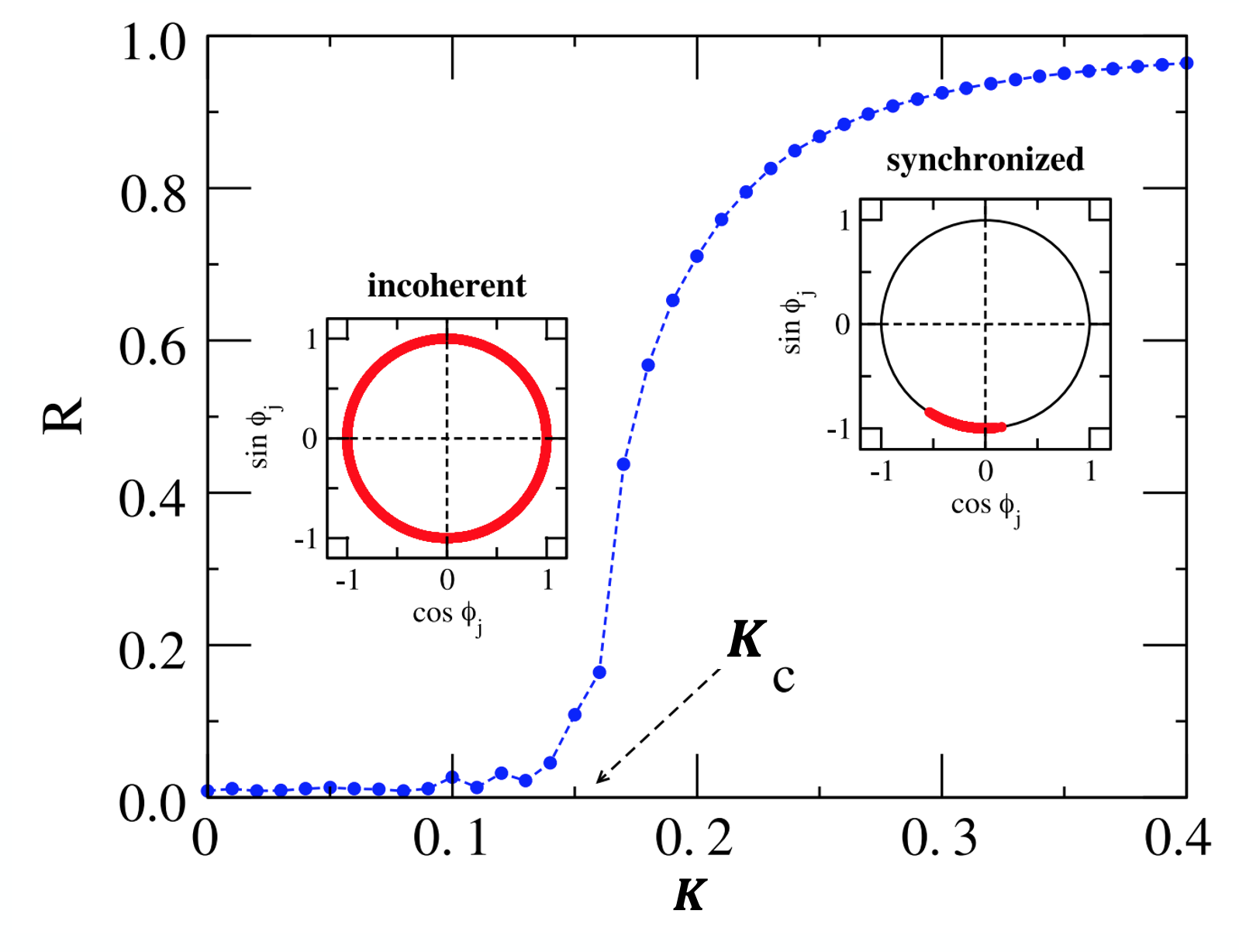

- Contagion models, threshold phenomena, synchronization

-

Graph algorithms:

- Network paths, clustering, centrality metrics

-

Statistics:

- Network sampling, network inference

-

Machine learning:

- Graph embeddings, node/edge classification, generative models

-

Theory of complex systems:

- Scaling, emergence

There are two main differences however between these disciplines and Network Science.

First, Network Science focuses on real-world networks and their properties – rather than on regular or random graphs, which are easier to analyze mathematically but not realistic. Most of the earlier work in graph theory or physics was assuming that networks have that kind of simple structure.

Second, Network Science provides a general framework to study complex networks independent of the specific application domain. This unified approach revealed that there are major similarities and universal properties in networks, independent of whether they represent social, biological or technological systems.

The Birth of Network Science

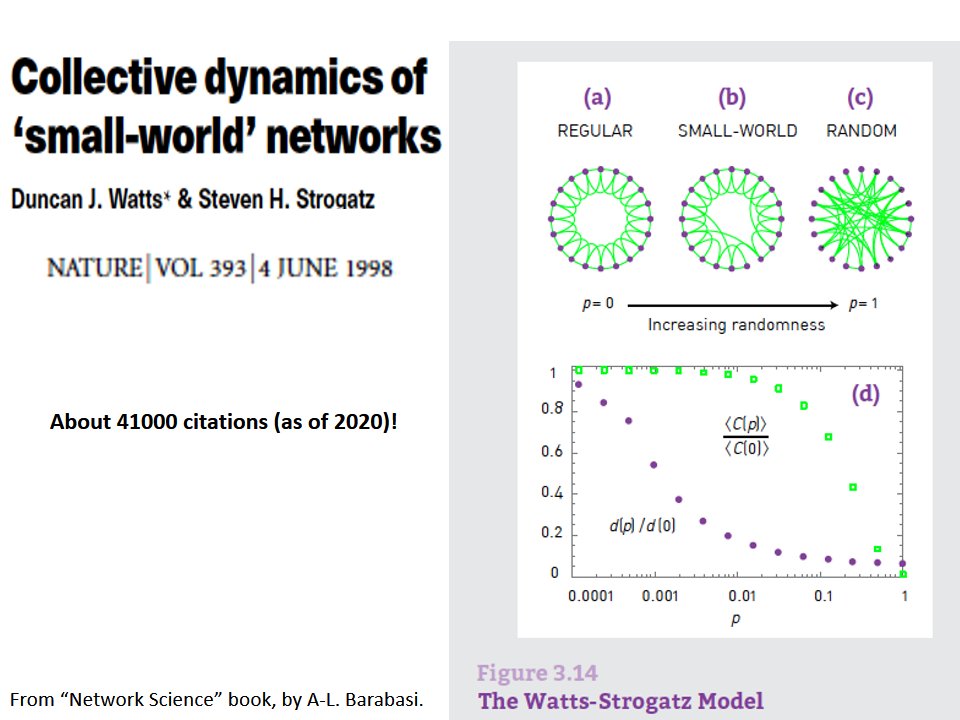

The birth of Network Science took place back in 1998 or 1999, with the publication of two very influential research papers.

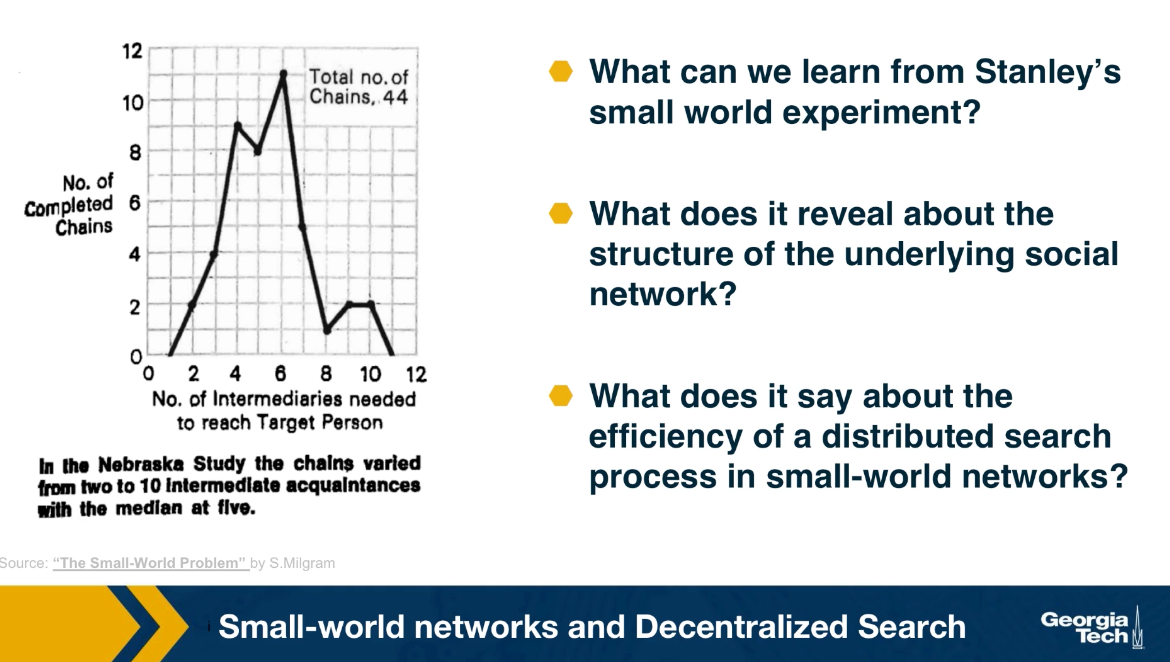

The first was the discovery by Watts and Strogatz of the Small-World property in real-world networks. Roughly speaking, this means that most node-pairs are close to each other, only within a small number of hops. You may have heard the term “six degrees of separation”, in the context of social networks, meaning that most people are connected with each other through a path of 6 (or so) acquaintances.

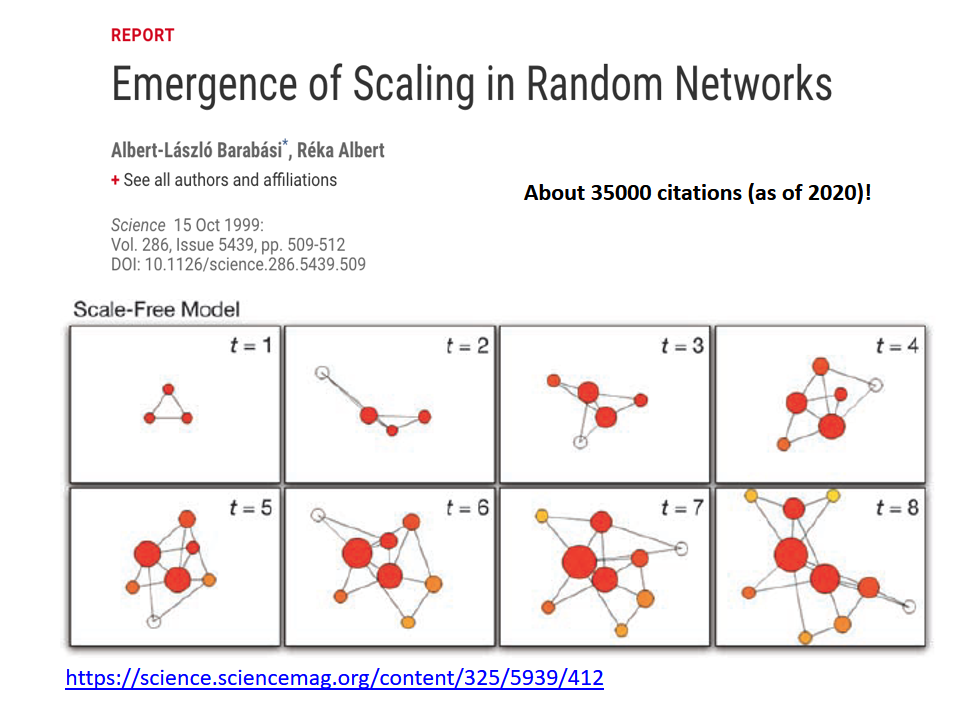

A second influential paper was published in 1999 by two physicists, Barabási and Albert.

That paper showed that real-world networks are “Scale Free”. This means that the number of connections that a node has is highly skewed: most nodes have a very small number of connections, but there are a few nodes, referred to as hubs, that have a much larger number of connections. Mathematically speaking, the number of connections per node follows a power-law distribution, something that we will discuss extensively later in this course.

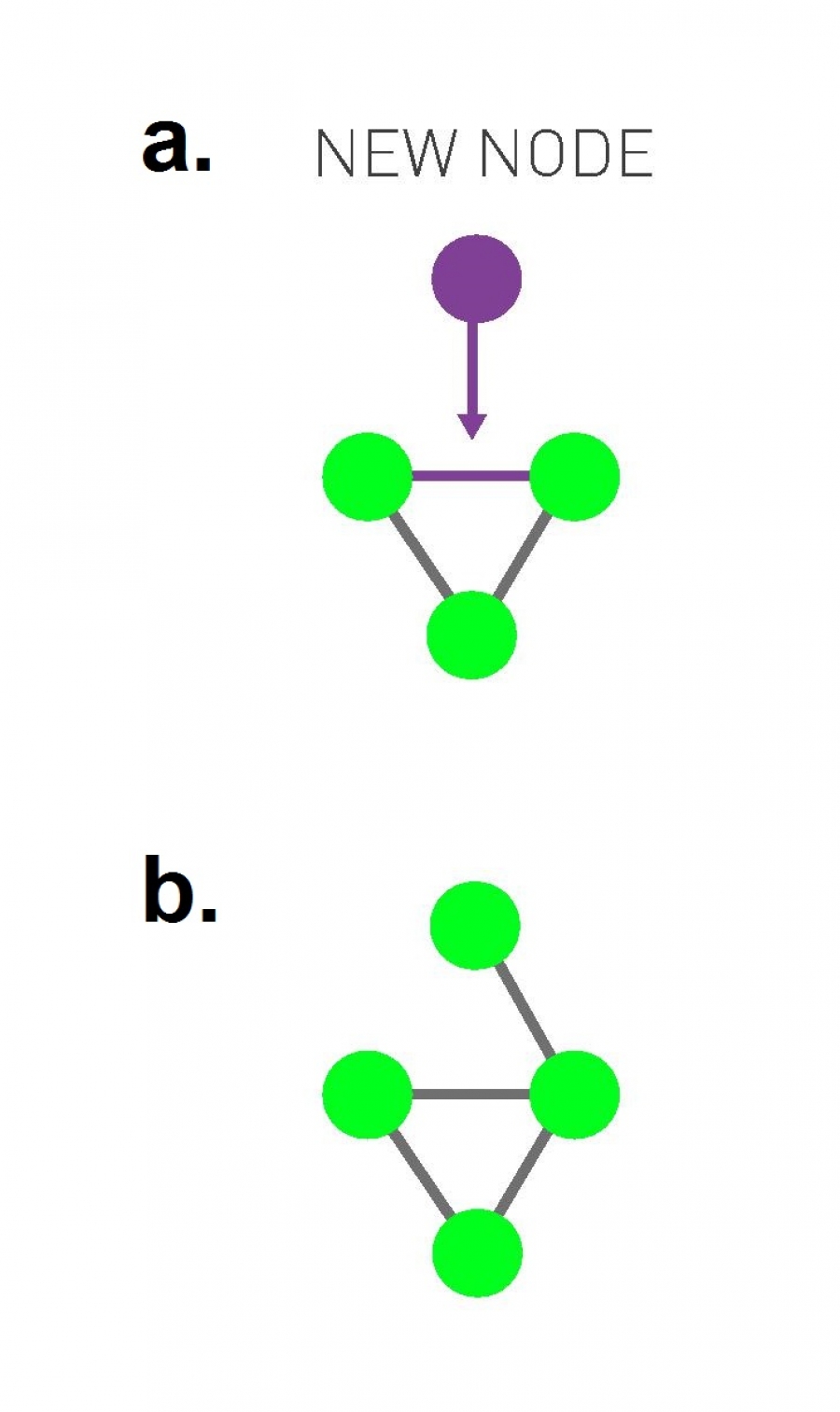

Barabási and Albert explained this general phenomenon based on a ”rich get richer” property. As a network gradually grows, new nodes prefer to create links to more well-connected existing nodes, and so the latter become increasingly more powerful in terms of connectivity. This is referred to as “preferential attachment” – and we will study it in detail later.
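The “rich get richer” growth rule can be sketched in a few lines. This is a simplified illustration rather than the exact model from the paper: the initial clique, the number of links per new node (m = 2), the network size, and the random seed are all assumptions.

```python
import random
from collections import Counter

def preferential_attachment(n, m, seed=0):
    """Grow a network to n nodes; each new node adds m links to existing
    nodes, chosen with probability proportional to their current degree."""
    rng = random.Random(seed)
    # Start from a small clique of m + 1 nodes so every node has a degree.
    edges = [(i, j) for i in range(m + 1) for j in range(i + 1, m + 1)]
    # Each node appears in this list once per link it has, so a uniform draw
    # from the list picks a node with probability proportional to its degree.
    endpoints = [v for e in edges for v in e]
    for new in range(m + 1, n):
        targets = set()
        while len(targets) < m:
            targets.add(rng.choice(endpoints))
        for t in targets:
            edges.append((new, t))
            endpoints.extend((new, t))
    return edges

edges = preferential_attachment(n=2000, m=2)
degree = Counter(v for e in edges for v in e)
print("median degree:", sorted(degree.values())[len(degree) // 2])
print("max degree:   ", max(degree.values()))
```

Comparing the median degree with the maximum degree gives a quick impression of how skewed the resulting degree distribution is.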

Source Links

TED Lecture: Albert-László Barabási

L2 - Relevant Concepts from Graph Theory

Overview

Required Reading

- Chapter-2 from A-L. Barabási, Network Science 2015.

- Chapter-2 from D. Easley and J. Kleinberg, Networks, Crowds and Markets Cambridge Univ Press, 2010.

Recommended Reading

An Introduction

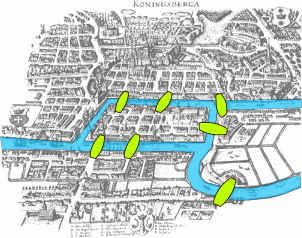

This visualization shows the seven bridges of Königsberg. The birth of graph theory took place in 1736 when Leonhard Euler showed that it is not possible to walk through all seven bridges by crossing each of them once and only once.

Food for Thought

Try to model this problem with a graph in which each bridge is represented by an edge, and the landmass at each end of a bridge is represented by a node. The graph should have four nodes (upper, lower, the island in the middle, and the landmass at the right) and seven edges. What is the property of this graph that makes it impossible to walk through each edge once and only once?

You can start from any node you want, and end at any node you want. It is OK to visit the same node multiple times, but you should cross each edge only once (this is referred to as an Eulerian path in graph theory).

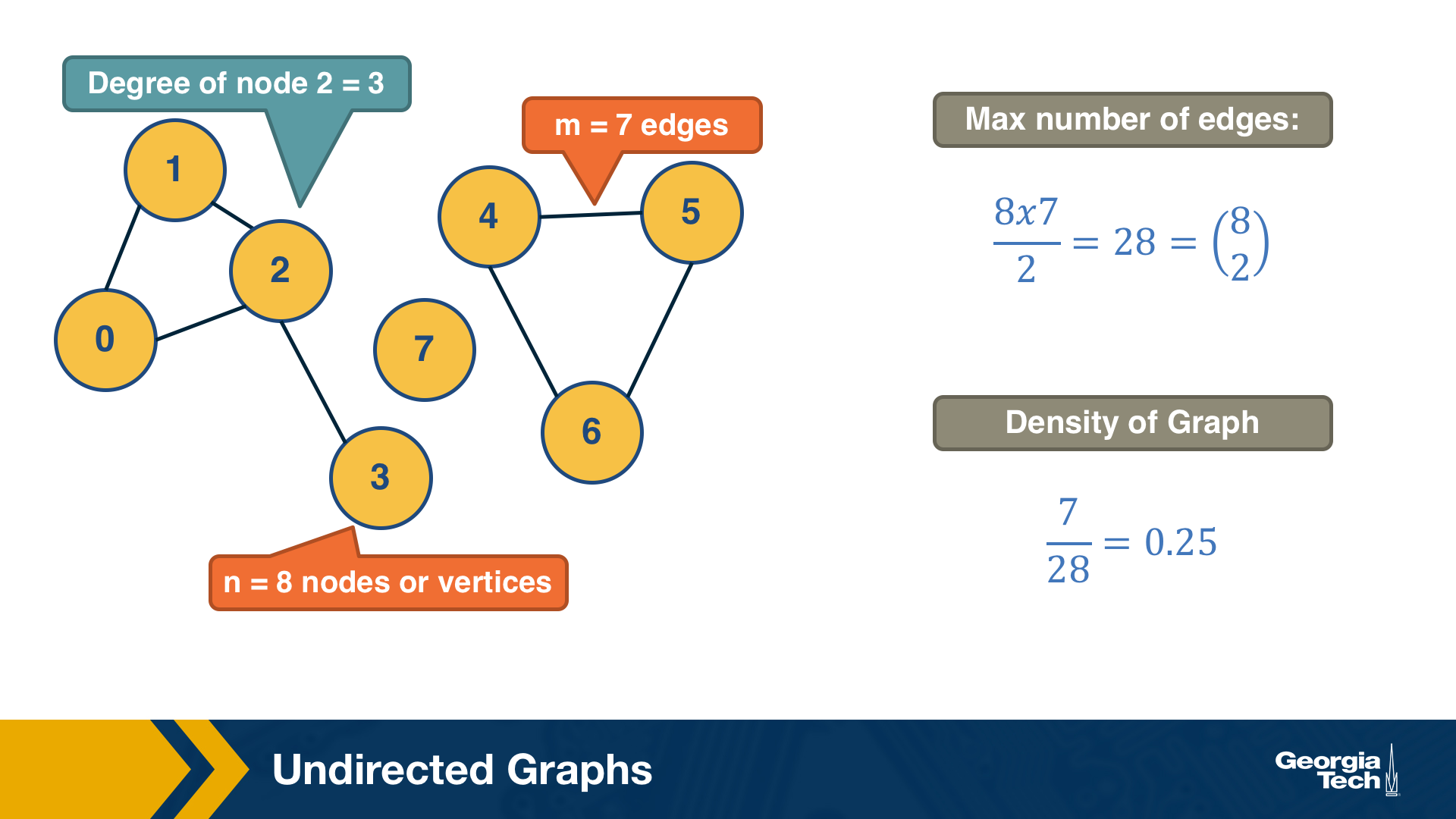

Undirected Graphs

Let’s start by defining more precisely what we mean by graph or network – we use these two terms interchangeably. We will also define some common types of graphs.

A graph, or network, represents a collection of dyadic relations between a set of nodes. This set is often denoted by V because nodes are also called vertices. The relations are referred to as edges or links, usually denoted by the set E. So, an edge (u,v) is a member of the set E, and it represents a relation between vertices u and v in the set V.

The number of vertices is often denoted by n and the number of edges by m. We will often use the notation G=(V,E) to refer to a graph with a set of vertices V and a set of edges E. This definition refers to the simplest type of graph, namely undirected and unweighted.

Typically we do not allow edges between a node and itself. We also do not allow multiple edges between the same pair of nodes. So the maximum number of edges in an undirected graph is $n(n-1)/2$, or “n-choose-2”. The density of a graph is defined as the ratio of the number of edges m to the maximum number of edges (n-choose-2). The number of connections of a node v is referred to as the degree of v. The example above illustrates these definitions.
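These definitions translate directly into code. The sketch below uses a hypothetical graph with n = 5 nodes and m = 6 edges; the function name and the edge list are assumptions made for illustration.

```python
def graph_stats(n, edges):
    """Return (max_edges, density, degree list) for a simple undirected graph."""
    max_edges = n * (n - 1) // 2          # n-choose-2
    density = len(edges) / max_edges      # m divided by n-choose-2
    degree = [0] * n
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return max_edges, density, degree

# Hypothetical graph with n = 5 nodes and m = 6 edges.
edges = [(0, 1), (0, 2), (1, 2), (1, 3), (2, 4), (3, 4)]
max_edges, density, degree = graph_stats(5, edges)
print(max_edges, density, degree)   # 10 0.6 [2, 3, 3, 2, 2]
```

Note that the degrees sum to 2m = 12, because every edge contributes to the degree of both of its endpoints.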

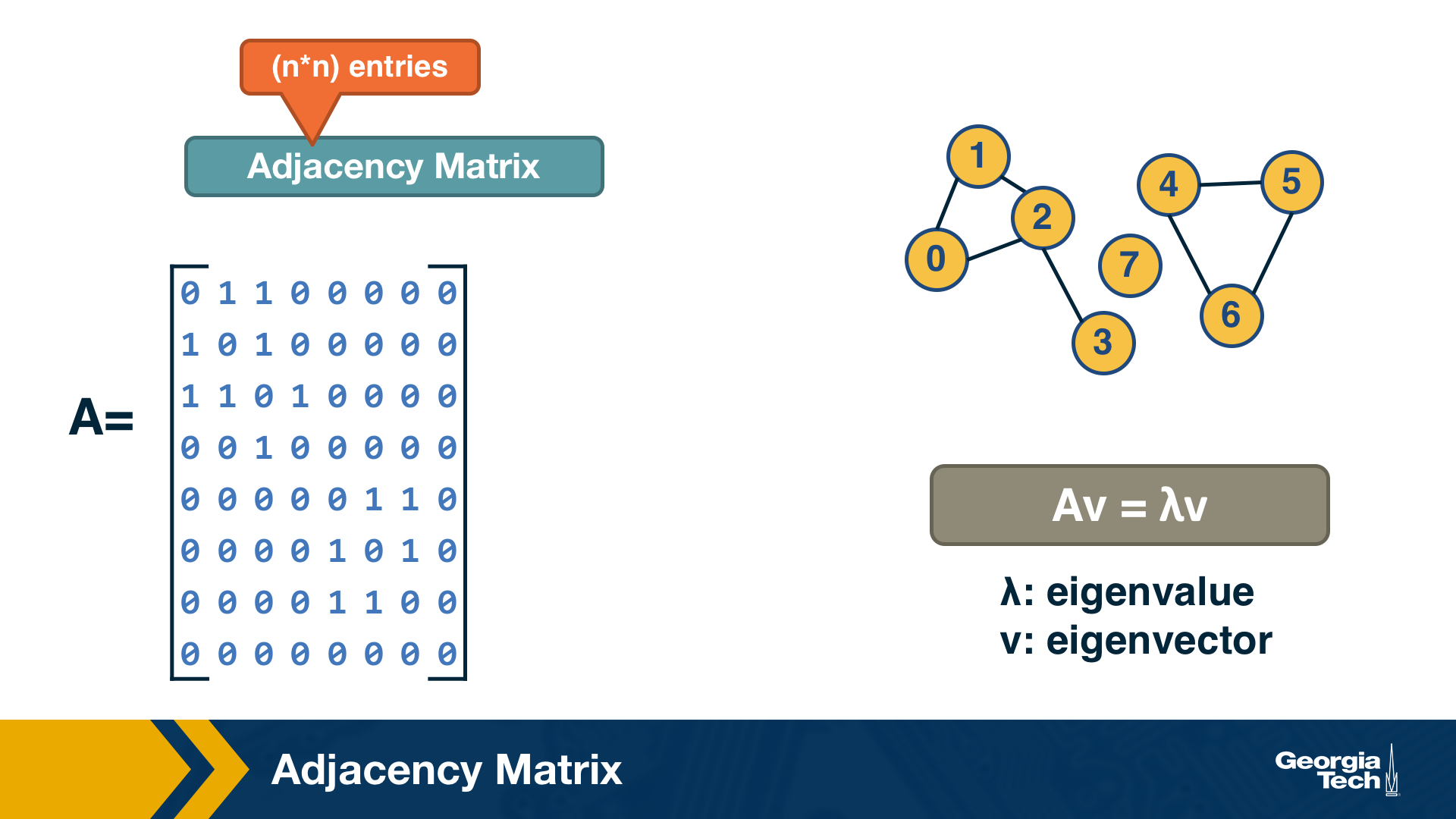

Adjacency Matrix

A graph is often represented with an Adjacency Matrix, as shown in this visualization. The matrix representation requires a single memory access to check if an edge exists, but it requires $n^2$ space. The adjacency matrix representation allows us to use tools from linear algebra to study graph properties.

For example, an undirected graph is represented by a symmetric matrix A, and so the eigenvalues of A are a set of real numbers (referred to as the “spectrum” of the graph). The equation at the right of the visualization reminds you of the definition of eigenvalues and eigenvectors.
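As a numerical illustration (not a proof), the spectrum of a small symmetric adjacency matrix can be computed with NumPy. The 4-node graph below is a hypothetical example; `np.linalg.eigh` is NumPy's eigen-solver for symmetric matrices and returns the eigenvalues in ascending order.

```python
import numpy as np

# Hypothetical undirected graph: path 0 - 1 - 2 - 3 plus the extra edge 1 - 3.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 1],
              [0, 1, 0, 1],
              [0, 1, 1, 0]])

assert (A == A.T).all()                          # undirected -> symmetric matrix
eigenvalues, eigenvectors = np.linalg.eigh(A)    # real eigenvalues, ascending
lam, v = eigenvalues[-1], eigenvectors[:, -1]    # largest eigenvalue and its vector
max_degree = A.sum(axis=1).max()

print("spectrum:", np.round(eigenvalues, 3))
print("A v equals lambda v:", np.allclose(A @ v, lam * v))
print("largest eigenvalue <= max degree:", lam <= max_degree)
```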

Food for Thought

How would you show mathematically that the largest eigenvalue of the (symmetric) adjacency matrix A is less than or equal to the maximum node degree in the network? Start from the definition of eigenvalues given above.

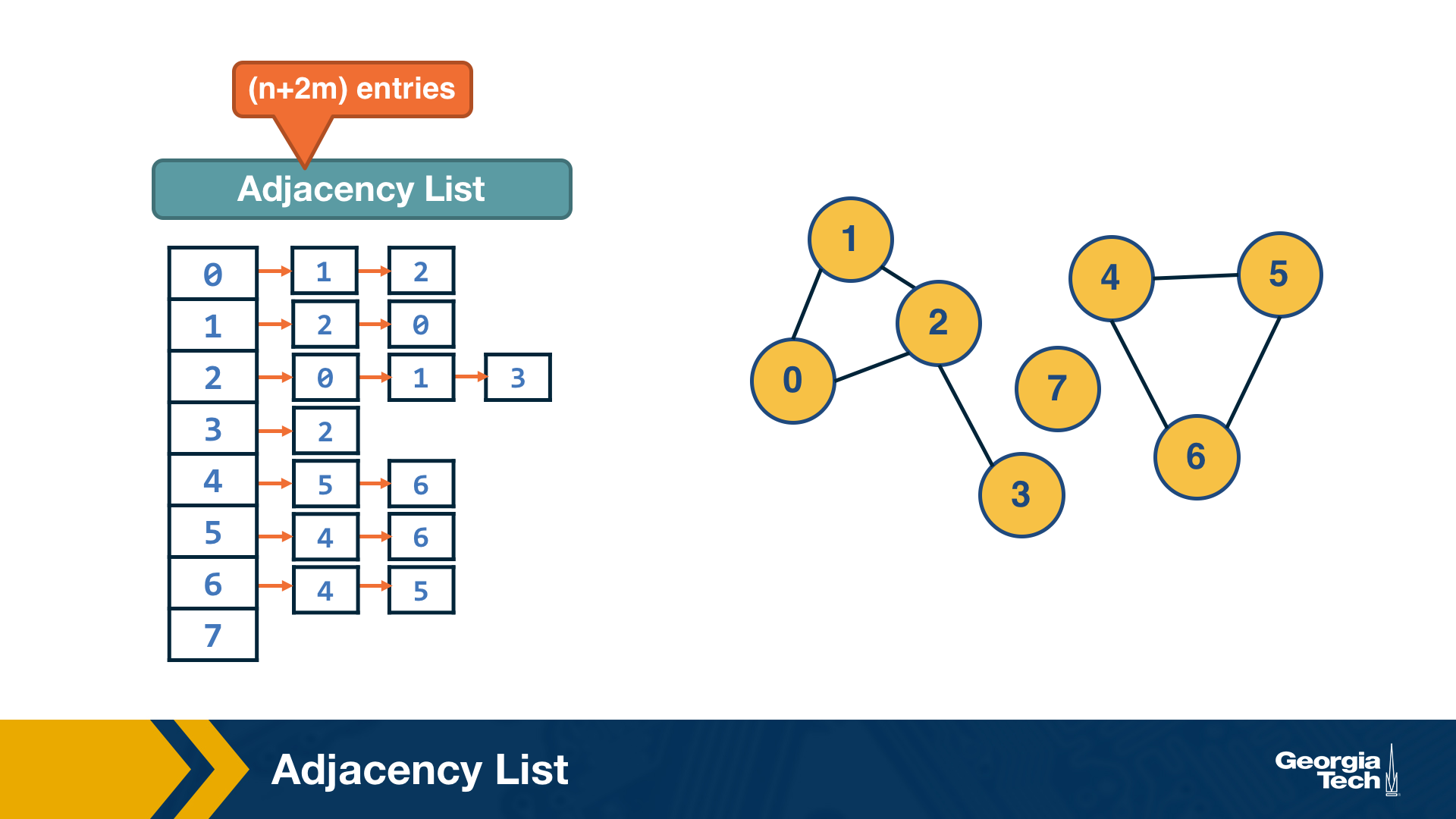

Adjacency List

The adjacency list representation requires n+2*m space because every edge is included twice.

The difference between adjacency matrices and lists can be very large when the graph is sparse. A graph is sparse if the number of edges m is much closer to the number of nodes n than to the maximum number of edges (n-choose-2). In other words, the adjacency matrix of a sparse graph is mostly zeros.

A graph is referred to as dense, on the other hand, if the number of edges is much closer to n-choose-2 than to n.

It should be noted that most real-world networks are sparse. The reason may be that in most technological, biological and social networks, there is a cost associated with each edge – dense networks would be more costly to construct and maintain.
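The space difference for a sparse graph can be made concrete with a short sketch. The path graph on 1000 nodes and the helper name are assumptions chosen for illustration.

```python
def to_adjacency_list(n, edges):
    """Adjacency list of an undirected graph: each edge is stored twice."""
    adj = [[] for _ in range(n)]
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    return adj

# Hypothetical sparse graph: a path on n = 1000 nodes (m = n - 1 edges).
n = 1000
edges = [(i, i + 1) for i in range(n - 1)]
adj = to_adjacency_list(n, edges)

list_entries = n + sum(len(neighbors) for neighbors in adj)   # n + 2m
matrix_entries = n * n                                        # n^2
print(list_entries, matrix_entries)   # 2998 1000000
```

The price of the compact representation is that checking whether a specific edge exists requires scanning a neighbor list instead of a single memory access.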

Food for Thought

Suppose that a network grows by one node in each time unit. The new node always connects to k existing nodes, where k is a constant. As this network grows, will it gradually become sparse or dense (when n becomes much larger than k)?

Walks, Paths and Cycles

A walk from a node S to a node T in a graph is a sequence of successive edges that starts at S and ends at T. A walk may visit the same node more than once.

For example, a walk that visits node C more than once (edges in red):

A path is a walk in which the intermediate nodes are distinct (edges in green).

A cycle, on the other hand, is a path that starts and ends at the same node (edges in orange).

How can we efficiently count the number of walks of length k between nodes s and t?

The number of walks of length k between nodes s and t is given by the element (s,t) of the matrix $A^k$ (the k’th power of the adjacency matrix).

Let us use induction to show this:

For k=1, the number of walks is either 1 or 0, depending on whether two nodes are directly connected or not, respectively.

For k>1, the number of walks of length k between s and t is the sum, across all nodes v that connect directly with t, of the number of walks of length k-1 between s and v. The number of walks of length k-1 between s and v is given by the (s,v) element of the matrix $A^{k-1}$ (based on the inductive hypothesis). So, the number of walks of length k from s to t is given by:

\[\sum_{v \in V} A^{k-1}(s,v)A(v,t) = A^k(s,t)\]

- Walks of length 3 from A to C:
  - ABDC, ABAC, ACAC, ACDC

- Walks of length 3 from A to D:
  - None

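```python
import numpy as np

# Adjacency matrix of the example graph (rows and columns ordered A, B, C, D)
X = np.array([[0,1,1,0],[1,0,0,1],[1,0,0,1],[0,1,1,0]])
X2 = np.matmul(X,X)    # X2[s, t] = number of walks of length 2 from s to t
X3 = np.matmul(X2,X)   # X3[s, t] = number of walks of length 3 from s to t
```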
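The matrix-power result can be cross-checked by brute-force enumeration of walks. The sketch below reuses the adjacency matrix X from the example; the node order A, B, C, D is an assumption that is consistent with the walks listed above, and `enumerate_walks` is a hypothetical helper.

```python
from itertools import product
import numpy as np

# Same adjacency matrix as in the example; rows/columns assumed to be A, B, C, D.
X = np.array([[0, 1, 1, 0], [1, 0, 0, 1], [1, 0, 0, 1], [0, 1, 1, 0]])
names = "ABCD"

def enumerate_walks(adj, s, t, k):
    """List all walks of length k from s to t by trying every node sequence."""
    n = len(adj)
    walks = []
    for middle in product(range(n), repeat=k - 1):
        seq = (s, *middle, t)
        # keep the sequence only if every consecutive pair is an edge
        if all(adj[a][b] for a, b in zip(seq, seq[1:])):
            walks.append("".join(names[i] for i in seq))
    return walks

X3 = np.linalg.matrix_power(X, 3)
walks_A_to_C = enumerate_walks(X, 0, 2, 3)
walks_A_to_D = enumerate_walks(X, 0, 3, 3)
print(walks_A_to_C, X3[0, 2])   # four walks, matching X3[0, 2] = 4
print(walks_A_to_D, X3[0, 3])   # no walks, matching X3[0, 3] = 0
```

The enumeration takes time exponential in k, which is why the matrix power is the practical way to count walks.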

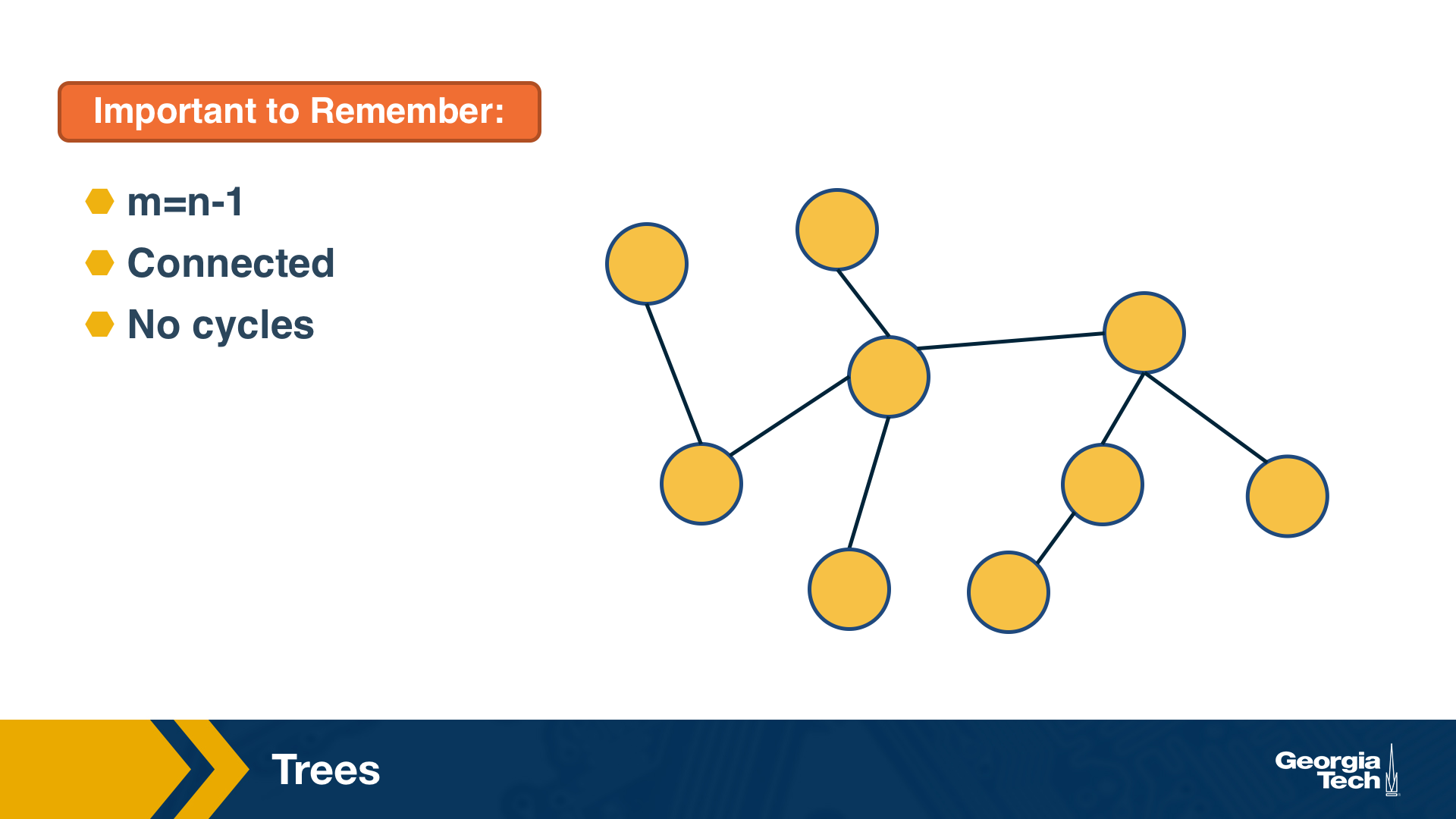

Trees and Other Regular Networks

In graph theory, the focus is often on some special classes of networks, such as trees, lattices, regular networks, planar graphs, etc.

In this course, we will focus instead on complex graphs that do not fit in any of these special classes. However, we will sometimes contrast and compare the properties of complex networks with some regular graphs.

For instance, trees are connected graphs that do not have cycles – and you can easily show that the number of edges in a tree of n nodes is always m=n-1.

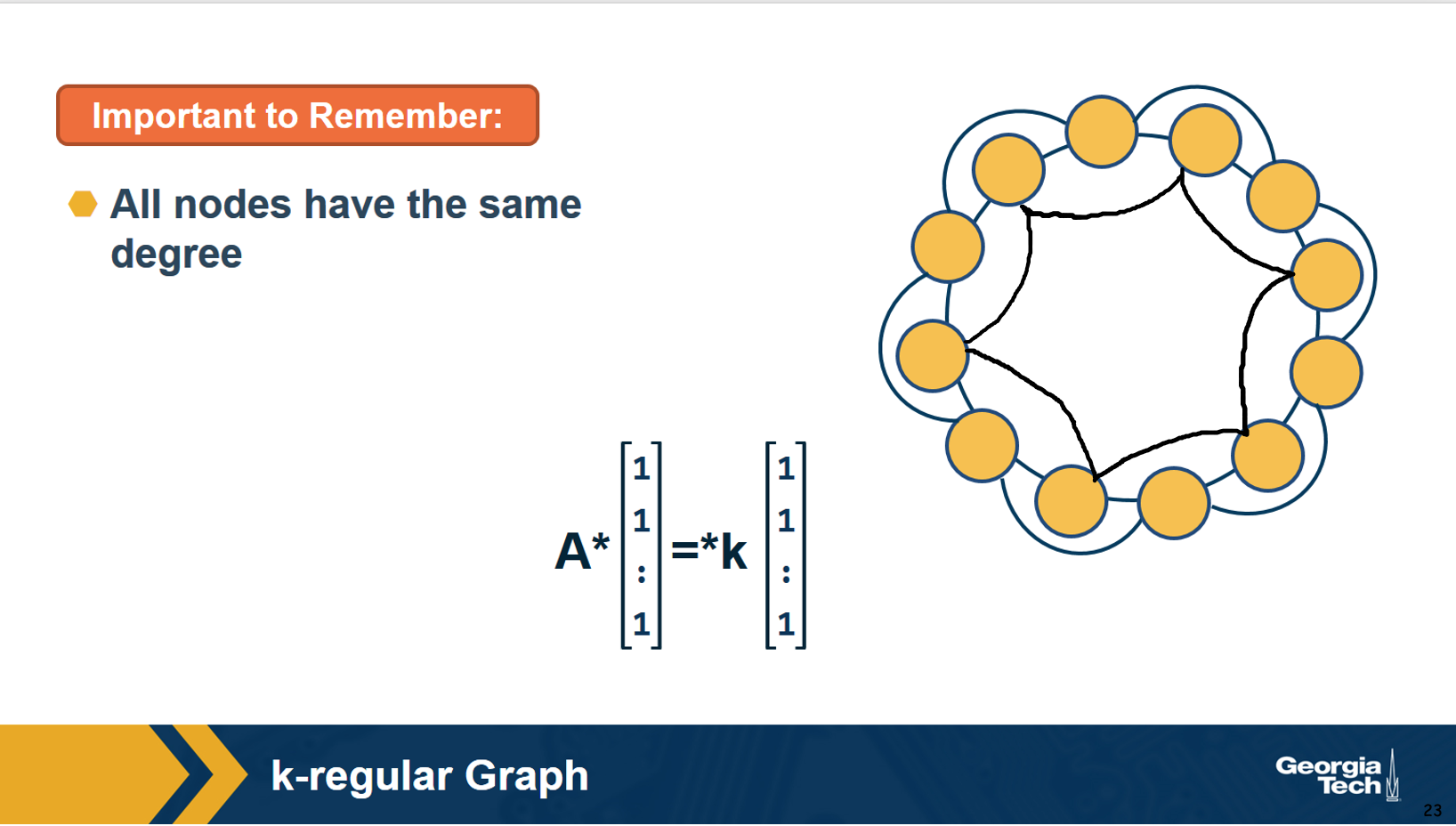

A k-regular graph is a network in which every vertex has the same degree k. The visualization shows an example of a k-regular network for k=4.

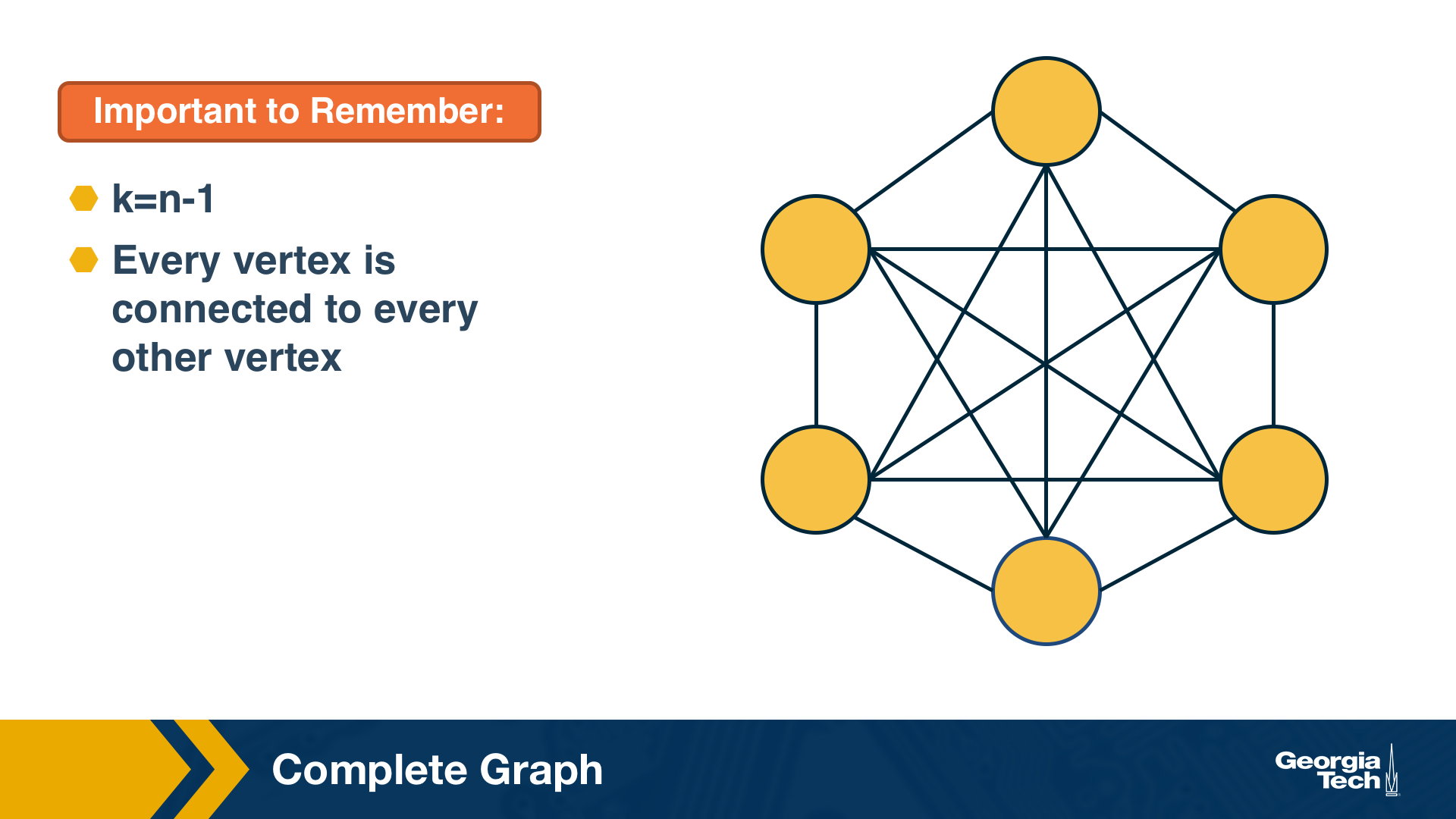

A complete graph (or “clique”) is a special case of a regular network in which every vertex is connected to every other vertex (k=n-1). The example shows a clique with 6 nodes.

Food for Thought

Suppose that a graph is k-regular. How would you show that a vector of n ones (1, 1, … 1) is an eigenvector of the adjacency matrix – and the corresponding eigenvalue is equal to k?

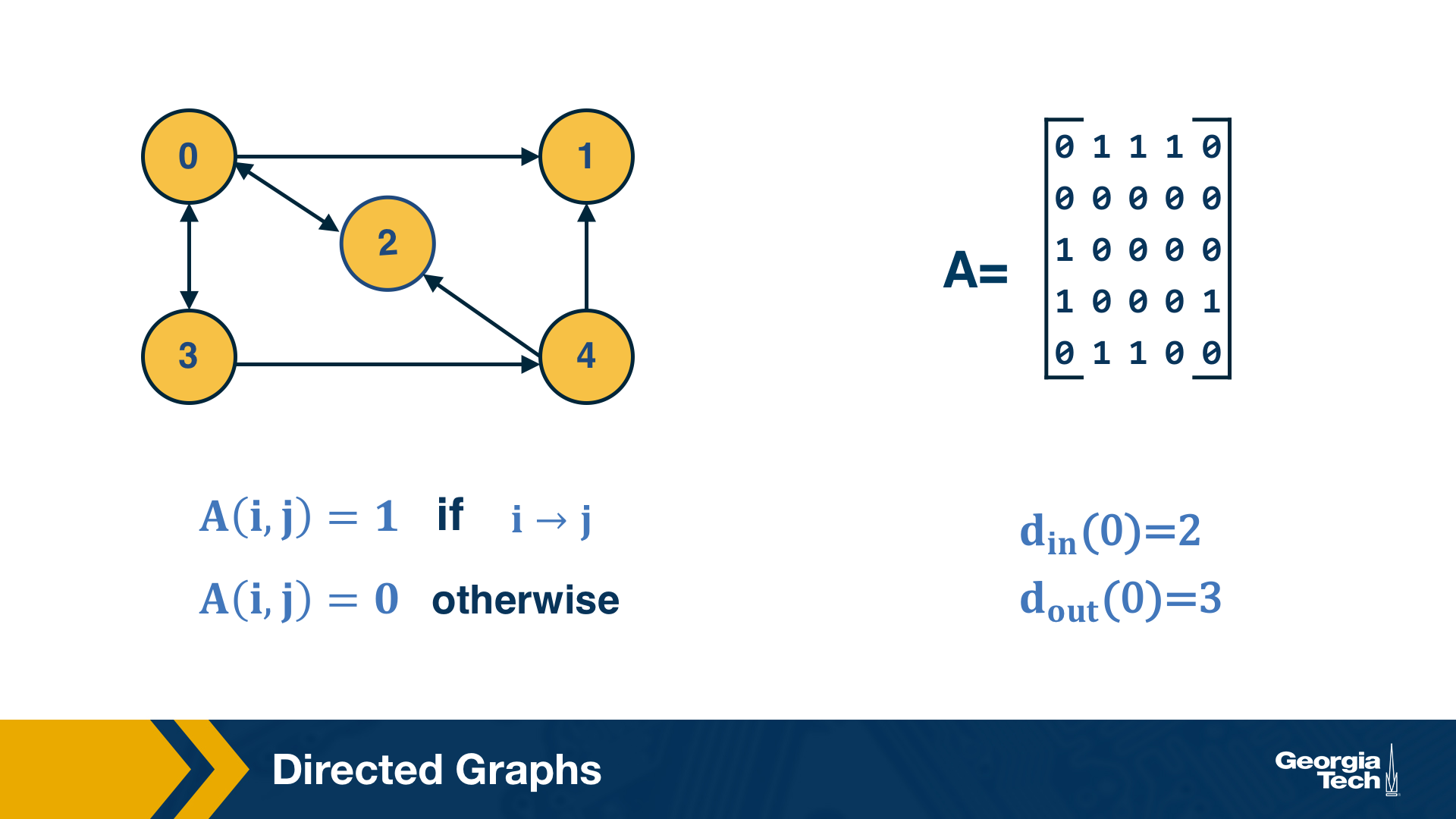

Directed Graphs

Another common class of networks is directed graphs. Here, each edge (u,v) has a starting node u and an ending node v. This means that the corresponding adjacency matrix may no longer be symmetric.

A common convention is that the element (i,j) of the adjacency matrix is equal to 1 if the edge is from node i to node j – please be aware however that this convention is not universal.

We also need to revise our definition of node degree: the number of incoming connections to a node v is referred to as in-degree of v, and the number of outgoing connections as out-degree of v.
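With the convention that element (i,j) is 1 when there is an edge from node i to node j, out-degrees are row sums and in-degrees are column sums. The 4-node graph below is a hypothetical example.

```python
# Hypothetical directed graph on 4 nodes, using the convention that
# A[i][j] = 1 means there is an edge from node i to node j.
A = [[0, 1, 1, 0],
     [0, 0, 1, 0],
     [0, 0, 0, 1],
     [1, 0, 0, 0]]

n = len(A)
out_degree = [sum(A[i]) for i in range(n)]                       # row sums
in_degree = [sum(A[i][j] for i in range(n)) for j in range(n)]   # column sums
m = sum(sum(row) for row in A)                                   # number of edges

print("out-degree:", out_degree)   # [2, 1, 1, 1]
print("in-degree: ", in_degree)    # [1, 1, 2, 1]
print("edges m:   ", m)            # 5
```

Under the opposite convention (element (i,j) marks an edge from j to i), the roles of rows and columns are swapped.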

Food for Thought

Do you see that the sum of in-degrees across all nodes v is equal to the number of edges m? The same is true for the sum of out-degrees.

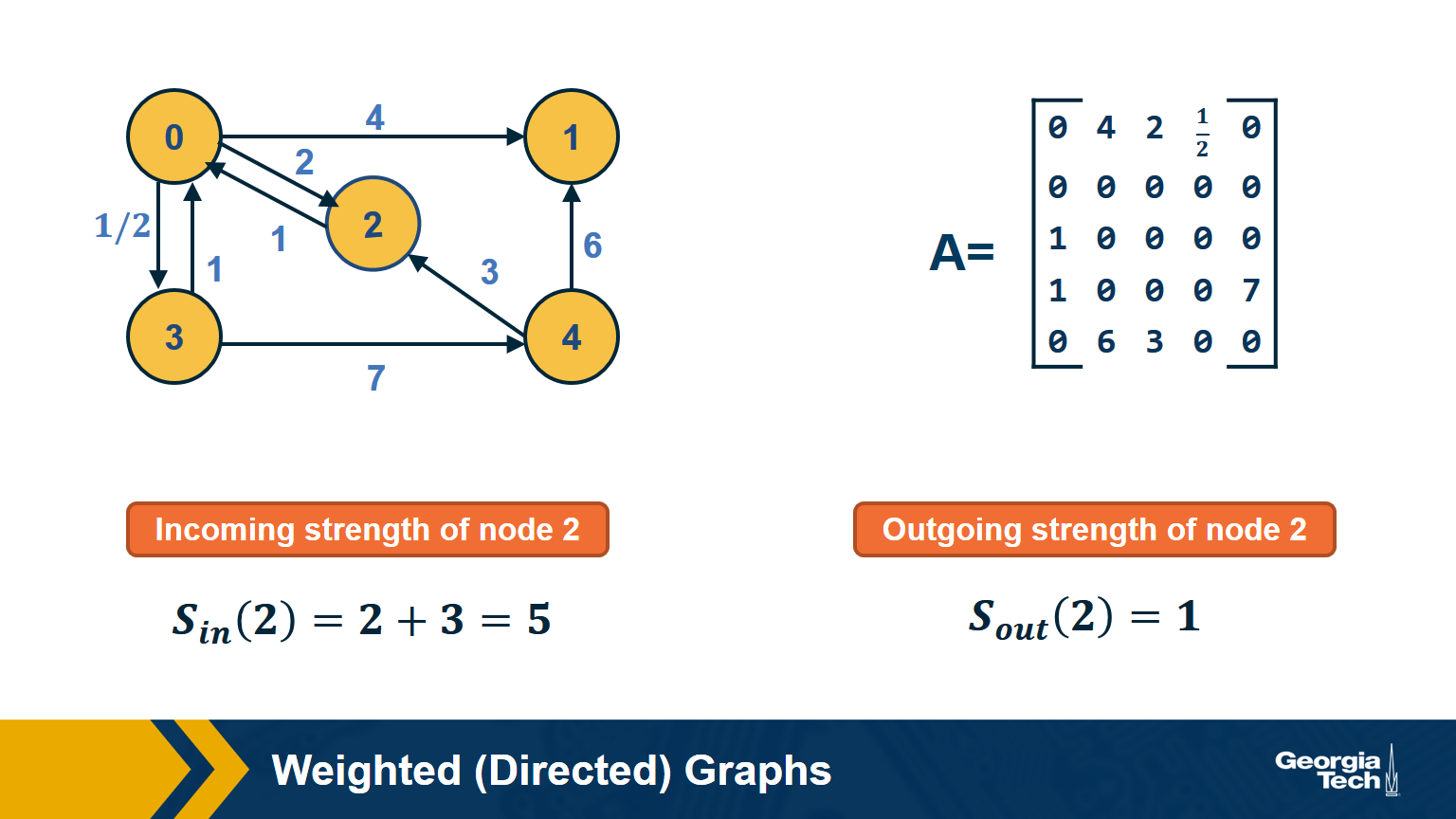

Weighted Directed Graphs

So far we assumed that all edges have the same strength – and the elements of the adjacency matrix are either 0s or 1s. In practice, most graphs have edges of different strength – we can represent the strength of an edge with a number. Such graphs are referred to as weighted.

In some cases the edge weights represent capacity (especially when there is a flow of some sort through the network). In other cases edge weights represent distance or cost (especially when we are interested in optimizing communication efficiency across the network).

In undirected networks, the “strength” of a node is the sum of weights of all edges that are adjacent to that node.

In directed networks, we define in-strength (for incoming edges) and out-strength (for outgoing edges).
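The same row-sum and column-sum idea applies to strengths. The weight matrix below is a hypothetical example in which `W[i][j]` is the weight of the edge from node i to node j and 0 means that there is no edge.

```python
# Hypothetical weighted directed graph: W[i][j] is the weight of edge i -> j.
W = [[0.0, 2.0, 0.5],
     [0.0, 0.0, 1.5],
     [3.0, 0.0, 0.0]]

n = len(W)
out_strength = [sum(W[i]) for i in range(n)]                       # row sums
in_strength = [sum(W[i][j] for i in range(n)) for j in range(n)]   # column sums
print(out_strength)   # [2.5, 1.5, 3.0]
print(in_strength)    # [3.0, 2.0, 2.0]
```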

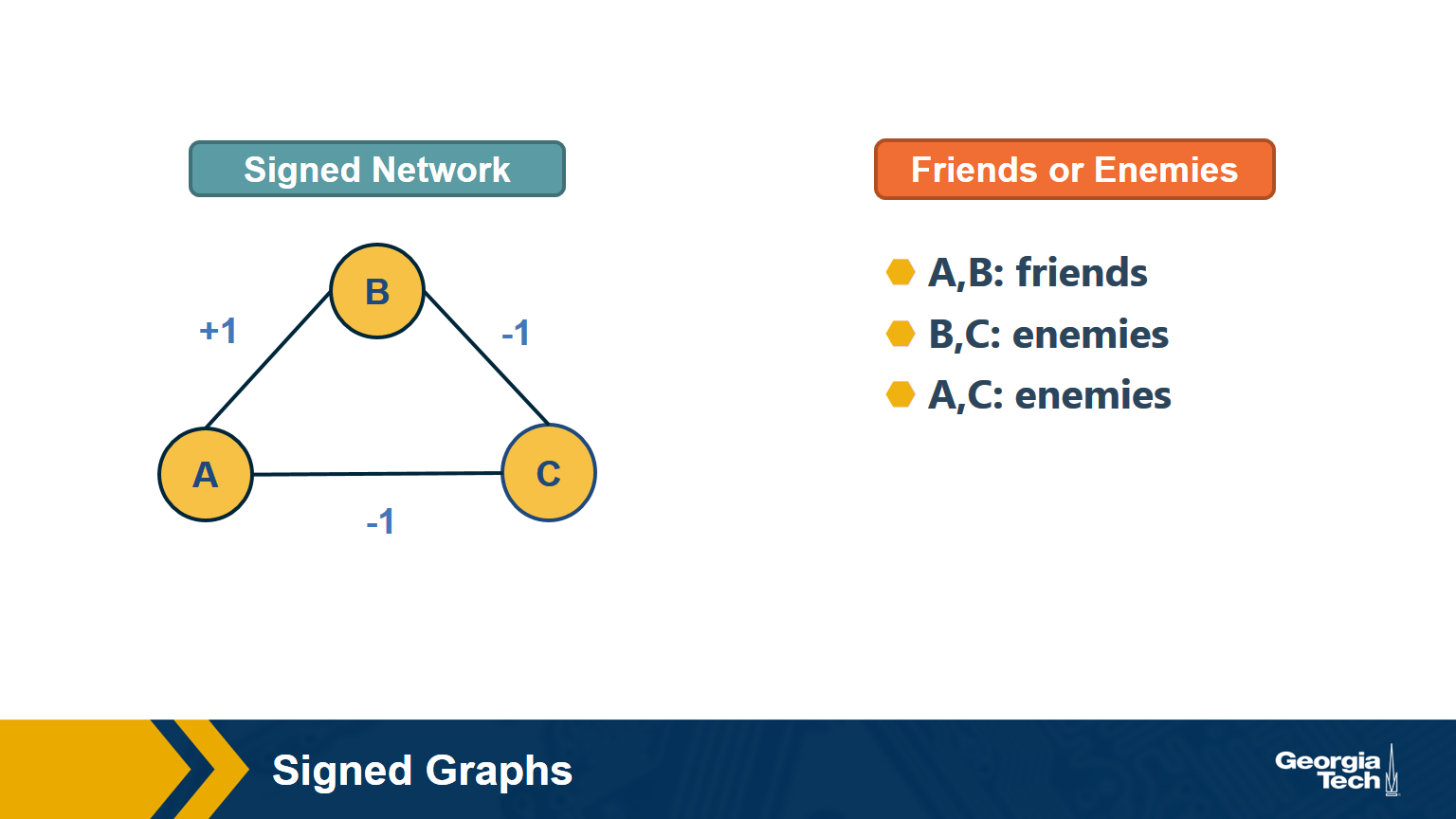

In signed graphs, the edge weights can be negative, representing competitive interactions. For example, think of a network of people in which there are both friends and enemies (as shown in the visualization above).

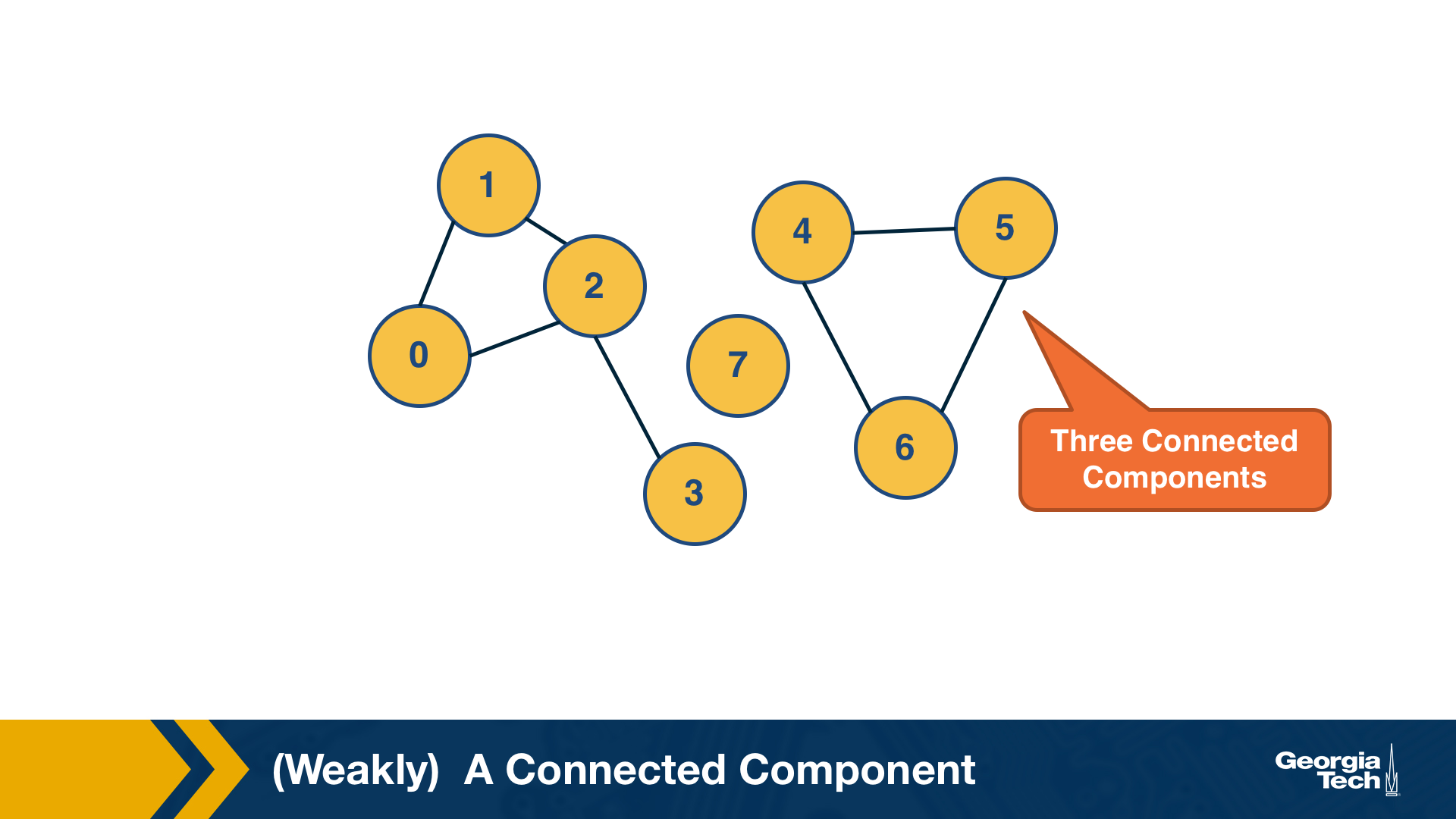

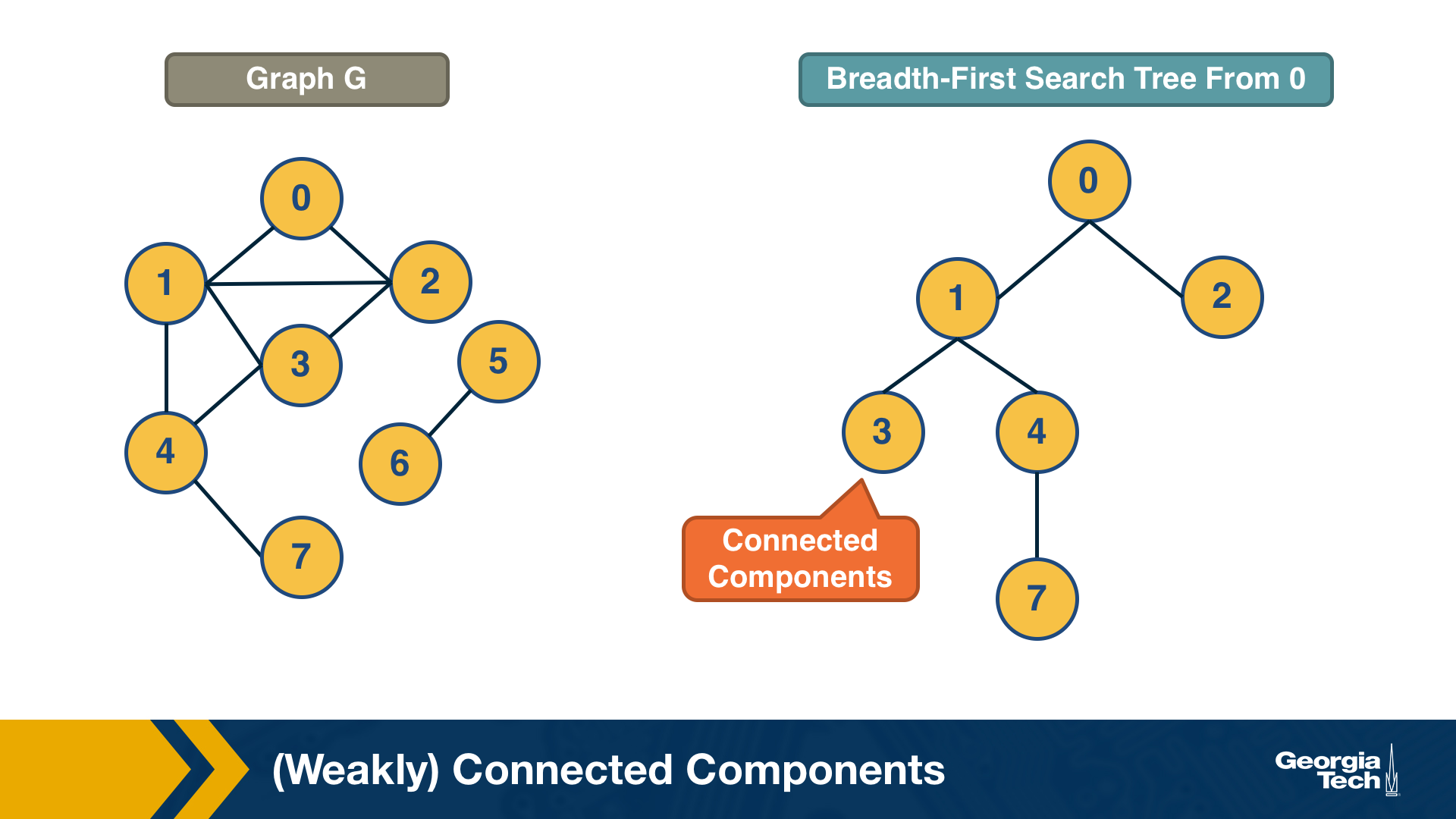

(Weakly) Connected Components

An undirected graph is connected if there is a path from any node to any other node. We say that a directed graph is weakly connected if and only if the graph is connected when the direction of the edge between nodes is ignored. It follows that if a directed graph is strongly connected, it is also weakly connected. In undirected graphs, we can simply refer to connected components.

As we saw in Lesson-1, there are many real-world networks that are not connected – instead, they consist of more than one connected component.

A breadth-first-search (BFS) traversal from a node s can be used to find the set of nodes in the connected component that includes s. Starting from any other node in that component would result in the same connected component.

If we want to compute the set of all connected components of a graph, we can repeat this BFS process starting each time from a node s that does not belong to any previously discovered connected component. The running time of this algorithm is $\Theta(m+n)$, because this is also the running time of BFS if we represent the graph with an adjacency list.
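The repeated-BFS procedure can be sketched as follows, assuming the graph is given as an adjacency list in the form of a dictionary. The function name and the example graph are hypothetical.

```python
from collections import deque

def connected_components(adj):
    """Return the connected components of an undirected graph.

    adj: dict {node: list of neighbors}. One BFS is run per component, so
    each node and each adjacency-list entry is processed once.
    """
    seen = set()
    components = []
    for s in adj:
        if s in seen:
            continue              # s already belongs to a discovered component
        component, queue = [s], deque([s])
        seen.add(s)
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in seen:
                    seen.add(v)
                    component.append(v)
                    queue.append(v)
        components.append(component)
    return components

# Hypothetical graph with two components and one isolated node.
adj = {0: [1, 2], 1: [0], 2: [0], 3: [4], 4: [3], 5: []}
print(connected_components(adj))   # [[0, 1, 2], [3, 4], [5]]
```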

Food for Thought

If you are not familiar with the $O, \Omega, \Theta$ notation, please read about it at: https://en.wikipedia.org/wiki/Big_O_notation

Strongly Connected Components

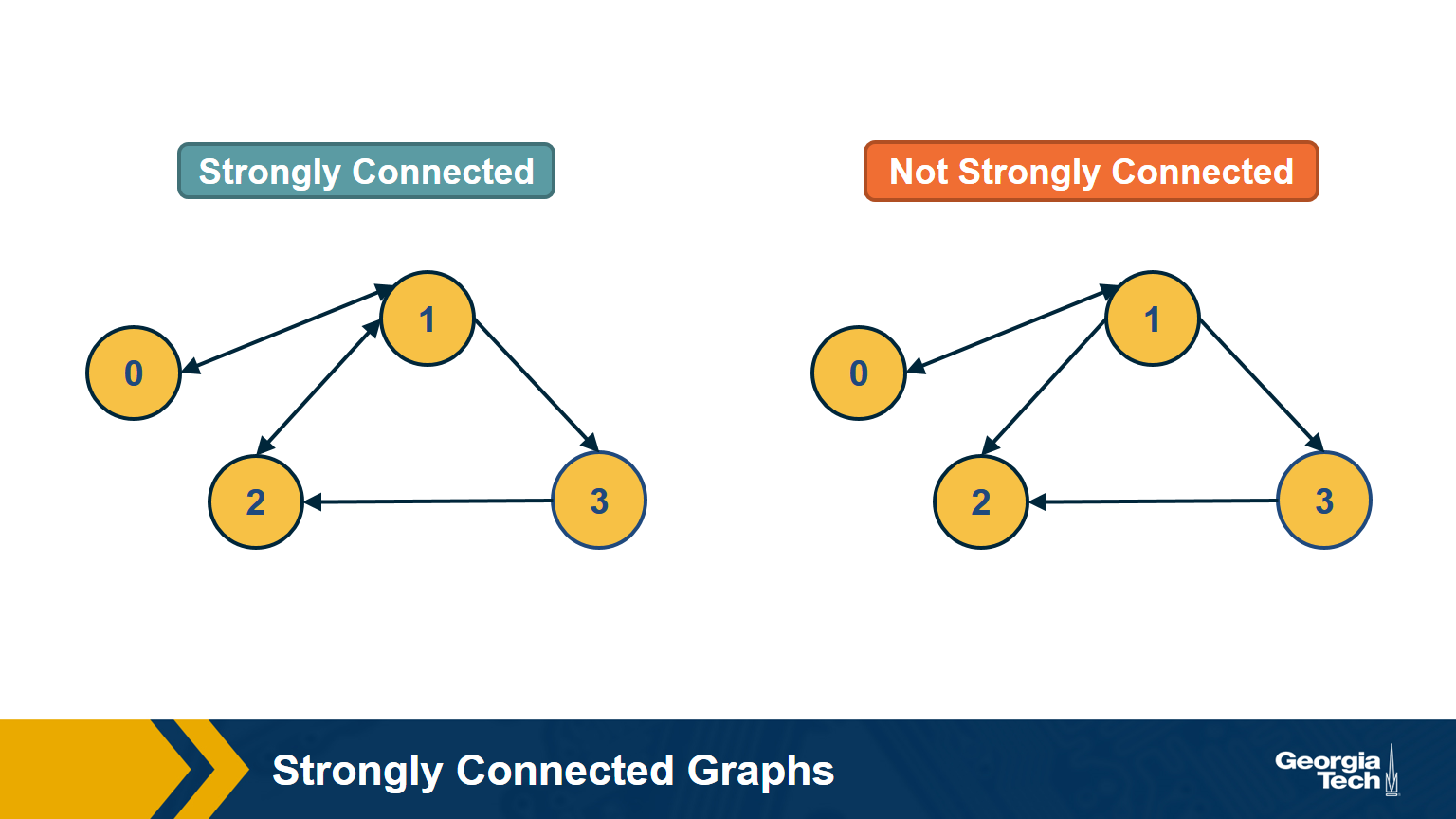

In directed graphs, the notion of connectedness is different: a node s may be able to reach a node t through a (directed) path – but node t may not be able to reach node s.

A directed graph is strongly connected if there is a directed path from every vertex to every other vertex. A strongly connected component (SCC) of a directed graph is a maximal strongly connected subgraph.

If the graph has only one SCC, we say that it is strongly connected. How would you check (in linear time) if a directed graph is strongly connected? Please think about this for a minute before you see the answer below.

Answer

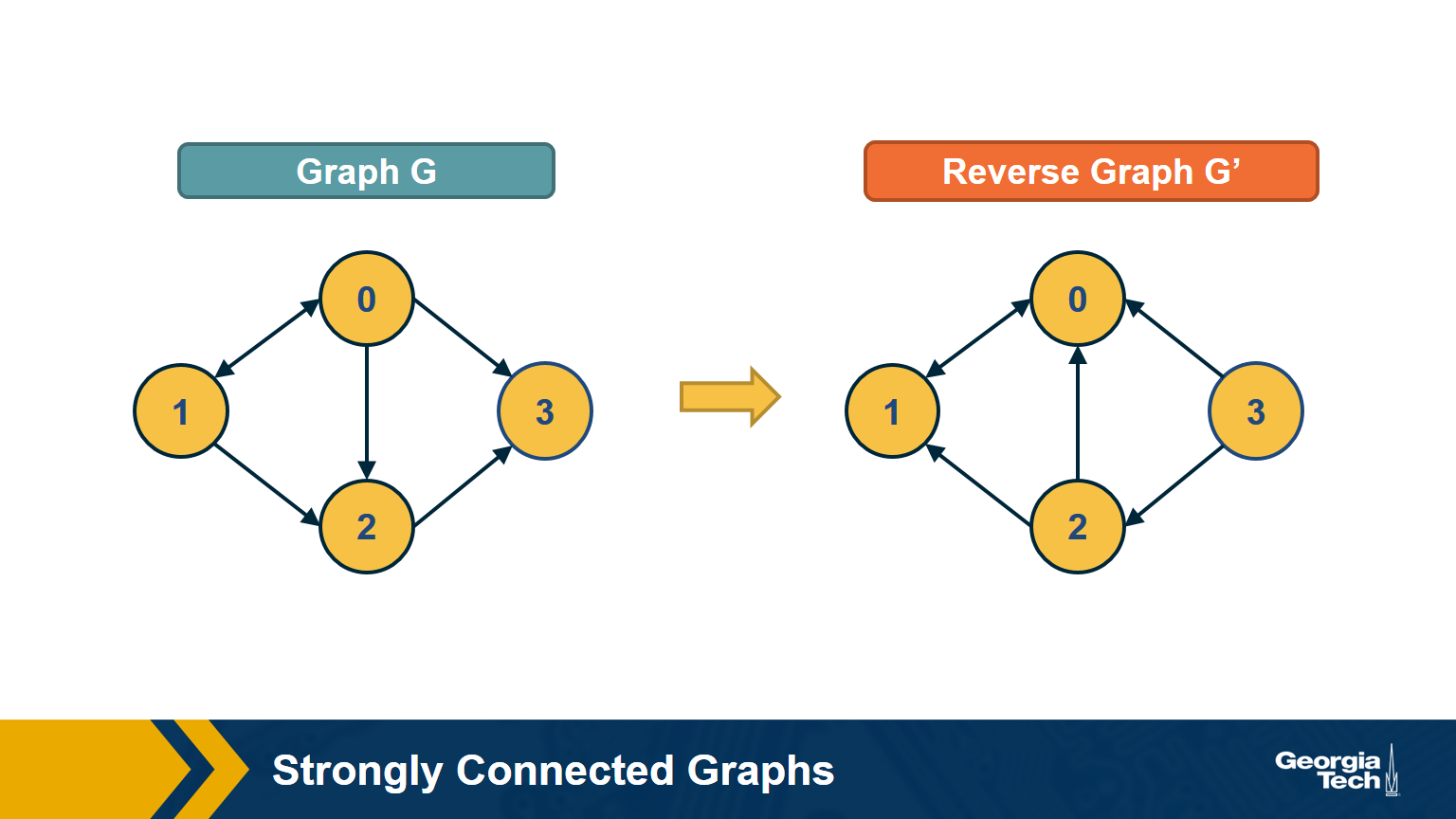

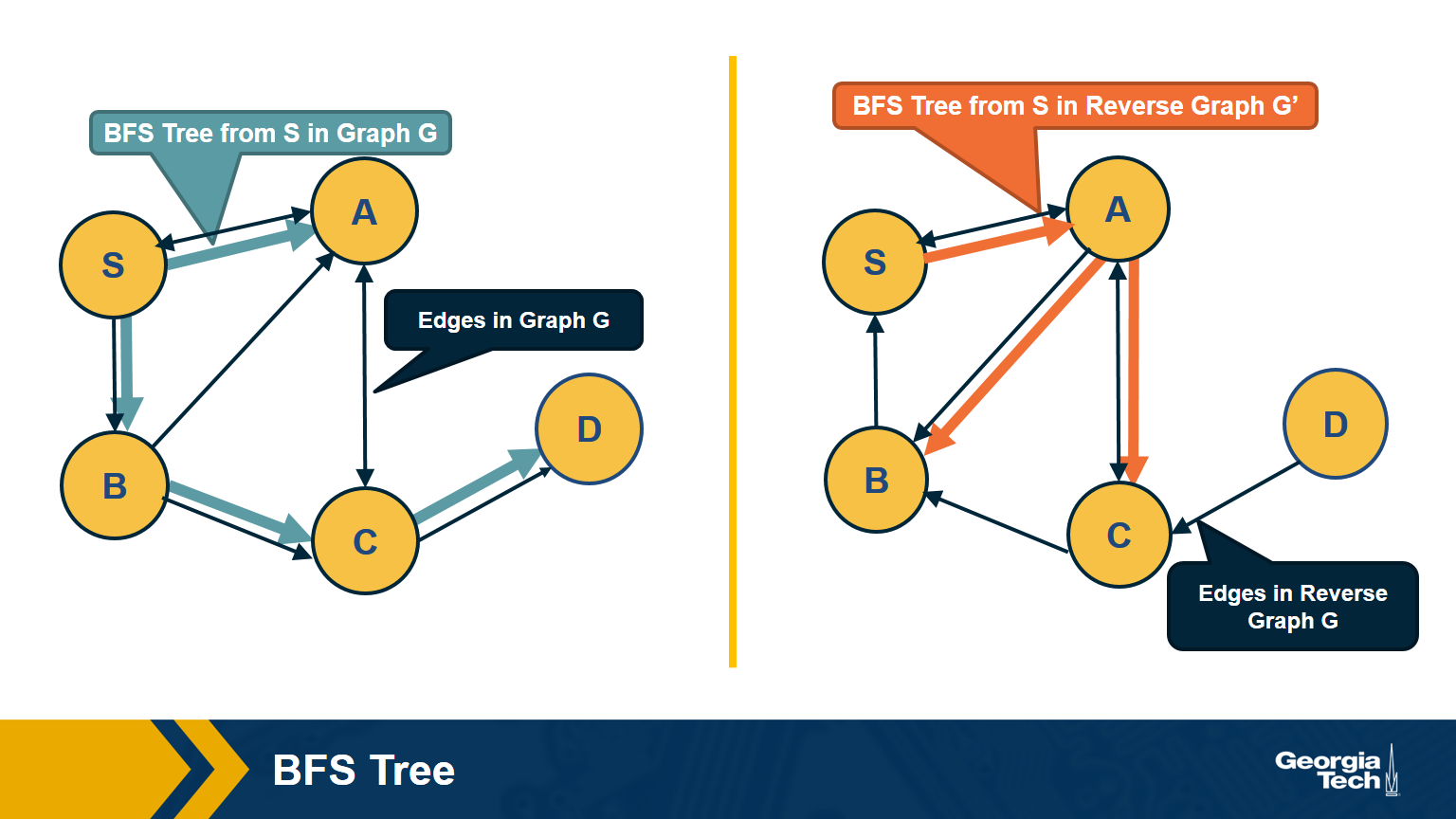

First, note that a directed graph is strongly connected if and only if any node s can reach all other nodes, and every other node can reach s. So, we can pick any node s and then run BFS twice. First, on the original graph G. Second, run BFS on the graph G’ in which each edge has opposite direction than in G – G’ is called the reverse graph of G. If both BFS traversals reach all nodes in G, it must be that G is strongly connected (do you see why?).

The visualization above shows an example in which node D cannot reach S (so S cannot reach D in the reverse graph).
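The two-BFS check described above can be sketched as follows, assuming the directed graph is given as an adjacency-list dictionary. The helper names (`reachable`, `reverse_graph`, `is_strongly_connected`) and the two small example graphs are hypothetical.

```python
from collections import deque

def reachable(adj, s):
    """Set of nodes reachable from s by following directed edges (BFS)."""
    seen = {s}
    queue = deque([s])
    while queue:
        u = queue.popleft()
        for v in adj.get(u, []):
            if v not in seen:
                seen.add(v)
                queue.append(v)
    return seen

def reverse_graph(adj):
    """Build G', the graph in which every edge of G has the opposite direction."""
    rev = {u: [] for u in adj}
    for u, neighbors in adj.items():
        for v in neighbors:
            rev.setdefault(v, []).append(u)
    return rev

def is_strongly_connected(adj):
    nodes = set(adj) | {v for neighbors in adj.values() for v in neighbors}
    if not nodes:
        return True
    s = next(iter(nodes))  # any starting node works
    # s must reach every node in G, and every node must reach s (BFS on G').
    return reachable(adj, s) == nodes and reachable(reverse_graph(adj), s) == nodes

cycle = {"S": ["A"], "A": ["D"], "D": ["S"]}   # directed 3-cycle
chain = {"S": ["A"], "A": ["D"], "D": []}      # D cannot reach S
print(is_strongly_connected(cycle), is_strongly_connected(chain))
```

Building the reverse graph and running both traversals each take time linear in the number of nodes and edges.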

How would you compute the set of strongly connected components in a directed graph? Two famous algorithms to do so are Tarjan’s algorithm and Kosaraju’s algorithm. They both rely on Depth-First Search traversals and run in $\Theta(n+m)$ time, if the graph is represented with an adjacency list.

Food for Thought

We suggest you study Tarjan’s or Kosaraju’s algorithm. For instance, Kosaraju’s algorithm is described at: https://en.wikipedia.org/wiki/Kosaraju%27s_algorithm

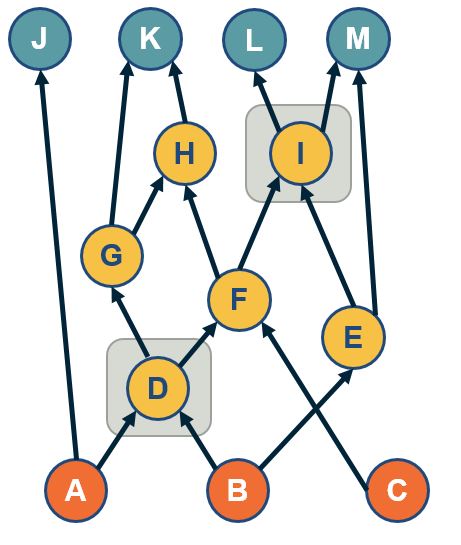

Directed Acyclic Graphs (DAGs)

A special class of directed graphs that is common in network science is those that do not have any cycles. They are referred to as directed acyclic graphs or DAGs. DAGs are common because they represent generalized hierarchy and dependency networks. In a generalized hierarchy a node may have more than one parent. An example of a dependency network is the prerequisite relations between college courses. We say that a directed network has a topological order if we can rank its nodes so that every edge points from a node of lower rank to a node of higher rank.

A directed graph G:

A topological ordering of graph G (the nodes are arranged so that all edges point from nodes at the left to nodes at the right):

Important facts about DAGs:

- If a directed network has a topological order then it must be a DAG.

  - This is easy to show: if the network had a cycle, there would be an edge from a higher-rank node to a lower-rank node – but this would violate the topological order property.

- A DAG must include at least one source node, i.e., a node with zero incoming edges.

  - To see that, start from any node of the DAG and start moving backwards, following edges in the opposite direction. Given that there are no cycles and the graph has a finite number of nodes, we will eventually reach a source node.

- If a graph is a DAG, then it must have a topological ordering.

  - You can show this as follows:

    - Start from a source node s (we already showed that every DAG has at least one source node).

    - Then remove that source node s and decrement the in-degree of all nodes that s points to. The graph is still a DAG after the removal of s.

    - Choose a new source node s’ and repeat the previous step until all nodes are removed. Note that the topological order of a DAG may not be unique.

Example:

Topological Ordering: G,A,B,E,D,C,F

Remove G:

Remove A:

Remove B:

And continue to remove E,D,C,F.
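The source-removal argument above is also a practical algorithm (often attributed to Kahn). The sketch below assumes an adjacency-list dictionary; the function name `topological_order` is hypothetical, and the edge set of `dag` is a made-up example that merely uses the same node labels as the ordering listed above, not the edges of the figure.

```python
from collections import deque

def topological_order(adj):
    """Repeatedly remove a source node and decrement the in-degree of its successors."""
    in_degree = {u: 0 for u in adj}
    for u in adj:
        for v in adj[u]:
            in_degree[v] = in_degree.get(v, 0) + 1
    sources = deque(u for u in in_degree if in_degree[u] == 0)
    order = []
    while sources:
        s = sources.popleft()
        order.append(s)
        for v in adj.get(s, []):
            in_degree[v] -= 1          # "remove" the edge (s, v)
            if in_degree[v] == 0:      # v has become a source node
                sources.append(v)
    if len(order) != len(in_degree):
        raise ValueError("the graph has a cycle, so no topological order exists")
    return order

# Hypothetical DAG (assumed edges, for illustration only).
dag = {"G": ["A", "B"], "A": ["E"], "B": ["E", "D"],
       "E": ["C"], "D": ["C"], "C": ["F"], "F": []}
order = topological_order(dag)
print(order)
```

When several source nodes are available at the same time, any of them may be removed next, which is why the topological order is generally not unique.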

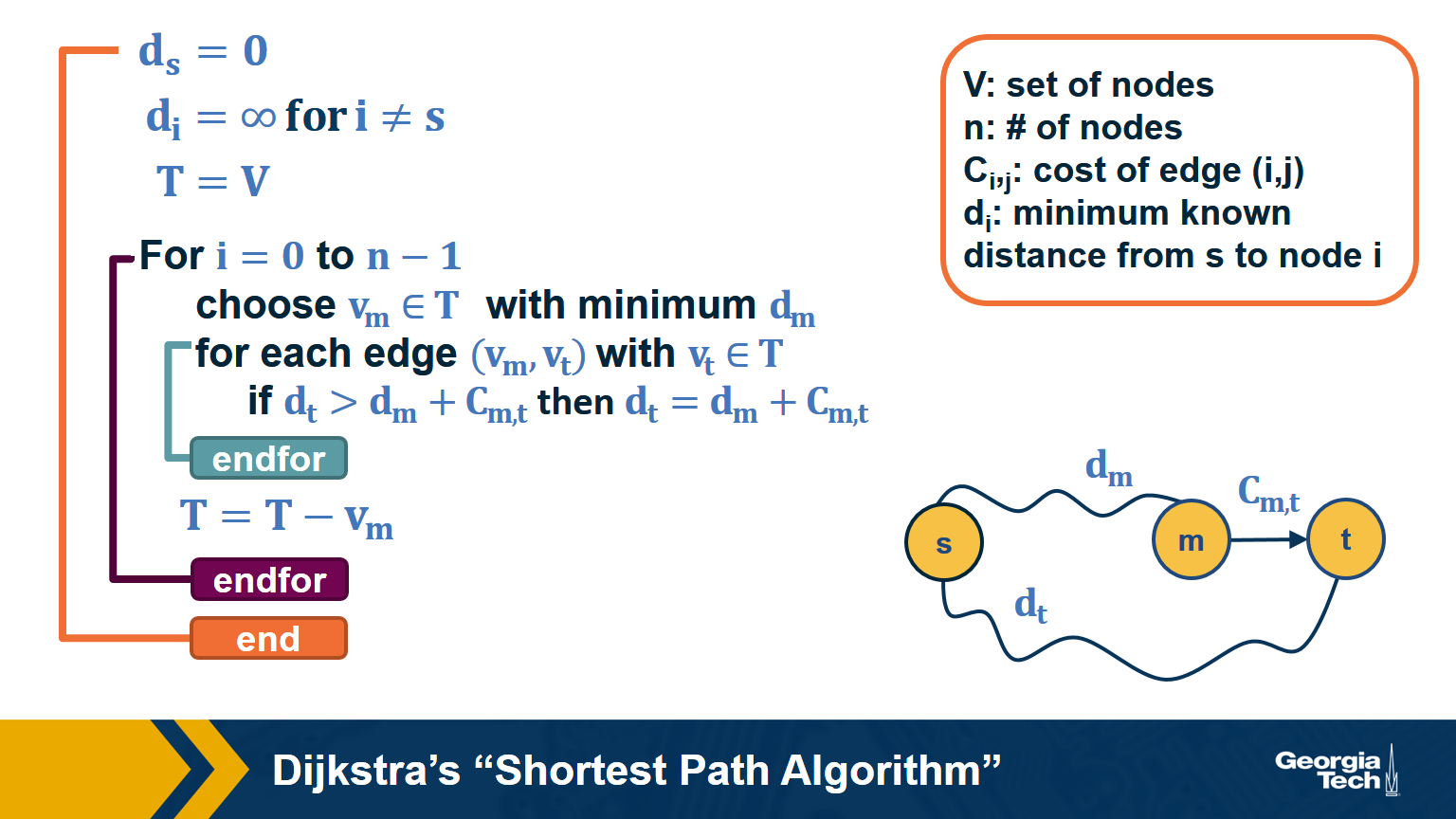

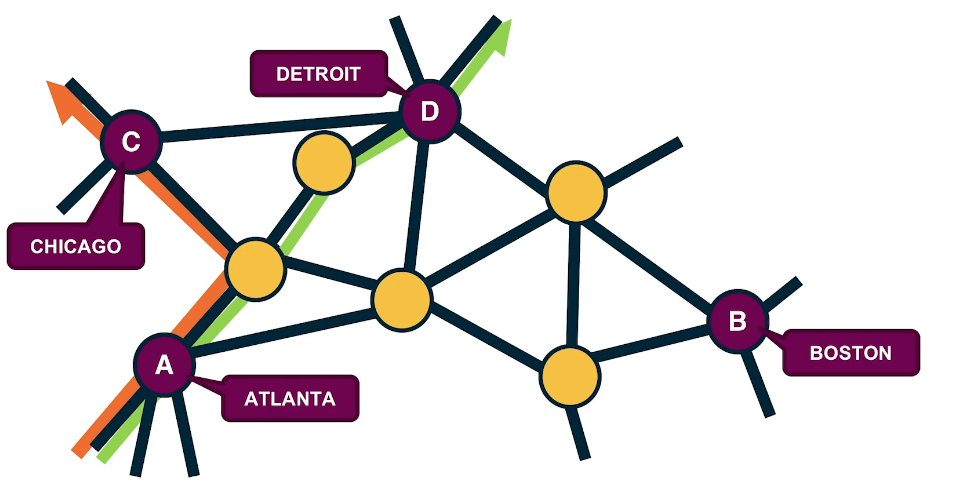

Dijkstra’s Shortest Path Algorithm

We are often interested in the shortest path (or paths) between a pair of nodes. Such paths represent the most efficient way to move in a network.

In unweighted networks, all edges have the same cost, and the shortest path from a node s to any other node in the same connected component (or SCC for directed networks) can be easily computed in linear time using a Breadth-First Search traversal from node s.

If the network is weighted, and the weight of each edge is its “length” or “cost”, we can use Dijkstra’s algorithm, shown above, to compute the shortest path from s to any other node. Note that this algorithm is applicable only if the weights are non-negative.

The key idea in the algorithm is that in each iteration we select the unexplored node m with the minimum known distance from s – that distance cannot get any shorter in subsequent iterations. We then update the minimum known distance to every neighbor t of m that we have not explored yet, if that distance is larger than the distance from s to m plus the cost of the edge from m to t.

If the network is weighted and some weights are negative, then instead of Dijkstra’s algorithm we can use the Bellman-Ford algorithm, which is a classic example of dynamic programming. The running time of Bellman-Ford is $O(mn)$, where m is the number of edges and n is the number of nodes. On the other hand, the running time of Dijkstra’s algorithm is $O(m + n\log n)$ if the latter is implemented with a Fibonacci heap (to identify the node with the minimum distance from s in each iteration of the loop).
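A minimal sketch of Dijkstra’s algorithm is shown below. As a simplifying assumption it uses Python’s binary heap (`heapq`) rather than a Fibonacci heap, so its running time is $O((m+n)\log n)$ instead of the bound quoted above. The function name `dijkstra`, the adjacency-list format and the example graph are hypothetical.

```python
import heapq

def dijkstra(adj, s):
    """Shortest distances from s; adj maps a node to a list of (neighbor, weight) pairs."""
    dist = {s: 0}
    explored = set()
    heap = [(0, s)]
    while heap:
        d, m = heapq.heappop(heap)   # unexplored node with the minimum known distance
        if m in explored:
            continue                 # stale heap entry, m was already finalized
        explored.add(m)
        for t, w in adj.get(m, []):
            if w < 0:
                raise ValueError("Dijkstra's algorithm requires non-negative weights")
            # Relax edge (m, t) if going through m gives a shorter distance to t.
            if t not in explored and d + w < dist.get(t, float("inf")):
                dist[t] = d + w
                heapq.heappush(heap, (dist[t], t))
    return dist

# Hypothetical weighted directed graph.
adj = {"s": [("a", 4), ("b", 1)], "b": [("a", 2), ("c", 5)], "a": [("c", 1)], "c": []}
dist = dijkstra(adj, "s")
print(dist)
```

In this example the direct edge from s to a costs 4, but the path through b costs 3, so the distance to a is updated when b is explored.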

Food for Thought

If you are not familiar with Fibonacci heaps, we suggest you review that data structure at: https://en.wikipedia.org/wiki/Fibonacci_heap

Random Walks

In some cases, we do not have a complete map of the network. Instead, we only know the node that we currently reside at, and the connections of that node. When that is the case, it is often useful to perform a random walk in which we move randomly between network nodes.

The simplest example of a random walk is to imagine a walker that is stationed at node $v$. The walker then randomly selects a neighbor of $v$ and moves to that neighbor. If the network is unweighted, the walker will transition along each edge with a probability of $\frac{1}{k}$ where $k$ is the number of outgoing edges for that particular node. If a network is weighted, the transition probabilities are functions of the edge weights. These transition probabilities can be represented with a matrix $P$ in which the $(i,j)$ element is the probability that the walker moves from node $i$ to node $j$.

If the walker continues randomly moving from neighbor to neighbor and recording the number of times it transitions along a particular edge, a probability distribution of finding the walker on a particular node at any given time emerges. This distribution is known as the stationary distribution.

So how can we calculate this distribution using our transition matrix $P$? There are two ways to approach this. For the first, we need a vector that represents the walker probability for each node at a time $t$. Let’s call this vector $q_t$. Often we are given the probability values for the initial state of each node, but the stationary distribution is not dependent on the initial state probabilities. This means we can assign each node any initial probability so long as they all add up to 1. Next, we describe each iteration of the random walk by the equation: $q_{t+1} = P^Tq_t$, where $P^T$ is the transpose of the transition matrix. For each iteration of $t$, the $i_{th}$ element of the resulting vector $q_{t+1}$ is nothing but the probability of $i$ being the current node calculated as the probability incoming edge $(j,i)$ was taken, times the probability that the walker was at previous node $j$. We can express this as

\[\begin{aligned} P(current_{node} = i) &= \sum_{j=1}^N P(edge(prev_{node}=j,current_{node}=i )) \\ &\times P(prev_{node}=j) \end{aligned}\]

where $N$ is the total number of nodes in the graph. As $t$ increases, the probability values of $q_t$ will converge asymptotically. Note that the sum of the elements of $q_t$ will remain equal to 1 for any time $t$.

Even though this method takes several iterations of time to find the distribution, note that the distribution itself does not change over time. Exploiting this fact leads us to our second method as follows.

Let $q$ be the stationary distribution expressed as a column vector. It satisfies the relationship $P^Tq = q$ for transition matrix $P$. Recall from linear algebra that a matrix $T$ has an eigenvector $v$ with eigenvalue $\lambda$ if $Tv= \lambda v$. From this, we can see that the stationary distribution is an eigenvector of $P^T$ with eigenvalue $\lambda = 1$, normalized so that its elements sum to 1.

For example:

Then the transition matrix:

\[P = \begin{bmatrix} 0 & 1 & 0 \\ 0 & 0 & 1 \\ .5 & .5 & 0 \end{bmatrix},\]

And the stationary distribution:

\[\begin{aligned} q &= P^Tq \\ q &= \begin{bmatrix} 1/5 \\ 2/5 \\ 2/5 \end{bmatrix} \end{aligned}\]

An important result of this is that, in undirected and connected networks, a stationary distribution always exists. It is not, however, necessarily unique. See also the first “food-for-thought” question below.
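Both methods can be checked numerically on the three-node example above. The sketch below iterates $q_{t+1} = P^Tq_t$ from an arbitrary initial vector and then verifies with exact fractions that $(1/5, 2/5, 2/5)$ is a fixed point. The helper name `step` and the number of iterations are arbitrary choices.

```python
from fractions import Fraction

# Transition matrix of the example: P[i][j] is the probability of moving from i to j.
P = [[0, 1, 0],
     [0, 0, 1],
     [0.5, 0.5, 0]]

def step(P, q):
    """One iteration of the random walk: returns P^T q."""
    n = len(P)
    return [sum(P[j][i] * q[j] for j in range(n)) for i in range(n)]

# Method 1: iterate from an arbitrary initial distribution that sums to 1.
q = [1.0, 0.0, 0.0]
for _ in range(200):
    q = step(P, q)
print(q)

# Method 2: check exactly that the claimed vector satisfies q = P^T q.
half = Fraction(1, 2)
P_exact = [[0, 1, 0], [0, 0, 1], [half, half, 0]]
q_exact = [Fraction(1, 5), Fraction(2, 5), Fraction(2, 5)]
is_fixed_point = step(P_exact, q_exact) == q_exact
print(is_fixed_point)
```

The iteration converges here because this particular walk is not periodic; on other networks the iterative method may oscillate even when a stationary distribution exists.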

Food for Thought

- Show that in undirected and connected networks in which the elements of the matrix P are strictly positive (and so there is at least a small probability of transitioning from every node to every other node), the steady-state probability vector q is unique and it is the leading eigenvector of the matrix $P^T$. Hint: the largest eigenvalue of $P^T$ is equal to 1. Why?

- What can go wrong with the stationary distribution equation in directed networks?

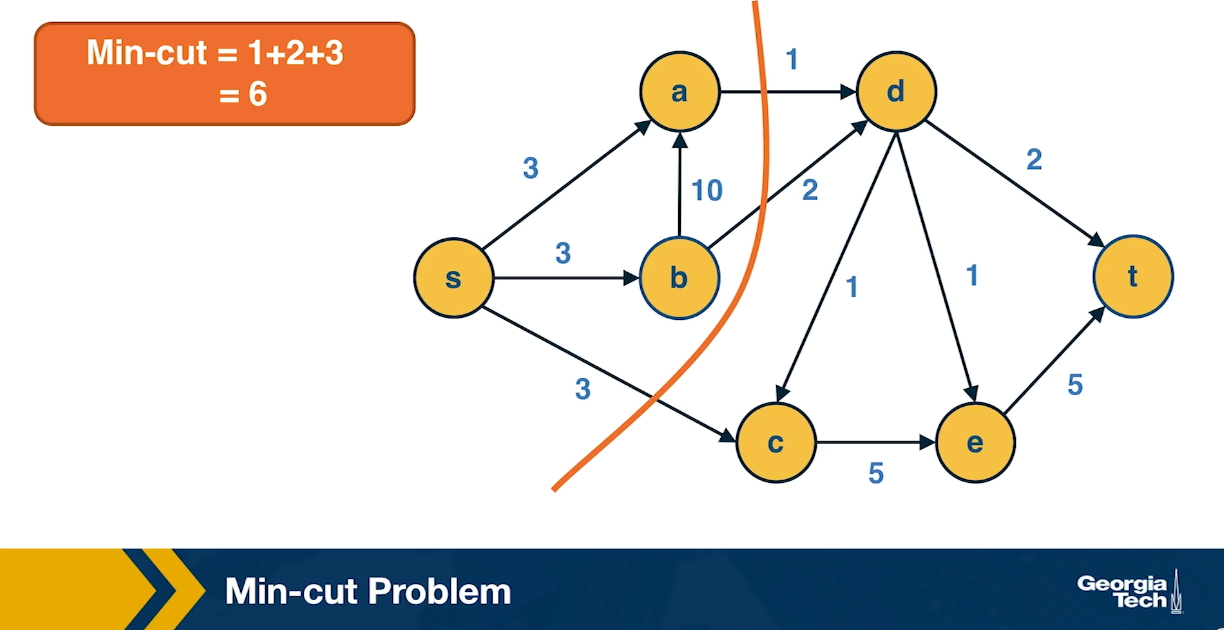

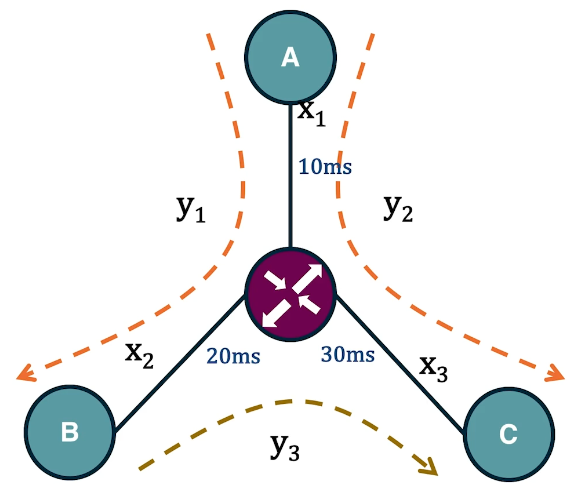

Min-Cut Problem

Another important concept in graph theory (and network science) is the notion of a minimum cut (or min-cut). Given a graph with a source node s and a sink node t, we define as cut(s,t) of the graph a set of edges that, if removed, will disrupt all paths from s to t.

In unweighted networks, the min-cut problem refers to computing the cut with the minimum number of edges. In weighted networks, the min-cut refers to the cut with minimum sum of edge weights.

Max-flow Problem

Another problem that occurs naturally in networks that have a source node s and a target node t is to compute a “flow” from s to t.

The edge weights here represent the capacity of each edge, i.e., an edge of weight w>0 cannot carry more than w flow units.

Additionally, edges cannot have a negative flow.

The total flow that arrives at a non-terminal node v has to be equal to the total flow that departs from v – in other words, flow is conserved.

The max-flow problem refers to computing the maximum amount of flow that can originate at s and terminate at t, subject to the capacity constraints and the flow conservation constraints.

The max-flow problem can be solved efficiently using the Ford-Fulkerson algorithm, as long as the capacities are rational numbers. With integer capacities, the running time of the algorithm is $O(mF)$, where m is the number of edges and F is the value of the maximum flow: each augmenting path can be found in $O(m)$ time and increases the flow by at least one unit.

The algorithm works by constructing a residual network, which shows at any point during the execution of the algorithm the residual capacity of each edge. In each iteration, the algorithm finds a path from s to t with some residual capacity (we can use BFS or DFS on the residual network to do that). Suppose that the minimum residual capacity among the edges of the chosen path is f. We add f on the flow of every edge (u,v) along that path, and decrease the capacity of those edges by f in the residual network. We also add f on the capacity of every reverse edge (v,u) of the residual network. The capacity of those reverse edges is necessary so that we can later reduce the flow along the edge (u,v), if needed, by routing some flow on the edge (v,u).

In the next step, $s \rightarrow a \rightarrow t$ reduces by 1 unit

Then:

Then:

So the max flow is 3.
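The residual-network procedure described above can be sketched in code. This version finds augmenting paths with BFS (the variant usually called Edmonds-Karp), which is one of the two traversal options mentioned in the text. The function name `max_flow`, the capacity-dictionary format and the example network are hypothetical and are not the network of the figures.

```python
from collections import deque

def max_flow(capacity, s, t):
    """capacity maps a directed edge (u, v) to its capacity; returns the max-flow value."""
    # residual[u][v] is the remaining capacity of edge (u, v) in the residual network.
    residual = {}
    for (u, v), c in capacity.items():
        residual.setdefault(u, {})
        residual.setdefault(v, {})
        residual[u][v] = residual[u].get(v, 0) + c
        residual[v].setdefault(u, 0)          # reverse edge starts with capacity 0
    flow = 0
    while True:
        # BFS for a path from s to t that still has residual capacity.
        parent = {s: None}
        queue = deque([s])
        while queue and t not in parent:
            u = queue.popleft()
            for v, r in residual[u].items():
                if r > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if t not in parent:
            return flow                       # no augmenting path is left
        # f is the minimum residual capacity along the chosen path.
        f = float("inf")
        v = t
        while parent[v] is not None:
            f = min(f, residual[parent[v]][v])
            v = parent[v]
        # Push f units: reduce forward capacities, increase reverse capacities.
        v = t
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= f
            residual[v][u] += f
            v = u
        flow += f

# Hypothetical network with source "s" and sink "t".
capacity = {("s", "a"): 3, ("s", "b"): 2, ("a", "b"): 1, ("a", "t"): 1, ("b", "t"): 3}
flow_value = max_flow(capacity, "s", "t")
print(flow_value)
```

In this example the edges (s,b), (a,b) and (a,t) form a cut of total capacity 4, so no flow can exceed 4, and the algorithm reaches that value.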

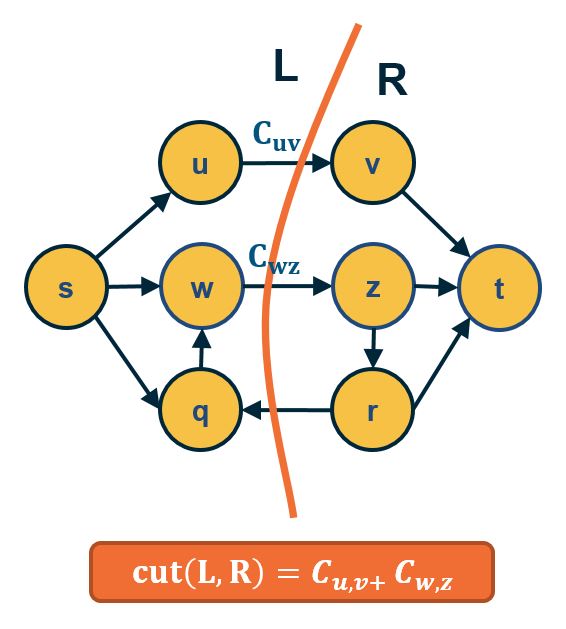

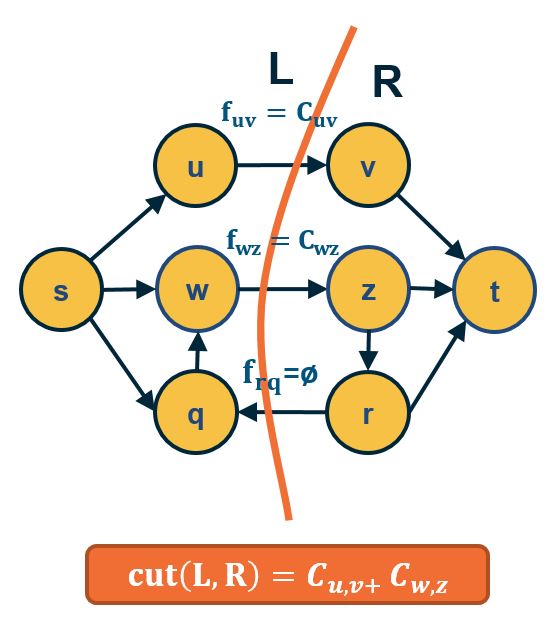

Max-flow=Min-cut

An important result about the min-cut and max-flow problems is that they have the same solution: the sum of the weights of the min-cut is equal to the max-flow in that network.

- Part A:

  - ANY cut(L,R) such that s∈L and t∈R has capacity ≥ ANY flow from s to t.

  - Thus: mincut(s,t) ≥ maxflow(s,t)

- Part B:

  - If $f^*=$ maxflow(s,t), the network can be partitioned into two sets of nodes L and R with s∈L and t∈R, such that:

    - All edges from L to R have flow = capacity.

    - All edges from R to L have flow = 0.

  - So, edges from L to R define a cut(s,t) with capacity = maxflow(s,t) and, because of Part A, this cut is mincut(s,t).

  - Thus: mincut(s,t) = maxflow(s,t)
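The inequality in Part A can be written out as a short worked derivation. The notation here is an assumption introduced for illustration: $f(u,v)$ is the flow on edge $(u,v)$, $c(u,v)$ is its capacity, and $|f|$ is the total flow from s to t.

\[\begin{aligned} |f| &= \sum_{u \in L, v \in R} f(u,v) - \sum_{u \in R, v \in L} f(u,v) \\ &\le \sum_{u \in L, v \in R} f(u,v) \\ &\le \sum_{u \in L, v \in R} c(u,v) = \text{capacity of cut}(L,R) \end{aligned}\]

The first line follows from flow conservation at every non-terminal node, the second from the fact that flows cannot be negative, and the third from the capacity constraints. Both inequalities become equalities exactly under the two conditions of Part B.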

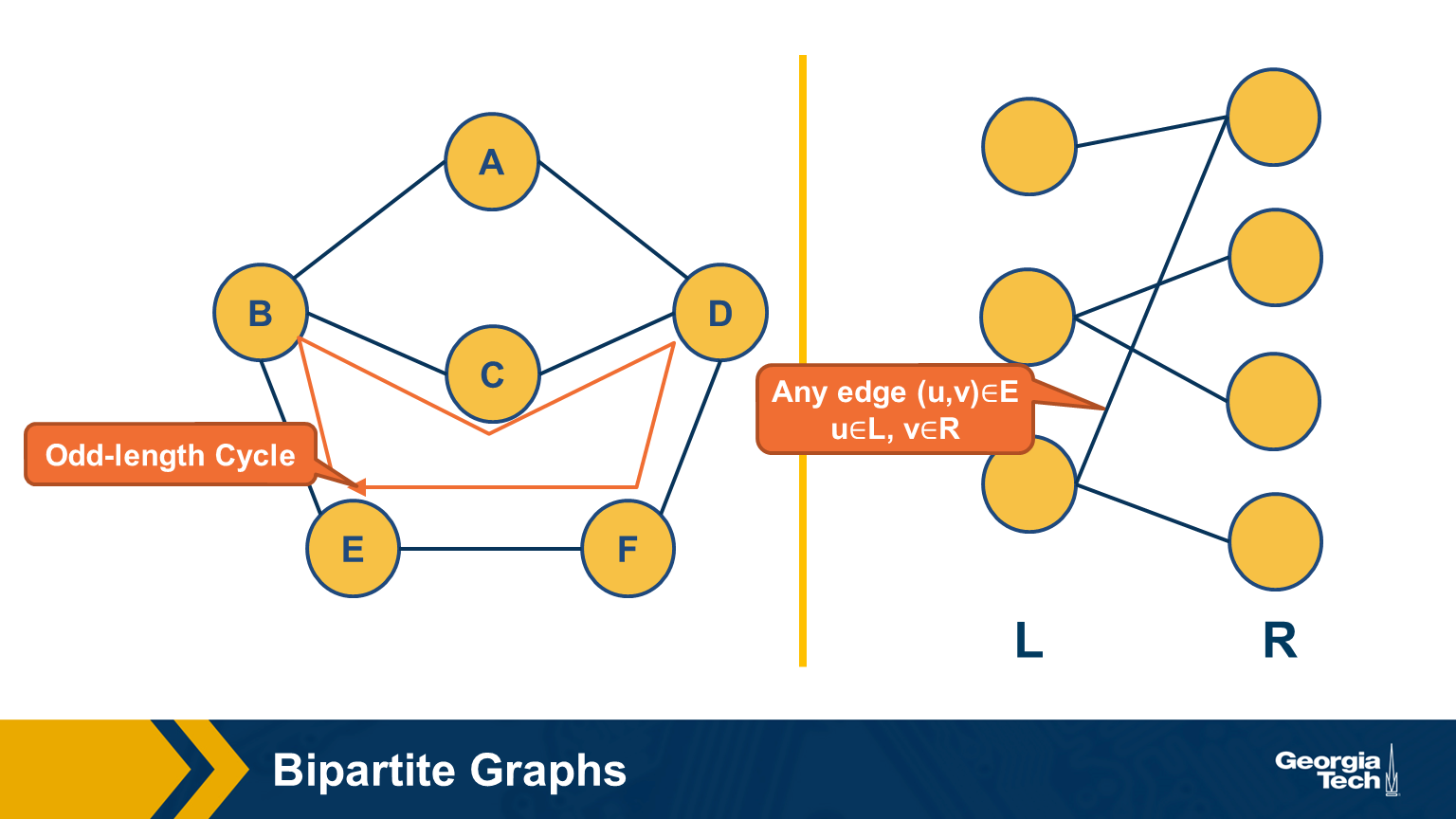

Bipartite Graphs

Another important class of networks is bipartite graphs. Their defining property is that the set of nodes V can be partitioned into two subsets, L and R, so that every edge connects a node from L and a node from R. There are no edges between L nodes – or between R nodes.

Food for Thought

Show the following theorem. A graph is bipartite if and only if it does not include any odd-length cycles.
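One way to test whether a graph is bipartite in practice is to try to assign every node to L or R with a BFS traversal, giving each node the opposite side of the neighbor it was discovered from. The sketch below assumes an undirected adjacency-list dictionary; the function name `two_color` and the example graphs are hypothetical.

```python
from collections import deque

def two_color(adj):
    """Return a dict node -> 0 or 1 (side L or R), or None if the graph is not bipartite."""
    side = {}
    for start in adj:
        if start in side:
            continue
        side[start] = 0
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in side:
                    side[v] = 1 - side[u]     # neighbors go to the opposite side
                    queue.append(v)
                elif side[v] == side[u]:
                    return None               # an edge inside L or inside R
    return side

square = {1: [2, 4], 2: [1, 3], 3: [2, 4], 4: [3, 1]}   # cycle of length 4
triangle = {1: [2, 3], 2: [1, 3], 3: [1, 2]}            # cycle of length 3
print(two_color(square), two_color(triangle))
```

The even cycle is split into two sides without conflict, while the odd cycle is rejected.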

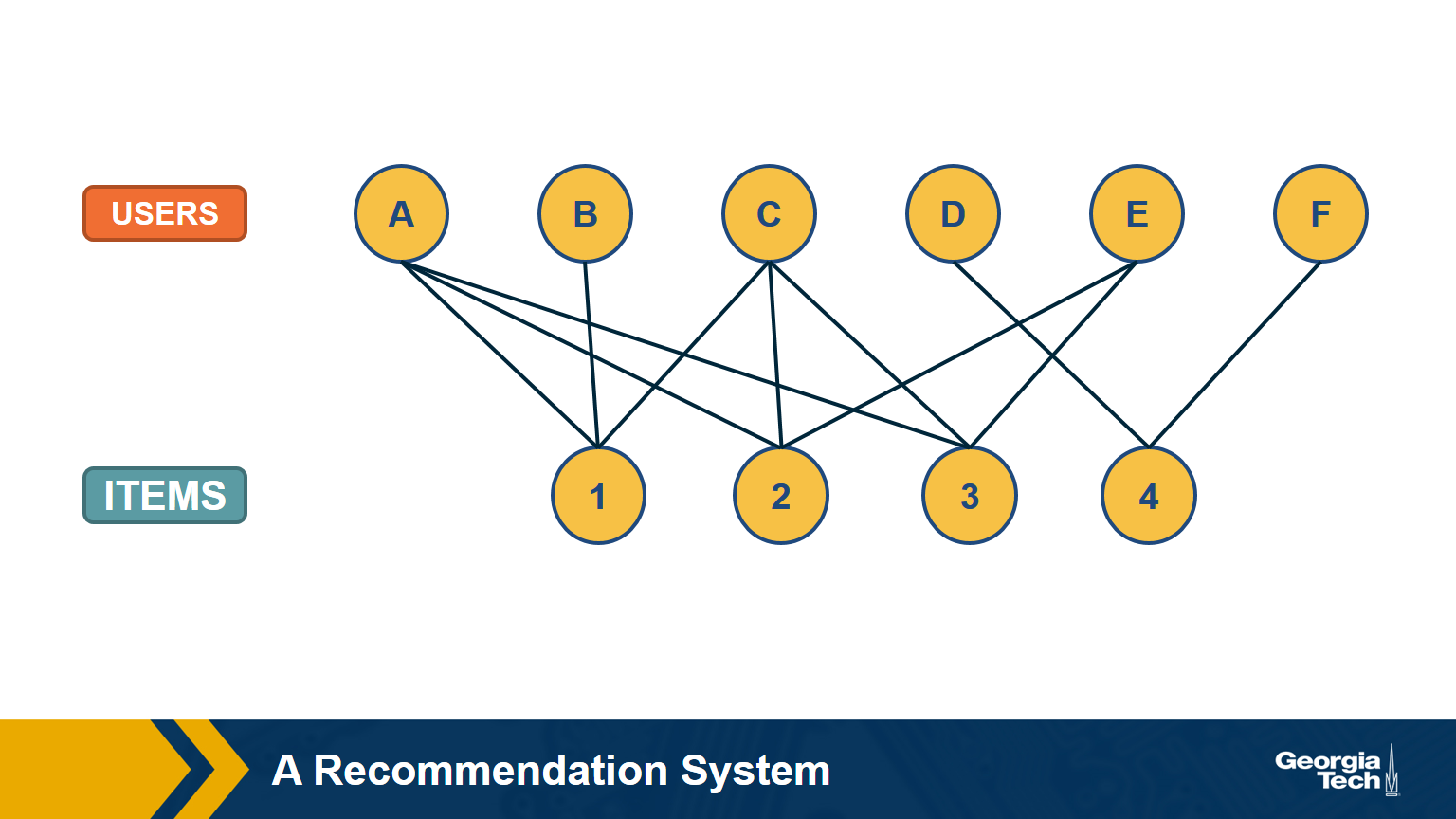

A Recommendation System as a Bipartite Graph

Let’s close this lesson with a practical application of bipartite graphs.

Suppose you want to create a “recommendation system” for an e-commerce site. You are given a dataset that includes the items that each user has purchased in the past. You can represent this dataset with a bipartite graph that has users on one side and items on the other side. Each edge (user, item) represents that that user has purchased the corresponding item.

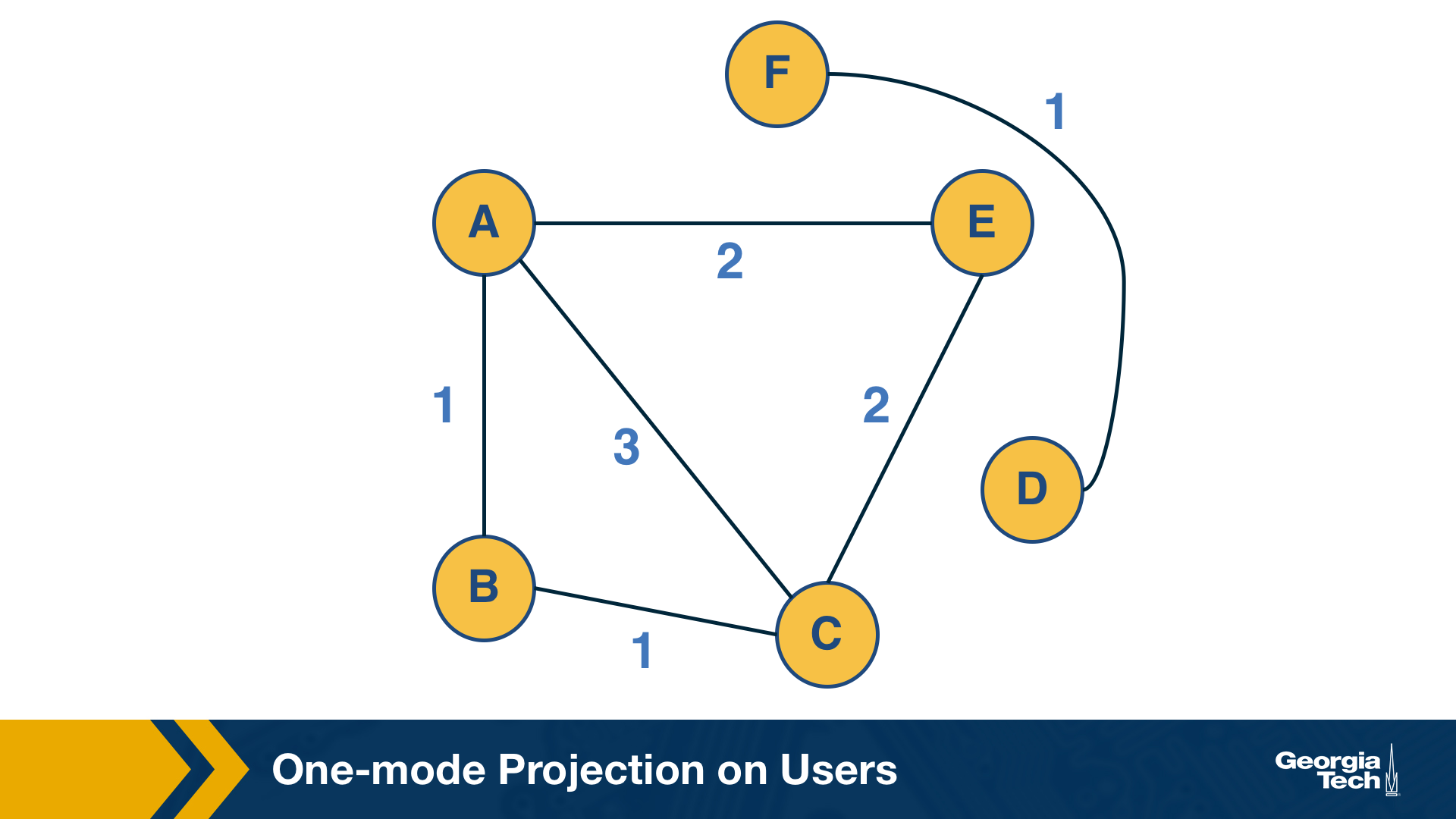

How would you find users that have similar item preferences? Having such “similar users” means that we can give recommendations that are more likely to lead to a new purchase.

This question can be answered by computing the “one-mode projection” of the bipartite graph onto the set of users. This projection is a graph that includes only the set of users – and an edge between two users if they have purchased at least one common item. The weight of the edge is the number of items they have both purchased.

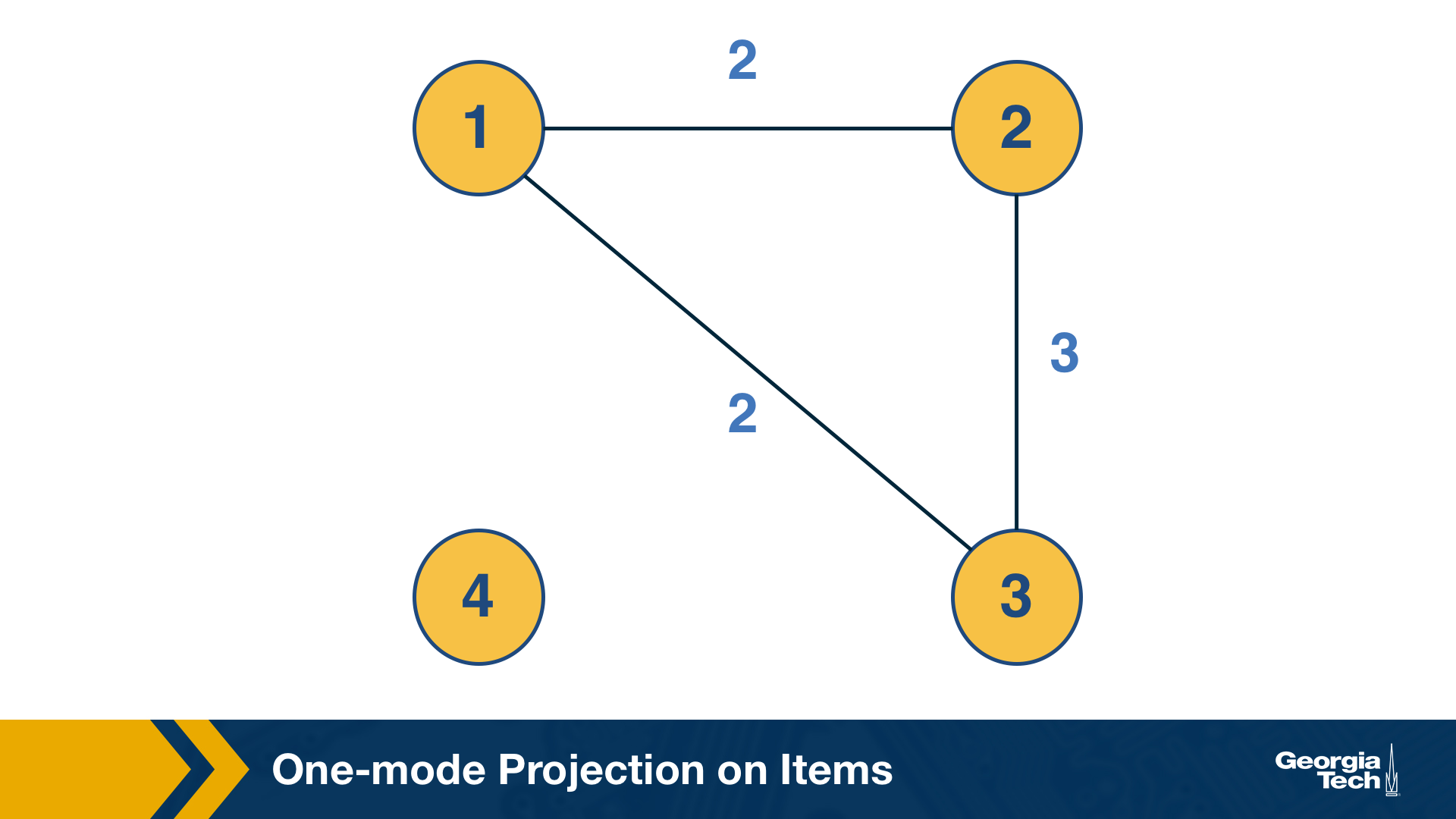

How would you find items that are often purchased together by the same user? Knowing about such “similar items” is also useful because we can place them close to each other or suggest that the user considers them both.

This can be computed by the “one-mode projection” of the bipartite graph onto the set of items. As in the previous projection, two items are connected with a weighted edge that represents the number of users that have purchased both items.

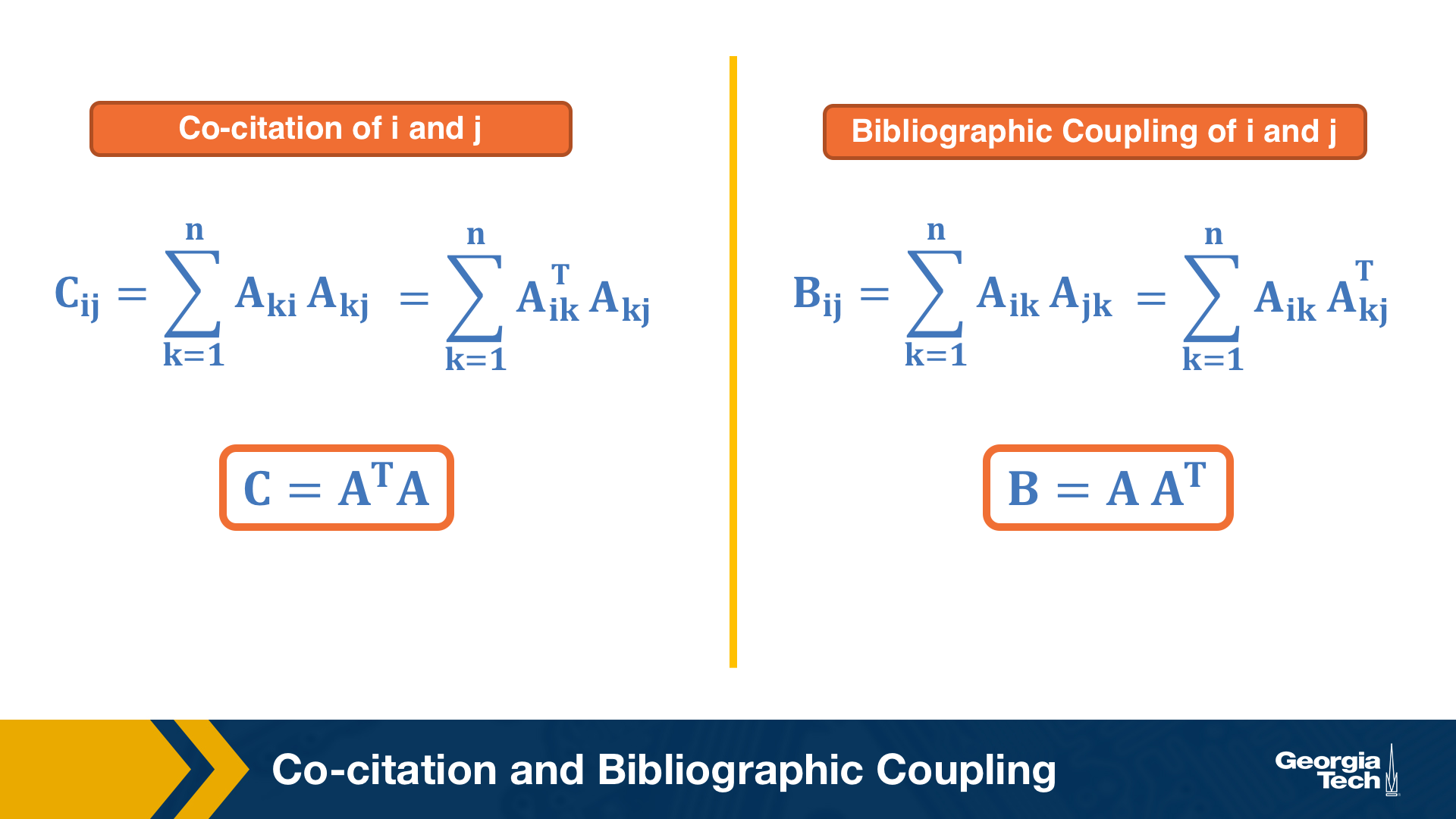

Co-citation and Bibliographic Coupling

The previous one-mode projections can also be computed using the adjacency matrix A that represents the bipartite graph.

Suppose that the element (i,k) of A is 1 if there is an edge from i to k – and 0 otherwise.

The co-citation metric $C_{i,j}$ for two nodes i and j is the number of nodes that have outgoing edges to both i and j. If i and j are items, then the co-citation metric is the number of users that have purchased both i and j.

On the other hand, the bibliographic coupling metric $B_{i,j}$ for two nodes i and j is the number of nodes that receive incoming edges from both i and j. If i and j are users, then the bibliographic coupling metric is the number of items that have been purchased by both i and j.

As you can see both metrics can be computed as the product of $A$ and $A^T$ – the only difference is the order of the matrices in the product.
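Written out element by element, and using the convention stated above that $A_{i,k}=1$ if there is an edge from i to k, the two metrics are:

\[\begin{aligned} C_{i,j} &= \sum_{k} A_{k,i} A_{k,j} = (A^TA)_{i,j} \\ B_{i,j} &= \sum_{k} A_{i,k} A_{j,k} = (AA^T)_{i,j} \end{aligned}\]

In the first sum, the term for node k equals 1 only if k points to both i and j. In the second sum, it equals 1 only if both i and j point to k. The diagonal elements $C_{i,i}$ and $B_{i,i}$ are the in-degree and out-degree of node i, respectively.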

Example Python code using the above graphs:

```python
import networkx as nx

# Users form one side of the bipartite graph, items (projects) the other side.
contents_users = ["A", "B", "C", "D", "E", "F"]
contents_projects = [1, 2, 3, 4]
contents_edges = [("A", 1), ("A", 2), ("A", 3), ("B", 1), ("C", 1), ("C", 2),
                  ("C", 3), ("D", 4), ("E", 2), ("E", 3), ("F", 4)]
len(contents_edges)  # 11 (user, item) edges

G = nx.Graph()
G.add_nodes_from(contents_users, bipartite=0)
G.add_nodes_from(contents_projects, bipartite=1)
G.add_edges_from(contents_edges)

# Biadjacency matrix: one row per user, one column per item.
bi_M = nx.algorithms.bipartite.biadjacency_matrix(G,
                                                  row_order=contents_users,
                                                  column_order=contents_projects)
bi_M.todense()
"""
array([[1, 1, 1, 0],
       [1, 0, 0, 0],
       [1, 1, 1, 0],
       [0, 0, 0, 1],
       [0, 1, 1, 0],
       [0, 0, 0, 1]])
"""

# One-mode projections: items shared by two users, and users shared by two items.
user_matrix = bi_M @ bi_M.T
projects_matrix = bi_M.T @ bi_M

user_matrix.todense()
"""
array([[3, 1, 3, 0, 2, 0],
       [1, 1, 1, 0, 0, 0],
       [3, 1, 3, 0, 2, 0],
       [0, 0, 0, 1, 0, 1],
       [2, 0, 2, 0, 2, 0],
       [0, 0, 0, 1, 0, 1]])
"""

projects_matrix.todense()
"""
array([[3, 2, 2, 0],
       [2, 3, 3, 0],
       [2, 3, 3, 0],
       [0, 0, 0, 2]])
"""
```

Lesson Summary

The objective of this lesson was to review a number of important concepts and results from graph theory and graph algorithms.

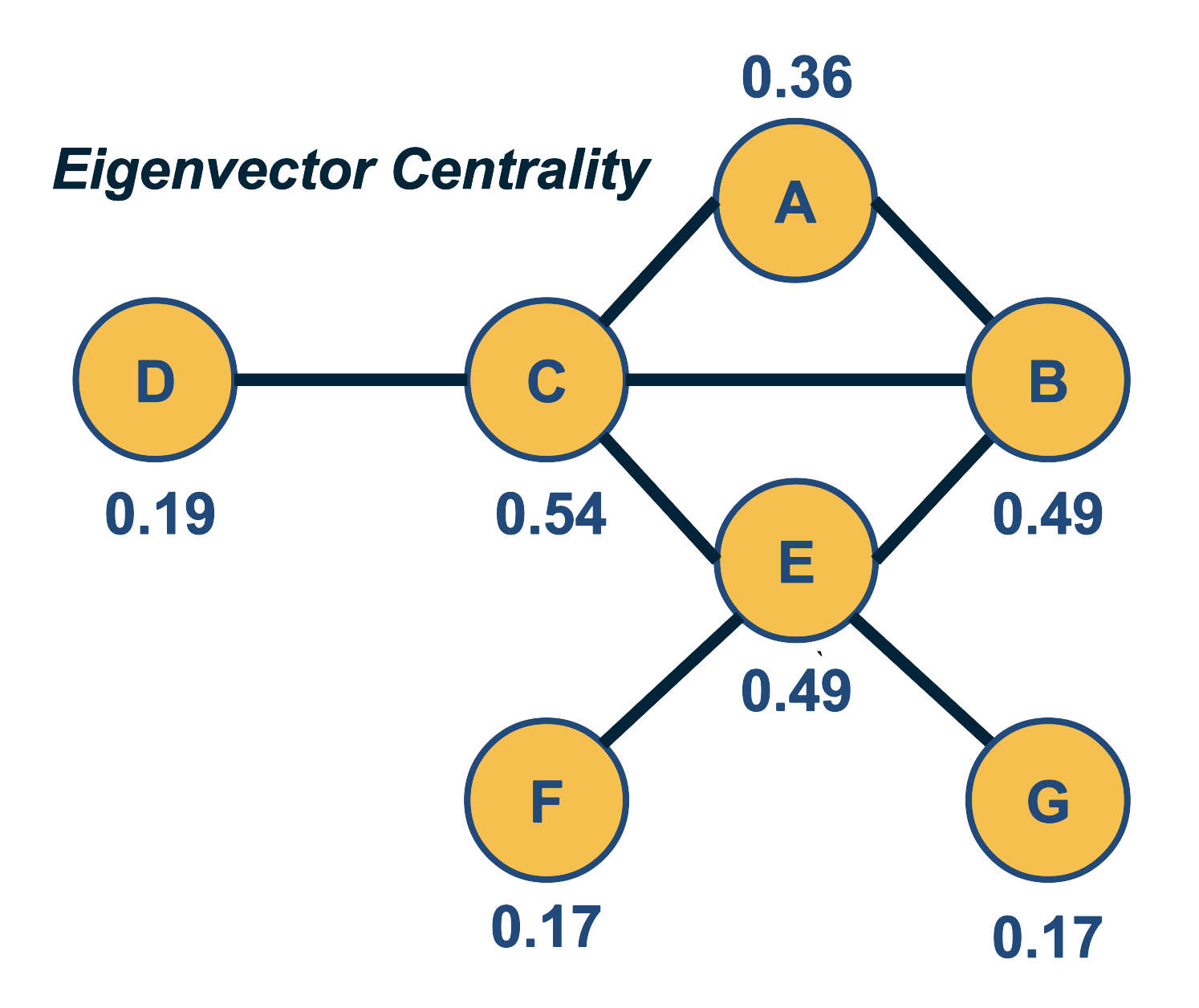

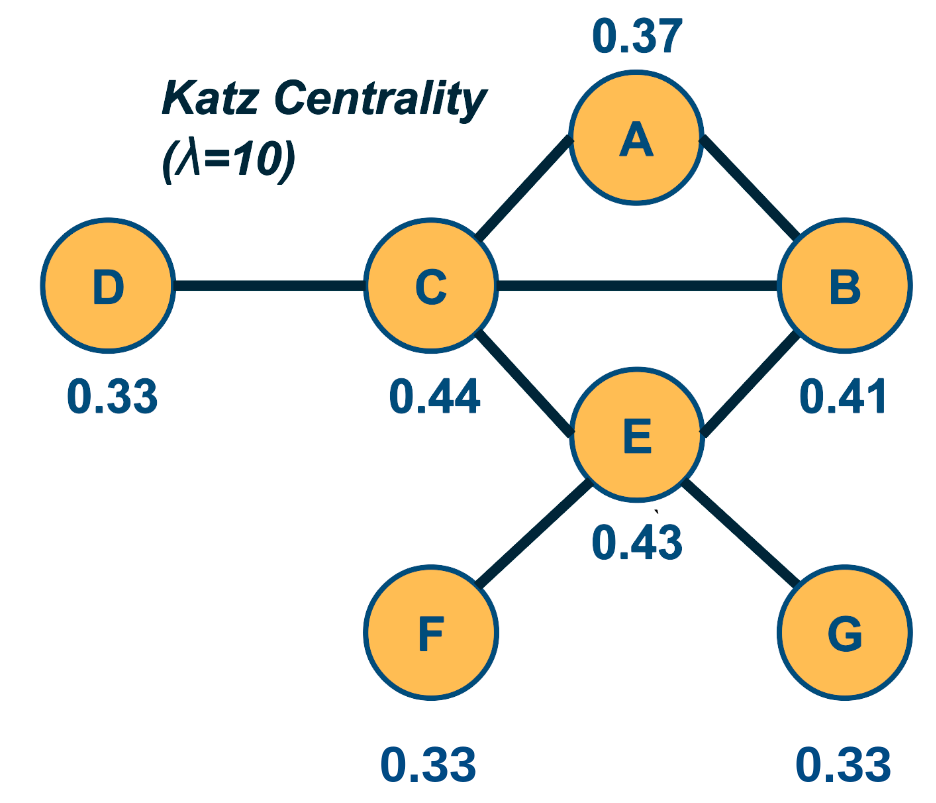

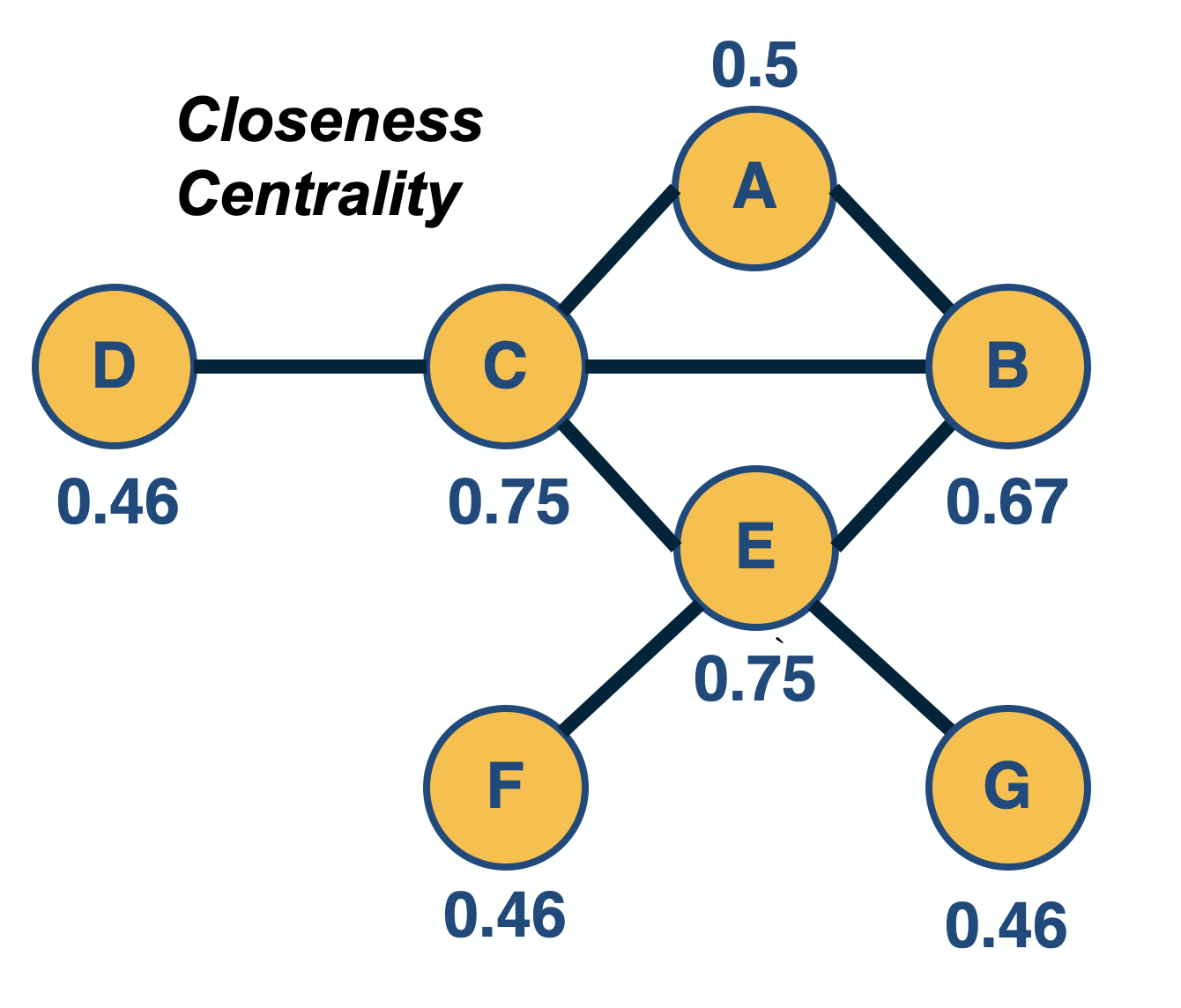

We will use this material in subsequent lessons. For example, the notion of random walks will be important in the definition of the PageRank centrality metric, while the spectral properties of an adjacency matrix will be important in the eigenvector centrality metric.

The Module-1 assignment will also help you understand these concepts more deeply, and to learn how to apply them in practice with real-world network datasets.

Module two

L3 - Degree Distribution and The “Friendship Paradox”

Overview

Required Reading

- Chapter-3 from A-L. Barabási, Network Science 2015.

- Chapter-7 (sections 7.1, 7.2, 7.3, 7.5) from A-L. Barabási, Network Science 2015.

Recommended Reading

- Simulated Epidemics in an Empirical Spatiotemporal Network of 50,185 Sexual Contacts. Luis E. C. Rocha, Fredrik Liljeros, Petter Holme (2011)

Degree Distribution

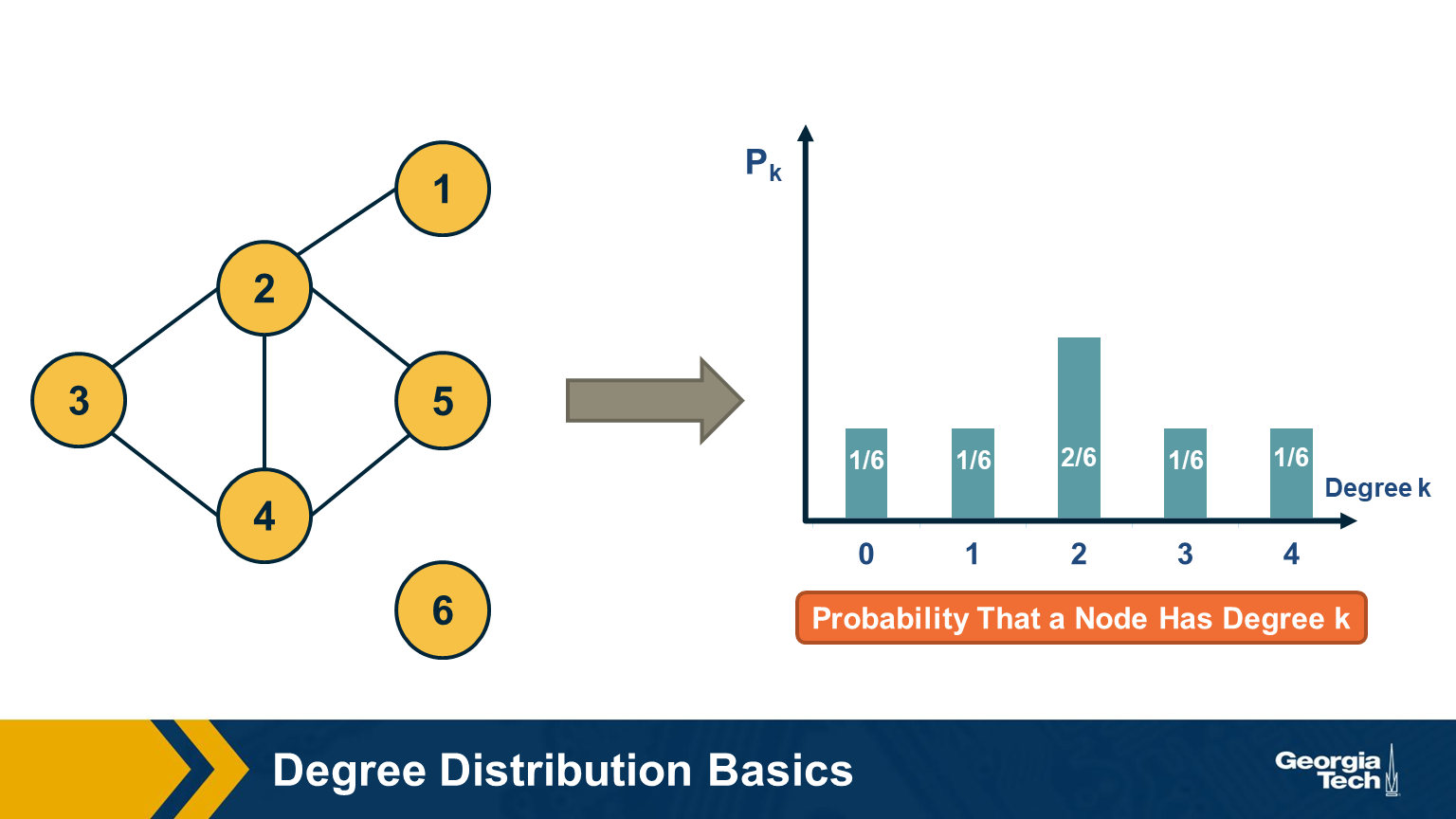

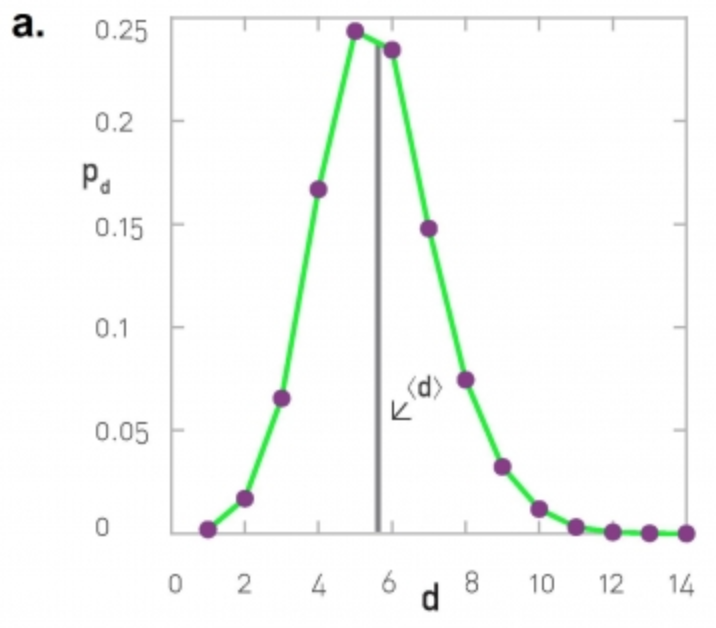

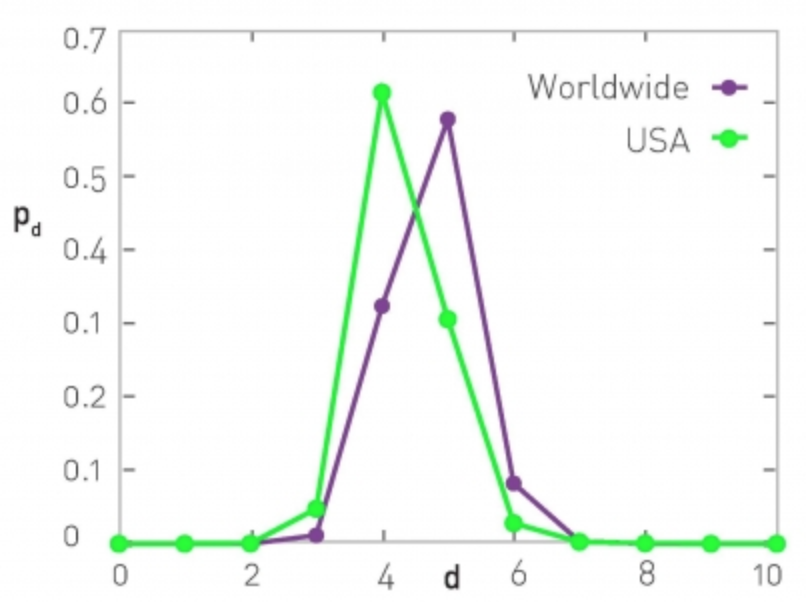

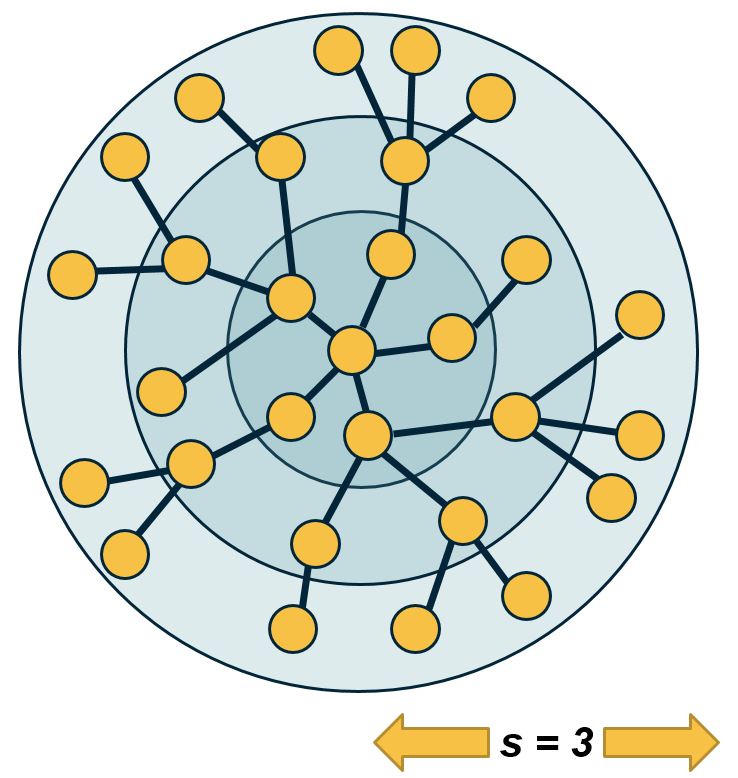

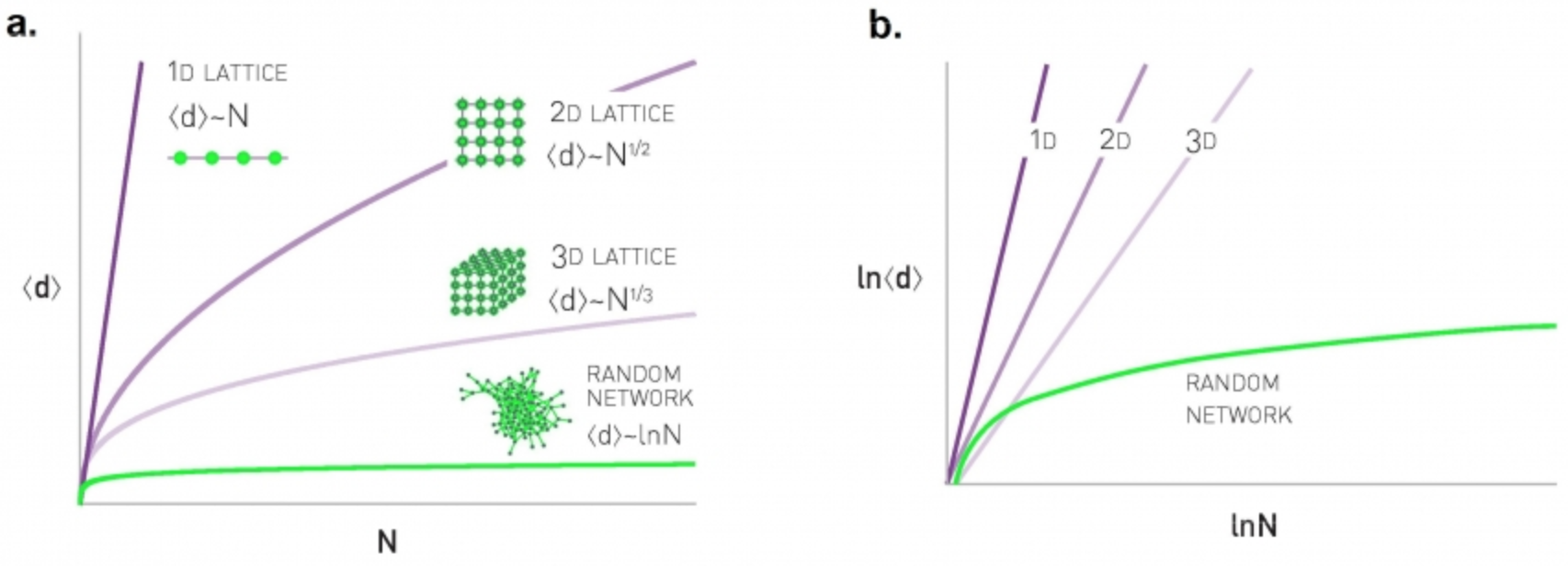

The degree distribution of a given network shows the fraction of nodes with degree k.

If we think of networks as random samples from an ensemble of graphs, we can think of $p_k$ as the probability that a node has degree k, for k>=0.

The network in this visualization has six nodes and the plot shows the empirical probability density function (which is a histogram) for the probability $p_k$.

Degree Distribution Moments

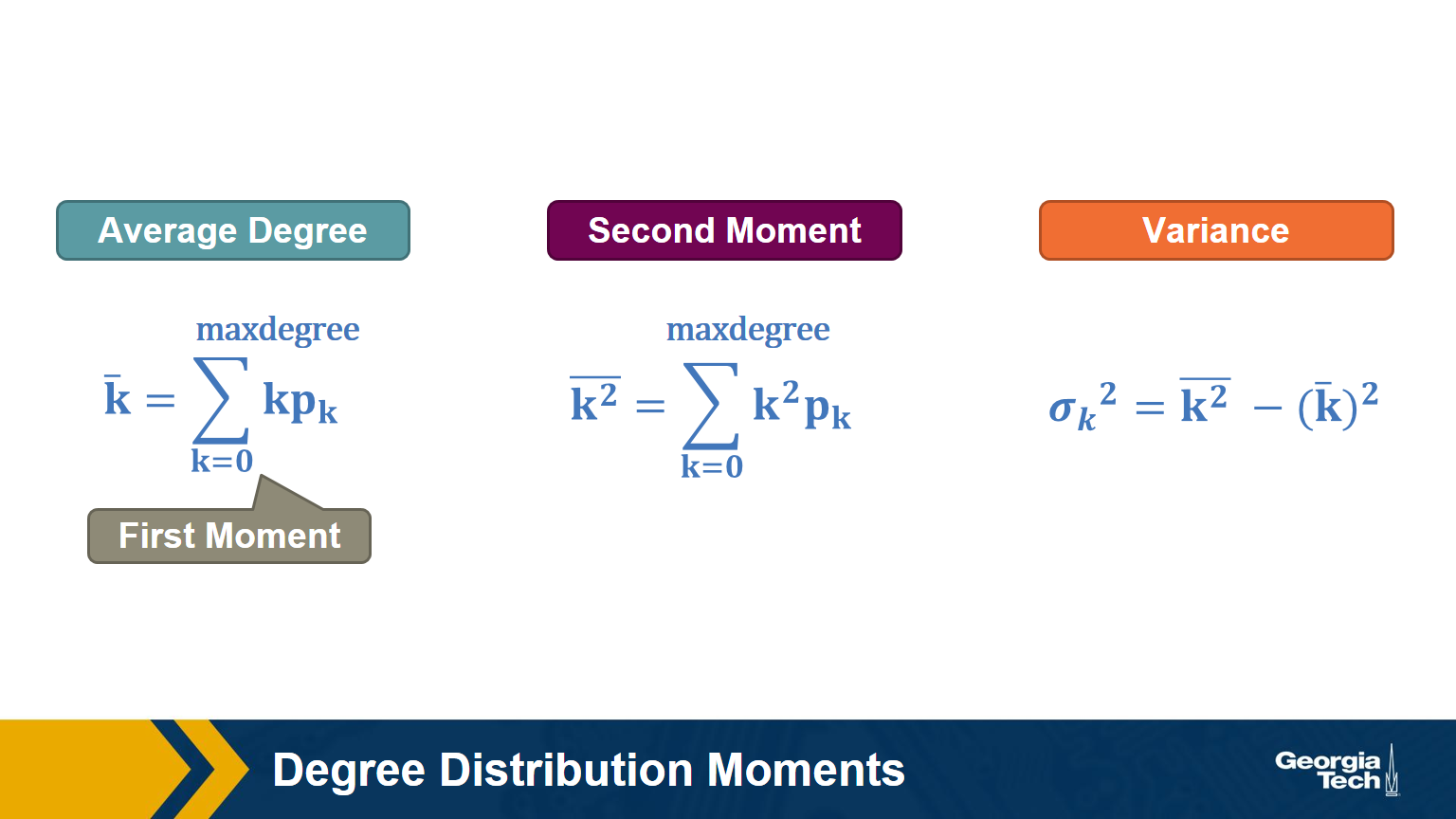

Recall that, given the probability distribution of a random variable, we can compute the first moment (mean), second moment, variance, etc.

The above formulas show the moments that we will mostly use in this course: the average degree, the second moment of the degree (the average of the squared degrees), and the variance of the degree distribution.
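For reference, these moments can be written in terms of $p_k$ as follows. This is the standard form of the definitions, restated here under the assumption that it matches the formulas in the image:

\[\begin{aligned} \bar{k} &= \sum_{k} k \cdot p_k \\ \bar{k^2} &= \sum_{k} k^2 \cdot p_k \\ \sigma_k^2 &= \sum_{k} (k-\bar{k})^2 \cdot p_k = \bar{k^2} - (\bar{k})^2 \end{aligned}\]

For example, if half of the nodes have degree 1 and the other half have degree 3, then $\bar{k}=2$, $\bar{k^2}=5$ and $\sigma_k^2 = 5 - 4 = 1$.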

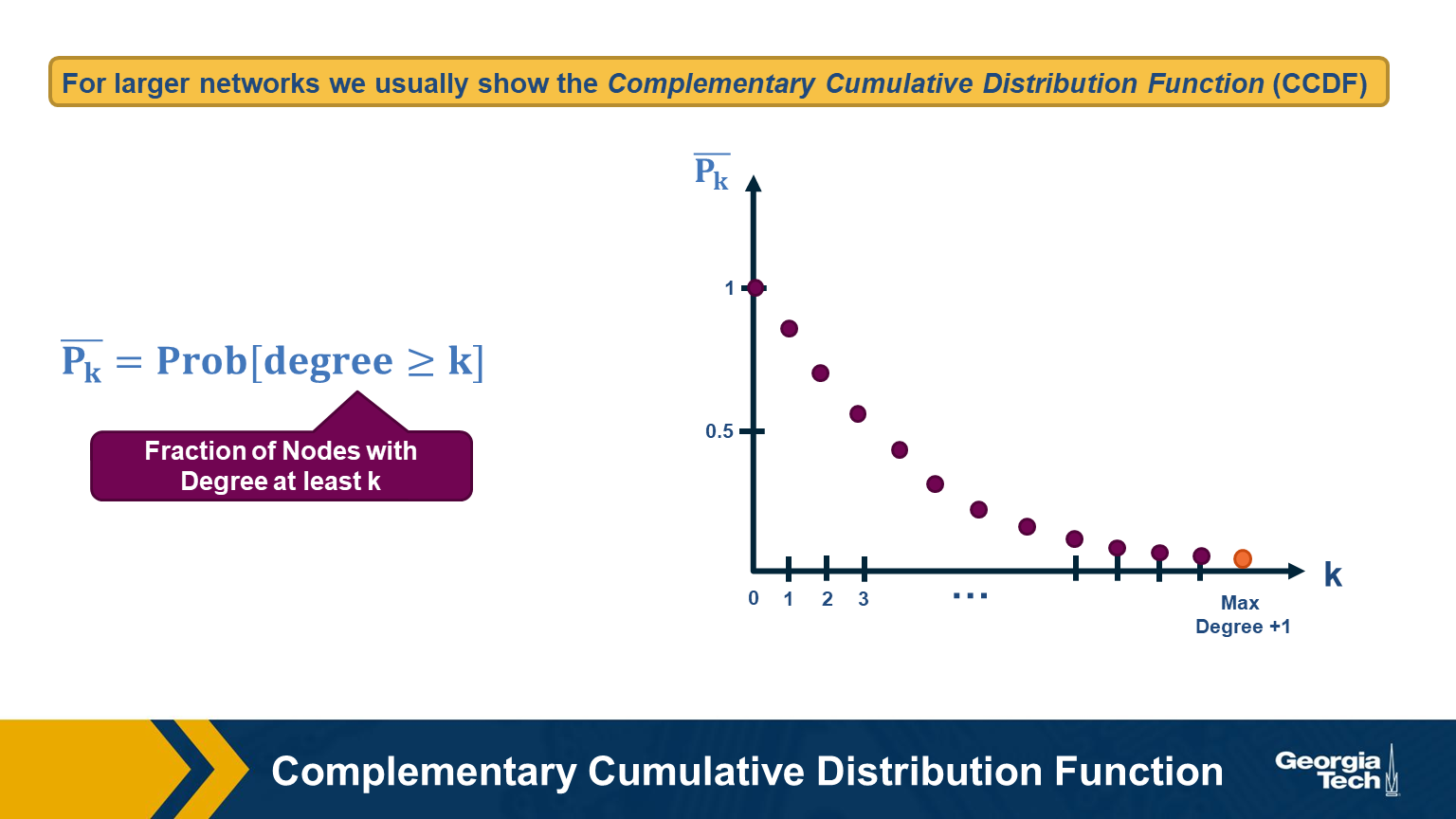

For larger networks, we usually do not show the empirical probability density function $p_k$. Instead, we show the probability that the degree is at least k, for any k>=0.

This is referred to as the Complementary Cumulative Distribution Function (denoted as C-CDF). Note that $\bar{P}_k$= Prob[degree>=k] is the sum of all $p_x$ values for x>=k.
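As an illustrative sketch, the empirical $p_k$ and the C-CDF can be computed from an edge list as follows. The six-node edge list is hypothetical (it is not the network of the visualization), and nodes with degree zero would have to be added separately because they do not appear in an edge list.

```python
from collections import Counter

# Hypothetical undirected network with six nodes.
edges = [(1, 2), (1, 3), (1, 4), (2, 3), (4, 5), (5, 6)]

degree = Counter()
for u, v in edges:
    degree[u] += 1
    degree[v] += 1

n = len(degree)
k_max = max(degree.values())
counts = Counter(degree.values())

# p[k] is the fraction of nodes with degree exactly k.
p = [counts.get(k, 0) / n for k in range(k_max + 1)]
# ccdf[k] is the fraction of nodes with degree at least k: the sum of p[x] for x >= k.
ccdf = [sum(p[k:]) for k in range(k_max + 1)]

print(p)
print(ccdf)
```

The C-CDF always starts at 1 for k=0 and is non-increasing in k, which makes it smoother to plot than the histogram of $p_k$.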

Two Special Degree Distributions

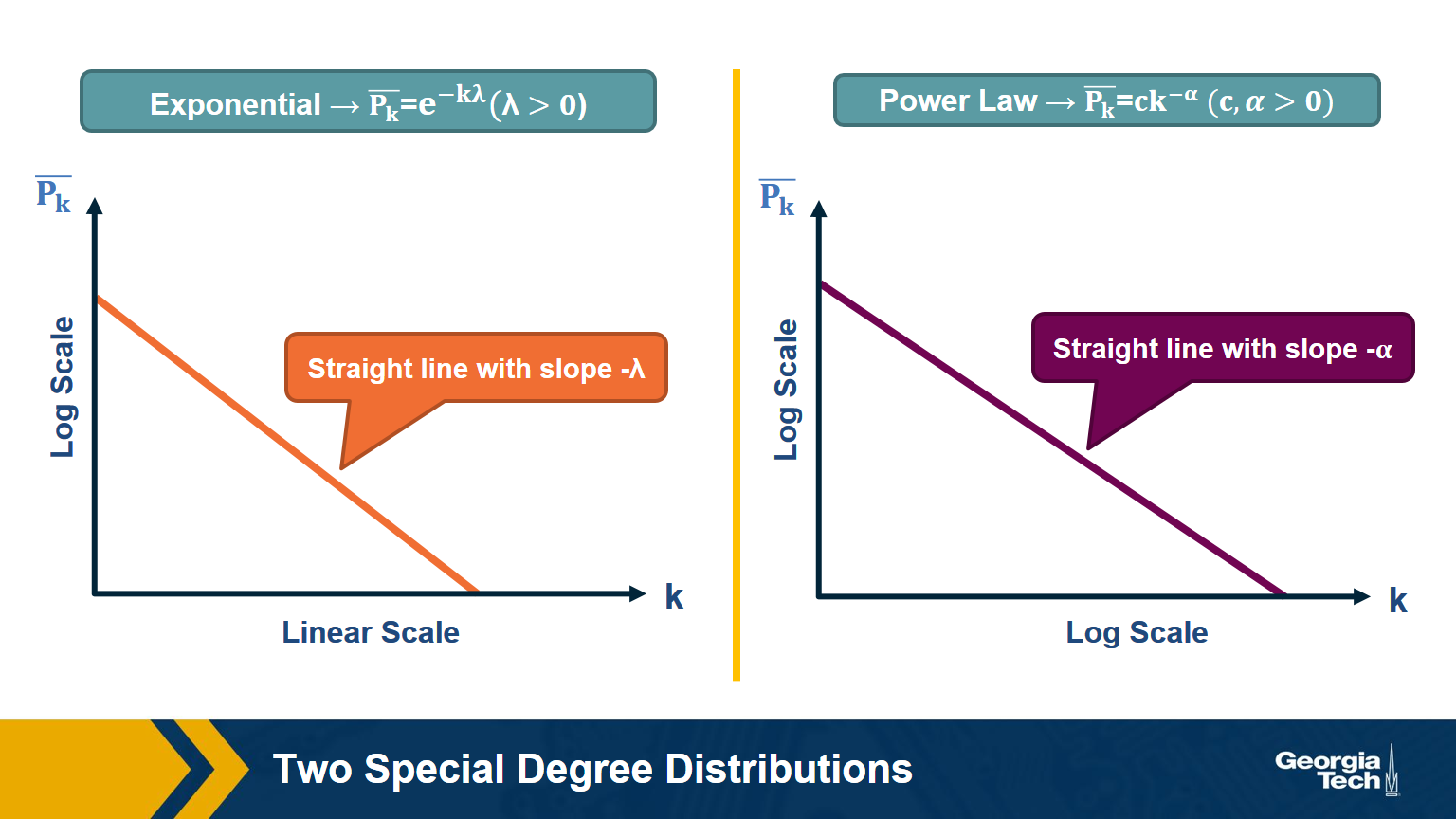

The C-CDF plots are often shown using a logarithmic scale at the x-axis and/or y-axis. Here is why.

Suppose that the C-CDF decays exponentially fast. In a log-linear plot (as shown at the left in the image above), this distribution will appear as a straight line with slope $-\lambda$. The average degree in such networks is 1/$\lambda$. The probability that a node has degree at least k drops exponentially fast with k.

On the other hand, in many networks, the C-CDF decays with a power-law of k. For example, if $\alpha=2$, the probability to see a node with a degree at least k drops proportionally to $1/k^2$. In a log-log plot (as shown at the right), this distribution will appear as a straight line with slope $-\alpha$. As we will see later in this course, such networks are referred to as “scale-free” and they are likely to have nodes with much larger degree than the average degree.

Food for Thought

Show why the previous two distributions give straight lines when we plot them in a log-linear and log-log scale, respectively. Also show that, with the exponential degree distribution, the probability to see nodes with degree more than 10 times the average is about 1/10000 of the probability to see nodes with higher degree than the average. On the other hand, with the power-law distribution, the probability to see nodes with degree more than 10 times the average is 1/100 of the probability to see nodes with higher degree than the average (when $\alpha =2$).
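The two ratios in this question can be sanity-checked numerically before deriving them. The sketch assumes the C-CDF forms $e^{-\lambda k}$ and $k^{-\alpha}$ discussed above; the values of `lam` and `k_mean` are arbitrary, since both ratios turn out not to depend on them.

```python
import math

# Exponential C-CDF: P[degree >= k] = exp(-lam * k), with average degree 1 / lam.
lam = 0.1
mean_exp = 1 / lam
ratio_exp = math.exp(-lam * 10 * mean_exp) / math.exp(-lam * mean_exp)

# Power-law C-CDF: P[degree >= k] proportional to k ** (-alpha).
alpha = 2
k_mean = 5.0  # arbitrary value, it cancels out in the ratio
ratio_pow = (10 * k_mean) ** (-alpha) / k_mean ** (-alpha)

print(ratio_exp)  # equals exp(-9), roughly 1.2e-4
print(ratio_pow)  # equals 10 ** (-2)
```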

Example: Degree Distribution of a Sex-Contact Network

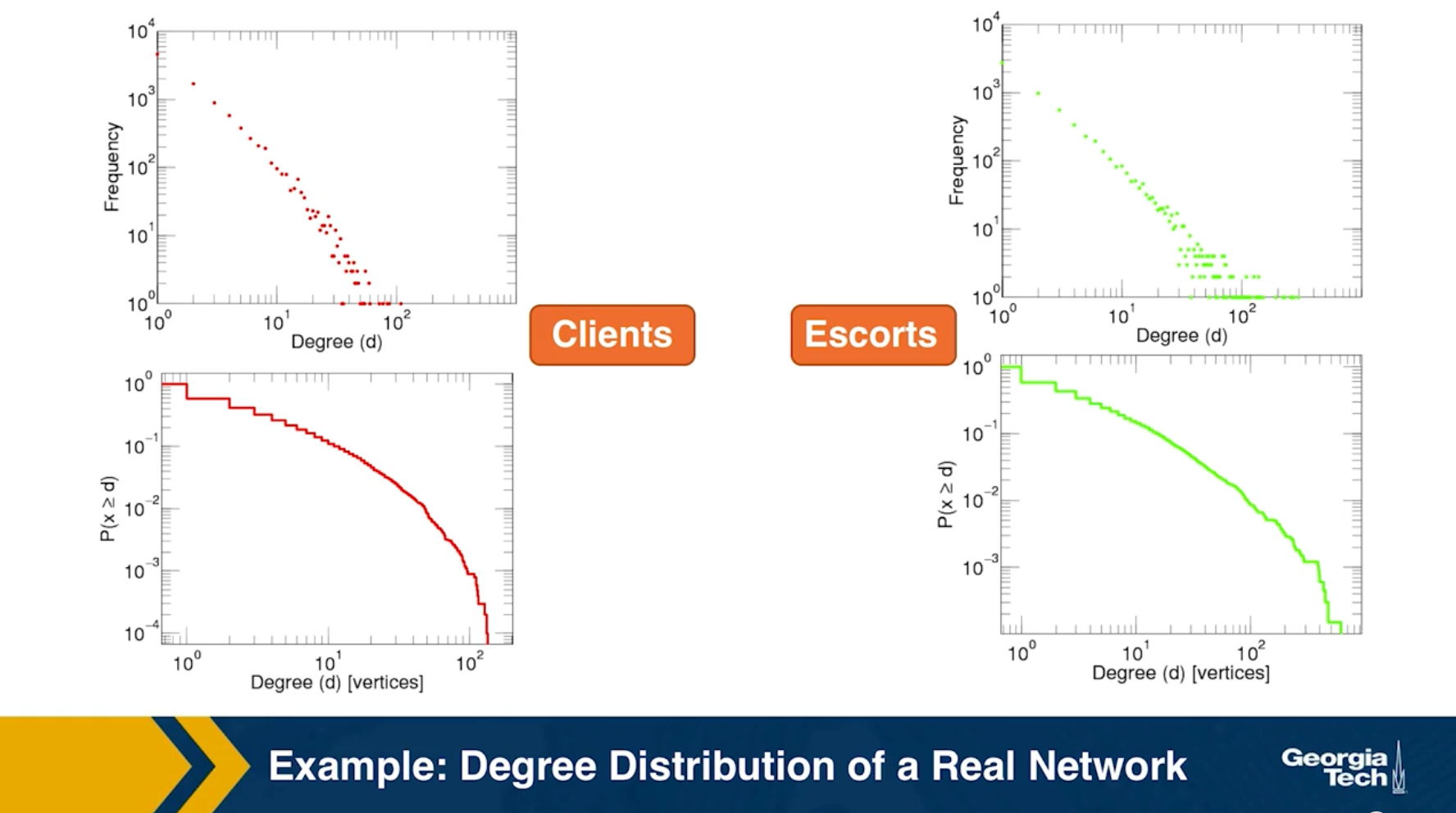

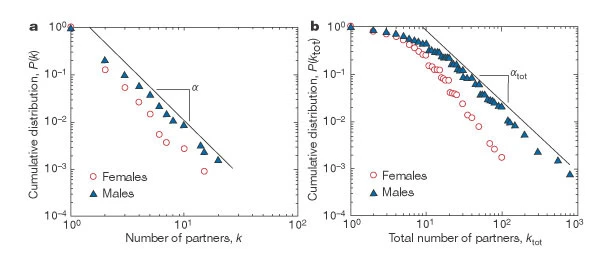

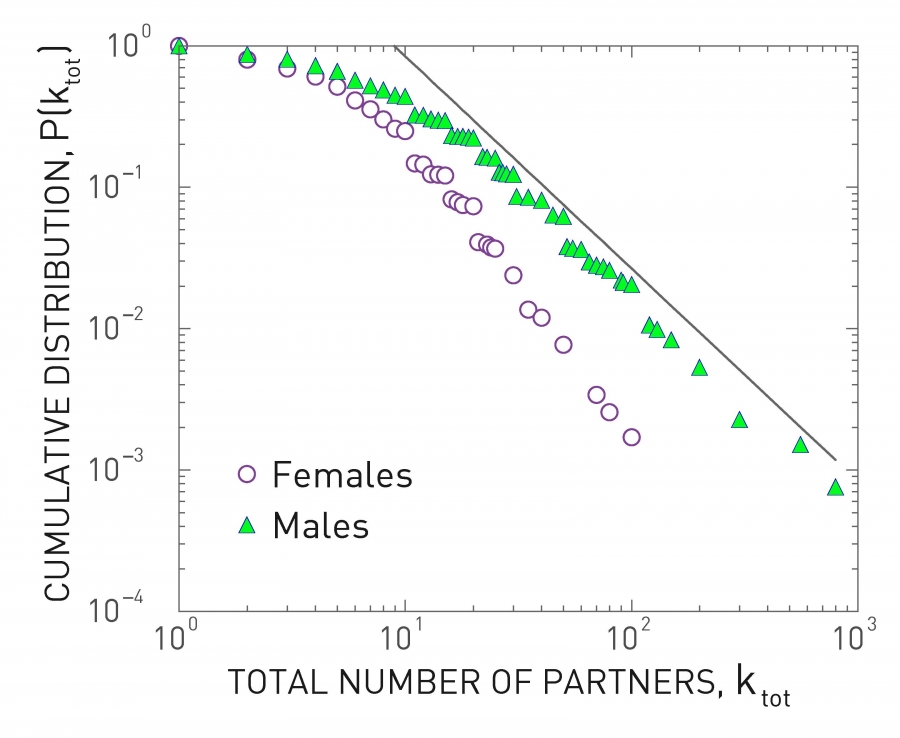

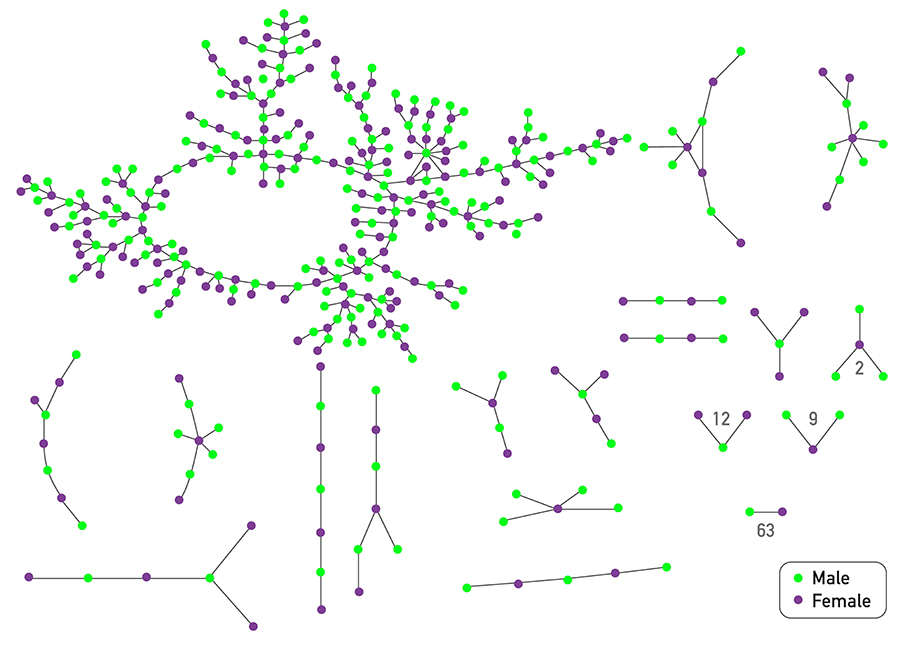

Human sexual contacts form a special temporal network: the underlying structure over which sexually transmitted infections (STIs) spread. By understanding the structure of the network, we can better understand the dynamics of such infections. Here we show some results from a bipartite network between sex buyers and their escorts. The nodes in this bipartite network are either male sex buyers (about 17,000 of them) or female escorts (about 10,000 of them). An edge denotes sexual intercourse between a male sex buyer and a female escort.

The average degree of the male buyers is about 5, the average escort degree is about 7.6, and the maximum degree of any node is 615. The upper plots show the probability density function of the node degrees, for male clients and for female escorts.

The plots at the lower part are the complementary cumulative distribution functions of the degrees for this network in log-log scale. These plots are not straight lines, but if we focus on the part of the distribution that extends to a probability of $10^{-3}$ (0.001), they can be approximated as straight lines.

Note that even though 90% of the escorts have fewer than 20 clients, there is a small number of hub escorts that have a significantly larger number of clients. As we will see later in this course, such nodes with a very large degree can play a major role in epidemics.

Simulated Epidemics in an Empirical Spatiotemporal Network of 50,185 Sexual Contacts. Luis E. C. Rocha, Fredrik Liljeros, Petter Holme (2011)

Friendship paradox

Informally, the friendship paradox states: “On the average, your friends have more friends than you”.

In more general and precise terms, we will prove that: “The average degree of a node’s neighbor is higher than the average node degree”.

The probability that a random edge connects to a node of degree k

Let’s start by deriving a simple fact that we will use repeatedly in this course.

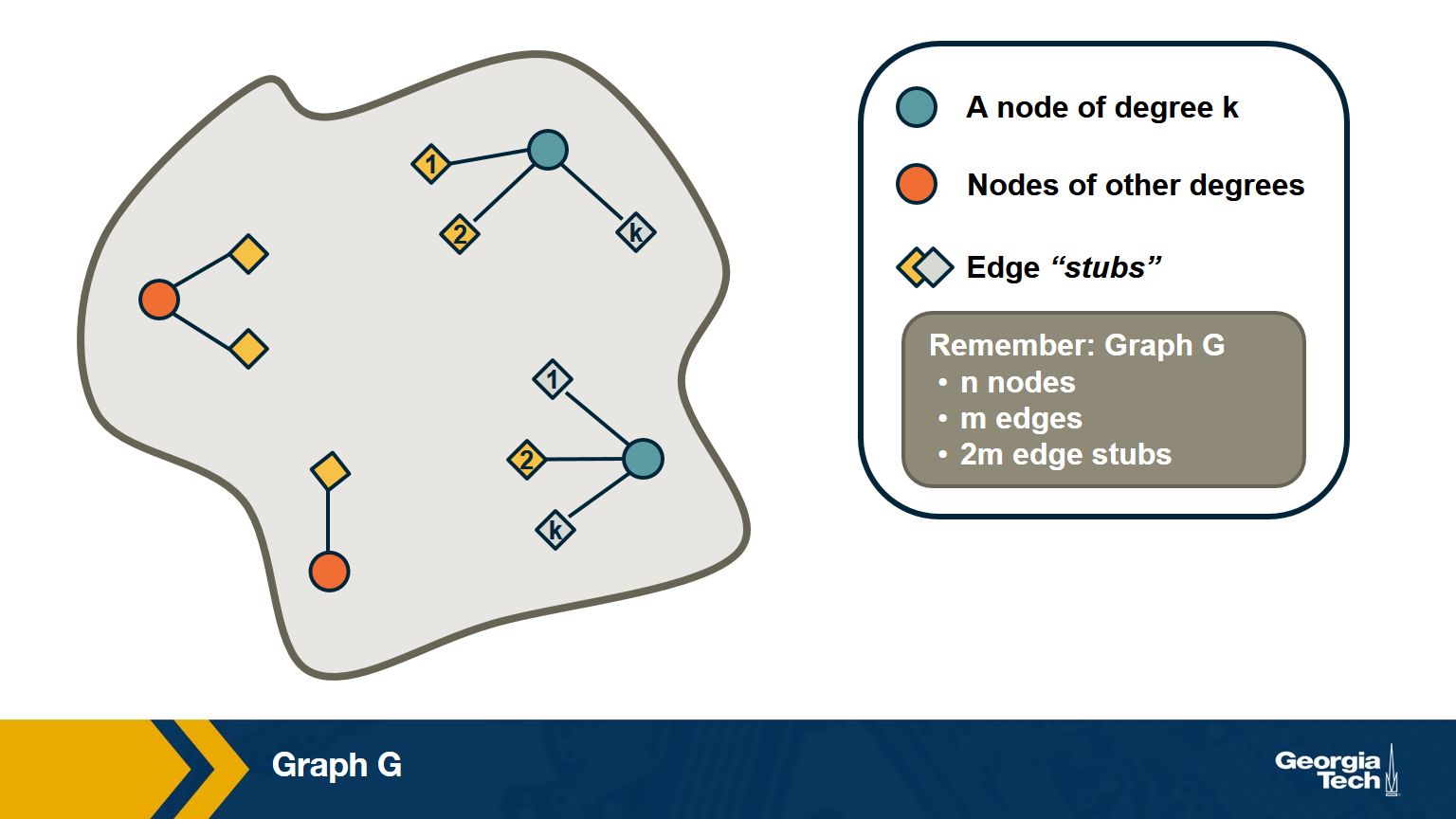

Suppose that we pick a random edge in the network – and we randomly select one of the two end-points of that edge – we refer to those end-points as the stubs of the edge. What is the probability $q_k$ that a randomly chosen stub belongs to a node of degree k?

This is easy to answer when the degrees of connected nodes are independent.

\[\begin{aligned} q_k &= \text{(number of nodes of degree k)} \\ &\times \text{ (probability an edge connects to a specific node of degree k)} \\ &= np_k \frac{k}{2m} \\ &= \frac{ kp_k}{\frac{2m}{n}} \\ &= \frac{kp_k}{\bar{k}} \end{aligned}\]

Note that the probability that the randomly chosen stub connects to a node of degree k is proportional to both k and the probability that a node has degree k.

This means that, for nodes with degree $k > \bar{k}$, it is more likely to sample one of their stubs than the nodes themselves. The opposite is true for nodes with degree $k < \bar{k}$.

Based on the previous derivation, we can now ask: what is the expected value of a neighbor’s degree?

Note that we are not asking for the average degree of a node. Instead, we are interested in the average degree of a node’s neighbor.

This is the same as the expected value of the degree of the node we select if we sample a random edge stub. Let’s denote this expected value as $\bar{k}_{nn}$.

The derivation is as follows:

\[\begin{aligned} \bar{k}_{nn} &= \sum_{k=0}^{k_{\text{max}}} k \cdot q_k \\ &= \sum_k k \frac{kp_k}{\bar{k}} \\ &= \frac{\sum_k k^2p_k}{\bar{k}} \\ & = \frac{\bar{k^2}}{\bar{k}} \\ &= \frac{(\bar{k})^2+(\sigma_k)^2}{\bar{k}} \\ &= \bar{k} + \frac{\sigma_k^2}{\bar{k}} \end{aligned}\]

We can now give a mathematical statement of the friendship paradox: as long as the variance of the degree distribution is not zero, and given our assumption that neighboring nodes have independent degrees, the average neighbor’s degree is higher than the average node degree.

The difference between the two expected values (i.e., $\sigma_k^2/\bar{k}$) increases with the variability of the degree distribution.
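The relation $\bar{k}_{nn} = \bar{k} + \sigma_k^2/\bar{k}$ can be checked on a small example by sampling every edge stub once. The sketch below uses a hypothetical star network with one hub and four peripheral nodes, and exact fractions to avoid rounding.

```python
from fractions import Fraction

# Hypothetical star network: node 0 is the hub.
edges = [(0, 1), (0, 2), (0, 3), (0, 4)]

degree = {}
for u, v in edges:
    degree[u] = degree.get(u, 0) + 1
    degree[v] = degree.get(v, 0) + 1

n = len(degree)
m = len(edges)
k_mean = Fraction(2 * m, n)                                   # average node degree
k2_mean = Fraction(sum(k * k for k in degree.values()), n)    # second moment
variance = k2_mean - k_mean ** 2

# Degree of the node found at each of the 2m stubs, averaged over all stubs.
stub_degrees = [degree[x] for edge in edges for x in edge]
k_nn = Fraction(sum(stub_degrees), len(stub_degrees))

print(k_mean, k_nn, k_mean + variance / k_mean)
```

Here the average node degree is 8/5, while the average degree found at the end of a random stub is 5/2: half of all stubs belong to the hub, even though the hub is only one of five nodes.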

Food for Thought

Can you explain in an intuitive way why the average neighbor’s degree is larger than the average node degree, as long as the degree variance is not zero?

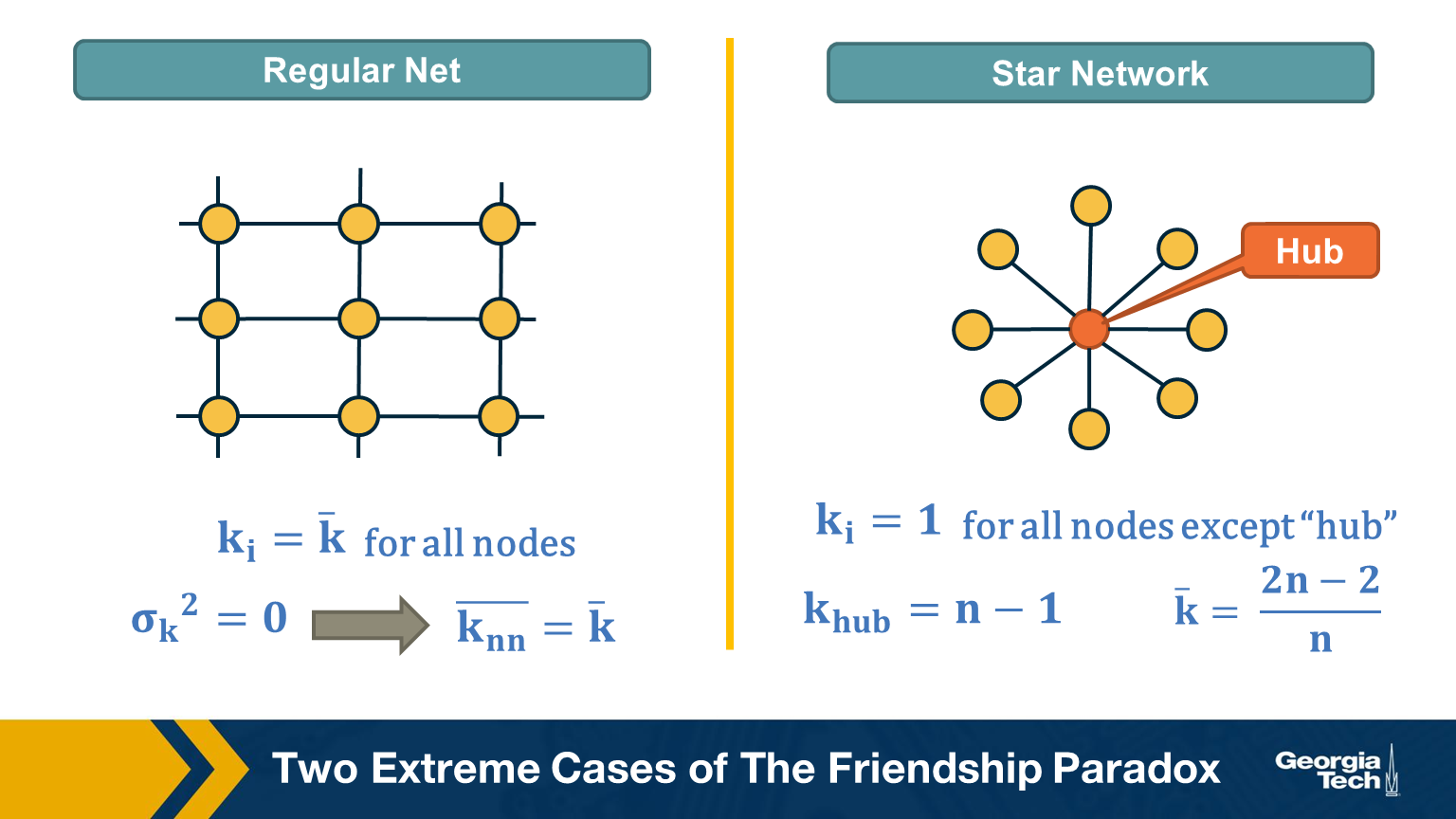

Two Extreme Cases of The Friendship Paradox

Think of two extremes in terms of degree distribution: an infinitely large regular network in which all nodes have the same degree (and thus the degree variance is 0), and an infinitely large star network with one hub node at the center and all peripheral nodes connecting only to the hub.

In the regular network, the degree variance is zero and the average neighbor’s degree is not different than the average node degree.

In the star network, on the other hand, the degree variance diverges as n increases, and so does the difference between the average node degree and the average neighbor degree.

Food for Thought

Derive the second moment of the degree distribution for the star network as the size of the network tends to infinity.

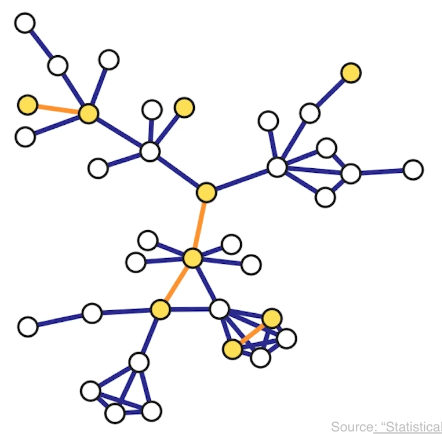

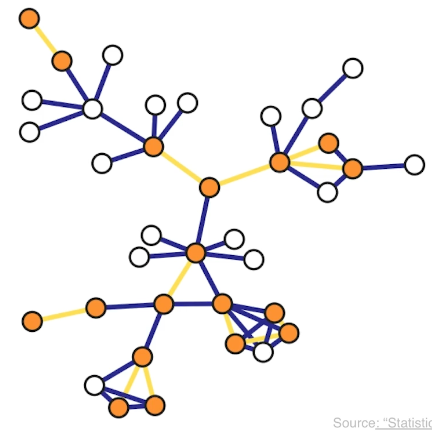

Application of Friendship Paradox in Immunization Strategies

An interesting vaccination strategy that is based on the friendship paradox is referred to as acquaintance immunization.

Instead of vaccinating random people, we select a few individuals and ask each of them to identify the contact of theirs with the maximum number of connections, and we vaccinate those contacts. These contacts may be sexual partners or other types of contact, depending on the underlying virus. Based on the friendship paradox, we know that if the network includes some hubs, they are probably connected to some of the randomly selected individuals we choose to survey.

The G(n,p) model (ER Graphs)

Let’s consider now the simplest random graph model and its degree distribution.

This model is referred to as G(n, p) and it can be described as follows: the network has n nodes and the probability that any two distinct nodes are connected with an undirected edge is p.

The model is also referred to as the Gilbert model, or sometimes the Erdős–Rényi (ER) model, from the last names of the mathematicians that first studied its properties in the late 1950s.

Note that the number of edges m in the G(n,p) is a random variable. The expected number of edges is $p \cdot \frac{n(n-1)}{2}$, the average node degree is $p \cdot (n-1)$, the density of the network is $p$ and the degree variance is $p\cdot(1-p)\cdot(n-1)$. These formulas assume that we do not allow self-edges.

The degree distribution of the G(n,p) model follows the Binomial(n-1,p) distribution because each node can be connected to n-1 other nodes with probability p.

Note that the G(n,p) model does not necessarily create a connected network – we will return to this point a bit later.

Also, in the G(n,p) model there are no correlations between the degrees of neighboring nodes. So, if we return to the friendship paradox, the average neighbor degree at a G(n,p) network is

- $\bar{k}_{nn} = \bar{k} + (1-p)$ (using the Binomial distribution)

- or $\bar{k}_{nn} = \bar{k} + 1$ (using the Poisson approximation when $p \ll 1$)

In other words, if we reach a node v by following an edge from another node, the expected value of v’s degree is one more than the average node degree.
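These properties can be checked empirically by generating a G(n,p) network and measuring its degrees. The sketch below is a simple simulation under assumed parameters (n=1000, p=0.01 and a fixed random seed); the function name `gnp_degrees` is hypothetical, and the results fluctuate around the theoretical values from one random network to another.

```python
import random

def gnp_degrees(n, p, rng):
    """Generate a G(n, p) network and return the list of node degrees."""
    degree = [0] * n
    for i in range(n):
        for j in range(i + 1, n):        # every pair of distinct nodes, no self-edges
            if rng.random() < p:         # connect the pair with probability p
                degree[i] += 1
                degree[j] += 1
    return degree

rng = random.Random(7280)                # fixed seed so that the run is repeatable
n, p = 1000, 0.01
degree = gnp_degrees(n, p, rng)

k_mean = sum(degree) / n
k2_mean = sum(k * k for k in degree) / n
k_nn = k2_mean / k_mean                  # average degree at the end of a random stub

print("average degree:", k_mean, "expected:", p * (n - 1))
print("k_nn - k_mean:", k_nn - k_mean, "expected:", 1 - p)
```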

Food for Thought

Derive the previous expressions for the average neighbor degree with both the Binomial and Poisson degree distributions.

Degree Distribution of G(n,p) Model

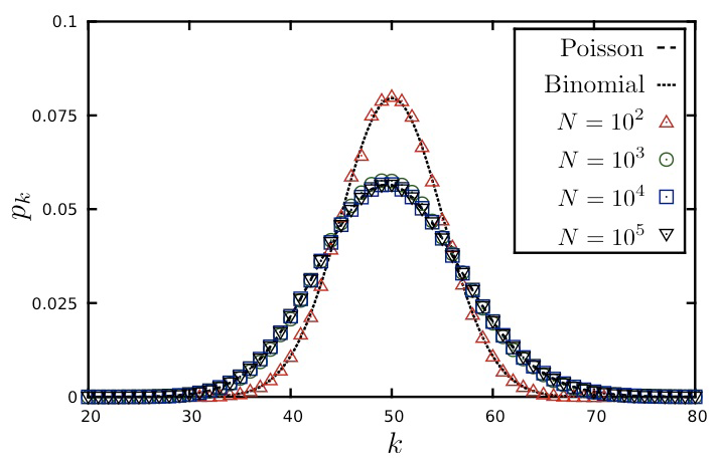

Here is a well-known fact that you may have learned in a probability course: the Binomial distribution can be approximated by the Poisson distribution as long as $p$ is much smaller than 1. In other words, this approximation is true for sparse networks in which the average degree $p \cdot (n-1)$ is much lower than the size of the network n. The Poisson distribution is described by:

\[\begin{aligned} p_k &= e^{-\bar{k}} \cdot \frac{\bar{k}^k}{k!}, k = 0,1,2, ...\\ \bar{k} &= p \cdot (n-1) \\ \sigma_k^2 &= \bar{k} \end{aligned}\]

Because of the $\frac{1}{k!}$ term, $p_k$ decreases with $k$ faster than exponentially.

You may ask: why use the Poisson approximation instead of the Binomial(n-1,p) distribution?

The reason is simple: the Poisson distribution has a single parameter, which is the average node degree $\bar{k}$.

The visualization shows the degree distribution for a network in which the average degree is 50. As we increase the number of nodes n, we need to decrease the connection probability p so that their product remains constant. Note that the Poisson distribution is a rather poor approximation for n=100 (because the average node degree is half of n) but it is excellent as long as n is larger than 1000.
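The quality of the approximation can be quantified with a short computation. The sketch below compares the Binomial(n-1,p) and Poisson distributions for an average degree of 50 using the total variation distance (half the sum of the absolute differences between the two probability functions). The choice of this distance and the function names are assumptions made for illustration, and the probabilities are computed through logarithms to avoid numerical overflow.

```python
import math

def binomial_pmf(k, trials, p):
    """Probability of degree k under Binomial(trials, p), computed via logarithms."""
    log_pmf = (math.lgamma(trials + 1) - math.lgamma(k + 1) - math.lgamma(trials - k + 1)
               + k * math.log(p) + (trials - k) * math.log(1 - p))
    return math.exp(log_pmf)

def poisson_pmf(k, mean):
    """Probability of degree k under a Poisson distribution with the given mean."""
    return math.exp(-mean + k * math.log(mean) - math.lgamma(k + 1))

def total_variation(n, k_mean):
    """Distance between Binomial(n-1, p) and Poisson(k_mean), with p = k_mean / (n-1)."""
    p = k_mean / (n - 1)
    # Degrees above n-1 are impossible in the Binomial model; the Poisson mass
    # beyond that point is negligible for these parameters and is ignored here.
    return 0.5 * sum(abs(binomial_pmf(k, n - 1, p) - poisson_pmf(k, k_mean))
                     for k in range(n))

tv = {n: total_variation(n, 50) for n in (100, 1000, 10000)}
for n, distance in tv.items():
    print(n, distance)
```

The distance shrinks as n grows with the average degree held constant, in line with the visualization.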

Food for Thought

Try to derive mathematically the Poisson distribution from the Binomial distribution for the case that p is much smaller than 1 and n is large. If you cannot do it, refer to a textbook or online resource for help.

Connected Components in G(n,p)

Clearly, there is no guarantee that the G(n,p) model will give us a connected network. If $p$ is close to zero, the network may consist of many small components. So an important question is: how large is the Largest Connected Component (LCC) of the G(n,p) model?

Here is an animation that shows a network with n=1000 nodes, as we increase the average node degree $\bar{k}$ (shown at the upper-left of the animation). Recall that the connection probability is approximately $p \approx \frac{\bar{k}}{n}$.

As you see, initially we have only small groups of connected nodes – typically just 2-3 nodes in every connected component.

After the first 5-10 seconds of the animation however, we start seeing larger and larger connected components. Most of them do not include any loops – they form tree topologies.

Gradually, however, as the average degree approaches the critical value of one, we start seeing some connected components that include loops.

Something interesting happens when the average degree exceeds one (about 40 seconds after the start of the animation): the largest connected component (LCC), which is identified with a different color than the rest of the nodes, starts covering a significant fraction of all network nodes. It starts becoming a “giant component”.

If you continue watching this animation until the end (it takes about five minutes), you will see that this giant component gradually changes color from dark blue to light blue to yellow to red – the color “temperature” represents the fraction of nodes in the LCC. Eventually, all the nodes join the LCC when the average node degree is about 6 in this example.

Size of LCC in G(n,p) as Function of p

We can derive the relation between p and the size of the LCC as follows:

Suppose that S is the probability that a node belongs in the LCC. Another way to think of S is as the expected value of the fraction of network nodes that belong in the LCC.

Then, $\bar{S} = 1- S$ is the probability that a node does NOT belong in the LCC.

That probability can be written as:

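\[\bar{S} = \big( (1-p) + p \cdot \bar{S} \big)^{n-1}\]

The first term refers to the case that a node v is not connected to another node, while the second term refers to the case that v is connected to another node that is not in the LCC. The exponent $n-1$ appears because one of these two cases must hold, independently, for each of the other $n-1$ nodes.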

Since $p = \frac{\bar{k}}{n-1}$, the last equation can be written as:

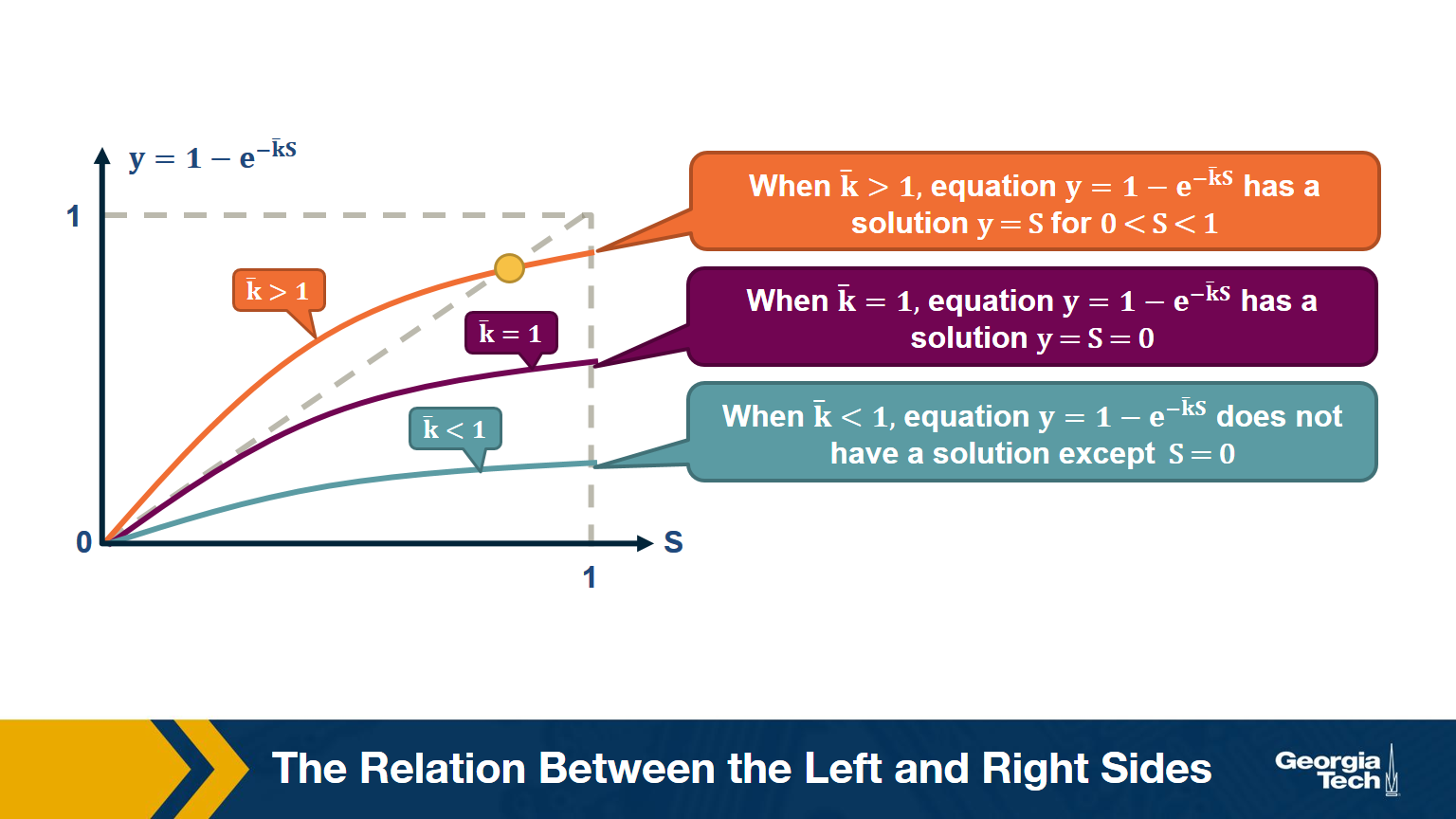

\[\begin{aligned} \bar{S} &= \bigg( 1- \frac{\bar{k}}{n-1} \big( 1- \bar{S}\big)\bigg)^{n-1} \\ \ln \bar{S} &= (n-1) \ln \bigg( 1- \frac{\bar{k}}{n-1} \big( 1- \bar{S}\big)\bigg) \\ &\approx -(n-1)\frac{\bar{k}}{n-1}\big(1-\bar{S}\big) = -\bar{k}S \quad (\text{by Taylor expansion, } \ln(1-x) \approx -x \text{ for } x \ll 1)\\ \bar{S} &\approx e^{-\bar{k}S} \\ S &= 1-e^{-\bar{k}S} \end{aligned}\]

The visualization shows the relation between the left and right sides of the previous equation, i.e., the relation between S and $1-e^{-\bar{k}S}$.

The equality is true when the function $y = 1-e^{-\bar{k}S}$ crosses the diagonal x=y line.

Note that the derivative of y with respect to S is approximately $\bar{k}$ when S approaches 0.

So, if the average degree is larger than one, the function y(S) starts above the diagonal. It has to cross the diagonal at a positive value of S because the second derivative of y(S) is negative and y(S) never exceeds 1. That crossing point is the solution of the equation $S=1-e^{-\bar{k}S}$. This means that if the average degree is larger than one ($\bar{k} > 1$), the size of the LCC is S>0.

On the other hand, if the average node degree is less than or equal to 1, the function y(S) starts with a slope that is less than or equal to 1, and it remains below the diagonal y=x for positive S. The only solution is then S=0, which means that the LCC of a G(n,p) network includes a vanishing fraction of the nodes.
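The equation $S=1-e^{-\bar{k}S}$ has no closed-form solution in elementary functions, but it is easy to solve numerically. Below is a minimal sketch that uses fixed-point iteration; starting from S=1 (an assumption made here so that the iteration does not get stuck at the trivial root S=0) it converges to the positive root when $\bar{k}>1$ and to zero otherwise. The function name `giant_component_fraction` is illustrative.

```python
import math


def giant_component_fraction(avg_degree, iterations=1000):
    """Solve S = 1 - exp(-avg_degree * S) by fixed-point iteration from S = 1."""
    s = 1.0
    for _ in range(iterations):
        s = 1.0 - math.exp(-avg_degree * s)
    return s


if __name__ == "__main__":
    for k in (0.5, 0.9, 1.1, 1.5, 2.0, 4.0):
        print(f"average degree {k}: S = {giant_component_fraction(k):.4f}")
```

Convergence becomes slow when the average degree is very close to one, because the curve is then almost tangent to the diagonal at S=0; more iterations are needed in that regime.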

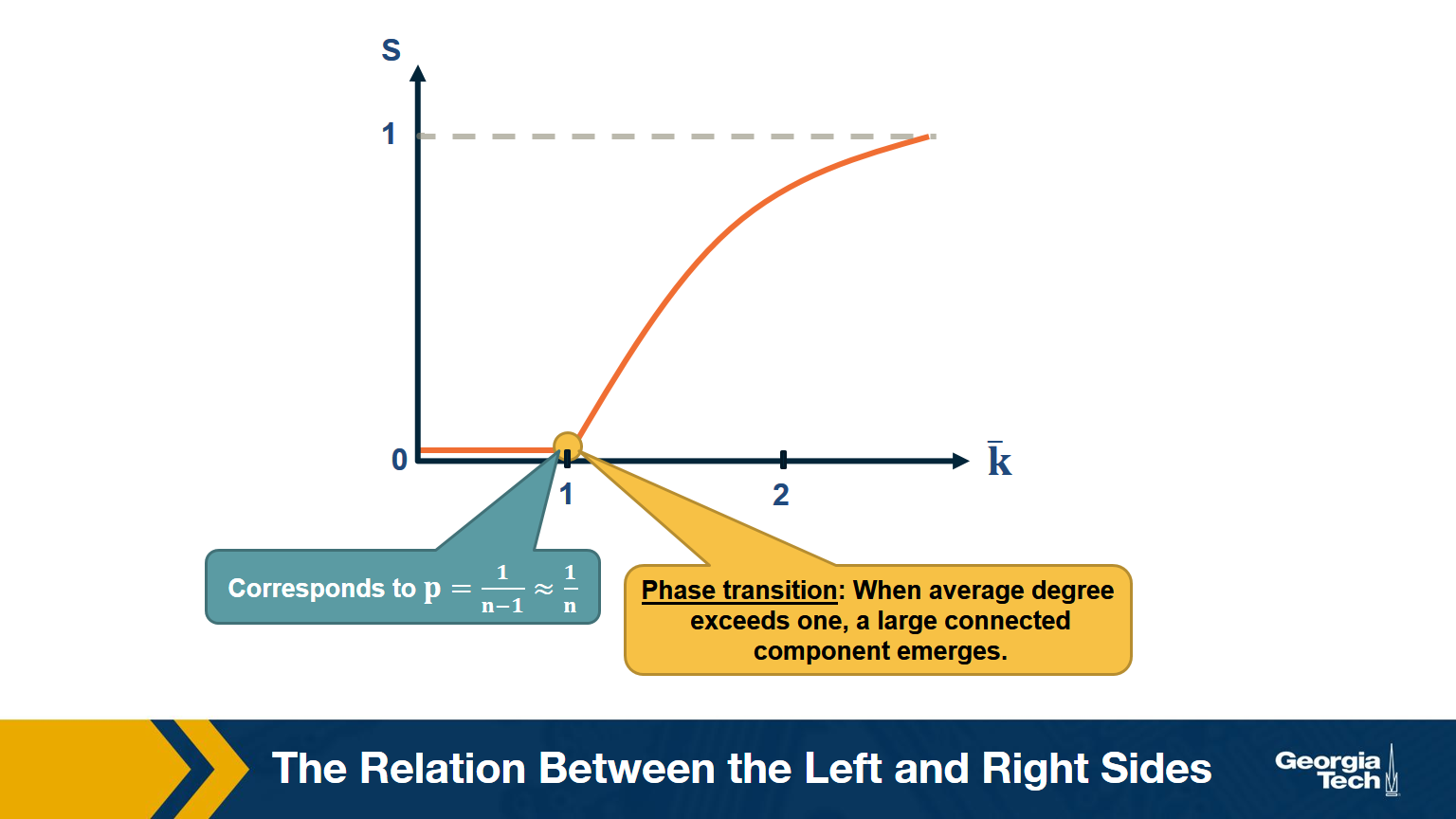

The visualization shows how S increases with the average node degree $\bar{k}$. Note how the LCC suddenly “explodes” when the average node degree is larger than 1. This is referred to as “phase transition”. A phase transition that we are all familiar with is what happens to water when its temperature reaches the freezing or boiling temperature: the macroscopic state changes abruptly from liquid to solid or gas. Something similar happens with G(n,p) when the average node degree exceeds the critical value $\bar{k}=1$: the network suddenly acquires a “giant connected component” that includes a large fraction of all network nodes.

Note that the critical point corresponds to a connection probability of $p=\frac{1}{n-1}\approx \frac{1}{n}$ because $\bar{k} = (n-1) \times p $.

When Does G(n,p) have a Single Connected Component?

Here is one more interesting question about the size of the LCC: how large should p (or $\bar{k}$) be so that the LCC covers all network nodes?

Note that the previous derivation did not answer this question – it simply told us that there is a phase transition when $\bar{k} = 1$.

Suppose again that S is the probability that a node belongs in the LCC.

Then, the probability that a node does NOT connect to ANY node in the LCC is:

\[\left(1-p\right)^{S \, n}\approx\left(1-p\right)^n \quad \text{if } S\approx1\]

The expected number of nodes not connecting to the LCC is:

\[\overline{k_o} = n\cdot\left(1-p\right)^n = n\left(1-\frac{np}{n}\right)^n\approx n\cdot e^{-np}\]

Recall that $\left(1-\frac{x}{n}\right)^n\approx e^{-x}$ when $x\ll n$. So we assume at this point of the derivation that the network is sparse ($p \ll 1$).

If we set $\overline{k_o}$ to less than one node, we get that:

\[\begin{aligned} n\cdot e^{-np}&\le 1 \\ -np&\le\ln\left(\frac{1}{n}\right)=-\ln n \\ p&\ge\frac{\ln n}{n} \\ \overline{k}&=np \ge\ln n \end{aligned}\]

which means that when the average degree is higher than the natural logarithm of the network size ($\bar{k}>\ln{n}$) we expect to have a single connected component.
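To get a feel for this threshold, the sketch below evaluates the approximation $n \cdot e^{-np}$ with $np \approx \bar{k}$ for a few average degrees. It only evaluates the formula derived above (it does not simulate any network), and the function names are illustrative assumptions.

```python
import math


def expected_nodes_outside_lcc(n, avg_degree):
    """Approximate expected number of nodes with no link into the LCC: n * exp(-n p),
    using n p ~ avg_degree (sparse network, S close to 1)."""
    return n * math.exp(-avg_degree)


def single_component_threshold(n):
    """Average degree above which fewer than one node is expected outside the LCC."""
    return math.log(n)


if __name__ == "__main__":
    n = 1000
    print(f"threshold for n={n}: {single_component_threshold(n):.2f}")
    for k in (1.0, 3.0, 5.0, 7.0, 9.0):
        outside = expected_nodes_outside_lcc(n, k)
        print(f"average degree {k}: about {outside:.2f} nodes outside the LCC")
```

For n=1000 the threshold $\ln n$ is a little below 7, which is roughly in line with the animation discussed earlier, where all nodes join the LCC at an average degree of about 6.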

Degree Correlations

We assumed throughout this lesson that the degree of a node does not depend on the degree of its neighbors. In other words, we assumed that there are no degree correlations.

Mathematically, if nodes u and v are connected, we have assumed that:

Prob[degree(u) = k | degree(v) = k'] = Prob[degree(u) = k | u connects to another node] = $q_k$ = $p_k \cdot \frac{k}{\bar{k}}$

Note: this probability does not depend on the degree k’ of neighbor v. Such networks are referred to as neutral.

In general, however, there are correlations between the degrees of neighboring nodes, and they are described by the conditional probability distribution:

P[k'|k] = Prob[a neighbor of a k-degree node has degree k']

The expected value of this distribution is referred to as the average nearest-neighbor degree $k_{nn}(k)$ of degree-k nodes:

\[k_{nn}(k) = \sum_{k'} k' \cdot P(k'|k)\]

In a neutral network, we have already derived that $k_{nn}(k)$ is independent of k (recall that we derived $k_{nn}(k) = \bar{k} + \frac{\sigma_k^2}{\bar{k}} = \bar{k}_{nn}$).

In most real networks, $k_{nn}(k)$ depends on k and it shows an increasing or decreasing trend with k.

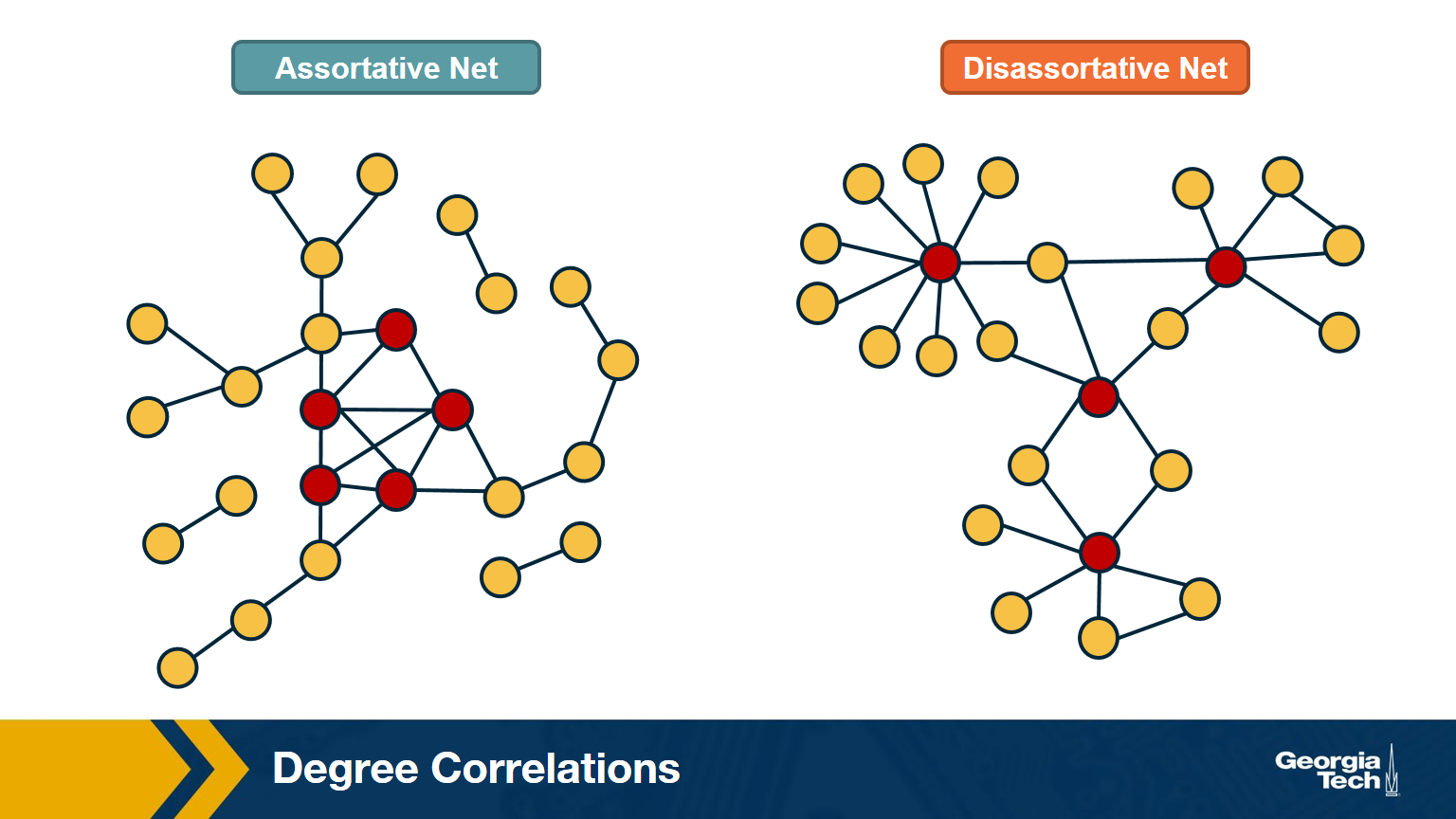

The network at the left shows an example in which small-degree nodes tend to connect with other small-degree nodes (and similarly for high-degree nodes).

The network at the right shows an example of a network in which small-degree nodes tend to connect to high-degree nodes.

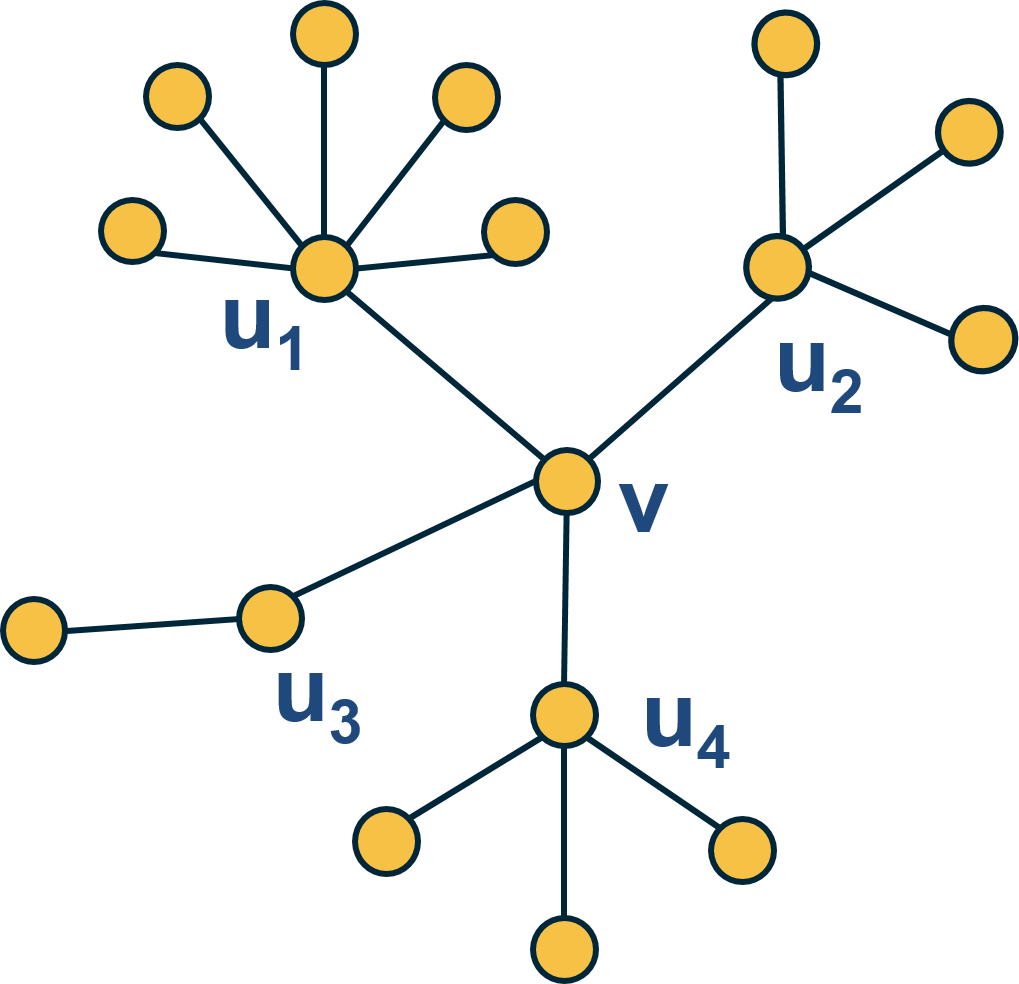

Here is an example: what is the average nearest neighbor degree of node v in this network?

\[\overline{k_{nn}}\left(v\right) = \frac{1}{k\left(v\right)}\sum_{i=1}^{k\left(v\right)}k\left(u_i\right)=\frac{6+4+2+4}{4} = 4\]

Here $u_1, \ldots, u_{k(v)}$ are the neighbors of v. To calculate $k_{nn}(k)$, we compute the average value of $k_{nn}(v)$ over all nodes v with $k(v)=k$.

Then, we plot $k_{nn}(k)$ versus k, and examine whether that plot shows a statistically significant positive or negative trend.
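The two-step calculation described above can be sketched as follows for an undirected network given as an edge list. This is a minimal illustration; the function name `knn_by_degree` and the small star network used as input are assumptions made here, not part of the lesson's figures.

```python
from collections import defaultdict


def knn_by_degree(edges):
    """Return (k_nn(v) per node, k_nn(k) per degree) for an undirected edge list."""
    neighbors = defaultdict(set)
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)
    degree = {v: len(nbrs) for v, nbrs in neighbors.items()}

    # Step 1: average degree of the neighbors of each node v.
    knn_node = {v: sum(degree[u] for u in nbrs) / degree[v]
                for v, nbrs in neighbors.items()}

    # Step 2: average k_nn(v) over all nodes that share the same degree k.
    buckets = defaultdict(list)
    for v, value in knn_node.items():
        buckets[degree[v]].append(value)
    knn_k = {k: sum(vals) / len(vals) for k, vals in sorted(buckets.items())}
    return knn_node, knn_k


if __name__ == "__main__":
    # A star: hub "h" connected to four leaves (a strongly disassortative toy case).
    star = [("h", "a"), ("h", "b"), ("h", "c"), ("h", "d")]
    per_node, per_degree = knn_by_degree(star)
    print(per_node)
    print(per_degree)
```

In the star, the degree-1 leaves have a neighbor of degree 4 and the degree-4 hub has neighbors of degree 1, so $k_{nn}(k)$ decreases with k.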

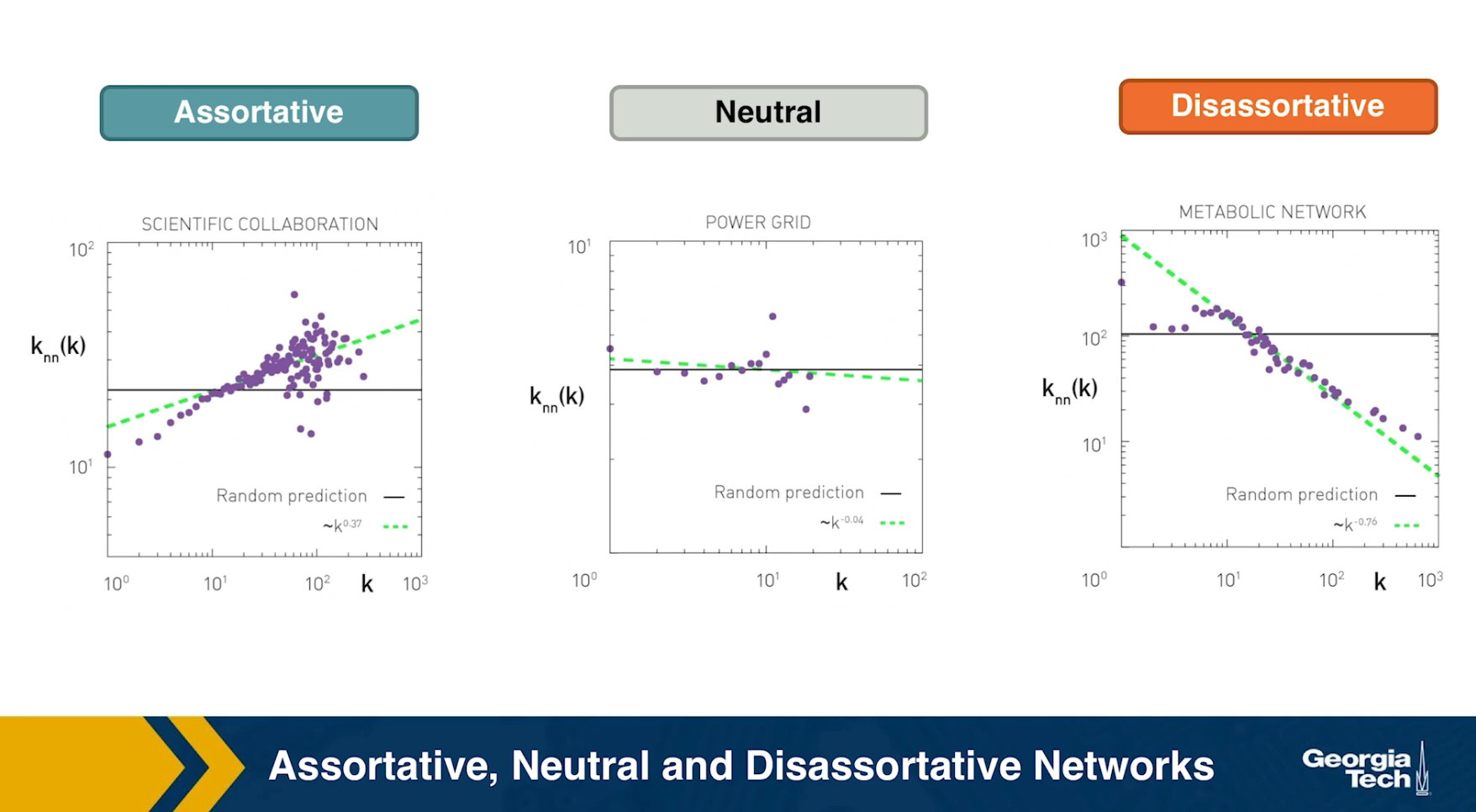

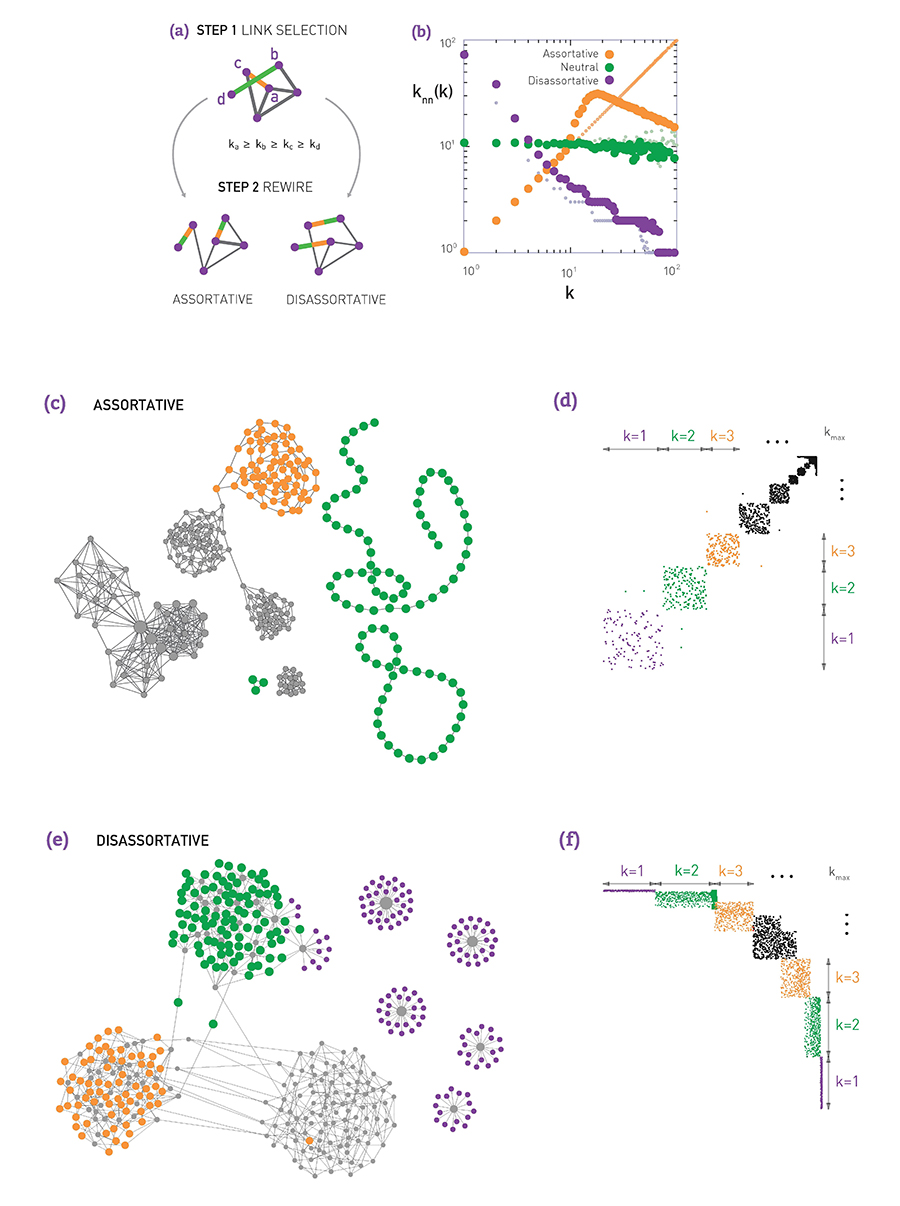

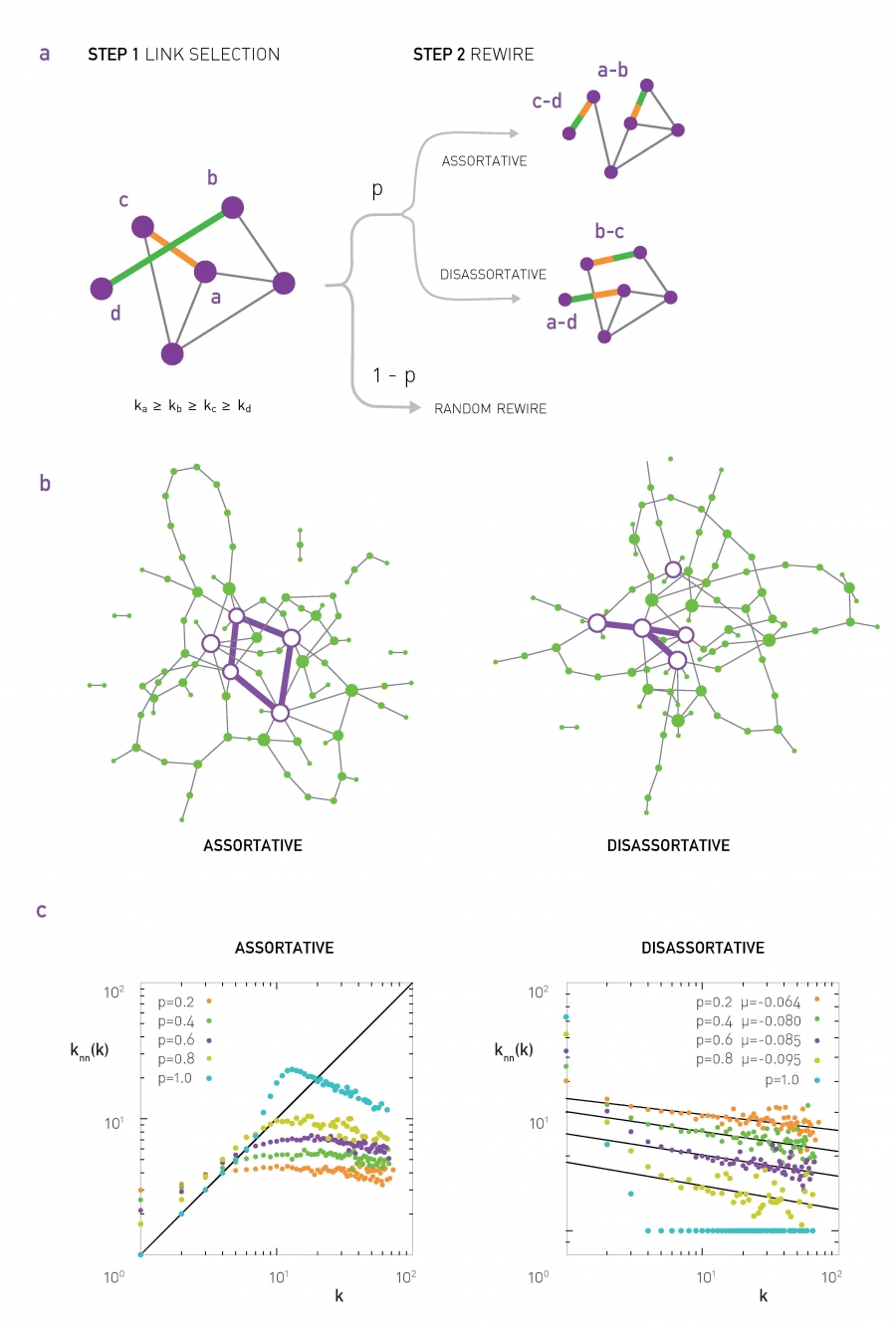

How to Measure Degree Correlations

One way to quantify the degree correlations in a network is by modeling (i.e., approximating) the relationship between the average nearest neighbor degree $k_{nn}(k)$ and the degree k with a power-law of the form:

\[{k_{nn}}\left(k\right)\approx a\cdot k^{\mu}\]Then, we can estimate the exponent $\mu$ from the data.

If $\mu >0$, we say that the network is Assortative: higher-degree nodes tend to have higher-degree neighbors and lower-degree nodes tend to have lower-degree neighbors. Think of celebrities dating celebrities, and loners dating other loners.

If $\mu < 0$, we say that the network is Disassortative: higher-degree nodes tend to have lower-degree neighbors. Think of a computer network in which high-degree aggregation switches connect mostly to low-degree backbone routers.

If $\mu$ is statistically not significantly different from zero, we say that the network is Neutral.
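One simple way to estimate $\mu$ is a least-squares line fit in log-log space, since $\log k_{nn}(k) \approx \log a + \mu \log k$. The lesson does not prescribe a particular estimation method, so the sketch below is an assumption: it takes a dictionary mapping each degree k to $k_{nn}(k)$ (the hypothetical input format used here) and returns the fitted $a$ and $\mu$. It does not test whether $\mu$ is statistically different from zero.

```python
import math


def fit_knn_power_law(knn_by_degree):
    """Least-squares fit of log k_nn(k) = log a + mu * log k; returns (a, mu)."""
    # Only positive degrees and positive k_nn values have a logarithm.
    points = [(math.log(k), math.log(v))
              for k, v in knn_by_degree.items() if k > 0 and v > 0]
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    mu = sxy / sxx                        # slope of the fitted line
    a = math.exp(mean_y - mu * mean_x)    # intercept, mapped back from log space
    return a, mu


if __name__ == "__main__":
    # Synthetic, noise-free data that follows k_nn(k) = 2 * k^0.5 exactly.
    synthetic = {k: 2.0 * k ** 0.5 for k in range(1, 50)}
    a, mu = fit_knn_power_law(synthetic)
    print(f"a = {a:.3f}, mu = {mu:.3f}")
```

The fit needs at least two distinct degrees. On real data, every degree value gets the same weight in this fit, including the noisy high-degree points that are averaged over very few nodes, so the estimate should be read with that caveat.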

Food for Thought

Suppose that instead of this power-law relation between $k_{nn}(k)$ and k we had used a linear statistical model. How would you quantify degree correlations in that case?

Hint: How would you apply Pearson’s correlation metric to quantify the correlation between degrees of adjacent nodes?

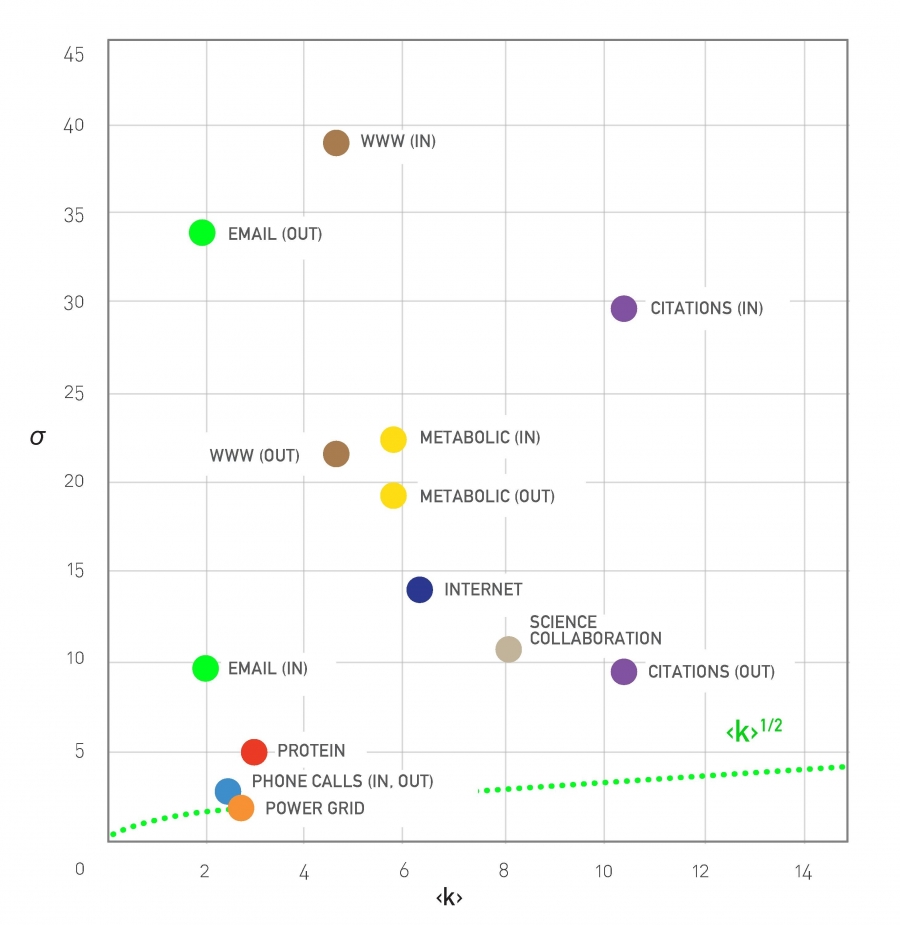

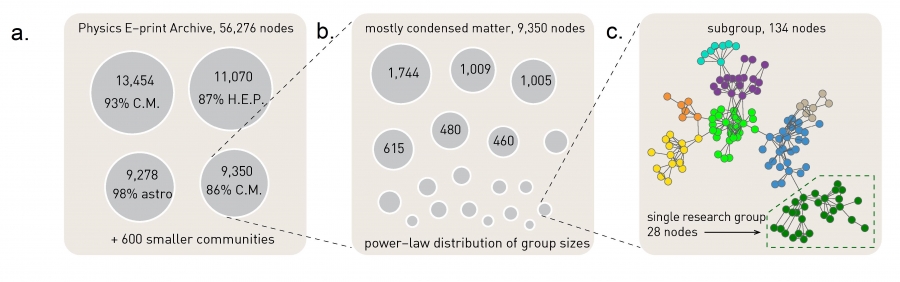

Assortative, Neutral and Disassortative Networks

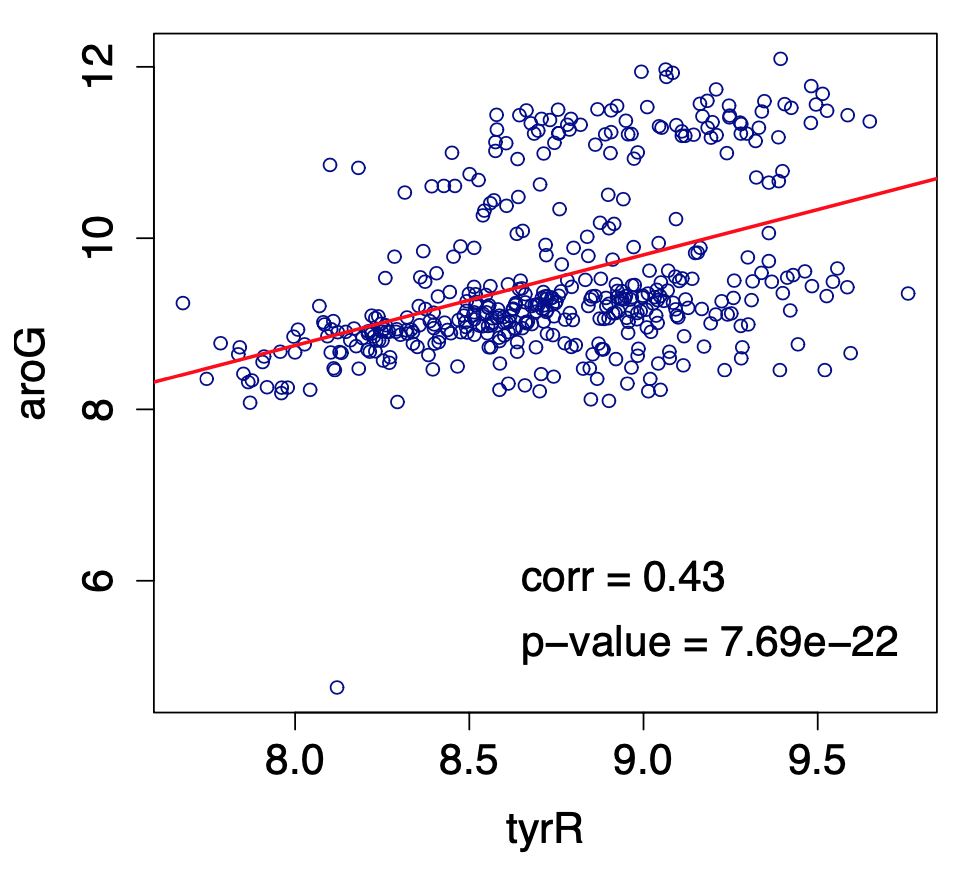

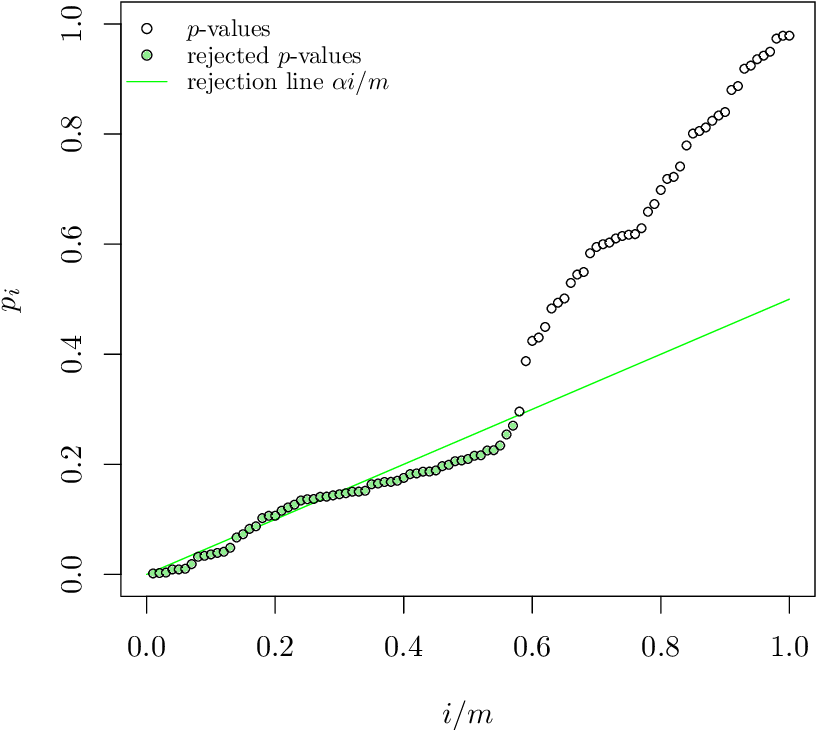

Let’s look at some examples of degree correlation plots from real-world networks. The first network refers to the collaboration between a group of scientists: two nodes are connected if they have written at least one research paper together. Notice that the data is quite noisy, especially when the degree k is more than 70.

The reason is simply that we do not have a large enough sample of nodes with such large degrees. Nevertheless, we clearly see a positive correlation between the degree k and the average degree of the nearest neighbors, which is shown on the y-axis.

If we model the data with a power-law relation, the exponent $\mu$ is approximately 0.37 in this case. We can use this value to quantify and compare the assortativity of different networks when the estimate of $\mu$ is statistically significant.

The second network refers to a portion of the power grid in the United States. The data in this case does not support a strong correlation between the degree k and the average degree of the nearest neighbors. So it is safe to assume that this network is what we call neutral.

The third network refers to a metabolic network in which the nodes are metabolites, and two metabolites A and B are connected if they appear on opposite sides of the same chemical reaction in a biological cell. The data shows a strong negative correlation in this case, but only for nodes with a degree of about 5 to 10 or higher. If we model the data with a power-law relation, the exponent $\mu$ is approximately -0.86. This suggests that complex metabolites such as glucose are either synthesized from (through a process called anabolism) or broken down into (through a process called catabolism) a large number of simpler molecules such as carbon dioxide.

Lesson Summary

The main objective of this lesson was to explore the notion of “degree distribution” for a given network. The degree distribution is probably the first thing you will want to see for any network you encounter from now on. It gives you a quantitative and concise description of the network’s connectivity in terms of average node degree, degree variability, common degree modes, presence of nodes with very high degrees, etc.

In this context, we also examined a number of related topics. First, the friendship paradox is an interesting example to illustrate the importance of degree variability. We also saw how the friendship paradox is applied in practice in vaccination strategies.

We also introduced G(n,p), which is a fundamental model of random graphs – and something that we will use extensively as a baseline network from now on. We explained why the degree distribution of G(n,p) networks can be approximated with the Poisson distribution, and analyzed mathematically the size of the largest connected component in such networks.

Obviously, the degree distribution does not tell the whole story about a network. For instance, we talked about networks with degree correlations. This is an important property that we cannot infer just by looking at the degree distribution. Instead, it requires us to think about the probability that two nodes are connected as a function of their degrees.

We will return to all of these concepts and refine them later in the course.

L4 - Random vs. Real Graphs and Power-Law Networks

Overview

Required Reading

- Chapter 4 (sections 4.1, 4.2, 4.3, 4.4, 4.7, 4.8, 4.12), A-L. Barabási, Network Science, 2015.

- Chapter 5 (sections 5.1, 5.2, 5.3), A-L. Barabási, Network Science, 2015.

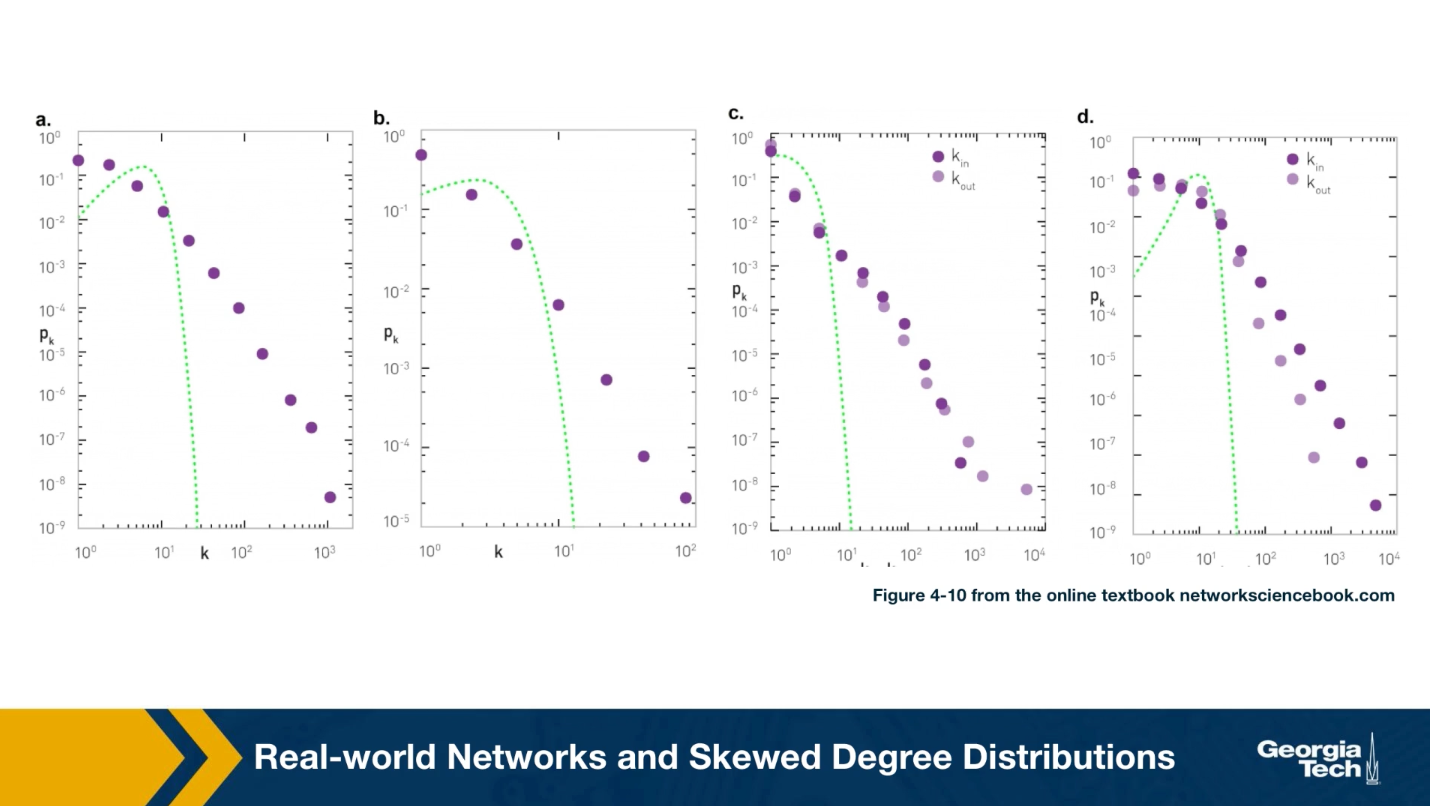

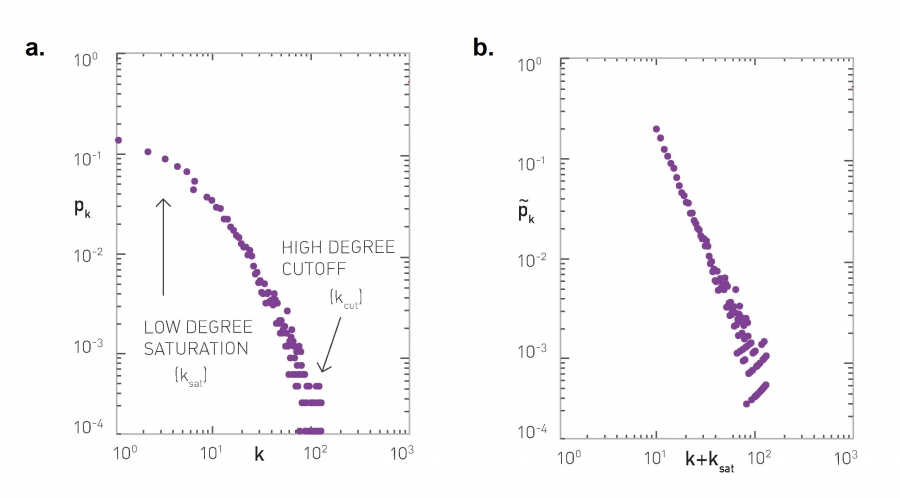

Degree Distribution of Real Networks

What is the degree distribution of real networks?

- Scientists used to think that real networks can be modeled as random ER graphs.

- Such networks follow the binomial degree distribution.

- In the late 1990s, researchers observed that real networks are very different, with highly skewed degree distribution.

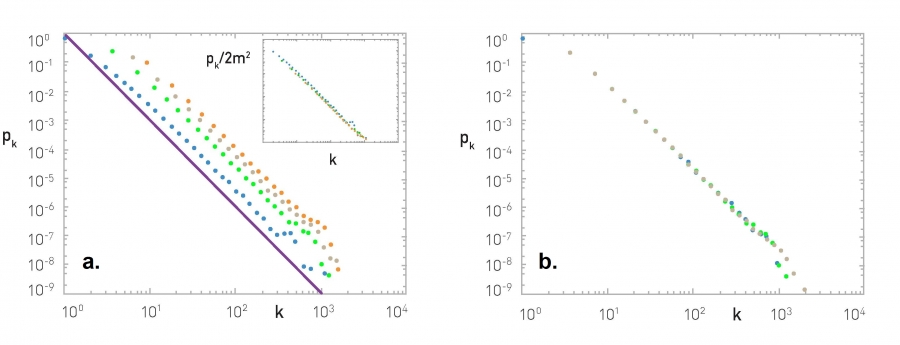

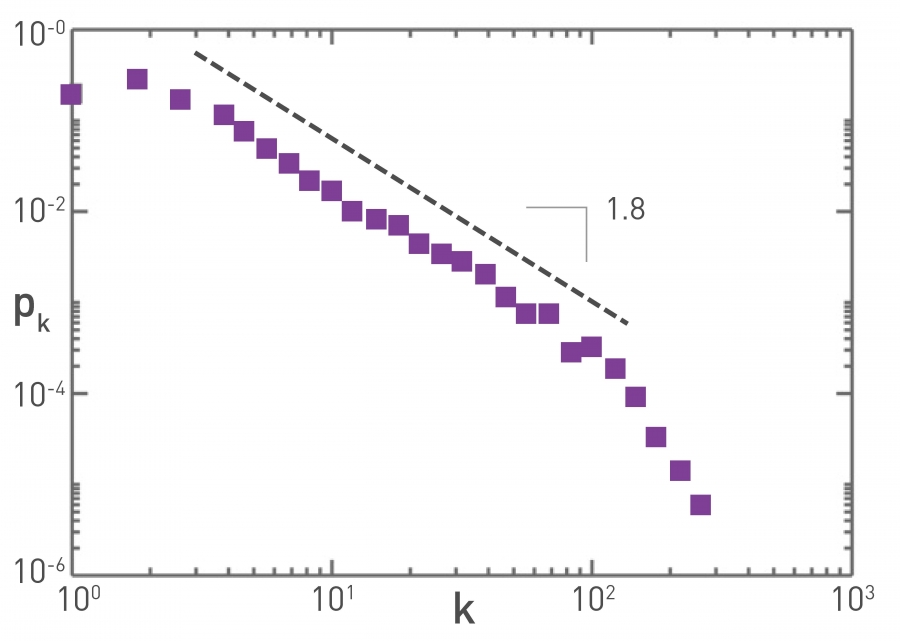

- For many networks, the power law degree distribution $p_k \sim k^{-\alpha}$ is a more appropriate model.

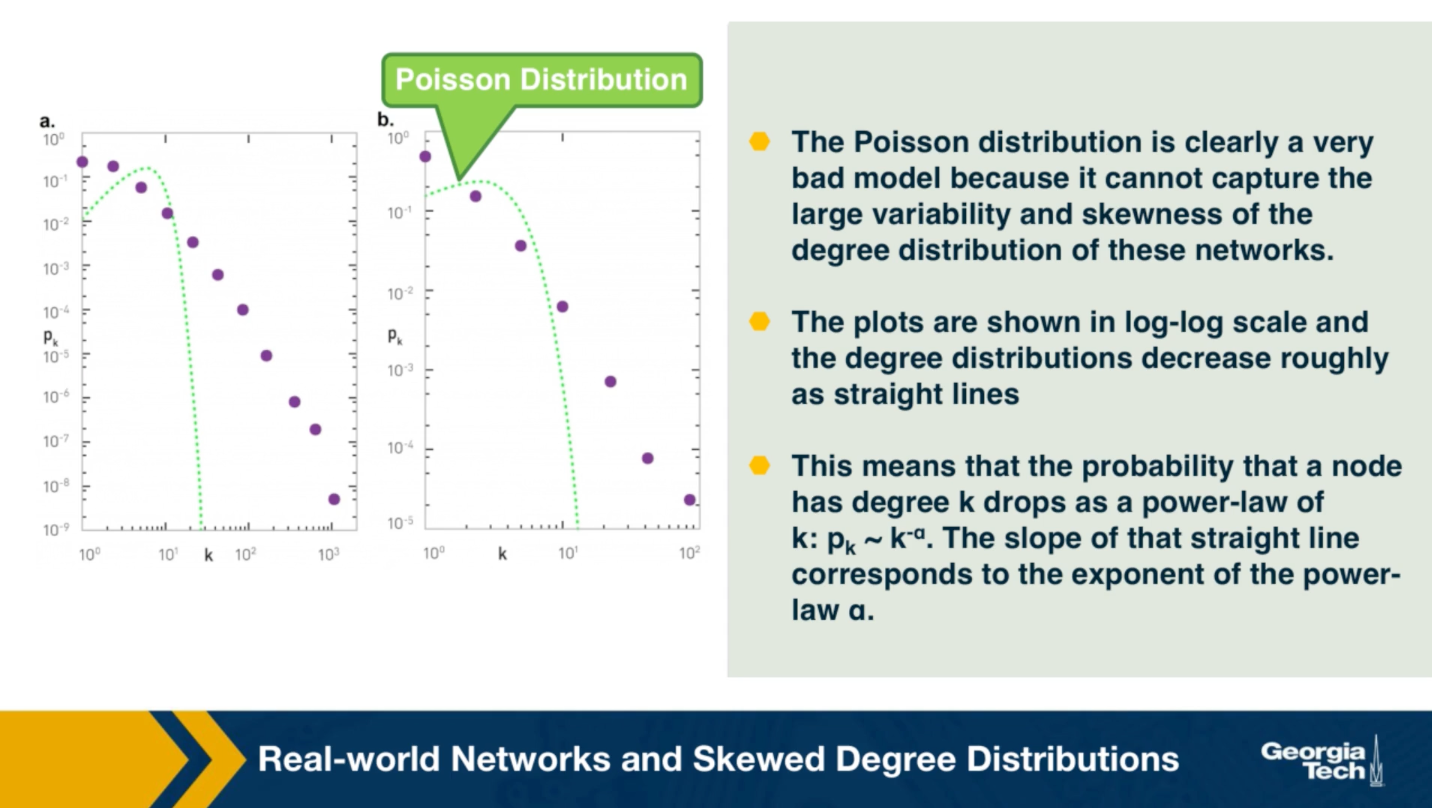

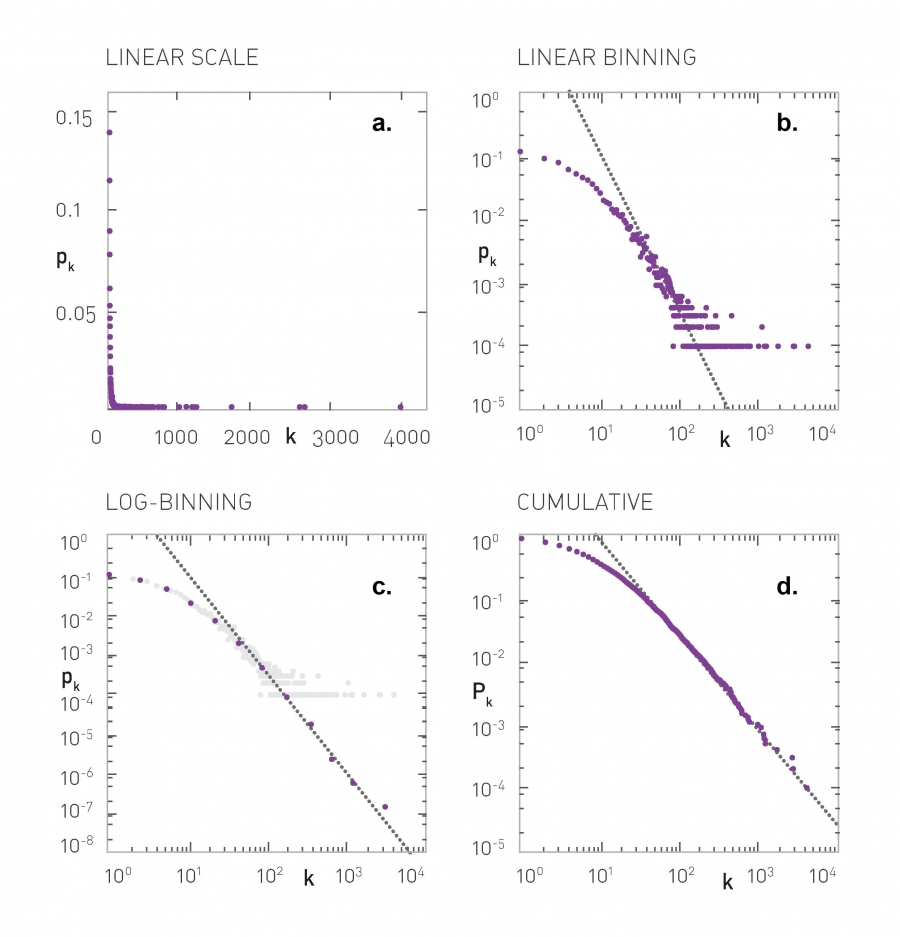

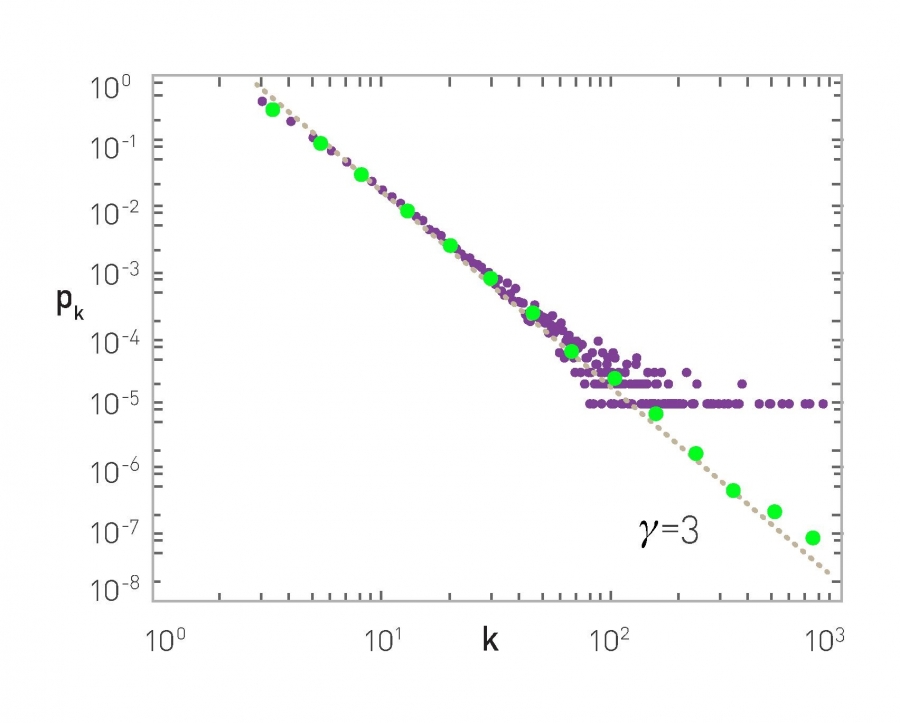

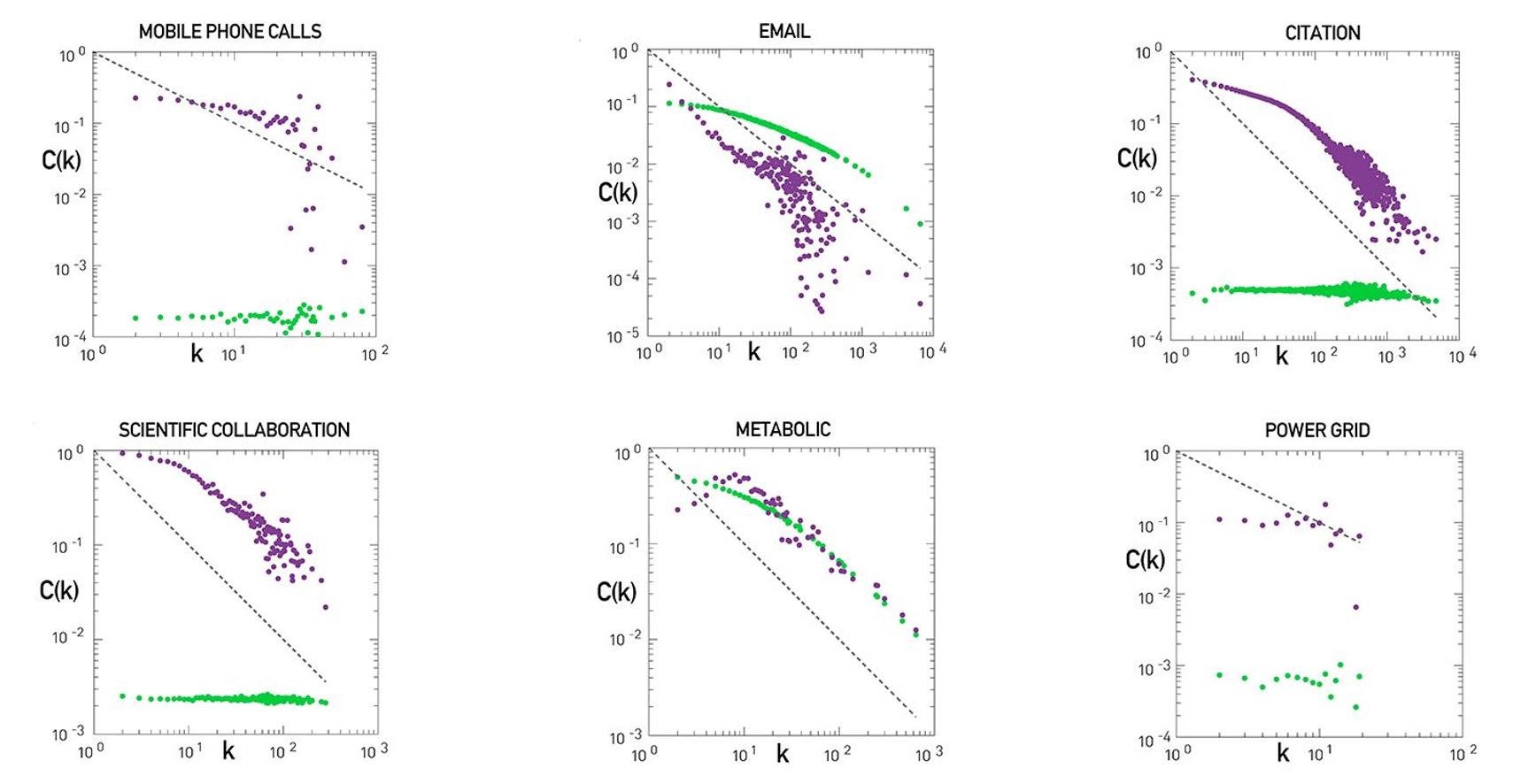

For instance, the plots that you see here illustrate the measured degree distribution of an older Internet topology at the router level, a protein-protein interaction network, an email social network (which shows who sends email to whom), and a citation network showing which papers cite other papers.

Note that the last two networks are directed, so the corresponding plots show the in-degree and the out-degree distributions separately. You can find more information about these networks in Table 4.1 of your textbook.

The plot also shows, in green (the second plot), the Poisson distribution with the same average degree as the observed network.

Please note the following points about these plots:

- The Poisson distribution is clearly a very bad model because it cannot capture the large variability and skewness of the degree distribution of these networks.

- The plots are shown in log-log scale, and the degree distributions decrease roughly as straight lines.

- This means that the probability that a node has degree $k$ drops as a power-law of $k$: $p_k \sim k^{-\alpha}$. Taking logarithms gives $\log p_k \approx -\alpha \log k + \text{const}$, so the slope of that straight line is $-\alpha$, the negative of the power-law exponent.

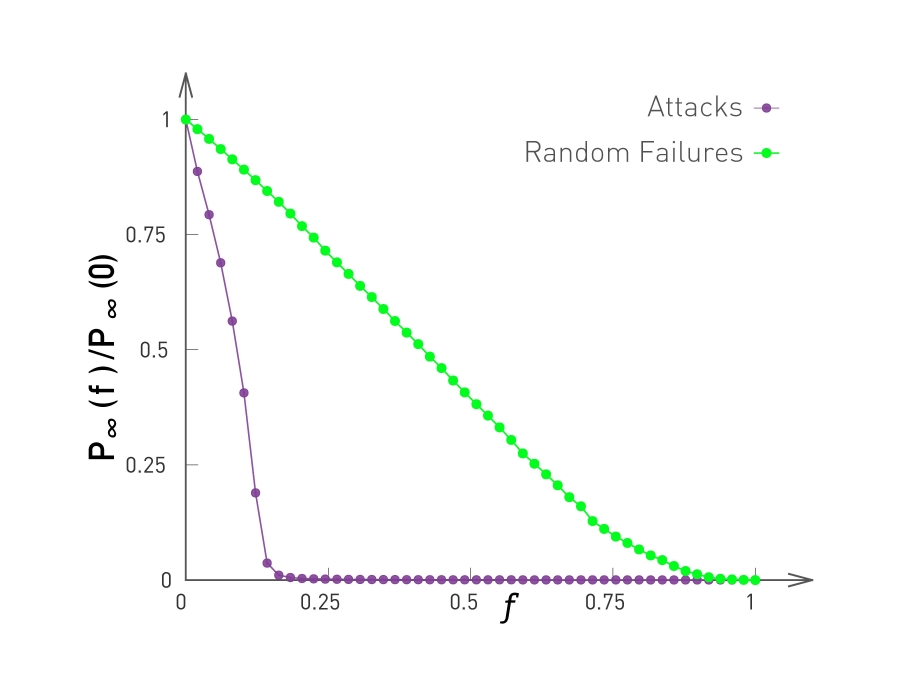

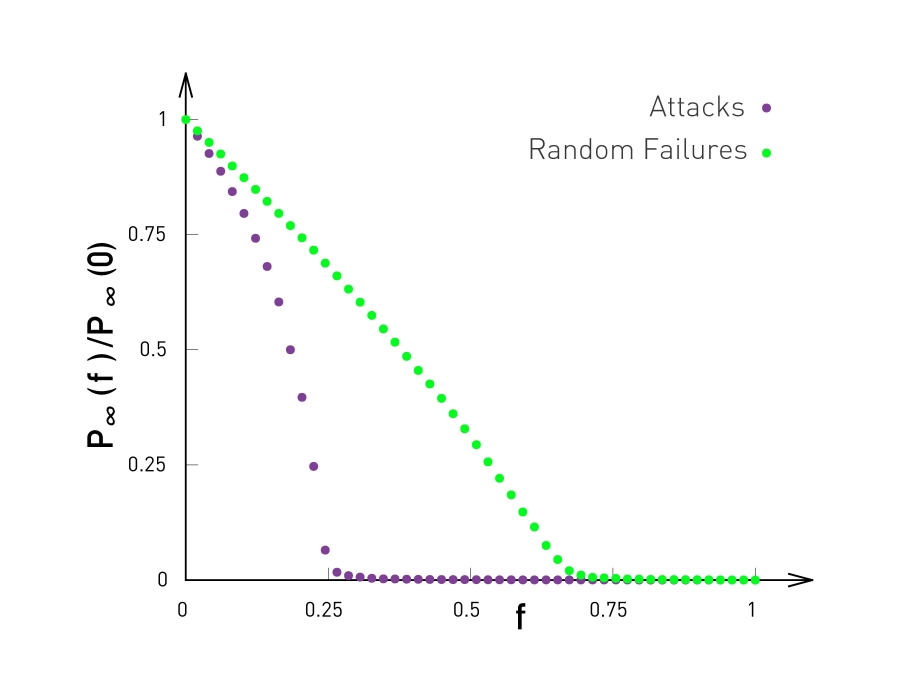

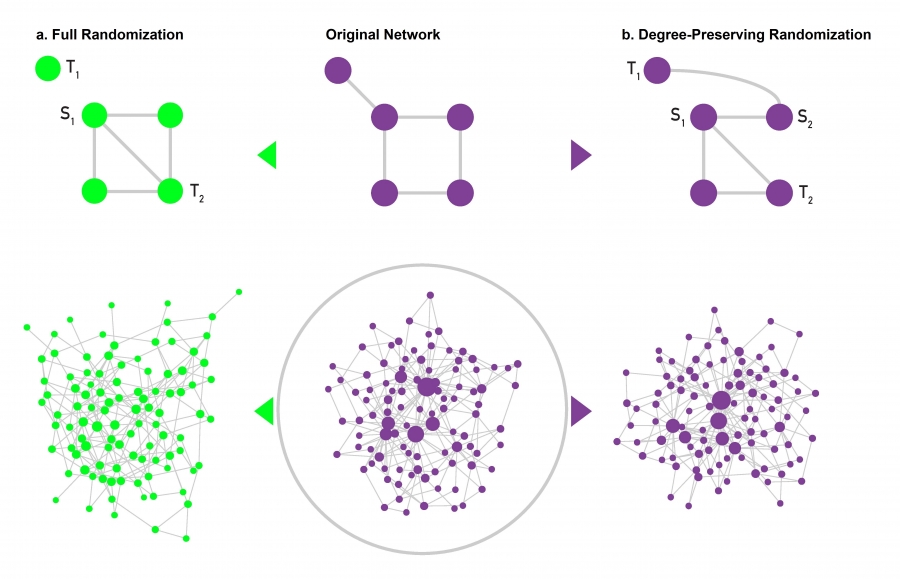

This observation is not just a statistical technicality. The fact that real-world networks often follow a power-law degree distribution has major implications for their function, robustness, and efficiency, as we will see later in the semester.

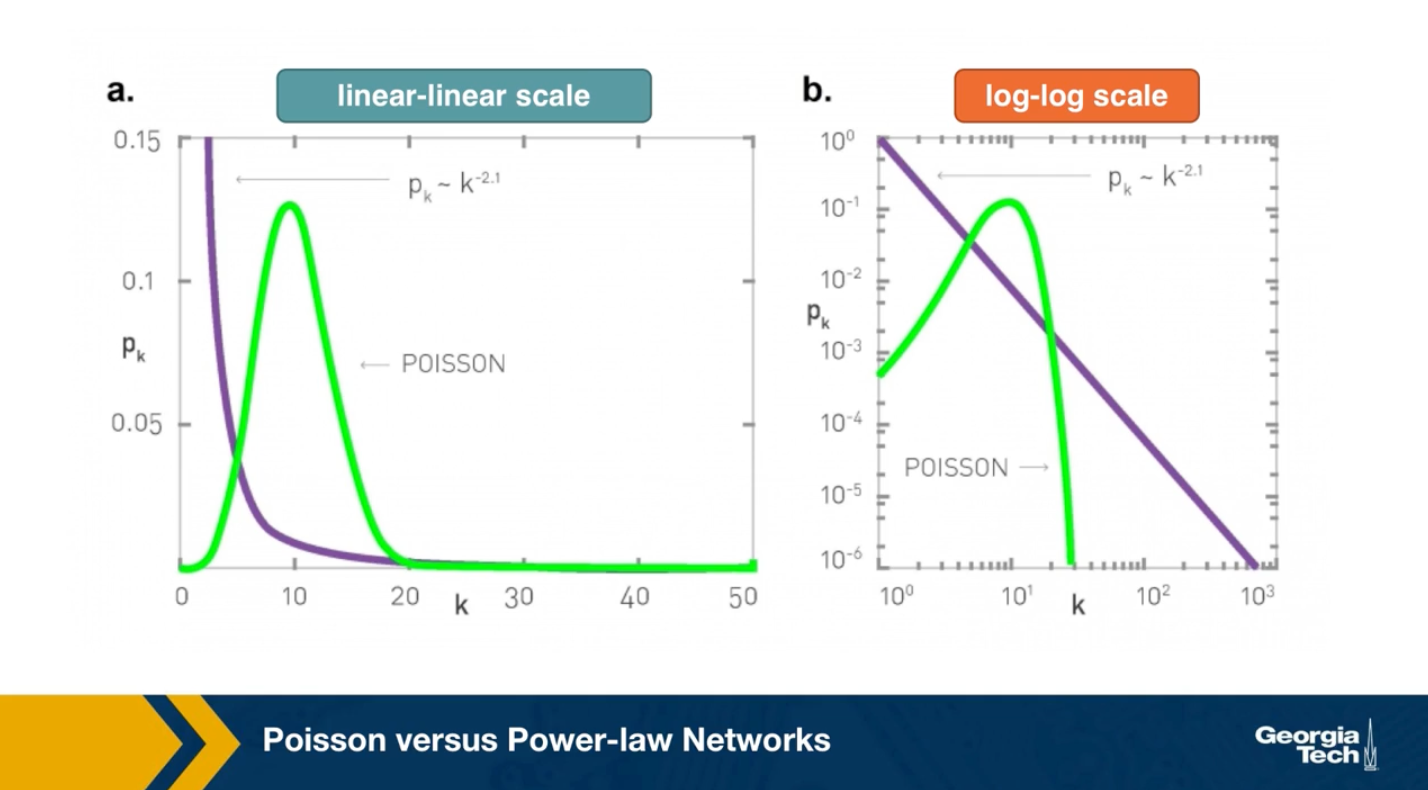

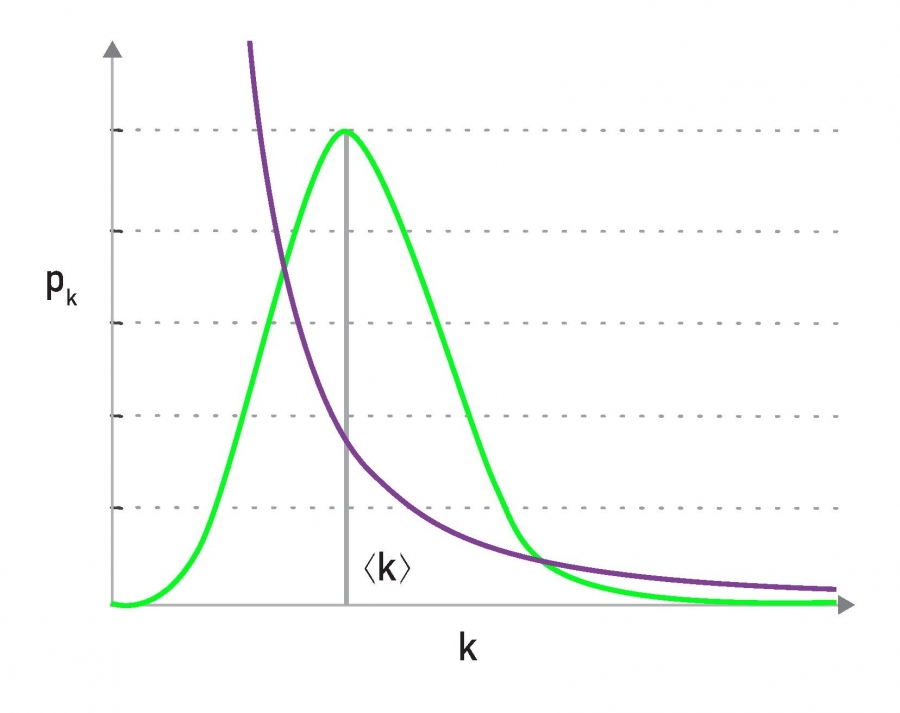

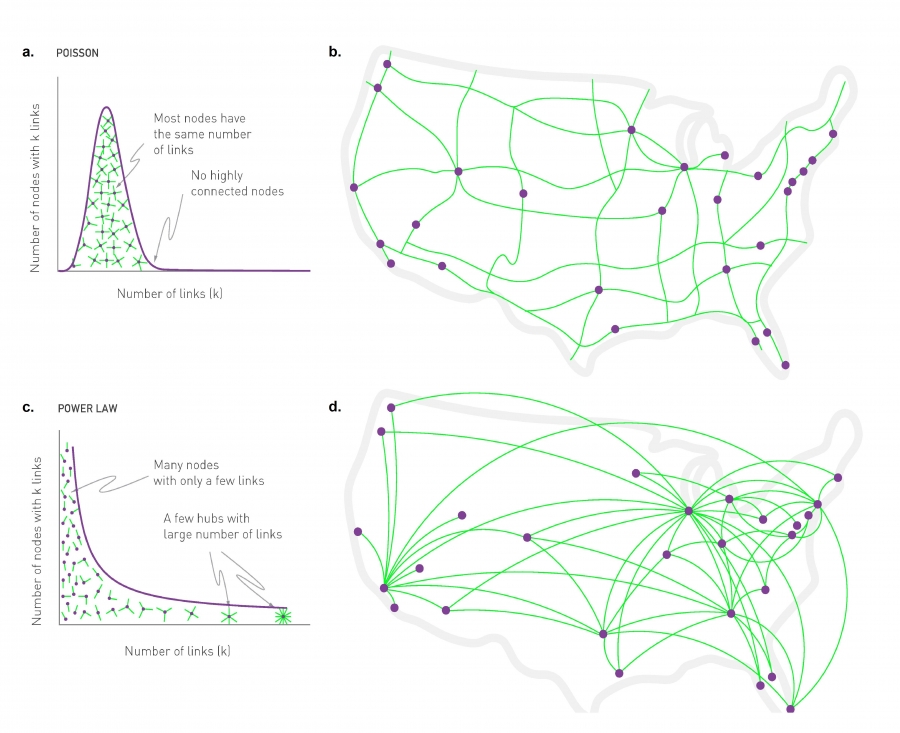

To further visualize the differences between a Poisson and a power-law distribution, here are two distributions in both linear-linear and log-log scales.

The Poisson distribution has an average degree of 11 here, while the power-law distribution has a lower average degree, set to 3, and its exponent is 2.1. The linear-linear plot shows the almost bell-shaped form of the Poisson distribution, centered around its mean. The power-law distribution, on the other hand, is not centered around a specific value. The major difference between the two distributions becomes clear in log-log scale. We see that the Poisson distribution cannot produce values that are much larger than its average.

In this example, the maximum value of the Poisson distribution is roughly 30, almost 3 times larger than the mean. The power-law distribution extends over three orders of magnitude, and there is a non-negligible probability that we get values that are much greater than its mean.
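The difference in the tails can also be checked numerically. The sketch below compares the Poisson probability $e^{-\bar{k}} \bar{k}^k / k!$ for $\bar{k}=11$ with a power-law probability for exponent 2.1. Two assumptions are made here for illustration: the power law uses the continuous-approximation normalization $\frac{\alpha-1}{k_{min}} (k/k_{min})^{-\alpha}$ that is derived later in these notes, and $k_{min}=1$, so the values do not reproduce the exact curves of the plot.

```python
import math


def poisson_pmf(k, mean):
    """Poisson probability of value k, computed in log space to avoid overflow."""
    return math.exp(k * math.log(mean) - mean - math.lgamma(k + 1))


def power_law_pmf(k, alpha, k_min=1.0):
    """Power-law probability with the continuous-approximation normalization."""
    return (alpha - 1.0) / k_min * (k / k_min) ** (-alpha)


if __name__ == "__main__":
    for k in (10, 30, 100, 1000):
        print(f"k={k}: Poisson {poisson_pmf(k, 11.0):.3e}, "
              f"power-law {power_law_pmf(k, 2.1):.3e}")
```

The Poisson probability collapses faster than exponentially once k moves away from the mean, while the power-law probability loses only about two orders of magnitude per decade of k.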

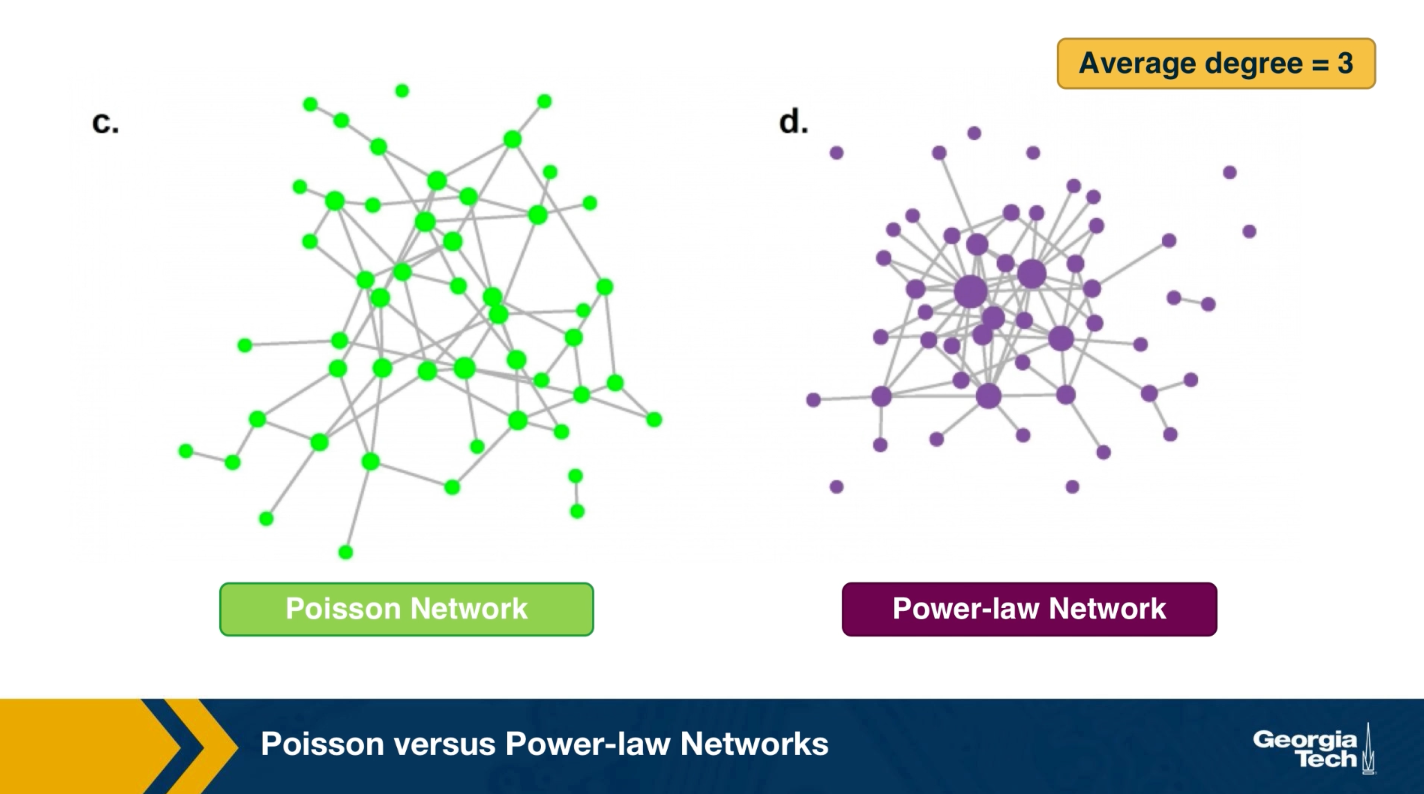

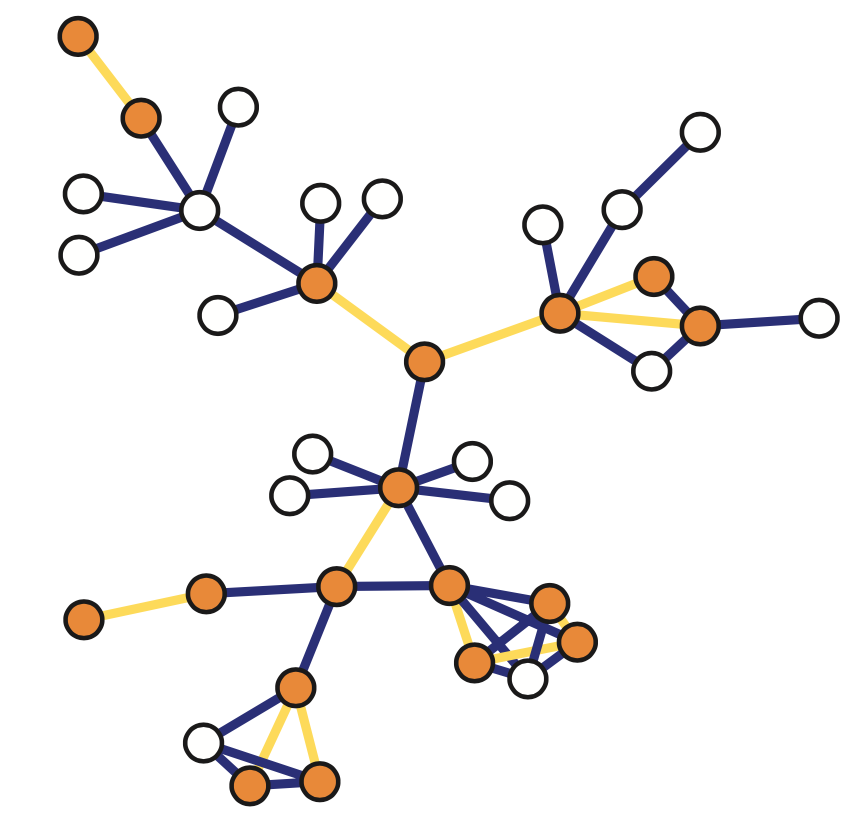

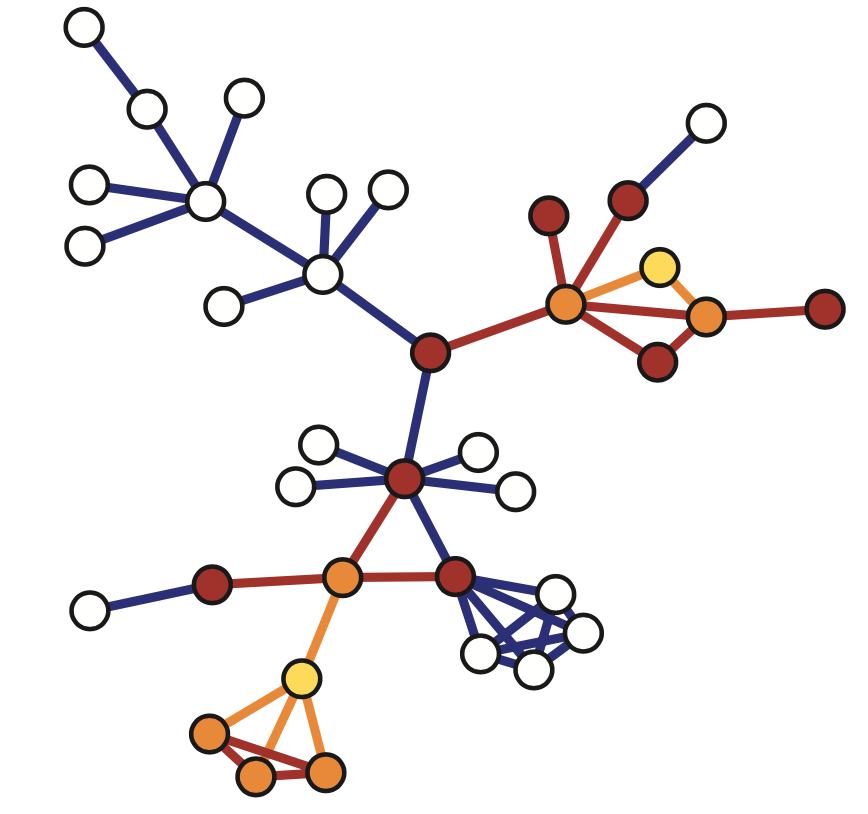

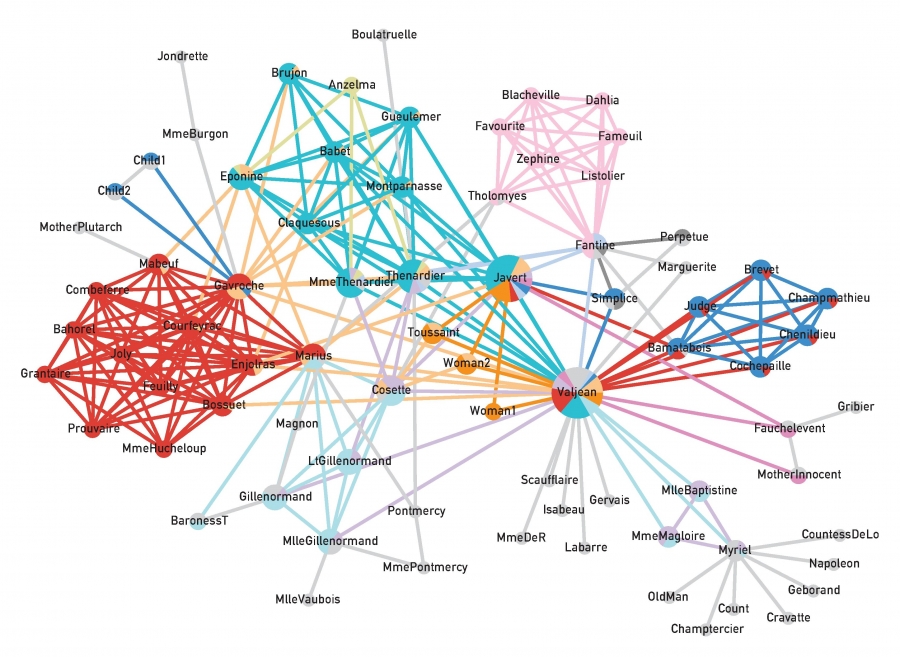

The visualization here shows two networks with 50 nodes. They both have the same average degree, equal to 3. The network at the left follows the Poisson distribution, while the network at the right follows a power-law distribution with exponent 2.1.

The size of each node is drawn to be proportional to its degree. Note that the Poisson network looks uniform: there is not much variability in the degree of different nodes. The power-law network, on the other hand, is very heterogeneous in that respect: some nodes are completely disconnected, many nodes have a degree of only 1, while 2 or 3 nodes have a much higher degree than the average. It is those nodes that we refer to as hubs.

Power-law Degree Distribution

A “power-law network” has a degree distribution that is defined by the following equation:

\[p_k = ck^{-\alpha}\]

In other words, the probability that the degree of a node is equal to a positive integer $k$ is proportional to $k^{-\alpha}$, where $\alpha > 0$.

The proportionality coefficient c is calculated so that the sum of all degree probabilities is equal to one for a given $\alpha$ and a given minimum degree $k_{\text{min}}$ (the minimum degree may not always be 1).

\[\sum_{k=k_{\text{min}}}^\infty p_k = 1\]

The calculation of c can be simplified if we approximate the discrete degree distribution with a continuous distribution (the integral converges only if $\alpha > 1$):

\[\begin{aligned} \int_{k_{min}}^{\infty} p_k \, dk = c\int_{k_{min}}^{\infty} k^{-\alpha} \, dk = - \frac{c}{\alpha-1} k^{-(\alpha-1)} \bigg|_{k_{min}}^{\infty} = \frac{c}{\alpha-1} k_{min}^{-(\alpha-1)} = 1 \end{aligned}\]

which gives:

\[c = \left(\alpha-1\right) \, {k_{min}^{\alpha-1}}\]

So, the complete equation for a power-law degree distribution is:

\[p_k= \frac{\alpha-1}{k_{min}} \, \bigg(\frac{k}{k_{min}}\bigg)^{-\alpha}\]

The Complementary Cumulative Distribution Function (C-CDF) is:

\[P[\text{degree} \geq k] = \bigg(\frac{k}{k_{min}}\bigg)^{-(\alpha-1)}\]

Note that the exponent of the C-CDF is $\alpha-1$, instead of $\alpha$. So, if the probability that a node has degree $k$ decays with a power-law exponent of 3, the probability that we see nodes with degree at least $k$ decays with an exponent of 2.
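The C-CDF also gives a simple way to generate power-law distributed values: if $u$ is uniform in (0,1], setting the C-CDF equal to $u$ and solving for k gives $k = k_{min} \cdot u^{-1/(\alpha-1)}$. The sketch below uses this inverse-transform idea under the continuous approximation, so the samples are real numbers rather than integer degrees; the function names and the `seed` parameter are illustrative assumptions.

```python
import random


def power_law_ccdf(k, alpha, k_min=1.0):
    """P[degree >= k] under the continuous approximation."""
    return (k / k_min) ** (-(alpha - 1.0))


def sample_power_law(alpha, k_min=1.0, size=1, seed=0):
    """Draw continuous power-law samples by inverting the C-CDF."""
    rng = random.Random(seed)
    exponent = -1.0 / (alpha - 1.0)
    # rng.random() lies in [0, 1), so 1 - rng.random() lies in (0, 1].
    return [k_min * (1.0 - rng.random()) ** exponent for _ in range(size)]


if __name__ == "__main__":
    samples = sample_power_law(alpha=3.0, k_min=1.0, size=200_000, seed=42)
    for k in (2, 10, 50):
        empirical = sum(s >= k for s in samples) / len(samples)
        print(f"k={k}: empirical {empirical:.4f}, formula {power_law_ccdf(k, 3.0):.4f}")
```

With exponent 3, the fraction of samples at or above k should drop roughly as $k^{-2}$, which matches the remark about the C-CDF exponent being $\alpha-1$.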

For directed networks, we can have that the in-degree or the out-degree or both follow a power-law distribution (with potentially different exponents).

Food for Thought

Prompt 1: Repeat the derivations given here in more detail.

Prompt 2: Can you think of a network with n nodes in which all nodes have about the same in-degree but the out-degrees are highly skewed?

The Role of the Exponent of a Power-law Distribution

What is the mean and the variance of a power-law degree distribution?

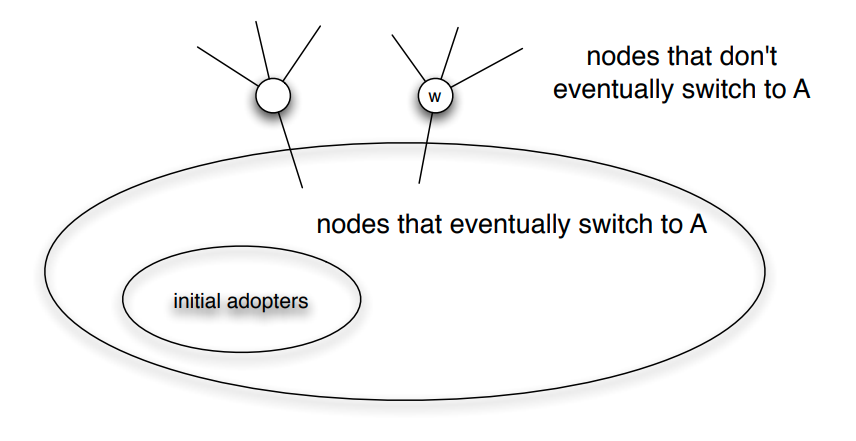

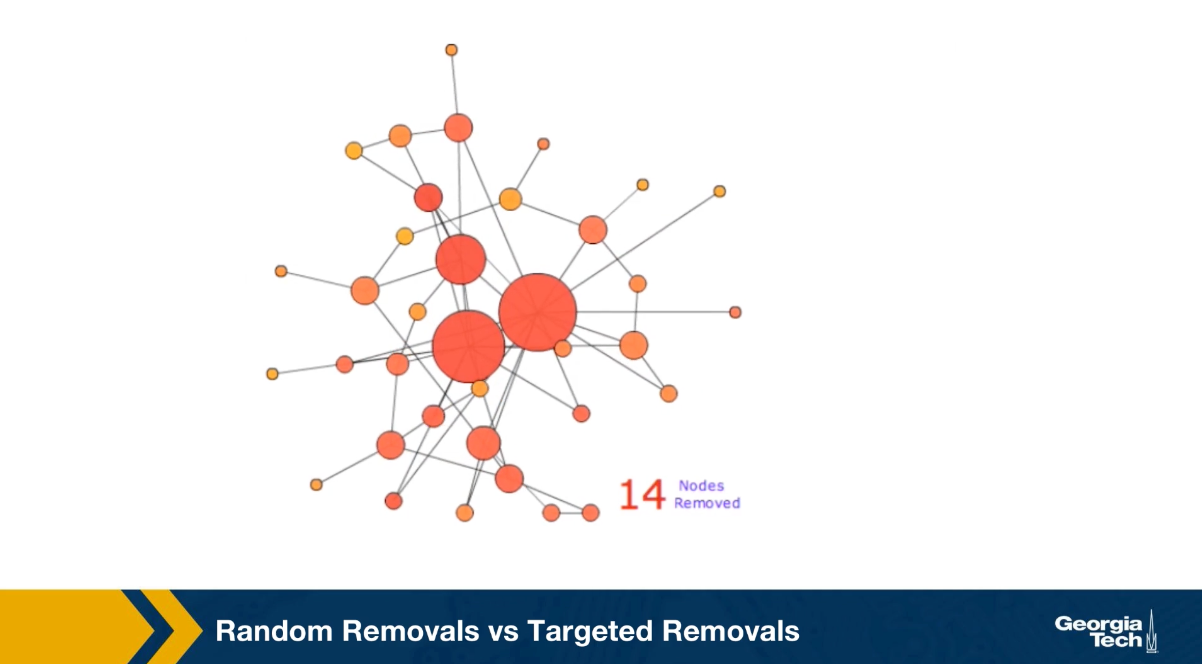

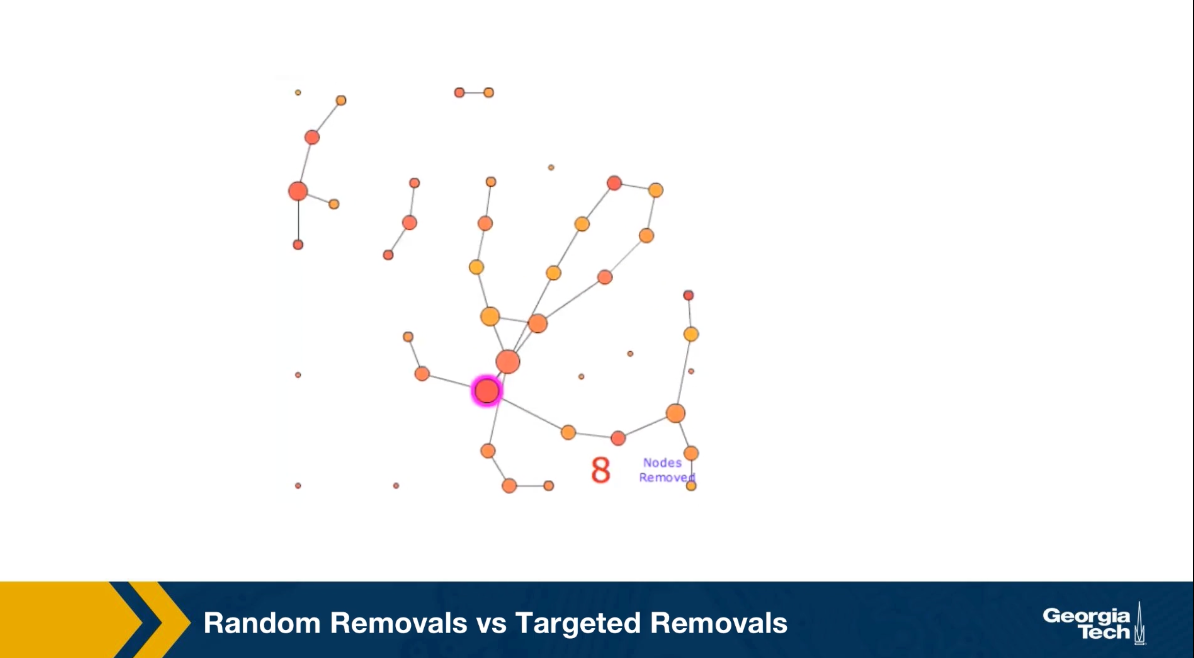

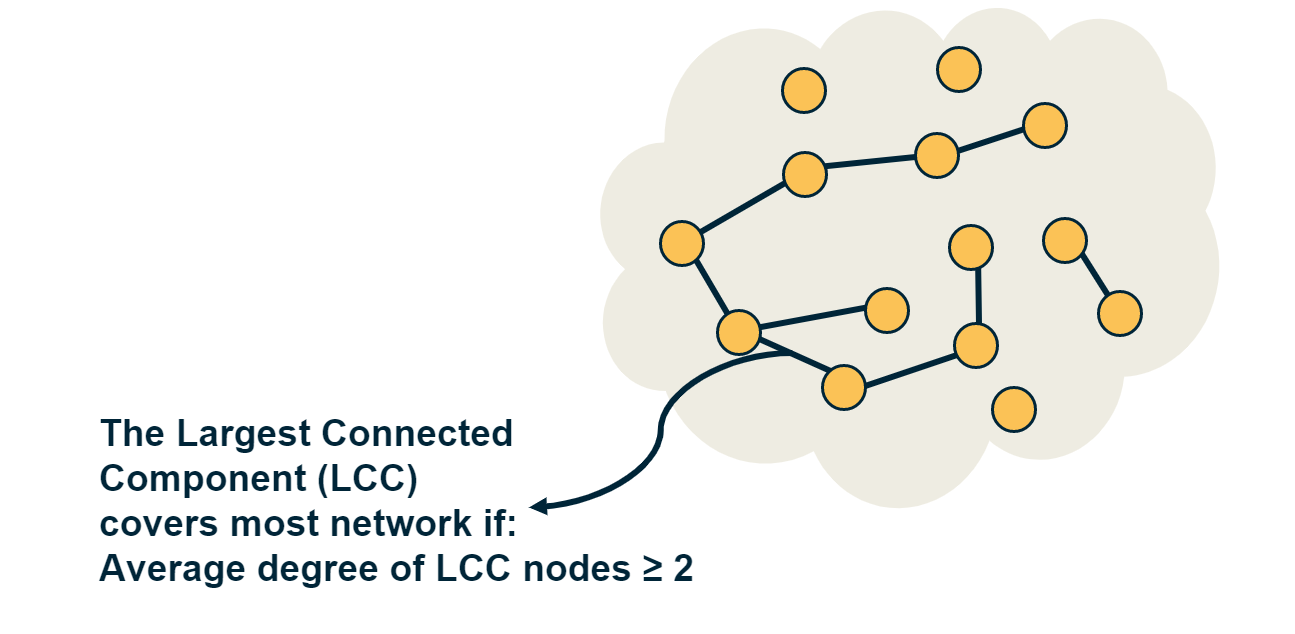

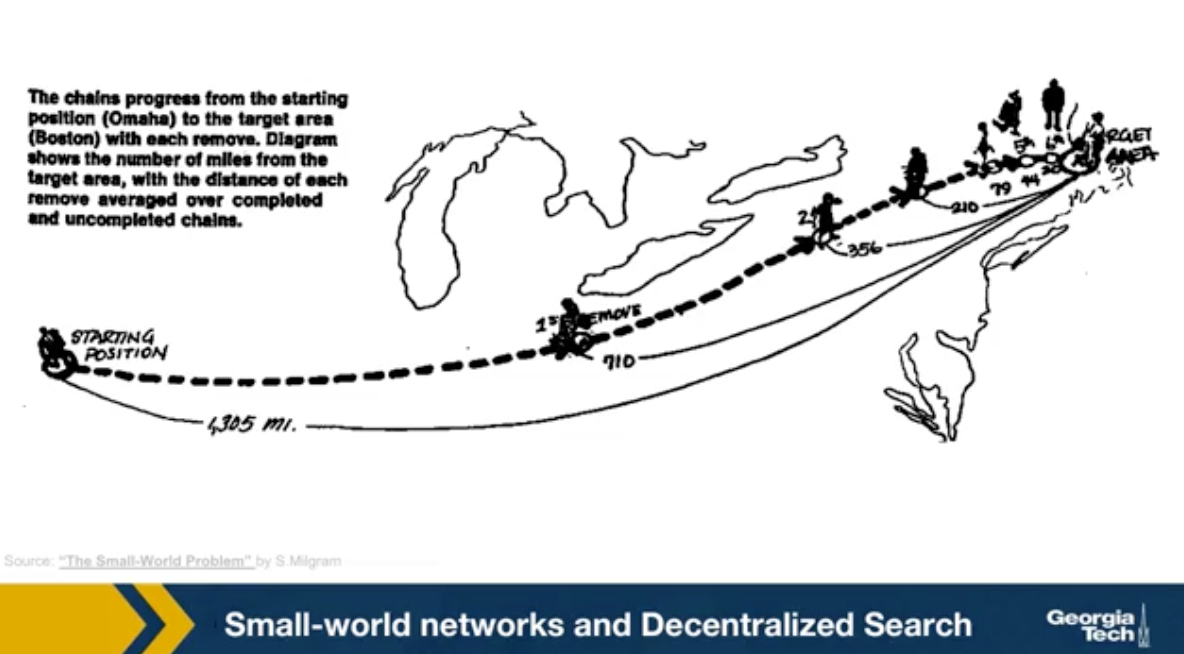

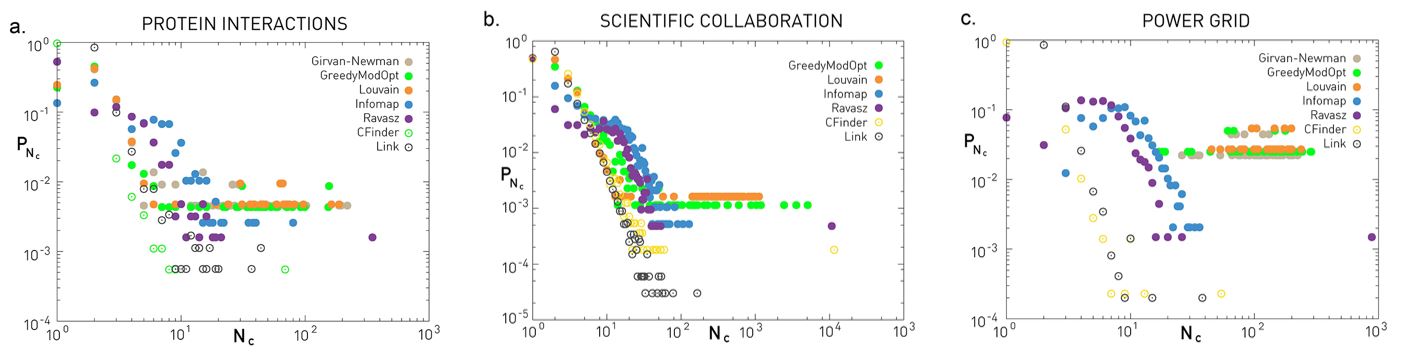

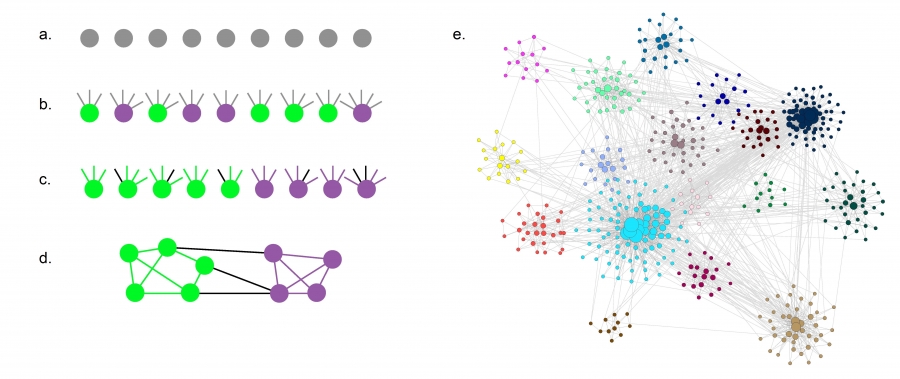

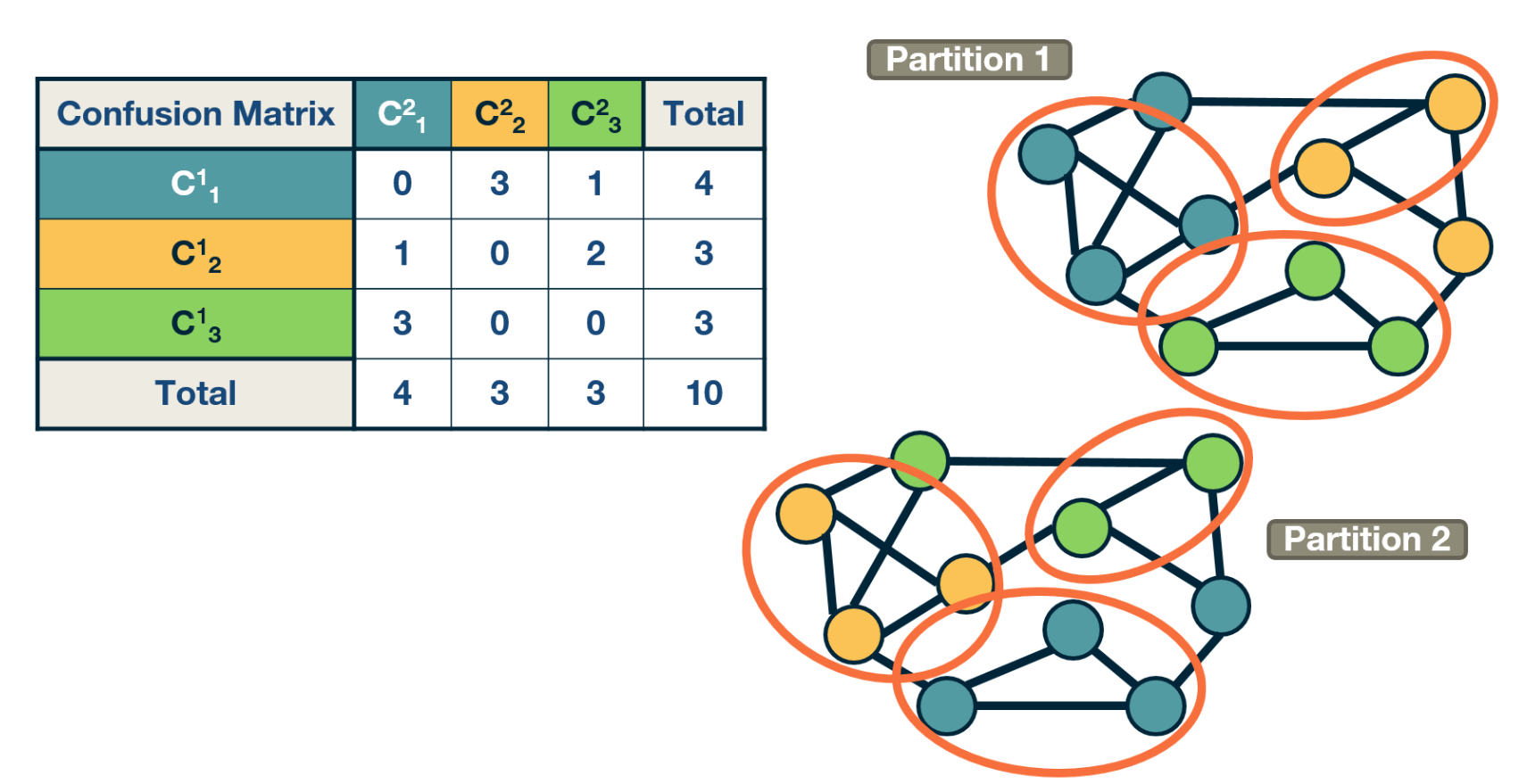

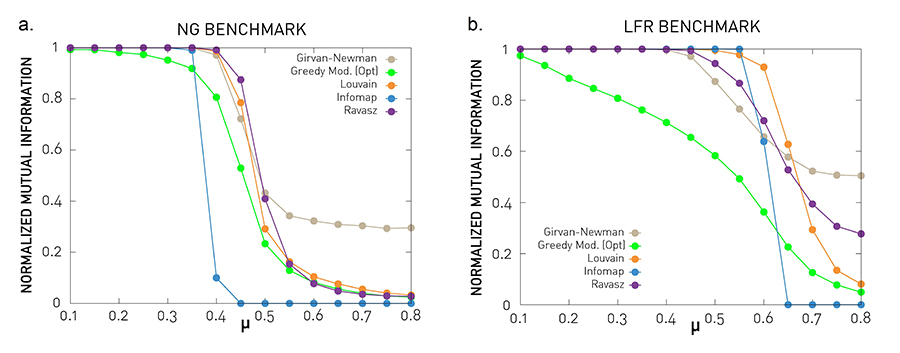

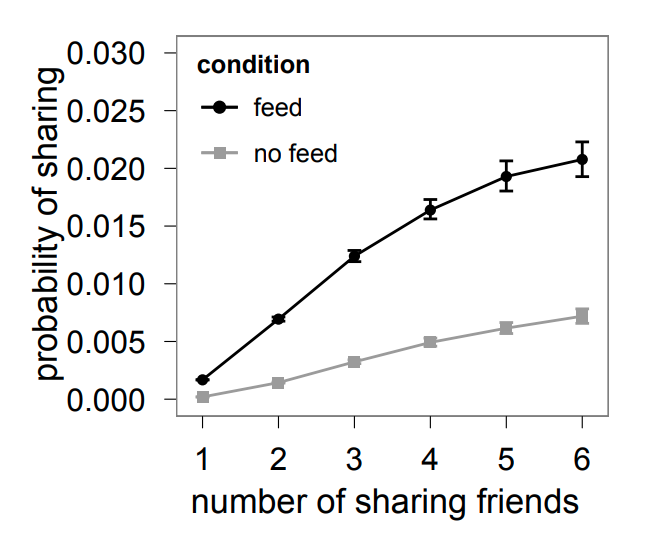

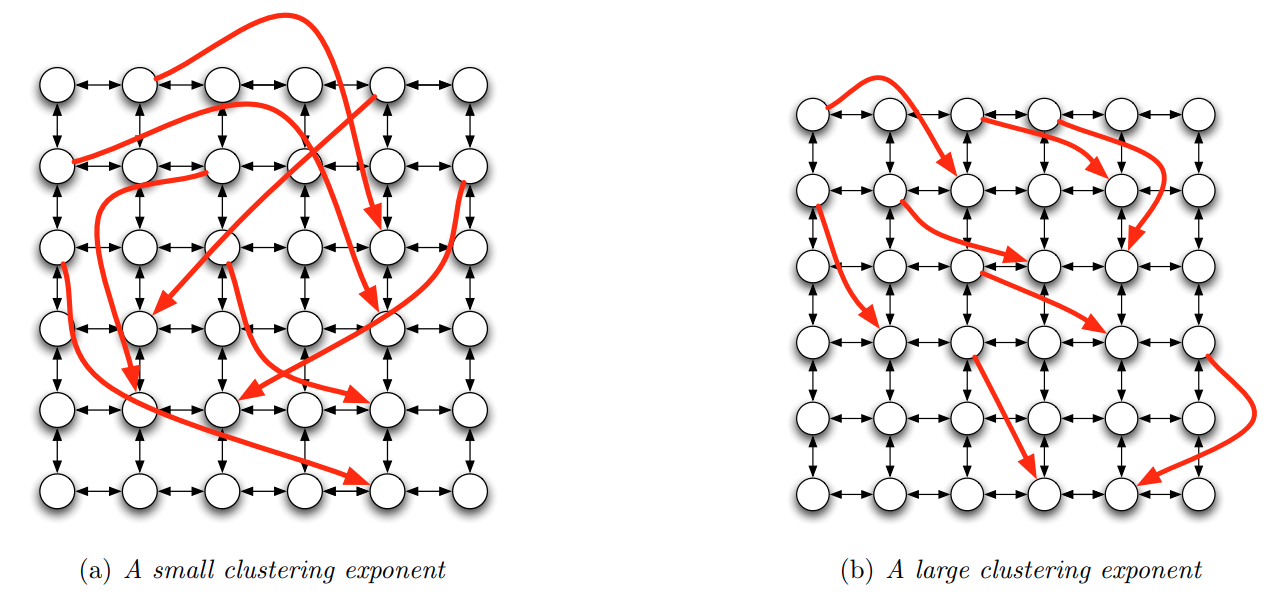

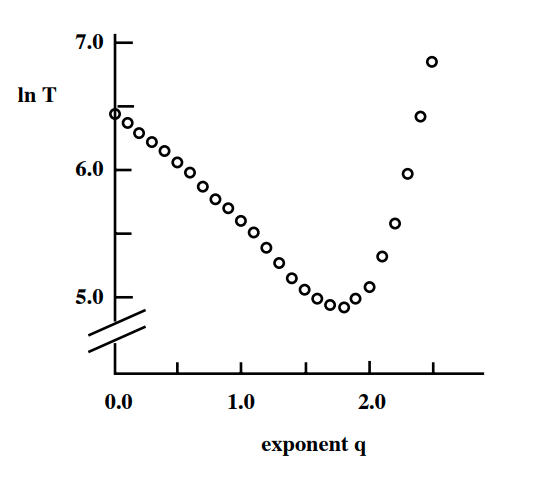

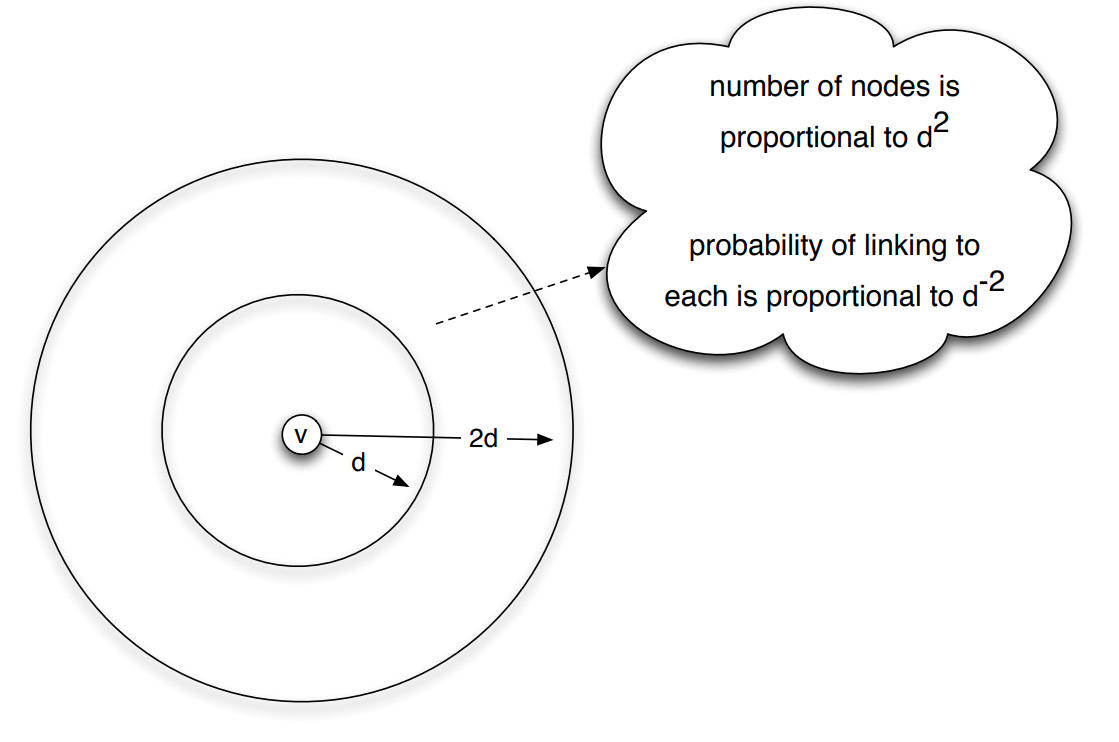

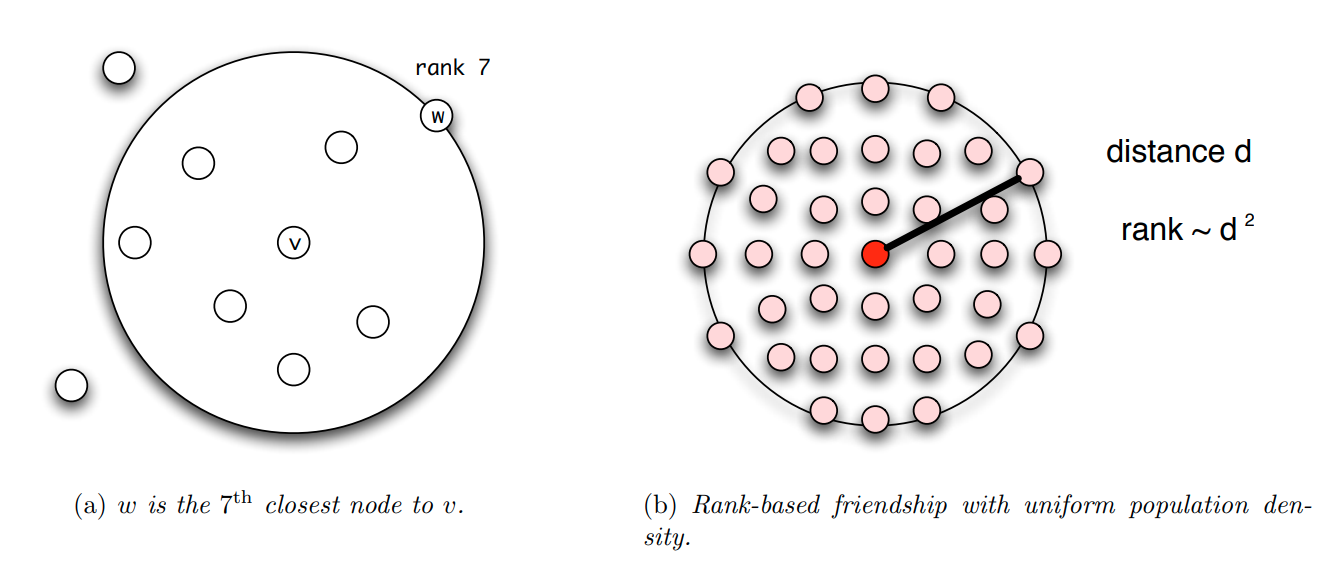

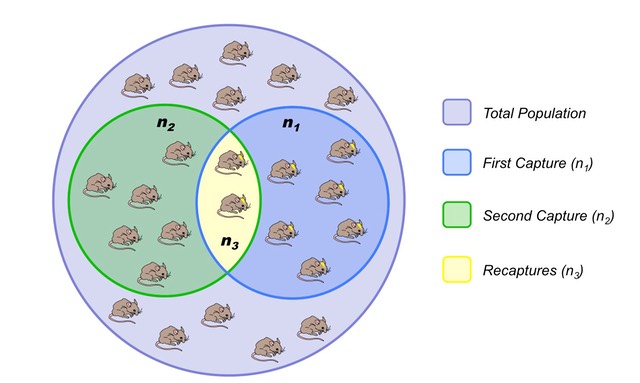

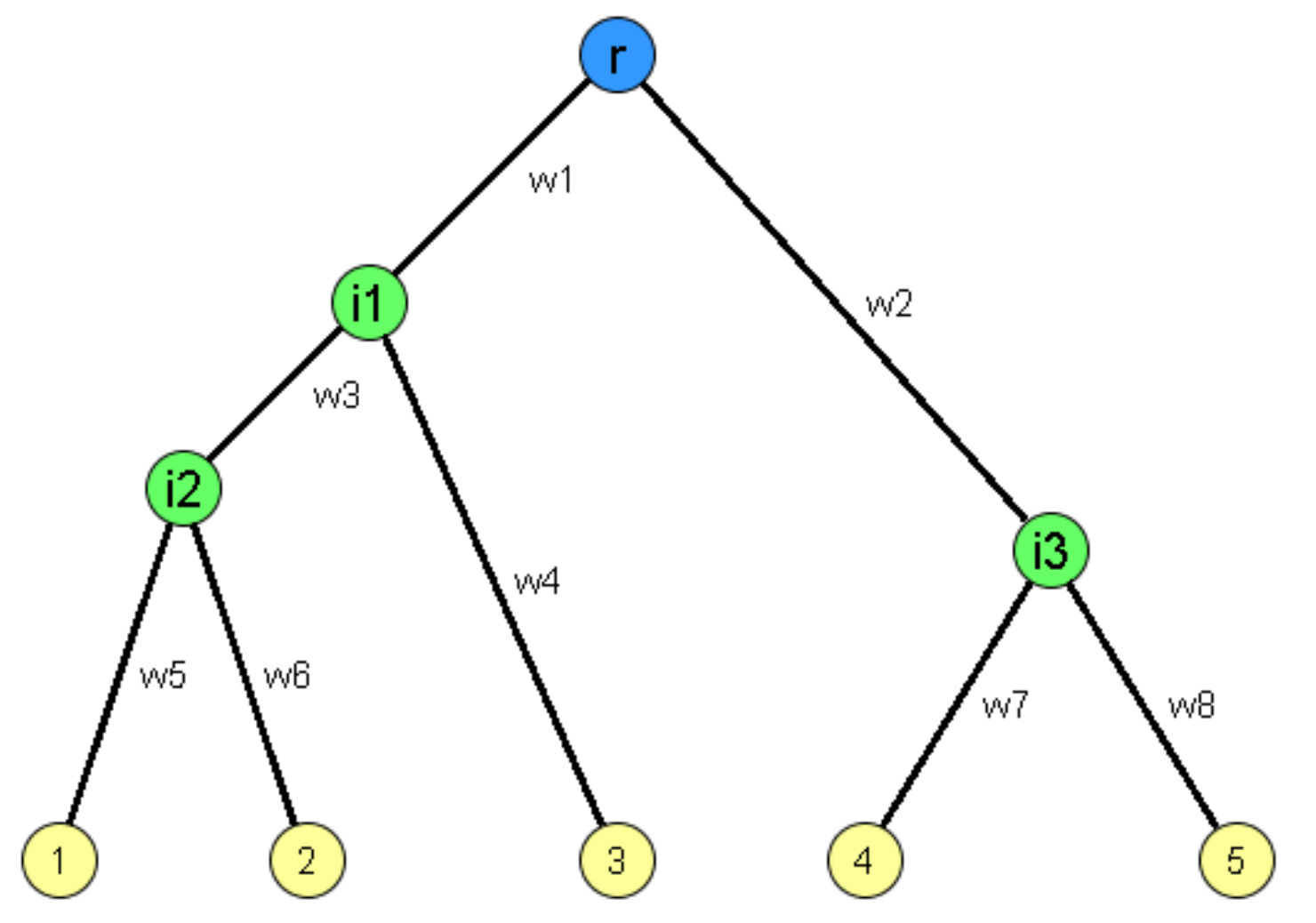

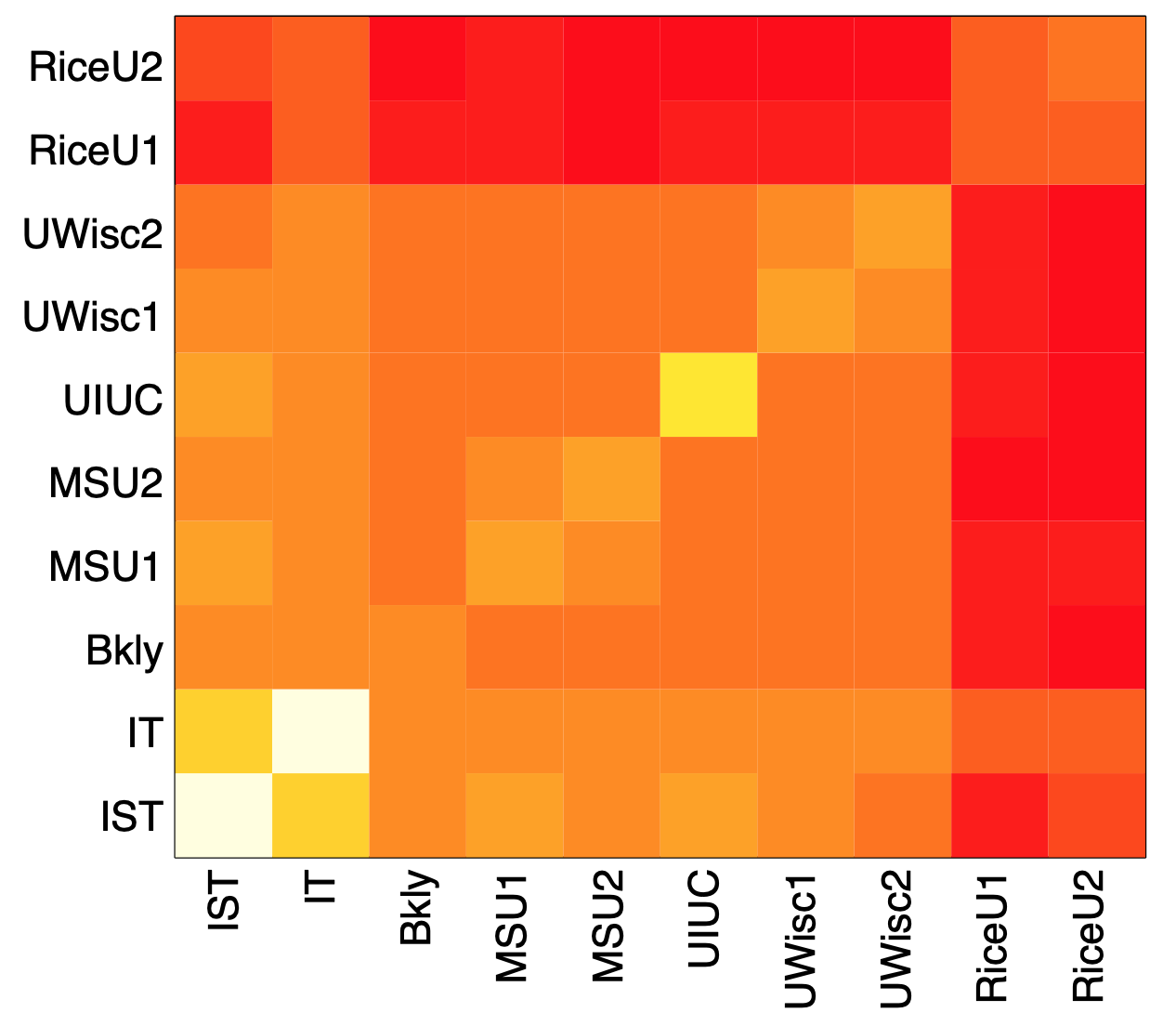

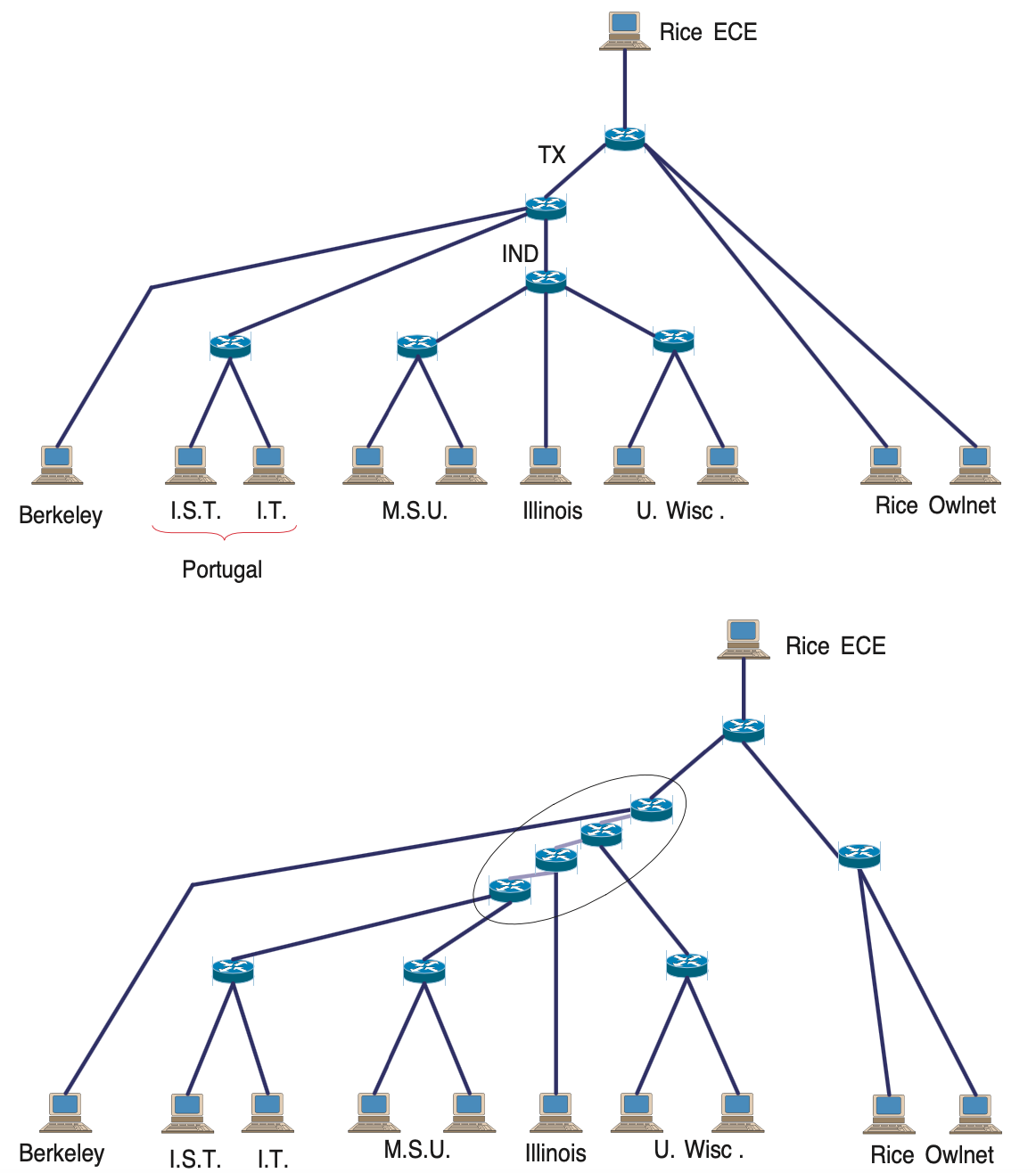

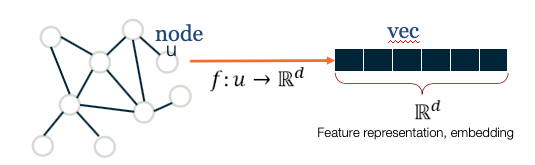

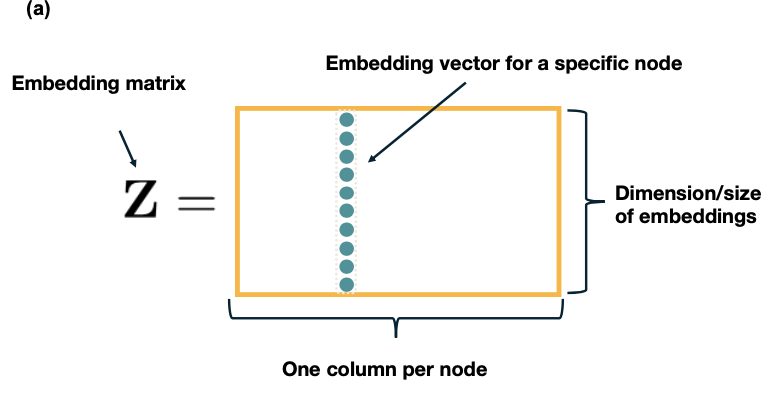

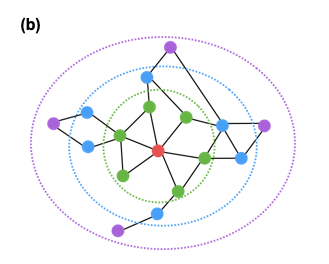

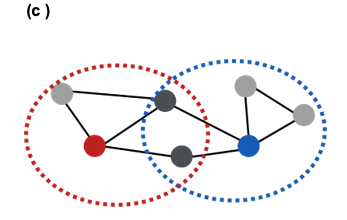

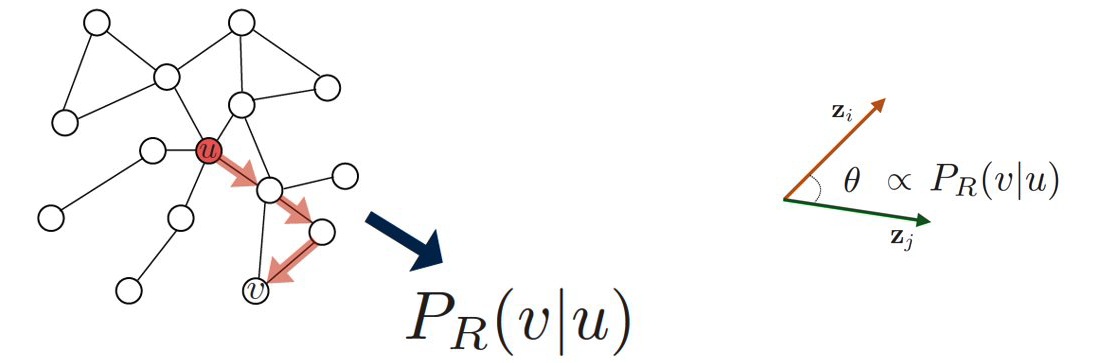

More generally we can ask: what is the m’th statistical moment of a power-law degree distribution? It is defined as: