CS7650 OMSCS - Natural Language Processing Notes

The content of these notes is from Georgia Tech OMSCS 7650: Natural Language Processing by Prof. Mark Riedl. They kindly allowed this to be shared publicly; please use them responsibly!

Module 1: Introduction to NLP

Introduction to NLP

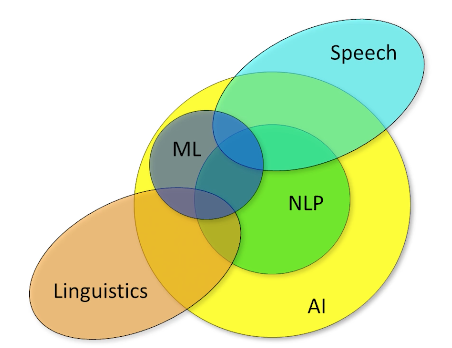

What is Natural Language Processing?

- The set of methods for making human language accessible to computers

- The analysis or understanding (to some degree) of what a text means

- Generation of fluent, meaningful, context-appropriate text

- The acquisition of the above from knowledge, and increasingly, data

So NLP is a set of algorithms, but what then is natural language?

- A structured system of communication (sharing information) that has evolved naturally in humans through use and repetition, without conscious planning or premeditation.

- Humans discovered that sharing information could be advantageous

- Initially through making sounds to which a mutually understood meaning was ascribed

- Later: markings

- Language is thus an agreed-upon protocol for moving information from one person’s mind to another person’s mind

Now, we have evolved to more than just one language.

- Different groups coalesced around different systems, English, Spanish, Sign Language etc.

- Each has its own structure that we humans learn

- Each has “rules” though often not well-defined or enforced

- Syntax: The rules for composing language

- Semantics: The meaning of the composition

Characteristics of languages

- Lossy but efficient (information can be omitted or contain errors, but the message is still conveyed)

- As an example “For Sale: baby shoes, never worn”

Non-Natural languages

- Languages that are deliberately planned

- Generally have well-defined rules of compositions

- E.g. Python, JavaScript, C++

- Syntax structured so as to eliminate any chance of ambiguity

For this course we are not going to focus on non-natural languages.

What does it mean to say “I understand” or “know” a language?

- I know English

- I can produce English and effect change on others

- I can receive English and act reasonably in response

- For example, does a 3-year-old know English? (To an extent)

- Does a dictionary know English? (It encompasses it but does not operationalize it)

- What do we tell a computer so it understands English as well as a 3-year-old?

- As well as an adult?

- What about a computer? How much do they need to know or understand?

Overall:

- We do not really have a definition of what it means to “understand” a language

- We have powerful algorithms that can imitate human language production and understanding

- NLP is still very much an open research problem

- Next, we will look at some of the applications of NLP and why human languages are so tricky for computers

Why Do We Want Computers To Understand Languages?

This is about human-computer interaction.

- We want to effect change on computers without having to learn a specialized language.

- We want to enable computers to effect change on us without us having to learn a specialized language

- Error messages

- Explanations

A large portion of all recorded data is in natural language, produced by humans for consumption by humans. Natural language is largely inaccessible to computational systems; making it accessible opens up a number of applications:

- Detect patterns in text on social media

- Knowledge discovery

- Dialogue

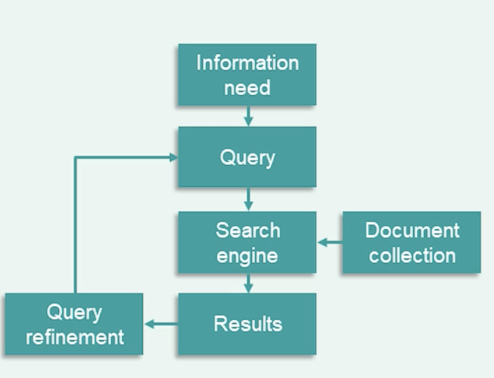

- Document Retrieval

- Writing assistance

- Prediction

- Translation

Why is NLP hard?

- Multiple meanings: bank, mean, ripped (e.g. is "mean" the average, or is someone being mean?)

- Domain specific meanings: Latex (Latex allergy, writing papers)

- Assemblages

- Word assemblages: take out, drive through

- Portmanteaus: brunch (breakfast + lunch), scromit (screaming while vomiting)

- Metaphor (burn the midnight oil, I don’t give a rat’s ass)

- Non-literal (Can you pass the salt)

- New words (covid, doomscrolling, truthiness, amirite, yeet, vibe shift)

Other reasons why NLP is hard:

- Syntax is the rules of composition

- Grammar: The recognition that parts of a sentence have different roles in understanding

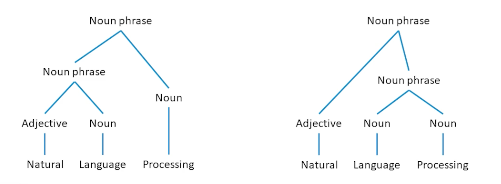

- Syntactic ambiguity

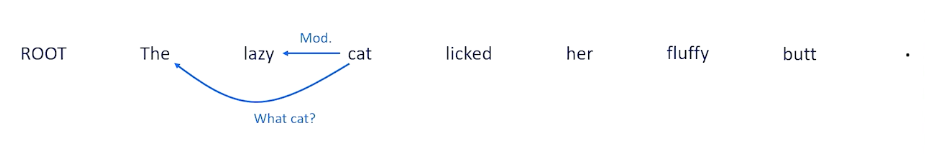

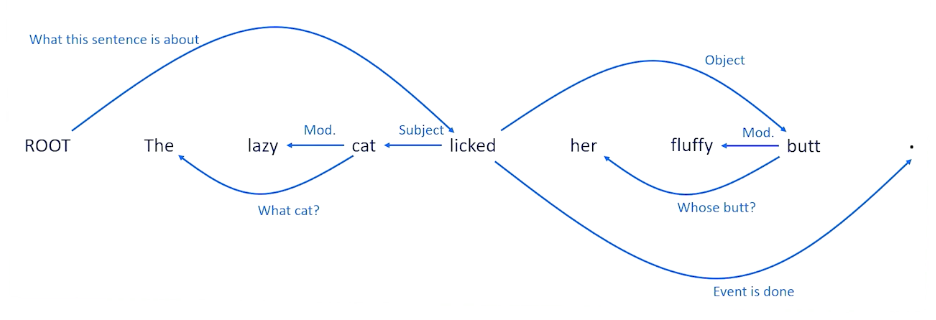

The left tree means the processing of natural languages, which is exactly what we want computers to be able to do. Now take the exact same phrase, but put “language” and “processing” together into a noun phrase and attach the adjective to that. Now we have language processing that is natural, which is what humans do and the exact opposite of what we want computers to do.

Other examples:

- We saw the woman with the sandwich wrapped in paper

- What was wrapped in paper? The sandwich or woman?

- There is a bird in the cage that likes to talk

- Miners refuse to work after death.

Probability and statistics

Brief relationship:

There are two important mathematical and algorithmic tools. The first is probability and statistics. Why?

Take, for example, these two statements from movie reviews. Are they positive or negative?

- This film doesn’t care about cleverness, wit, or any other kind of intelligent humor

- There are slow and repetitive parts, but it has just enough spice to keep it interesting.

We can recast this as a probability statement; what is the probability that the review is positive?

- $Prob(positive\_review \lvert w_1, w_2, …, w_n)$

- $Prob(positive\_review \lvert \text{“there”, “are”, “slow”, “and”, “repetitive”, “parts”})$

- What is the probability that we see the word “repetitive” in a positive review?

We can do the same about text generation, e.g

- Once upon a time _____

- $P(w_n \lvert w_1, w_2, …, w_{n-1})$

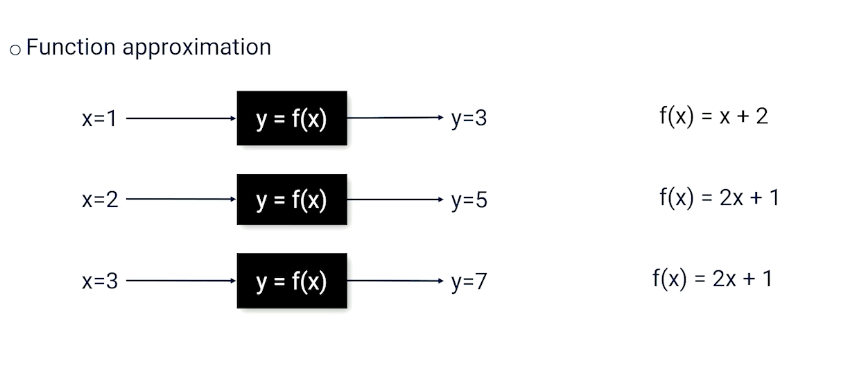

The other tool we have is deep learning.

- A significant portion of the state of the art in NLP is achieved using a technique called deep learning

- A class of machine learning algorithms that use chains of differentiable core modules to learn from data a function that maps inputs to outputs $f(x) \rightarrow y$

- Sentiment classification

- $x$ = input words

- $y$ = positive, negative labels

- Text generation, $x$ is input words, $y$ is successor word.

- Words are everywhere: dialogue, document analysis, document retrieval, etc. Computers need to be able to process natural language.

- Human languages, which are easy for us, make communication non-trivial for computers

Module 2: Foundations

Random Variables

A placeholder for something we care about.

- Random variables can hold particular values

- BEARD <True, False>

- Car <Sedan, coupe,suv, pickup, van>

- Temperature <-100, … + 100>

- Word <a,an, … zoology>

A random variable comes with a probability distribution over its values, e.g.

- P(Beard) = <0.6, 0.4>

Likewise for temperature, which can be continuous, so its distribution will be a curve. For words the distribution will be very sparse.

In NLP, we ask: what is the probability that I, or my AI system, am in a world in which some variable $X$ has a particular value?

Note the notation: $P(X=x) = 0.6$ is the probability that $X$ takes the value $x$, whereas $P(X)$ denotes the whole distribution.

Complex Queries

- Marginal: $P(A) = \sum_b P(A, B=b) = \sum_b P(A \lvert B=b) P(B=b)$

- Union: $P(A \cup B) = P(A) + P(B) - P(A \cap B)$

- Conditional: $P(A \lvert B) =\frac{P(A \cap B)}{P(B)}$

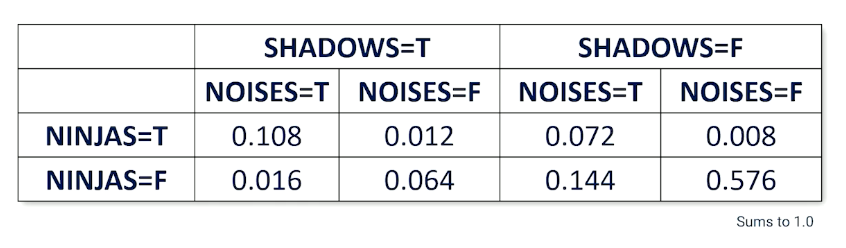

Full Joint distribution

When you have more than one random variable, the full joint distribution describes the possible worlds.

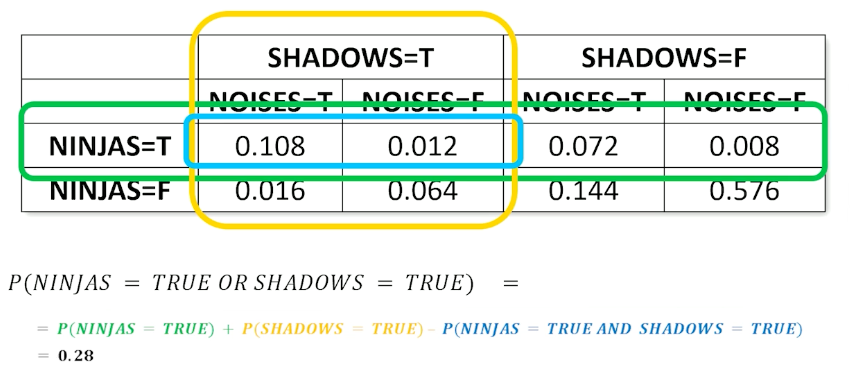

- P(NINJAS,SHADOWS, NOISES) is a full joint distribution

So from the table, about 0.576 of the time there are no ninjas, no shadows, and no noises, but there is also a probability of 0.008 that there is a ninja making no noise and casting no shadows. There are a total of $2^3=8$ worlds, and note that the probabilities must sum to one.

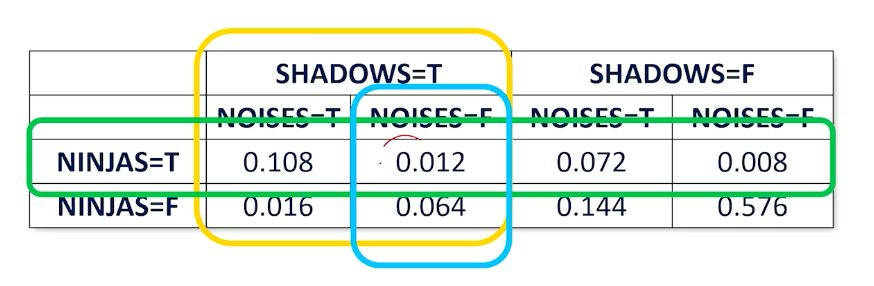

Once you have this table, it is very easy to compute any distribution by looking values up. If you are only interested in a subset of the variables, e.g. ninja and shadow, you can obtain it by marginalizing, i.e. summing over the variables you do not care about.

For example the green box is the sum where ninja is present.

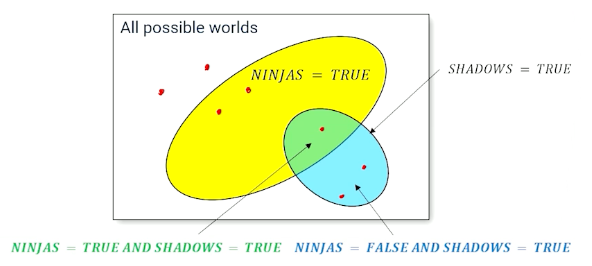

Likewise for another case where you want to know where ninjas or shadows exist:

Again, random variables are things that we care about and want our AI systems to account for. We don’t know the value of the random variables but we know the probability of seeing each possible value. We can compute the probability of any combination of values for a set of random variables (the probability of being in different possible worlds). Sometimes we know how some probabilities will change in response to other variables; these are called conditional probabilities.

Conditional Probabilities

We are more likely to care about the probability of value of a random variable GIVEN something else is believed to be true.

- What is the probability of ninjas given I see shadows?

For example, $P(ninja \lvert shadows) = \frac{P(ninja \cap shadows)}{P(shadows)}$

Generally, we refer to such things as $P(Y \lvert E = e)$ where $e$ denotes the evidence while $Y$ is the query.

\[\begin{aligned} P(Y=y \lvert E=e) &= \frac{P(Y=y \cap E=e)}{P(E=e)} \\ &= \alpha P(Y=y, E=e) \end{aligned}\]

In the literature, $\frac{1}{P(E=e)}$ is called the normalization constant $\alpha$. It can be obtained by marginalization: $P(E=e) = \sum_y P(Y=y, E=e)$.

Hidden Variables

A conditional probability query doesn’t always reference all variables in the full joint distribution.

For example, consider $P(Y=y \lvert E=e)$. If the full joint distribution has more than these two variables, the query only involves part of it. We can express it in terms of the full joint distribution as follows:

\[P(Y=y \lvert E=e) = \alpha \sum_{x \in H} P(Y=y, E=e, H=x)\]

In other words, iterate over all possible values of the hidden variable $H$.
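The summation over a hidden variable can be sketched in a few lines of Python. This is only an illustration: the table below reuses the entries 0.576 and 0.008 mentioned in these notes, while every other number is a hypothetical placeholder chosen so that the table sums to one, and the function name `p_ninja_given_shadow` is made up for this example.

```python
# Full joint distribution P(NINJA, SHADOW, NOISE), keyed by (ninja, shadow, noise).
# Only 0.576 and 0.008 are taken from the notes; the other entries are
# hypothetical placeholders chosen so that the table sums to 1.
joint = {
    (True, True, True): 0.108,
    (True, True, False): 0.012,
    (True, False, True): 0.072,
    (True, False, False): 0.008,
    (False, True, True): 0.016,
    (False, True, False): 0.064,
    (False, False, True): 0.144,
    (False, False, False): 0.576,
}


def p_ninja_given_shadow(shadow_value):
    """Return P(NINJA | SHADOW = shadow_value), summing out the hidden NOISE."""
    # For each value of the query variable, sum the joint over the hidden variable.
    unnormalized = {
        ninja: sum(joint[(ninja, shadow_value, noise)] for noise in (True, False))
        for ninja in (True, False)
    }
    # alpha = 1 / P(SHADOW = shadow_value), obtained by marginalization.
    alpha = 1.0 / sum(unnormalized.values())
    return {ninja: alpha * score for ninja, score in unnormalized.items()}


posterior = p_ninja_given_shadow(True)
print(posterior)
```

With these placeholder numbers the evidence (a shadow) raises the probability of a ninja well above its prior, and the two posterior entries sum to one because of the normalization by $\alpha$.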

Note that you can do it multiple times:

\[P(Y=y \lvert E=e) = \alpha \sum_{x_2 \in H_2} \sum_{x_1 \in H_1} P(Y=y, E=e, H_1 = x_1, H_2=x_2)\]

Variable Independence And Product Rule

This serves as an introduction to Bayesian networks.

Two random variables A and B are independent if any of the following are true:

- $P(A \lvert B) = P(A)$

- $P(B \lvert A) = P(B)$

- $P(A,B) = P(A)P(B)$

The product rule allows us to rewrite any joint distribution between variables in terms of a conditional probability.

\[\begin{aligned} P(A=a,B=b) &= P(A=a \lvert B=b) P(B=b) \\ &= P(B=b \lvert A = a) P(A=a) \\ \end{aligned}\]

Equating the two right-hand sides and dividing by $P(B=b)$ gives us Bayes rule.

\[\begin{aligned} P(A =a \lvert B=b) &= \frac{P(B=b \lvert A=a)P(A=a)}{P(B=b)} \\ &= \alpha P(B=b \lvert A=a)P(A=a) \end{aligned}\]

Bayes Rule With Multiple Evidence Variables

What if I have multiple evidence variables $e_1, e_2$?

\[\begin{aligned} P(Y=y \lvert E_1=e_1, E_2 = e_2) &= \alpha P(E_2 = e_2 \lvert Y=y,E_1=e_1)P(Y=y , E_1 = e_1) \\ &= \alpha P(E_2 = e_2 \lvert Y=y,E_1=e_1) P(E_1 = e_1 \lvert Y=y) P(Y=y) \end{aligned}\]

The first step uses Bayes rule (with the denominator absorbed into $\alpha$), while the second step uses the product rule.

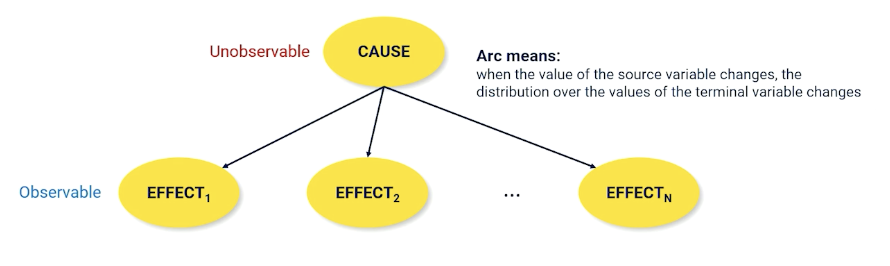

Bayes is particularly useful because we are going to run into lots and lots of situations out in the real world where we often have something called a cause and effect between random variables.

A lot of problems have the form $P(CAUSE \lvert EFFECT)$

- The cause is usually something that is unobservable, so I want to know what has caused something that I cannot directly observe given that it has some effect on the world that I can observe.

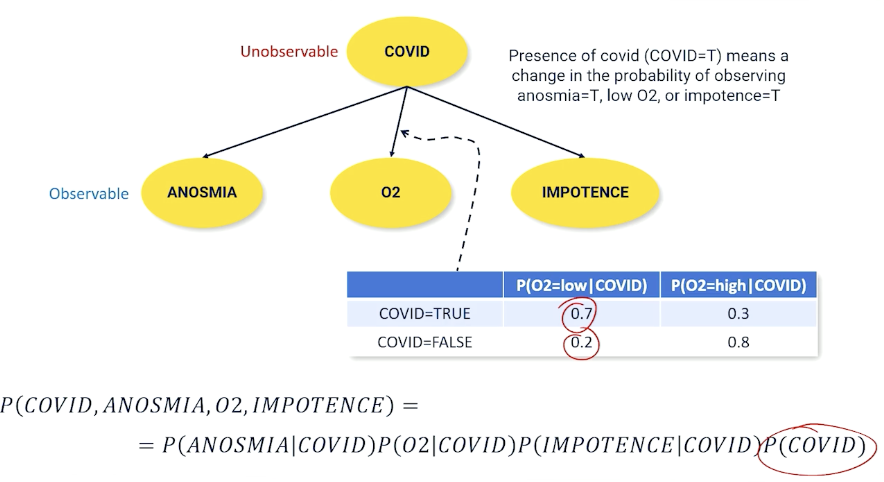

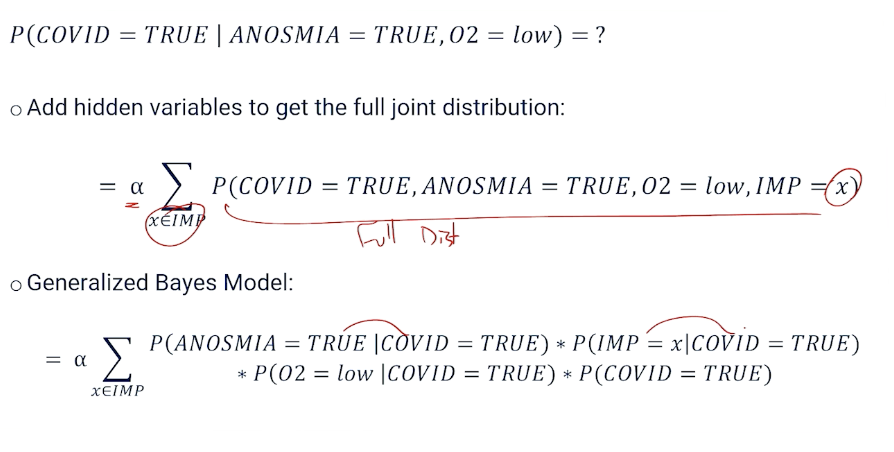

To give an example from medical diagnosis, consider $P(COVID \lvert ANOSMIA)$: what is the probability that someone has covid given one of the effects of covid? Anosmia is the loss of smell. To rephrase: can we estimate whether someone has covid without testing, based on something that is easy to observe?

\[P(Covid = True \lvert Anosmia = True) = \alpha P(Anosmia = True \lvert Covid = True) P(Covid = True)\]

The interesting bit is when the effects are independent; then we arrive at the generalized Bayes model:

\[P(Cause \lvert E_1,...,E_n) = \alpha P(Cause)P(E_1 \lvert Cause)P(E_2 \lvert Cause) \cdots P(E_n \lvert Cause)\]

This relies on the naive Bayes assumption: the effect variables are independent of one another given the cause, or their causal influence on each other is small enough to be negligible.
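The generalized model above can be sketched as a short Python function. All numbers here are hypothetical and chosen only for illustration (they are not real medical statistics), the second effect `fever` is an assumption added to show more than one evidence variable, and `posterior_cause` is a made-up name.

```python
# Hypothetical numbers for illustration only; not real medical statistics.
prior = {True: 0.05, False: 0.95}  # P(Covid)

# P(effect = True | Covid = cause) for each observable effect.
likelihood = {
    "anosmia": {True: 0.60, False: 0.02},
    "fever": {True: 0.70, False: 0.10},
}


def posterior_cause(observed):
    """Return P(Covid | observed effects) under the naive Bayes assumption.

    `observed` maps an effect name to the Boolean value that was seen.
    """
    unnormalized = {}
    for cause in (True, False):
        score = prior[cause]  # P(Cause)
        for effect, value in observed.items():
            p_true = likelihood[effect][cause]
            # Multiply in P(E_i = value | Cause) for every observed effect.
            score *= p_true if value else (1.0 - p_true)
        unnormalized[cause] = score
    alpha = 1.0 / sum(unnormalized.values())  # normalization constant
    return {cause: alpha * score for cause, score in unnormalized.items()}


result = posterior_cause({"anosmia": True, "fever": True})
print(result)
```

Even though the prior for the cause is small in this made-up setting, two effects that are much more likely under the cause than without it are enough to push the posterior strongly towards `True`.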

Language Example

Given a target word $W_T$, we want to find the probability that it appears in a sentence made of the words $w_1, …, w_n$:

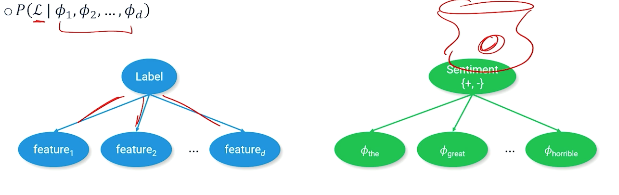

\[P(W_T = 1 \lvert w_1,...,w_n) = \alpha P(W_T=1)\prod_{i=1}^n P(w_i \lvert W_T = 1)\]

Bayesian networks

A Bayesian network is just a way of visualizing the causal relationships between random variables. Recall that the cause is usually unobservable: it is the one we care about but cannot directly observe.

Nodes are connected when they have a conditional (non-independent) relationship. So each of the effect variables is conditioned on the cause, and there are no edges between the effects, denoting that they are independent of each other.

\[P(X_1, X_2, ...) = \prod_{i=1}^N P(X_i \lvert Parents(X_i))\]

- You don’t ever have the full joint distribution, but:

- You must have a conditional probability distribution for each arc in the Bayes net.

Example:

What if we have missing variables? Then we can treat them as hidden variables:

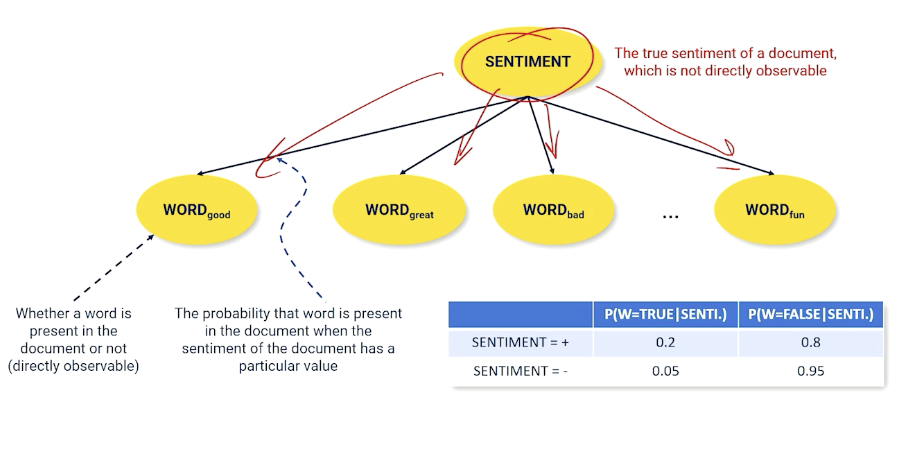

Documents Are Probabilistic Word Emissions

Another example using movie sentiment instead:

Imagine we have a document. It has an underlying sentiment, and what it is doing is spitting out words. If it has a positive sentiment, it is going to spit out more words like good, great, fun, etc., and fewer words like bad, and vice versa.

So whether a word is present in the document, which is directly observable, is conditioned on the underlying unobservable sentiment of that document.

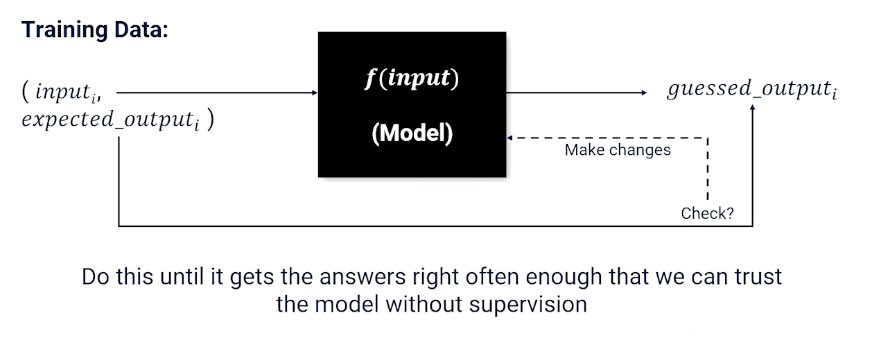

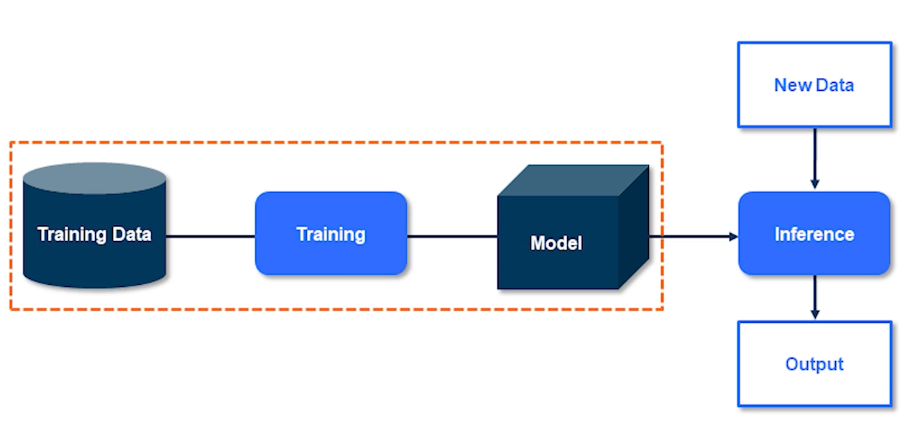

Supervised Learning

Mainly a review of Neural Networks and Deep learning as well as supervised learning.

Supervised learning is to learn a function from a training set of data consisting of pairs of inputs and expected outputs.

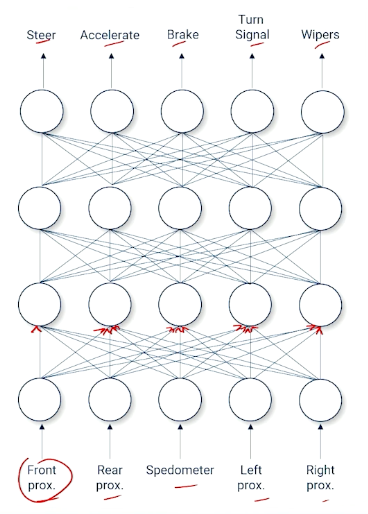

Neural Network And Nodes

Here the nodes represent the gating function and the edges represent weights. The gating function is also known as the activation function, such as:

- sigmoid $\sigma(x) = \frac{1}{1+e^{-x}}$

- Tanh

- ReLU
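For reference, the three activation functions listed above can be written directly with Python's standard library. Only the sigmoid formula appears in these notes; the tanh and ReLU definitions used here are the standard ones.

```python
import math


def sigmoid(x):
    """Squash x into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Squash x into the interval (-1, 1)."""
    return math.tanh(x)


def relu(x):
    """Pass positive values through and clamp negative values to 0."""
    return max(0.0, x)


for value in (-2.0, 0.0, 2.0):
    print(value, sigmoid(value), tanh(value), relu(value))
```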

Gradient Descent

Recall that the weights can be updated by gradient descent, which depends on the loss function; ideally we would reach 0 loss.

Example of loss functions (there are others, but the lecturer did not mention them):

- MSE : $(y-\hat{y})^2$

So we can see how the weights affect the loss, and use the gradient to update the weights to minimize the loss. The steps are skipped, but just know that the gradient of each weight is a function of the incoming activation values and the weight applied to each incoming activation value, applied recursively.

\[\begin{aligned} \frac{\partial L(target_k, output_k)}{\partial w} &= \frac{\partial L(target_k, \sigma (in_k))}{\partial w} \\ &= \frac{\partial L(target_k, \sigma (\sum_j w_{jk}out_j))}{\partial w} \\ &= \frac{\partial (target_k - \sigma (\sum_j w_{jk}out_j))^2}{\partial w} \end{aligned}\]

(Feel free to study backpropagation yourself.)

In summary:

- Neural Networks are function approximators

- Supervised Learning: given examples and expected responses

- Need to know how to measure the error/loss of a learning system

- The weights of neural network combined with loss create a landscape

- Gradient descent: Move each weight such that overall loss is going downhill

- Each weight adjustment can be computed separately allowing for parallelization using GPU(s)
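As a small illustration of the summary above, here is a sketch of gradient descent on a single sigmoid neuron with the squared-error loss. The inputs, target, starting weights, learning rate, and function names are all made up for the example; a real network would repeat this layer by layer via backpropagation.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def loss(inputs, target, weights):
    """Squared error between the target and the neuron's output."""
    output = sigmoid(sum(w * x for w, x in zip(weights, inputs)))
    return (target - output) ** 2


def train_neuron(inputs, target, weights, learning_rate=0.5, steps=200):
    """Fit one sigmoid neuron to a single (inputs, target) pair."""
    weights = list(weights)
    for _ in range(steps):
        total_in = sum(w * x for w, x in zip(weights, inputs))
        output = sigmoid(total_in)
        # Chain rule: dL/dw_j = dL/d_output * d_output/d_in * d_in/dw_j
        #                     = -2 (target - output) * output (1 - output) * x_j
        delta = -2.0 * (target - output) * output * (1.0 - output)
        # Move every weight a small step downhill on the loss surface.
        weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
    return weights


start = [0.1, -0.2, 0.05]
example_inputs, example_target = [1.0, 0.5, -1.5], 1.0
trained = train_neuron(example_inputs, example_target, start)
print(loss(example_inputs, example_target, start))
print(loss(example_inputs, example_target, trained))
```

Note that each weight's update only needs `delta` and its own input value, which is why the per-weight adjustments can be computed independently.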

Modern Deep Learning Libraries

There are several libraries, such as Keras, TensorFlow, and PyTorch. This course is going to focus on PyTorch.

- A neural network is a collection of “modules”

- Each module is a layer that stores weights and activations (if any)

- Each module knows how to update its own weights (if any)

- Each module keeps track of which modules pass it information (called a chain)

- Each module knows how to distribute loss to child modules

- Pytorch supports parallelization on GPUs

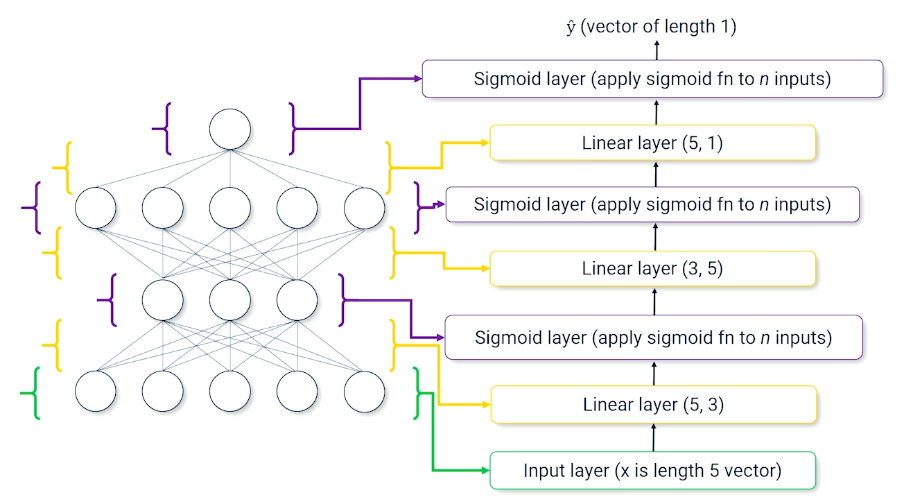

Example of a neural network broken down into layers:

Pytorch Model Example

Note that `__init__` defines the layers (as in the image above), and the `forward` method defines how the layers are linked.


```python
import torch
from torch import nn


class BatClassifier(nn.Module):
    def __init__(self):
        super().__init__()  # must run before sub-modules are registered
        self.linear1 = nn.Linear(5, 3)
        self.sig2 = nn.Sigmoid()
        self.linear3 = nn.Linear(3, 5)
        self.sig4 = nn.Sigmoid()
        self.linear5 = nn.Linear(5, 1)
        self.sig6 = nn.Sigmoid()

    def forward(self, x):
        inter1 = self.linear1(x)
        inter2 = self.sig2(inter1)
        inter3 = self.linear3(inter2)
        inter4 = self.sig4(inter3)
        inter5 = self.linear5(inter4)  # final linear layer
        y_hat = self.sig6(inter5)
        return y_hat


# To predict
model = BatClassifier()
x = torch.rand(5)  # placeholder input with 5 features
y_hat = model(x)
```

To train the model, we just need to define the loss function and call backward().


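```python
# To train (sketch: `data` is assumed to be an iterable of (x, y) tensor pairs,
# with x of shape (5,) and y of shape (1,))
model = BatClassifier()
loss_fn = nn.BCELoss()  # assumed loss; any suitable loss function works
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)  # assumed optimizer
for x, y in data:
    optimizer.zero_grad()  # clear gradients from the previous step
    y_hat = model(x)
    loss = loss_fn(y_hat, y)
    loss.backward()  # compute gradients for every weight
    optimizer.step()  # apply the gradient descent update
```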

- Pytorch and other modern deep learning APIs make it easy to create and train neural networks

- Getting the data is often the bottleneck

- Specific design of the neural network can be somewhat of a black art

- Pytorch can push data and computations to GPUs, allowing for parallel processing, which can greatly speed up training

- Parallelization is one of the reasons why neural networks are favored function approximators.

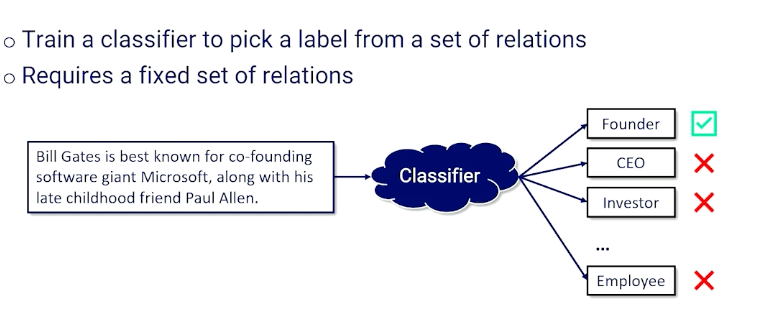

Module 3: Classification

Introduction to Classification

Examples of classifications: Bayesian, Binary, Multinomial classification. It is important to understand classification because many topics in NLP can be considered as a classification problem.

- Document topics (news, politics, sports)

- Sentiment classification

- Spam detection

- Text complexity

- Language / dialect detection

- Toxicity detection

- Multiple-choice question answering

- Language generation

Features of classification

| Inputs | Outputs |
|---|---|
| word, sentence, paragraph, document | a label from a finite set of labels |

- Classification is a mapping from $V^* \rightarrow \mathcal{L}$

- $V$ is the input vocabulary, a set of words

- $V^*$ is the set of all sequences of words

- $X$ is a random variable of inputs, such that each value of $X$ is from $V^*$.

- X can take on the value of all possible text sequences

- $Y$ is a random variable of outputs taken from $\ell \in \mathcal{L}$

- $P(X,Y)$ is the true distribution of labeled texts

- The joint probability over all possible text documents and all possible labels

- $P(Y)$ is the distribution of labels

- irrespective of documents, how frequently we would see each label

The problem is we don’t typically know $P(X,Y)$ or $P(Y)$ except by data.

- Human experts label some data

- Feed data to a supervised machine learning algorithm that approximates the function $classify: V^* \rightarrow \mathcal{L}$

- Did it work? Apply $\textit{classify}$ for some proportion of data that is withheld for testing

An additional problem is that $V^*$ is generally considered unstructured.

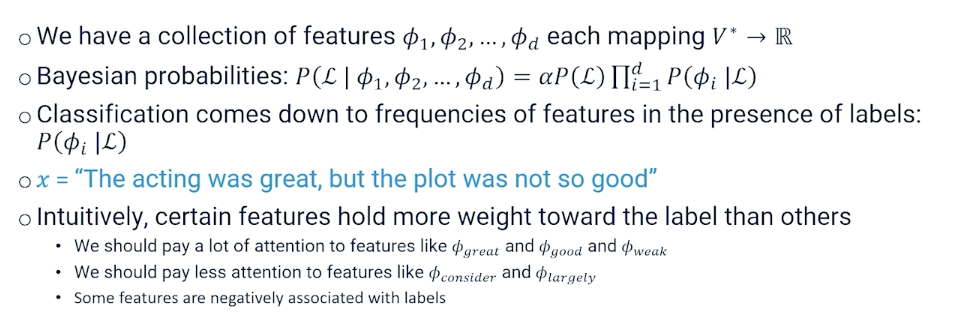

- Supervised learning likes structured inputs, which we call features ($\phi$)

- Features are what the algorithm is allowed to see and know about

- In a perfect world, we simply throw away all unnecessary parts of an input and keep the useful stuff.

- However, we don’t always know what is useful

- But we often have some insight - feature engineering; have a way of breaking down the unstructured data to be something more useful.

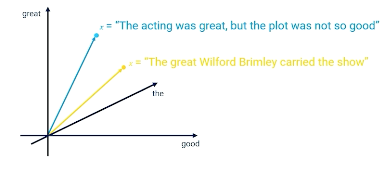

Bag of words

In bag of words,

- Features are word presence

- Discard word order

- $\phi$ = { a , the, acting, great, good, plot, …}

- When words are taken alone we call them unigrams; pairs of words are bigrams, triples are trigrams, and so on.

- $x$ is a d-dimensional vector of features

- Bag of words can also be word frequency instead of word presence, e.g. $\phi_{the} = 2, \phi_{was} = 2$

- Other definitions are possible as well.

With that,

- A feature vector $\phi(x) \in \mathbb{R}^d$

- Example = $[1, 2, 2, 1, 1, 0, 0, 1, \cdots, 0]$

- Every input $x$ is a point in $d$-dimensional space

So if we imagine that every document can be represented in a 2-dimensional space, then each document is a vector (a point) determined by its features:

Then we can start to do things like classifications, similarity etc.

- Features are what we select for an algorithm to operate on

- Good feature sets lead to good algorithm performance.

- Bad feature set leads to degraded algorithm performance
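A bag-of-words feature vector can be sketched as follows. The vocabulary and the review text are made up for illustration, and tokenization is a plain whitespace split, which a real system would likely replace with something more careful.

```python
from collections import Counter

# Hypothetical vocabulary; in practice it is built from the training corpus.
vocabulary = ["a", "the", "acting", "great", "good", "plot", "was"]


def bag_of_words(text, binary=False):
    """Map a text to a feature vector with one entry per vocabulary word.

    Word order is discarded; words outside the vocabulary are ignored.
    """
    counts = Counter(text.lower().split())
    if binary:
        return [1 if counts[word] > 0 else 0 for word in vocabulary]
    return [counts[word] for word in vocabulary]


review = "The acting was great and the plot was good"
frequency_vector = bag_of_words(review)              # word frequencies
presence_vector = bag_of_words(review, binary=True)  # word presence
print(frequency_vector)
print(presence_vector)
```

Two reviews containing the same words in a different order map to the same point in feature space, which is exactly the information bag of words throws away.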

Bayesian Classification

A probabilistic approach to guessing the label that accompanies a text (sentence, document). In the following example we are trying to find the label, i.e. the sentiment, of a document:

Apply the Bayes equation:

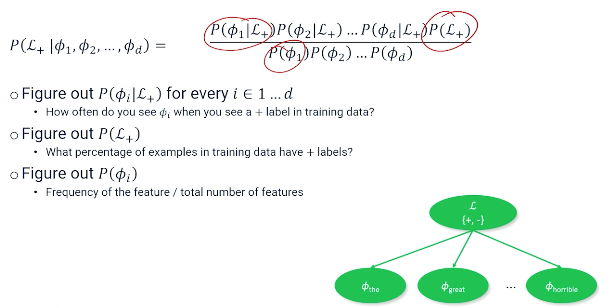

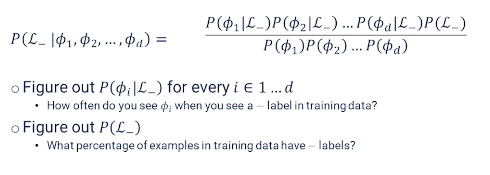

\[P(\mathcal{L}\lvert \phi_1,\phi_2,\phi_3,...,\phi_d) = \frac{P(\phi_1,\phi_2, ..., \phi_d \lvert \mathcal{L}) P(\mathcal{L})}{P(\phi_1,\phi_2,...,\phi_d)}\]

This equation is very complicated, but we can apply the naive Bayes assumption that word features are independent of each other:

\[\frac{P(\phi_1 \lvert \mathcal{L})P(\phi_2 \lvert \mathcal{L})...P(\phi_d \lvert \mathcal{L})P(\mathcal{L})}{P(\phi_1)P(\phi_2)...P(\phi_d)}\]

An example:

You can also do the same for the negative label:

Ultimately, we still need to turn these probabilities into a classification. One way is to see whether:

\[P(\mathcal{L}^+\lvert \phi_1,\phi_2,\phi_3,...,\phi_d) > P(\mathcal{L}^-\lvert \phi_1,\phi_2,\phi_3,...,\phi_d)\]

We can set $\alpha = \frac{1}{P(\phi_1)P(\phi_2) \cdots P(\phi_d)}$, which is the same on both sides, and so ignore the denominator. This makes things easier to compute (and it is often safe to ignore the denominator in NLP).

In summary:

- Bayesian model sees text as a latent (unobservable) phenomenon that emits observable features.

- The frequency of which these observable features appear in the text is evidence for the label.

- Learning the bayesian model means filling in the probability distributions from the co-occurrence of features and labels.

- If unigrams don’t give good results, try bigrams or trigrams.

However, this comes with limitations. In the future we might want to apply machine learning so that features that are really important to the classification can be assigned particular weights, possibly even negative weights. A negative weight says that a feature may be statistically related to a particular label but should count against that classification. In the Bayesian model every feature is positive evidence with a low or high probability; there is no way to express negative features.

Log Probabilities And Smoothing

Two problems:

- Multiplying probabilities makes numbers very small very fast and eventually floating point precision becomes a problem.

- Sometimes probabilities are zero, and multiplying zero against other probabilities makes everything zero.

To manage the first problem, shift to log scale. For instance, $log(1)=0$ and $log(0) = -\infty$. Now we do not have to worry about numbers underflowing to zero (though the logs of small probabilities become large negative numbers).

Also, after changing to log scale we sum things up instead of multiplying them:

- $log(P(A)P(B)) = log P(A) + log P(B)$

- $log \prod_i P(\phi_i) = \sum_i log P(\phi_i)$

- $log \frac{P(A)}{P(B)} = log P(A) - log P(B)$
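The floating point problem is easy to reproduce. The following sketch multiplies one hundred made-up probabilities of $10^{-5}$ and compares the result with the sum of their logs.

```python
import math

probabilities = [1e-5] * 100  # 100 small, made-up feature probabilities

product = 1.0
for p in probabilities:
    product *= p  # the true value is 1e-500, far below the smallest float

log_sum = sum(math.log(p) for p in probabilities)

print(product)  # underflows to 0.0
print(log_sum)  # roughly -1151.29
```

The product is indistinguishable from an impossible event, while the log-scale sum is an ordinary number that can still be compared against the score for another label.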

For the second problem, zeros can still appear. Suppose “cheese” is a feature but never appears in a positive review. Then the conditional probability for the positive label will be 0 regardless of the other feature values, which also affects our normalization constant $\alpha$.

To solve this, modify the equation so that we never encounter features with zero probability, by “pretending” that there is a non-zero count for every feature: add 1 to the numerator and denominator:

\[P_{smooth} (\phi_i) = \frac{1+count(\phi_i)}{1+\sum_j count(\phi_j)}\]

We call this technique “smoothing”. Naturally this introduces a small amount of error, but it usually does not affect the classification outcome because the error is applied uniformly across all features.
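Putting log probabilities and smoothing together, a tiny naive Bayes sentiment classifier might look like the sketch below. The training texts are invented, the helper names are hypothetical, and the smoothing follows the formula as written in these notes (adding 1 to the numerator and 1 to the denominator), applied per label.

```python
import math
from collections import Counter

# Tiny made-up training set of (text, label) pairs.
training = [
    ("great fun great acting", "pos"),
    ("good plot fun", "pos"),
    ("bad plot boring acting", "neg"),
    ("boring bad bad", "neg"),
]

word_counts = {"pos": Counter(), "neg": Counter()}
label_counts = Counter()
for text, label in training:
    word_counts[label].update(text.split())
    label_counts[label] += 1


def smoothed_log_prob(word, label):
    """log P_smooth(word | label), adding 1 to numerator and denominator."""
    total = sum(word_counts[label].values())
    return math.log((1 + word_counts[label][word]) / (1 + total))


def classify(text):
    """Pick the label with the highest log prior plus summed log likelihoods."""
    scores = {}
    for label in word_counts:
        log_prior = math.log(label_counts[label] / sum(label_counts.values()))
        scores[label] = log_prior + sum(
            smoothed_log_prob(word, label) for word in text.split()
        )
    return max(scores, key=scores.get), scores


label, scores = classify("great fun cheese")
print(label, scores)
```

The word "cheese" never occurs in the training data, yet both scores stay finite thanks to smoothing, so the other words can still decide the label.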

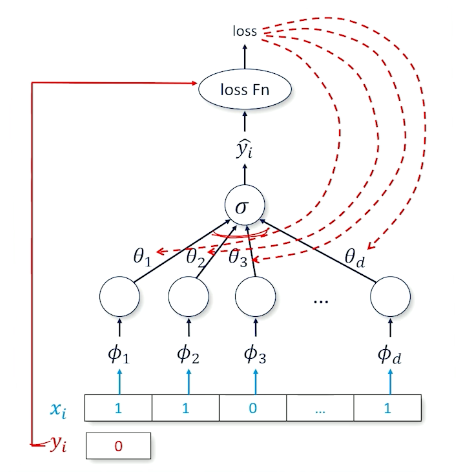

Binary Logistic Regression

A small recap:

In logistic regression, we have a set of coefficients:

- $\theta_1, …, \theta_d, \theta_i \in \mathbb{R}$

- Also called weights or parameters

- If you set the coefficients just right, you can design a system that gets classification correct for a majority of inputs.

- But you need to learn them.

On top of this, you need:

- Binary labels $\mathcal{L}$

- A scoring function $score(x; \theta) = \sum \theta_j \phi_j(x)$

- This can be re-written in matrix form: $ \mathbf{\theta^T \phi}(x)$

- Then we can have $classify(x) = sign(score(x;\theta))$

How to learn the weights?

- Probabilistically $P(Y=+1 \lvert X = x; \theta) = f(score(x;\theta))$

- $f$ converts the score into a probability in $[0,1]$

- An example is $f =\sigma$, the sigmoid function (aka logistic function)

- The classifier then returns +1 or -1 depending on whether the sigmoid output is above or below 0.5 (or any chosen cutoff)
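The scoring function and the sigmoid can be combined into a small classifier. The weights in `theta` below are hypothetical values picked by hand rather than learned, and features are passed as a dictionary from feature name to value for readability.

```python
import math

# Hypothetical, hand-picked weights for three bag-of-words features.
theta = {"great": 1.5, "boring": -2.0, "plot": 0.1}


def score(features):
    """Dot product between the weights and the feature values."""
    return sum(theta.get(name, 0.0) * value for name, value in features.items())


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def classify(features, cutoff=0.5):
    """Return (+1 or -1, P(Y = +1 | x)) for a feature dictionary."""
    p_positive = sigmoid(score(features))
    return (1 if p_positive >= cutoff else -1), p_positive


print(classify({"great": 2, "plot": 1}))
print(classify({"boring": 1, "plot": 1}))
```

A cutoff of 0.5 on the probability is the same decision as taking the sign of the raw score, since the sigmoid of 0 is exactly 0.5.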

Parameter Learning

Learn the optimal set of parameters $\theta^*$ such that the score function produces the correct classification for every input $x$:

\[\begin{aligned} \theta^* &= \underset{\theta \in \mathbb{R}^d}{argmax} \prod_{i=1}^n P(Y=y_i \lvert X=x_i ; \theta) \\ \theta^* &= \underset{\theta \in \mathbb{R}^d}{argmax} \sum_{i=1}^n log P(Y=y_i \lvert X=x_i ; \theta) \\ \theta^* &= \underset{\theta \in \mathbb{R}^d}{argmin} \sum_{i=1}^n - log P(Y=y_i \lvert X=x_i ; \theta) \\ \end{aligned}\]

Recall that $P(Y=y \lvert X=x ; \theta) = \sigma(y\cdot score(x;\theta))$ and $\sigma(z) = (1+e^{-z})^{-1}$

\[\begin{aligned} \theta^* &= \underset{\theta \in \mathbb{R}^d}{argmin} \sum_{i=1}^n - log \sigma(y_i\cdot score(x_i;\theta)) \\ \theta^* &= \underset{\theta \in \mathbb{R}^d}{argmin} \sum_{i=1}^n log (1+exp(-y_i \cdot score(x_i ;\theta))) \\ \theta^* &= \underset{\theta \in \mathbb{R}^d}{argmin} \sum_{i=1}^n log (1+exp(-y_i \cdot \mathbf{\theta^T \phi}(x_i))) \\ \end{aligned}\]

Parameter Learning With Neural Networks

Consider the case of a 1 layer neural network:

- Sigmoid at the head to produce a probability close to 1 or close to 0

- Target is $\{0,1\}$ instead of $\{-1,1\}$

- Recall $loss(\hat{y},y) = -log(\sigma(y\cdot \hat{y}))$

- This multiplies the score $(\hat{y})$ by the target $y=\pm 1$ before sending it through the sigmoid and then the log

- So, to handle this we are going to use another tool called binary cross entropy, which is a standard loss function:

Cross entropy means that when $y$ is true you want $log(p(\hat{y}))$ to be high, and when $y$ is false you want $log(1 - p(\hat{y}))$ to be high.
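The notes do not reproduce the formula here, so the following sketch uses the standard definition of binary cross entropy for a single example, $-[y \log \hat{y} + (1-y)\log(1-\hat{y})]$, where $\hat{y}$ is the predicted probability and $y \in \{0,1\}$ is the target. The function name is made up.

```python
import math


def binary_cross_entropy(y_hat, y):
    """BCE for a predicted probability y_hat in (0, 1) and a target y in {0, 1}."""
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))


print(binary_cross_entropy(0.9, 1))  # confident and correct: small loss
print(binary_cross_entropy(0.1, 1))  # confident and wrong: large loss
print(binary_cross_entropy(0.1, 0))  # confident and correct: small loss
```

Only one of the two terms is active for any given target, which is how a single expression covers both the positive and the negative case.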

The last thing to talk about is batching, which is just splitting up the data and loading it.

Training

So the entire training looks like this:


```text
initialize θ
for t = 1 to max_epochs:
    for b = 0 to num_batches - 1:
        batch_xs, batch_ys = data[b*m : (b+1)*m]
        y_hats = f(batch_xs; θ)
        L = loss(y_hats, batch_ys)
        θ = θ - α(∂L/∂θ)
```

Note that $\alpha$ refers to the learning rate, which is usually a hyperparameter: too low and the model does not learn anything; too high and you “overshoot” and do not converge.
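The training loop can be turned into runnable code without any deep learning library. The sketch below is an assumption-laden illustration: it uses a single sigmoid unit with binary cross entropy (for which the gradient with respect to the score is simply $\hat{y} - y$), a made-up four-example dataset, and hypothetical names such as `train` and `predict`.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(theta, x):
    """f(x; theta): probability that x has label 1."""
    return sigmoid(sum(t * v for t, v in zip(theta, x)))


def train(data, num_features, alpha=0.5, max_epochs=200, m=2, seed=0):
    """Mini-batch gradient descent for logistic regression with BCE loss."""
    rng = random.Random(seed)
    theta = [rng.uniform(-0.1, 0.1) for _ in range(num_features)]  # initialize theta
    for _ in range(max_epochs):
        for start in range(0, len(data), m):
            batch = data[start:start + m]
            grad = [0.0] * num_features
            for x, y in batch:
                error = predict(theta, x) - y  # dL/d(score) for sigmoid + BCE
                for j, v in enumerate(x):
                    grad[j] += error * v / len(batch)  # average over the batch
            # theta = theta - alpha * dL/dtheta
            theta = [t - alpha * g for t, g in zip(theta, grad)]
    return theta


# Made-up data: features are [bias, count("great"), count("boring")], targets in {0, 1}.
data = [([1, 2, 0], 1), ([1, 1, 0], 1), ([1, 0, 2], 0), ([1, 0, 1], 0)]
theta = train(data, num_features=3)
print([round(predict(theta, x), 3) for x, _ in data])
```

Changing `alpha` is a quick way to see the effect of the learning rate: a very small value leaves the predictions near 0.5 after the same number of epochs.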

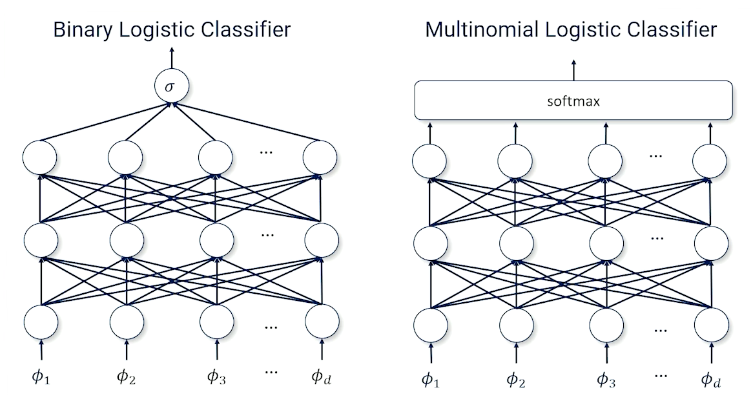

Multinomial Logistic Classification

Suppose we have more than 2 labels, i.e. $k$ labels $\mathcal{L} = \{\ell_1, …, \ell_k\}$

- Set of features $f_j : V^* \times \mathcal{L} \rightarrow \mathbb{R}$

- $k \times d$ input-output parameters

- $k$ is the number of labels

- $d$ is the number of features

- for document $x$ with label $y$, iterate through all labels and all features and set $f_{\ell, \phi}(x,y)$ if feature $\phi$ and label $\ell$ are in the document

- What we have:

- A set of $k$ labels $\ell_1, …, \ell_k$

- A collection of $m$ input-output features $f_1(x,y),…,f_m(x,y)$

- Parameters for every input-output features $\theta_1,…,\theta_m$

- We are going to want to turn the score into probability

- Previously we have used sigmoid $\sigma$

- But sigmoid only works for a single comparison, so what can we do?

Softmax

- We need a new tool that can take arbitrarily many inputs: softmax

- Softmax takes a vector of numbers and makes one close to 1 and the rest close to 0, with the vector summing up to 1

- It uses the exponential to make big numbers really big and small numbers really small
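A minimal softmax in plain Python is sketched below. Subtracting the largest score before exponentiating is a common numerical-stability trick that is not mentioned in the notes; it does not change the result.

```python
import math


def softmax(scores):
    """Turn a list of real-valued scores into probabilities that sum to 1."""
    largest = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
print(probs)
print(sum(probs))
```

Scaling all the scores up (for example multiplying them by 10) makes the largest entry approach 1 and the others approach 0, which is the behaviour described in the list above.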

Multinomial Logistic Classification As Probability

We want a probability distribution over labels for a document

\[P(Y \lvert X=x ; \theta) = softmax([score(x,\ell_1;\theta),...,score(x,\ell_k;\theta)])\]

One of these numbers will be close to 1 and the rest are going to be close to 0.

If we are interested in a specific label, then we can simply calculate $P(Y = \ell \lvert X=x;\theta)$

\[\begin{aligned} P(Y=\ell \lvert X = x ; \theta) &= \frac{e^{score(x,\ell ; \theta)}}{\sum_{\ell'}e^{score(x,\ell' ; \theta)}} \\ &= \frac{e^{score(x,\ell ; \theta)}}{z(x;\theta)} \end{aligned}\]

$z(x;\theta)$ is what makes multinomial logistic regression computationally expensive: to compute the probability even for a single label, you need to sum across all labels and normalize.

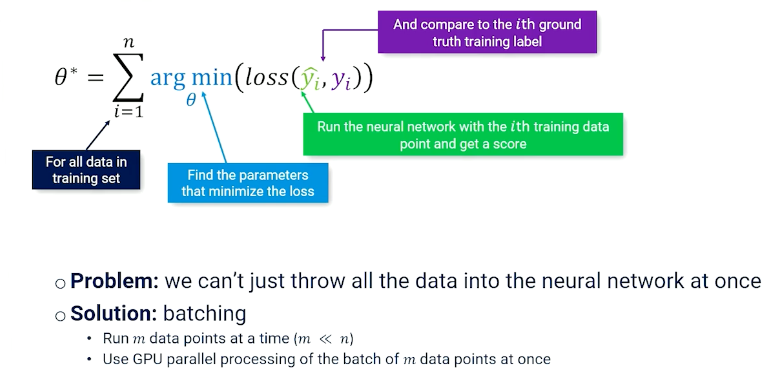

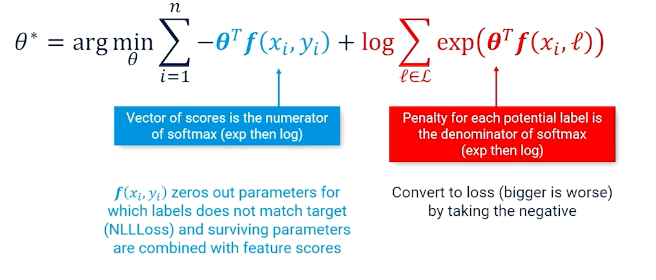

Multinomial Logistic Classification: Parameter Learning

We start from the same equation as before,

\[\begin{aligned} \theta^* &= \underset{\theta}{argmin} \sum_{i=1}^n - log P(Y=y_i \lvert X=x_i ; \theta) \\ \theta^* &= \underset{\theta}{argmin} \sum_{i=1}^n - log \bigg[ \frac{exp(score(x_i,y_i ; \theta))}{z(x_i;\theta)} \bigg] \\ \theta^* &= \underset{\theta}{argmin} \sum_{i=1}^n - log (exp(score(x_i,y_i ; \theta))) + log {z(x_i;\theta)} \\ \theta^* &= \underset{\theta}{argmin} \sum_{i=1}^n - score(x_i,y_i ; \theta) + log {z(x_i;\theta)} \\ \end{aligned}\]

- Find the parameters that push up the score for the correct label but push down the score for all other labels

- Without the $log\, z$ term, one could trivially win by making the parameters $\infty$

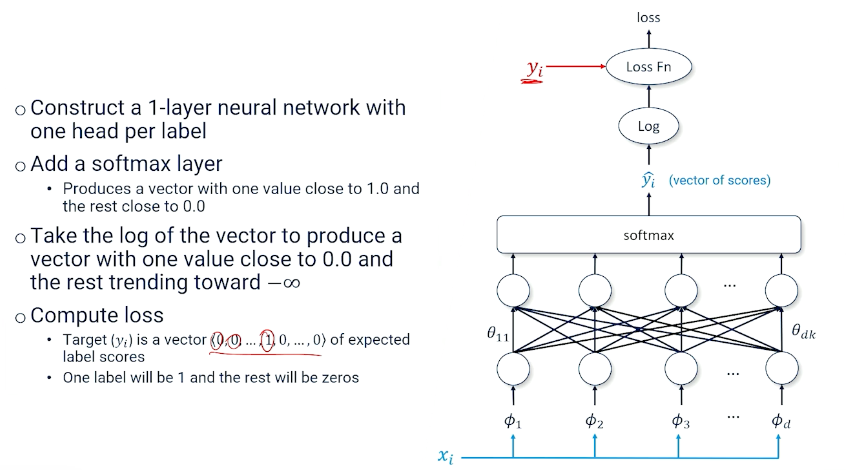

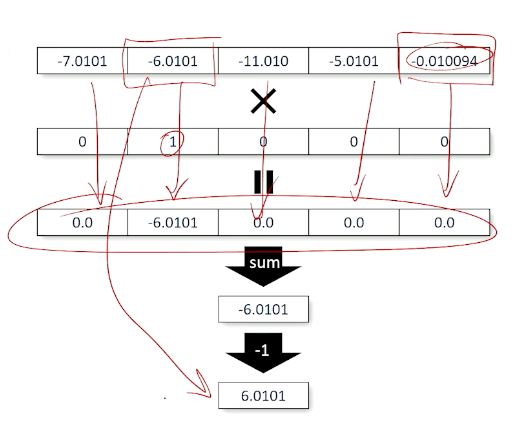

So we have a vector of predicted log scores and a vector of target values, and we can multiply them together:

In summary, this is also known as the negative log likelihood loss: take the predicted scores, normalize them by the sum (softmax), take the log, multiply by the vector of target values, and negate:

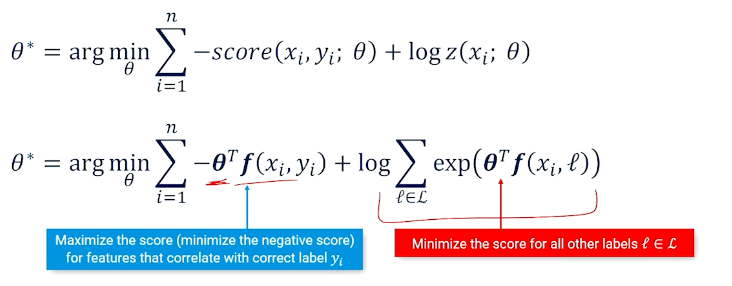

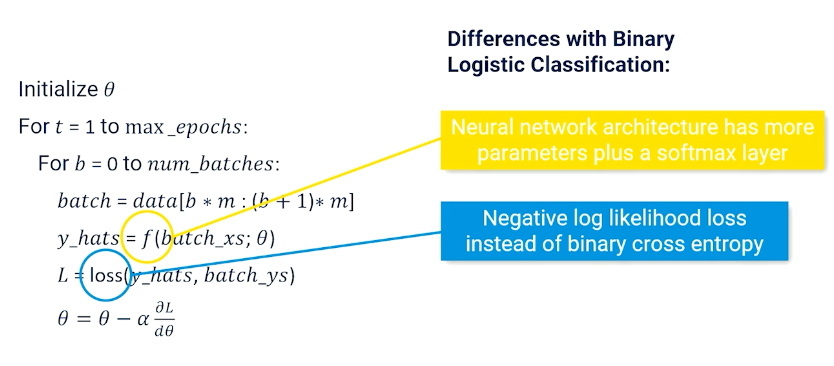

Multinomial Logistic Classification: Neural Net Training

The same as before:

So now the $f$ is more complicated and the loss is the negative log likelihood.

Note that in typical APIs the cross entropy loss module combines the softmax, log and negative log likelihood loss together.
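To make that combination concrete, here is a plain-Python sketch of what such a combined loss computes for one example: the log of the softmax, followed by picking out the negated entry for the target label. The function names and the scores are made up, and this is not the code of any particular library.

```python
import math


def log_softmax(scores):
    """Log of the softmax, computed as score - log z with a max shift for stability."""
    largest = max(scores)
    log_z = largest + math.log(sum(math.exp(s - largest) for s in scores))
    return [s - log_z for s in scores]


def cross_entropy(scores, target_index):
    """Negative log likelihood of the target label: -score + log z."""
    return -log_softmax(scores)[target_index]


scores = [2.0, 0.5, -1.0]  # made-up scores for three labels
print(cross_entropy(scores, 0))  # correct label has the top score: small loss
print(cross_entropy(scores, 2))  # correct label has the lowest score: large loss
```

Multiplying the log-softmax vector by a one-hot target vector and negating the sum gives the same number, since every entry except the target's is multiplied by zero.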

Multilayer Classification Networks

More layers in a neural network can approximate more complex functions. So you can still take the binary logistic classifier or the multinomial classifier and add more layers.

This also opens up more sophisticated neural network architectures such as LSTMs, Transformers, etc.

Module 4: Language Modeling

Language Modeling

A simplified (yet useful) approximation of the complex phenomenon of language production. To recall the use cases:

- Autocomplete

- Summarization

- Translation

- Spelling and grammar correction

- Text generation

- Chatbots

- Speech recognition

- Image captioning

What makes a good language model?

- Model the fluency of a sequence of words, not every aspect of language production

- Fluent: Looks like accurate language (for the purposes of this topic)

- Does a sequence of words look like what we expect from fluent language?

- Can we choose words to put in a sequence such that it looks like fluent language?

Modeling Fluency

The question becomes, how do we model fluency? Consider the following:

- A set of words that our system knows $\mathcal{V}$

- How many words does a system need to know?

- Example: English has $> 600,000$ words

- May want to limit that to the top 50,000 most frequently used words

- What if we come across a word that is not in the dictionary?

- Add special symbols to the dictionary to handle out-of-vocabulary words

- UNK: an unknown word (sometimes also OOV)

- Other special symbols:

- SOS: Start of sequence

- EOS: End of sequence
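As a rough sketch of how a limited vocabulary and the special symbols fit together, the following plain Python builds a vocabulary from a toy corpus and maps unseen words to UNK. The helper names `build_vocab` and `encode`, the `<...>` spelling of the symbols, and the toy corpus are all assumptions made for illustration.

```python
from collections import Counter

UNK, SOS, EOS = "<UNK>", "<SOS>", "<EOS>"

def build_vocab(sentences, max_words):
    """Keep only the max_words most frequent words, plus the special symbols."""
    counts = Counter(w for s in sentences for w in s.split())
    words = [w for w, _ in counts.most_common(max_words)]
    return {w: i for i, w in enumerate([UNK, SOS, EOS] + words)}

def encode(sentence, vocab):
    """Map words to token ids; out-of-vocabulary words become UNK."""
    ids = [vocab.get(w, vocab[UNK]) for w in sentence.split()]
    return [vocab[SOS]] + ids + [vocab[EOS]]

corpus = ["the king sat", "the queen sat", "the king slept"]
vocab = build_vocab(corpus, max_words=3)
ids = encode("the regent sat", vocab)  # "regent" never appears in the corpus
```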

So how can we make use of all these to start modeling? We can resort back to probability:

- What is the probability that a sequence of words $w_1, …, w_n$ would occur in a corpus of text produced by fluent language users?

- Fluency can be approximated by probability, e.g $P(w_1,…,w_n)$

- Let’s create a bunch of random variables $W_1,…,W_n$ such that each variable $W_i$ can take on the value of a word in vocabulary $\mathcal{V}$

Modeling Fluency Examples

Recall the chain rule of probability:

\[P(W_1=w_1,...,W_n = w_n) = \prod_{t=1}^n P(W_t = w_t \lvert W_1=w_1,...,W_{t-1}=w_{t-1})\]

The conditional part is the “history” of the $t$-th word (also called the “context”).

One of the challenges we will face is figuring out how to squeeze the history/context into a representation that makes an algorithm better at predicting the next word. For an arbitrarily long sequence, it can be intractable to have a large (or infinite) number of random variables.

- Must limit the history/context by creating a fixed window of previous words

How?

- First model is to throw away the history completely (unigram approach)

- We assume all words are independent of all other words, i.e

- $\prod P(W_t = w_t)$

- Obviously wrong

Consider the bigram approach:

- Assume a word is only dependent on the immediately previous word:

- $\prod P(W_t = w_t \lvert W_{t-1}=w_{t-1})$

- We can extend this to look behind $k$ words.
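The bigram assumption can be illustrated with simple counting. This is only a sketch: it estimates each conditional probability from counts on a toy corpus rather than with a neural network, and the names `train_bigram` and `bigram_prob` are hypothetical.

```python
from collections import Counter

def train_bigram(sentences):
    """Count how often each word follows each previous word."""
    context_counts, pair_counts = Counter(), Counter()
    for s in sentences:
        tokens = ["<SOS>"] + s.split() + ["<EOS>"]
        for prev, cur in zip(tokens, tokens[1:]):
            context_counts[prev] += 1
            pair_counts[(prev, cur)] += 1
    return context_counts, pair_counts

def bigram_prob(sentence, context_counts, pair_counts):
    """Product over t of P(w_t | w_{t-1}), estimated from the counts."""
    tokens = ["<SOS>"] + sentence.split() + ["<EOS>"]
    p = 1.0
    for prev, cur in zip(tokens, tokens[1:]):
        if context_counts[prev] == 0:
            return 0.0  # previous word never seen as a context
        p *= pair_counts[(prev, cur)] / context_counts[prev]
    return p

context_counts, pair_counts = train_bigram(["the king sat", "the queen sat"])
p_fluent = bigram_prob("the king sat", context_counts, pair_counts)
p_scrambled = bigram_prob("king the sat", context_counts, pair_counts)
```

Under this toy model the scrambled word order gets probability zero because "king" never starts a sentence in the corpus, which is the sense in which probability approximates fluency.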

We are not going to make k random variables and try to learn a joint probability across all variables. Instead, we are going to try to approximate the conditional probability using a neural network.

\[P(W_1=w_1,...,W_{n-1} = w_{n-1},W_{n} = w_{n}) = \prod_{t=1}^n P(W_t = w_t \lvert W_1=w_1,...,W_{t-1}=w_{t-1} ; \theta)\]

Can we go a little further? Can we go all the way back to the first word and not be limited by $k$? I.e. can our neural network handle an n-gram where $n$ is the length of each sequence in the document? Then we will have a powerful model.

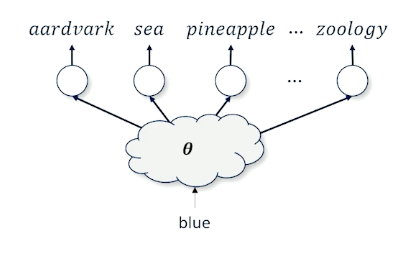

Neural Language Models

We want to take a word blue and figure out what is the next best word.

- The issue is there are 50,000 words

- We also have to deal with the context (words before blue)

There are also other problems:

- Neural networks require inputs to be real-valued numbers

- One possible solution is to use the one-hot vector

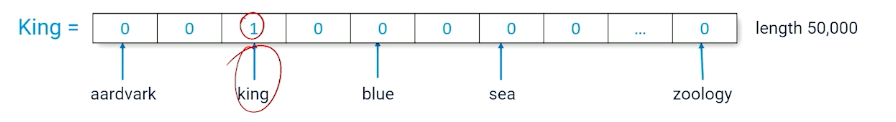

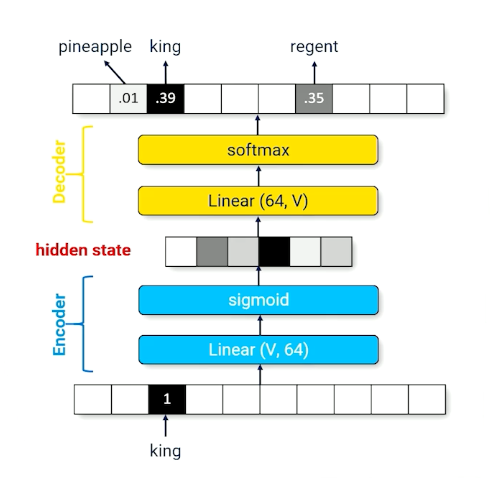

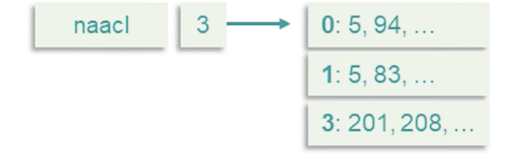

A Token is the index of a word (e.g in the above image, king =2)

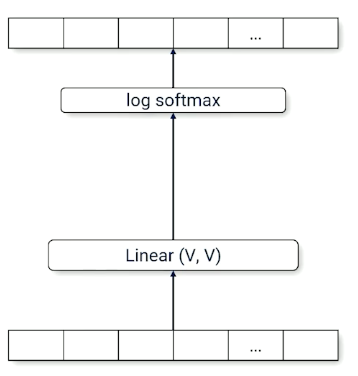

Here is a bigram model that uses a one-hot vector as input: it takes in a single word and outputs a single word:

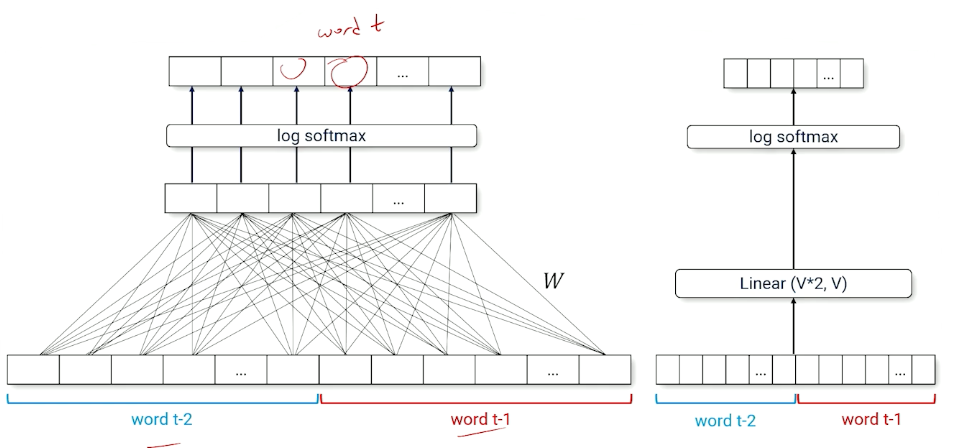

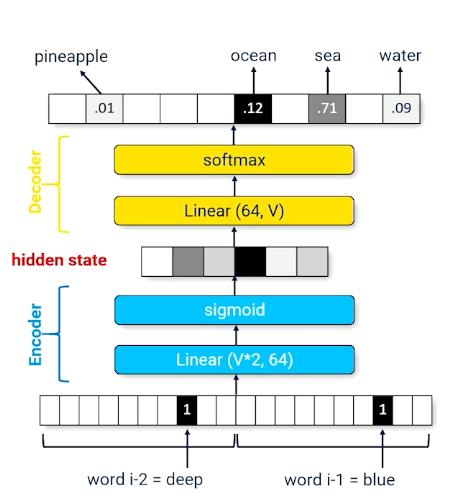

Consider a trigram model; it will look like this:

Notice that the input to the linear layer is now of size $2 \times \lvert \mathcal{V} \lvert$, which does not “scale” well.

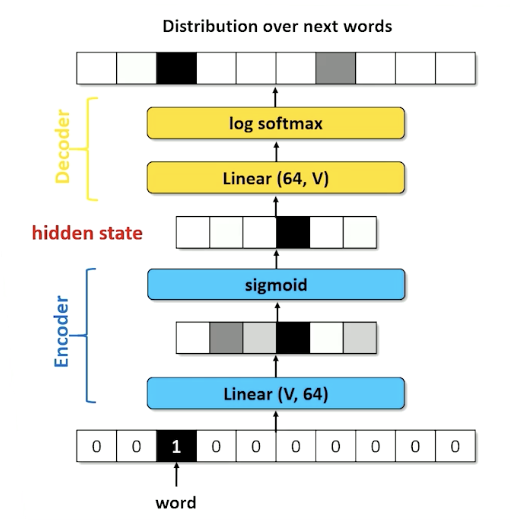

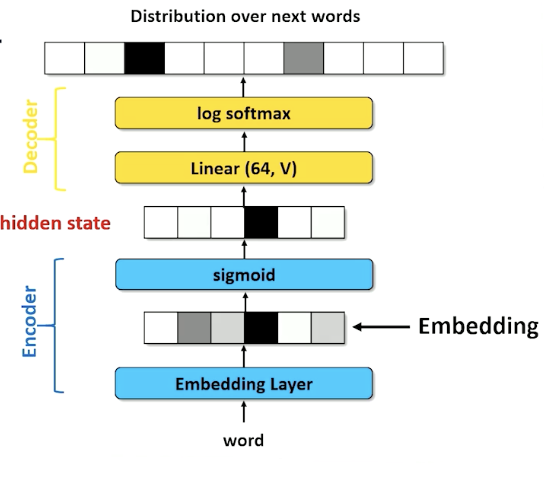

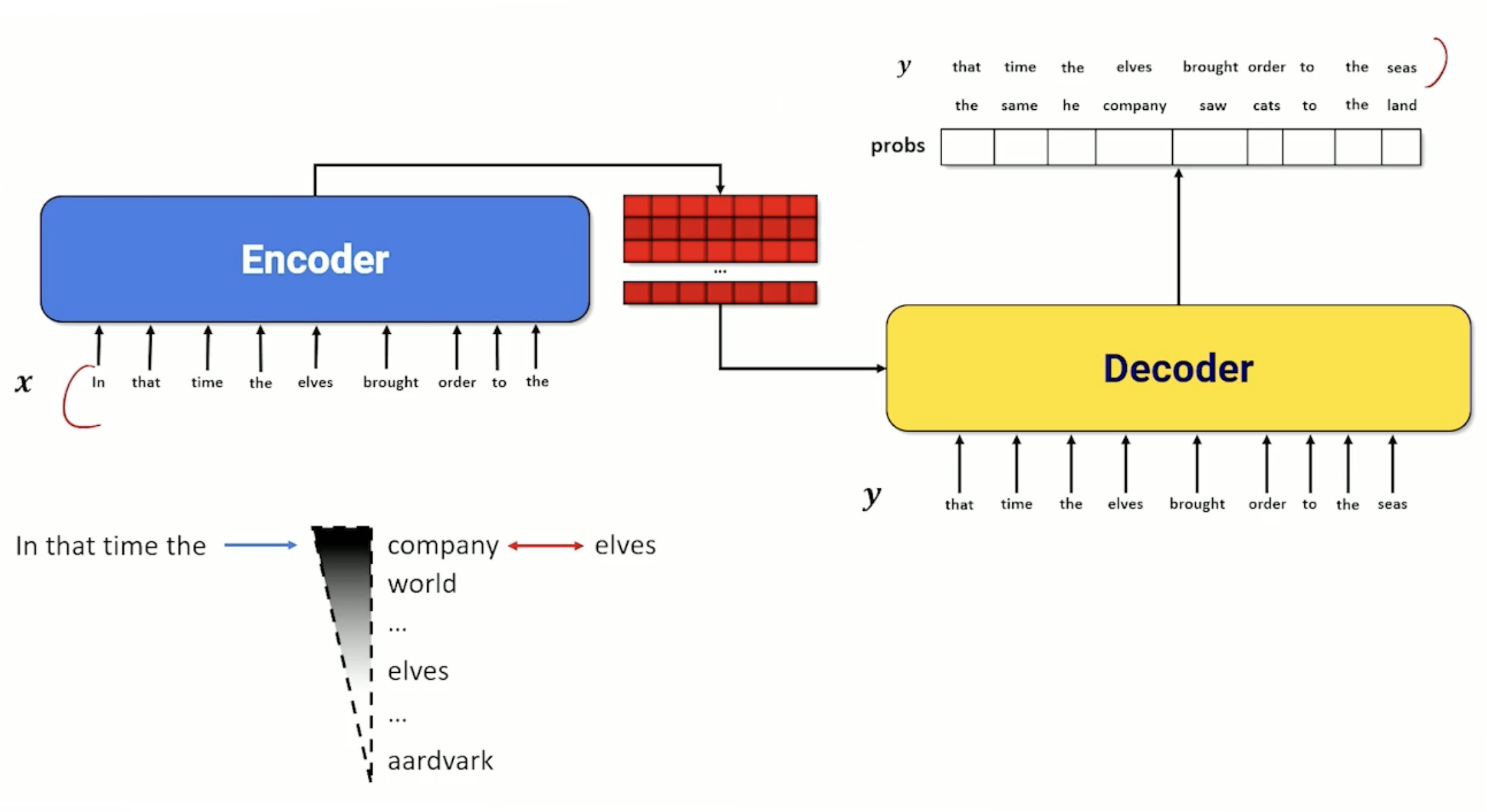

Encoders and Decoders

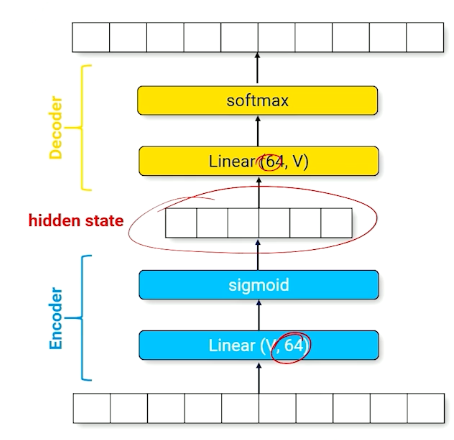

Ultimately, we need a way to deal with context and history size. Introducing encoders and decoders:

- Encoder: Send input through a smaller-than-necessary layer to force the neural network to find a small set of parameters that produces intermediate activations that approximate the output

The above image shows the encoder, which is forcing the input layer to be represented by a layer of size 64.

- A good set of parameters will represent the input with a minimum amount of corruption

- Can be “uncompressed” to produce a prediction over the full range of outputs

- Decoder: A set of parameters that recovers information to produce the output

Now,

- Consider a neural network that implements the identity function

- Neural network is forced to make compromise

- “KING” produces a hidden state activation similar to “regent”

There will definitely be some information loss, so what is the use of such a network? We can change the network so that it outputs the next word instead:

- The hidden state now compresses multiple words into a fixed size vector

- can be reinterpreted as summarizing the history (context vector) - Used interchangeably

As an extra note about PyTorch, there is the torch.nn.Embedding(vocab_size, hidden_size) module that:

- Takes a token id (integer) as an input

- Conceptually converts it to a one-hot vector of length vocab_size (in practice this is implemented as a table lookup),

- Maps it to a vector of length hidden_size.
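The equivalence between "one-hot followed by a linear layer" and "look up a row" can be checked in a few lines of plain Python. This is a sketch with a small random weight matrix, not PyTorch code; `one_hot`, `linear_no_bias` and `embed` are illustrative names.

```python
import random

def one_hot(token_id, vocab_size):
    v = [0.0] * vocab_size
    v[token_id] = 1.0
    return v

def linear_no_bias(x, W):
    """Multiply a length-V vector by a V x d weight matrix (no bias term)."""
    d = len(W[0])
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(d)]

def embed(token_id, W):
    """Embedding lookup: simply return the row for this token id."""
    return W[token_id]

rng = random.Random(0)
vocab_size, hidden_size = 5, 3
W = [[rng.uniform(-1, 1) for _ in range(hidden_size)] for _ in range(vocab_size)]

via_one_hot = linear_no_bias(one_hot(2, vocab_size), W)
via_lookup = embed(2, W)
```

Both paths give the same vector, which is why an embedding layer can skip building the one-hot vector entirely.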

Encoders perform compression, which forces compromises which forces generalization. We know we have a good compressor (encoder) if we can successfully decompress. However there are still problems:

- We cannot have different architectures with different size of context history, like one for bigram, trigram etc. That is not going to work.

- At very large scale, it is hard to deal with long, complicated sequences.

Solution? RNN!

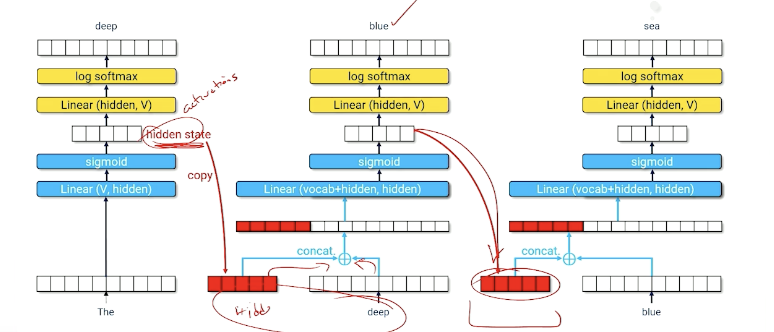

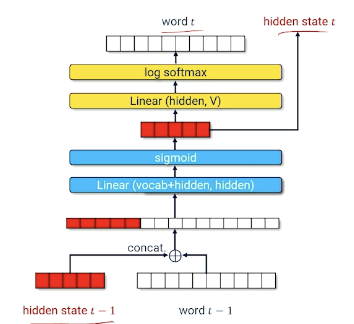

Recurrent Neural Networks

We really want one architecture that can manage all these cases.

- Text sequences can have different history lengths

- Recurrence: process each time-slice recursively

The idea is to take the hidden state and feed it back in as an input.

Or to represent it in another form:

- Hidden state encodes useful information about everything that has come before

- Neural net must learn how to compress hidden state $t-1$ and word $t-1$ into a useful new hidden state that produces a good guess about word $t$.
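A minimal sketch of the recurrence, assuming the common form $h_t = tanh(W_x x_t + W_h h_{t-1} + b)$ (the notes do not give the exact update, so this formula and the names `rnn_step` and `encode` are assumptions). The weights are random and untrained; the point is only that one set of parameters handles any history length.

```python
import math
import random

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rnn_step(x, h_prev, W_x, W_h, b):
    """Combine the current input with the previous hidden state."""
    a = matvec(W_x, x)
    r = matvec(W_h, h_prev)
    return [math.tanh(ai + ri + bi) for ai, ri, bi in zip(a, r, b)]

def encode(xs, W_x, W_h, b):
    """Process a sequence one time slice at a time, starting from zeros."""
    h = [0.0] * len(b)
    for x in xs:
        h = rnn_step(x, h, W_x, W_h, b)
    return h

rng = random.Random(0)
input_size, hidden_size = 3, 4
W_x = [[rng.uniform(-1, 1) for _ in range(input_size)] for _ in range(hidden_size)]
W_h = [[rng.uniform(-1, 1) for _ in range(hidden_size)] for _ in range(hidden_size)]
b = [0.0] * hidden_size

short = encode([[1.0, 0.0, 0.0]], W_x, W_h, b)
longer = encode([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]], W_x, W_h, b)
```

The hidden state has the same size whether one word or three words have been read.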

Training An RNN

- Training data:

- $x$ token id of $word_{t-1}$

- $y$ token id of $word_t$

- Input: $x + \text{hidden state}_{t-1}$

- Output: $ \langle log P(w_1), …, log P(w_{\lvert \mathcal{V}\lvert}) \rangle$

- Cross entropy loss

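- loss = $-\log P(w_t)$, the negative log probability that the model assigns to the true next word

- A loss close to 0 means the model assigned a probability close to 1 to the correct word; the larger the loss, the less probability the correct word received.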

So, once you have trained the network, how do you start prediction?

- Start with a word $(word_t)$ and an empty hidden state (all zeros)

- Generate a distribution: $ \langle log P(w_1), …, log P(w_{\lvert \mathcal{V}\lvert}) \rangle$

- $word_{t+1}$ is the argmax of the distribution (i.e the one with the highest score/probability)

- Feed the output back into the neural network for the next time slice $t+1$.

- In other words use generated word $w_{t+1}$, plus the new hidden state as input to produce word $w_{t+2}$

- Repeat until you are done

Now, what else can we do to improve the generation of the neural network?

Generative Sampling

The problem with argmax is that it can sometimes get stuck in a local maximum, such as some words always having high scores. Instead of argmax, we can do multinomial sampling from $\langle P(w_1),…,P(w_{\lvert \mathcal{V} \lvert}) \rangle$, i.e. pick each word with a chance equal to its probability in the distribution.

For example:

- History = A dark and stormy, what comes next?

- So suppose you output words with [(night,0.4), (sea, 0.1), …]

- You will pick night with 0.4, sea with 0.1, and so on.

- This works if your prediction is nicely peaked around certain words

However, sometimes multinomial sampling can appear too random, especially when there are a lot of options with similar probabilities.

- Can we “train” the neural network to be more “peaky”? E.g instead of $[0.1,0.1,0.1,…]$ we want $[0.8, 0.01,…]$

To achieve this, we can introduce a new variable called temperature, $T \in (0,1]$ and sample from:

\[norm \bigg(\bigg\langle \frac{log P(w_1)}{T}, ..., \frac{log P(w_{\lvert \mathcal{V} \lvert})}{T}\bigg\rangle\bigg)\]

Divide every log probability score by this temperature value, and then normalize (exponentiate and divide by the sum, as in softmax) so that the results are between 0 and 1 and sum to 1. Let’s observe what will happen:

- Dividing by $T=1$ means the distribution is unchanged

- Dividing by a number $T <1$ means all the scores grow in magnitude, which widens the gaps between them

- As temperature goes to 0, the gap between the highest score and the rest goes to infinity, so after normalization the highest-scoring word gets nearly all of the probability.

- This will look / act more like argmax.
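The effect of temperature can be sketched in plain Python as follows. The distribution is the made-up one from the "dark and stormy" example extended to four words, and `apply_temperature` and `sample` are illustrative names, with normalization assumed to be a softmax.

```python
import math
import random

def apply_temperature(log_probs, T):
    """Divide log probabilities by T, then renormalize with a softmax."""
    scaled = [lp / T for lp in log_probs]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    z = sum(exps)
    return [e / z for e in exps]

def sample(probs, rng):
    """Multinomial sampling: pick index i with probability probs[i]."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

probs = [0.4, 0.3, 0.2, 0.1]                  # hypothetical next-word distribution
log_probs = [math.log(p) for p in probs]

same = apply_temperature(log_probs, 1.0)      # T = 1 leaves it unchanged
sharp = apply_temperature(log_probs, 0.1)     # small T makes it "peaky"

rng = random.Random(0)
word_index = sample(sharp, rng)
```

With a small temperature almost all of the probability mass moves onto the most likely word, so sampling behaves more and more like argmax.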

However, an RNN can still make mistakes, as generation from an RNN is an iterative process of feeding outputs back into the neural network as inputs. RNNs also tend to forget parts of the context: it is hard to pack everything into the context vector.

So, LSTM!

Long Short-Term Memory Networks

- Hard to learn how to pack information into a fixed-length hidden state vector

- Every time slice the RNN might re-use part of the vector, overwriting or corrupting previous information.

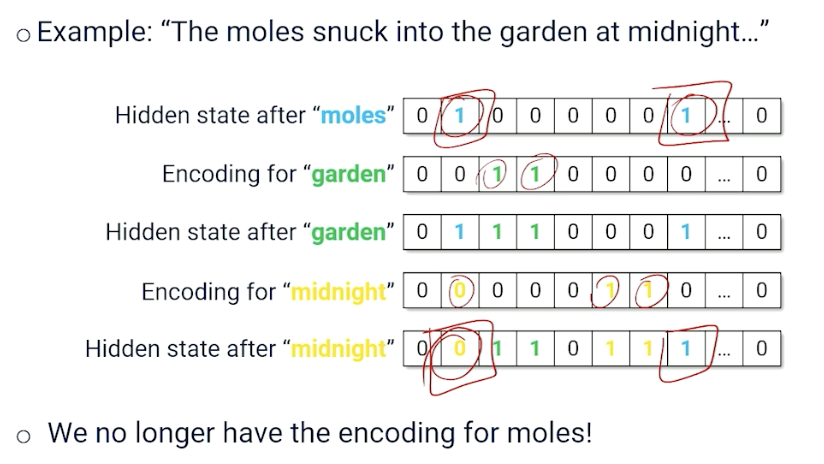

An example (The vector here represents an embedding):

If you notice, the hidden state after midnight has overwritten a piece of information here so that we can capture midnight; so both midnight and moles need to use index 1; we effectively lose the information about moles.

That brings us to LSTM. It would be nice if the neural network could learn:

- What is worth forgetting

- Which part of the new word input is worth saving

- This means we can maintain a “better/valid” hidden state longer.

How does Long short Term Memory (LSTM) achieve this?

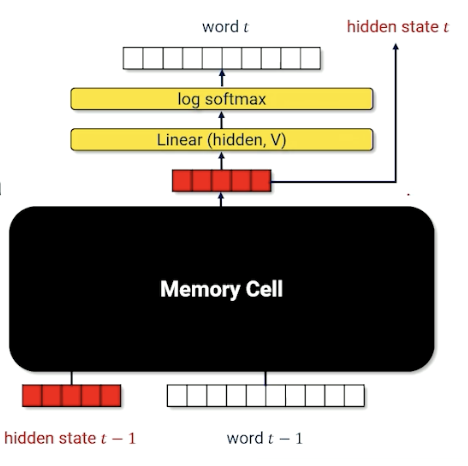

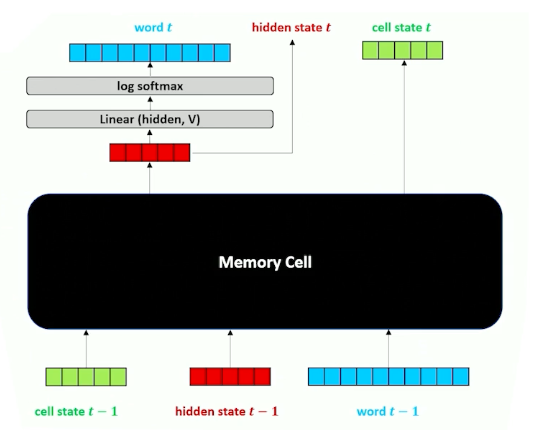

- Replace internal parts of a RNN encoder with a specialized module called a memory cell.

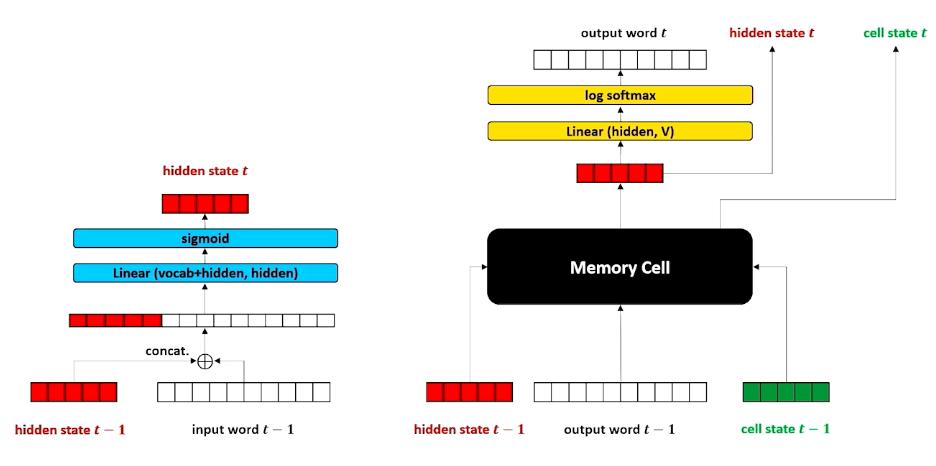

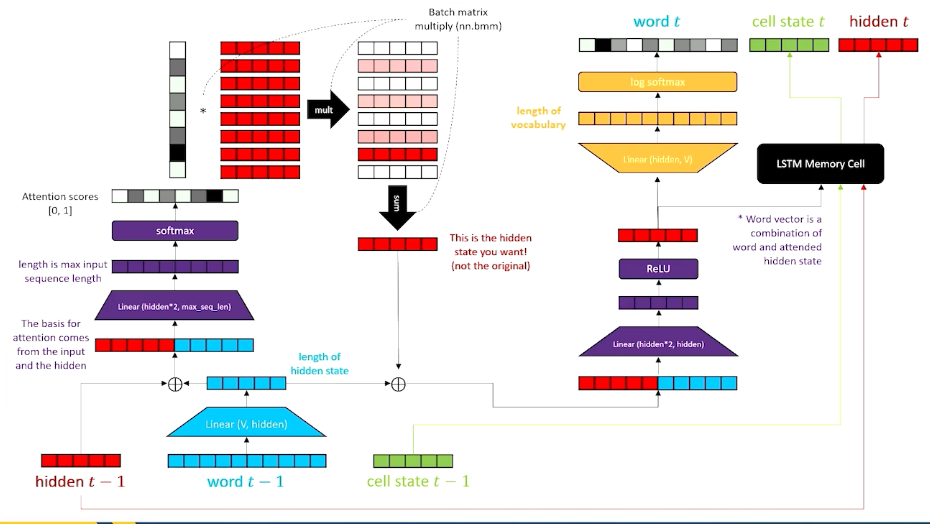

LSTM: A Visual Walkthrough

Let’s first look at the computational graph:

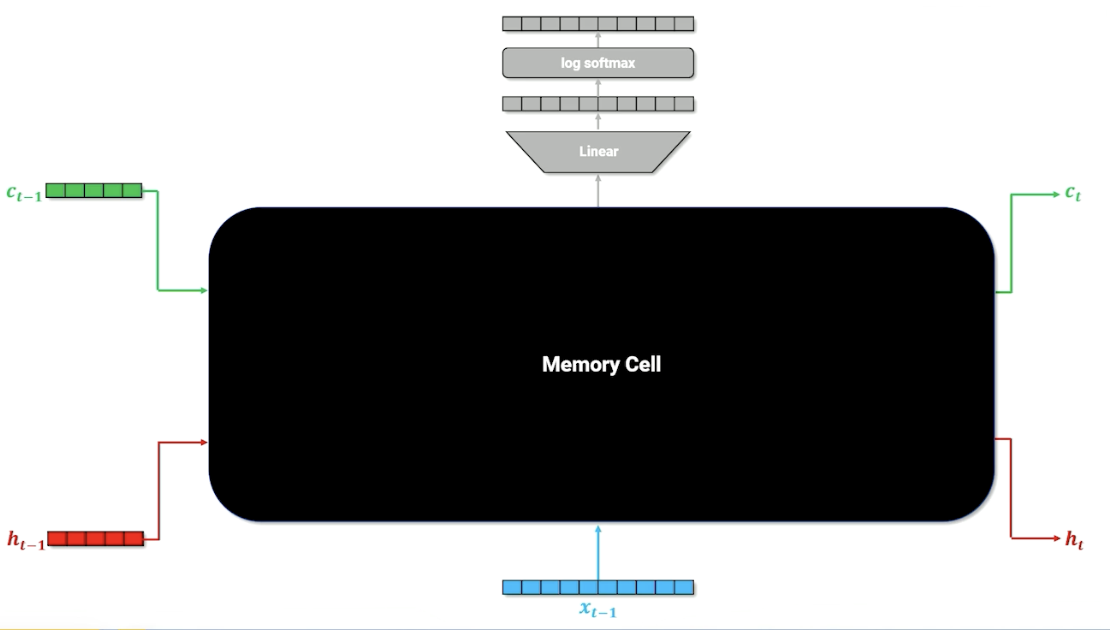

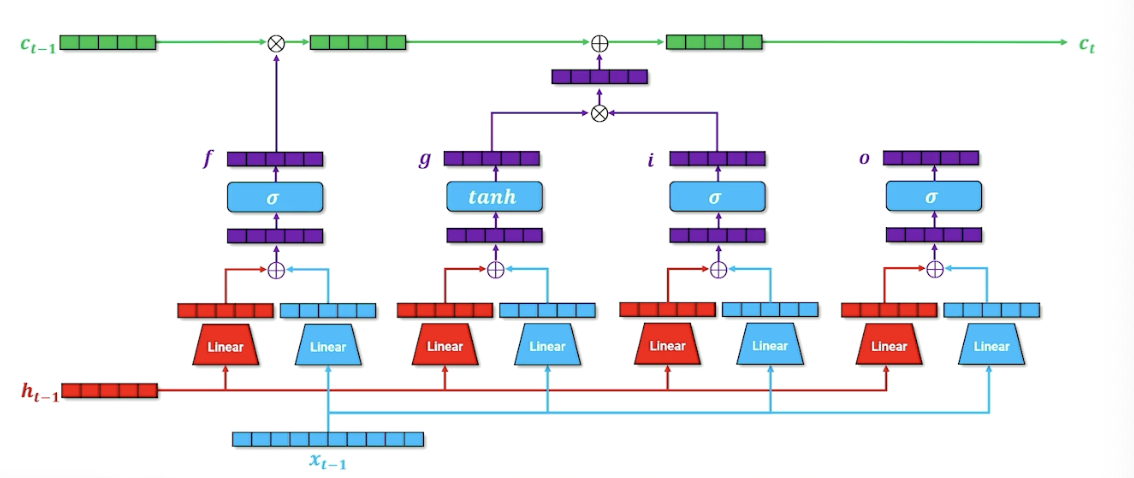

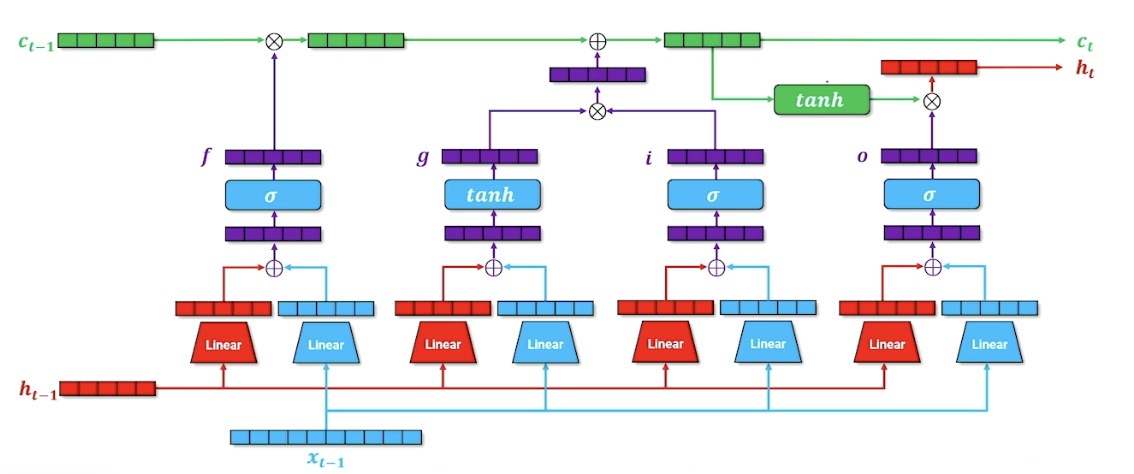

$x$ is the one-hot vector of the word, and $h$ is still the context/history, as with the RNN. There is now an additional parameter $c$, the cell memory. Let’s look deeper into this cell memory (black box) to know what is going on:

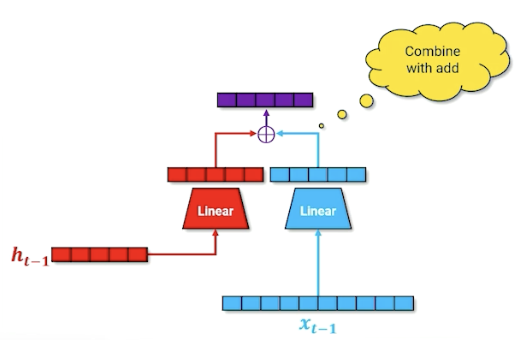

Remember, the linear layer compresses the one-hot vector into something useful. We also do the same for the hidden layer and combine them:

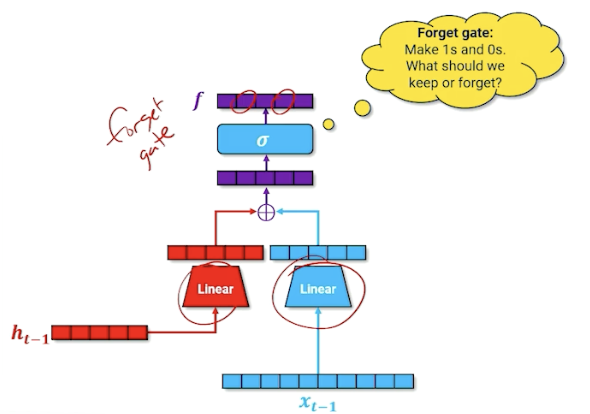

Then, we take that and run it through a sigmoid (which squashes every value into the range $[0,1]$, pushing many of them close to 0 or 1). We are going to call this the forget gate.

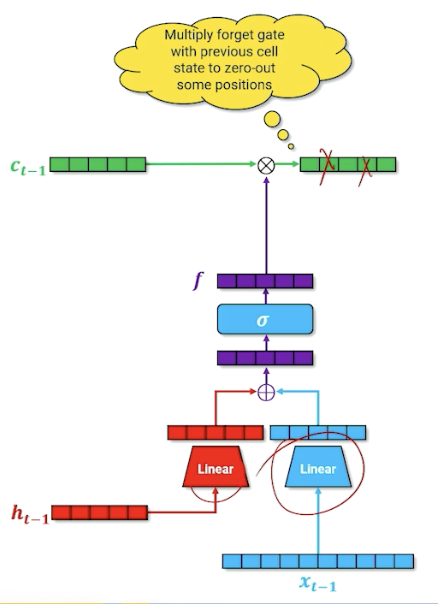

We take the output of the forget gate and multiply it with the cell memory. Think of it as “deleting” information from the cell memory before it is carried into the next time step; this is how the neural network learns what to forget.

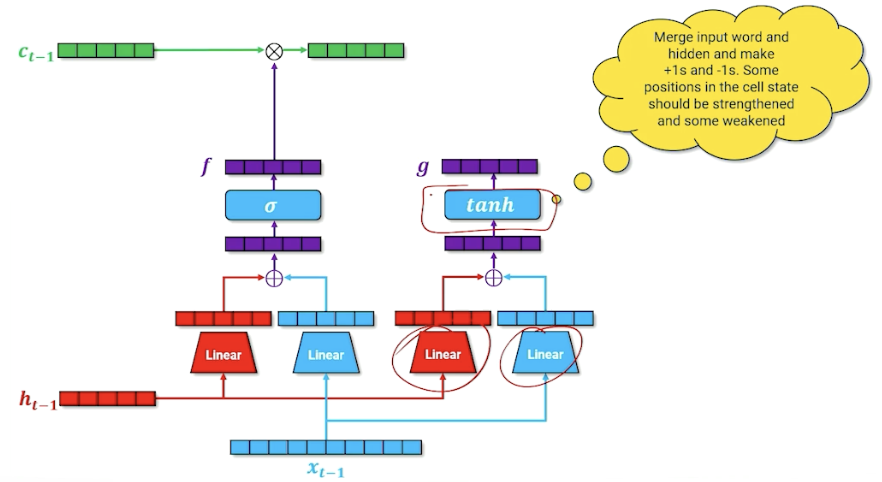

In addition, we are going to build another parallel structure inside of our neural network that is going to do our cell memory update. Note that the linear layers will learn a different set of parameters. (For those familiar, this is part of the input gate)

The goal is to produce a new candidate cell memory, and for this we do not use a sigmoid but tanh. To recall, tanh produces numbers in $[-1,1]$ instead of the sigmoid's $[0,1]$. So what we are doing here is merging our word and our hidden state to try to figure out which things should be $+1$ or $-1$. We can interpret this as follows: it is going to be introduced to our cell memory from the previous state, saying which entries in the cell should be strengthened or weakened by adding/subtracting up to one.

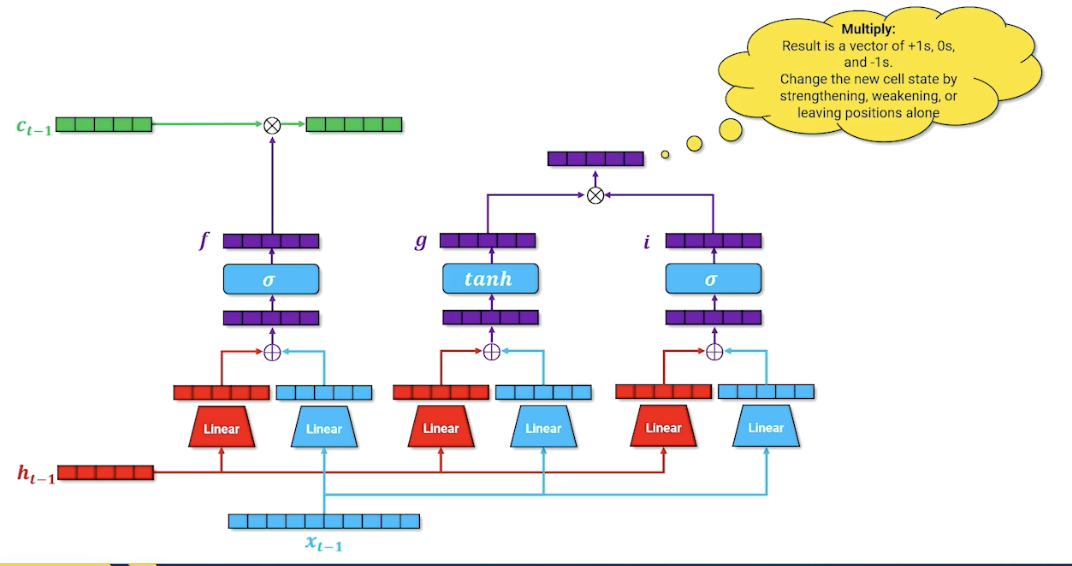

We are not going to add this to the cell memory yet. Instead, we introduce another parallel structure with another gate that essentially considers whether this word $x$ should be added to the cell memory. (For those familiar, this is called the input gate). Is this word important enough to be carried on, or can it be ignored?

We take the input gate $i$ and multiply by the new cell memory $g$:

This resulting vector has not just plus ones and negative ones but also zeros, so it can strengthen or weaken entries of our cell memory, or leave them unchanged. We finally take this and add it to the forget-gated $c_{t-1}$ to form the new $c_t$.

For one last time, we have another parallel block (For those familiar, this is the output gate). The idea here is to decide what needs to be part of the next hidden state, which is going to be used by the decoder and is also going to be passed on to the next time step in our recurrence.

We are also going to incorporate our cell memory: what goes into our hidden state is a combination of what the output gate lets through and whatever the cell memory says. The second tanh here squishes everything back down to $[-1,1]$ (the lecture mentioned $[0,1]$, but the range of tanh is $[-1,1]$), and we combine it with our output gate to produce our new hidden state.

LSTM: A Mathematical Walkthrough

The forget gate can be written as:

\[f = \sigma(W_{x,f}x + b_{x,f} + W_{h,f}h + b_{h,f})\]

The input gate / cell memory can be written as:

\[\begin{aligned} i &= \sigma(W_{x,i}x + b_{x,i} + W_{h,i}h + b_{h,i}) \\ g &= tanh(W_{x,g}x + b_{x,g} + W_{h,g}h + b_{h,g}) \end{aligned}\]

Then update the cell state $c$ (here $\times$ is an element-wise product and $t$ indexes the time step):

\[c_t = (f \times c_{t-1}) + (i \times g)\]

Then, we can now calculate our output gate as well as update our hidden state:

\[\begin{aligned} o &= \sigma(W_{x,o}x + b_{x,o} + W_{h,o}h + b_{h,o}) \\ h_t &= o \times tanh(c_t) \end{aligned}\]

In summary,

- The full LSTM encoder-decoder network containing the memory cell

- Hidden state summarizes the history of the text

- Cell state is information needed for the memory cell to do its job, passed from time step to time step
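The gate equations from the mathematical walkthrough can be sketched as one time step in plain Python. This is an illustrative sketch, not a trained model: the two bias terms of each gate are merged into a single bias, the helper names (`affine`, `lstm_step`, `zero_gate`) are hypothetical, and all weights are set to zero so the result is easy to check by hand.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(gate, x, h):
    """Compute W_x x + W_h h + b for one gate (biases merged into one b)."""
    W_x, W_h, b = gate
    return [
        sum(w * xi for w, xi in zip(row_x, x))
        + sum(w * hi for w, hi in zip(row_h, h))
        + bias
        for row_x, row_h, bias in zip(W_x, W_h, b)
    ]

def lstm_step(x, h_prev, c_prev, params):
    f = [sigmoid(z) for z in affine(params["f"], x, h_prev)]    # forget gate
    i = [sigmoid(z) for z in affine(params["i"], x, h_prev)]    # input gate
    g = [math.tanh(z) for z in affine(params["g"], x, h_prev)]  # candidate memory
    o = [sigmoid(z) for z in affine(params["o"], x, h_prev)]    # output gate
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

def zero_gate(input_size, hidden_size):
    """All-zero parameters for one gate: (W_x, W_h, b)."""
    return ([[0.0] * input_size for _ in range(hidden_size)],
            [[0.0] * hidden_size for _ in range(hidden_size)],
            [0.0] * hidden_size)

params = {name: zero_gate(3, 2) for name in "figo"}
h, c = lstm_step([1.0, 0.0, 0.0], [0.0, 0.0], [2.0, -4.0], params)
```

With zero weights every sigmoid gate outputs 0.5 and the candidate memory is 0, so the new cell state is simply half of the old one, and the hidden state is half of its tanh.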

As a side note, usually for LSTM, we refer to the entire block as one LSTM block and do not map out the internals of it.

LSTM cells pass extra cell state information that does not try to encode text history but information about how to process that history - meta knowledge about the text history. LSTMs were SOTA for a long time and are still useful when handling recurrent data of indeterminate length, and they hold “short-term” memory longer. But an LSTM can still get overwhelmed trying to decide what to encode into the context vector as the history gets longer and longer.

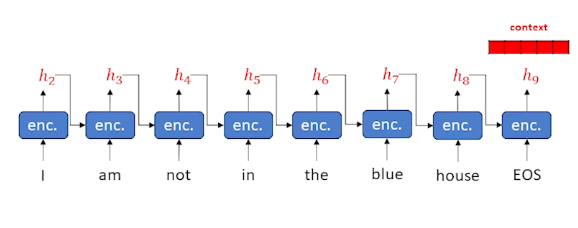

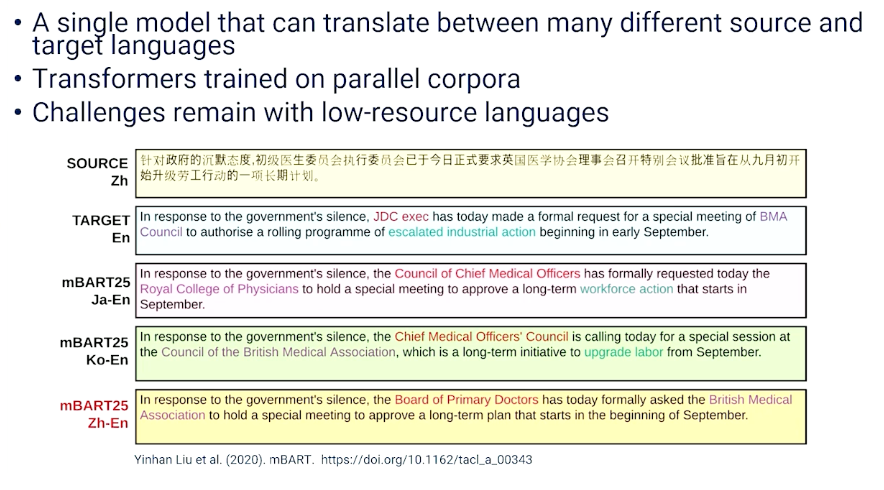

Sequence-to-Sequence Models

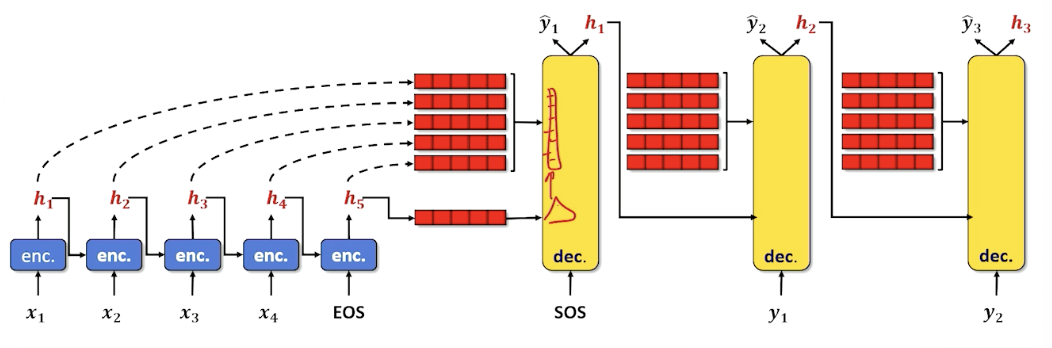

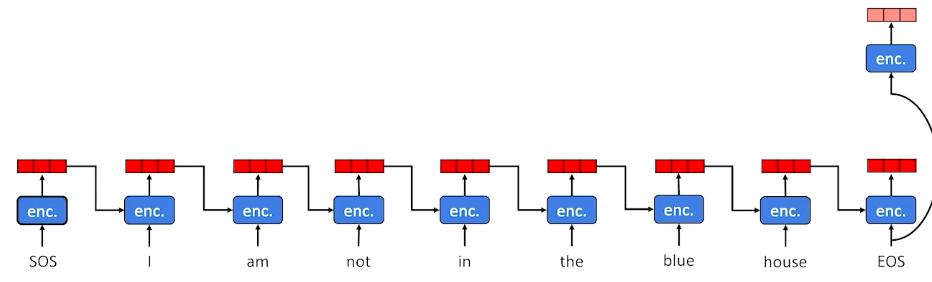

A special class of model that is built on top of LSTM. Recall the structure of LSTM:

This is because sometimes data is of the form:

- $x_i = SOS\ x_{i,1}, x_{i,2}, …, x_{i,n}\ EOS$

- $y_i = SOS\ y_{i,1}, y_{i,2}, …, y_{i,n}\ EOS$

- Each x,y is a sequence of words that are clumped together and are considered a single whole.

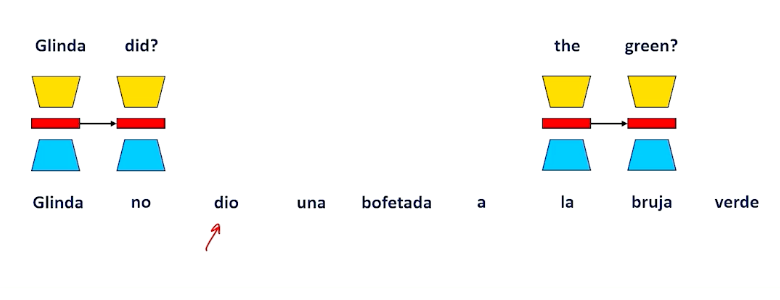

The reason why this is a special case is because this comes up very often especially in translation. So translation is usually about converting a phrase (or a sequence) in one language into another language.

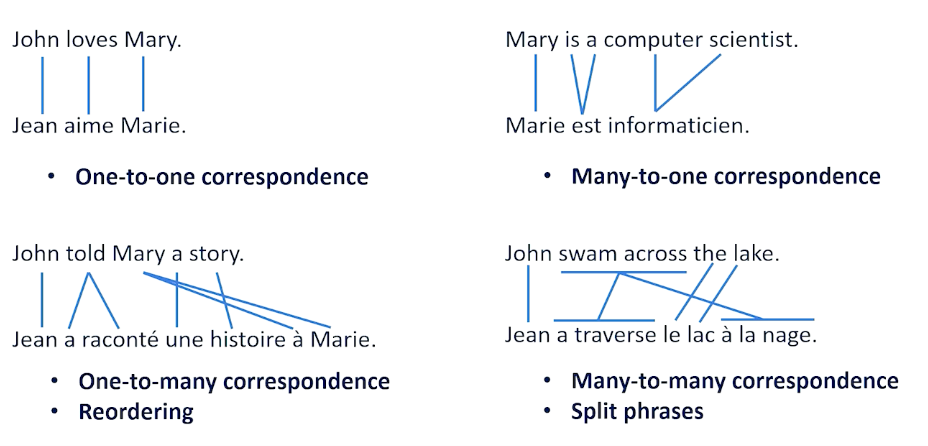

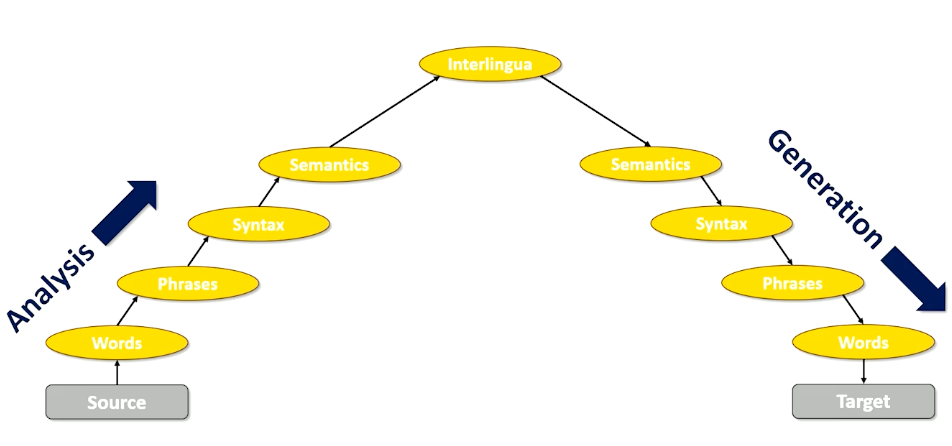

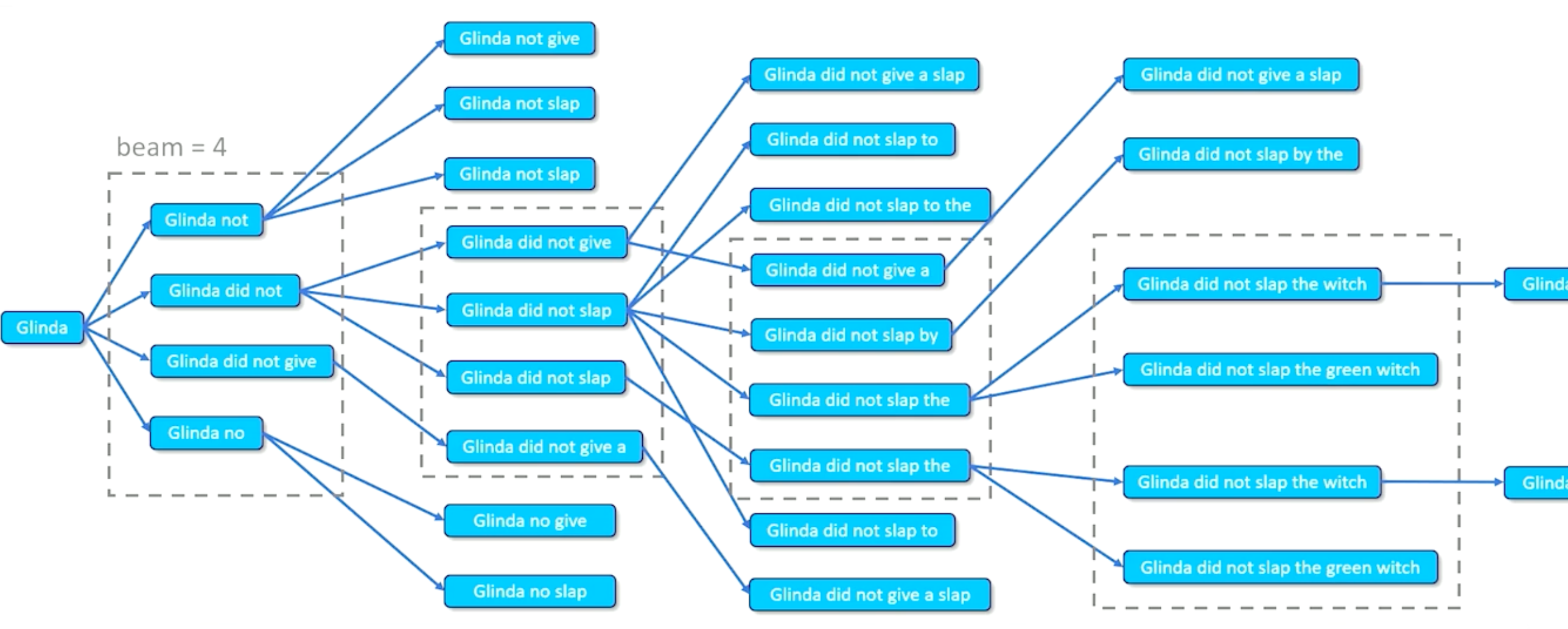

Translation also comes with its own problems, such as the phrasing, words not having a one-to-one mapping, etc. Sometimes we need to look at the full sequence to be better informed on our prediction. In order to handle sequence to sequence data:

- sweep up an arbitrary input sequence and encode it into a hidden state context vector.

- Good for picking up on negations, adjective order, etc. Because the whole input sequence is in the context vector.

- Then decode it into an arbitrary length sequence

- Stop decoding when EOS is generated

Sequence-to-Sequence Example

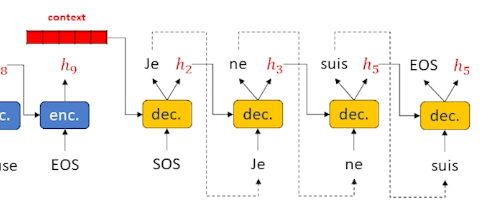

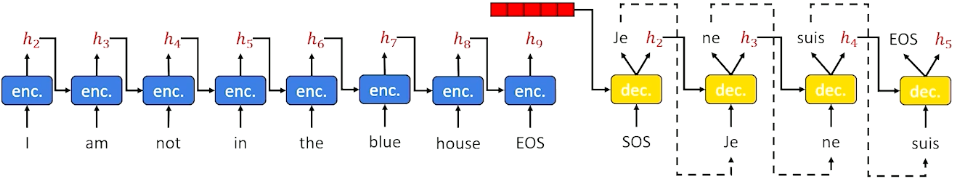

Consider the following example where we keep finding words and update our hidden state:

Now, we take that context, and start sending it into the decoder:

And when we see the EOS tag we can stop.

This is a little different from the previous LSTM where we did it word by word. Essentially, we have taken the decoder and put it to one side. The decoder needs some sort of output word (as opposed to an input word) as well as a hidden state that keeps track of what is going on. As a result, we need to put another encoder in the decoder layer, since the decoder cannot work directly on words and hidden state. So in a sequence-to-sequence model, the decoder itself has an encoder inside. The encoder (within the decoder) is usually an LSTM as it works really well, and in most diagrams the cell state is omitted to keep the diagrams clean.

In summary:

- Separate the encoder and decoder

- Change the decoder to take an output word and a hidden state

- Allow us to do one of the most important things in modern neural language modeling: Attention

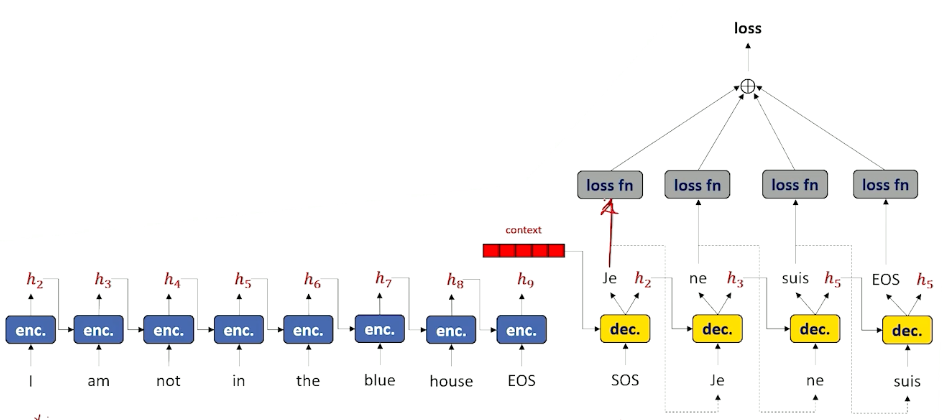

Sequence-to-Sequence Model Training

How do we train it when we have a separate encoder and decoder?

Now that they are separate, our training loop is going to have two parts as well. It is going to iterate through the encoder and then it is going to iterate through the decoder.

For the encoder:

- Set hidden state $h_0^{enc} = \vec{0}$

- Do until $x_j = EOS$

- Encode $x_j$ and $h_j^{enc}$ to get $h_{j+1}^{enc}$

- Increment $j$

For the decoder:

- Set $x_0^{dec} = SOS$

- Set $h_0^{dec} = h_{j+1}^{enc}$

- Do until $\hat{y}_j^{dec} = EOS$ or until we hit max length

- Decode $x_j^{dec}$ and $h_j^{dec}$ to get $\hat{y}_{j+1}^{dec} \text{ and } h_{j+1}^{dec}$

- Set $x_{j+1}^{dec} = \hat{y}_{j+1}^{dec}$

- Because this is training: loss = loss + loss_fn($\hat{y}_{j+1}^{dec}, y_{j+1}$)

- Increment $j$

Once we have done all of this, back propagate loss. But this time our loss is the aggregated loss.

Visually we can look at it like this (the loss reflects the entire sequence at once instead of word by word):

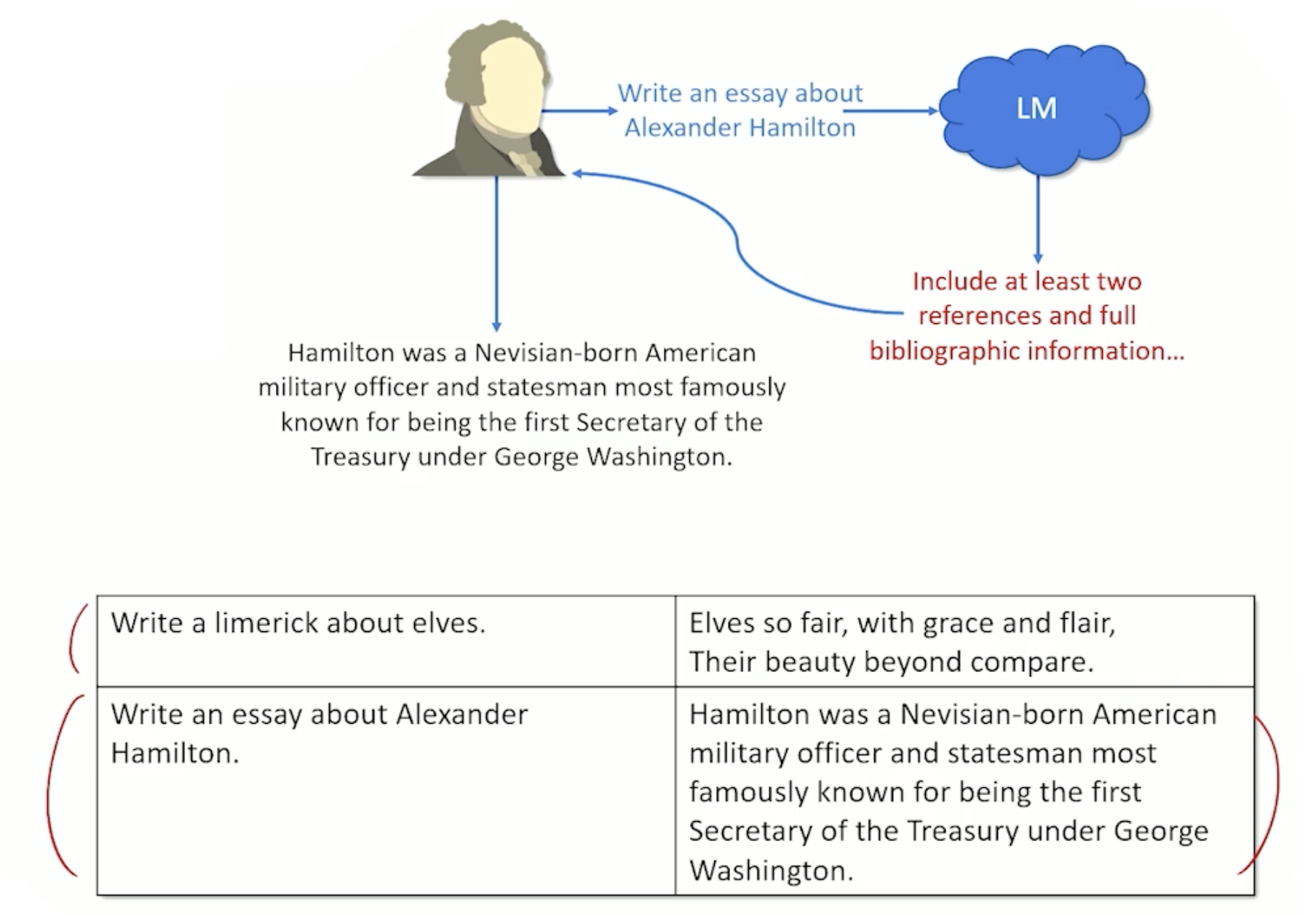

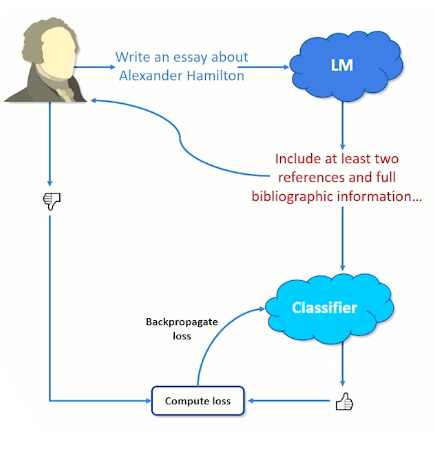

Teacher Forcing

We are going to introduce a new trick to make the neural network learn even better, known as teacher forcing.

- Instead of using the decoder’s output at time $t$ as the input to the decoder at time $t+1$…

- Pretend our decoder gets the output token correct every time.

- Use the true training label $y_{i,t}$ as input to the decoder at time $t+1$

So for our decoder, It looks like this instead:

- Do until $y_j=EOS$

- Decode $y_j$ and $h_j^{dec}$ to get $\hat{y}_{j+1}^{dec} \text{ and } h_{j+1}^{dec}$

- Set $x_{j+1}^{dec} = y_{j+1}$, i.e. ignore the prediction and set to the true value

- However, for our loss function, we still use the prediction:

- loss = loss + loss_fn($\hat{y}_{j+1}^{dec}, y_{j+1}$)

- Increment $j$

This is good because in the early phase of training our decoder is getting most of the words wrong, which compounds the problem - i.e. your next word is built on top of a previous wrong word. With teacher forcing, we just use the prediction to compute the loss but use the true word to proceed.

We can get a little more fancy with this:

- Can perform teacher forcing some percentage of the time and feed in decoded tokens the remaining percentage of the time.
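The mixed strategy can be sketched as a decoding loop that flips a biased coin at each step. Everything here is a toy stand-in: `always_wrong` is a hypothetical decoder that never predicts correctly, the 0/1 mismatch count stands in for a real loss function, and `teacher_forcing_ratio` is an assumed name for the percentage.

```python
import random

def run_decoder(targets, decoder_step, teacher_forcing_ratio, rng, sos="<SOS>"):
    """Decode one sequence, choosing the next input by teacher forcing or not."""
    x, h = sos, None
    fed_inputs, loss = [], 0
    for y_true in targets:
        fed_inputs.append(x)
        y_hat, h = decoder_step(x, h)
        # The loss always compares the prediction with the true label.
        loss += 0 if y_hat == y_true else 1
        # Teacher forcing: feed the true label; otherwise feed the prediction.
        x = y_true if rng.random() < teacher_forcing_ratio else y_hat
    return fed_inputs, loss

def always_wrong(x, h):
    """Toy decoder that always predicts the same wrong token."""
    return "oops", h

targets = ["le", "chat", "<EOS>"]
forced, loss_forced = run_decoder(targets, always_wrong, 1.0, random.Random(0))
free, loss_free = run_decoder(targets, always_wrong, 0.0, random.Random(0))
```

With a ratio of 1.0 the decoder always sees the true previous word even though its own predictions are wrong; with 0.0 its mistakes are fed straight back in. The loss is the same in both cases here only because the toy decoder ignores its input.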

In summary:

- Teacher forcing makes training more efficient

- Sequence to sequence works for data that can be split into discrete input and output sentences:

- translation

- X and Y can be adjacent sentences to learn to generate entire sentences at once

Moving on to attention next - how we can make sequence to sequence a little bit more intelligent by being smarter about how it looks back at its own encoder in order to help figure out what to do in specific time slices of the decoder step. This will help with some of the issues with forgetting and overwriting of our context vectors.

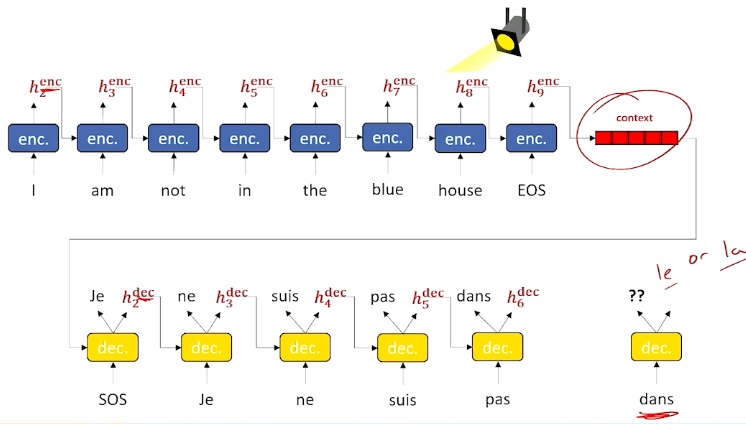

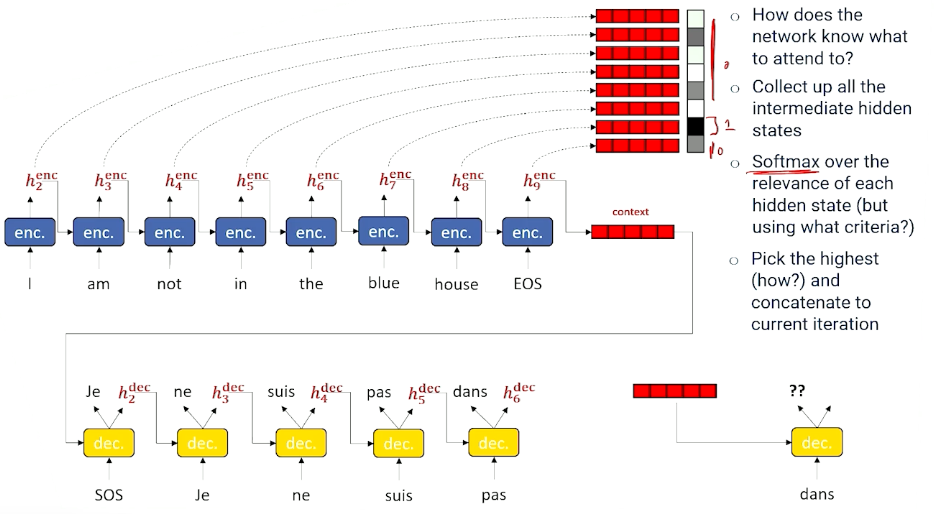

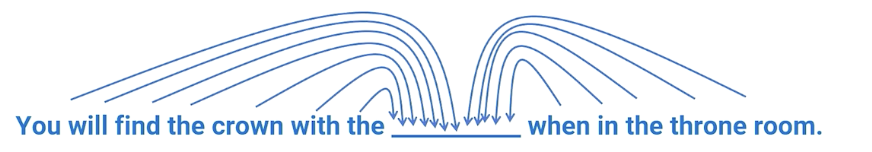

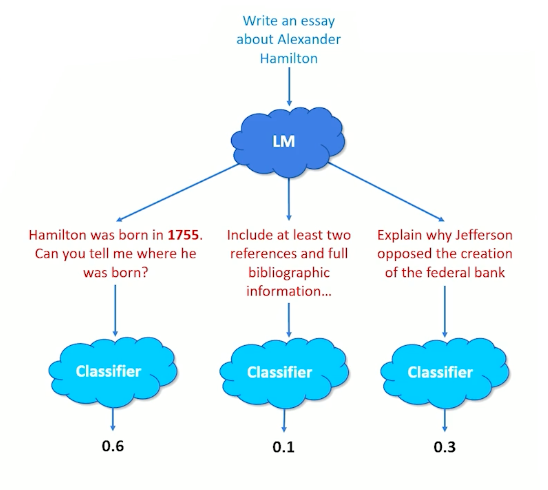

Attention, part 1

Finally, Attention is all you need.

Recall that:

- Parts of the hidden state are continuously overwritten and can forget

- Do we need to throw away all the intermediate hidden states during sequence encoding?

- Attention: Allow the decoder to look back through the input and focus a “spotlight” on various points that will be most relevant to making the prediction of the next output token.

- Pull the attended information forward to the next prediction.

It would be great if, when predicting the next word at the decoder stage, the network could look back (shine a spotlight) and identify what was encoded at a particular time slice. If the network knew that, it might be able to bring this encoder information forward and make a much better choice.

The next question is, how do we know which time slice to look at then? Which section should we pay attention to?

- Grab every single encoder output hidden state and save them and stack them up in a big Q.

- Decide which is the most important to us, so we have a tool for doing selection - Using softmax!

- But what criteria to use for softmax? We still need to figure this out.

- Assuming we are able to do so, take the best one, and use it as input for the next decoder step.

- And we do this for every step.

Attention, part 2

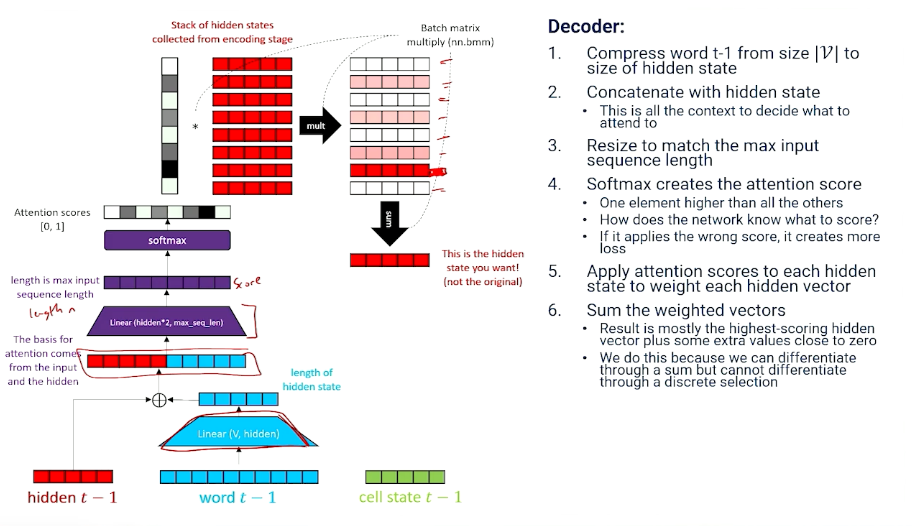

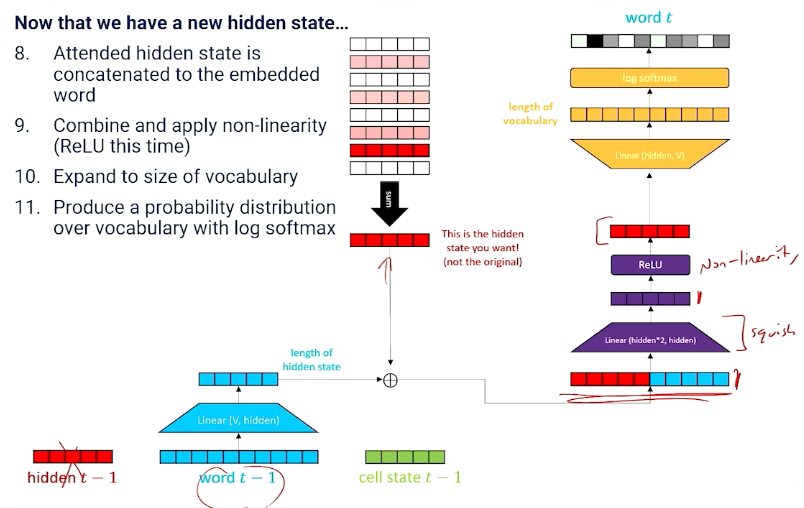

Let’s take a deeper look now - How we get attention to the decoder:

To summarize,

- Attention: dot product (inner product) of a softmaxed context vector and a matrix of candidates.

- Everything that might be relevant to choosing what to attend is packed into the context vector

- Network learns how to generate the attention score with linear transformation.
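A minimal sketch of the "softmax, then weighted combination of candidates" idea in plain Python. As a simplifying assumption, the attention scores here are plain dot products between the decoder hidden state and each saved encoder state, whereas the notes describe scores produced by a learned linear transformation; `attend` and the toy vectors are illustrative.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def attend(decoder_hidden, encoder_states):
    """Score every saved encoder state, softmax the scores, and blend the states."""
    scores = [sum(q * k for q, k in zip(decoder_hidden, h)) for h in encoder_states]
    weights = softmax(scores)
    size = len(encoder_states[0])
    attended = [sum(w * h[j] for w, h in zip(weights, encoder_states))
                for j in range(size)]
    return weights, attended

# One saved hidden state per encoder time slice (toy values).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
weights, attended = attend([4.0, 0.0], encoder_states)
```

The weights sum to 1, and the encoder time slice that best matches the decoder state receives the largest share of the "spotlight".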

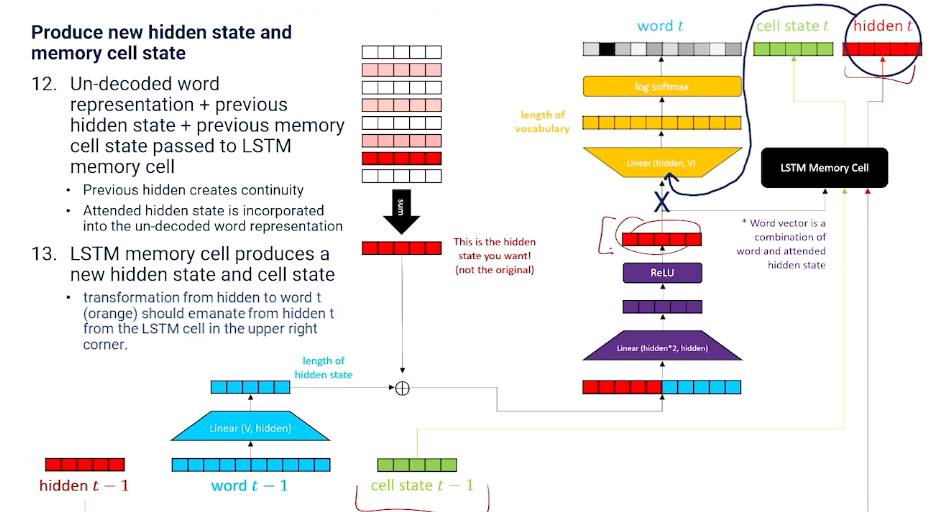

Now, on to the decoder part (we have only finished the attention portion):

We still need to produce new hidden state and memory cell state!

And we do so with an LSTM memory cell layer.

Putting it all together this is what we get:

In summary,

- Attention is the mathematical equivalent of “learn to choose from inputs” and is applied in the decoder.

- Attention does not require sequence to sequence, it can be applied to a regular LSTM if there is a buffer of previous words and intermediate hidden states to draw on.

Different Token Representations

Taking a slight detour here to talk about token representation.

- Up to now we have assumed a token is a word and the vocabulary is a very large number of words.

- Alternatively, a token could be a single character: the vocabulary would be letters, digits and punctuation, $\mathcal{V} = [a,b,c,…,z,1,2,3,… punctuation]$

- The vocabulary size is small, but the language model has to learn to spell out every word one letter at a time.

There is another way of thinking about tokens, in between letters and full words, called subword tokens.

- Breakdown complicated words into commonly recurring pieces.

- For example “Tokenize” $\rightarrow$ “token” + “ize”

- vocabulary would contain common words, common word roots, suffixes, prefixes, letters and punctuation

- Neural network mostly works on word tokens but occasionally has to learn how to use context to assemble a complex word.

- In the worst case, neural network can learn to spell really rare, complex words from letters.

- A sub-word vocabulary can cover most of English with fewer tokens (~50,000)

- One additional advantage: No need for out-of-vocab (UNK) tokens, as handling these is difficult.
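To illustrate why a subword vocabulary never needs UNK, here is a small sketch that splits a word by greedy longest match against a hand-written vocabulary. Both the greedy strategy and the vocabulary are assumptions for illustration; real tokenizers typically learn their pieces from data with algorithms such as byte-pair encoding.

```python
import string

def subword_tokenize(word, vocab):
    """Repeatedly take the longest piece of the remaining word found in vocab."""
    tokens, start = [], 0
    while start < len(word):
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            raise ValueError(f"cannot tokenize {word!r} at position {start}")
    return tokens

# Hypothetical vocabulary: a few common pieces plus every single letter.
vocab = {"token", "ize", "the"} | set(string.ascii_lowercase)

common = subword_tokenize("tokenize", vocab)
rare = subword_tokenize("zq", vocab)
```

A word built from known pieces becomes a couple of tokens, while a rare string falls back to being spelled out letter by letter instead of turning into UNK.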

Perplexity, part 1

How do we test / evaluate NLP/language models? How do we know when they work well? Even if the loss is low, is it learning what we want it to learn or learning anything useful?

Recall that:

-

Language models are all about modeling fluency: probability ~ fluency.

\[P(W_1=w_1,...,W_{n-1} = w_{n-1},W_{n} = w_{n}) = \prod_{t=1}^n P(W_t = w_t \lvert W_1=w_1,...,W_{t-1}=w_{t-1} ; \theta)\] That is: The probability of every word is conditioned on its history

Now, perplexity:

- A measure of how “surprised” (perplexed) a model is by an input sequence

- A good language model is one that is good at guessing what comes next

Branching factor

- Branching factor: number of things that are likely to come next.

- If all options are equally likely, then branching factor = number of options

- But if different options have different probabilities, then:

- Branching factor = $\frac{1}{P(option)}$

- E.g. flipping a coin where $P(heads) =0.5$, then branching factor is $\frac{1}{0.5}=2$.

- If $P(heads) = 0.75$ then branching factor is $\frac{1}{0.75}=1.33$.

Coming back:

- The probability of a word differs given its history $P(w_t \lvert w_1, …, w_{t-1})$

- So the branching factor will be $\frac{1}{P(w_t \lvert w_1, …, w_{t-1})}$

- The more plausible alternatives, the more likely the model will make the wrong choice.

Ok, but we have introduced a new problem, consider:

- Probability of a sequence of words (or the probability of this sentence) is:

\[P(w_1,...,w_n) = \prod_{t=1}^n P(w_t \lvert w_1,...,w_{t-1})\]

- But longer sequences will likely have a smaller probability, which makes comparisons across lengths unfair

- So we need to normalize by length by taking the $n^{th}$ root, which is done by using the geometric mean:

\[P_{norm}(w_1,...,w_n) = \prod_{t=1}^n P(w_t \lvert w_1,...,w_{t-1})^{\frac{1}{n}}\]

- And so the branching factor of a sequence of words:

\[\prod_{t=1}^n \frac{1}{P(w_t \lvert w_1,...,w_{t-1})^{\frac{1}{n}}}\]

- Perplexity is just the branching factor of a sequence of words:

\[perplexity(w_1,...,w_n) = \prod_{t=1}^n \sqrt[n]{\frac{1}{P(w_t \lvert w_1,...,w_{t-1} ; \theta)}}\]

Perplexity, part 2

Taking the previous equation, and transforming it into log space:

\[\begin{aligned} perplexity(w_1,...,w_n) &= exp\bigg( \sum_{t=1}^n log(1) - \frac{1}{n}log P(w_t \lvert w_1, ..., w_{t-1};\theta) \bigg) \\ &= exp\bigg( \sum_{t=1}^n - \frac{1}{n}log P(w_t \lvert w_1, ..., w_{t-1};\theta) \bigg) \end{aligned}\]

Perplexity is thus the exponentiated, length-normalized sum of the per-word cross entropy losses: so now, we have established a relationship between perplexity and loss.

Therefore, in the case of RNN:

- Recurrent neural networks generate one time slice at a time

- Perplexity is the exponentiated negative log likelihood loss of the neural network:

- $perplexity(w_t) = exp(-log P(w_t \lvert w_1,…,w_{t-1};\theta)) = exp( Loss(\hat{y},target))$
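The log-space formula can be checked numerically with a short sketch. The per-word probabilities below are made up, and `perplexity` and `perplexity_product_form` are illustrative names for the log-space and $n^{th}$-root forms respectively.

```python
import math

def perplexity(token_probs):
    """exp of the average negative log probability of each word given its history."""
    n = len(token_probs)
    avg_nll = -sum(math.log(p) for p in token_probs) / n
    return math.exp(avg_nll)

def perplexity_product_form(token_probs):
    """The same quantity written as a product of n-th roots of 1 / P."""
    n = len(token_probs)
    result = 1.0
    for p in token_probs:
        result *= (1.0 / p) ** (1.0 / n)
    return result

coin = perplexity([0.5] * 10)        # every word is a fair coin flip
mixed = [0.9, 0.1, 0.5]              # hypothetical per-word probabilities
log_form = perplexity(mixed)
product_form = perplexity_product_form(mixed)
```

A model that assigns probability 0.5 to every correct word has perplexity 2 regardless of sequence length, which matches the coin-flip branching factor.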

To recap,

- Perplexity is exponentiated loss, so the lower the better

- Perplexity is how surprised the language model is to see a word in a sentence

- More possible branches means model is more likely to take the wrong branch

- Perplexity($w_t$) =$x$ means the odds of getting the right token is equivalent to rolling a 1 on an $x$-sided die.

- Example: Perplexity($w_t$) = 45 means the model has a 1/45 chance of getting the right token.

- But if it was 2 then that means it has 1/2 chance of getting the right token.

With that:

- During training, we look for loss to go down

- During testing (on unseen data), we look for a low perplexity

- The best language models in the world achieve a perplexity of ~20 on internet corpora

- Perplexity doesn’t mean non-sense - It means that the model makes a choice other than one that exists in a corpus

- Even if we have a high perplexity, it doesn’t mean only one word is going to make sense and a whole bunch of words are not going to make sense; maybe the top 20 words still make sense or reasonable words, e.g “Why did the chicken cross the road” vs “cross the street”.

- It is still going to be fluent and we are not going to be necessarily upset. It just wasn’t the one perfect word according to some test set.


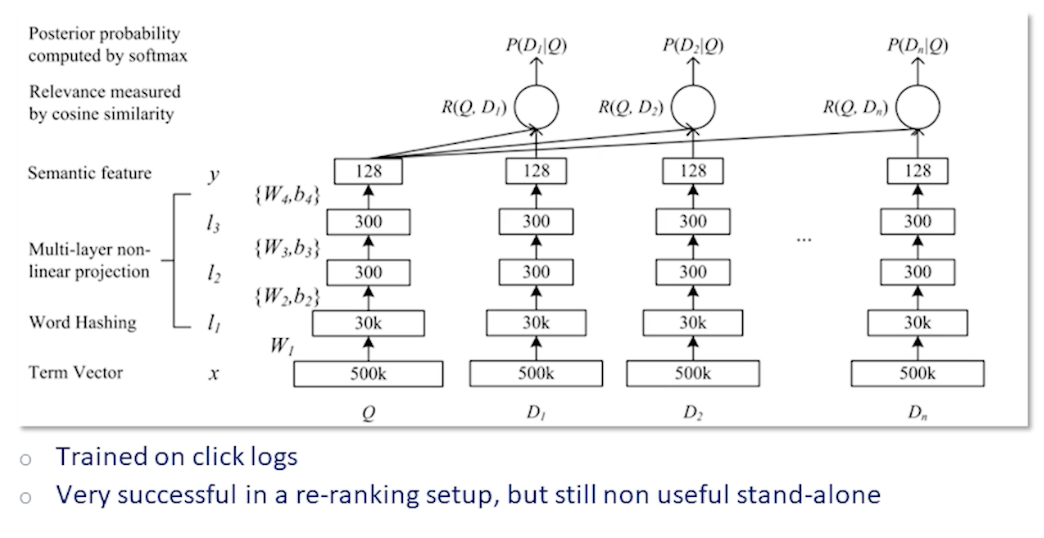

Module 5: Semantics

Document Semantics

- Semantics: What words and phrases mean

- Problem: Neural networks don’t know what words mean

- By extension, neural networks don’t know what a document is about

- Words are just one-hot vectors

So, How do we represent the meaning of a document?

- Multi-hot

- Set a 1 in each position for every word in a document.

- The values are the same but some words are more important than others.

- If you want to figure out if two documents are related to each other you want to rely on words that are effective at distinguishing documents

- Look for ways to determine which words are important

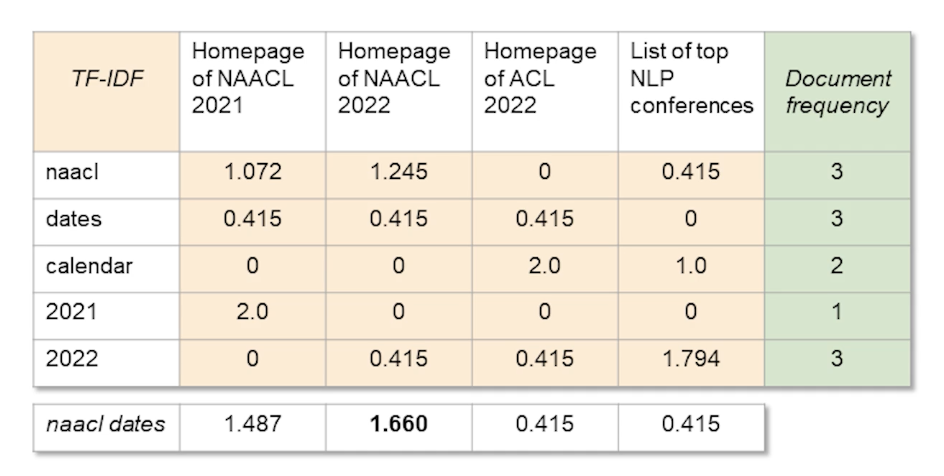

Term Frequency-Inverse Document Frequency

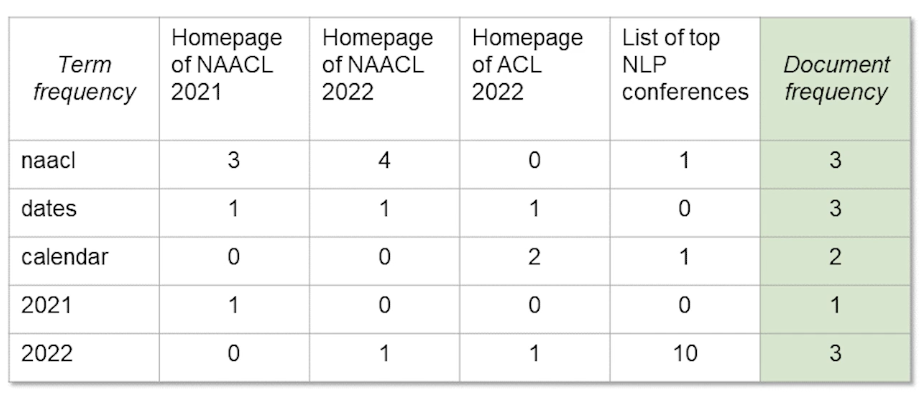

TFIDF - a specific technique for deciding whether two documents are semantically similar to each other without really having the semantics of the words and documents themselves.

- Give weight to each word based on how important it is

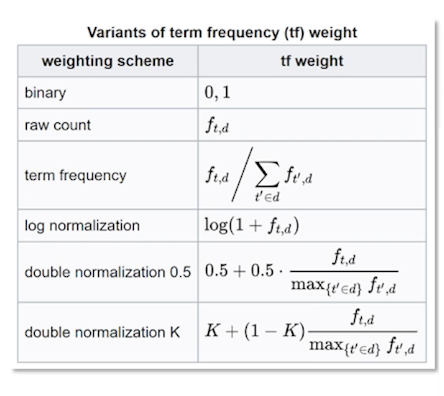

Term frequency : If a word shows up frequently in a document, it must be more important than other words:

\[TF(w,d) = log(1+freq(w,d))\]

- $freq(w,d)$ is how many times $w$ appears in document $d$

- The log forces the TF to grow very slowly as the frequency of a word gets larger.

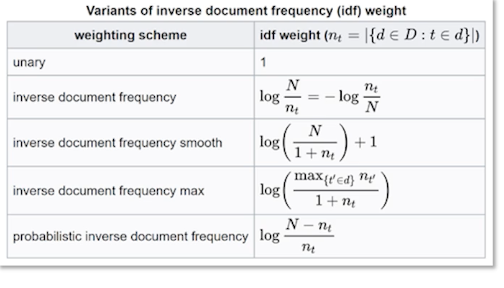

Inverse Document Frequency : Words that are common in all documents are not very important

\[IDF(w,D) = log \bigg( \frac{1 + \lvert D \lvert }{1 + count(w,D)}\bigg)+1\]

- $ \lvert D \lvert$ is the number of documents

- $count(w,D)$ is how many documents in $D$ contains $w$

- As the count increases, the ratio goes towards 1.

So, TF-IDF:

\[TFIDF(w,d,D) = TF(w,d) \times IDF(w,D)\]

- Apply TF-IDF to every word $w$ in every document $d$ to get a vector
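The three formulas can be put together in a short plain-Python sketch. The toy documents are invented, and the function names `tf`, `idf` and `tfidf_vector` simply mirror the formulas; documents are assumed to be pre-split lists of words.

```python
import math

def tf(word, doc_tokens):
    """TF(w, d) = log(1 + freq(w, d))."""
    return math.log(1 + doc_tokens.count(word))

def idf(word, docs):
    """IDF(w, D) = log((1 + |D|) / (1 + count(w, D))) + 1."""
    count = sum(1 for d in docs if word in d)
    return math.log((1 + len(docs)) / (1 + count)) + 1

def tfidf_vector(doc_tokens, docs, vocab):
    """One TF-IDF weight per vocabulary word for a single document."""
    return [tf(w, doc_tokens) * idf(w, docs) for w in vocab]

docs = [d.split() for d in ["the king sat", "the queen sat down", "the cat"]]
vocab = sorted({w for d in docs for w in d})
vectors = [tfidf_vector(d, docs, vocab) for d in docs]
```

A word such as "the" that appears in every document gets the minimum IDF of 1, while a word that appears in only one document is weighted more heavily.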

In summary:

- TF-IDF provides a more nuanced representation of a document than a multi-hot vector

- Next we look at how to compute document similarity and retrieve the most similar documents

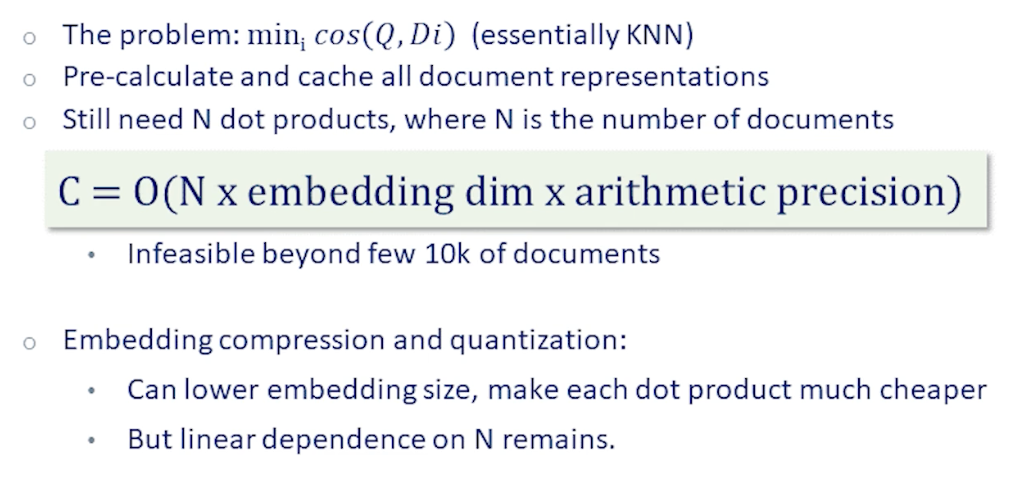

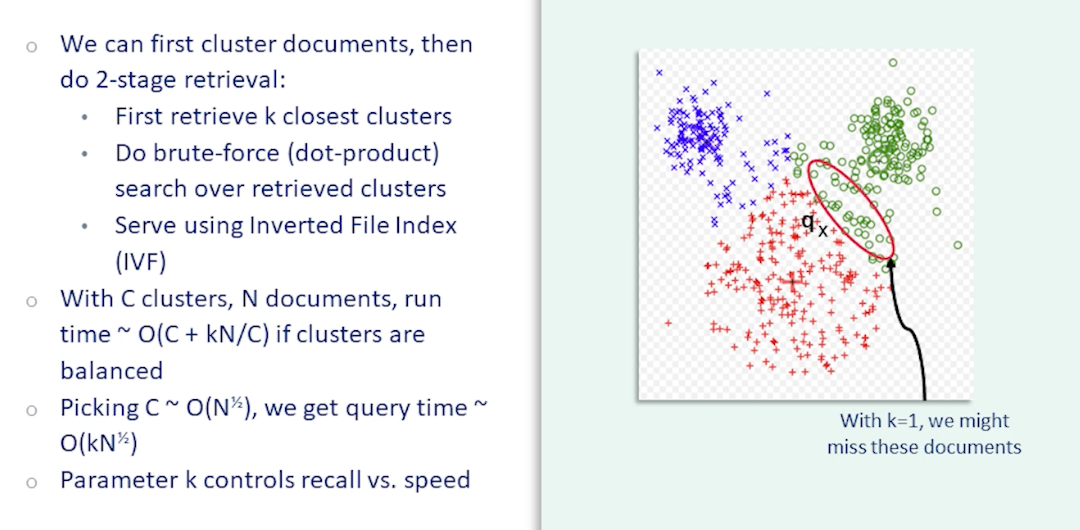

Measuring the Similarity of Documents

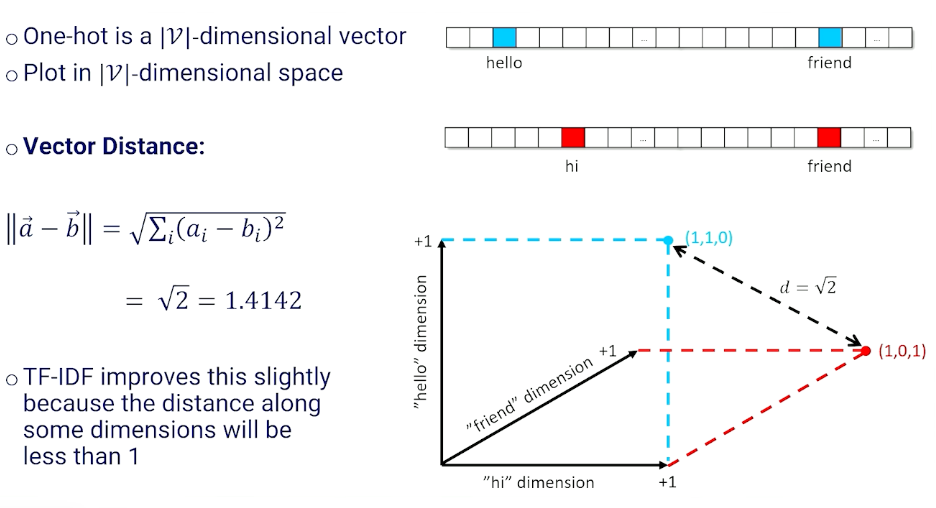

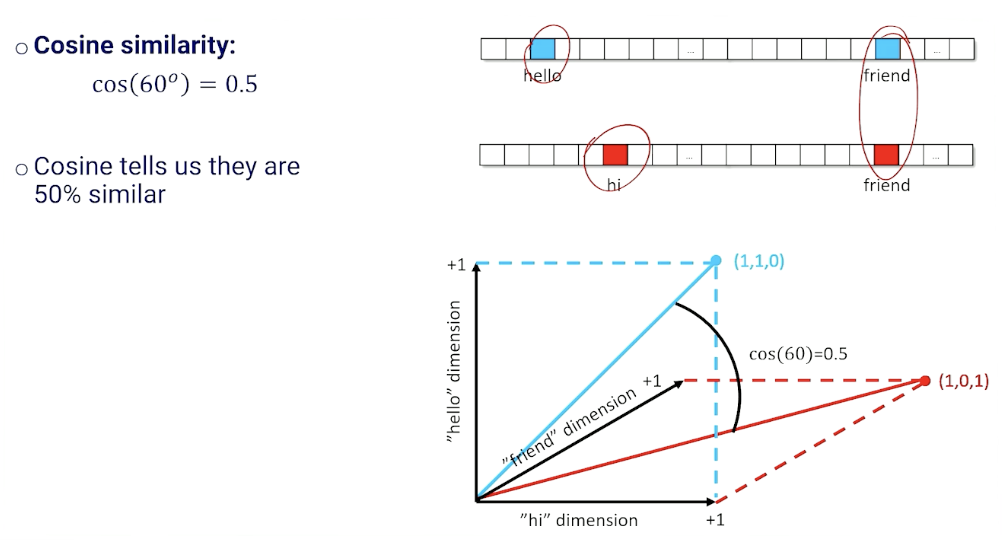

- Document Retrieval: given a word or a phrase (itself a document), compare the query to all other documents and return the most similar.

- Given two documents, represented as vectors, how do we know how similar they are?

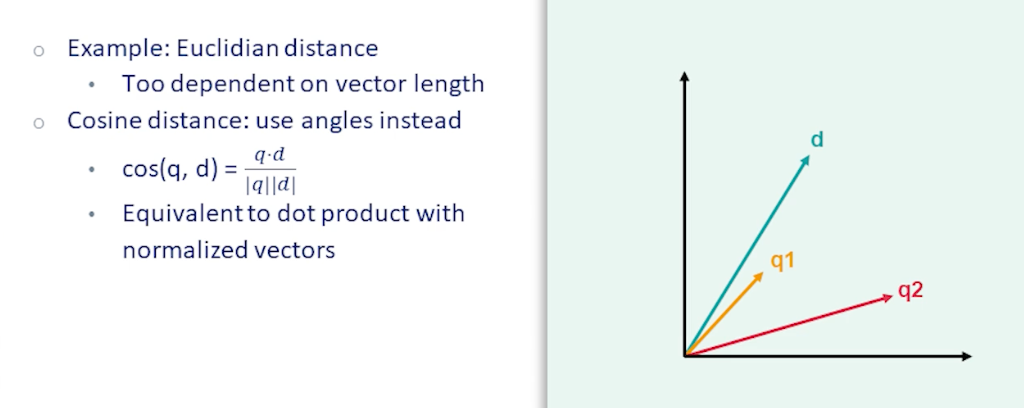

One way is to use the vector distance:

Another way is to use the cosine distance:

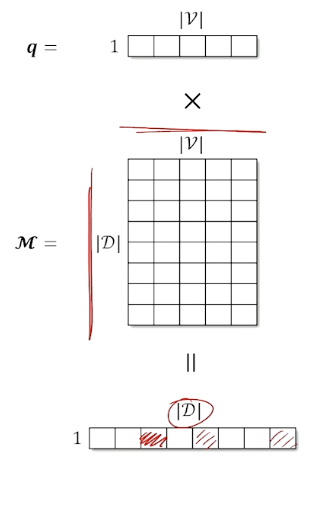

Now, on document retrieval:

- Create a $1 \times \lvert \mathcal{V} \lvert$ normalized document vector for the query ($q$)

- Create a normalized vector for every document and stack to create a $\lvert \mathcal{D} \lvert \times \lvert \mathcal{V} \lvert$ matrix $\mathcal{M}$.

- Matrix multiply to get scores

- Normalize the final vector

- This is the same as computing the cosine similarity

- Take the argmax to get the index of the document with the highest score (or get the top-k to return more than one)
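The retrieval steps above can be sketched as follows. The document vectors are arbitrary toy numbers standing in for TF-IDF vectors, and `normalize` and `retrieve` are illustrative names; with unit-length vectors, the dot product of the query with each row is the cosine similarity.

```python
import math

def normalize(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)

def retrieve(query_vec, doc_matrix, k=1):
    """Score every document against the query and return the top-k indices."""
    q = normalize(query_vec)
    scores = [sum(a * b for a, b in zip(q, normalize(d))) for d in doc_matrix]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k], scores

doc_matrix = [
    [1.0, 0.0, 0.0],   # document 0
    [0.0, 1.0, 1.0],   # document 1
    [2.0, 0.0, 0.1],   # document 2
]
top1, scores = retrieve([1.0, 0.0, 0.0], doc_matrix, k=1)
top2, _ = retrieve([1.0, 0.0, 0.0], doc_matrix, k=2)
```

Document 2 points in almost the same direction as the query despite being longer, so it ranks second; document 1 shares no words with the query and scores zero.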

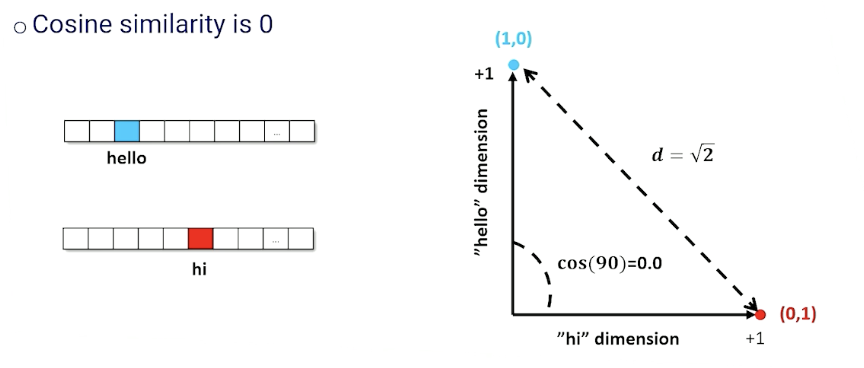

There are still two issues:

- Require a $\mathcal{V}$ length vector to represent a document

- Does not capture the fact that some words are similar (“hi” vs “hello”)

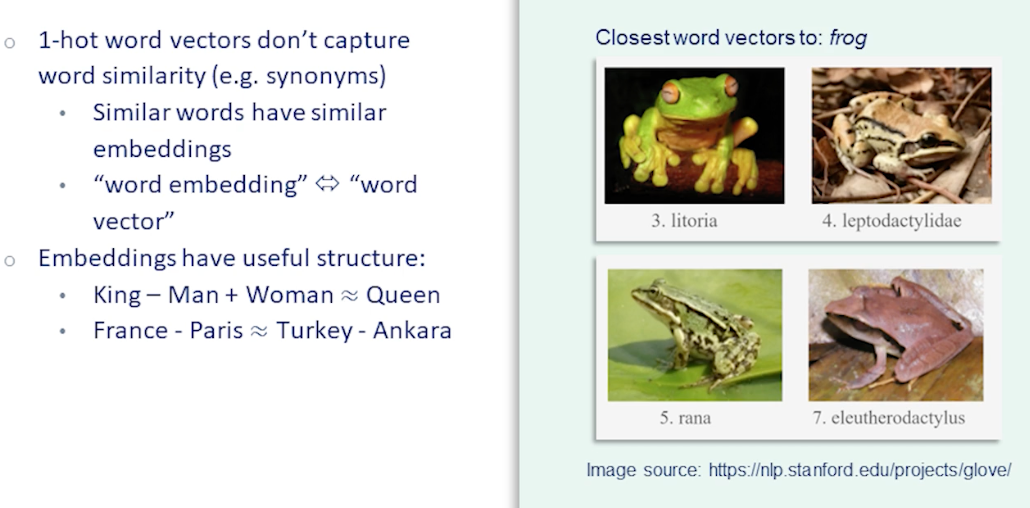

Word Embeddings

The problem with TF-IDF is that similar words are indexed at different positions in the vector even though they probably mean the same thing.

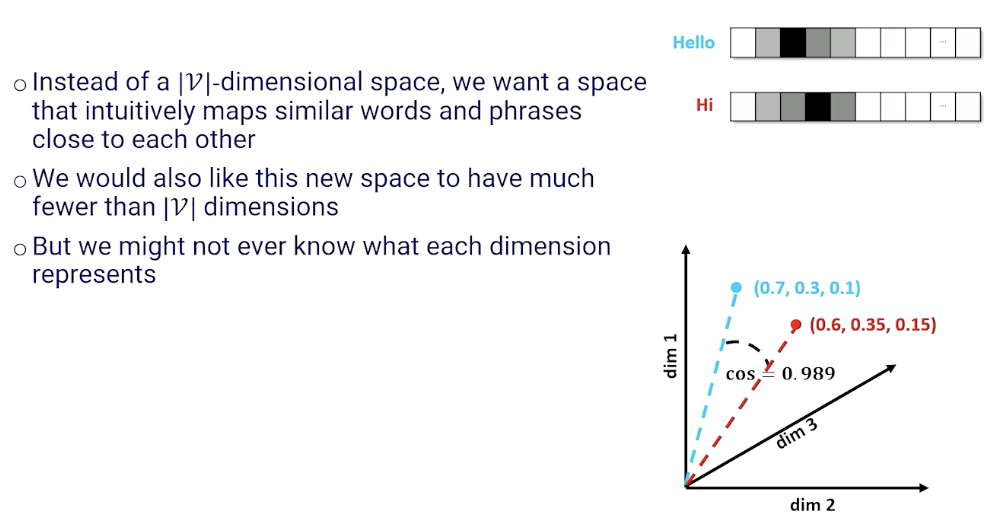

Instead of a $\lvert \mathcal{V} \lvert$ - dimensional space, we want a space that intuitively maps similar words and phrases close to each other. This is also known as embeddings:

So, how are we going to get these embeddings?

- Take a one hot (or multi hot) and reduce it to a $d$ -dimensional space where $d \ll \lvert \mathcal{V} \lvert $

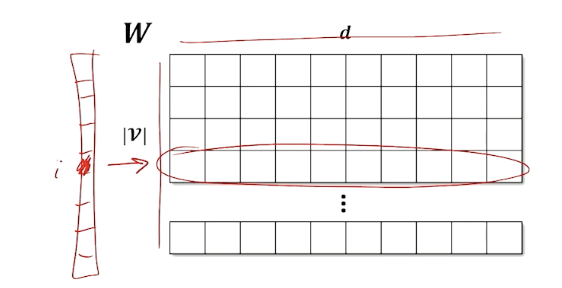

- Use a linear layer with $\lvert \mathcal{V} \lvert \times d$ parameters $\mathcal{W}$

- Compress a one-hot into a d-dimensional vector

Why does this work?

- One-hot for the $i^{th}$ word selects a set of $d$ weights and all others are zeroed out

- These remaining weights are a $d$-dimensional representation of the indexed word

- Because we are multiplying by 1s and 0s, the $d$ weights $W_i$ are identical to the $d$-dimensional vector of activations at the next layer up.

Looking at the matrix of weights in the linear layer:

- Each row in $W$ is an embedding for each token in vocabulary

- One-hot selects the row

- Learn weights that capture whatever relationship we need

Word Embeddings Example

Recall the RNN architecture where we have an encoder layer:

We call the linear compression layer in the encoder the embedding layer:

- token to one hot

- Linear from length $\lvert \mathcal{V} \lvert$ to $d$ length vector

- For simplicity moving forward, we are going to assume we can turn the word directly to an embedding.

- Embedding layer in an RNN learns weights of an affine (linear) transformation

- Whatever reduces loss in the output probability

- Must have compromises (words having similar activations)

- We hope those compromises map similar words to similar vectors

- Still no guarantee on semantic similarity

- Embedding layer is task specific

- Because ultimately it is being fed into a NN with a loss function for a specific task.

- Question: can we learn a general set of embedding?

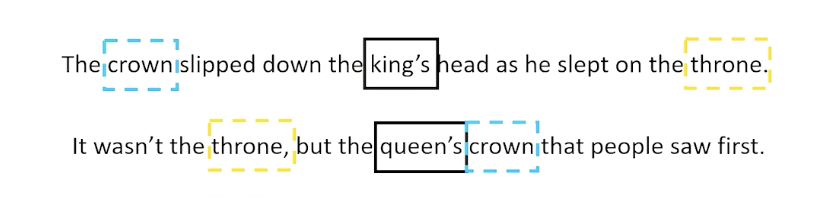

Word2vec

Maybe we don’t need to know what each word means:

- Distributional semantics: a word is known by the company it keeps

- Words that mean approximately the same thing tend to be surrounded by the same words

- For example, we often see king and queen in the same contexts, so maybe they mean something very similar in terms of semantics

- Again, the idea is that we don’t need to know what a word means if words with similar meanings have similar embeddings

Then the question is:

- How can we train an embedding to use this concept of distributional semantics

- Can a word be predicted based on surrounding words?

- The crown was left on the throne when the ???? went to bed at night.

- Probably king or queen is a reasonable choice; although we can’t quite tell which is better

- Can we predict the surrounding words based on a single word?

- Take out a word in the middle of a sequence and ask a neural network to guess the missing word

- Loss is the cross entropy of the probability distribution over words

Similarly, we can look at the opposite of the problem:

- Can a window of words be predicted based on the word in the middle of the window?

- Take a word and guess the words to the left and right

- Loss is the cross entropy of each blank given the word in the middle

- Break up into skip grams (bigram on non-adjacent tokens)

- E.g. given word $w_t$, predict $w_{t-i}$ and $w_{t+i}$ for some $i$.

- Predict each skip-gram separately
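
To make the skip-gram idea concrete, the sketch below enumerates the (center word, context word) training pairs for a short sentence. The helper name `skip_gram_pairs` and the `window` parameter are illustrative assumptions, not names from the lecture.

```python
def skip_gram_pairs(tokens, window):
    """Return (center, context) pairs for every position in tokens."""
    pairs = []
    for t, center in enumerate(tokens):
        for i in range(1, window + 1):
            if t - i >= 0:                 # word i steps to the left
                pairs.append((center, tokens[t - i]))
            if t + i < len(tokens):        # word i steps to the right
                pairs.append((center, tokens[t + i]))
    return pairs

tokens = "the king went to bed".split()
pairs = skip_gram_pairs(tokens, window=1)
for center, context in pairs:
    print(center, "->", context)
```

Each pair is one separate prediction problem: given the center word, the network is scored on the probability it assigns to the context word.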

This is also known as the word2vec approach and there are actually two approaches to word2vec:

- continuous bag of words

- or skip grams

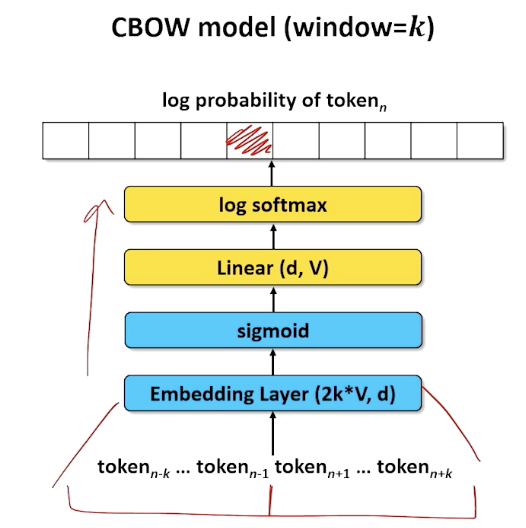

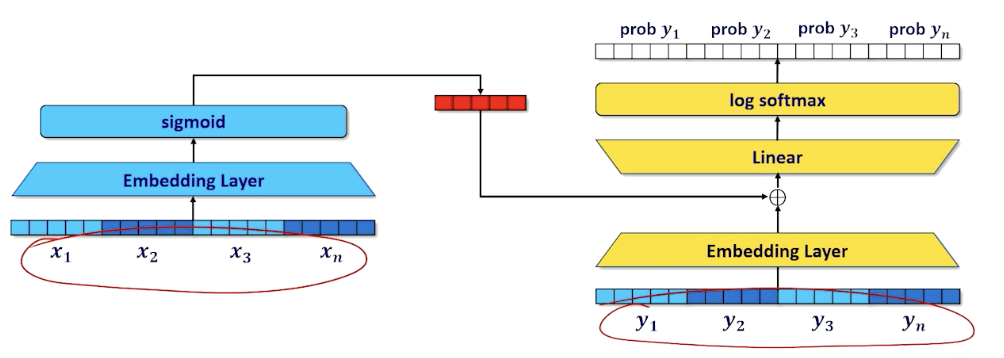

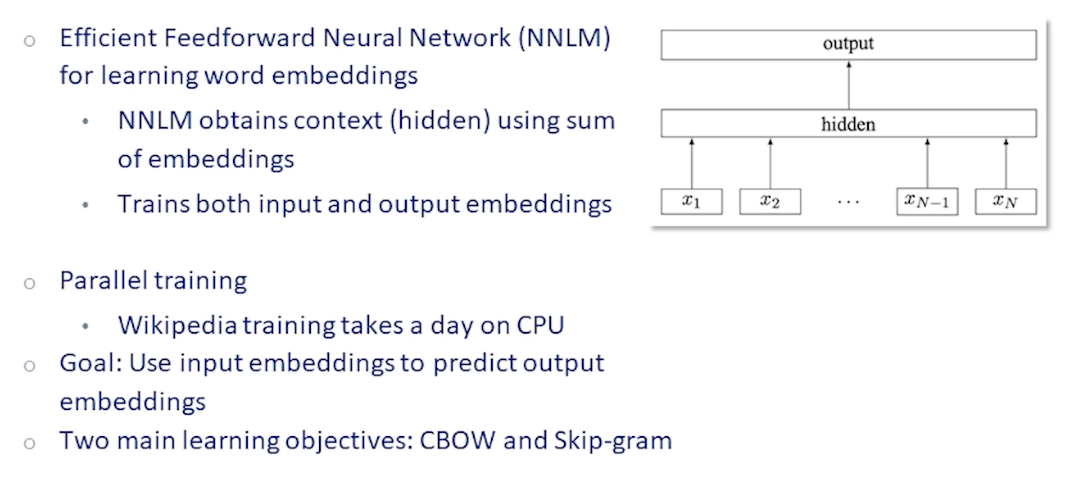

Let’s look at continuous bag of words (CBOW) first. For a window of size $k$, we take a particular chunk of the document and note that it is missing a word in the middle.

So we take the $2k$ words to the left and right of the target word and ask the neural network to predict the missing word. In this case the embedding layer takes an input of size $2k \times \lvert \mathcal{V} \rvert$ and compresses it into $d$ dimensions.
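
A minimal sketch of a CBOW forward pass and its cross-entropy loss is given below. Note one deliberate simplification: instead of concatenating $2k$ one-hots into a single wide input as described above, it shares one embedding matrix across all context positions and averages the looked-up rows, which is a common way to formulate CBOW. All names, sizes, and the random weights are assumptions for illustration.

```python
import numpy as np

def cbow_loss(context_ids, target_id, W_embed, W_out):
    """Cross-entropy loss for predicting target_id from its context."""
    # Look up and average the context embeddings (shape: d).
    hidden = W_embed[context_ids].mean(axis=0)
    # Project back to one logit per vocabulary word (shape: |V|).
    logits = hidden @ W_out
    # Numerically stable log-softmax.
    logits = logits - logits.max()
    log_probs = logits - np.log(np.exp(logits).sum())
    return -log_probs[target_id], np.exp(log_probs)

vocab_size, d, k = 10, 4, 2
rng = np.random.default_rng(0)
W_embed = rng.normal(scale=0.1, size=(vocab_size, d))
W_out = rng.normal(scale=0.1, size=(d, vocab_size))
context = np.array([1, 2, 4, 5])    # 2k word ids around the missing word
loss, probs = cbow_loss(context, target_id=3, W_embed=W_embed, W_out=W_out)
print(loss)
```

Training would repeat this over many windows and update both matrices by gradient descent; after training, the rows of `W_embed` are the word embeddings.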

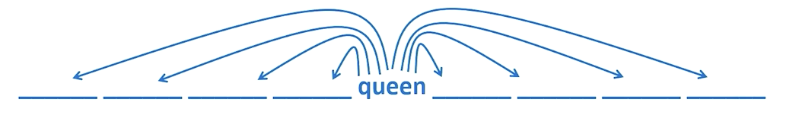

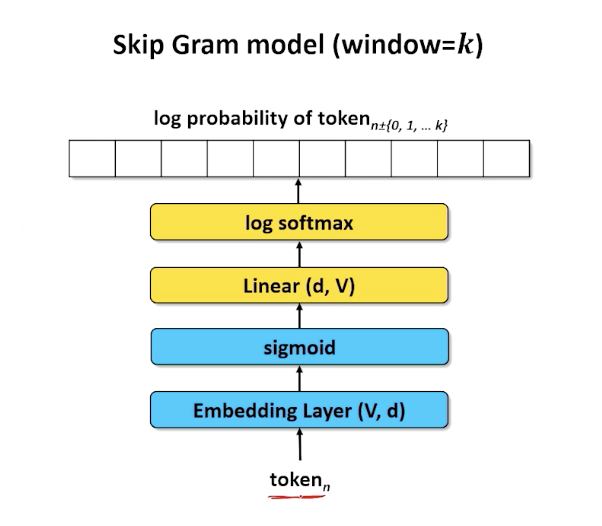

Now, let’s look at the skip-gram model, which looks at a particular word and tries to guess the surrounding words

- So again, the word is turned into an embedding of size $d$

- Because this is skip gram, we do not really care about the positions of the words but instead look at words with high activations.

- And the $k$ highest activations are the words that are most likely to be in the window around our $n^{th}$ word

- Doesn’t need to be a sentence, can be jumbled up - we are just looking for words

- Compute the cross entropy between each prediction and the actual words we would find in a span taken from our actual document.

- The math is a little complicated and is omitted here.

Embedding

Let’s see what we can do for our embeddings, remember in either CBOW or skip gram we have the embedding layer

- We can extract the weights from the embedding layer

- Each row is the embedding for a different token in the vocabulary

We can now do something interesting:

- such as computing the cosine similarity using the embedding.

- $\cos(\vec{v}_{queen}, \vec{v}_{king})$

- We can also do retrieval; find documents that are semantically similar

- Which Wikipedia document is most relevant to “Bank deposit”

- Chattahoochee River

- Great Recession of 2007

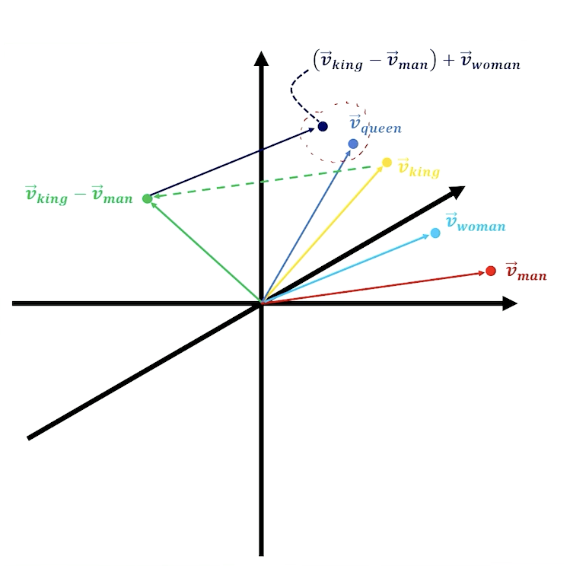

- Can also do analogies - add and subtract word embeddings to compute analogies

- Georgia - California + peaches = ?

Again, to recap - words that represent similar concepts tend to be clustered closely in d-dimensional embedding space.

- Vectors can be added and subtracted

E.g King - man + woman = Queen

Also:

- Most points in embedding space are not words

- Search for the nearest discrete point
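
The analogy arithmetic and the nearest-point search can be sketched as follows. The three-dimensional vectors are hand-made toy values chosen so the arithmetic works out exactly; they are not learned word2vec embeddings, and the helper names are assumptions.

```python
import numpy as np

# Hand-made toy embeddings (royalty, male, female); not learned values.
embeddings = {
    "king":  np.array([1.0, 1.0, 0.0]),
    "queen": np.array([1.0, 0.0, 1.0]),
    "man":   np.array([0.0, 1.0, 0.0]),
    "woman": np.array([0.0, 0.0, 1.0]),
    "apple": np.array([0.1, 0.1, 0.1]),
}

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def nearest_word(vector, exclude=()):
    """Return the vocabulary word whose embedding is closest to vector."""
    candidates = [w for w in embeddings if w not in exclude]
    return max(candidates, key=lambda w: cosine(vector, embeddings[w]))

# King - man + woman lands on a point in space; snap it to the nearest word.
target = embeddings["king"] - embeddings["man"] + embeddings["woman"]
answer = nearest_word(target, exclude=("king", "man", "woman"))
print(answer)
```

Excluding the input words from the search is a common convention, since the result of the arithmetic often stays closest to one of its own inputs.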

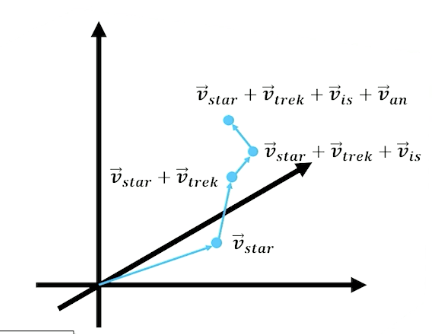

What about document embedding?

- Add each word vector

- Word vectors pointing in the same direction strengthen the semantic concept

- Normalize the resultant vector

- Value of any of the $d$ dimensions is the relative strength of that abstract semantic concept

- Necessary for cosine similarity
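
A document embedding built this way is only a few lines of code. The sketch below uses made-up two-dimensional word vectors; once both document vectors are normalized, their dot product is the cosine similarity.

```python
import numpy as np

def document_embedding(word_vectors):
    """Sum the word vectors of a document and normalize to unit length."""
    total = np.sum(word_vectors, axis=0)
    norm = np.linalg.norm(total)
    return total / norm if norm > 0 else total

# Two toy documents, each a stack of (made-up) word vectors.
doc_a = document_embedding(np.array([[1.0, 0.0], [1.0, 0.2]]))
doc_b = document_embedding(np.array([[0.9, 0.1], [0.0, 1.0]]))
similarity = float(doc_a @ doc_b)   # cosine similarity of unit vectors
print(similarity)
```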

In summary:

- Word2vec is a learned set of word embeddings

- Can either use continuous bag of words (cbow) or skip grams

- Word embeddings can be used in word similarity, document similarity, retrieval, analogical reasoning etc

- Text generation must learn an embedding that helps it predict the next word, but the embedding linear layer can be seeded with word2vec embeddings

- We will find embeddings in just about all neural approaches to natural language processing.

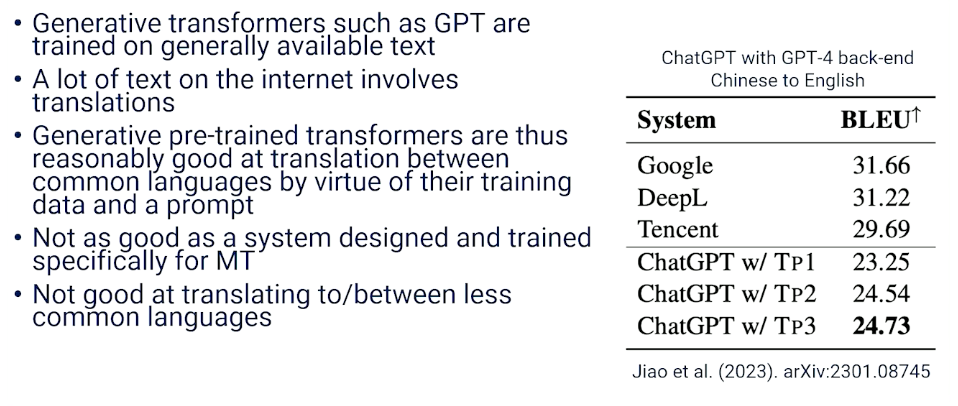

Module 6: Modern Neural Architectures

Introduction to Transformers

Current state of the art, at least in 2023.

Recall that:

- RNNs process one time slice at a time, passing the hidden state to the next time slice

- LSTMs improved the encoding to the hidden state

- Seq2seq collects up hidden states from different time steps and lets the decoder choose what to use using attention

- Note how the decoder sits side by side with the encoder and has its own inputs in addition to the hidden state (token from the previous time step)

- $x_s$ are the inputs to encoder, $h_s$ are the outputs

- $y_s$ are the inputs to decoder, $\hat{y}_s$ are outputs

- Loss computed on $\hat{y}_s$

To add:

- Recurrent neural networks, LSTMs, and Seq2seq exist because recurrence means we don’t have to make one really wide neural network with multiple tokens as inputs and outputs.

- The wide model was originally considered a bad idea because:

- inflexible with regard to input and output sequence lengths

- computationally expensive

- But sequence length is not a big deal if you make $n$ ridiculously long (~1,024 tokens) and just pad one-hots with zeros if the input sequence is shorter.

- Computation also got a lot better and became capable of more parallelization

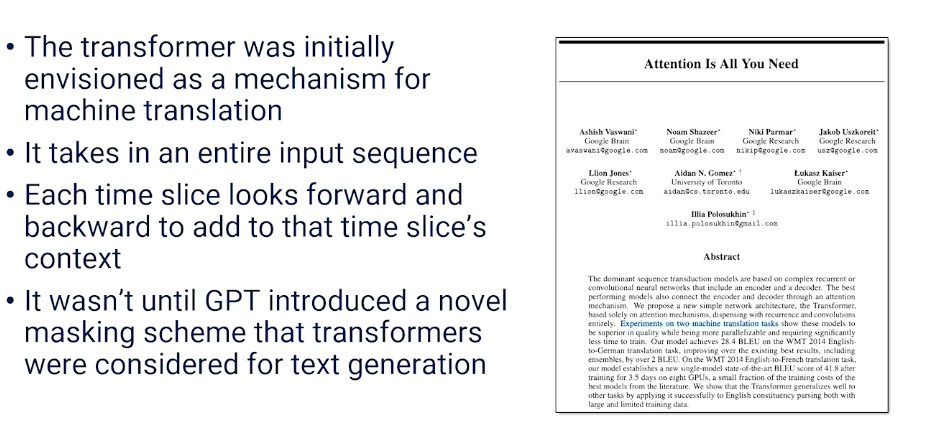

- 2017: First transformer (65M parameters) was trained on 8 GPUs for 3.5 days

- 2020: GPT-3 transformer (175B parameters) was trained on 1,024 GPUs for a month

Transformers: Combining 3 Concepts

Introducing transformers - Combine 3 insights/concepts.

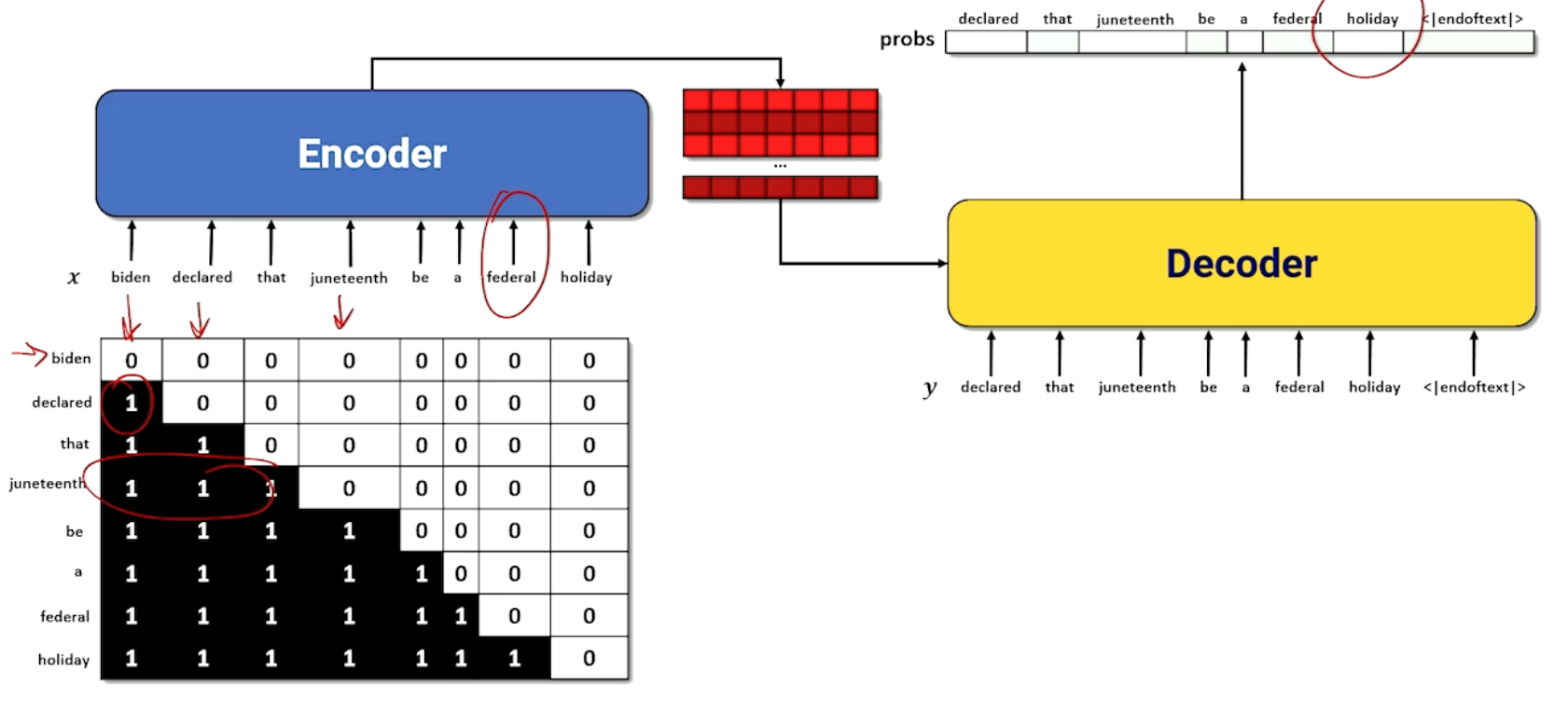

First concept: Encoder/Decoder that operates on a large input window consisting of a sequence of tokens

- Entire input and output sequence provided at once

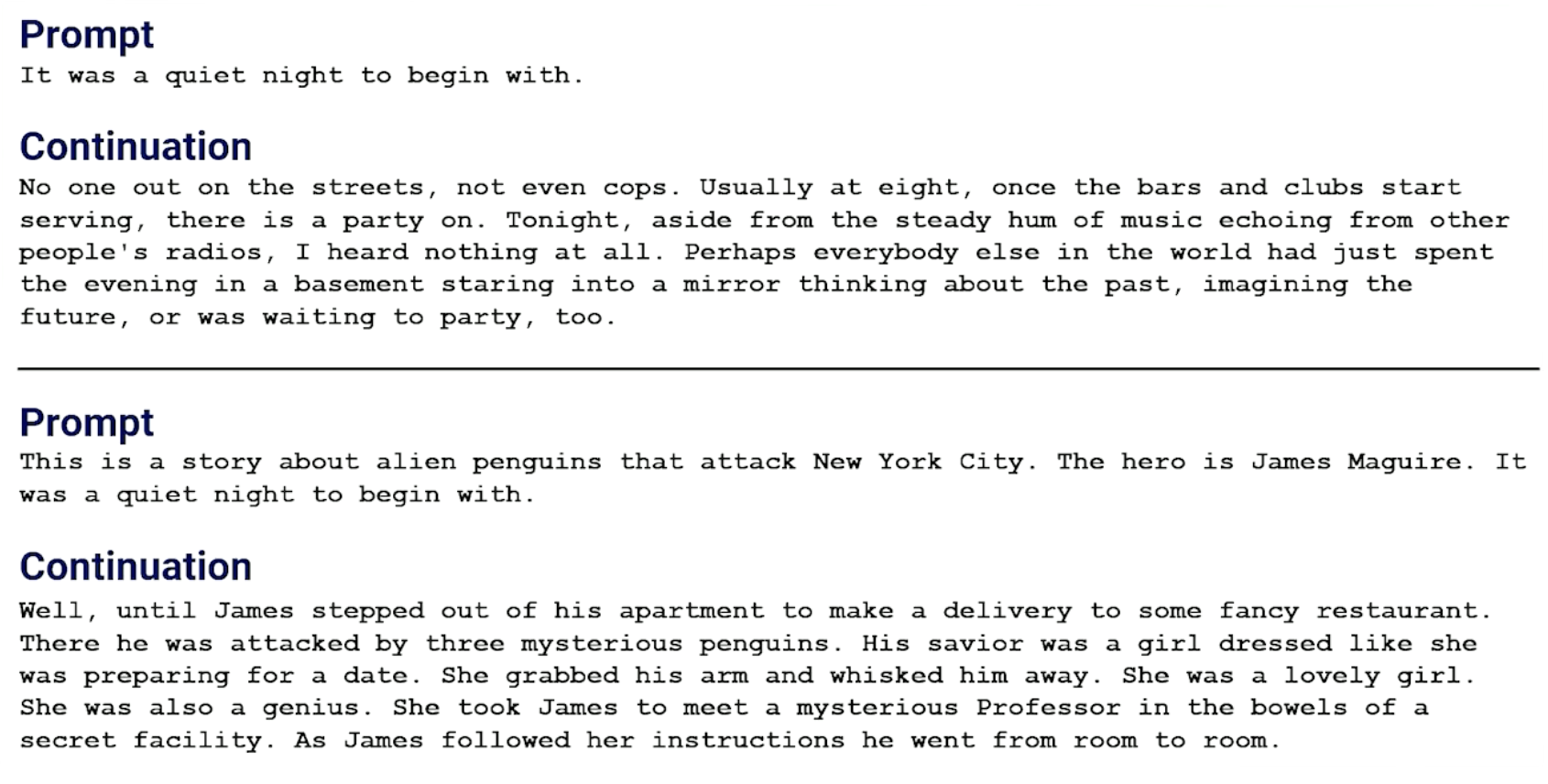

- Like Seq2seq the decoder takes a hidden state and entire output sequence, which no longer needs to be generated token by token.

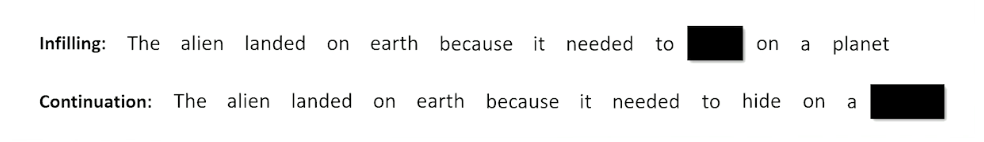

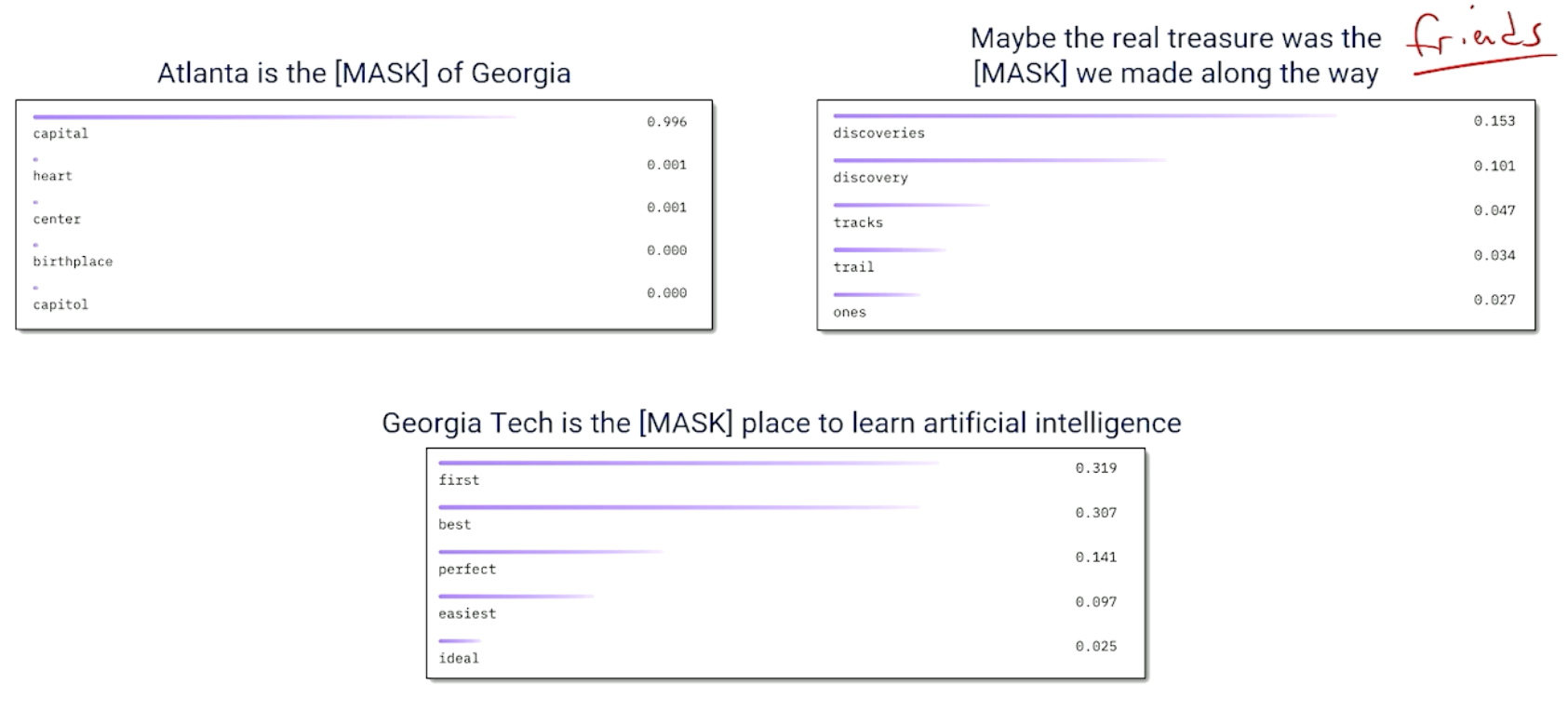

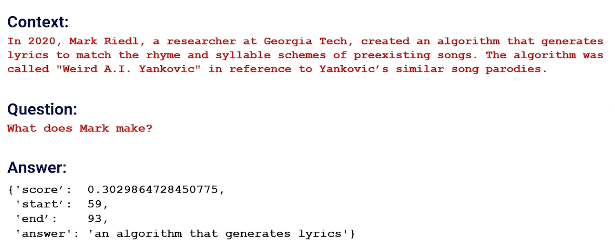

Second concept: Masking

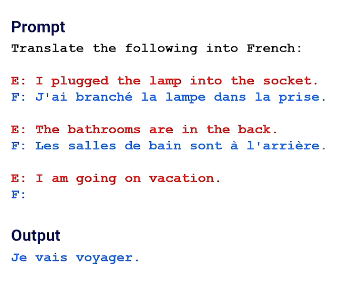

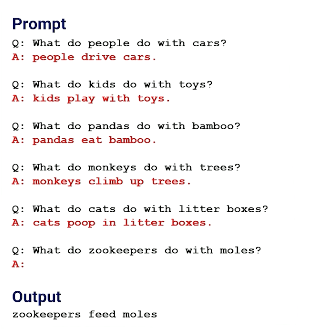

- Blacking out tokens so that the network has to guess the missing token

- One way is infilling: Guess a word in an arbitrary position in the middle of a sequence

- Another way is continuation: Guess the word at the end of the sequence.

- Transformers are sometimes called masked language models

- Recall: we have seen in word2vec that this is a robust way of learning to embed
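
The two masking styles can be illustrated by building training examples from a token list. The `[MASK]` placeholder string and the helper names are assumptions made for this sketch; real models use a reserved token id rather than a string.

```python
MASK = "[MASK]"   # placeholder symbol; the exact name is an assumption

def infill_example(tokens, position):
    """Hide the token at `position`; the network must guess it."""
    masked = list(tokens)
    target = masked[position]
    masked[position] = MASK
    return masked, target

def continuation_example(tokens):
    """Hide the final token; the network must guess what comes next."""
    return infill_example(tokens, len(tokens) - 1)

tokens = "the alien landed on earth".split()
infill_input, infill_target = infill_example(tokens, 1)
cont_input, cont_target = continuation_example(tokens)
print(infill_input, infill_target)
print(cont_input, cont_target)
```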

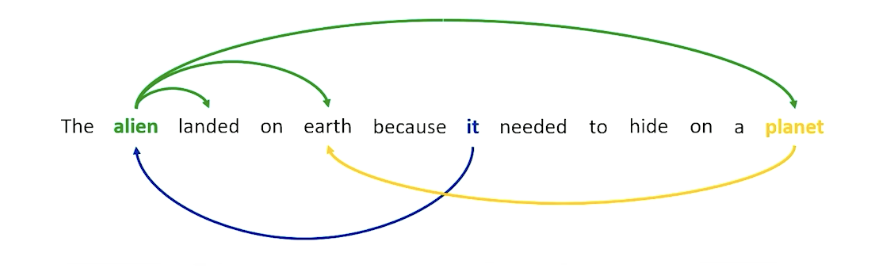

Third concept: Self-attention

- Instead of collecting up hidden states from previous time steps, all time steps are “Flowing” through the network in parallel

- Each time step can attend (softmax and dot product) to every other time slice.

- As if network has to guess each word - what other words increase the odds of the actual word?

- For example, a word like alien might want to attend to words like landed and earth, because the alien is involved in the landing, and the landing involves this entity called earth, and earth is the planet. So earth and planet seem to mean the same thing, as do alien and “it”.

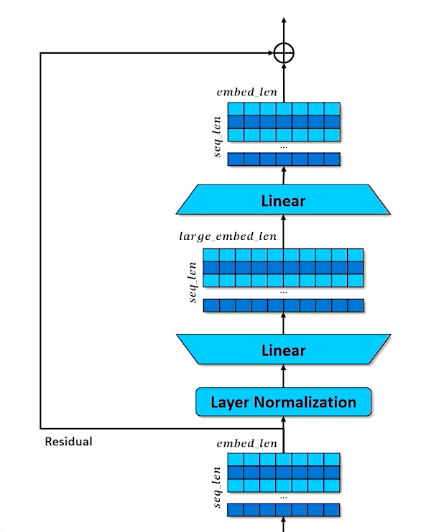

So when we put all of these things together, we are going to have a very large network:

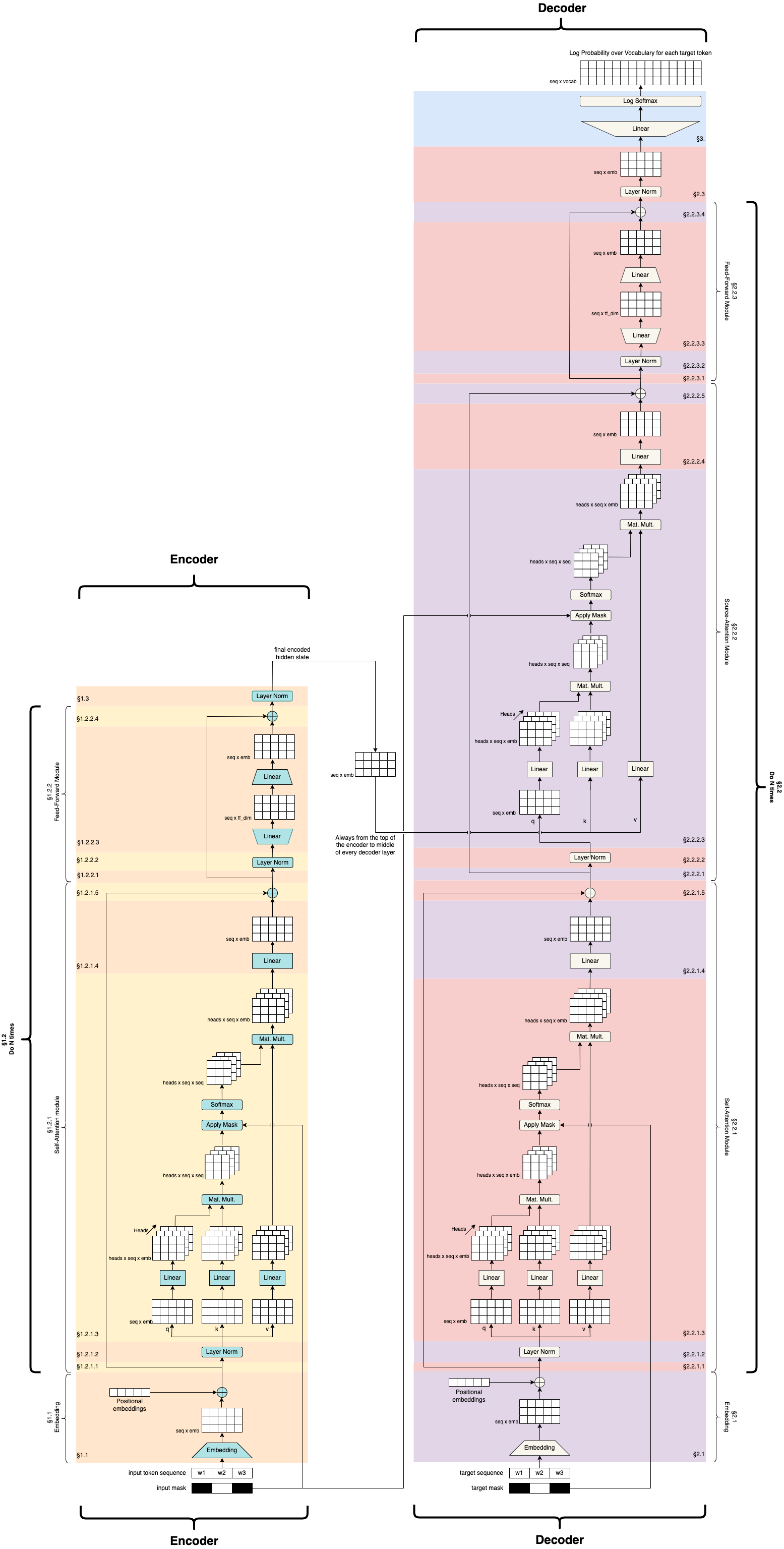

Credit: https://github.com/markriedl/transformer-walkthrough

So we have an encoder/decoder situation with encoder and decoder side by side as we are often used to seeing, and then we start to deal with all these concepts of attention and masking and so on.

We will walk through the encoder and decoder, and discuss some noteworthy transformer architectures such as BERT and GPT.

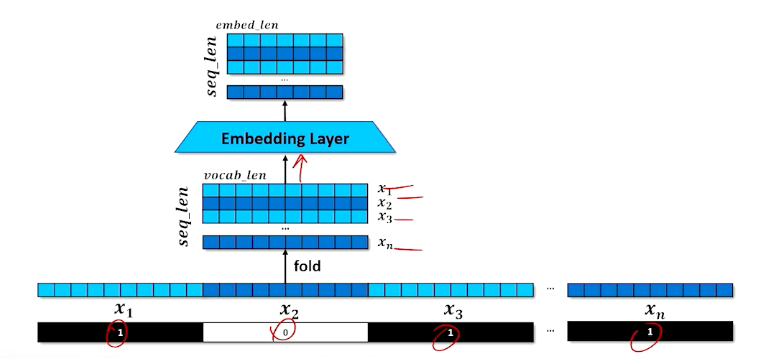

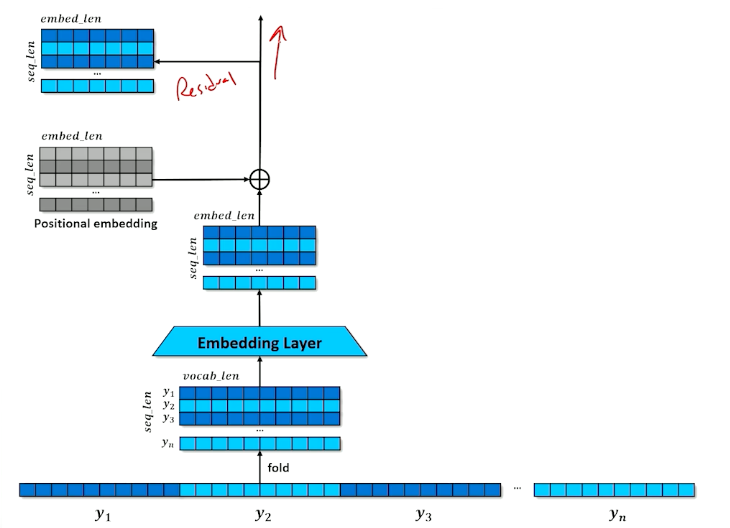

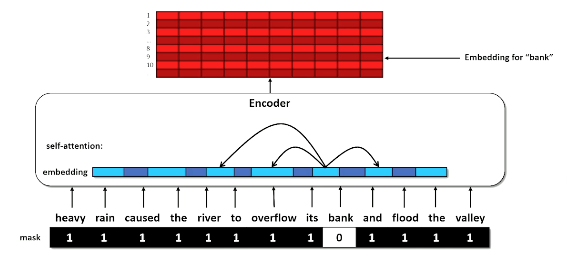

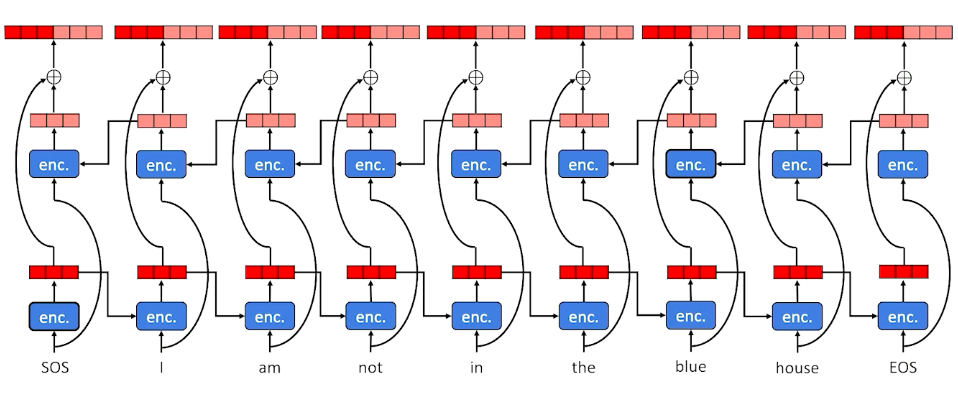

Transformer Encoder

The focus here is on self attention inside the encoder - each position in the sequence is able to select and incorporate the embeddings of the most relevant other positions.

- Given a sequence of tokens $x_1,…,x_n$ and a mask (in the above image $x_2$ is masked)

- Stack the one-hots to create a matrix

- Embed the one-hots into a stack of embeddings

The problem is:

- The network doesn’t know what order the tokens came in

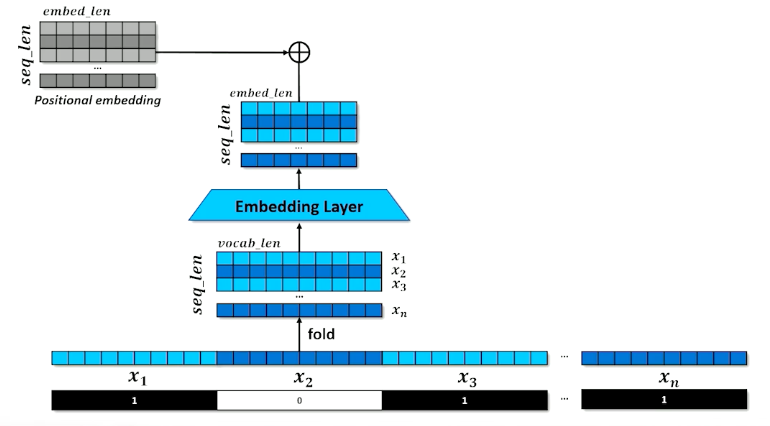

- Add positional information to each element of each embedding

What are positional embeddings?

- Sine and cosine functions at different scales

- Each embedding gets a small number added or subtracted

- Identical tokens in different places in the input will look different
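
One common concrete choice for "sine and cosine functions at different scales" is the sinusoidal encoding from the original Transformer paper, sketched below. The base of 10000 and the even/odd interleaving follow that paper and are an assumption here, since the notes do not give the exact formula; `d_model` is assumed to be even.

```python
import numpy as np

def sinusoidal_positions(n_positions, d_model):
    """Return an (n_positions, d_model) matrix of positional encodings."""
    positions = np.arange(n_positions)[:, None]            # (n, 1)
    # One frequency per pair of dimensions; later pairs oscillate more slowly.
    freqs = 1.0 / (10000 ** (np.arange(0, d_model, 2) / d_model))
    angles = positions * freqs                              # (n, d_model / 2)
    encoding = np.zeros((n_positions, d_model))
    encoding[:, 0::2] = np.sin(angles)                      # even dimensions
    encoding[:, 1::2] = np.cos(angles)                      # odd dimensions
    return encoding

token_embedding = np.ones(8)            # the same token at two positions
pe = sinusoidal_positions(n_positions=4, d_model=8)
at_pos_0 = token_embedding + pe[0]
at_pos_3 = token_embedding + pe[3]
print(np.allclose(at_pos_0, at_pos_3))  # identical tokens now look different
```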

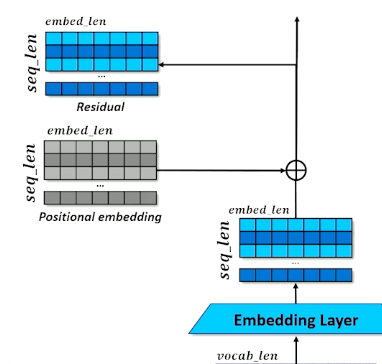

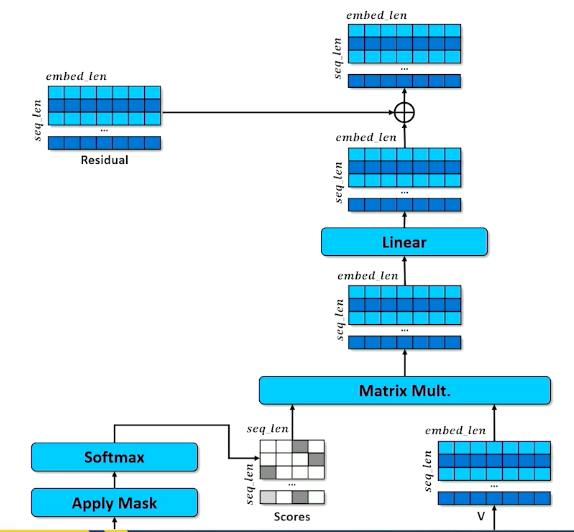

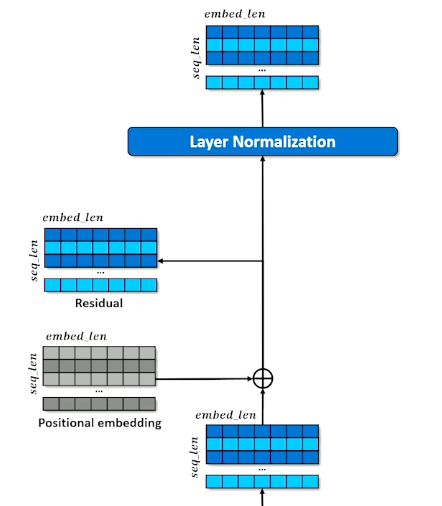

Set aside a residual

- A branch in the computational graph

- Tensor is untouched on one branch and manipulated on the other branch

- The result of the manipulated branch is then merged back with the untouched residual copy

- This will be revisited in the future to understand why we need this residual

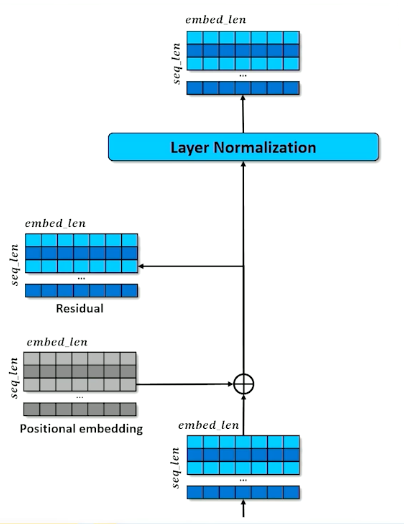

- All dimensions of all tokens are made relative to the mean: $x' = \frac{x - \text{mean}(x)}{\sigma(x) + \epsilon}$

- After normalizing, add trainable parameters $(W, b)$ so that the network can adjust the mean and standard deviation how it needs: $x'' = Wx' + b$
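
The normalization step can be sketched in NumPy as below. Two assumptions are made: statistics are computed per token across its $d$ dimensions, as in standard layer normalization, and the trainable parameters are applied elementwise (named `gain` and `bias` here) rather than as a full matrix.

```python
import numpy as np

def layer_norm(x, gain, bias, eps=1e-5):
    """Normalize each token embedding (row), then rescale and shift."""
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True)
    x_norm = (x - mean) / (std + eps)      # the normalized x
    return gain * x_norm + bias            # trainable rescale and shift

x = np.array([[1.0, 2.0, 3.0, 4.0],
              [10.0, 0.0, -10.0, 0.0]])
out = layer_norm(x, gain=np.ones(4), bias=np.zeros(4))
print(out.mean(axis=-1), out.std(axis=-1))
```

With `gain` of ones and `bias` of zeros the output of each row has mean close to 0 and standard deviation close to 1; training can move these away from the defaults.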

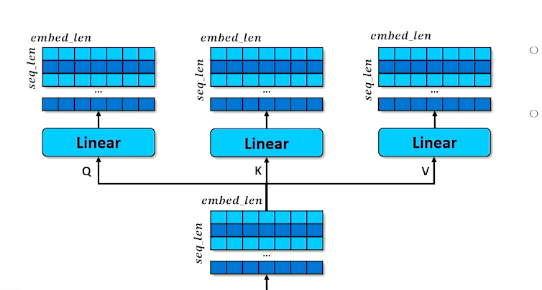

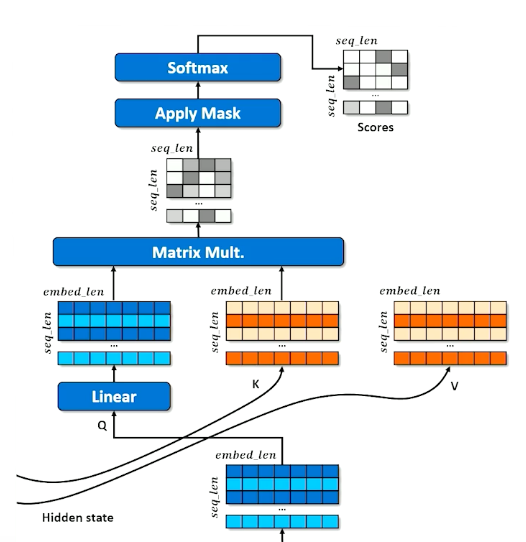

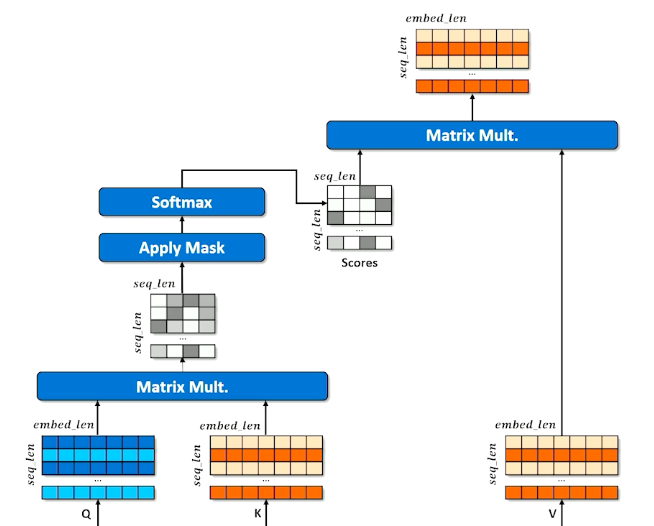

Transformers: Self-Attention

Self-attention is a mechanism to capture dependencies and relationships within input sequences. It allows the model to identify and weigh the importance of different parts of the input sequence by attending to itself.

- Make three copies of the embeddings

- Don’t over think it, just think of a hash table

- A normal hash table matches a query (Q) to a key (K) and retrieves the associated values (V)

- Instead, we have a “soft” hash table in which we try to find the best match between a query and a key that gives us the best value

- First we apply affine transformation to Q,K and V

- Learning happens here - how can Q, K and V be transformed to make the retrieval work best (results in the best hidden state to inform the decoder)

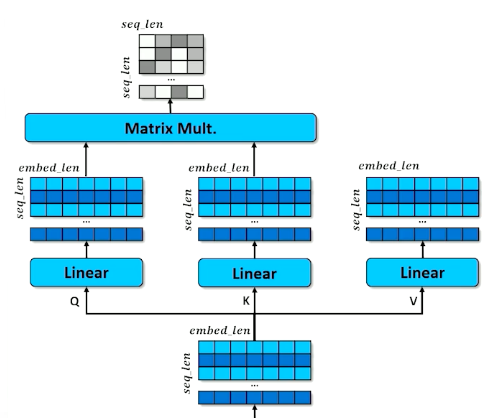

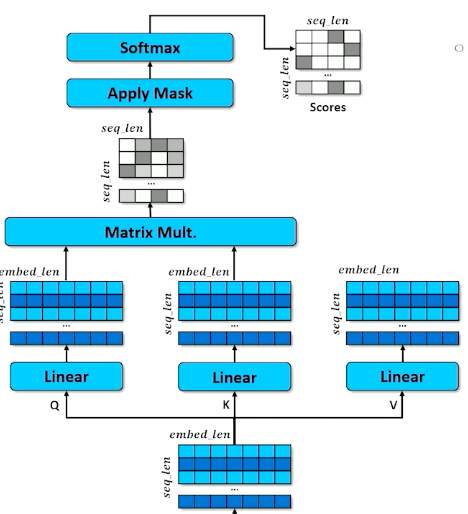

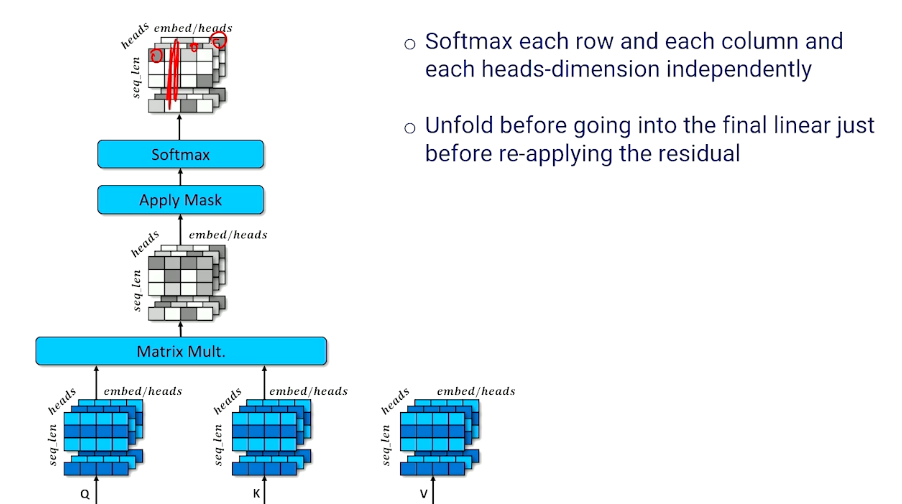

- How does a query match against a key?

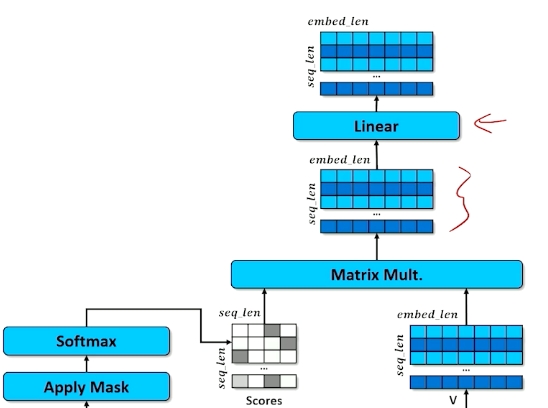

- Multiply Q and K together to get raw scores

- Apply the mask to indicate tokens that cannot be attended to (so that, after the softmax, the scores for masked positions are close to 0)

- Apply softmax to “pick” the row each token thinks is most important

- Each cell in the scores matrix indicates how much each position likes each other position

- Softmax means each row should have a column close to 1 and the rest close to 0

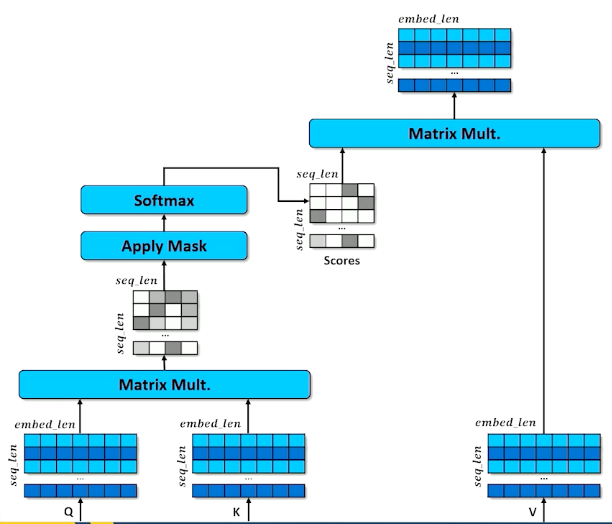

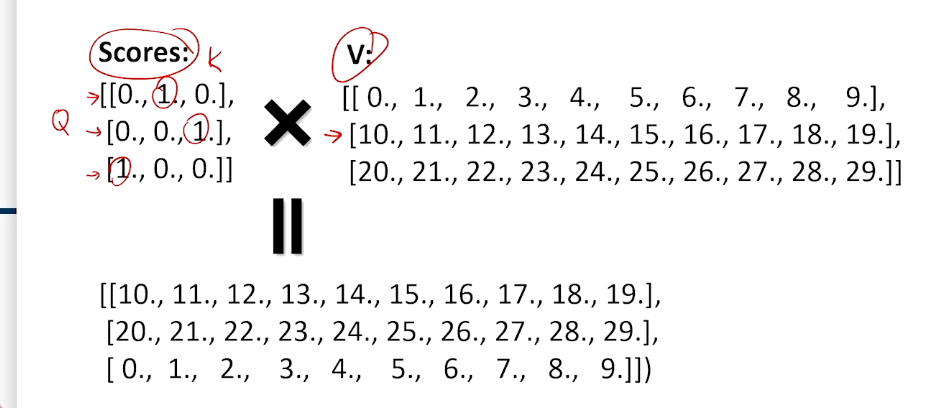

- Multiplying the scores against V means each position (row) in the encoded $x$ grabs a portion of every other row, proportional to score.

Example:

- In Q, the softmax is 0/1 for simplicity, in reality they are all probabilities so we will get a little of everything.

- So what we can see is it is shuffling/jumbling up the embeddings - the one closest to 1 will preserve the most values.

We will see in the next section how we make use of these “jumbled up” embeddings.

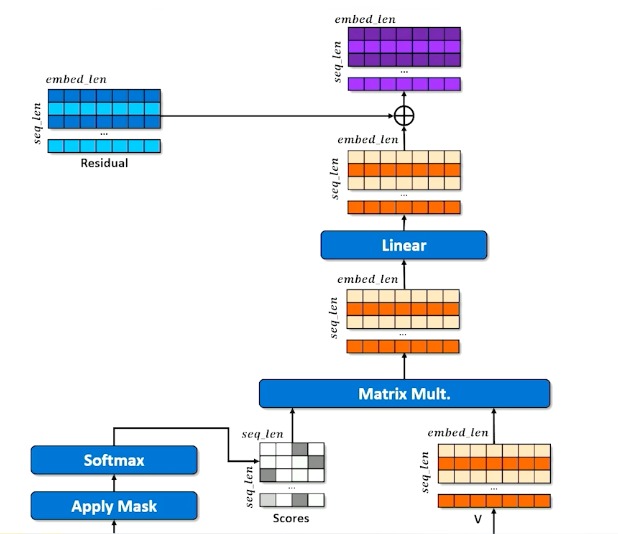
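
The whole query/key/value procedure of this section can be sketched as a single-head self-attention function. Several details are assumptions: the transformations omit the bias term, the scores are divided by $\sqrt{d}$ as in the original Transformer paper (the notes do not mention scaling), and masking is done by setting scores to a large negative number before the softmax.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def self_attention(x, W_q, W_k, W_v, mask=None):
    """Single-head self-attention over a stack of n embeddings (n, d)."""
    # Three transformed copies of the same embeddings.
    Q, K, V = x @ W_q, x @ W_k, x @ W_v
    # Raw scores: how much each position (row) likes each other position.
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    if mask is not None:
        # Masked positions get a large negative score, so softmax gives ~0.
        scores = np.where(mask, scores, -1e9)
    weights = softmax(scores)               # each row sums to 1
    return weights @ V, weights             # blend rows of V by weight

n, d = 4, 8
rng = np.random.default_rng(0)
x = rng.normal(size=(n, d))
W_q, W_k, W_v = (rng.normal(size=(d, d)) for _ in range(3))
# Position 1 is masked out: no position may attend to it.
mask = np.ones((n, n), dtype=bool)
mask[:, 1] = False
out, weights = self_attention(x, W_q, W_k, W_v, mask)
print(weights.round(2))
```

Each output row is a weighted mixture of the rows of `V`, which is the "jumbling" described above.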

Self-Attention Process: Outputs

Apply a linear layer, which learns to do whatever is necessary to prepare this jumbled-up set of embeddings for the next stage. (Instructor says whenever you are not sure if it is required to alter the weights, just add a linear layer.)

Now, coming back to the residual:

- Remember it was a pure copy of the embeddings

- Results of the self-attention are added to the residual

- Important to retain the original embedding in the original order

- Reinforces or inhibits embedding values (according to the transformation just before it)

- Now each embedding is some amount of the original embedding as well as some proportion of every other embedding attended to.

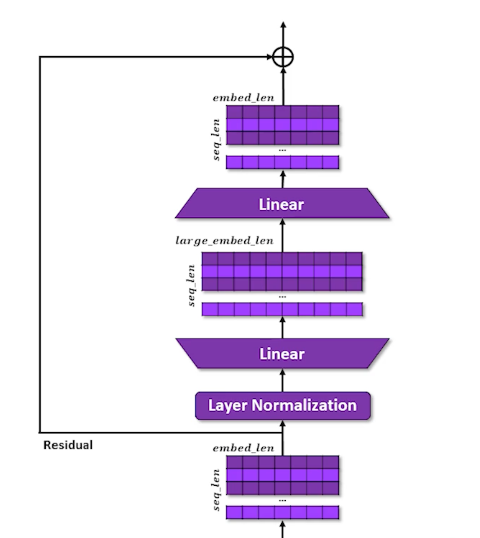

- Set aside the residual a second time

- Layer Normalization

- Learn to expand and compress the embeddings

- Expansion gives capacity to spread concepts out

- Compression forces compromises but in a different configuration

- Re-apply residual
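
The second residual block (set aside, normalize, expand, compress, re-apply) might look like the sketch below. The ReLU non-linearity and the expansion to a layer four times wider are assumptions borrowed from common transformer implementations; the notes only say "expand and compress".

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True)
    return (x - mean) / (std + eps)

def feed_forward_block(x, W_expand, b_expand, W_compress, b_compress):
    """Residual -> layer norm -> expand -> compress -> re-apply residual."""
    residual = x                                   # untouched copy
    h = layer_norm(x)
    h = np.maximum(0.0, h @ W_expand + b_expand)   # expand to a wider layer
    h = h @ W_compress + b_compress                # compress back to d
    return residual + h                            # original is never lost

n, d, d_wide = 4, 8, 32
rng = np.random.default_rng(0)
x = rng.normal(size=(n, d))
out = feed_forward_block(
    x,
    rng.normal(scale=0.1, size=(d, d_wide)), np.zeros(d_wide),
    rng.normal(scale=0.1, size=(d_wide, d)), np.zeros(d),
)
print(out.shape)
```

If the manipulated branch outputs zeros, the block returns its input unchanged, which is the sense in which the residual keeps the original embedding from being lost.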

In summary:

- Embed and stack a sequence of $n$ tokens

- Split the embedding into query, key, and value and transform them so that Q and K can be combined to select a token from V with softmax

- Add the attended-to V to the residual so that certain token embeddings are reinforced or diminished but never lost

- Do steps 2 and 3 $k$ more times to produce a final stack of $n$ hidden states, one for each of the $n$ original tokens

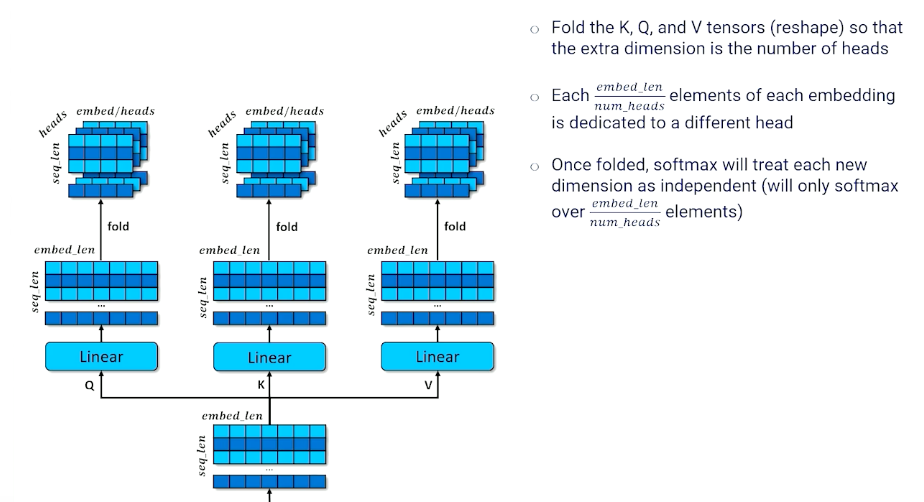

Multi-Headed Self-Attention

One last small detail to our encoder: Multi head

- The encoder configuration is single-headed self attention

- Each token can attend to one other token

- But we might want each token to attend to more than one token to account for different contexts or word senses

- Multi-headed attention applies self attention $h$ times

Note that we sacrifice embed_len in this case, but we can simply extend it in the earlier layers if we need to.

Then, we still do the same thing to multiply our Q and K together:

To summarize:

- Each token position can select $h$ different token positions

- Each $\frac{1}{h}$ slice of each token embedding is treated as a separate embedding

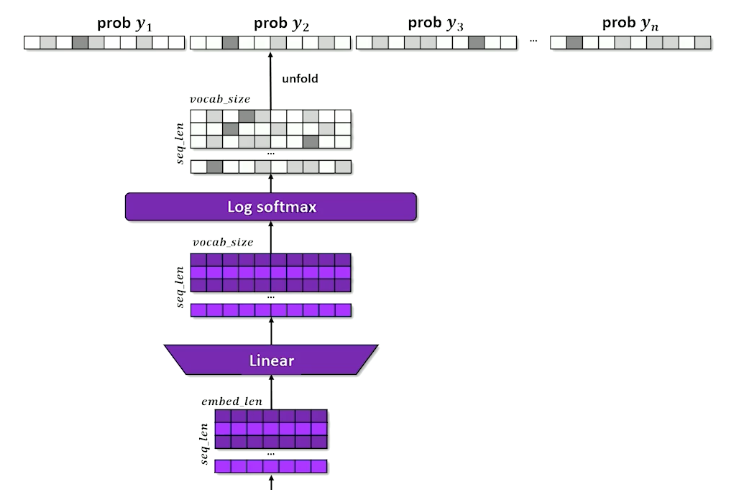

- When unfolded, each row is now a combination of several other token embeddings
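
The fold, attend, and unfold steps of multi-headed attention can be sketched with reshapes. This assumes Q, K, and V have already been transformed, that the embedding length is divisible by $h$, and that scores are scaled by the square root of the per-head size (an assumption taken from the original Transformer paper); all names are illustrative.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_attention(Q, K, V, h):
    """Split (n, d) matrices into h heads, attend per head, then unfold."""
    n, d = Q.shape
    d_head = d // h                       # each head sees a 1/h slice

    def fold(m):
        # (n, d) -> (h, n, d_head)
        return m.reshape(n, h, d_head).transpose(1, 0, 2)

    Qh, Kh, Vh = fold(Q), fold(K), fold(V)
    scores = Qh @ Kh.transpose(0, 2, 1) / np.sqrt(d_head)   # (h, n, n)
    weights = softmax(scores)             # one attention pattern per head
    heads = weights @ Vh                  # (h, n, d_head)
    # Unfold: (h, n, d_head) -> (n, d)
    return heads.transpose(1, 0, 2).reshape(n, d), weights

n, d, h = 4, 8, 2
rng = np.random.default_rng(0)
Q, K, V = (rng.normal(size=(n, d)) for _ in range(3))
out, weights = multi_head_attention(Q, K, V, h)
print(out.shape, weights.shape)
```

Each head works with only `d // h` dimensions, which is the sense in which embedding length is sacrificed per head.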